Estimating Pruning Wood Mass in Grapevine Through Image Analysis: Influence of Light Conditions and Acquisition Approaches

Abstract

1. Introduction

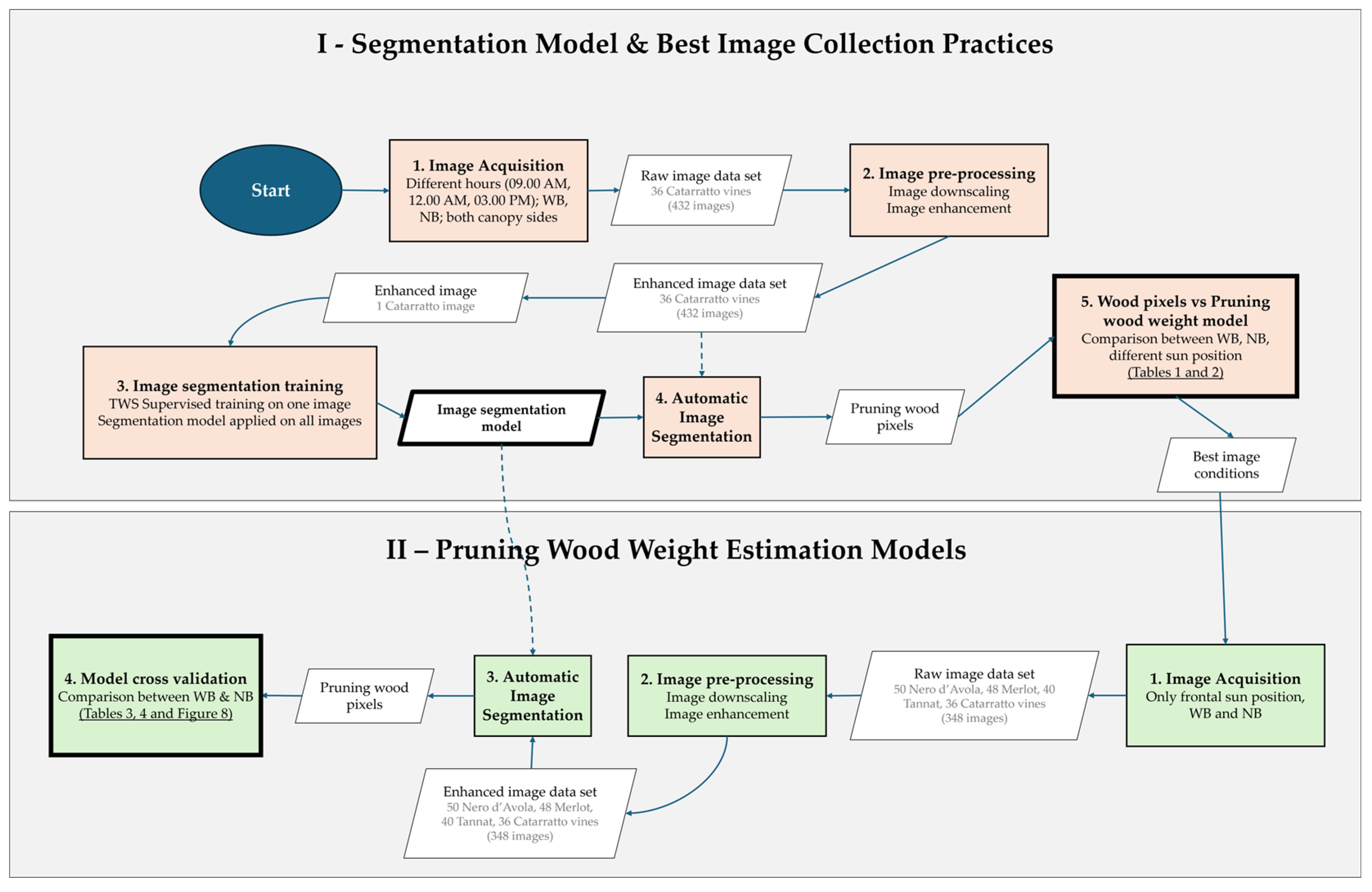

2. Materials and Methods

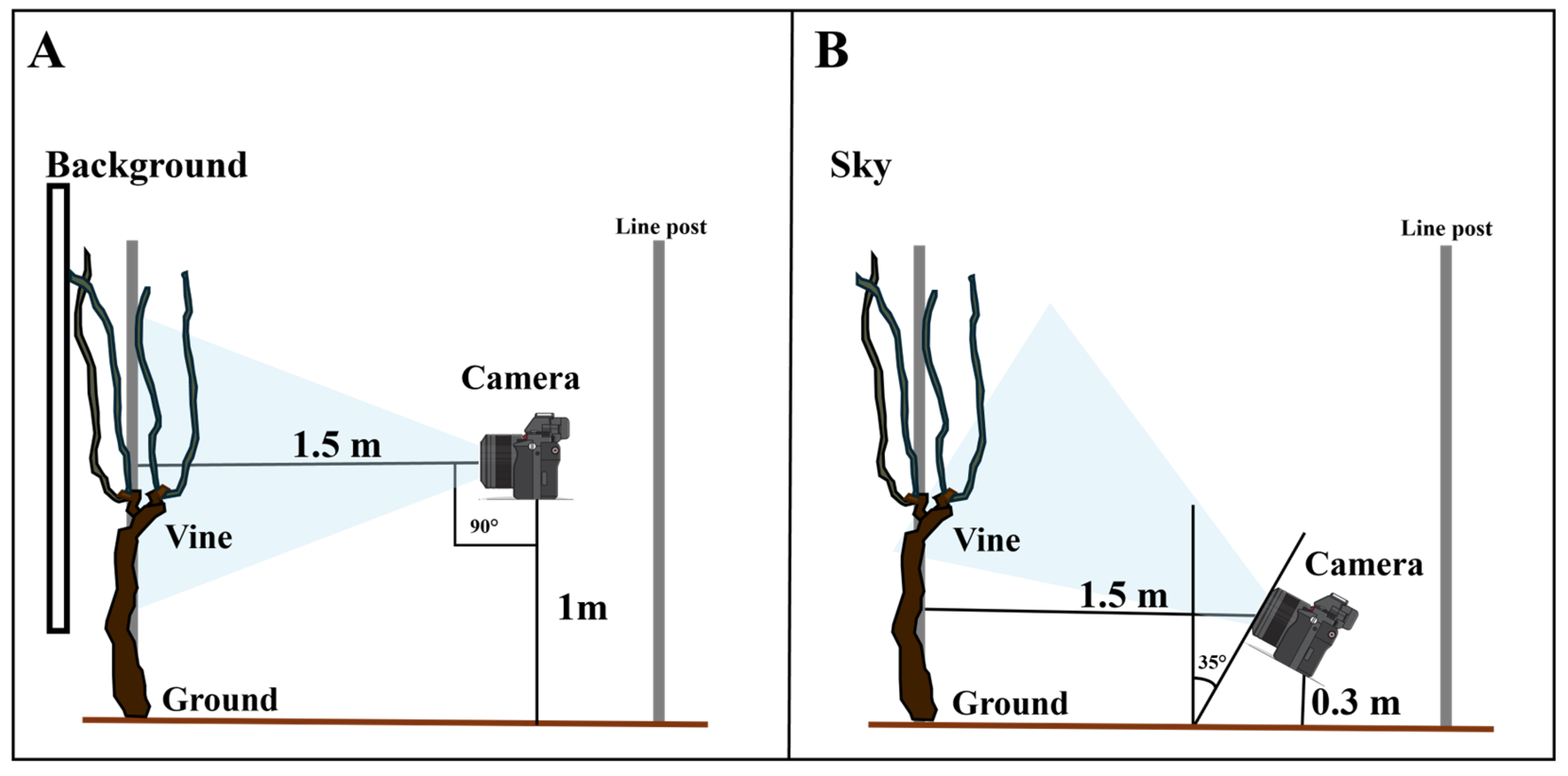

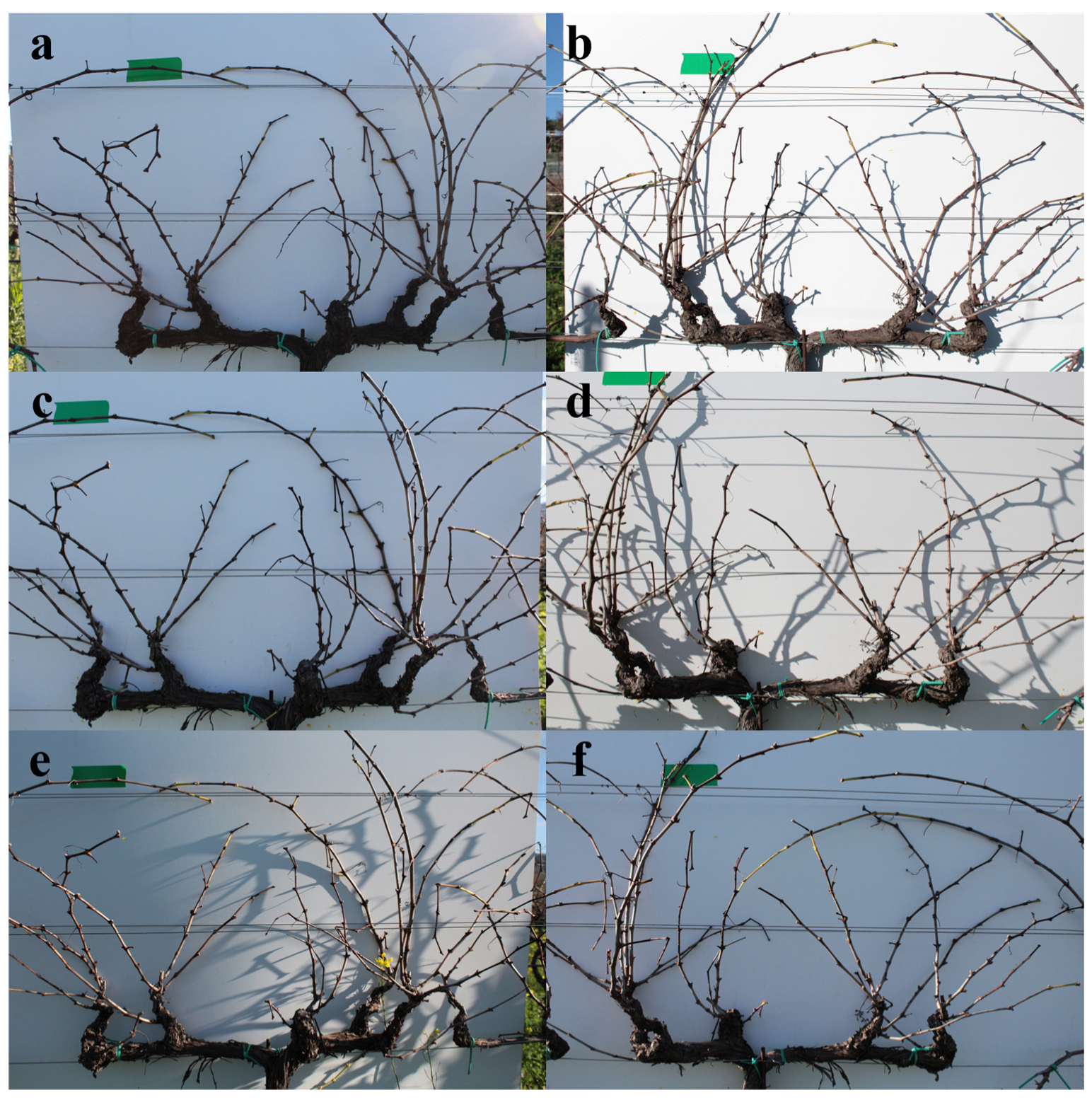

2.1. Experimental Design and Image Acquisition

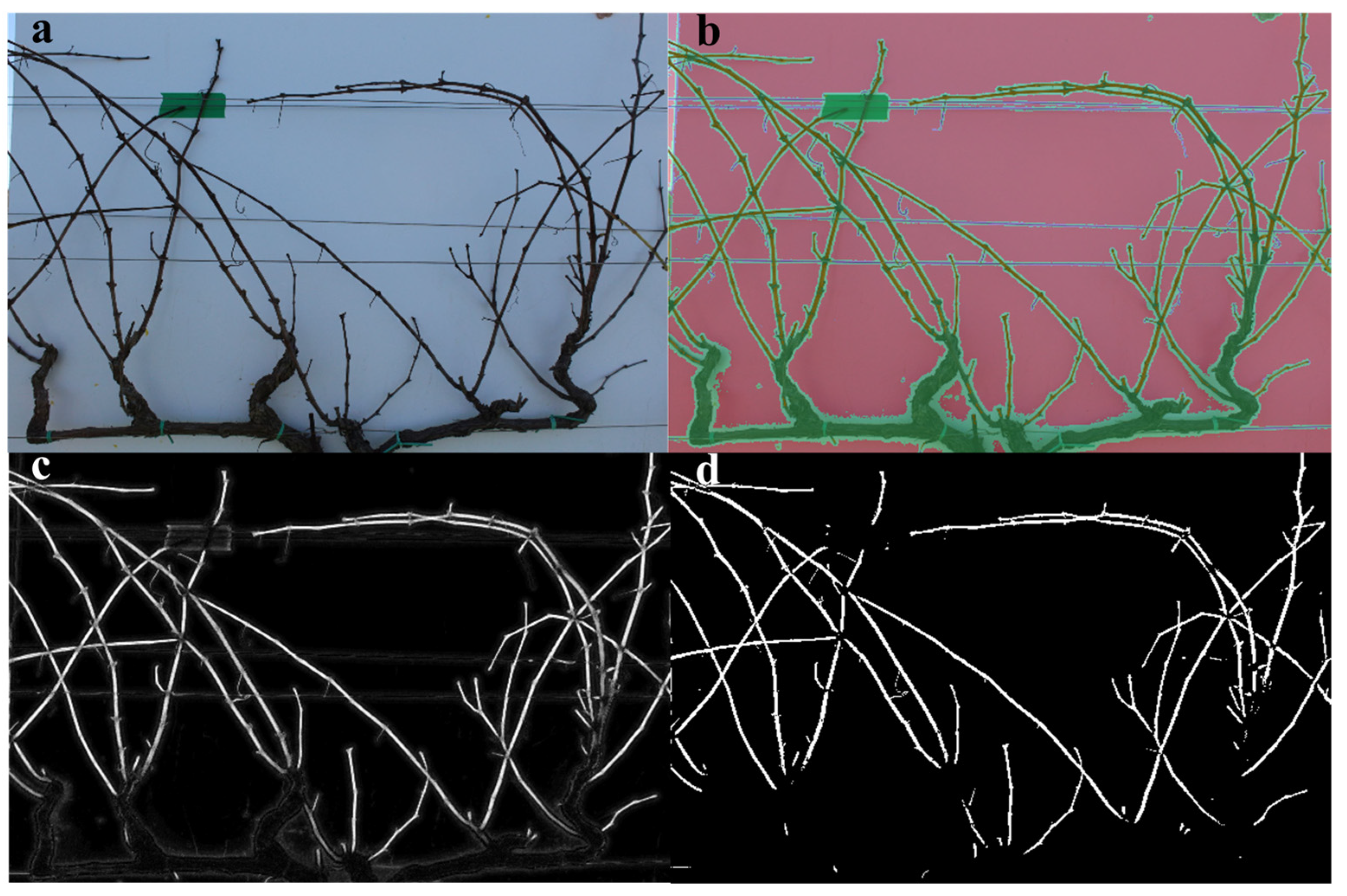

2.2. Image Analysis for Pruning Weight Assessment

2.3. Statistical Analysis and Models Evaluation

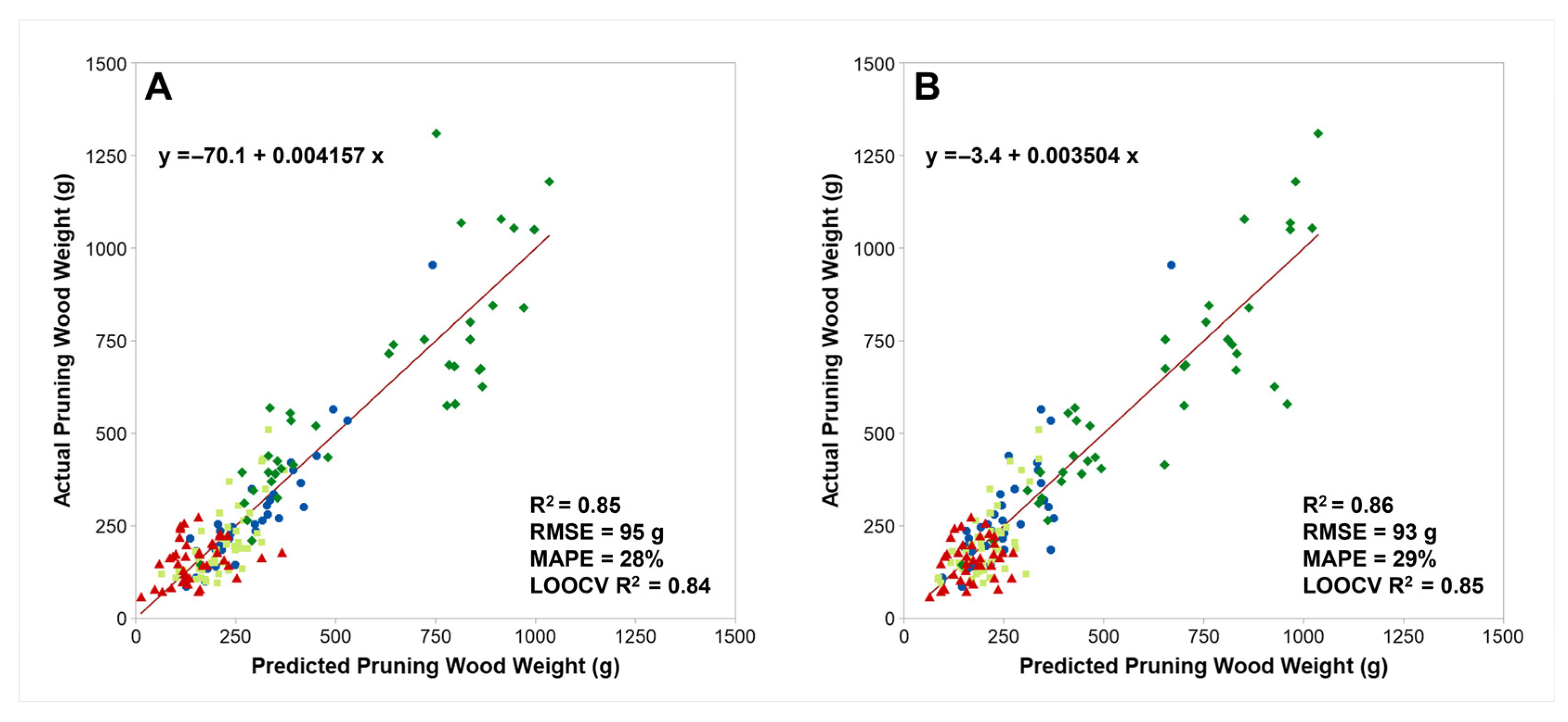

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Time (±1 h) | Canopy Side | Sun Position | p-Value | R² | RMSE (g) | MAPE (%) | AIC | BIC | LOOCV R² |
|---|---|---|---|---|---|---|---|---|---|
| 09.00 a.m. | A | Frontal | 0.00 | 0.84 | 66.57 | 18.61 | 409.13 | 413.13 | 0.77 |
| 09.00 a.m. | B | Behind | 0.00 | 0.30 | 138.25 | 41.00 | 461.75 | 467.75 | 0.16 |
| 12.00 p.m. | A | Top | 0.00 | 0.69 | 91.34 | 22.54 | 431.91 | 435.91 | 0.58 |
| 12.00 p.m. | B | Top | 0.00 | 0.42 | 125.23 | 33.83 | 454.63 | 458.63 | 0.27 |
| 03.00 p.m. | A | Behind | 0.00 | 0.27 | 141.25 | 44.34 | 463.32 | 467.32 | 0.09 |
| 03.00 p.m. | B | Frontal | 0.00 | 0.89 | 55.80 | 15.74 | 396.42 | 400.42 | 0.84 |

| Time (±1 h) | Canopy Side | Sun Position | p-Value | R² | RMSE (g) | MAPE (%) | AIC | BIC | LOOCV R² |
|---|---|---|---|---|---|---|---|---|---|
| 09.00 a.m. | A | Frontal | 0.00 | 0.90 | 51.75 | 17.41 | 391.00 | 395.00 | 0.86 |
| 09.00 a.m. | B | Behind | 0.00 | 0.32 | 136.54 | 40.67 | 460.85 | 464.58 | 0.17 |
| 12.00 p.m. | A | Top | 0.00 | 0.74 | 83.60 | 22.10 | 425.54 | 429.54 | 0.62 |
| 12.00 p.m. | B | Top | 0.00 | 0.48 | 118.40 | 33.01 | 450.59 | 454.59 | 0.32 |
| 03.00 p.m. | A | Behind | 0.00 | 0.33 | 135.00 | 43.59 | 460.02 | 464.02 | 0.11 |
| 03.00 p.m. | B | Frontal | 0.00 | 0.93 | 44.24 | 16.62 | 379.70 | 383.71 | 0.92 |
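
The column headers in the two tables above (R², RMSE, MAPE, AIC, BIC, LOOCV R²) can be illustrated with a minimal sketch for a simple linear regression of pruning weight on a single image-derived predictor. Everything here is an assumption made for illustration: the predictor (a wood pixel count), the sample values, the function names `fit_line` and `evaluate`, and the AIC/BIC convention (Gaussian log-likelihood with `k = 3` parameters). Statistical packages differ on these conventions, so the numbers produced by this sketch are not expected to match the tabulated ones.

```python
import math


def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def evaluate(x, y, k=3):
    """Fit y on x and return the metrics named in the table headers.

    k is the parameter count used in AIC/BIC; counting the intercept,
    the slope, and the error variance (k=3) is an assumption.
    """
    x, y = list(x), list(y)
    n = len(y)
    intercept, slope = fit_line(x, y)
    pred = [intercept + slope * xi for xi in x]

    mean_y = sum(y) / n
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, pred))

    # Gaussian log-likelihood at the maximum-likelihood variance ss_res / n.
    log_lik = -0.5 * n * (math.log(2 * math.pi * ss_res / n) + 1)

    # Leave-one-out: refit without sample i, then predict sample i.
    press = 0.0
    for i in range(n):
        a_i, b_i = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        press += (y[i] - (a_i + b_i * x[i])) ** 2

    return {
        "r2": 1 - ss_res / ss_tot,
        "rmse": math.sqrt(ss_res / n),
        "mape": 100 / n * sum(abs((yi - pi) / yi) for yi, pi in zip(y, pred)),
        "aic": 2 * k - 2 * log_lik,
        "bic": k * math.log(n) - 2 * log_lik,
        "loocv_r2": 1 - press / ss_tot,
    }


# Hypothetical data: image-derived wood area (pixels) and pruning weight (g).
wood_pixels = [120, 180, 240, 310, 400, 460, 530, 610]
pruning_weight_g = [150.0, 210.0, 260.0, 300.0, 420.0, 450.0, 560.0, 600.0]

for name, value in evaluate(wood_pixels, pruning_weight_g).items():
    print(f"{name}: {value:.3f}")
```

In this sketch the leave-one-out R² is computed against the same total sum of squares as the in-sample R², so it can never exceed it. A large gap between the two, as in the "Behind" rows, suggests a fit that does not generalise to unseen vines.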

| Training Set | Validation | n | p-Value | R² | RMSE (g) | MAPE (%) | AIC | BIC |
|---|---|---|---|---|---|---|---|---|
| Merlot, Nero d’Avola, Tannat | Catarratto | 36 | 0.00 | 0.73 | 85.95 | 26.26 | 427.53 | 431.53 |
| Catarratto, Nero d’Avola, Tannat | Merlot | 48 | 0.00 | 0.44 | 74.01 | 32.93 | 553.93 | 559.00 |
| Catarratto, Merlot, Tannat | Nero d’Avola | 40 | 0.00 | 0.78 | 131.96 | 15.15 | 496.14 | 500.45 |
| Catarratto, Merlot, Nero d’Avola | Tannat | 40 | 0.13 | 0.06 | 56.46 | 36.90 | 440.82 | 445.22 |

| Training Set | Validation | n | p-Value | R² | RMSE (g) | MAPE (%) | AIC | BIC |
|---|---|---|---|---|---|---|---|---|
| Merlot, Nero d’Avola, Tannat | Catarratto | 36 | 0.00 | 0.89 | 55.80 | 15.74 | 396.42 | 400.42 |
| Catarratto, Nero d’Avola, Tannat | Merlot | 48 | 0.00 | 0.55 | 66.23 | 27.46 | 543.26 | 548.33 |
| Catarratto, Merlot, Tannat | Nero d’Avola | 40 | 0.00 | 0.72 | 150.66 | 18.19 | 506.26 | 510.56 |
| Catarratto, Merlot, Nero d’Avola | Tannat | 40 | 0.05 | 0.09 | 55.46 | 36.55 | 493.38 | 443.78 |
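
The training/validation layout of the two tables above, where three cultivars train the model and the fourth is held out, can be sketched as a leave-one-group-out loop. This is only an illustrative sketch: the single linear predictor, the sample values, and the names `fit_line` and `leave_one_cultivar_out` are hypothetical, and the authors' actual model and software may differ.

```python
import math


def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def leave_one_cultivar_out(data):
    """data maps cultivar -> list of (predictor, pruning_weight_g) pairs.

    Each cultivar is held out once; the line is fitted on the pooled
    samples of all other cultivars and scored on the held-out one.
    """
    results = {}
    for held_out, test_points in data.items():
        train = [pt for name, pts in data.items() if name != held_out for pt in pts]
        intercept, slope = fit_line([p[0] for p in train], [p[1] for p in train])
        errors = [(intercept + slope * xv) - yv for xv, yv in test_points]
        n = len(test_points)
        results[held_out] = {
            "n": n,
            "rmse": math.sqrt(sum(e * e for e in errors) / n),
            "mape": 100 / n * sum(abs(e / yv) for e, (_, yv) in zip(errors, test_points)),
        }
    return results


# Hypothetical samples (wood pixels, pruning weight in g); not the study's data.
samples = {
    "Catarratto": [(150, 180.0), (320, 350.0), (480, 520.0)],
    "Merlot": [(110, 140.0), (260, 250.0), (390, 400.0)],
    "Nero d'Avola": [(300, 380.0), (520, 640.0), (700, 810.0)],
    "Tannat": [(90, 160.0), (140, 150.0), (200, 210.0)],
}

for cultivar, scores in leave_one_cultivar_out(samples).items():
    print(cultivar, {key: round(value, 2) for key, value in scores.items()})
```

Because the held-out cultivar contributes nothing to the fit, its error reflects how well a relationship learned on other varieties transfers, which is what the Tannat row with a low R² calls into question.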


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Puccio, S.; Miccichè, D.; Victorino, G.; Lopes, C.M.; Di Lorenzo, R.; Pisciotta, A. Estimating Pruning Wood Mass in Grapevine Through Image Analysis: Influence of Light Conditions and Acquisition Approaches. Agriculture 2025, 15, 966. https://doi.org/10.3390/agriculture15090966
