A Lightweight YOLO-Based Architecture for Apple Detection on Embedded Systems

Abstract

1. Introduction

Related Work

2. Materials and Methods

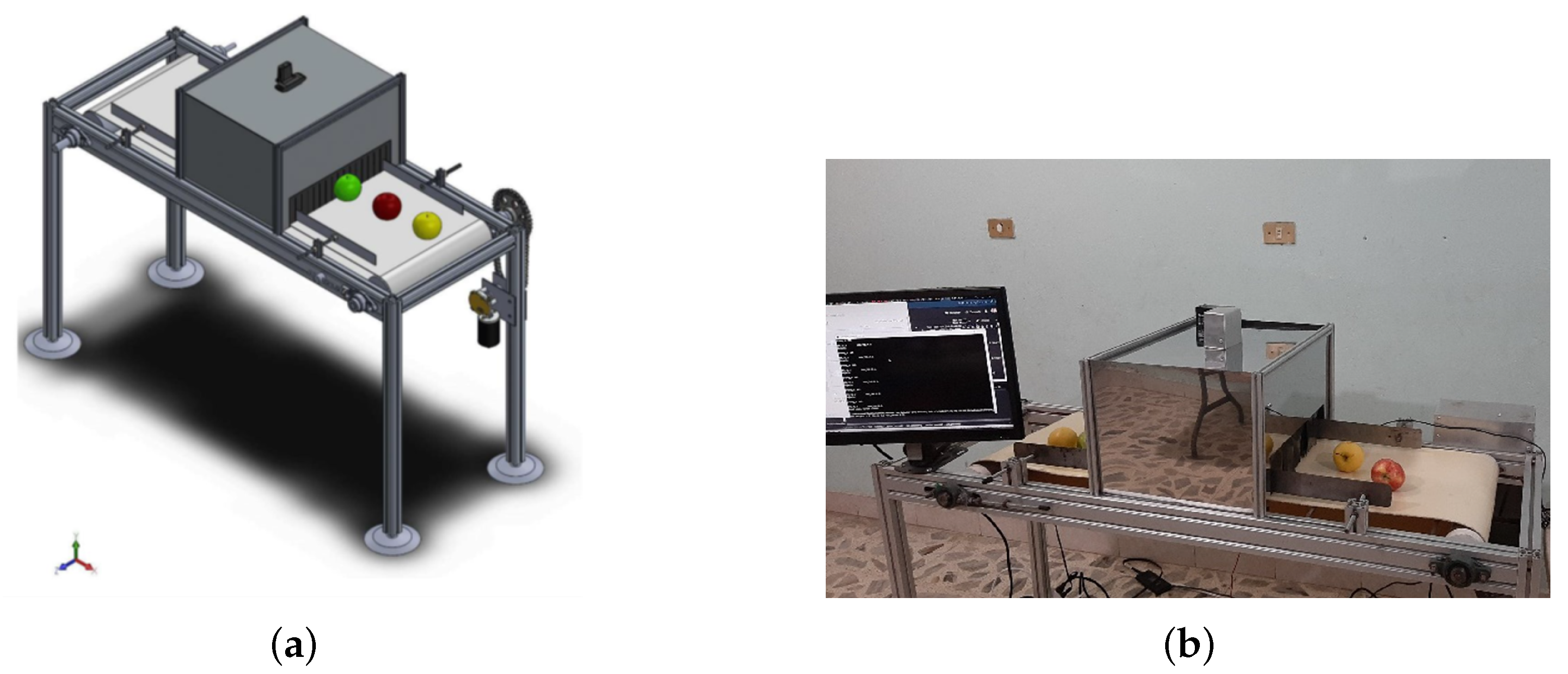

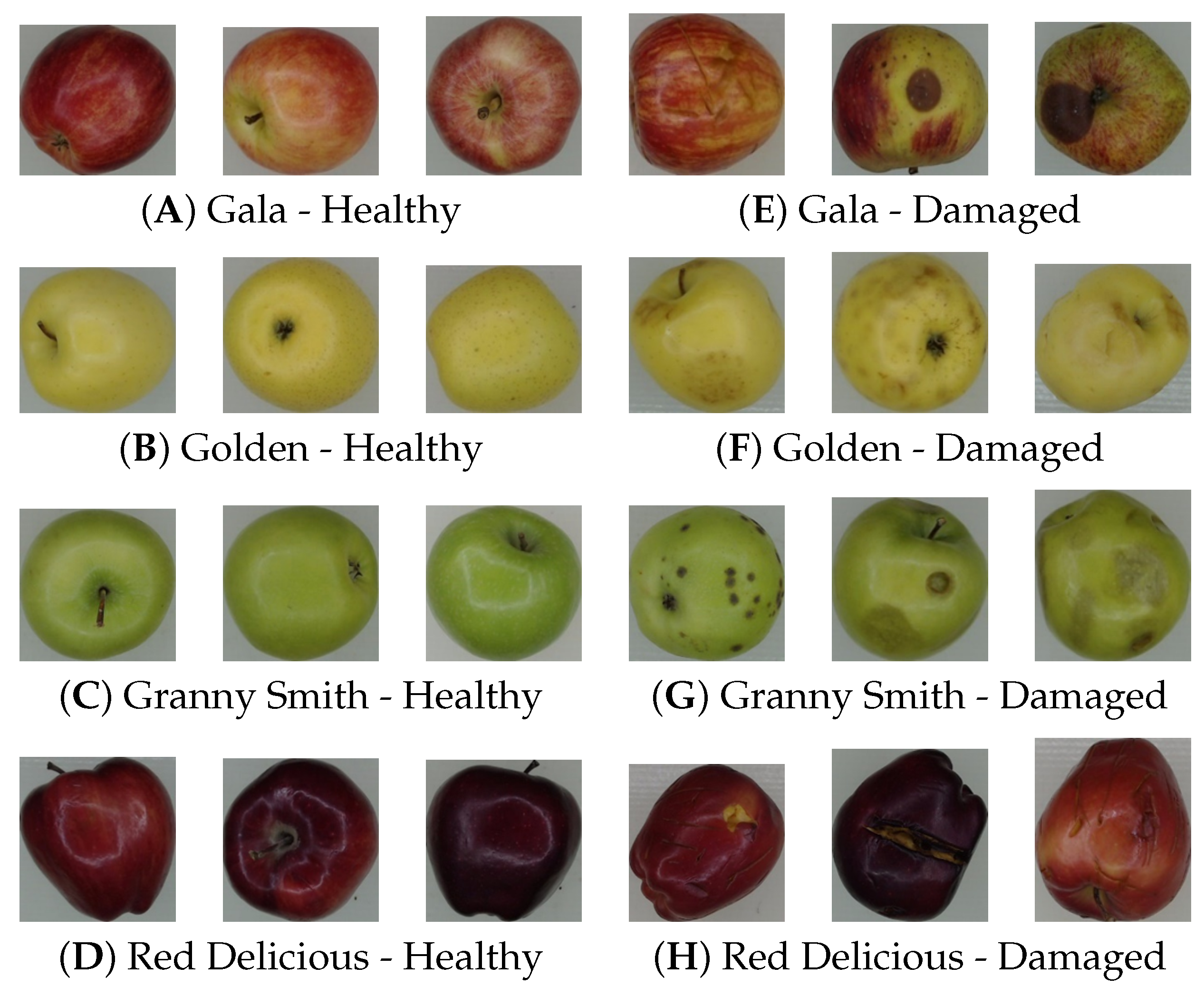

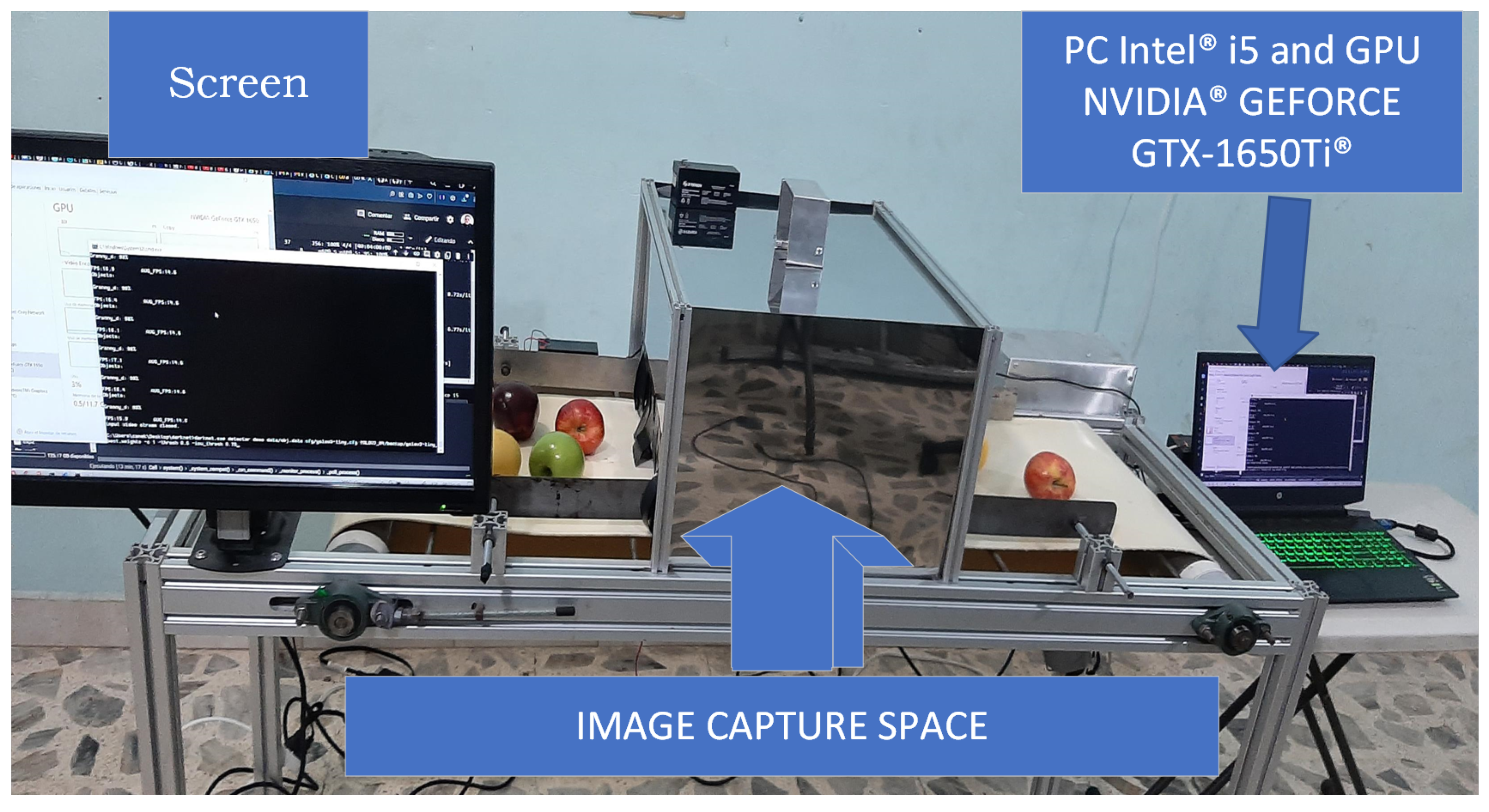

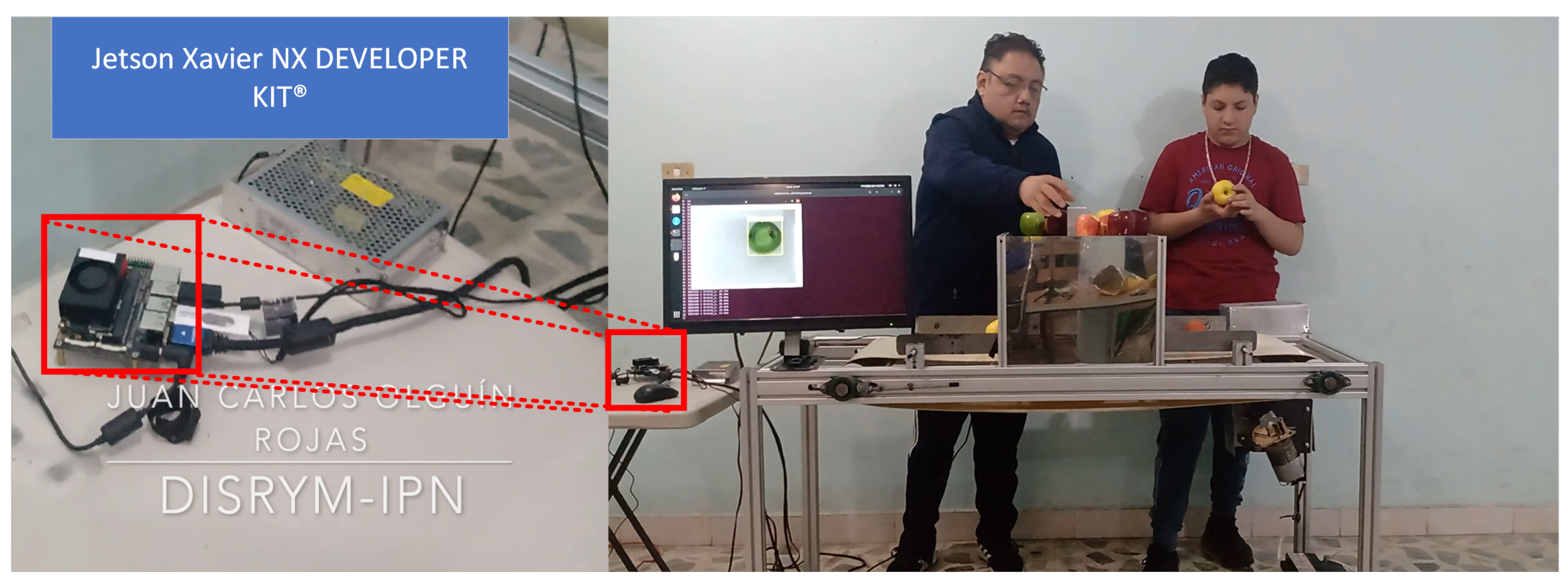

2.1. Data Collection

2.2. Dataset

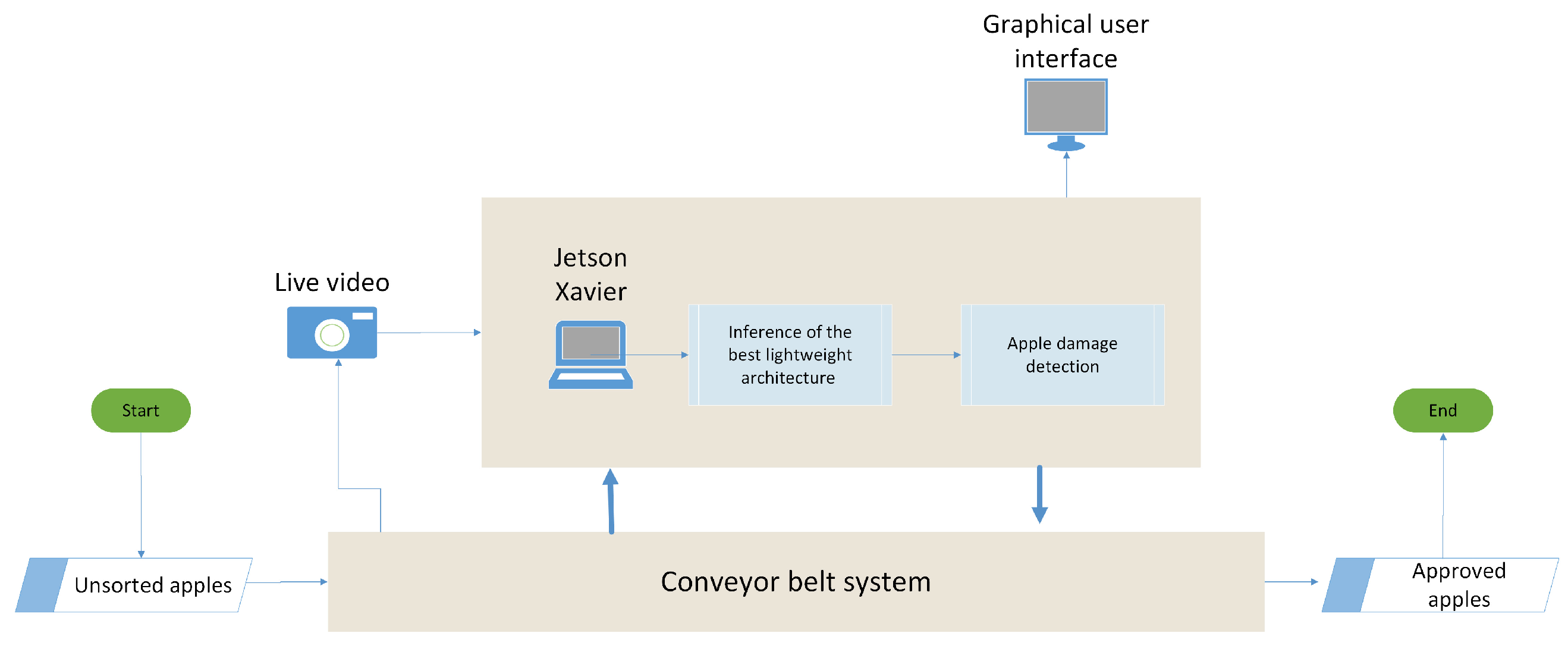

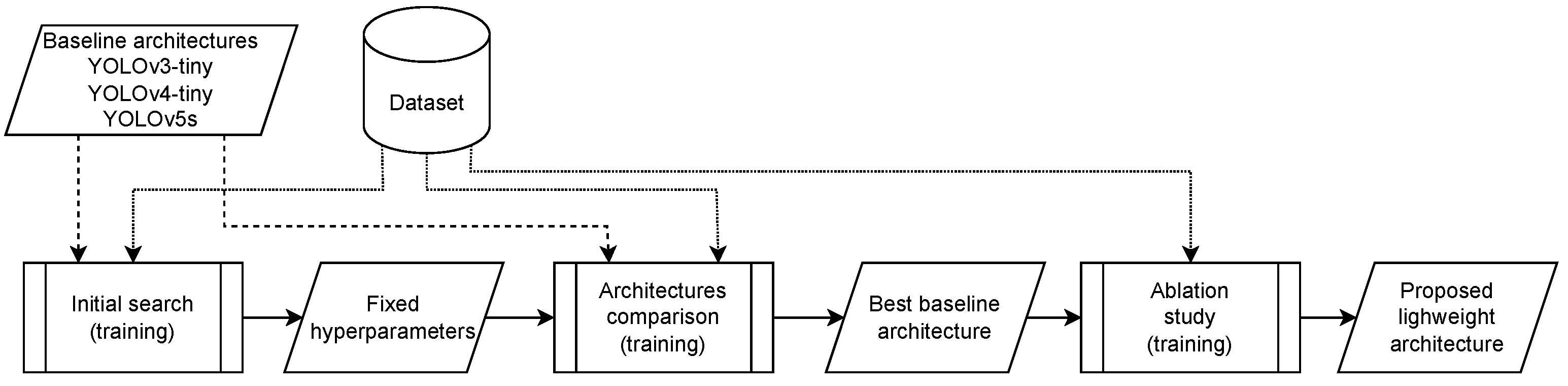

2.3. General Workflow

2.4. Apple Detection with Neural Networks

2.4.1. Baseline Neural Network YOLOv3-Tiny

2.4.2. Baseline Neural Network YOLOv4-Tiny

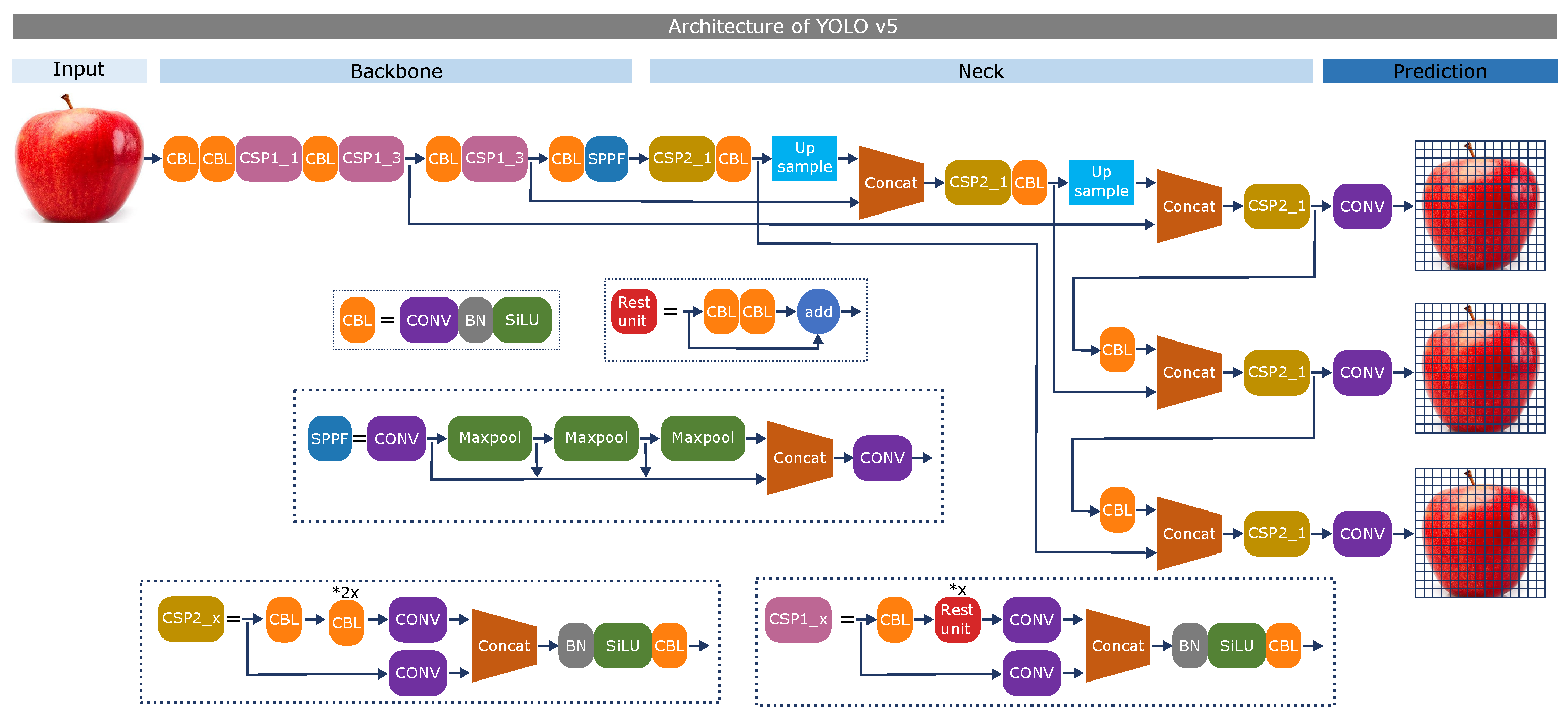

2.4.3. Baseline Neural Network YOLOv5s

2.5. Evaluation Metrics

2.6. Design of the Experiment

2.6.1. Initial Hyperparameter Search

2.6.2. Baseline Architectures Comparison

2.6.3. Ablation Study of the Best Baseline Architecture

2.7. Implementation

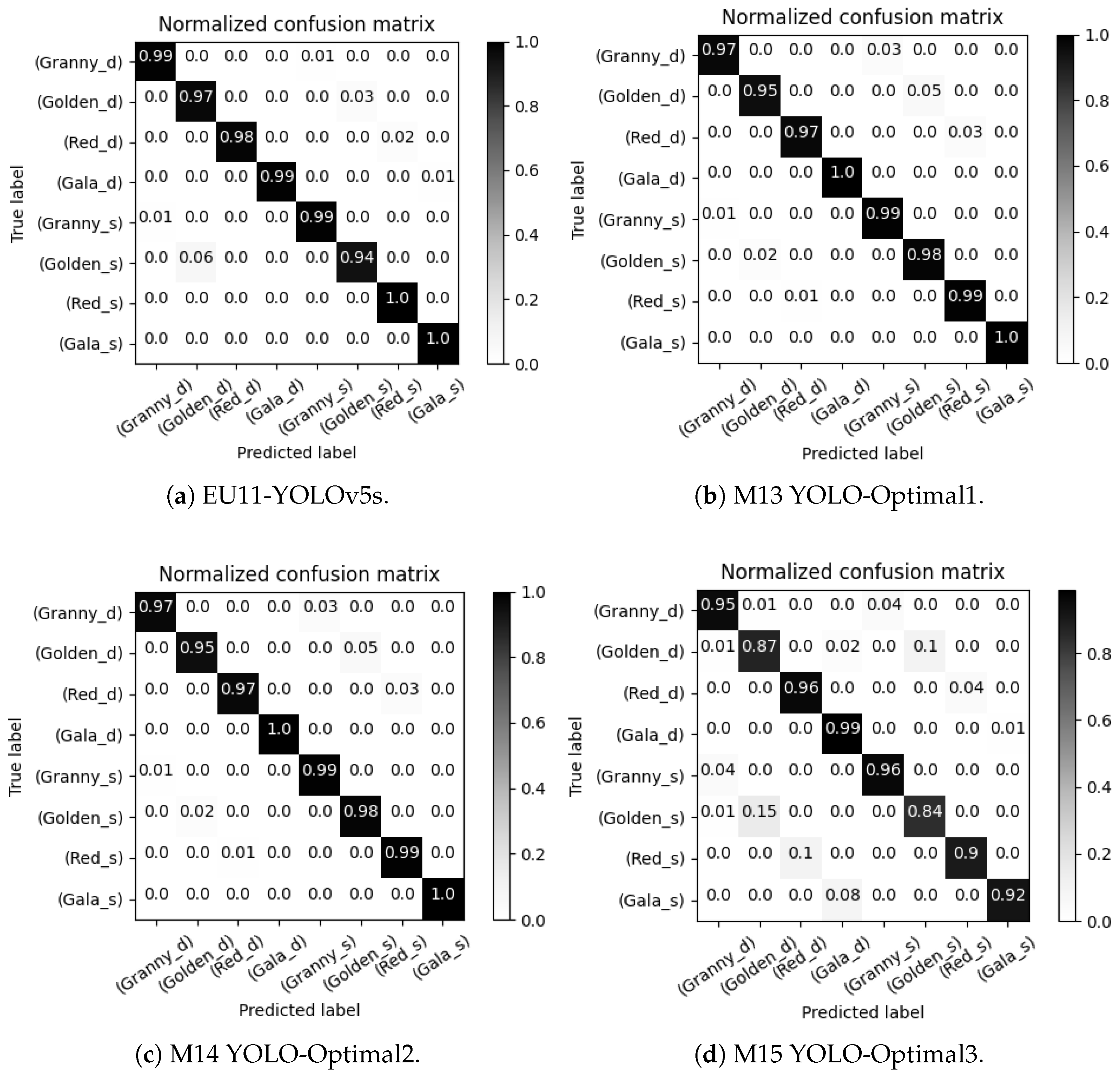

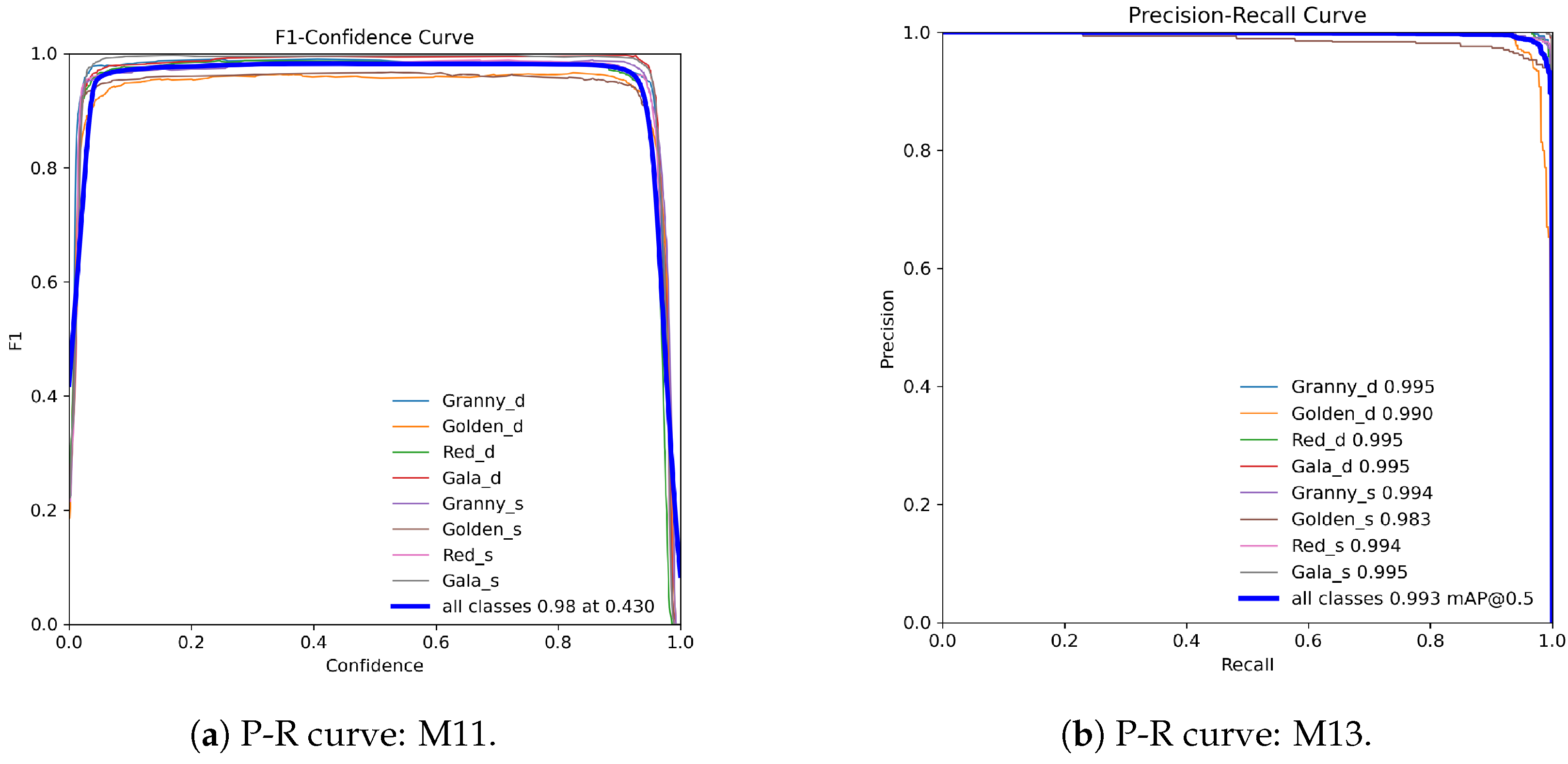

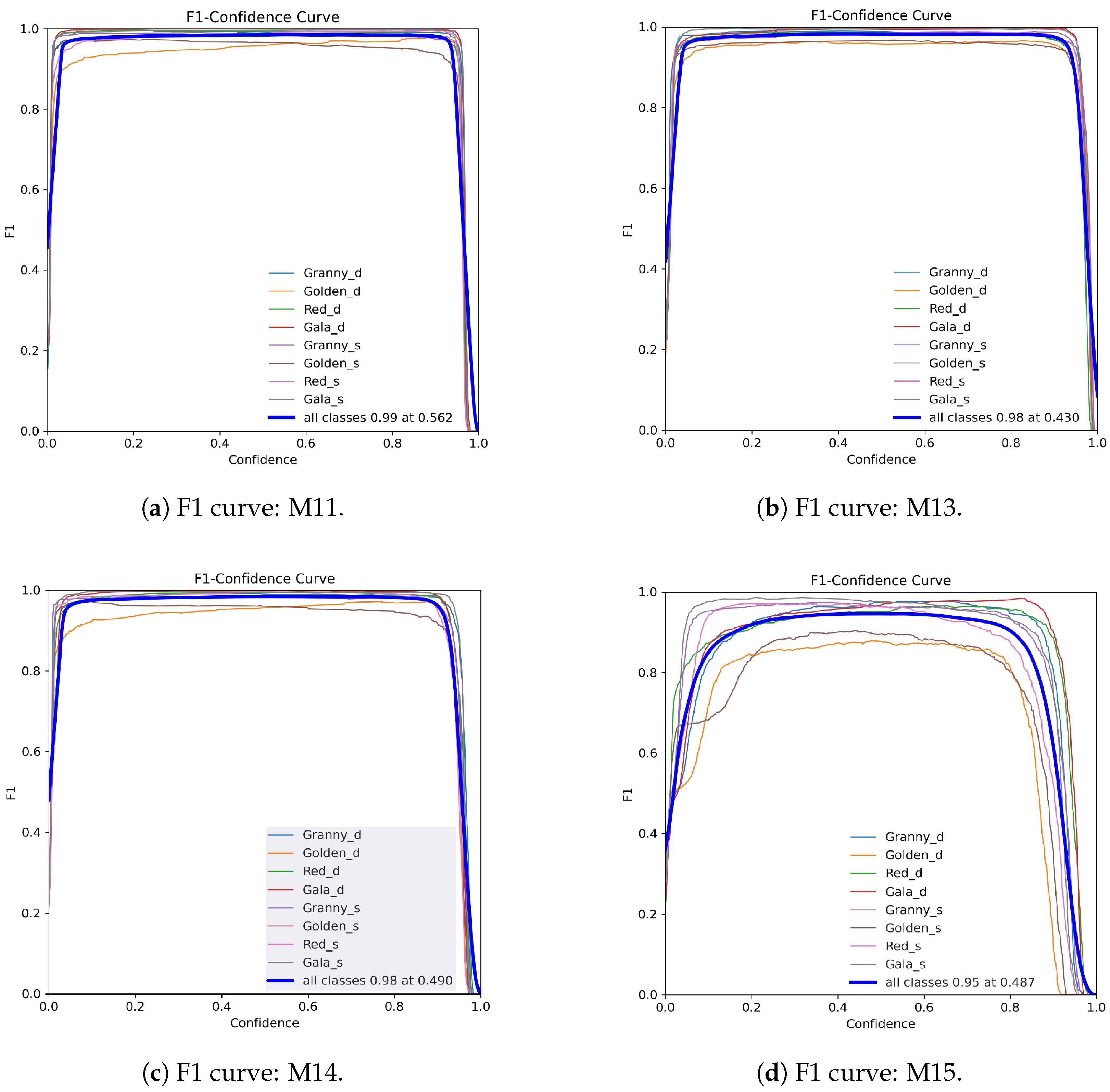

3. Results

On the Proposed Lightweight YOLOv5

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Hyperparameter | Value |
|---|---|---|
| 1 | Image size | 416 × 416 |
| 2 | Image channels | 3 |
| 3 | Random rotation angle | 0 |
| 4 | Random hue | 0.1 |
| 5 | Random saturation | 1.5 |
| 6 | Random exposure | 1.5 |
| 7 | Optimizer | SGD |
| 8 | Batch size | 64 |

| ID | Learning Rate | Momentum | Decay |
|---|---|---|---|
| HC-1 | 0.000001 | 0.5 | 0.00005 |
| HC-2 | 0.00001 | 0.75 | 0.0005 |
| HC-3 | 0.0001 | 0.9 | 0.005 |
| HC-4 | 0.01 | 0.95 | 0.05 |
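
As a minimal sketch, the fixed settings and the four searched configurations (HC-1 to HC-4) tabulated above could be held in a small Python structure, so that each training run reads its settings from one merged dictionary. The names `FIXED`, `HyperConfig`, `HYPER_CONFIGS`, and `full_config` are illustrative assumptions and are not taken from the original implementation; only the numeric values come from the tables.

```python
from dataclasses import dataclass, asdict

# Settings shared by every run (values copied from the hyperparameter table).
FIXED = {
    "image_size": (416, 416),
    "channels": 3,
    "rotation_angle": 0,
    "hue": 0.1,
    "saturation": 1.5,
    "exposure": 1.5,
    "optimizer": "SGD",
    "batch_size": 64,
}


@dataclass(frozen=True)
class HyperConfig:
    """One row of the learning rate / momentum / decay table."""
    learning_rate: float
    momentum: float
    decay: float


# The four searched configurations, keyed by their table ID.
HYPER_CONFIGS = {
    "HC-1": HyperConfig(1e-6, 0.5, 5e-5),
    "HC-2": HyperConfig(1e-5, 0.75, 5e-4),
    "HC-3": HyperConfig(1e-4, 0.9, 5e-3),
    "HC-4": HyperConfig(1e-2, 0.95, 5e-2),
}


def full_config(hc_id: str) -> dict:
    """Merge the fixed settings with one searched configuration."""
    return {**FIXED, **asdict(HYPER_CONFIGS[hc_id])}
```

Calling `full_config("HC-3")` returns the eight fixed settings plus the learning rate, momentum, and decay of HC-3. Keeping the searched values in a frozen dataclass is only one possible design; a plain dictionary would work equally well.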

| ID | Architecture | Hyperparameters | T.M. |
|---|---|---|---|
| EU1–EU3 | YOLOv3-Tiny | HC-1 to HC-3 | T.L. |
| EU4 | YOLOv3-Tiny | HC-4 | T.S. |
| EU5–EU7 | YOLOv4-Tiny | HC-1 to HC-3 | T.L. |
| EU8 | YOLOv4-Tiny | HC-4 | T.S. |
| EU9–EU11 | YOLOv5s | HC-1 to HC-3 | T.L. |
| EU12 | YOLOv5s | HC-4 | T.S. |
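
The design above pairs each of the three baseline architectures with the four hyperparameter configurations, giving twelve experimental units. The sketch below enumerates them in Python. It assumes that, within each architecture, the units are numbered in the same order as HC-1 to HC-4 (for example, EU2 uses HC-2); the table gives only ranges, so this ordering is an assumption. The function names are hypothetical, and the T.M. values are carried over verbatim from the table.

```python
ARCHITECTURES = ["YOLOv3-Tiny", "YOLOv4-Tiny", "YOLOv5s"]
HC_IDS = ["HC-1", "HC-2", "HC-3", "HC-4"]


def training_mode(hc_id: str) -> str:
    """Return the T.M. label: HC-4 rows are T.S., all other rows are T.L."""
    return "T.S." if hc_id == "HC-4" else "T.L."


def experimental_units() -> list:
    """Enumerate EU1..EU12 as architecture x hyperparameter pairs.

    Assumes units are numbered architecture by architecture, following
    the HC-1..HC-4 order inside each architecture.
    """
    units = []
    for a_idx, arch in enumerate(ARCHITECTURES):
        for h_idx, hc_id in enumerate(HC_IDS):
            number = a_idx * len(HC_IDS) + h_idx + 1
            units.append(
                {
                    "id": f"EU{number}",
                    "architecture": arch,
                    "hyperparameters": hc_id,
                    "mode": training_mode(hc_id),
                }
            )
    return units


if __name__ == "__main__":
    for unit in experimental_units():
        print(unit)
```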

| ID | Architecture | Modification |
|---|---|---|
| M13 | Yolo-Optimal1 | Removal of one detection scale (only two) |
| M14 | Yolo-Optimal2 | Use of a single detection scale |
| M15 | Yolo-Optimal3 | Single detection scale and reduced depth |

| ID | P | R | mAP50 | T.P. | AVG-FPS |
|---|---|---|---|---|---|
| EU1 | 0.95 | 0.97 | 0.993 | 49.2 | |
| EU2 | 0.80 | 0.92 | 0.944 | 48.3 | |
| EU3 | 0.96 | 0.98 | 0.994 | 45.9 | |
| EU4 | 0.94 | 0.96 | 0.988 | 47.4 | |
| EU5 | 0.98 | 0.98 | 0.991 | 46.4 | |
| EU6 | 0.92 | 0.97 | 0.983 | 45.8 | |
| EU7 | 0.98 | 0.99 | 0.994 | 45.2 | |
| EU8 | 0.96 | 0.97 | 0.989 | 43.6 | |
| EU9 | 0.87 | 0.85 | 0.922 | 59.4 | |
| EU10 | 0.56 | 0.95 | 0.710 | 60.4 | |
| EU11 | 0.99 | 0.99 | 0.998 | 63.2 | |
| EU12 | 0.88 | 0.90 | 0.970 | 62.5 | |
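
One way to read the results above is to pick, for each baseline architecture, the experimental unit with the highest mAP50. The sketch below does this in Python using only the P, R, and mAP50 columns. It also computes an F1 score from P and R; F1 is not reported in the table and is included here only as a derived convenience. The grouping of EU1–EU4, EU5–EU8, and EU9–EU12 by architecture follows the experiment design table, and all function names are hypothetical.

```python
# (precision, recall, mAP50) per experimental unit, copied from the table.
RESULTS = {
    "EU1": (0.95, 0.97, 0.993), "EU2": (0.80, 0.92, 0.944),
    "EU3": (0.96, 0.98, 0.994), "EU4": (0.94, 0.96, 0.988),
    "EU5": (0.98, 0.98, 0.991), "EU6": (0.92, 0.97, 0.983),
    "EU7": (0.98, 0.99, 0.994), "EU8": (0.96, 0.97, 0.989),
    "EU9": (0.87, 0.85, 0.922), "EU10": (0.56, 0.95, 0.710),
    "EU11": (0.99, 0.99, 0.998), "EU12": (0.88, 0.90, 0.970),
}


def architecture_of(eu_id: str) -> str:
    """Map an EU identifier to its architecture (per the design table)."""
    number = int(eu_id[2:])
    if number <= 4:
        return "YOLOv3-Tiny"
    if number <= 8:
        return "YOLOv4-Tiny"
    return "YOLOv5s"


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (derived, not in the table)."""
    total = precision + recall
    return 2 * precision * recall / total if total > 0 else 0.0


def best_per_architecture(results: dict) -> dict:
    """Return the EU with the highest mAP50 for each architecture."""
    best = {}
    for eu_id, (_, _, map50) in results.items():
        arch = architecture_of(eu_id)
        if arch not in best or map50 > results[best[arch]][2]:
            best[arch] = eu_id
    return best


if __name__ == "__main__":
    for arch, eu_id in best_per_architecture(RESULTS).items():
        p, r, map50 = RESULTS[eu_id]
        print(arch, eu_id, f"mAP50={map50:.3f}", f"F1={f1_score(p, r):.3f}")
```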

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Olguín-Rojas, J.C.; Vasquez, J.I.; López-Canteñs, G.d.J.; Herrera-Lozada, J.C.; Mota-Delfin, C. A Lightweight YOLO-Based Architecture for Apple Detection on Embedded Systems. Agriculture 2025, 15, 838. https://doi.org/10.3390/agriculture15080838
