Abstract

The accurate detection of citrus surface defects is essential for automated citrus sorting and for improving the commercialization of the citrus industry. However, previous studies have focused only on single-modal defect detection using either visible-light (RGB) or near-infrared (NIR) images, without considering feature fusion between the two modalities. This study proposed an RGB-NIR multimodal fusion method that extracts and integrates key features from both modalities to improve defect detection performance. First, an RGB-NIR multimodal dataset containing four types of citrus surface defects (cankers, pests, melanoses, and cracks) was constructed. Second, a Multimodal Compound Domain Attention Fusion (MCDAF) module was developed for multimodal channel fusion. Finally, MCDAF was integrated into the feature extraction network of the Real-Time DEtection TRansformer (RT-DETR). The experimental results demonstrated that RT-DETR-MCDAF achieved Precision, Recall, F1-score, mAP@0.5, and mAP@0.5:0.95 values of 0.914, 0.919, 0.90, 0.937, and 0.598, respectively. Compared with RT-DETR-RGB&NIR, a model that used simple channel concatenation fusion, RT-DETR-MCDAF improved these five metrics by 1.3%, 1.7%, 1%, 1.5%, and 1.7%, respectively. Overall, the proposed model outperformed traditional channel fusion methods and state-of-the-art single-modal models, providing innovative insights for commercial citrus sorting.

1. Introduction

Citrus plants are cultivated worldwide and are of significant economic importance [1], ranking as the largest fruit crop in China. According to the Food and Agriculture Organization of the United Nations, global citrus production reached approximately 140 million tons in 2022, with China being one of the leading producers. According to the China Rural Statistical Yearbook, by 2022 the citrus planting area in China had exceeded 45 million acres, with an annual consumption of nearly 60 million tons and a production value of nearly 200 billion yuan. The commercial value of citrus is largely determined by the quality of its surface: fruits with defects not only command lower market prices but can also compromise the health of other citrus fruits during storage and transportation, leading to substantial economic losses [2]. Therefore, accurate detection and classification of citrus surface defects is crucial for enhancing market competitiveness and maximizing profitability across the supply chain.

In recent years, various methods and technologies have been developed for citrus defect detection, with image processing being the most widely used approach for extracting color and texture features. Lopez found that defect characteristics can be identified in different color spaces, and defect regions can be segmented using techniques such as Sobel operators [3]. Bhargava and Bansal [4] applied color space transformation and a gray-level co-occurrence matrix (GLCM) to extract defect features in citrus and three other fruits, classifying them using multiple neural network classifiers. Similarly, López-García et al. [5] utilized Principal Component Analysis (PCA) to extract surface color and texture information from citrus, further enhancing defect detection accuracy.

Traditional image processing methods rely on manual feature extraction and struggle to detect and locate multiple defects within a single image, which limits their effectiveness. Models trained on manually extracted features also often exhibit poor generalization and robustness. Deep learning techniques, by contrast, have been widely adopted for object detection and classification [6]. Cai et al. [7] proposed a citrus surface defect segmentation method based on the SegFormer model to detect citrus cankers, achieving an mIoU of 89.33% with real-time detection capability. Xu et al. [8] developed an improved U-Net segmentation network incorporating superpixels and multiscale channel cross-fusion transformers to detect defects such as canker and pest scars. Although segmentation methods can accurately delineate defect shapes, they require additional computational resources and extensive pixel-level annotations [9], so object detection methods are more practical for citrus defect detection. Y. Chen et al. [10] implemented a YOLO detection model with MobileNet-V2 as the backbone to enable real-time citrus defect detection on a commercial grading machine, classifying fruit into categories such as healthy, mechanically damaged, and skin-damaged. Similarly, Da Costa et al. [11] used the ResNet deep residual network to classify tomato fruits on a commercial grading machine and achieved an mAP of 94.6%. These approaches demonstrate the potential of deep learning for defect detection; however, they primarily classify the entire fruit rather than pinpointing defect locations or identifying multiple defect types simultaneously. Hu et al. [12] exploited the fluorescence response of hidden citrus defects under ultraviolet light to collect images and proposed an improved YOLOv5 algorithm, achieving an mAP of 95.5% while reducing the average detection time by 22.1 ms per image. However, this method is only applicable to the limited number of citrus varieties with strong fluorescence reactions, which restricts its generalizability.

Jia et al. [13] developed YOLOv7-CACT to detect citrus brown spots, sunburns, and aphid diseases by integrating a coordinate attention (CA) module into the backbone network of YOLOv7 and introducing a contextual transformer (CT) module into the head network. This approach achieved a maximum mAP of 91.1% and satisfied the sorting requirement of 10 fruits/s for citrus production lines; however, this single-modal method addressed only these three defect types and did not cover others. Feng et al. [2] proposed a real-time citrus surface defect detection method based on an improved YOLOX model for identifying defects such as scars, deformities, and disease spots. Their method achieved the highest F1-score (90.6%) among the compared object detection models, with a real-time inference speed of 64.2 FPS. However, it relies on ultra-low-resolution 320 × 320 input images, which may leave small defects unrecognizable [2]. Lu et al. [14] further improved citrus surface defect detection by enhancing the YOLOv5 model and incorporating fruit-shape indicators such as symmetry and roundness, exploring downstream applications in citrus quality inspection. However, this method studied only punctate defects in oranges and did not address defects of varying shapes and sizes, such as pests and melanoses. Thus far, the deep learning-based detection methods for citrus and other fruits discussed above have explored only RGB images.

In recent years, near-infrared (NIR) imaging has been increasingly applied to fruit defect detection. S. Fan et al. [15] proposed an apple grading machine that combined NIR imaging with a YOLOv5 model for surface defect detection. B. Zhang et al. [16] applied near-infrared spectroscopy to identify early apple rot and determine the optimal detection wavelengths. Blasco et al. [17] combined NIR and ultraviolet imaging on a commercial sorting machine and applied classification algorithms to distinguish between anthracnose and mold diseases, achieving classification accuracies of 86% and 94%, respectively. Abdelsalam and Sayed [18] applied adaptive thresholding to NIR and RGB citrus images, processing seven color components (NIR, gray (Y), red (R), green (G), saturation (sat), Cr, and Cb) and classifying defects from the resulting saturation and NIR images. Although these NIR-based and combined NIR-RGB methods provide valuable references for surface defect detection in citrus and other fruits, they have notable limitations. First, NIR images alone lack color information, making it difficult to differentiate defects such as pest damage and canker, which look similar in NIR images. Second, even when RGB images are used, these methods process NIR and RGB images separately and fail to extract and integrate higher-level semantic features. As a result, these models generalize poorly and lack robustness, while their image processing pipelines remain overly complex.

Research on citrus fruit defect detection using RGB or NIR images has made significant progress. However, relying on a single sensor limits the amount of information captured in each image. RGB images provide rich color contrast between healthy and defective surfaces, whereas NIR images can suppress fine surface textures, smoothing the appearance of healthy epidermis and enhancing defect boundaries. Multimodal data fusion, in which complementary information from different modalities is combined to improve detection accuracy, is a promising way to overcome these limitations. Previous studies on RGB-NIR fusion have demonstrated promising results in agricultural applications, including field fruit detection, plant leaf disease identification, and strawberry defect detection [19,20,21,22,23].

This study aimed to develop an effective solution for detecting common surface defects in citrus by focusing on the following key research objectives:

- This study proposed the application of an RGB-NIR multimodal fusion method for citrus defect detection and the development of a multimodal dataset utilizing coaxial RGB and NIR imaging.

- This study developed a composite domain channel fusion model, namely the Real-Time DEtection TRansformer—Multimodal Compound Domain Attention Fusion (RT-DETR-MCDAF), based on vision transformers. It integrates the Multimodal Compound Domain Attention Fusion (MCDAF) module to extract and fuse features from both time and frequency domains for RGB and NIR images.

- This study conducted extensive comparative tests of the RT-DETR-MCDAF model to validate the effectiveness and superiority of the proposed multimodal approach.

The remainder of this paper is organized as follows. Section 2 describes the materials, including the data collection location and methodology, dataset size, annotation examples, and data augmentation techniques, and Section 3 presents the proposed methods. Section 4 presents the experimental findings and evaluation. Section 5 addresses the study’s limitations and future research directions. Finally, Section 6 summarizes the study’s key contributions.

2. Materials

This section first introduces the collection of citrus samples, followed by the acquisition of RGB and NIR images. Subsequently, the annotation of citrus defects is described, and finally, the data augmentation methods are presented.

2.1. Image Acquisition

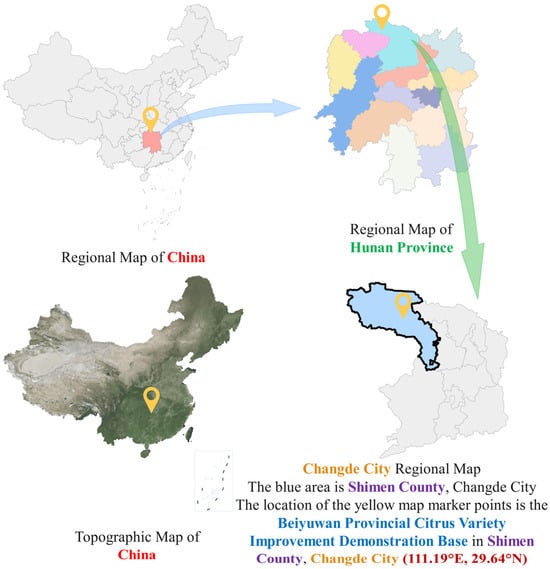

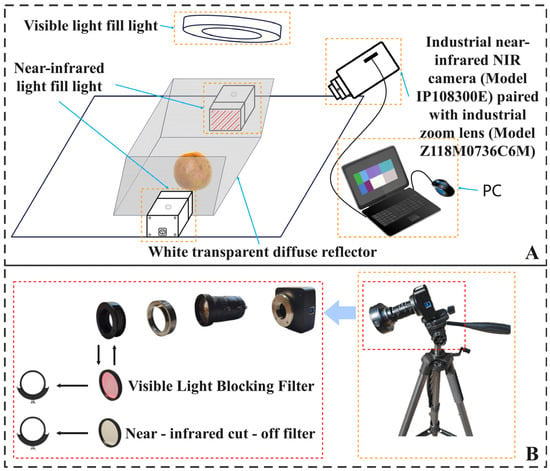

As shown in Figure 1, the citrus samples used in this study were collected from the Beiyuwan Provincial Citrus Variety Improvement Demonstration Base in Shimen County, Changde City, Hunan Province, China (111.19° E, 29.64° N), in November 2024. Citrus fruits were harvested from the fields on sunny days and then transported indoors for imaging. As illustrated in Figure 2A, the fruits were placed on an indoor flat surface, and images were captured using a near-infrared industrial camera (IP108300D model, TQ-Tech, Hangzhou, China). A diffuse shadowless light source and 850 nm infrared light were used for illumination, and other light sources were shielded during image acquisition. The camera was equipped with an industrial zoom lens (Z118M0736C6M model, Tuqian Technology, Hangzhou, China) with a focal length of 7–36 mm and an aperture of F2.8 (Figure 2B). To capture paired RGB and NIR images of the same scene, a manual filter-switching method was employed, with a dedicated threaded interface fixing a filter drawer in front of the lens. The system used an infrared cutoff filter (SPF650 model, Weidon, Shenzhen, China) that passed wavelengths below 700 nm and a visible-light cutoff filter (SPF750 model, Weidon, Shenzhen, China) that passed wavelengths above 750 nm. The images were saved in JPG format at a resolution of 1920 × 1080. A total of 1680 images (840 sets) were captured, covering common citrus surface defects such as cankers, pests, melanoses, and cracks.

Figure 1.

Location of Image Data Collection.

Figure 2.

Image Acquisition Method and Collection Device. (A) Sample collection method; (B) Exploded view of the camera and lens group.

2.2. Image Annotation

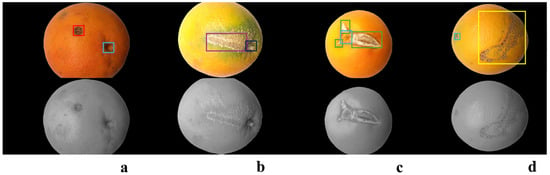

A researcher labeled citrus defects in the RGB images with the X-Anylabeling [24] annotation tool, drawing minimum bounding rectangles for four defect types: cankers, pests, melanoses, and cracks. The annotations were saved in TXT format. To prevent the model from misidentifying stems and navels as defects, stems and navels were annotated as separate classes. For visualization, the X-Anylabeling bounding boxes were redrawn as rectangles of different colors in Figure 3, which shows the captured visible-light and NIR images with annotated examples: red for canker, purple for pests, green for cracks, yellow for melanose, blue for the navel, and black for the stem.
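The text states only that the annotations were saved as TXT files. If those files follow the widely used YOLO convention (one `class cx cy w h` line per box, normalized by the image size), which is an assumption here, a pixel-space rectangle on a 1920 × 1080 image maps to a label line as sketched below. The class-ID order in `CLASS_IDS` is hypothetical.

```python
# Hypothetical class-ID mapping; the paper does not state its label order.
CLASS_IDS = {"canker": 0, "pest": 1, "melanose": 2, "crack": 3, "navel": 4, "stem": 5}


def to_yolo_line(label, x_min, y_min, x_max, y_max, img_w=1920, img_h=1080):
    """Convert a pixel-space bounding rectangle into a normalized YOLO-style TXT line."""
    cx = (x_min + x_max) / 2 / img_w     # box centre x, as a fraction of image width
    cy = (y_min + y_max) / 2 / img_h     # box centre y, as a fraction of image height
    w = (x_max - x_min) / img_w          # box width, normalized
    h = (y_max - y_min) / img_h          # box height, normalized
    return f"{CLASS_IDS[label]} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


print(to_yolo_line("canker", 960, 540, 1056, 648))
# 0 0.525000 0.550000 0.050000 0.100000
```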

Figure 3.

Examples of RGB + NIR images and annotations in the dataset. Red rectangular box (a): example of canker annotation; purple rectangular box (b): example of pest annotation; green rectangular box (c): example of crack annotation; yellow rectangular box (d): example of melanose annotation; blue rectangular box (a,c,d): example of navel annotation; and black rectangular box (b): example of peduncle annotation.

2.3. Data Augmentation Methods

To mitigate overfitting on the small dataset and enhance the generalization ability of the detection model, data augmentation was applied to both the visible-light images and their paired near-infrared images. The augmentations included geometric transformations, namely image translation (ratio: 0.3, probability: 0.5) and flipping (probability: 0.5), and pixel-level transformations, namely brightness adjustment (factor range: 0.35 to 1), salt-and-pepper noise, and Gaussian noise (both with an addition ratio of 0.5). Because the focal length of the camera was fixed and each citrus fruit needed to fit fully within the field of view, cropping and scaling were excluded. As a result, 2522 paired RGB and NIR images (1261 sets) were generated and randomly split into training and testing sets at a 7:3 ratio.
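Because every RGB image has a paired NIR image, each geometric transform must be applied identically to both images so that the pair stays pixel-aligned. The NumPy sketch below illustrates that constraint under stated assumptions: only horizontal flipping is shown, translation zero-fills the uncovered border, the same brightness factor is applied to both modalities, Gaussian noise is added with probability 0.5 (one reading of the "0.5 addition ratio") using an arbitrary sigma, and salt-and-pepper noise is omitted for brevity. The function names are hypothetical.

```python
import numpy as np


def shift(img, dy, dx):
    """Translate an image by (dy, dx) pixels, zero-filling the uncovered border."""
    out = np.zeros_like(img)
    h, w = img.shape[:2]
    out[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)] = \
        img[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    return out


def to_uint8(a):
    """Clip to the valid 8-bit range and convert back to uint8."""
    return np.clip(a, 0, 255).astype(np.uint8)


def augment_pair(rgb, nir, rng, noise_sigma=10.0):
    """Apply the same random geometric and brightness transforms to an aligned pair.

    rgb: (H, W, 3) uint8; nir: (H, W) uint8. Gaussian noise is drawn independently per image.
    """
    rgb, nir = rgb.astype(np.float32), nir.astype(np.float32)
    h, w = nir.shape
    if rng.random() < 0.5:                                # horizontal flip, p = 0.5
        rgb, nir = rgb[:, ::-1], nir[:, ::-1]
    if rng.random() < 0.5:                                # translation, p = 0.5, up to 30% of each side
        dy = int(rng.uniform(-0.3, 0.3) * h)
        dx = int(rng.uniform(-0.3, 0.3) * w)
        rgb, nir = shift(rgb, dy, dx), shift(nir, dy, dx)
    factor = rng.uniform(0.35, 1.0)                       # brightness factor in [0.35, 1]
    rgb, nir = rgb * factor, nir * factor
    if noise_sigma > 0 and rng.random() < 0.5:            # Gaussian noise, p = 0.5 (assumed meaning)
        rgb = rgb + rng.normal(0.0, noise_sigma, rgb.shape)
        nir = nir + rng.normal(0.0, noise_sigma, nir.shape)
    return to_uint8(rgb), to_uint8(nir)


rng = np.random.default_rng(42)
rgb = rng.integers(0, 256, size=(1080, 1920, 3), dtype=np.uint8)
nir = rng.integers(0, 256, size=(1080, 1920), dtype=np.uint8)
rgb_aug, nir_aug = augment_pair(rgb, nir, rng)
print(rgb_aug.shape, nir_aug.shape)   # (1080, 1920, 3) (1080, 1920)
```

Drawing each random decision once and applying it to both arrays is what keeps the RGB and NIR annotations valid for the same bounding boxes after augmentation.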

3. Methods

3.1. Overview of Multimodal Models

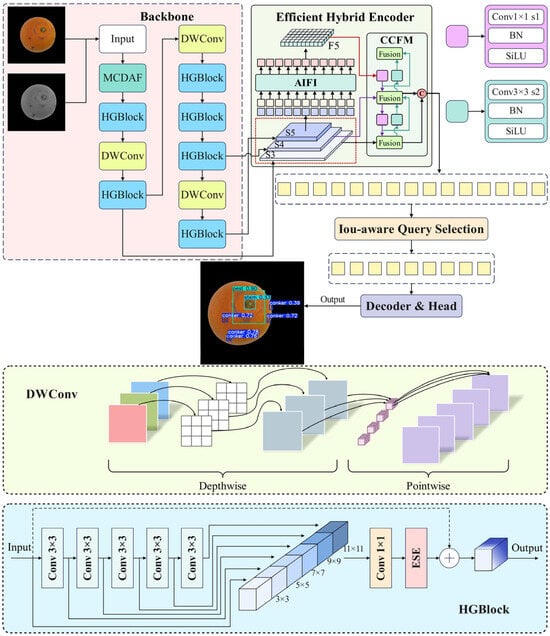

Convolutional Neural Networks (CNNs) have demonstrated exceptional performance in image processing by using local receptive fields to capture neighboring features. However, their reliance on convolution and pooling operations often limits their ability to model global, long-range dependencies. In contrast, transformer-based architectures such as the Vision Transformer (ViT) process an image as a sequence of patch tokens and use stacked self-attention layers to capture long-range dependencies and high-level semantic features. This makes them particularly suitable for detecting defects such as pests and melanoses, which vary in morphology and size. For object detection, RT-DETR provides a robust end-to-end Detection Transformer (DETR) framework that combines CNN and transformer architectures [25]. It consists of a CNN backbone, a transformer-based hybrid encoder, and a transformer decoder with auxiliary prediction heads. The CNN backbone extracts feature maps with downsampling factors of 8, 16, and 32, and the hybrid encoder combines Attention-based Intra-scale Feature Interaction (AIFI) and CNN-based Cross-scale Feature Fusion (CCFF) modules to enable both intra-scale interaction and cross-scale feature fusion. In the decoding stage, uncertainty-minimal query selection improves detection accuracy by initializing the decoder queries from encoder features with both high Intersection over Union (IoU) and high classification confidence.
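As a quick check on the backbone description, the sketch below computes the side length of each feature map produced at downsampling factors of 8, 16, and 32 for a 640 × 640 input (the training resolution given in Section 3.3). It assumes a standard RT-DETR backbone operating directly on that input; the function name is illustrative.

```python
def pyramid_sizes(input_size=640, strides=(8, 16, 32)):
    """Side length of each backbone feature map for a square input."""
    return {s: input_size // s for s in strides}


sizes = pyramid_sizes()
print(sizes)                                  # {8: 80, 16: 40, 32: 20}
print(sum(n * n for n in sizes.values()))     # 8400 spatial positions across the three maps
```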

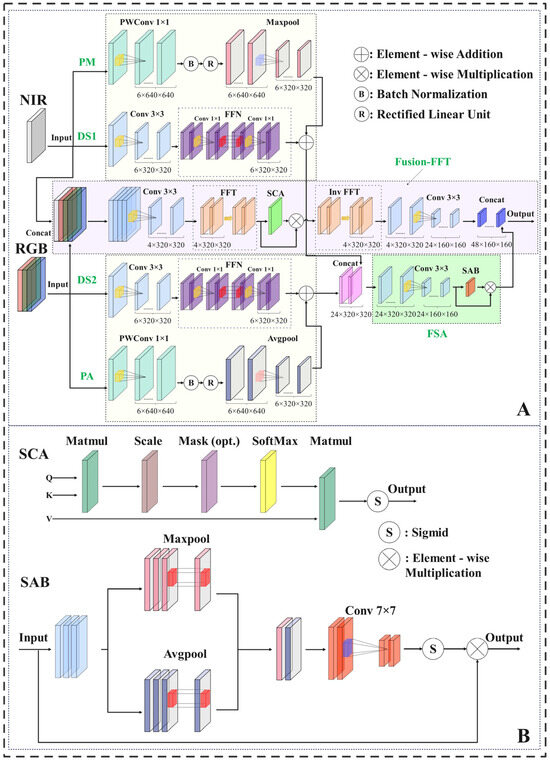

This study aimed to enhance citrus defect detection by integrating visible-light and NIR information, leveraging the strengths of both modalities. To achieve this, we proposed RT-DETR-MCDAF, a multimodal citrus defect detection network based on RT-DETR that efficiently fuses multimodal features while exploiting the global modeling capability of the transformer for more precise defect detection. As illustrated in Figure 4, we introduced a Multimodal Compound Domain Attention Fusion (MCDAF) module that uses attention mechanisms to integrate RGB and NIR image features effectively. The fused feature map was then used as the input to RT-DETR, enabling more accurate and robust defect detection.

Figure 4.

RT-DETR-MCDAF Model Architecture Diagram.

3.2. MCDAF Fusion Structure

Traditional modal fusion methods (Figure 5) directly concatenate visible-light and NIR images along the channel dimension. As illustrated in Figure 3, compared with RGB images, NIR images enhance defect edge visibility, smooth surface textures, and reduce color interference. However, NIR images also have limitations: they poorly represent color variations within certain defects and lack the color information needed to distinguish defects from normal citrus surfaces. In severe cases, the contrast between certain defects and healthy citrus skin may disappear entirely, highlighting the limitations of relying on RGB or NIR imaging alone. To address these limitations, features extracted from spatial- or frequency-domain images have been employed in agricultural product defect classification, offering a more robust approach to defect detection [26]. The proposed MCDAF fusion module (Figure 6) established a data flow that integrated images and extracted features in both the time and frequency domains. In the frequency domain, the Fusion-FFT branch fused the modalities at the channel level before feature extraction. In the time domain, features were first extracted from each modality and then fused along the channel dimension, ensuring comprehensive multimodal feature integration (Algorithm 1).

Algorithm 1: Multimodal Compound Domain Attention Fusion (MCDAF)

Input: Multimodal image input X with NIR and RGB channels; number of input channels c1; number of feature channels c2

Output: Fused feature representation of X

1. Initialize NIR_channels ← c1/4
2. Initialize RGB_channels ← c1 − NIR_channels
3. Initialize scale_factor ← (c2/c1)/2
4. NIR_features1 ← PWConv (NIR_channels, NIR_channels × scale_factor, kernel = 1)
5. NIR_features1 ← BatchNorm (NIR_features1)
6. NIR_features1 ← ReLU (NIR_features1)
7. NIR_features1 ← MaxPool (NIR_features1, kernel = 2, stride = 2)
8. NIR_features2 ← Conv (NIR_channels, NIR_channels × scale_factor, kernel = 3)
9. NIR_features2 ← FFN (NIR_features2)
10. NIR_features ← NIR_features1 + NIR_features2
11. RGB_features1 ← PWConv (RGB_channels, RGB_channels × scale_factor, kernel = 1)
12. RGB_features1 ← BatchNorm (RGB_features1)
13. RGB_features1 ← ReLU (RGB_features1)
14. RGB_features1 ← AvgPool (RGB_features1, kernel = 2, stride = 2)
15. RGB_features2 ← Conv (RGB_channels, RGB_channels × scale_factor, kernel = 3)
16. RGB_features2 ← FFN (RGB_features2)
17. RGB_features ← RGB_features1 + RGB_features2
18. FFT_features ← Conv (X, c1, kernel = 3, stride = 2)
19. FFT_features ← FFT (FFT_features)
20. FFT_features ← SCA (FFT_features)
21. FFT_features ← InverseFFT (FFT_features)
22. FFT_features ← Conv (FFT_features, c1 × scale_factor, kernel = 3, stride = 2)
23. Concatenated_features ← Concat (RGB_features, NIR_features)
24. Fusion_features ← Conv (Concatenated_features, c1 × scale_factor, kernel = 3, stride = 2)
25. SAB_features ← SAB (Fusion_features)
26. Enhanced_features ← SAB_features × Fusion_features
27. Output ← Concat (FFT_features, Enhanced_features)
28. if Output is valid then
29. return Output
30. else
31. return ErrorMessage (“Fusion Failed”)
32. end if
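The PyTorch sketch below mirrors the data flow of Algorithm 1 and reproduces the tensor shapes stated in Sections 3.2.1 and 3.2.2 (a 4 × 640 × 640 input and a 48 × 160 × 160 output). It is an illustrative approximation, not the authors' implementation. The internals of SCA and SAB are not fully specified, so `FreqGate` and `SpatialGate` are simplified, hypothetical stand-ins (a sigmoid channel gate on the amplitude spectrum, and CBAM-style spatial attention, respectively). The FFN width, BatchNorm and ReLU after every convolution, stride 2 for the DS convolutions, and the RGB-then-NIR channel order are also assumptions, and the validity check in steps 28 to 32 is omitted.

```python
import torch
import torch.nn as nn


def conv_bn_relu(c_in, c_out, k=3, s=1):
    # Conv + BatchNorm + ReLU; whether every "Conv" in Algorithm 1 includes BN/ReLU is assumed.
    return nn.Sequential(nn.Conv2d(c_in, c_out, k, s, k // 2, bias=False),
                         nn.BatchNorm2d(c_out), nn.ReLU(inplace=True))


class DS(nn.Module):
    """Steps 8-9 / 15-16: stride-2 3x3 conv, then a pointwise FFN (2x hidden width is assumed)."""
    def __init__(self, c_in, c_out):
        super().__init__()
        self.conv = conv_bn_relu(c_in, c_out, 3, 2)
        self.ffn = nn.Sequential(nn.Conv2d(c_out, 2 * c_out, 1), nn.GELU(),
                                 nn.Conv2d(2 * c_out, c_out, 1))

    def forward(self, x):
        return self.ffn(self.conv(x))


class FreqGate(nn.Module):
    """Hypothetical stand-in for SCA (steps 19-21): FFT, sigmoid channel gate from the
    amplitude spectrum, residual connection, inverse FFT."""
    def __init__(self, c):
        super().__init__()
        self.fc = nn.Conv2d(c, c, 1)

    def forward(self, x):
        spec = torch.fft.rfft2(x, norm="ortho")
        gate = torch.sigmoid(self.fc(spec.abs().mean(dim=(2, 3), keepdim=True)))
        spec = spec + spec * gate                      # residual keeps the original spectrum
        return torch.fft.irfft2(spec, s=x.shape[-2:], norm="ortho")


class SpatialGate(nn.Module):
    """Hypothetical stand-in for SAB (step 25): CBAM-style spatial attention map in [0, 1]."""
    def __init__(self, k=7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, k, padding=k // 2)

    def forward(self, x):
        pooled = torch.cat([x.mean(1, keepdim=True), x.amax(1, keepdim=True)], dim=1)
        return torch.sigmoid(self.conv(pooled))


class MCDAF(nn.Module):
    def __init__(self, c1=4, c2=48):
        super().__init__()
        self.c_nir = c1 // 4                           # step 1
        self.c_rgb = c1 - self.c_nir                   # step 2
        s = (c2 // c1) // 2                            # step 3: scale factor (6 for 4 -> 48)
        # Time domain, NIR path: PM (pointwise conv + max pool) and DS1.
        self.pm = nn.Sequential(conv_bn_relu(self.c_nir, self.c_nir * s, 1), nn.MaxPool2d(2, 2))
        self.ds1 = DS(self.c_nir, self.c_nir * s)
        # Time domain, RGB path: PA (pointwise conv + average pool) and DS2.
        self.pa = nn.Sequential(conv_bn_relu(self.c_rgb, self.c_rgb * s, 1), nn.AvgPool2d(2, 2))
        self.ds2 = DS(self.c_rgb, self.c_rgb * s)
        self.fuse = conv_bn_relu(c1 * s, c1 * s, 3, 2)  # step 24
        self.sab = SpatialGate()
        # Frequency domain (Fusion-FFT), steps 18-22.
        self.fft_in = conv_bn_relu(c1, c1, 3, 2)
        self.sca = FreqGate(c1)
        self.fft_out = conv_bn_relu(c1, c1 * s, 3, 2)

    def forward(self, x):
        rgb, nir = x[:, :self.c_rgb], x[:, self.c_rgb:]           # assumed order: RGB, then NIR
        nir_feat = self.pm(nir) + self.ds1(nir)                    # step 10
        rgb_feat = self.pa(rgb) + self.ds2(rgb)                    # step 17
        fused = self.fuse(torch.cat([rgb_feat, nir_feat], dim=1))  # steps 23-24
        t = self.sab(fused) * fused                                # steps 25-26
        f = self.fft_out(self.sca(self.fft_in(x)))                 # steps 18-22
        return torch.cat([f, t], dim=1)                            # step 27


model = MCDAF(c1=4, c2=48).eval()
with torch.no_grad():
    out = model(torch.randn(1, 4, 640, 640))
print(out.shape)  # torch.Size([1, 48, 160, 160])
```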

Figure 5.

Traditional channel fusion method.

Figure 6.

MCDAF Fusion Module. (A) Structural diagram of MCDAF; (B) SCA and SAB attention mechanism structure diagram.

3.2.1. MCDAF Frequency Domain Direction

The Fusion-FFT module (Figure 6A) formed the frequency-domain processing pathway. The visible-light and NIR images were first resized to 640 × 640 pixels, with the RGB image containing three channels and the NIR image containing one. These images were concatenated along the channel dimension to form a four-channel fused image, similar to the method shown in Figure 5. However, simple concatenation does not sufficiently strengthen cross-modal feature correlations. To address this, a strided convolution was applied for downsampling, reducing the image dimensions to 320 × 320 pixels. Through local weighted summation by its kernels, this convolution fused the RGB and NIR information, modeling cross-channel correlations and extracting higher-level semantic features while reducing noise. The feature map then underwent a Fast Fourier Transform (FFT) into the frequency domain, where high-frequency components, such as defect edges and texture details, are separated from low-frequency components, such as uniform surface regions. To enhance feature representation, a Self-Attention-driven Channel Attention (SCA) module with a residual structure was introduced. Its self-attention mechanism mapped the query, key, and value from the same sequence block, establishing dependencies between local and global features, and the clearer feature distinctions in the frequency domain improved the effectiveness of self-attention. A Sigmoid-based channel gate at the output activated key features and reduced overfitting, while the residual structure preserved essential frequency details. After frequency-domain processing, the feature map was transformed back into the time domain using the inverse FFT, restoring its original dimensions. A final 3 × 3 convolution then performed additional downsampling, further refining local semantic information and adjusting the feature dimensions to 24 × 160 × 160 for subsequent processing. The frequency-domain transformation can be expressed by Equation (1):

$$F = \mathrm{Conv}_{3\times3}\Big(\mathrm{IFFT}\big(\mathrm{SCA}(\mathrm{FFT}(\mathrm{Conv}_{3\times3}([X_{RGB};\ X_{NIR}])))\big)\Big) \tag{1}$$

where F is the output of the frequency-domain branch, X_RGB and X_NIR are the input RGB and NIR images, both 3 × 3 convolutions use a stride of 2, and ‘;’ represents the Concat operation, applicable to other formulas in this section.
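Two properties the frequency-domain branch relies on can be checked numerically: uniform regions concentrate their energy in the low-frequency (DC) term while sharp edges spread energy to high frequencies, and the inverse FFT restores the original spatial dimensions. The NumPy sketch below uses synthetic arrays as hypothetical stand-ins for a healthy peel patch and a defect boundary, with an arbitrary low-frequency radius of 4; it does not implement SCA itself.

```python
import numpy as np


def high_freq_ratio(img, radius=4):
    """Fraction of spectral energy lying outside a low-frequency disc around DC."""
    spec = np.fft.fftshift(np.fft.fft2(img))          # move the DC term to the centre
    h, w = img.shape
    yy, xx = np.ogrid[:h, :w]
    low = (yy - h // 2) ** 2 + (xx - w // 2) ** 2 <= radius ** 2
    energy = np.abs(spec) ** 2
    return energy[~low].sum() / energy.sum()


uniform = np.full((64, 64), 0.8)        # toy "healthy peel": constant intensity
edge = uniform.copy()
edge[:, 32:] = 0.2                      # toy "defect boundary": a sharp step

print(high_freq_ratio(uniform))         # ~0: all energy sits in the DC term
print(high_freq_ratio(edge))            # clearly above 0: the step spreads energy to high frequencies

# The FFT / inverse FFT round trip restores the original spatial dimensions.
feat = np.random.default_rng(0).random((4, 320, 320))
restored = np.fft.ifft2(np.fft.fft2(feat, axes=(-2, -1)), axes=(-2, -1)).real
print(restored.shape, np.allclose(restored, feat))   # (4, 320, 320) True
```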

3.2.2. MCDAF Time Domain Direction

As illustrated by the yellow-shaded region of Figure 6, two parallel data flows, the PM and PA modules, compressed the features of the NIR and RGB images, respectively, before fusion. In addition, DS downsampling modules, DS1 and DS2, processed the NIR and RGB images in parallel with PM and PA, respectively.

In the PM module, a 1 × 1 pointwise convolution increased the channel dimension, while max pooling downsampled the width and height, yielding a 6 × 320 × 320 feature map. Max pooling preserved edge features, while the expanded channel dimension enhanced the feature mapping capability. The PA module followed a similar structure, using a 1 × 1 pointwise convolution to expand the channel dimension and pooling to reduce the width and height. In the PA module, the channels of the RGB image were expanded sixfold, from 3 to 18, while the spatial dimensions were reduced to 320 × 320. Because RGB images provide a richer color representation of normal citrus surfaces and of defects of varying colors, increasing the channel dimension enhanced color feature interaction across channels, thereby improving feature extraction and representation.

In the pooling layer, the PA module differed from the PM module in using average pooling (AvgPool) rather than max pooling. This choice reflected the richer color representation in visible-light images, where color features play a crucial role in distinguishing defects from normal skin. Smoothing the color features in the shallow network helped preserve spatial details and prevented a decline in color perception in deeper layers. To mitigate potential feature loss caused by pooling, the DS modules were incorporated to retain essential information.
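The difference between the two pooling choices is easy to see on a toy input. In the NumPy sketch below, a one-pixel-wide bright line stands in for a defect edge (the array is illustrative, not data from the study): 2 × 2 max pooling with stride 2 keeps the line at full strength, whereas average pooling halves it, which is the smoothing behavior described for the PA path.

```python
import numpy as np


def pool2x2(x, mode):
    """2 x 2 pooling with stride 2 on an (H, W) array with even H and W."""
    h, w = x.shape
    blocks = x.reshape(h // 2, 2, w // 2, 2)          # blocks[i, a, j, b] = x[2i + a, 2j + b]
    return blocks.max(axis=(1, 3)) if mode == "max" else blocks.mean(axis=(1, 3))


# A one-pixel-wide bright line (e.g., a crack edge in NIR) on a dark background.
x = np.zeros((4, 4))
x[:, 1] = 1.0
print(pool2x2(x, "max"))   # the line survives at full strength (1.0) in the left column
print(pool2x2(x, "avg"))   # the line is smoothed to 0.5 in the left column
```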

The DS module comprised a 3 × 3 convolution for feature extraction and dimension adjustment, followed by a feedforward neural network (FFN). It downsampled both the NIR and RGB paths, and its output feature maps were combined with those of the PM and PA modules through element-wise addition. The two summed feature maps were then concatenated along the channel dimension and fed into the FSA module, which included a 3 × 3 convolutional layer that captured channel dependencies between the fused RGB and NIR features. The FSA module also integrated a spatial attention mechanism with residual connections, referred to as SAB, in which spatial attention was first extracted along the spatial dimension and then followed by channel interactions with large receptive fields, resulting in a feature map with dimensions of 24 × 160 × 160.

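$$T_{c} = \mathrm{Conv}_{3\times3}\big([\mathrm{PA}(X_{RGB}) + \mathrm{DS}_{2}(X_{RGB});\ \mathrm{PM}(X_{NIR}) + \mathrm{DS}_{1}(X_{NIR})]\big), \qquad T = \mathrm{SAB}(T_{c}) \otimes T_{c} \tag{2}$$

where T represents the final output in the time-domain direction, T_c is the fused feature map produced by the stride-2 3 × 3 convolution of the FSA module, and ⊗ denotes element-wise multiplication.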

Finally, the feature maps from the time- and frequency-domain branches were concatenated along the channel dimension to produce an output with dimensions of 48 × 160 × 160, which was then fed into RT-DETR. In summary, time-domain processing applied distinct strategies to the NIR and visible-light images to exploit the advantages of both modalities: NIR processing emphasized edge feature extraction, whereas visible-light processing emphasized color and global features, enabling the model to leverage complementary information for improved defect detection (Equation (3)).

$$\mathrm{Output} = [F;\ T] \tag{3}$$

where F is the output after frequency-domain fusion, and T is the output after time-domain fusion.
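The feature dimensions quoted in Sections 3.2.1 and 3.2.2 all follow from the two parameters of Algorithm 1 (c1 = 4 input channels, c2 = 48 feature channels) and the two stride-2 stages. The short bookkeeping sketch below (a hypothetical helper, not code from the study) makes that arithmetic explicit.

```python
def mcdaf_shapes(c1=4, c2=48, size=640):
    """Channel and spatial sizes at each MCDAF stage for a size x size input (Algorithm 1)."""
    nir = c1 // 4                          # step 1: NIR channels
    rgb = c1 - nir                         # step 2: RGB channels
    scale = (c2 // c1) // 2                # step 3: (c2 / c1) / 2
    half, quarter = size // 2, size // 4   # after one and two stride-2 stages
    return {
        "PM/DS1 (NIR)": (nir * scale, half, half),
        "PA/DS2 (RGB)": (rgb * scale, half, half),
        "T (time domain)": (c1 * scale, quarter, quarter),
        "F (frequency domain)": (c1 * scale, quarter, quarter),
        "Output [F; T]": (2 * c1 * scale, quarter, quarter),
    }


for name, shape in mcdaf_shapes().items():
    print(f"{name:22s} {shape}")
```

The printed shapes are (6, 320, 320), (18, 320, 320), (24, 160, 160), (24, 160, 160), and (48, 160, 160), matching the dimensions stated in the text.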

3.3. Experimental Environment and Hyperparameter Configuration

This study was conducted on the Ubuntu 20.04 operating system with an NVIDIA RTX 4090 GPU (24 GB of memory; NVIDIA Corporation, Santa Clara, CA, USA), using CUDA 11.8 and cuDNN 8.6.4 for GPU acceleration. All programs were implemented in Python 3.10.8 with the PyTorch 2.1.2 deep learning framework.

For all experiments, the batch size was set to 8, and the SGD optimizer was used with a momentum of 0.937 and a weight decay of 0.0005. The model was trained for 100 epochs, with the learning rate decreasing gradually from an initial value of 0.01 to 0.0001. During training, 8 CPU worker threads were used for image loading, and images were resized to 640 × 640 pixels. Mosaic augmentation was applied online, and a warm-up strategy was used for the first three epochs, during which the SGD momentum was set to 0.8. Mosaic augmentation was disabled in the final 10 epochs to refine the learning of the model.
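The text gives the end points of the schedule but not its shape. The sketch below assumes a linear decay from 0.01 to 0.0001 over 100 epochs, a linear learning-rate ramp from zero during the three warm-up epochs with momentum held at 0.8, and 0-based epoch indices for switching off Mosaic augmentation. Other frameworks ramp momentum as well, so treat this as one plausible reading; the function names are hypothetical.

```python
def lr_and_momentum(epoch, epochs=100, lr0=0.01, lr_final=0.0001,
                    momentum=0.937, warmup_epochs=3, warmup_momentum=0.8):
    """Learning rate and SGD momentum at a (possibly fractional) epoch.

    Assumptions: linear decay from lr0 to lr_final over training; during warm-up
    the LR ramps linearly from 0 and momentum is held at warmup_momentum.
    """
    lr = lr0 + (lr_final - lr0) * min(epoch / epochs, 1.0)
    if epoch < warmup_epochs:
        return lr * epoch / warmup_epochs, warmup_momentum
    return lr, momentum


def use_mosaic(epoch, epochs=100, mosaic_off_epochs=10):
    """Mosaic augmentation is switched off for the final 10 epochs (0-based epoch index)."""
    return epoch < epochs - mosaic_off_epochs


for e in (0, 1.5, 3, 50, 99):
    lr, m = lr_and_momentum(e)
    print(f"epoch {e:>4}: lr={lr:.6f} momentum={m} mosaic={use_mosaic(e)}")
```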

3.4. Evaluation Metrics

In this study, the model was evaluated using precision (P), recall (R), the F1 score, and mean Average Precision (mAP). P measures the proportion of correctly predicted positive samples (TP) among all samples predicted as positive (TP + FP), as defined in Equation (4):

$$P = \frac{TP}{TP + FP} \tag{4}$$

R measures the proportion of correctly predicted positive samples (TP) among all actual positive samples (TP + FN), as defined in Equation (5):

$$R = \frac{TP}{TP + FN} \tag{5}$$

The F1 score is the harmonic mean of P and R and thus balances the two. It is calculated as follows:

$$F1 = \frac{2 \times P \times R}{P + R} \tag{6}$$
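The definitions of P, R, and F1 translate directly into code. The sketch below uses made-up counts purely for illustration; returning 0.0 when a denominator is zero is a common convention, assumed here rather than taken from the paper.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from detection counts (0.0 where a ratio is undefined)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


# Hypothetical counts for illustration only.
print(precision_recall_f1(tp=90, fp=10, fn=20))   # P = 0.9, R ≈ 0.818, F1 ≈ 0.857
```

Because F1 is a harmonic mean, it is pulled toward the smaller of P and R, so a detector cannot score well by trading one off heavily against the other.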

The “m” in mAP stands for “mean”, and AP is the average precision of a given category at a specific IoU threshold, computed from its precision-recall curve. mAP therefore reflects how precision varies with recall; a higher mAP indicates that the model maintains high precision at higher recall levels. mAP@0.5 is the mean AP across all categories at an IoU threshold of 0.5, whereas mAP@0.5:0.95 is the mean AP across IoU thresholds from 0.5 to 0.95 in increments of 0.05. The calculation formula is as follows:

$$mAP = \frac{1}{C}\sum_{n=1}^{C} AP_{n} \tag{7}$$

where C represents the number of categories, n indexes the categories, and AP_n represents the AP value of category n.
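The sketch below shows the building blocks behind mAP@0.5 and mAP@0.5:0.95: box IoU, AP as the area under a precision-recall curve, and averaging over classes and IoU thresholds. It assumes all-point interpolation of the precision envelope, which is one common convention (COCO-style evaluation uses 101 recall points instead); which variant the evaluation code used is not stated. Matching detections to ground truth at each threshold, which produces the precision-recall points, is not shown, and the function names are illustrative.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(recall, precision):
    """All-point interpolated AP: area under the monotone precision envelope.

    recall/precision: cumulative values for detections sorted by descending confidence.
    """
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]      # make precision non-increasing in recall
    i = np.where(r[1:] != r[:-1])[0]              # indices where recall changes
    return float(np.sum((r[i + 1] - r[i]) * p[i + 1]))


def map_summary(ap_table):
    """ap_table[c, t] = AP of class c at IOU_THRESHOLDS[t]; returns (mAP@0.5, mAP@0.5:0.95)."""
    ap = np.asarray(ap_table, dtype=float)
    return float(ap[:, 0].mean()), float(ap.mean())


IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)       # 0.50, 0.55, ..., 0.95

print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))        # 1/7, about 0.143
print(average_precision(np.array([0.5, 1.0]), np.array([1.0, 0.5])))   # 0.75
```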

4. Results and Analysis

4.1. Analysis of Multimodal Model Training Results

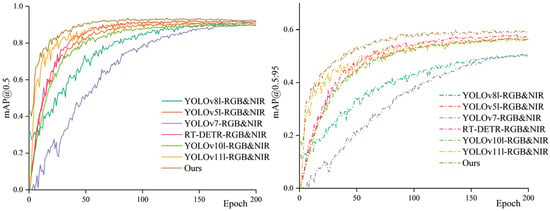

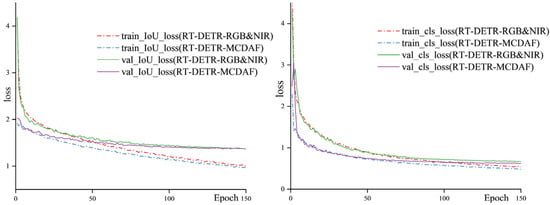

Wu et al. [6] used a unimodal RGB detection model as the baseline, whereas our proposed method fuses the two modalities along the channel dimension, so a direct comparison between the unimodal RT-DETR model and our multimodal fusion approach would not be entirely fair. Hence, we developed a basic channel fusion multimodal model, RT-DETR-RGB&NIR (Figure 5), and compared it with the proposed RT-DETR-MCDAF using multiple performance metrics (Table 1). The results indicated that integrating multimodal data in the shallow layers substantially enhanced detection performance. Compared with this baseline, RT-DETR-MCDAF improved P, R, F1-score, mAP@0.5, and mAP@0.5:0.95 by 1.3%, 1.7%, 1%, 1.5%, and 1.7%, respectively, indicating that the MCDAF module effectively extracted and fused key semantic features across the time and frequency domains. Figure 7 presents the mAP@0.5 and mAP@0.5:0.95 curves of RT-DETR-MCDAF and RT-DETR-RGB&NIR, which show rapid gains during the initial training phase and stabilize after approximately 70 epochs. The loss curves (Figure 8) show that RT-DETR-MCDAF converged faster, with lower IoU and classification losses than RT-DETR-RGB&NIR, suggesting that simple channel concatenation does not necessarily improve detection and classification, whereas efficient multimodal fusion enhances early-stage semantic feature extraction and interaction. Notably, the fusion module did not increase the model size or parameter count (Table 2), which is advantageous for future deployment in automated commercial citrus quality grading systems.

Table 1.

Ablation Experiment.

Figure 7.

mAP@0.5 and mAP@0.5:0.95 curves comparing the proposed model with the baseline.

Figure 8.

Comparison of Loss Curve between RT-DETR-MCDAF and RT-DETR-RGB&NIR.

Table 2.

Model Parameter Quantity and Model Size.

4.2. Ablation Experiment

To evaluate the contribution of each component within the MCDAF structure, we conducted an ablation study on RT-DETR-MCDAF, analyzing model performance after removing individual modules. MCDAF operated along two processing directions: the frequency domain and the time domain. The frequency-domain branch comprised a forward and inverse Fast Fourier Transform (FFT) pair with an embedded self-attention mechanism (SCA), whereas the time-domain branch consisted of the PM and PA modules, the parallel DS modules with feedforward neural networks, and the SAB spatial attention mechanism. The ablation study examined the impact of removing the SCA attention mechanism in the frequency domain, and the SAB attention mechanism and the DS modules in the time domain. The results in Table 1 highlight the contribution of each component to model performance.

RT-DETR-MCDAF I, which removed the SCA attention mechanism and the corresponding FFT, performed below the baseline model, with the largest decline in mAP@0.5:0.95. This decline was likely due to the absence of the SCA attention mechanism in the frequency domain: downsampling after channel fusion caused substantial feature loss, and unfiltered noise further degraded performance. Moreover, RT-DETR-MCDAF II, which removed the SAB spatial attention mechanism in the time domain, showed a notable decrease in mAP compared with the baseline. Without spatial attention, the spatial information in the fused time-domain feature map was not effectively filtered, and simple downsampling through convolutional layers failed to extract meaningful information. This likely caused poor alignment with the frequency-domain features, increased noise, and ultimately reduced overall model performance.

The ablation of the DS module in RT-DETR-MCDAF III and RT-DETR-MCDAF IV showed that removing it at either position degraded model performance. This indicated that, without feature supplementation during spatial filtering in the shallow network, some feature loss occurred. Notably, removing DS2 had a more pronounced effect, likely because the RGB images contained richer color features, and losing this information in the shallow network significantly reduced model accuracy.

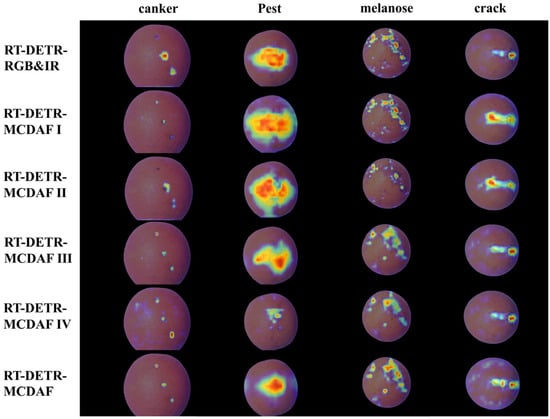

We employed gradient-weighted class activation mapping (Grad-CAM) [27] to generate heatmaps that visualize where the model attends on citrus defects. Grad-CAM uses the gradients of the target score to indicate attention intensity, with colors ranging from red (highest attention) through yellow and green to blue (lowest attention). As shown in Figure 9, the models differed markedly in where they attended, particularly in the first column, which contains small-area cankers. Models I–IV exhibited varying degrees of small-target perception loss; Models I and II, each of which lacked one of the attention mechanisms, showed the most severe deficiencies.

Figure 9.

Ablation Experiment Heatmaps.

The second column shows pest-damage defects, where RT-DETR-MCDAF focused on the key defect features more effectively than the other models. We attribute this improvement to the complete integration of attention mechanisms and feature extraction across both the time and frequency domains. Consequently, RT-DETR-MCDAF concentrated on the center of the pest-damage defect, outperforming the other models in the ablation experiment.

In the third column, RT-DETR-MCDAF effectively identified melanose defects of varying shapes and sizes, whereas the other models exhibited a limited attention range and inadequate focus on the target areas owing to their suboptimal fusion structures.

In the fourth column, RT-DETR-MCDAF accurately focused on the entire crack area and maintained strong attention to its interior. In contrast, the baseline model and Models III and IV paid weak attention to the crack location, whereas Models I and II showed deviations in focus, likely owing to the absence of an attention mechanism during modal fusion.
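The Grad-CAM heatmaps discussed above follow the standard recipe of [27]: each feature map of a chosen layer is weighted by the spatially averaged gradient of the target score, and a ReLU keeps only positive evidence. The minimal NumPy sketch below assumes the activations and gradients have already been extracted from the network; which layer and which detection score were used for RT-DETR-MCDAF is not stated here, and the final scaling to [0, 1] (for mapping onto a blue-to-red colormap) and the omitted upsampling to image size are presentation choices rather than part of the method.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for a single target score.

    activations: feature maps A^k of the chosen layer, shape (K, H, W)
    gradients:   d(score)/dA^k for the same layer, shape (K, H, W)
    Returns an (H, W) map scaled to [0, 1] (all zeros if nothing is positive).
    """
    # alpha_k: global average of the gradient over the spatial positions of map k.
    weights = gradients.mean(axis=(1, 2))                              # (K,)
    # Weighted sum of the K maps; ReLU keeps regions that increase the score.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    # Scale to [0, 1] for display (red = 1, blue = 0 in a jet-style colormap).
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: map 0 raises the score (positive gradients), map 1 lowers it.
A = np.array([[[1.0, 0.0], [0.0, 0.0]],
              [[0.0, 0.0], [0.0, 2.0]]])
G = np.array([[[1.0, 1.0], [1.0, 1.0]],
              [[-1.0, -1.0], [-1.0, -1.0]]])
heatmap = grad_cam(A, G)
```

In the toy example only the top-left position survives, because it is the only place where a score-increasing feature map is active; the strong response of map 1 is suppressed by its negative weight and the ReLU.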

4.3. Comparison Experiment of Fusion Module Attention Mechanisms

To assess the performance of SAB in MCDAF, we conducted a comparative experiment on attention mechanisms. Keeping all other components of the MCDAF module unchanged, we replaced SAB with each of five established attention mechanisms: SE [28], CBAM [29], ECA [30], CA [31], and EMA [32]. Table 3 presents the results of this comparison. SAB outperformed all five alternatives in mAP and achieved a better balance between P and R. Specifically, its mAP@0.5 was higher by 0.9%, 1.1%, 0.5%, 0.3%, and 0.2%, and its mAP@0.5:0.95 was higher by 1%, 1.1%, 0.8%, 0.6%, and 0.3%, respectively. These results confirmed the effectiveness of the SAB design in enhancing spatial feature extraction and overall model performance.

Table 3.

Comparison Experiment of Attention Mechanisms.
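SE and CBAM, two of the mechanisms in Table 3, differ in what they gate: SE learns one weight per channel, whereas the spatial part of CBAM learns one weight per position. The NumPy sketch below contrasts the two as functions with the same (C, H, W) → (C, H, W) signature, which is the property that lets one module replace SAB while the rest of MCDAF stays unchanged. The weights are random and untrained, the reduction ratio of 4 and the 7×7 kernel are assumptions chosen for small tensors, and SAB itself is not reproduced because its internals are not given in this section.

```python
import numpy as np


def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_channel_attention(x, reduction=4, seed=0):
    """SE-style channel attention: one gate per channel, shared by all positions. x: (C, H, W)."""
    c = x.shape[0]
    hidden = max(1, c // reduction)
    rng = np.random.default_rng(seed)
    w1 = 0.1 * rng.standard_normal((hidden, c))          # untrained, illustrative weights
    w2 = 0.1 * rng.standard_normal((c, hidden))
    squeeze = x.mean(axis=(1, 2))                        # global average pool: (C,)
    gate = _sigmoid(w2 @ np.maximum(w1 @ squeeze, 0.0))  # FC -> ReLU -> FC -> sigmoid: (C,)
    return x * gate[:, None, None]


def _conv2d_same(x, kernel):
    """Naive zero-padded cross-correlation. x: (Cin, H, W), kernel: (Cin, k, k), odd k -> (H, W)."""
    _, h, w = x.shape
    k = kernel.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out


def cbam_spatial_attention(x, k=7, seed=0):
    """CBAM-style spatial attention: one gate per position, shared by all channels. x: (C, H, W)."""
    rng = np.random.default_rng(seed)
    kernel = 0.1 * rng.standard_normal((2, k, k))          # untrained, illustrative weights
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])    # channel-wise avg and max: (2, H, W)
    gate = _sigmoid(_conv2d_same(pooled, kernel))         # (H, W)
    return x * gate[None, :, :]


# Any module with this (C, H, W) -> (C, H, W) signature can be swapped into the same slot.
VARIANTS = {"SE": se_channel_attention, "CBAM-spatial": cbam_spatial_attention}
x = np.random.default_rng(1).standard_normal((8, 6, 6))
outputs = {name: attend(x) for name, attend in VARIANTS.items()}
```

Dividing each output by the input makes the difference visible: the SE ratio is constant within each channel, while the CBAM-spatial ratio is constant across channels at each position.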

4.4. Comparison with Other Fusion Models

We developed multiple RGB-NIR multimodal fusion models based on state-of-the-art CNN architectures, using the fusion method shown in Figure 5, and compared them with RT-DETR-MCDAF on the same dataset. As shown in Table 4, RT-DETR-RGB&NIR outperformed the other CNN-based multimodal baseline models, likely because the transformer encoder-decoder in the DETR architecture captures long-range dependencies and strengthens the semantic relationships between distant pixels. This advantage is particularly beneficial for defects such as pests and melanoses, which vary in shape and spatial extent. Compared with all the baseline models, the proposed model achieved a higher P and the highest R, mAP@0.5, and mAP@0.5:0.95 scores.

Table 4.

Baseline Model Comparison Experiment.
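The long-range argument above rests on self-attention, in which every spatial position is compared with every other position in a single step, whereas a 3×3 convolution only sees its immediate neighbors. The single-head NumPy sketch below flattens a (C, H, W) feature map into H × W tokens and shows that the attention matrix has one entry for every pair of pixels, including the two opposite corners. It illustrates scaled dot-product attention under assumed random projections and an assumed key dimension `d_k`; it is not the actual RT-DETR encoder.

```python
import numpy as np


def self_attention(feat: np.ndarray, d_k: int = 16, seed: int = 0):
    """Single-head scaled dot-product self-attention over a (C, H, W) feature map.

    Returns the attended tokens (H*W, d_k) and the (H*W, H*W) attention matrix.
    The query/key/value projections are random stand-ins for learned weights.
    """
    c, h, w = feat.shape
    tokens = feat.reshape(c, h * w).T                    # (N, C): one token per pixel
    rng = np.random.default_rng(seed)
    w_q, w_k, w_v = (rng.standard_normal((c, d_k)) / np.sqrt(c) for _ in range(3))
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v   # (N, d_k) each
    scores = q @ k.T / np.sqrt(d_k)                      # (N, N): every pixel vs. every pixel
    scores -= scores.max(axis=1, keepdims=True)          # stabilize the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)              # each row sums to 1
    return attn @ v, attn


feat = np.random.default_rng(1).standard_normal((8, 10, 10))
out, attn = self_attention(feat)
# Pixel 0 is the top-left corner and pixel 99 the bottom-right; within a single layer
# the corner pixel already receives a nonzero contribution from the opposite corner.
corner_to_corner = attn[0, -1]
```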

In the frequency domain, the direct fusion of the feature maps, the frequency-domain transformation of the low-dimensional feature maps, and the SCA attention mechanism enabled early interaction between modalities, helping the model capture their interrelationships and complementary information. In the time domain, features were first extracted independently for each modality and then fused in a higher-level semantic space, preserving the essential characteristics of both the RGB and NIR data and preventing the loss of critical modality-specific features before fusion. Furthermore, the SAB spatial attention mechanism in the high-dimensional semantic space enhanced the model’s perception of multimodal spatial information and improved channel interaction, further strengthening feature integration.
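As a rough illustration of early interaction in the frequency domain, the sketch below concatenates RGB and NIR feature maps along the channel axis, describes each channel by the share of its spectral amplitude outside the zero-frequency (DC) term, and standardizes that descriptor across the channels of both modalities, so the weight given to a NIR channel depends on the RGB channels and vice versa. The function names, the descriptor, and the sigmoid gate are hypothetical choices made for illustration; this is not the SCA mechanism or the MCDAF implementation.

```python
import numpy as np


def spectral_channel_weights(x: np.ndarray) -> np.ndarray:
    """Hypothetical channel gate in (0, 1) from each channel's amplitude spectrum. x: (C, H, W)."""
    amp = np.abs(np.fft.rfft2(x, axes=(1, 2)))           # (C, H, W // 2 + 1)
    total = amp.sum(axis=(1, 2))
    # Share of spectral amplitude outside the DC term: high for edge/texture-rich channels.
    detail = (total - amp[:, 0, 0]) / (total + 1e-8)
    # Standardize across ALL channels, so each modality's weights depend on the other's.
    z = (detail - detail.mean()) / (detail.std() + 1e-8)
    return 1.0 / (1.0 + np.exp(-z))


def early_frequency_fusion(rgb_feat: np.ndarray, nir_feat: np.ndarray):
    """Hypothetical early fusion: concatenate, gate channels in the frequency domain, return to space."""
    x = np.concatenate([rgb_feat, nir_feat], axis=0)     # (C_rgb + C_nir, H, W)
    h, w = x.shape[1:]
    weights = spectral_channel_weights(x)
    spec = np.fft.rfft2(x, axes=(1, 2)) * weights[:, None, None]
    fused = np.fft.irfft2(spec, s=(h, w), axes=(1, 2))  # real-valued, same size as input
    return fused, weights


rng = np.random.default_rng(0)
rgb = rng.standard_normal((3, 8, 8))   # stand-in RGB feature maps
nir = rng.standard_normal((1, 8, 8))   # stand-in NIR feature map
fused, weights = early_frequency_fusion(rgb, nir)
```

Because the FFT is linear, scaling a channel's spectrum by a scalar gives the same result as scaling that channel in space, so what the frequency view contributes in this sketch is the descriptor itself. A learned module that applies different gains to different frequencies has no such spatial shortcut.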

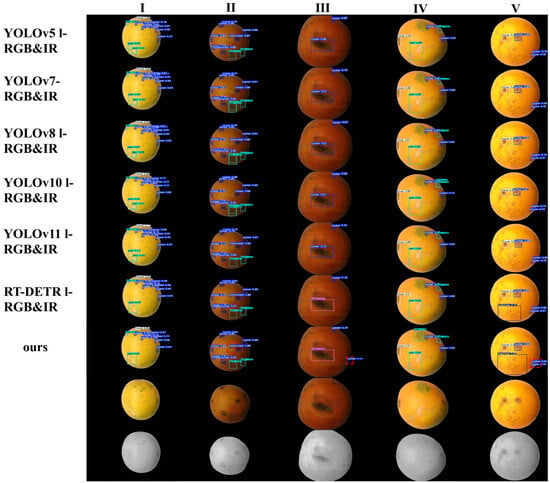

Figure 7 compares the mAP@0.5 and mAP@0.5:0.95 curves of the models in Table 4 during training, highlighting the faster convergence and higher accuracy of RT-DETR-MCDAF, particularly under the stricter IoU thresholds. This suggests that MCDAF improved modality fusion and provided clearer guidance for subsequent feature learning. Figure 10 compares the detection results of the models listed in Table 4.

Figure 10.

Comparison of Detection Results. Panels I–V show example detection results that together include all defect types.
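The observation that the advantage of RT-DETR-MCDAF is most visible under stricter IoU thresholds can be made concrete. Assuming the COCO convention for mAP@0.5:0.95, AP is averaged over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, so a prediction counts as correct at only some of them unless it is tightly localized. The sketch below computes IoU for axis-aligned boxes in (x1, y1, x2, y2) form and counts how many thresholds a prediction passes; it deliberately omits the precision-recall integration that turns matches into AP.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# COCO-style thresholds for mAP@0.5:0.95: 0.50, 0.55, ..., 0.95 (ten values).
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, gt):
    """How many of the ten IoU thresholds this prediction would satisfy as a match."""
    score = iou(pred, gt)
    return sum(score >= t for t in THRESHOLDS)


ground_truth = (0, 0, 10, 10)
prediction = (1, 1, 10, 10)   # slightly off: IoU = 81 / 100 = 0.81
passed = thresholds_passed(prediction, ground_truth)
```

A box with IoU 0.81 is a match at the single threshold used by mAP@0.5 but at only 7 of the 10 thresholds averaged by mAP@0.5:0.95, which is why improvements in localization precision show up more clearly in the stricter metric.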

The canker defects varied in severity, appearing as small oily spots or spongy bulges, typically round in shape, as shown in Figure 10II,III,V (red dashed boxes). The proposed multimodal model accurately detected small oily spots and identified canker lesions both at the edges of the citrus surface and elsewhere on it, demonstrating superior robustness in detecting small canker targets.

In this study, citrus pest damage primarily appeared as white spots on the fruit surface. In Figure 10I, all models detected the small white pest spots in the upper-left corner. However, in the bottom-left corner, irregular pest damage with green background interference and faint pest spots made detection more difficult. Although the YOLO-based multimodal models predicted these pest spots, they generated multiple redundant bounding boxes. In contrast, the RT-DETR-based models detected all pest spots in that area with a single bounding box, likely because the transformer structure captures global relationships. The proposed model further improved the prediction confidence compared with the RT-DETR-RGB&NIR baseline model.

In Figure 10IV, the pest damage at the top of the fruit was very small. The green background interfered with the models using traditional channel fusion, which failed to predict the pest location accurately, whereas the proposed model performed better.

Melanose on citrus fruits primarily appeared as uneven rust spots. As illustrated in Figure 10V, the proposed model accurately identified the concentrated areas of rust spots in cases of uneven melanose, with higher prediction confidence than the baseline models. The proposed model also exhibited greater robustness and higher prediction confidence for crack identification. Table 5 lists the AP@0.5 of each category; our model achieved higher average precision than the other traditional fusion models in most categories.

Table 5.

AP@0.5 values for each category.

The results indicated that MCDAF effectively extracted and fused features from different signal domains and semantic spaces. By using two attention mechanisms to focus on key features, the model fully integrated the essential information from both modalities, giving it an advantage in detecting small targets, handling variations in defect shape, and mitigating interference from color variations.

4.5. Analysis of Comparison with Single-Modal Models

To demonstrate the advantage of combining RGB and NIR images over traditional unimodal methods for citrus defect detection and classification, RT-DETR-MCDAF was compared with advanced unimodal algorithms trained and tested on the RGB images of the same dataset (Table 6). The proposed model outperformed the unimodal models, achieving the best balance of P, R, and F1 scores, along with the highest mAP@0.5 and mAP@0.5:0.95 scores. Despite its more complex two-stage design, Faster R-CNN achieved mAP@0.5 and mAP@0.5:0.95 scores 3.7% and 4.8% lower than those of the proposed model, respectively. Among the one-stage models, the best-performing YOLOv5l scored 1.3% and 1.7% lower, whereas the worst-performing SSD scored 4.7% and 3.8% lower, respectively.

Table 6.

Comparison of Proposed Model with RGB Models.
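The F1 score used in this comparison is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Unlike the arithmetic mean, it penalizes imbalance between the two, which is what makes it a natural summary of the balance between P and R referred to above. The short sketch below uses illustrative values only, not results from Table 6.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Two hypothetical detectors with the same arithmetic mean of P and R (0.875).
balanced = f1_score(0.875, 0.875)    # F1 = 0.875
unbalanced = f1_score(0.95, 0.80)    # F1 = 1.52 / 1.75, about 0.869
```

Both hypothetical detectors average 0.875 across P and R, but only the balanced one keeps an F1 of 0.875.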

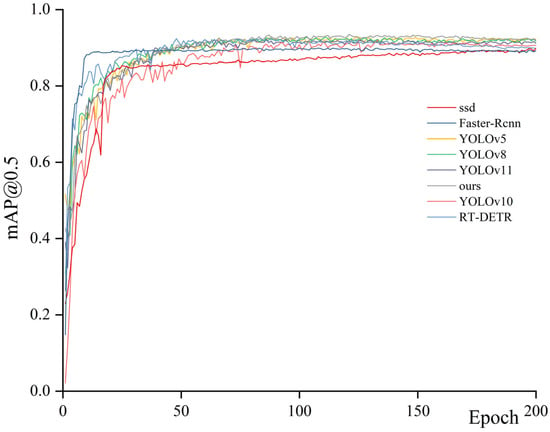

Figure 11 compares the mAP@0.5 curves during training, showing that the proposed model converged faster and reached a higher mAP@0.5 than the unimodal models. The fusion module exploited the defect information in the NIR images in addition to the RGB data, yielding better performance than the unimodal methods.

Figure 11.

mAP@0.5 curves comparing the proposed model with the RGB unimodal models.

5. Discussion

In this study, an RGB-NIR multimodal fusion algorithm for citrus defect detection was developed based on the transformer-based object detection model RT-DETR, meeting the detection requirements for citrus defects such as cankers, pests, melanoses, and cracks. However, the experiments also revealed certain issues that may require further investigation in future studies.

This study primarily focused on developing a multimodal fusion method, and the RGB and NIR image datasets were collected using a simple single-camera filter-switching method. However, this approach was labor-intensive and time-consuming, making it unsuitable for image acquisition on commercial citrus grading machines. Future work will adopt a binocular setup with separate NIR and RGB cameras whose images are aligned by registration algorithms. In addition, the model could then be deployed in commercial citrus grading machines. With our higher-performance model, more accurate defect classification information can be provided, enabling better grading and enhancing the digital capabilities of the citrus industry.

In the future, we will continue to refine the model to improve its recognition of pest and canker defects. We also plan to expand the dataset so that the model can identify a broader range of defects beyond the common, typical ones covered here, thereby strengthening its applicability.

6. Conclusions

This study proposed a multimodal approach for detecting external defects on citrus surfaces with the following key contributions.

- A deep learning-based multimodal method was proposed to fuse RGB and NIR images to detect external defects in citrus fruits, leveraging the unique characteristics of each modality to enhance defect detection.

- The first coaxial imaging multimodal dataset for citrus external defect detection, comprising RGB and NIR images, was developed to cover defect types such as cankers, pests, melanoses, and cracks.

- A multimodal fusion model, RT-DETR-MCDAF, was developed based on the advanced transformer-based object detection model RT-DETR. It integrated RGB and NIR data through the multimodal module MCDAF, which fused RGB and NIR images along the channel dimension using both the time and frequency domains. A traditional channel-fusion model, RT-DETR-RGB&NIR, was also developed as the baseline. Experiments demonstrated that RT-DETR-MCDAF achieved the best detection performance, with mAP@0.5 reaching 0.937 and mAP@0.5:0.95 reaching 0.598, and the ablation study showed that each module within MCDAF contributed to the multimodal fusion performance.

- Traditional channel-fusion models built on advanced single-modal object detection frameworks were developed and compared with RT-DETR-MCDAF. RT-DETR-MCDAF outperformed these models, attaining the highest mAP@0.5 and mAP@0.5:0.95 scores and the highest AP@0.5 for the vast majority of categories in the dataset. Visual analysis of the detection results indicated that RT-DETR-MCDAF remained robust in complex scenarios involving defects of varying sizes and shapes and background color interference.

- Comparative experiments were conducted between the spatial attention mechanism SAB and existing attention mechanisms. The results indicated that SAB performed best.

- RT-DETR-MCDAF was compared with current advanced single-modal detection models trained on RGB images. The results showed that RT-DETR-MCDAF achieved the best balance of Precision, Recall, and F1, as well as the highest mAP@0.5 and mAP@0.5:0.95 scores.

In conclusion, the proposed RGB-NIR multimodal fusion model, RT-DETR-MCDAF, meets the requirements for detecting external defects in citrus and achieves superior detection results, providing a solid research foundation for the automated grading of citrus based on external surface quality.

Author Contributions

Conceptualization, D.L.; methodology, Z.Y. and J.L.; software, Z.Y., Y.C. and J.L.; validation, D.L. and T.W.; formal analysis, J.L. and Z.Y.; investigation, Y.C. and D.L.; resources, D.L.; data curation, Z.Y.; writing—original draft preparation, J.L. and Z.Y.; writing—review and editing, J.L.; visualization, J.L. and T.W.; supervision, Y.C.; project administration, D.L. and T.W.; funding acquisition, T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Distinguished Young Scholars Fund of the Hunan Provincial Natural Science Foundation (Grant No. 2023JJ10099), awarded to Tao Wen.

Data Availability Statement

The source code and dataset can be obtained by contacting the corresponding author.

Acknowledgments

The authors would like to express their sincere gratitude to the administrative and technical support teams for their contributions. Additionally, special thanks are extended to all the reviewers and editors. Their efforts and professional insights have significantly enhanced the quality of this manuscript, and their contributions are highly appreciated.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Chen, H.; Xuan, Z.; Yang, L.; Zhang, S.; Cao, M. Managing virus diseases in citrus: Leveraging high-throughput sequencing for versatile applications. Hortic. Plant J. 2024, 11, 57–68.

- Feng, J.; Wang, Z.; Wang, S.; Tian, S.; Xu, H. MSDD-YOLOX: An enhanced YOLOX for real-time surface defect detection of oranges by type. Eur. J. Agron. 2023, 149, 126918.

- Lopez, J.J.; Cobos, M.; Aguilera, E. Computer-based detection and classification of flaws in citrus fruits. Neural Comput. Appl. 2011, 20, 975–981.

- Bhargava, A.; Barisal, A. Automatic Detection and Grading of Multiple Fruits by Machine Learning. Food Anal. Methods 2020, 13, 751–761.

- López-García, F.; Andreu-García, G.; Blasco, J.; Aleixos, N.; Valiente, J.-M. Automatic detection of skin defects in citrus fruits using a multivariate image analysis approach. Comput. Electron. Agric. 2010, 71, 189–197.

- Wu, Y.; Chen, J.; Wu, S.; Li, H.; He, L.; Zhao, R.; Wu, C. An improved YOLOv7 network using RGB-D multi-modal feature fusion for tea shoots detection. Comput. Electron. Agric. 2024, 216, 108541.

- Cai, X.; Zhu, Y.; Liu, S.; Yu, Z.; Xu, Y. FastSegFormer: A knowledge distillation-based method for real-time semantic segmentation of surface defects in navel oranges. Comput. Electron. Agric. 2024, 217, 108604.

- Xu, X.; Xu, T.; Li, Z.; Huang, X.; Zhu, Y.; Rao, X. SPMUNet: Semantic segmentation of citrus surface defects driven by superpixel feature. Comput. Electron. Agric. 2024, 224, 109182.

- Liu, D.; Parmiggiani, A.; Psota, E.; Fitzgerald, R.; Norton, T. Where’s your head at? Detecting the orientation and position of pigs with rotated bounding boxes. Comput. Electron. Agric. 2023, 212, 108099.

- Chen, Y.; An, X.; Gao, S.; Li, S.; Kang, H. A deep learning-based vision system combining detection and tracking for fast on-line citrus sorting. Front. Plant Sci. 2021, 12, 622062.

- Da Costa, A.Z.; Figueroa, H.E.; Fracarolli, J.A. Computer vision based detection of external defects on tomatoes using deep learning. Biosyst. Eng. 2020, 190, 131–144.

- Hu, W.; Xiong, J.; Liang, J.; Xie, Z.; Liu, Z.; Huang, Q.; Yang, Z. A method of citrus epidermis defects detection based on an improved YOLOv5. Biosyst. Eng. 2023, 227, 19–35.

- Jia, X.; Zhao, C.; Zhou, J.; Wang, Q.; Liang, X.; He, X.; Huang, W.; Zhang, C. Online detection of citrus surface defects using improved YOLOv7 modeling. Trans. Chin. Soc. Agric. Eng. 2023, 39, 142–151.

- Lu, J.; Chen, W.; Lan, Y.; Qiu, X.; Huang, J.; Luo, H. Design of citrus peel defect and fruit morphology detection method based on machine vision. Comput. Electron. Agric. 2024, 219, 108721.

- Fan, S.; Liang, X.; Huang, W.; Zhang, V.J.; Pang, Q.; He, X.; Li, L.; Zhang, C. Real-time defects detection for apple sorting using NIR cameras with pruning-based YOLOV4 network. Comput. Electron. Agric. 2022, 193, 106715.

- Zhang, B.; Fan, S.; Li, J.; Huang, W.; Zhao, C.; Qian, M.; Zheng, L. Detection of Early Rottenness on Apples by Using Hyperspectral Imaging Combined with Spectral Analysis and Image Processing. Food Anal. Methods 2015, 8, 2075–2086.

- Blasco, J.; Aleixos, N.; Gómez, J.; Moltó, E. Citrus sorting by identification of the most common defects using multispectral computer vision. J. Food Eng. 2007, 83, 384–393.

- Abdelsalam, A.M.; Sayed, M.S. Real-time defects detection system for orange citrus fruits using multi-spectral imaging. In Proceedings of the 2016 IEEE 59th International Midwest Symposium on Circuits and Systems (MWSCAS), Abu Dhabi, United Arab Emirates, 16–19 October 2016; pp. 1–4.

- Fan, X.; Ge, C.; Yang, X.; Wang, W. Cross-Modal Feature Fusion for Field Weed Mapping Using RGB and Near-Infrared Imagery. Agriculture 2024, 14, 2331.

- Liu, C.; Feng, Q.; Sun, Y.; Li, Y.; Ru, M.; Xu, L. YOLACTFusion: An instance segmentation method for RGB-NIR multimodal image fusion based on an attention mechanism. Comput. Electron. Agric. 2023, 213, 108186.

- Lu, Y.; Gong, M.; Li, J.; Ma, J. Strawberry Defect Identification Using Deep Learning Infrared–Visible Image Fusion. Agronomy 2023, 13, 2217.

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

- Zhang, J.; Shen, D.; Chen, D.; Ming, D.; Ren, D.; Diao, Z. ISMSFuse: Multi-modal fusing recognition algorithm for rice bacterial blight disease adaptable in edge computing scenarios. Comput. Electron. Agric. 2024, 223, 109089.

- Wang, W. Advanced Auto Labeling Solution with Added Features. 2023. Available online: https://github.com/CVHub520/X-AnyLabeling (accessed on 21 December 2024).

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Vijayarekha, K.; Govindaraj, R. Citrus fruit external defect classification using wavelet packet transform features and ANN. In Proceedings of the 2006 IEEE International Conference on Industrial Technology, Mumbai, India, 15–17 December 2006; pp. 2872–2877.

- Selvaraju, R.R.; Das, A.; Vedantam, R.; Cogswell, M.; Parikh, D.; Batra, D. Grad-CAM: Why did you say that? arXiv 2016, arXiv:1611.07450.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Li, X.; Zhong, Z.; Wu, J.; Yang, Y.; Lin, Z.; Liu, H. Expectation-maximization attention networks for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 9167–9176.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).