3.2. Ablation Experiments

To validate the improvements proposed in this paper for soybean pod detection, we conducted ablation experiments that examine how replacing, relocating, or adding specific modules affects the performance of the detection algorithm. Tables 3 to 6 report the results.

(1) Impact of Different Backbone Networks

After establishing the YOLOv8 base model, we sought to enhance its performance by integrating mainstream backbone networks. These networks are designed to provide richer, more discriminative feature representations, which are crucial for subsequent object detection. The following variants were evaluated:

YOLOv8n-LAWDS: Incorporates the LAWDS module, achieving local attention and dense shortcut connections, thereby improving accuracy and robustness.

YOLOv8n-CSP-EDLAN: Utilizes the CSPDarknet53 and EDLAN modules for effective feature aggregation, enhancing performance and efficiency.

YOLOv8n-MLCA-ATTENTION: Combines the MLCA and attention mechanisms, enriching feature information and boosting precision and robustness.

YOLOv8n-RevCol: Optimizes the use of low-level information through reverse connections, enhancing overall performance.

YOLOv8n-EfficientViT: Merges the design philosophies of transformer and EfficientDet, improving accuracy and efficiency.

YOLOv8n-LSKNet: Utilizes the lightweight LSKNet module for spatial and knowledge aggregation, increasing performance and efficiency.

YOLOv8n-VanillaNet: Employs the lightweight VanillaNet structure, simplifying the network and increasing speed and efficiency.

As shown in Table 3, although these backbone networks perform well in other scenarios and applications, none of them produced a pronounced improvement in pod detection in our experimental setup. This suggests that their effectiveness depends on the requirements and characteristics of the detection task.

(2) Modifications to the C2f

Next, the C2f modules within the backbone network were modified. The following variants were evaluated:

YOLOv8n-C2f-GOLDYOLO: Incorporates the GOLDYOLO algorithm, enhancing the network structure and loss function to improve accuracy and robustness in object detection tasks.

YOLOv8n-C2f-EMSC: Integrates the Enhanced Multi-Scale Context (EMSC) module, enhancing object detection by fusing multi-scale features and incorporating contextual information.

YOLOv8n-C2f-SCcConv: Introduces the Spatial Channel-wise Convolution (SCcConv) module, which improves model perception and accuracy by enhancing spatial relationships among channels.

YOLOv8n-C2f-EMBC: Utilizes the Enhanced Multi-Branch Context (EMBC) module, improving object detection accuracy and robustness by refining multi-branch feature extraction and context utilization.

YOLOv8n-C2f-DCNV3: Incorporates the Dilated Convolutional Network V3 (DCNV3) module, expanding the field of view and enhancing the accuracy of object detection through dilated convolution and multi-scale feature fusion.

YOLOv8n-C2f-DAttention: Introduces the Dual Attention (DAttention) module, enhancing object detection perception and accuracy through channel and spatial attention mechanisms.

YOLOv8n-C2f-DBB: Utilizes the Dilated Bi-directional Block (DBB) module, expanding the field of view and improving the efficiency of context information utilization through dilated convolution and bi-directional connections.

The experimental results in Table 4 show that each of the above modules improves the detection accuracy of YOLOv8 to some extent. Of these, the DBB module shows the greatest potential for enhancing the network’s feature extraction capability.

(3) Impact of Different Detector Head Modules

Following the investigation of backbone networks and C2f modifications, common detection heads were integrated to assess their impact on detection performance. Specifically, the DyHead, P2, and Efficient Head detection heads were incorporated into the model. As shown in Table 5, DyHead contributes the most to the model’s performance. The fused model at this stage therefore replaces the detection head with Detect_DyHead and incorporates the C2f-DBB module.

(4) Effects of Loss Functions and Attention Mechanisms

Loss functions and attention mechanisms play pivotal roles in optimizing the model learning process and enhancing its adaptability to specific agricultural scenarios. As shown in Table 6, modifying the loss function did not significantly improve model performance. Consequently, the focus shifted toward refining the model through the integration of attention mechanisms.

Various attention mechanisms were introduced to bolster target detection performance through diverse approaches. These included Multi-Scale Positional Context Attention (MPCA), Channel Dimension Positional Context Attention (CPCA), BiLevel Routing Attention (BiLevelRoutingAttention_nchw), Squeeze-and-Excitation Attention (SEAttention), Bottleneck Attention Module (BAMBlock), and the Separated and Enhancement Attention Module (SEAM).

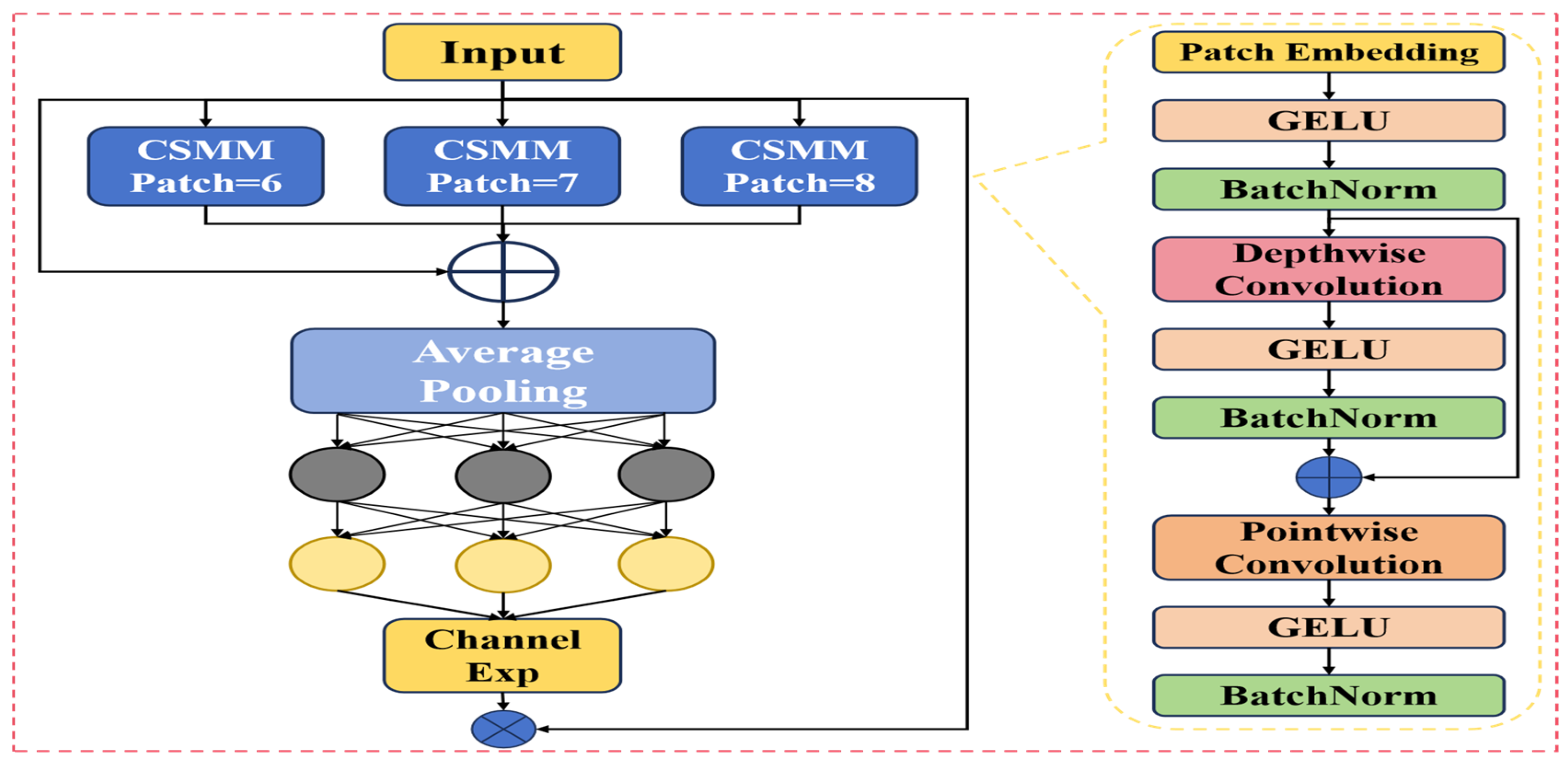

Among these attention mechanisms, SEAM proved particularly advantageous for detecting small objects. By enhancing the features of small targets, SEAM makes them more prominent within the model and thereby improves their recognition rate. This in turn strengthens detection across scales and improves the model’s generalization and reliability in practical agricultural applications. Integrating SEAM yielded the final YOLOv8n-POD model.

3.4. Results of Pod Counting

To better understand the differences in counting results among the models, we selected representative images of soybean plants with sparse pods (A and B) and dense pods (C and D), as illustrated in Figure 12. The red number in the top left corner of each image is the counting result for that image. In pod detection, counts are often underestimated because the detector’s non-maximum suppression filters out many predicted bounding boxes. The visual results confirm that the YOLOv8n-POD model adapts robustly to variations in soybean pod size and density. Compared with the other models, YOLOv8n-POD captures additional global information, which contributes to its stronger performance.
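The undercounting effect of non-maximum suppression (NMS) can be illustrated with a minimal sketch. The greedy NMS below is the generic textbook procedure, not necessarily the exact post-processing used by any of the compared detectors. The boxes, scores, and the 0.5 IoU threshold are hypothetical values chosen only for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of kept boxes, visiting boxes in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical scene: two adjacent pods whose boxes overlap heavily,
# plus one isolated pod. All three are true pods.
boxes = [(10, 10, 50, 90), (18, 12, 58, 92), (120, 20, 160, 100)]
scores = [0.90, 0.85, 0.80]

kept = greedy_nms(boxes, scores)
pod_count = len(kept)  # the second pod is suppressed, so the count is too low
```

In this hypothetical case the two adjacent pods have an IoU of about 0.64, so the lower-scoring box is discarded and the scene is counted as two pods instead of three. Dense pod clusters make such overlaps more frequent.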

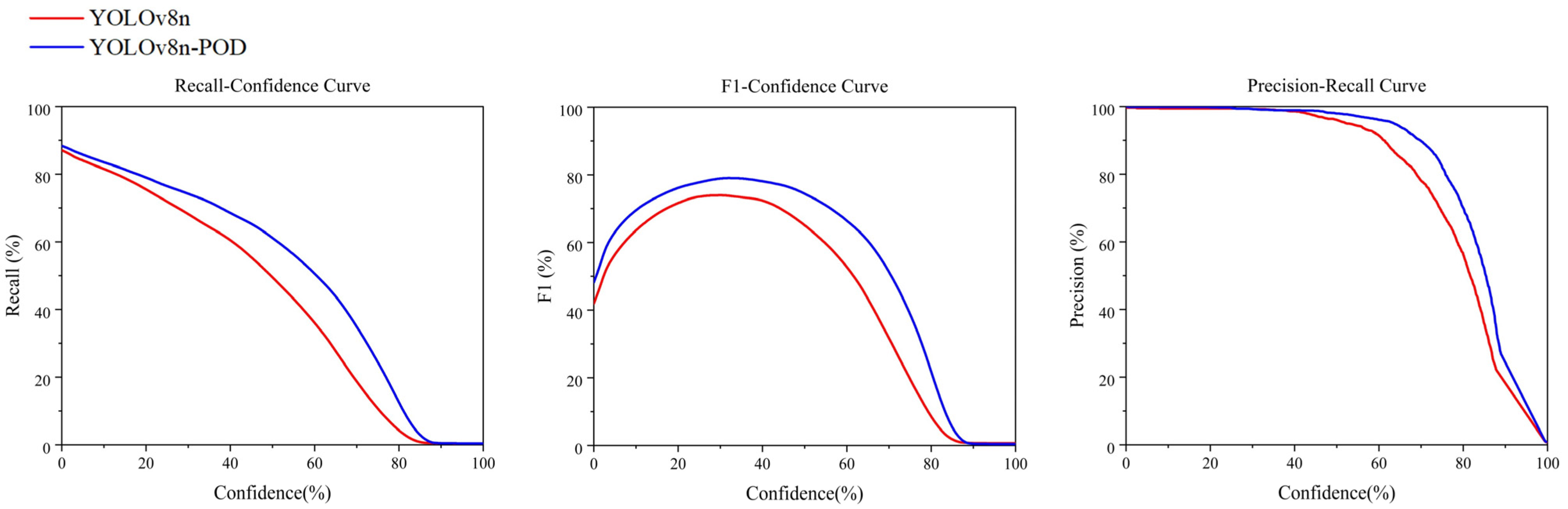

In this study, we introduced the YOLOv8n-POD model, specifically optimized for the detection of soybean pods, and conducted a comprehensive evaluation of its detection performance. The results were compared with those of traditional object detection models, including SSD, YOLOv3, YOLOv4, YOLOv5, YOLOv7 tiny, YOLOv8, and YOLOX, demonstrating the superior efficacy of our model. Specifically, manual counting of soybean pods in four test images yielded counts of 11, 24, 55, and 50 pods, respectively. In comparison, the detection results were as follows: SSD detected 4, 14, 30, and 23 pods; YOLOv3 identified 9, 17, 28, and 24 pods; YOLOv4 counted 10, 20, 38, and 29 pods; YOLOv5 detected 11, 24, 46, and 40 pods; YOLOv7 tiny counted 11, 21, 46, and 39 pods; YOLOv8 identified 11, 22, 57, and 42 pods; YOLOX detected 5, 9, 13, and 21 pods; and the YOLOv8n-POD model detected 11, 25, 53, and 45 pods. The minimal errors between the YOLOv8n-POD results and manual counts further underscore the high accuracy and practicability of the model.
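The counts listed above can be summarized per model. The sketch below uses the mean absolute error (MAE) over the four test images; MAE is chosen here only as an illustrative summary and is not a metric reported in the text. All counts are copied from the paragraph above.

```python
# Manual (ground-truth) pod counts for the four test images.
manual = [11, 24, 55, 50]

# Counts reported for each detector on the same four images.
detected = {
    "SSD": [4, 14, 30, 23],
    "YOLOv3": [9, 17, 28, 24],
    "YOLOv4": [10, 20, 38, 29],
    "YOLOv5": [11, 24, 46, 40],
    "YOLOv7 tiny": [11, 21, 46, 39],
    "YOLOv8": [11, 22, 57, 42],
    "YOLOX": [5, 9, 13, 21],
    "YOLOv8n-POD": [11, 25, 53, 45],
}


def mean_absolute_error(pred, truth):
    """Average absolute difference between predicted and manual counts."""
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(truth)


mae = {name: mean_absolute_error(counts, manual) for name, counts in detected.items()}

# Models ordered from smallest to largest counting error.
ranking = sorted(mae, key=mae.get)
for name in ranking:
    print(f"{name:12s} MAE = {mae[name]:.2f}")
```

Under this summary, YOLOv8n-POD has the smallest error on these four images (2.0 pods per image on average), followed by YOLOv8 (3.0). With only four images, the ranking should be read as indicative rather than conclusive.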

The YOLOv8n-POD model incorporates the Dilated Bi-directional Block (DBB), the Separated and Enhancement Attention Module (SEAM), and a dynamically adjustable Dynamic Head, significantly enhancing its ability to recognize soybean pods of various sizes, shapes, and growth stages. The application of these innovative technologies not only optimizes feature extraction and preserves spatial information but also demonstrates exceptional precision and robustness in detecting small targets in complex agricultural settings. Compared to other models, the YOLOv8n-POD maintains high accuracy while more effectively adapting to the diversity and variability in soybean pods.

3.5. Evaluation of Generalization Ability and Practicality

To further validate the effectiveness of our approach and to assess the generalization capability and broader applicability of our model, we conducted experiments using the YOLOv8n-POD model and the YOLOv8 model on three public datasets: tomatoes, chili peppers, and wheat spikes. The comparative detection results are depicted in Figure 13.

The Tomato Detection dataset, publicly accessible on the Kaggle platform at https://www.kaggle.com/datasets/andrewmvd/tomato-detection (accessed on 28 July 2024), comprises 895 images annotated with bounding boxes. This dataset showcases tomatoes of diverse sizes, shapes, and colors, capturing their growth progression from immaturity to maturity. It encompasses images of both mature and immature tomatoes, along with leaves and stems, thereby enriching the dataset’s randomness and diversity.

The Chili-data dataset, accessible on the Kaggle platform at https://www.kaggle.com/datasets/jingxiche/chili-data (accessed on 28 July 2024), comprises 976 images annotated with bounding boxes. This dataset includes chili peppers of varying sizes, shapes, and colors, capturing their growth progression from small to large sizes. The images within this dataset depict both small and large chili peppers, along with leaves and stems, reflecting the different developmental stages of the chili peppers.

The GWHD_2021 dataset, available online at https://www.global-wheat.com/#about (accessed on 28 July 2024), comprises over 6000 images with a resolution of 1024 × 1024 pixels, annotated with more than 300,000 unique wheat spike instances. This dataset improves on its predecessor, GWHD_2020, in several ways. It adds 1722 images from five countries, contributing 81,553 new wheat head annotations and bringing the total to 275,187 wheat heads. To improve quality, low-quality images were removed and certain images were reannotated. The dataset was also expanded to cover more growth stages and images of wheat under various environmental conditions, providing a richer and more diverse representation of wheat phenotypes.

To ensure the reliability of the experimental findings, two rounds of trials were conducted on the three public datasets, with comparisons made against the YOLOv8 model. The first trial used a Batch Size of 48, whereas the second used a Batch Size of 8. The outcomes of these trials are detailed in Table 8.

As detailed in Table 8, the chili pepper dataset exhibited the most pronounced improvement with the larger Batch Size (48), achieving a 4.60% increase in the mAP@0.5 and a substantial 12.48% rise in the mAP@0.5:0.95. These gains underscore the robustness and accuracy of the YOLOv8n-POD model in complex scenarios. In contrast, the tomato dataset showed minimal gains at this Batch Size, with only a 0.06% increase in the mAP@0.5 and a 0.27% improvement in the mAP@0.5:0.95. Notably, with a Batch Size of 8, the YOLOv8n-POD model improved on all three datasets. For the tomato dataset, it achieved a 2.14% increase in the mAP@0.5 and a 2.18% improvement in the mAP@0.5:0.95. The chili pepper dataset again showed large gains, with a 4.50% increase in the mAP@0.5 and an 8.27% rise in the mAP@0.5:0.95. Finally, while the wheat dataset exhibited inconsistent performance with the larger Batch Size, it improved with a Batch Size of 8, achieving a 1.28% increase in the mAP@0.5 and a 1.01% improvement in the mAP@0.5:0.95.
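For readers less familiar with the two metrics, the sketch below shows how they relate under the common COCO-style convention, which we assume applies here: mAP@0.5 is the average precision (AP) at an IoU threshold of 0.5, and mAP@0.5:0.95 is the mean of the AP values at the ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The AP values in the example are hypothetical and are not taken from Table 8. For a single-class task, mAP equals the AP of that class.

```python
# The ten IoU thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid float drift).
iou_thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_by_threshold):
    """Mean of the AP values over the ten IoU thresholds."""
    return sum(ap_by_threshold[t] for t in iou_thresholds) / len(iou_thresholds)


# Hypothetical single-class AP values that fall as the IoU threshold tightens.
ap_example = {t: round(0.90 - 0.08 * i, 2) for i, t in enumerate(iou_thresholds)}

map_50 = ap_example[0.5]            # AP at IoU threshold 0.5
map_5095 = map_50_95(ap_example)    # averaged over all ten thresholds
```

Because mAP@0.5:0.95 also rewards tightly localized boxes, a model can gain noticeably more on it than on mAP@0.5, as reported above for the chili pepper dataset.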

It is evident from these results that although the YOLOv8n-POD model was specifically developed for pod detection in complex scenarios, it has demonstrated improved performance on the three public datasets compared to the original YOLOv8, showcasing enhanced detection capabilities and generalization ability. This indicates that the YOLOv8n-POD model is not only suitable for pod detection but also applicable to the detection and counting of other crops in complex scenarios.