TeaPickingNet: Towards Robust Recognition of Fine-Grained Picking Actions in Tea Gardens Using an Attention-Enhanced Framework

Abstract

1. Introduction

- Construction and open-sourcing of a novel, large-scale, labeled dataset tailored to tea garden behavior recognition.

- Enhancement of the YOLOv5 model using attention and context-aware modules for high-speed, high-accuracy object detection.

- Design of a channel-attentive SE-Faster R-CNN model to better handle occluded and multi-scale object features.

| Ref. | Year | Data Source | Main Task | Main Techniques | Key Findings |
|---|---|---|---|---|---|
| [27] | 2016 | Binocular images of maize and weeds (2–5 leaf stage) | Weed recognition for precision weeding | SVM with fused height and monocular image features; feature optimization via GA and PSO | Achieved 98.3% accuracy; fusion of height and image features improved weed discrimination by 5% |
| [28] | 2017 | 3637 wheat disease images (2014–2016 field captures from Spain/Germany) | Early detection of wheat diseases (septoria, rust, tan spot) using mobile devices | Hierarchical algorithm with: (1) Color constancy normalization (2) SLIC superpixel segmentation (3) Hot-spot detection (Naive Bayes) (4) LBP texture features (5) Random Forest classification | Achieved AUC > 0.8 for all diseases in field tests. Maintained performance across 7 mobile devices and variable lighting conditions |
| [35] | 2018 | 1184 cucumber disease images (field captures + PlantVillage/ForestryImages) | Cucumber disease recognition from leaf symptom images | Deep convolutional neural network (DCNN) | |
| [37] | 2019 | 1747 coffee leaf images (4 biotic stresses) | Multi-task classification and severity estimation | (1) Modified ResNet50 with parallel FC layers (2) Mixup data augmentation (3) t-SNE feature visualization | Achieved 94.05% classification accuracy and 84.76% severity estimation. Multi-task learning reduced training time by 40% compared to single-task models. |
| [29] | 2020 | Plant Village, Farm images, Internet images | Improve plant disease detection accuracy using segmented images | Segmented CNN with image segmentation, Full-image CNN for comparison, CNN architecture with convolution/pooling layers | S-CNN achieves 98.6% accuracy (vs. 42.3% for F-CNN) on independent test data. Segmentation improves model confidence by 23.6% on average |
| [33] | 2021 | UCF-101 dataset (13,320 videos) | Human action recognition using transfer learning | (1) CNN-LSTM (2) Transfer learning from ImageNet (3) Two-stream spatial-temporal fusion (4) Data augmentation techniques | (1) Best architecture achieved 94.87% accuracy (2) Transfer learning improved performance by 4–7% (3) Two-stream models outperformed single-stream (4) VGG16 performed best among pre-trained models |
| [34] | 2022 | Field studies from Chinese tea plantations | Review effects of intercropping on tea plantation ecosystems | Meta-analysis of 88 studies on tea intercropping systems | Intercropping improves microclimate (+15% temp stability), soil nutrients (+50% SOM), and tea quality (+20% amino acids) while reducing pests (−30%) |
| [39] | 2022 | UCF101, HMDB51, Kinetics-100, SSV2 datasets | Few-shot action recognition | (1) Prototype Aggregation Adaptive Loss (PAAL) (2) Cross-Enhanced Prototype (CEP) (3) Dynamic Temporal Transformation (DTT) (4) Reweighted Similarity Attention (RSA) | (1) Achieves 5-way 5-shot accuracy of 75.1% on HMDB51 (2) Outperforms TRX by 1.2% with fewer parameters (3) Adaptive margin improves performance by 0.6% (4) Temporal transformation enhances generalization |
| [38] | 2023 | 15 benchmark video datasets (NTU RGB+D, Kinetics, etc.) | Comprehensive review of deep learning for video action recognition | (1) Taxonomy of 9 architecture types (2) Performance comparison of 48 models (3) Temporal analysis frameworks | Identified 3D CNNs and hybrid architectures as most effective, with ViT-based models showing 12% accuracy gains over CNN baselines |
| [30] | 2024 | ETHZ Food-101, Vireo Food-172, UEC Food-256, ISIA Food-500 | Develop lightweight food image recognition model | Global Shuffle Convolution, Parallel block structure (local + global convolution), Reduced network layers | Achieves SOTA performance with fewer parameters (28% reduction) and FLOPs (37% reduction) while improving accuracy by 0.7% compared to MobileViTv2 |
| [31] | 2024 | FF++, DFDC, CelebDF, HFF datasets | Lightweight face forgery detection | (1) Spatial Group-wise Enhance (SGE) module (2) TraceBlock for global texture extraction (3) Dynamic Fusion Mechanism (DFM) (4) Dual-branch architecture | (1) Achieves 94.87% accuracy with only 963k parameters (2) Outperforms large models while being 10× smaller (3) Processes 236 fps for real-time detection (4) Effective cross-dataset generalization |
| [32] | 2025 | 4 pest datasets (D1-5869, D1-500, D2-1599, D2-545) | Optimal integration of attention mechanisms with MobileNetV2 for pest recognition | (1) Systematic attention integration framework (2) 6 attention variants (3) Layer-wise optimization (4) Performance-efficiency tradeoff analysis | BAM12 configuration achieved 96.7% accuracy (D1-5869) with only 3.8M parameters. MobileViT showed poorest performance (83% accuracy). Attention maps revealed improved pest localization |
| [36] | 2025 | UAV-based MS, thermal infrared, and RGB imagery | Drought stress classification in tea plantations | Multi-source data fusion (MS+TIR); Improved GA-BP neural network (RSDCM) | Multi-source fusion (MS+TIR) outperformed single-source data; RSDCM achieved the highest accuracy (98.3%) and strong generalization across all drought levels |

2. Materials and Methods

2.1. Dataset Introduction

- Enhanced data diversity and model robustness: Through data augmentation techniques such as rotation, cropping, contrast adjustment, and flipping, the dataset significantly improves sample diversity, thereby enhancing the model’s robustness in complex and variable field conditions.

- Precise and comprehensive annotations: The dataset includes accurate annotations such as bounding box coordinates, category labels, and object size parameters. These detailed annotations provide a solid foundation for effective feature extraction and model performance optimization.

- Support for multi-scale training: The dataset enables multi-scale training strategies, which effectively improve the model’s accuracy in detecting targets at varying distances and sizes, ensuring strong adaptability in real-world deployment scenarios.
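Geometric augmentations such as flipping only help detection training if the bounding-box annotations are transformed together with the pixels. The following is a minimal sketch of that bookkeeping, assuming boxes are stored as `(x_min, y_min, x_max, y_max)` in pixel coordinates; the helper names `hflip_image` and `hflip_box` are hypothetical, and the dataset's actual annotation layout may differ.

```python
def hflip_image(rows):
    """Horizontally flip an image stored as a list of pixel rows."""
    return [list(reversed(row)) for row in rows]


def hflip_box(box, image_width):
    """Mirror an (x_min, y_min, x_max, y_max) box across the vertical centerline.

    Assumes pixel-edge coordinates, so x maps to image_width - x.
    """
    x_min, y_min, x_max, y_max = box
    return (image_width - x_max, y_min, image_width - x_min, y_max)


# Toy 2 x 4 "image" and one box covering its left half.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
box = (0, 0, 2, 2)

flipped_image = hflip_image(image)
flipped_box = hflip_box(box, image_width=4)
```

After the flip, the box covers the right half of the image, which is where the originally boxed pixels now sit. Rotation and cropping need the same kind of coordinate update.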

| Category | Details |
|---|---|
| Research Field | Behavior Recognition in Tea Garden Scenarios |
| Data Type | Raw data: a total of 12,195 images saved in “jpg” format. The annotation labels for the raw data are summarized in “xlsx” format. |
| Data Source | The image data used in this study come from two sources: (1) public videos, mostly related to tea picking, collected via web scraping from mainstream platforms such as Baidu’s Haokan Video, Xigua Video, and Bilibili and sliced into individual frames; (2) on-site filming at tea gardens in the Yuhuatai area of Nanjing. |
| Accessibility | |

2.1.1. Data Collection

2.1.2. Data Filtering

2.1.3. Data Augmentation

2.1.4. Data Annotation

2.2. Methods

2.2.1. Improvement of Picking Behavior Recognition Model Based on YOLO

- (1) Efficient Multi-scale Attention (EMA)

- (2)

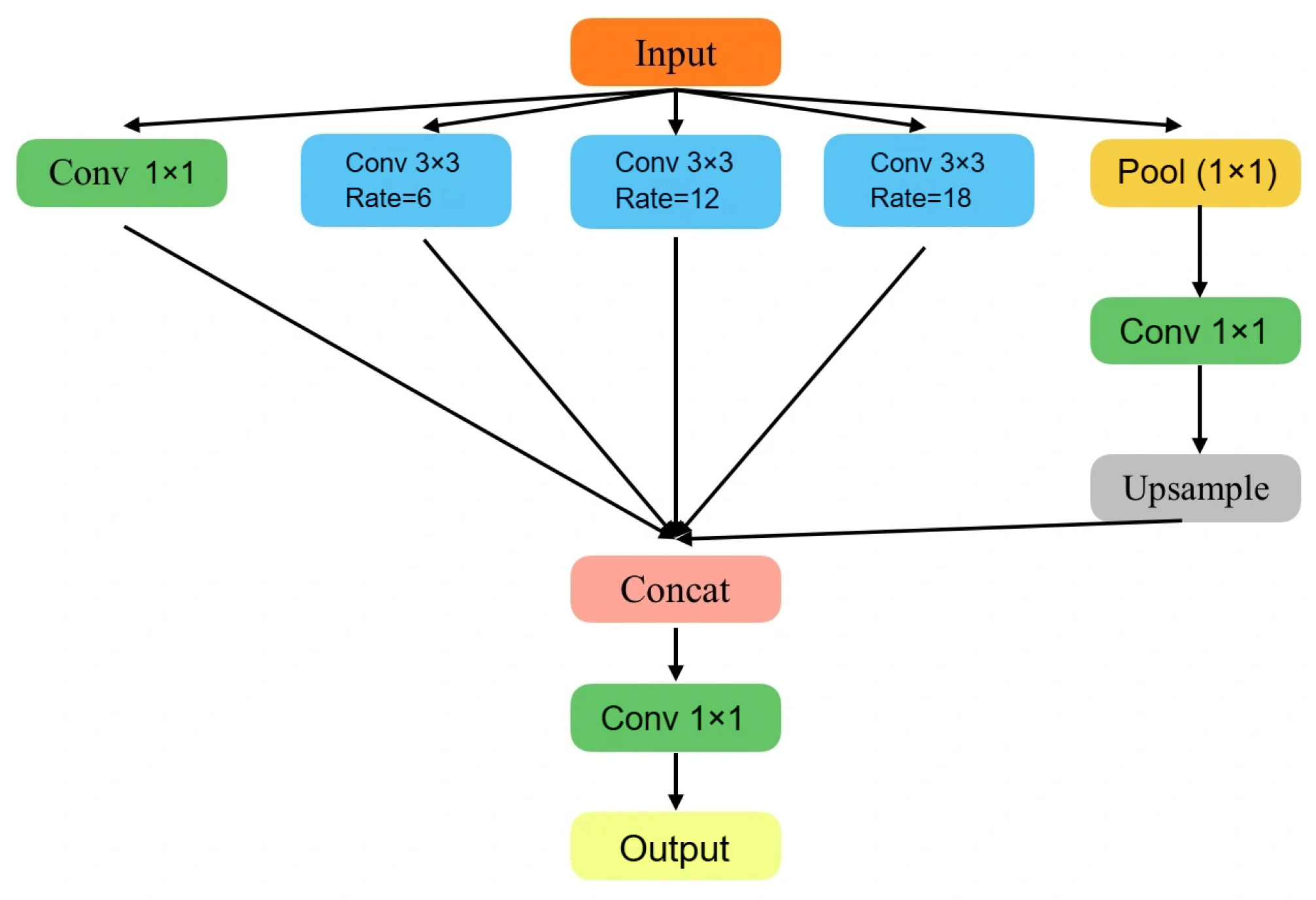

- The Atrous Spatial Pyramid Pooling (ASPP) Module

- (3) The SIoU Loss Function

- The angle term effectively handles detection box rotation caused by the body tilt of the picker.

- The distance term adapts to minor position differences among dense plants.

- It maintains high sensitivity to partially occluded targets.

Therefore, SIoU is introduced in this experiment to improve accuracy.
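The angle and distance terms listed above can be sketched for a single pair of boxes as follows. This is an illustrative sketch of the commonly cited SIoU formulation (IoU plus angle, distance, and shape costs), not the authors' implementation. The corner-format boxes, the shape exponent `theta=4.0`, and the function name `siou_loss` are assumptions.

```python
import math


def siou_loss(box, gt, theta=4.0, eps=1e-9):
    """SIoU-style loss for two (x1, y1, x2, y2) boxes; lower is better."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU.
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    iou = inter / (w * h + gw * gh - inter + eps)

    # Offsets between box centers and size of the smallest enclosing box.
    dx = (gx1 + gx2) / 2 - (x1 + x2) / 2
    dy = (gy1 + gy2) / 2 - (y1 + y2) / 2
    sigma = math.hypot(dx, dy) + eps
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)

    # Angle cost: 0 when the centers are axis-aligned, 1 at 45 degrees.
    sin_alpha = min(abs(dy) / sigma, 1.0)
    angle = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost, sharpened or relaxed by the angle cost through gamma.
    gamma = 2 - angle
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    dist = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative mismatch in width and height.
    omega_w = abs(w - gw) / (max(w, gw) + eps)
    omega_h = abs(h - gh) / (max(h, gh) + eps)
    shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (dist + shape) / 2


same = siou_loss((0, 0, 10, 10), (0, 0, 10, 10))
near = siou_loss((1, 0, 11, 10), (0, 0, 10, 10))
far = siou_loss((20, 20, 30, 30), (0, 0, 10, 10))
```

The loss is close to zero for identical boxes and grows as the predicted box drifts away. Unlike plain IoU, it still differs between non-overlapping boxes through the distance term.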

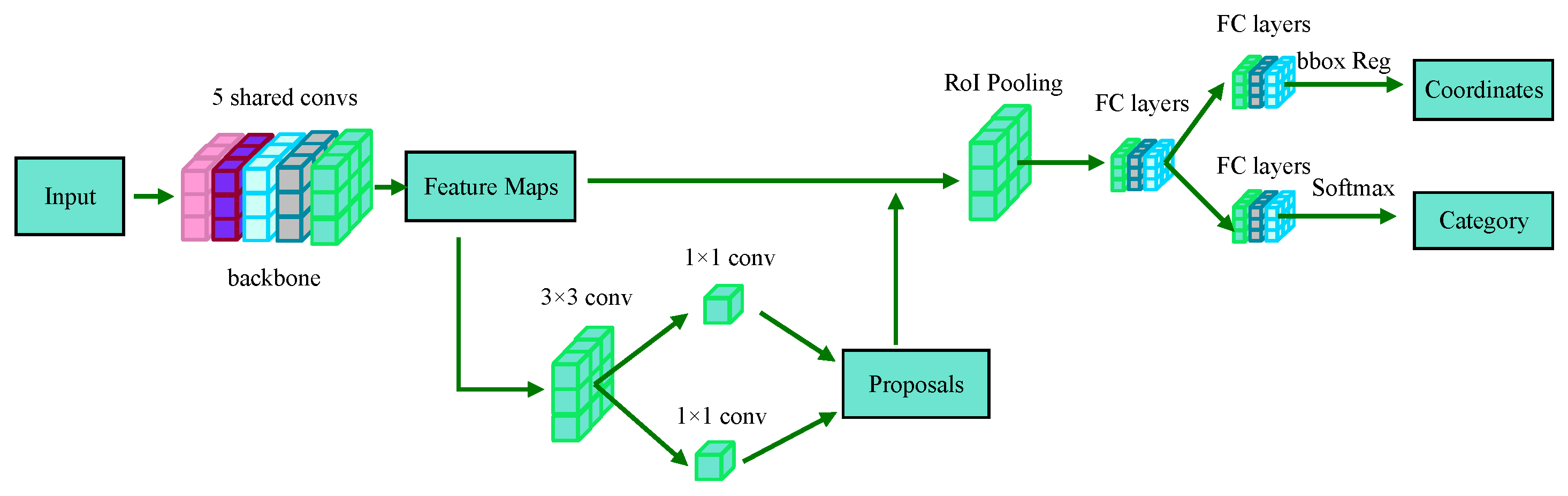

2.2.2. Algorithm Improvement Based on Faster R-CNN

- (1)

- The Region Proposal Network (RPN)

- (2)

- The Anchor Size Setting

- (3)

- Network Architecture Enhancement Based on The Attention Mechanism

3. Results

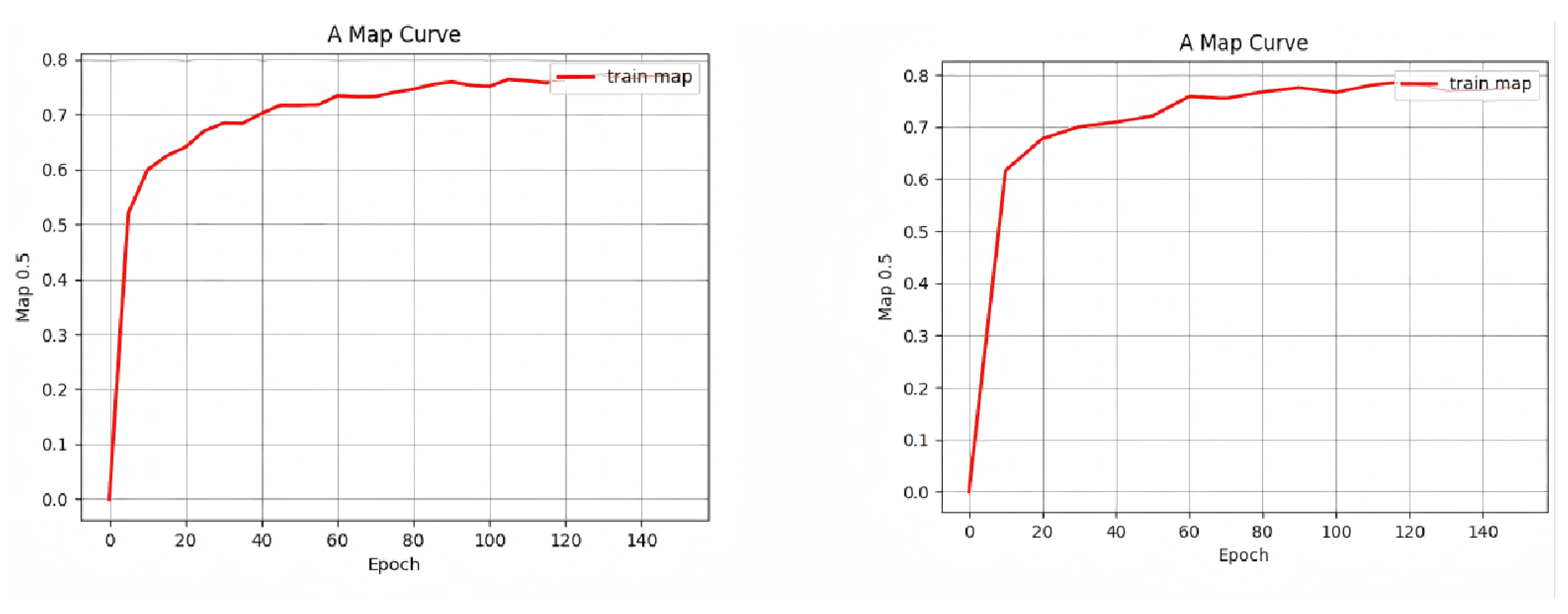

3.1. Ablation Experiment

- The choice of loss function has a significant impact on model performance. Compared with GIoU and CIoU, SIoU increases detection accuracy by 1.1% to 1.8% on average.

- Among the three attention mechanisms, EMA exhibits the best generalization performance. Although SE achieves the highest precision of 0.979, it has a relatively low recall, indicating that SE may excessively suppress effective features of some occluded targets. The SA module performs stably across all performance metrics and can be used when resources are limited.
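To make the suppression argument for SE concrete, the sketch below implements a squeeze-and-excitation gate in plain Python: global average pooling per channel, a two-layer bottleneck with ReLU and sigmoid, and channel-wise rescaling. The weights are hand-picked for illustration, biases are omitted, and the name `se_gate` is hypothetical. None of this reflects the trained model.

```python
import math


def se_gate(feature_map, w1, w2):
    """Apply an SE-style channel gate.

    feature_map: list of C channels, each an H x W list of lists.
    w1: bottleneck weights, shape (C // r) x C.
    w2: expansion weights, shape C x (C // r).
    Returns (gates, scaled_feature_map).
    """
    # Squeeze: one global average per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid.
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    gates = [1 / (1 + math.exp(-sum(w * hj for w, hj in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch]
              for g, ch in zip(gates, feature_map)]
    return gates, scaled


# Channel 0 responds strongly; channel 1 responds weakly (e.g., an occluded target).
features = [
    [[4.0, 4.0], [4.0, 4.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
w1 = [[1.0, -1.0]]      # illustrative weights, not learned
w2 = [[2.0], [-2.0]]
gates, scaled = se_gate(features, w1, w2)
```

With these weights the weak channel receives a gate near zero, so whatever evidence it carried is almost removed. A gate like this can raise precision while lowering recall on occluded targets.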

3.2. The Training Efficiency and Loss Convergence Characteristics

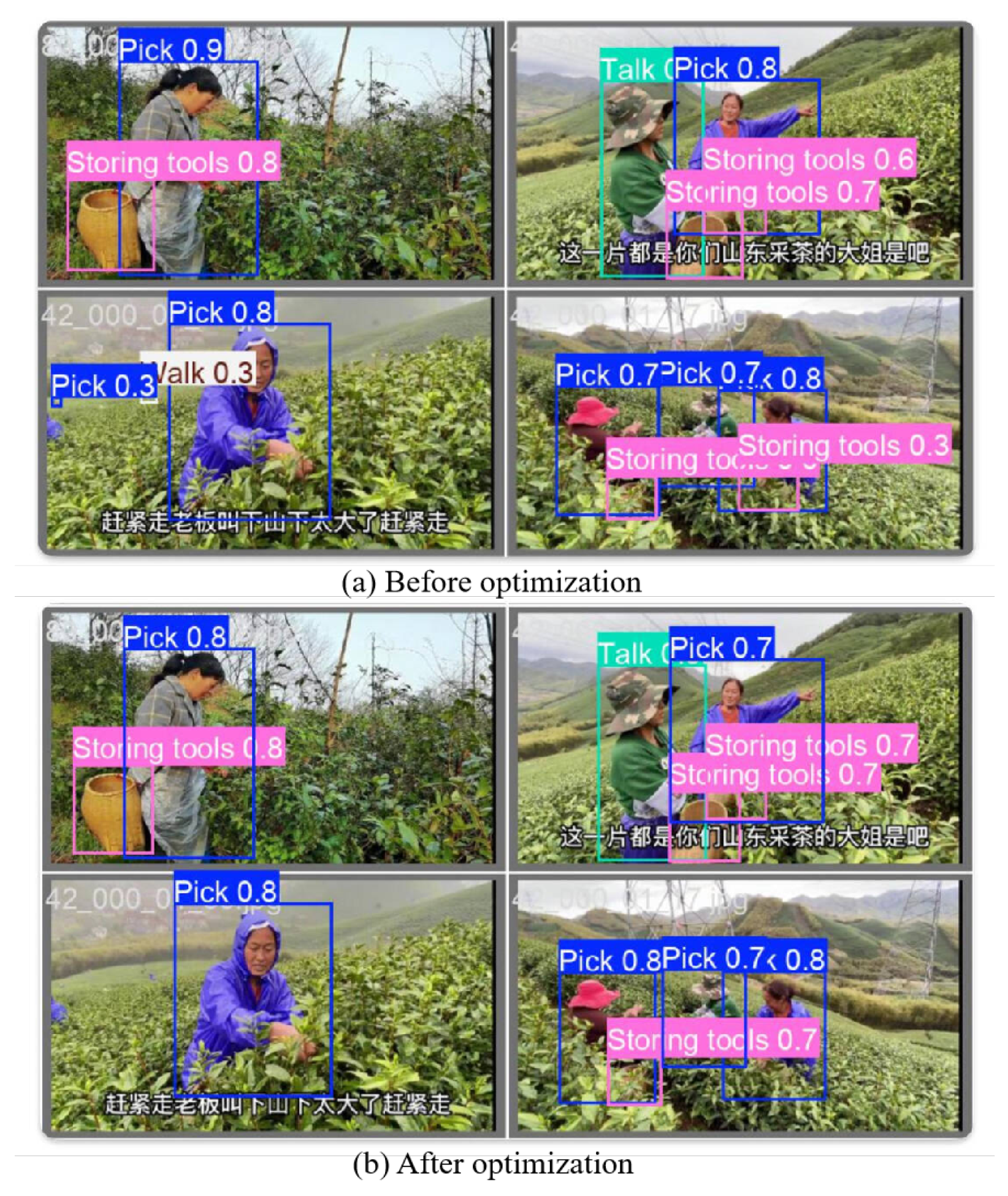

4. Discussion

4.1. Model Improvements for Complex Tea Garden Scenarios

- The YOLOv5 branch, enhanced with EMA attention and ASPP modules, enables more effective multi-scale feature extraction and background suppression, improving localization accuracy under cluttered conditions.

- The adoption of SIoU loss, incorporating angle and distance penalties, improves bounding box regression in scenes where pickers exhibit diverse postures and orientations, which is common in freeform tea-picking activities.

- The Faster R-CNN branch, augmented with multi-level SE attention and anchor box optimization, further enhances detection of small or occluded targets, particularly in long-shot or top-view images commonly seen in surveillance and UAV-based data collection.

4.2. Ablation Insights: Performance Gains from Attention and Loss Functions

- The EMA + SIoU combination achieved the most balanced results (mAP@0.5 of 0.76, precision of 0.96, and recall of 0.94), showing its strong generalization ability in dynamic outdoor scenes.

- While SE attention achieved the highest precision (0.97), it slightly reduced recall, indicating potential overemphasis on dominant features and reduced sensitivity to occluded objects.

- GIoU and CIoU, while computationally simpler, demonstrated less robustness in bounding box localization for tea-picking scenarios with irregular movements and partial visibility.
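One way to compare the precision and recall trade-offs discussed above is the F1 score, the harmonic mean of the two. F1 is not reported in the source; the sketch below derives it from the precision and recall values in the ablation table, and the names `RESULTS` and `f1` are illustrative.

```python
# (precision, recall) per configuration, copied from the ablation table.
RESULTS = {
    "CIoU + EMA": (0.92, 0.91),
    "GIoU + EMA": (0.93, 0.95),
    "SIoU + EMA": (0.96, 0.94),
    "CIoU + SA": (0.93, 0.94),
    "GIoU + SA": (0.93, 0.92),
    "SIoU + SA": (0.96, 0.93),
    "CIoU + SE": (0.96, 0.92),
    "GIoU + SE": (0.94, 0.94),
    "SIoU + SE": (0.97, 0.90),
}


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


scores = {name: f1(p, r) for name, (p, r) in RESULTS.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for name in ranked:
    print(f"{name:<11} F1 = {scores[name]:.3f}")
```

Under this derived metric, SIoU + EMA ranks first. SIoU + SE falls behind despite its higher precision, because its recall is the lowest in the table.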

4.3. Dataset Generalization and Real-World Deployability

- High diversity: covering 138 unique scenarios with variations in terrain, lighting, weather, camera angle, and distance.

- Comprehensive annotations: enabling multi-scale training and robust model learning.

- Balance between field and web-collected data: ensuring both real-world variability and controlled quality.

4.4. Remaining Challenges and Future Work

- Lack of temporal modeling: Current frame-based recognition ignores temporal dependencies across frames, which are vital for understanding dynamic behaviors (e.g., transitions from standing to picking, sequential hand movements in plucking). This causes inaccuracies in recognizing multi-frame actions, such as misclassifying a mid-picking pause as a static posture.

- Future work will adopt video-based architectures (TSM, SlowFast) to capture motion patterns. TSM will model short-term motion via temporal feature shifting; SlowFast will combine high-resolution spatial details (slow pathway) and high-frame-rate temporal dynamics (fast pathway) for short/long-term dependencies. Temporal attention will weight key frames, aiming to reduce dynamic behavior recognition errors by at least 15%.

- Model efficiency and deployment: The architecture increases computational load and memory usage, limiting deployment on resource-constrained edge devices (drones, embedded systems) in agriculture. For example, drone-based real-time monitoring may suffer delays due to high computational demands.

- Lightweight strategies (pruning, quantization, edge framework integration) will be explored. Pruning removes redundant neurons or layers; quantization converts weights and activations to lower bit-widths to reduce computational complexity.

- Scene-specific data limitations: The dataset, though diverse in tea varieties/methods, is mainly from Chinese tea gardens. Unique local factors (soil, climate, plant growth) may cause overfitting, degrading performance in other regions or agricultural contexts (e.g., fruit picking).
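The temporal feature shifting mentioned for TSM in the future-work item above can be illustrated on a toy clip. In this sketch each frame is reduced to one scalar per channel, and `fold_div=8` is an assumed setting. One fold of channels takes its values from the next frame, one fold from the previous frame, and the rest stay in place. The function name `temporal_shift` is hypothetical.

```python
def temporal_shift(clip, fold_div=8):
    """Shift a fraction of channels along the time axis.

    clip[t][c] is the feature of channel c at frame t.
    Channels [0, fold) read from frame t + 1, channels [fold, 2 * fold)
    read from frame t - 1, and the remaining channels are unchanged.
    Positions with no source frame are zero-padded.
    """
    num_frames = len(clip)
    num_channels = len(clip[0])
    fold = num_channels // fold_div
    out = [[0.0] * num_channels for _ in range(num_frames)]
    for t in range(num_frames):
        for c in range(num_channels):
            if c < fold:
                src = t + 1      # pull information from the future frame
            elif c < 2 * fold:
                src = t - 1      # pull information from the past frame
            else:
                src = t          # leave the channel untouched
            if 0 <= src < num_frames:
                out[t][c] = clip[src][c]
    return out


# 3 frames x 8 channels; the value encodes (frame, channel) as 10 * t + c.
clip = [[10 * t + c for c in range(8)] for t in range(3)]
shifted = temporal_shift(clip)
```

After the shift, each frame carries a few channels from its neighbours, so a purely per-frame network that follows it can already see short-term motion at no extra parameter cost.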

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Mao, Y.; Li, H.; Wang, Y.; Xu, Y.; Fan, K.; Shen, J.; Han, X.; Ma, Q.; Shi, H.; Bi, C.; et al. A Novel Strategy for Constructing Ecological Index of Tea Plantations Integrating Remote Sensing and Environmental Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 12772–12786.

2. Li, H.; Wang, Y.; Fan, K.; Mao, Y.; Shen, Y.; Ding, Z. Evaluation of important phenotypic parameters of tea plantations using multi-source remote sensing data. Front. Plant Sci. 2022, 13, 898962.

3. Luo, B.; Sun, H.; Zhang, L.; Chen, F.; Wu, K. Advances in the tea plants phenotyping using hyperspectral imaging technology. Front. Plant Sci. 2024, 15, 1442225.

4. Li, H.; Mao, Y.; Wang, Y.; Fan, K.; Shi, H.; Sun, L.; Shen, J.; Shen, Y.; Xu, Y.; Ding, Z. Environmental Simulation Model for Rapid Prediction of Tea Seedling Growth. Agronomy 2022, 12, 3165.

5. Ndlovu, H.S.; Odindi, J.; Sibanda, M.; Mutanga, O. A systematic review on the application of UAV-based thermal remote sensing for assessing and monitoring crop water status in crop farming systems. Int. J. Remote Sens. 2024, 45, 4923–4960.

6. Wang, S.M.; Yu, P.C.; Ma, J.; Ouyang, J.X.; Zhao, Z.M.; Xuan, Y.; Fan, D.M.; Yu, J.F.; Wang, X.C.; Zheng, X.Q. Tea yield estimation using UAV images and deep learning. Ind. Crops Prod. 2024, 212, 118358.

7. Luo, D.; Gao, Y.; Wang, Y.; Shi, Y.C.; Chen, S.; Ding, Z.; Fan, K. Using UAV image data to monitor the effects of different nitrogen application rates on tea quality. J. Sci. Food Agric. 2021, 102, 1540–1549.

8. Sun, L.; Shen, J.; Mao, Y.; Li, X.; Fan, K.; Qian, W.; Wang, Y.; Bi, C.; Wang, H.; Xu, Y.; et al. Discrimination of tea varieties and bud sprouting phenology using UAV-based RGB and multispectral images. Int. J. Remote Sens. 2025, 46, 6214–6234.

9. Zhao, H.; Zhuge, Y.; Wang, Y.; Wang, L.; Lu, H.; Zeng, Y. Learning Universal Features for Generalizable Image Forgery Localization. arXiv 2025, arXiv:2504.07462.

10. Møller, H.; Berkes, F.; Lyver, P.O.; Kislalioglu, M.S. Combining Science and Traditional Ecological Knowledge: Monitoring Populations for Co-Management. Ecol. Soc. 2004, 9, 2.

11. Haralick, R.M.; Shanmugam, K.S.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

12. Yang, X.; Pan, L.; Wang, D.; Zeng, Y.; Zhu, W.; Jiao, D.; Sun, Z.; Sun, C.; Zhou, C. FARnet: Farming Action Recognition From Videos Based on Coordinate Attention and YOLOv7-tiny Network in Aquaculture. J. ASABE 2023, 66, 909–920.

13. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

14. Li, H.; Mao, Y.; Shi, H.; Fan, K.; Sun, L.; Zaman, S.; Shen, J.; Li, X.; Bi, C.; Shen, Y.; et al. Establishment of deep learning model for the growth of tea cutting seedlings based on hyperspectral imaging technique. Sci. Hortic. 2024, 331, 113106.

15. Mazumdar, A.; Singh, J.; Tomar, Y.S.; Bora, P.K. Universal Image Manipulation Detection using Deep Siamese Convolutional Neural Network. arXiv 2018, arXiv:1808.06323.

16. Esgario, J.G.M.; Castro, P.B.C.; Tassis, L.M.; Krohling, R.A. An app to assist farmers in the identification of diseases and pests of coffee leaves using deep learning. Inf. Process. Agric. 2022, 9, 38–47.

17. Guo, Z.; Wang, L.; Yang, W.; Yang, G.; Li, K. LDFnet: Lightweight Dynamic Fusion Network for Face Forgery Detection by Integrating Local Artifacts and Global Texture Information. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 1255–1265.

18. Zou, M.; Zhou, Y.; Jiang, X.; Gao, J.; Yu, X.; Ma, X. Spatio-Temporal Behavior Detection in Field Manual Labor Based on Improved SlowFast Architecture. Appl. Sci. 2024, 14, 2976.

19. Mazumdar, A.; Bora, P.K. Siamese convolutional neural network-based approach towards universal image forensics. IET Image Process. 2020, 14, 3105–3116.

20. Dai, C.; Liu, X.; Lai, J. Human action recognition using two-stream attention based LSTM networks. Appl. Soft Comput. 2020, 86, 105820.

21. Chen, S.; Wang, Y.; Lei, Z. Two Stream Convolution Fusion Network based on Attention Mechanism. J. Phys. Conf. Ser. 2021, 1920, 012070.

22. Ullah, H.; Munir, A. Human Activity Recognition Using Cascaded Dual Attention CNN and Bi-Directional GRU Framework. J. Imaging 2022, 9, 130.

23. Zhang, M.; Hu, H.; Li, Z.; Chen, J. Action detection with two-stream enhanced detector. Vis. Comput. 2022, 39, 1193–1204.

24. Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D.D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

25. Sun, Z.; Liu, J.; Ke, Q.; Rahmani, H. Human Action Recognition From Various Data Modalities: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 45, 3200–3225.

26. Han, R.; Shu, L.; Li, K. A Method for Plant Disease Enhance Detection Based on Improved YOLOv8. In Proceedings of the 2024 IEEE 33rd International Symposium on Industrial Electronics (ISIE), Ulsan, Republic of Korea, 18–21 June 2024; pp. 1–6.

27. Can, W.; Zhiwei, L. Weed recognition using SVM model with fusion height and monocular image features. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2016, 32, 165–174.

28. Johannes, A.; Picon, A.; Alvarez-Gila, A.; Echazarra, J.; Rodriguez-Vaamonde, S.; Navajas, A.D.; Ortiz-Barredo, A. Automatic plant disease diagnosis using mobile capture devices, applied on a wheat use case. Comput. Electron. Agric. 2017, 138, 200–209.

29. Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

30. Sheng, G.; Min, W.; Yao, T.; Song, J.; Yang, Y.; Wang, L.; Jiang, S. Lightweight Food Image Recognition With Global Shuffle Convolution. IEEE Trans. AgriFood Electron. 2024, 2, 392–402.

31. Gong, H.; Zhuang, W. An Improved Method for Extracting Inter-Row Navigation Lines in Nighttime Maize Crops Using YOLOv7-Tiny. IEEE Access 2024, 12, 27444–27455.

32. Janarthan, S.; Thuseethan, S.; Joseph, C.; Vigneshwaran, P.; Rajasegarar, S.; Yearwood, J. Efficient Attention-Lightweight Deep Learning Architecture Integration for Plant Pest Recognition. IEEE Trans. AgriFood Electron. 2025, 3, 548–560.

33. Abdulazeem, Y.; Balaha, H.M.; Bahgat, W.M.; Badawy, M. Human Action Recognition Based on Transfer Learning Approach. IEEE Access 2021, 9, 82058–82069.

34. Lei, X.; Wang, T.; Yang, B.; Duan, Y.; Zhou, L.; Zou, Z.; Ma, Y.; Zhu, X.; Fang, W. Progress and perspective on intercropping patterns in tea plantations. Beverage Plant Res. 2022, 2, 18.

35. Ma, J.; Du, K.; Zheng, F.; Zhang, L.; Gong, Z.; Sun, Z. A recognition method for cucumber diseases using leaf symptom images based on deep convolutional neural network. Comput. Electron. Agric. 2018, 154, 18–24.

36. Xu, Y.; Mao, Y.; Li, H.; Li, X.; Sun, L.; Fan, K.; Li, Z.; Gong, S.; Ding, Z.; Wang, Y. Construction and application of a drought classification model for tea plantations based on multi-source remote sensing. Smart Agric. Technol. 2025, 12, 101132.

37. Esgario, J.G.; Krohling, R.A.; Ventura, J.A. Deep learning for classification and severity estimation of coffee leaf biotic stress. Comput. Electron. Agric. 2019, 169, 105162.

38. Wang, Z.; Yang, Y.; Liu, Z.; Zheng, Y. Deep Neural Networks in Video Human Action Recognition: A Review. arXiv 2023, arXiv:2305.15692.

39. Liu, S.; Jiang, M.; Kong, J. Multidimensional Prototype Refactor Enhanced Network for Few-Shot Action Recognition. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6955–6966.

40. Iwana, B.K.; Uchida, S. An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 2020, 16, e0254841.

41. Wen, Q.; Sun, L.; Song, X.; Gao, J.; Wang, X.; Xu, H. Time Series Data Augmentation for Deep Learning: A Survey. In Proceedings of the International Joint Conference on Artificial Intelligence, Virtual, 11–17 July 2020.

42. Sawicki, A.; Saeed, K. Application of LSTM Networks for Human Gait-Based Identification. In Theory and Engineering of Dependable Computer Systems and Networks; Zamojski, W., Mazurkiewicz, J., Sugier, J., Walkowiak, T., Kacprzyk, J., Eds.; Springer: Cham, Switzerland, 2021.

43. Abujrida, H.; Agu, E.O.; Pahlavan, K. DeepaMed: Deep learning-based medication adherence of Parkinson’s disease using smartphone gait analysis. Smart Health 2023, 30, 100430.

44. Chen, Y.; Dai, X.; Chen, D.; Liu, M.; Dong, X.; Yuan, L.; Liu, Z. Mobile-Former: Bridging MobileNet and Transformer. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5260–5269.

45. Tang, Z.; Li, M.; Wang, X. Mapping Tea Plantations from VHR Images Using OBIA and Convolutional Neural Networks. Remote Sens. 2020, 12, 2935.

46. Tu, J.; Liu, H.; Meng, F.; Liu, M.; Ding, R. Spatial-Temporal Data Augmentation Based on LSTM Autoencoder Network for Skeleton-Based Human Action Recognition. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3478–3482.

47. Sawicki, A.; Zieliński, S.K. Augmentation of Segmented Motion Capture Data for Improving Generalization of Deep Neural Networks. In Proceedings of the International Conference on Computer Information Systems and Industrial Management Applications, Bialystok, Poland, 16–18 October 2020.

48. Thakur, D.; Kumar, V. FruitVision: Dual-Attention Embedded AI System for Precise Apple Counting Using Edge Computing. IEEE Trans. AgriFood Electron. 2024, 2, 445–459.

49. Han, R.; Zheng, Y.; Tian, R.; Shu, L.; Jing, X.; Yang, F. An image dataset for analyzing tea picking behavior in tea plantations. Front. Plant Sci. 2025, 15, 1473558.

| Number | The Significance of Picking Behavior Identification |
|---|---|
| 1 | Intervene in unauthorized picking in non-tourist areas promptly |
| 2 | Identify under-picked zones via data analysis for timely inspection |
| 3 | Monitor visitor picking activities to maintain order in tourist plantations |
| 4 | Analyze historical data for scientific guidance to boost tea garden profits |
| 5 | Prohibit picking in rare tree plantations and ensure standardized picking in production gardens |

| Division | Classification | Number of Slices |
|---|---|---|
| Number of Pickers | Single Person | 5654 |
| Number of Pickers | Multiple People | 6541 |
| Picking Method | Manual | 11,896 |
| Picking Method | Machine | 299 |
| Picking Weather | Sunny | 3001 |
| Picking Weather | Cloudy | 4271 |
| Picking Weather | Overcast | 3852 |
| Picking Weather | Foggy | 482 |
| Picking Weather | Rainy | 214 |
| Shooting Distance | Close-up | 10,400 |
| Shooting Distance | Long-shot | 754 |
| Shooting Distance | Close-up + Long-shot | 1037 |

| Parameter Name | Parameter Setting | Parameter Name | Parameter Setting |
|---|---|---|---|
| Cache | True | Close mosaic | 20 |
| Epoch | 300 | Scale | 0.75 |
| Single cls | False | Mosaic | 1.0 |
| Batch | 32 | Mixup | 0.2 |

| Anchor Size | Anchor Aspect Ratio |
|---|---|
| 12 × 12 | 1:1 |
| 12 × 12 | 2:3 |
| 12 × 12 | 3:2 |
| 24 × 24 | 1:1 |
| 24 × 24 | 4:3 |
| 24 × 24 | 3:4 |
| 96 × 96 | 1:1 |
| 96 × 96 | 4:3 |
| 96 × 96 | 16:9 |
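
The nine anchors in the table above combine three base sizes with several aspect ratios. The sketch below turns each (size, ratio) pair into a concrete width and height. It assumes, as is common for region proposal networks, that each ratio is read as width:height and that the anchor area stays equal to the base size squared. The source does not state either convention, and the names `anchor_wh` and `ANCHOR_SPECS` are hypothetical.

```python
import math

# (base size in pixels, (ratio_w, ratio_h)) pairs copied from the anchor table.
ANCHOR_SPECS = [
    (12, (1, 1)), (12, (2, 3)), (12, (3, 2)),
    (24, (1, 1)), (24, (4, 3)), (24, (3, 4)),
    (96, (1, 1)), (96, (4, 3)), (96, (16, 9)),
]


def anchor_wh(base, ratio_w, ratio_h):
    """Return (width, height) with area base * base and width:height = ratio_w:ratio_h."""
    r = ratio_w / ratio_h
    return base * math.sqrt(r), base / math.sqrt(r)


anchors = [anchor_wh(base, *ratio) for base, ratio in ANCHOR_SPECS]

for (base, ratio), (w, h) in zip(ANCHOR_SPECS, anchors):
    print(f"{base:>2} px, {ratio[0]}:{ratio[1]} -> {w:.1f} x {h:.1f}")
```

Keeping the area fixed means that each base size still targets objects of one scale, while the ratios cover upright and wide postures.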

| Model | Precision | Recall | mAP@0.5 |
|---|---|---|---|
| CIoU + EMA | 0.92 | 0.91 | 0.73 |
| GIoU + EMA | 0.93 | 0.95 | 0.74 |
| SIoU + EMA | 0.96 | 0.94 | 0.76 |
| CIoU + SA | 0.93 | 0.94 | 0.71 |
| GIoU + SA | 0.93 | 0.92 | 0.76 |
| SIoU + SA | 0.96 | 0.93 | 0.74 |
| CIoU + SE | 0.96 | 0.92 | 0.79 |
| GIoU + SE | 0.94 | 0.94 | 0.76 |
| SIoU + SE | 0.97 | 0.90 | 0.77 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, R.; Zheng, Y.; Shu, L.; Cielniak, G. TeaPickingNet: Towards Robust Recognition of Fine-Grained Picking Actions in Tea Gardens Using an Attention-Enhanced Framework. Agriculture 2025, 15, 2441. https://doi.org/10.3390/agriculture15232441
