A RAG-Augmented LLM for Yunnan Arabica Coffee Cultivation

Abstract

1. Introduction

2. Materials and Methods

2.1. RAG Pipeline

2.2. Knowledge Base Construction and Stable Citation Identifiers

2.3. Hybrid Retrieval and Fusion (RRF)
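As a minimal illustration of the fusion step named in this heading, the sketch below applies reciprocal rank fusion to two ranked lists of chunk IDs. It assumes the standard formulation of Cormack et al. (score = sum of 1/(k + rank) over the input rankings) with k = 60; the function name `rrf_fuse`, the value of k, and the sample rankings are illustrative assumptions, not settings confirmed by this paper.

```python
from collections import defaultdict


def rrf_fuse(rankings, k=60):
    """Fuse ranked lists of chunk IDs with reciprocal rank fusion.

    rankings: list of lists, each ordered best-first.
    k: smoothing constant (60 follows Cormack et al.; assumed here).
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the order is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical rankings from a lexical (BM25) and a dense retriever.
bm25_hits = ["e76faa05#2", "e76faa05#1", "e76faa05#3"]
dense_hits = ["e76faa05#1", "e76faa05#3", "e76faa05#2"]
fused = rrf_fuse([bm25_hits, dense_hits])
```

Because RRF uses only ranks, the BM25 and dense scores never need to be put on a common scale, which is the usual reason for choosing it in hybrid retrieval.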

2.4. Cross-Encoder Reranking

2.5. History-Aware Query Rewriting and In-Prompt History Injection (HAR and IHI)

2.6. Evaluation Data and Gold Construction

3. Results

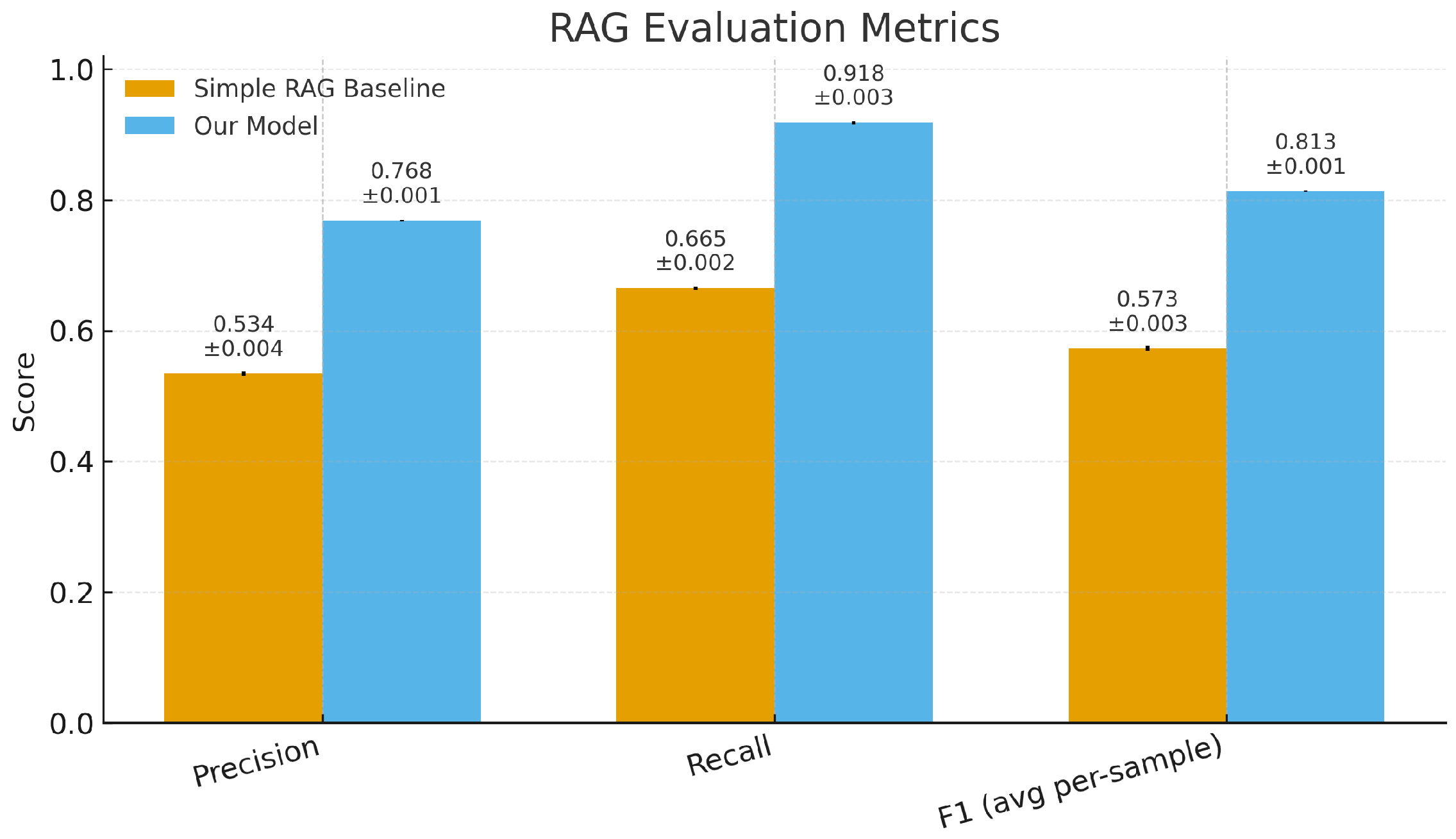

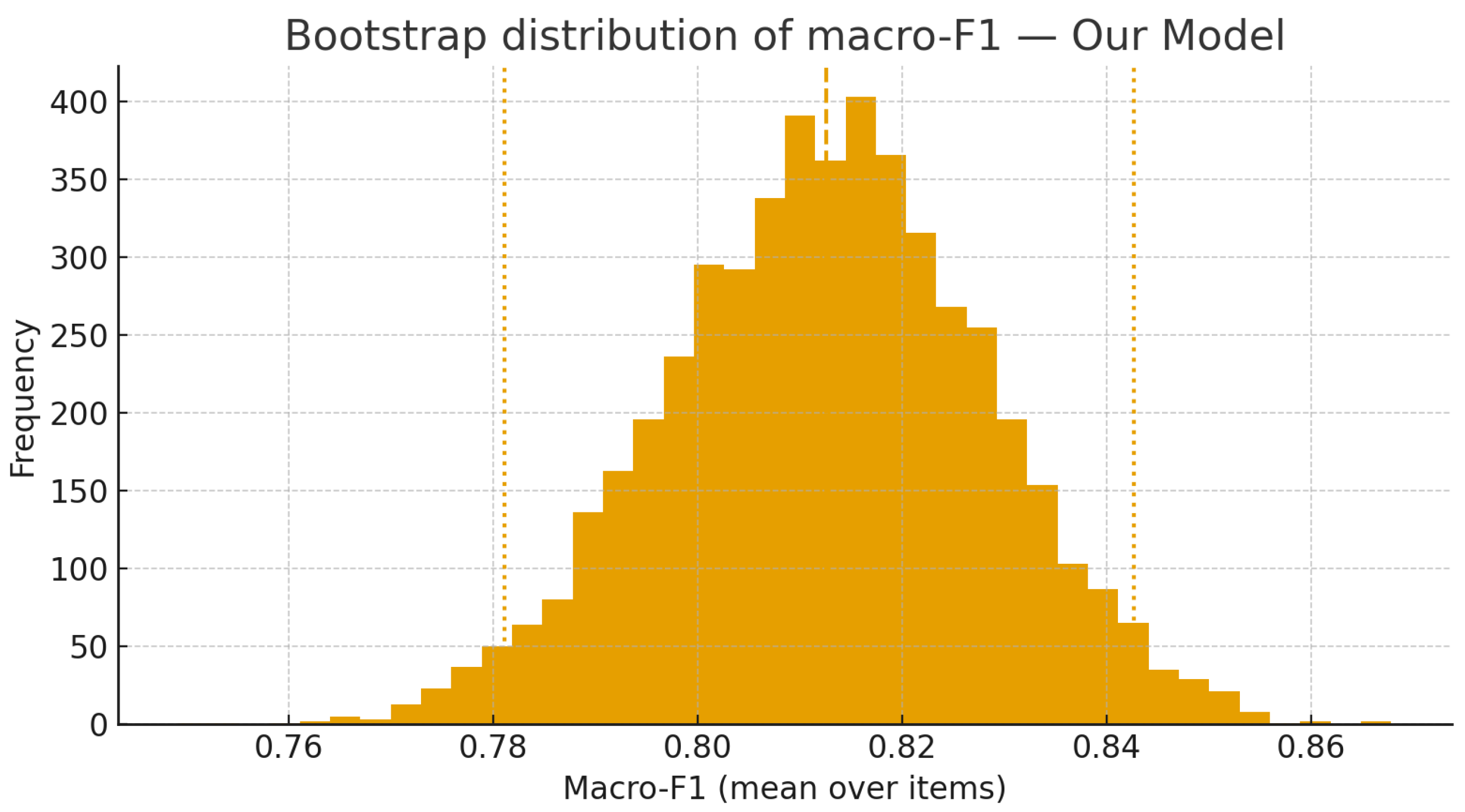

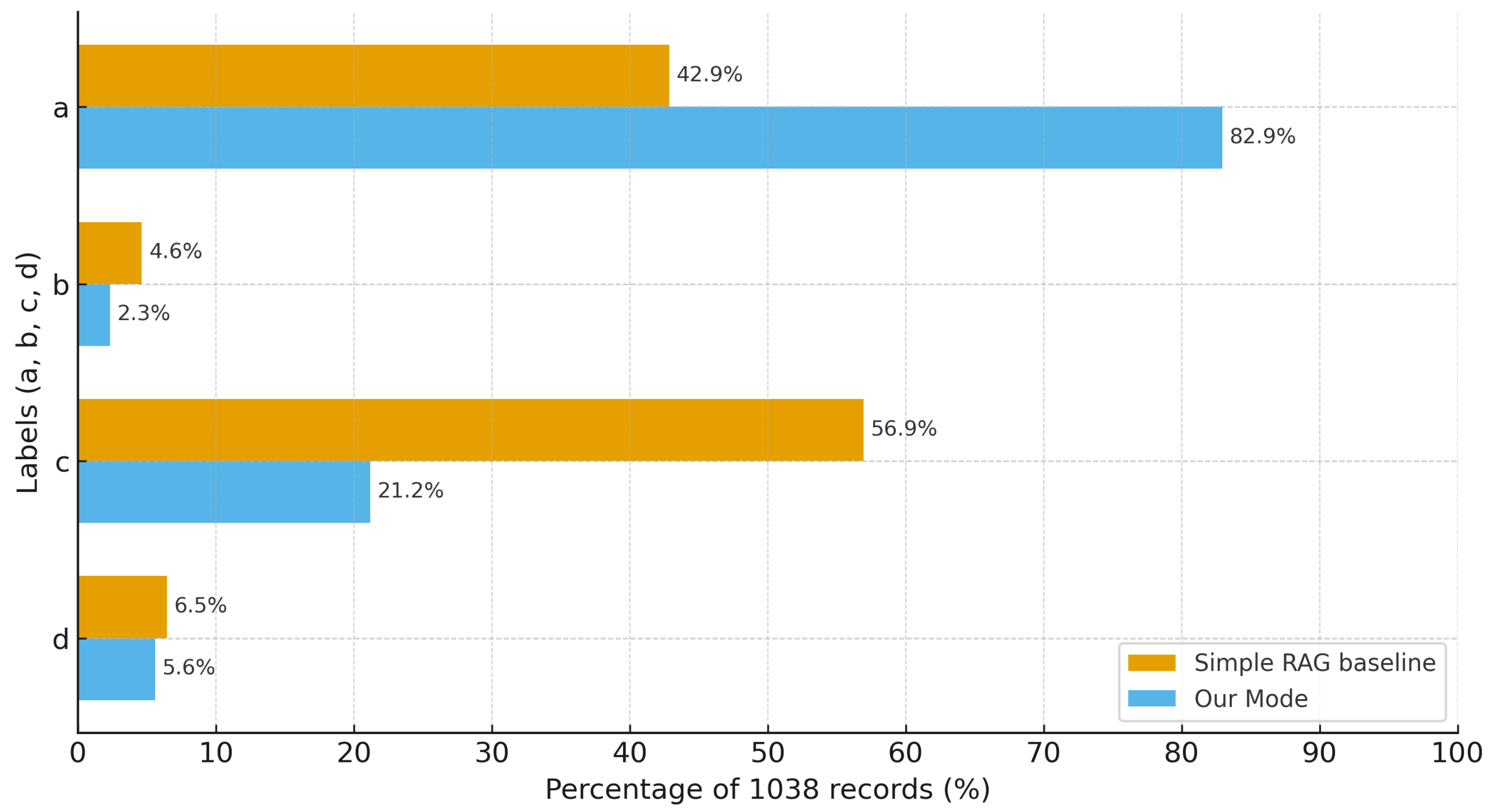

3.1. Overall Performance

3.2. Human Evaluation

3.3. Ablation Studies

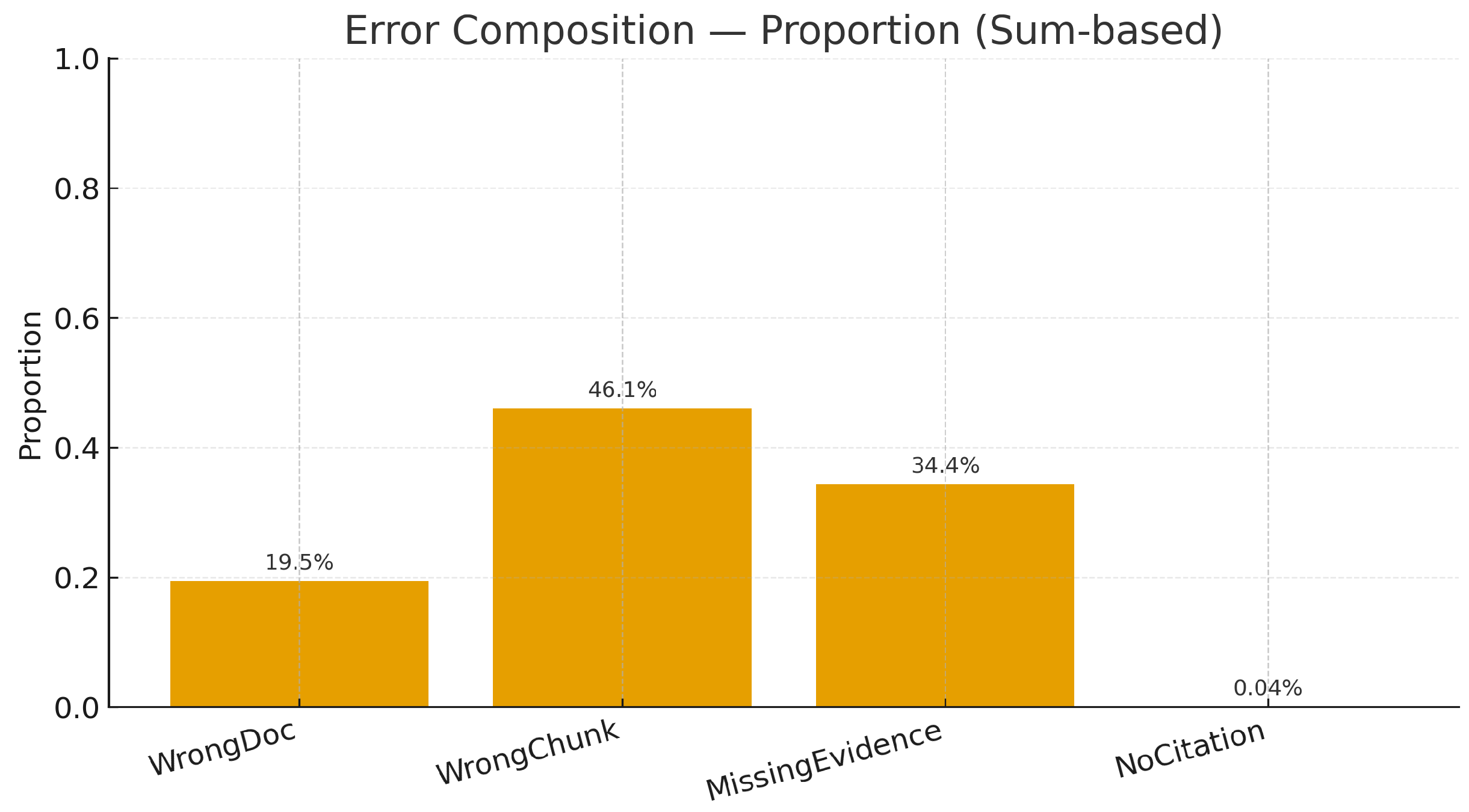

3.4. Error Analysis

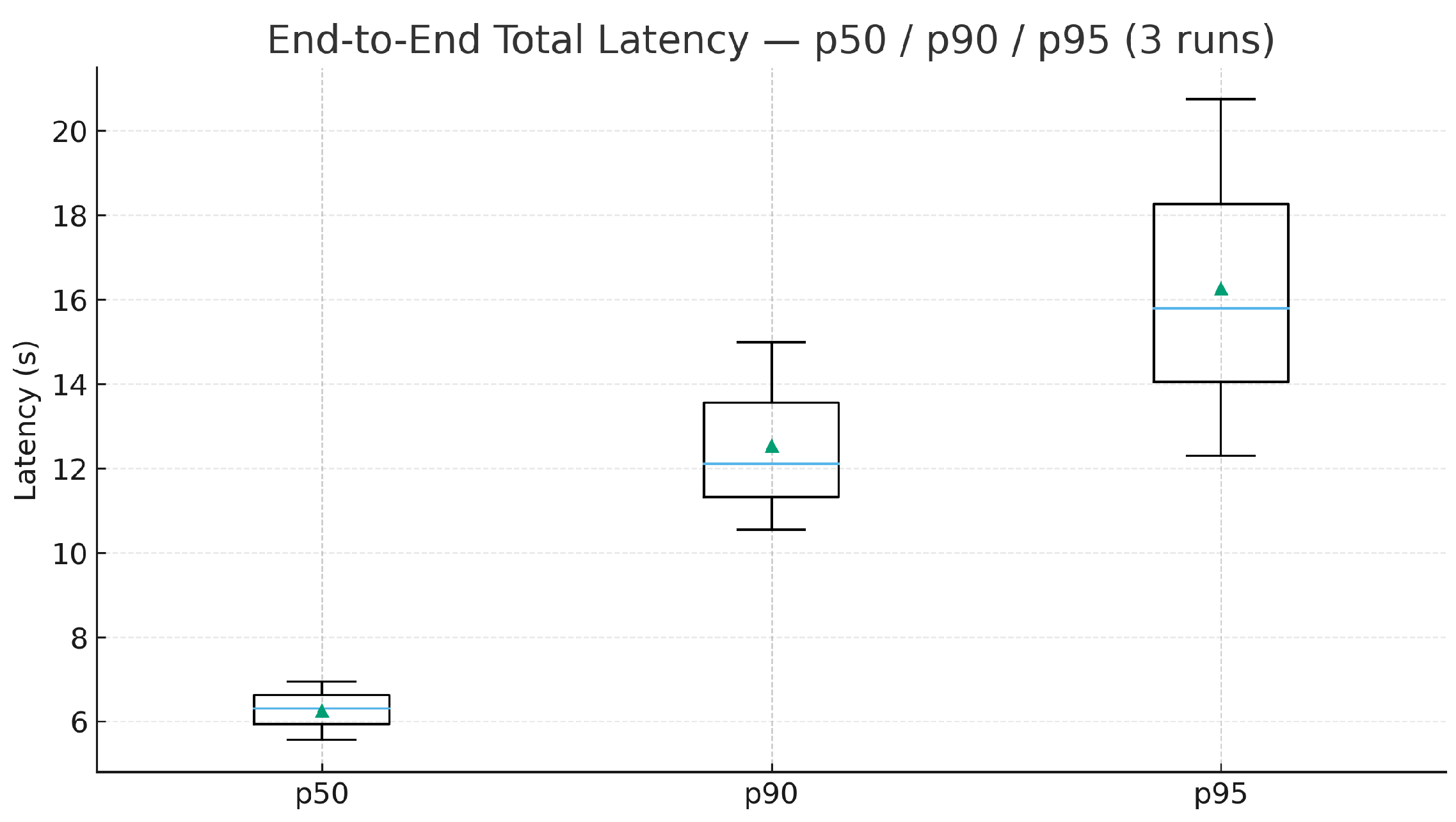

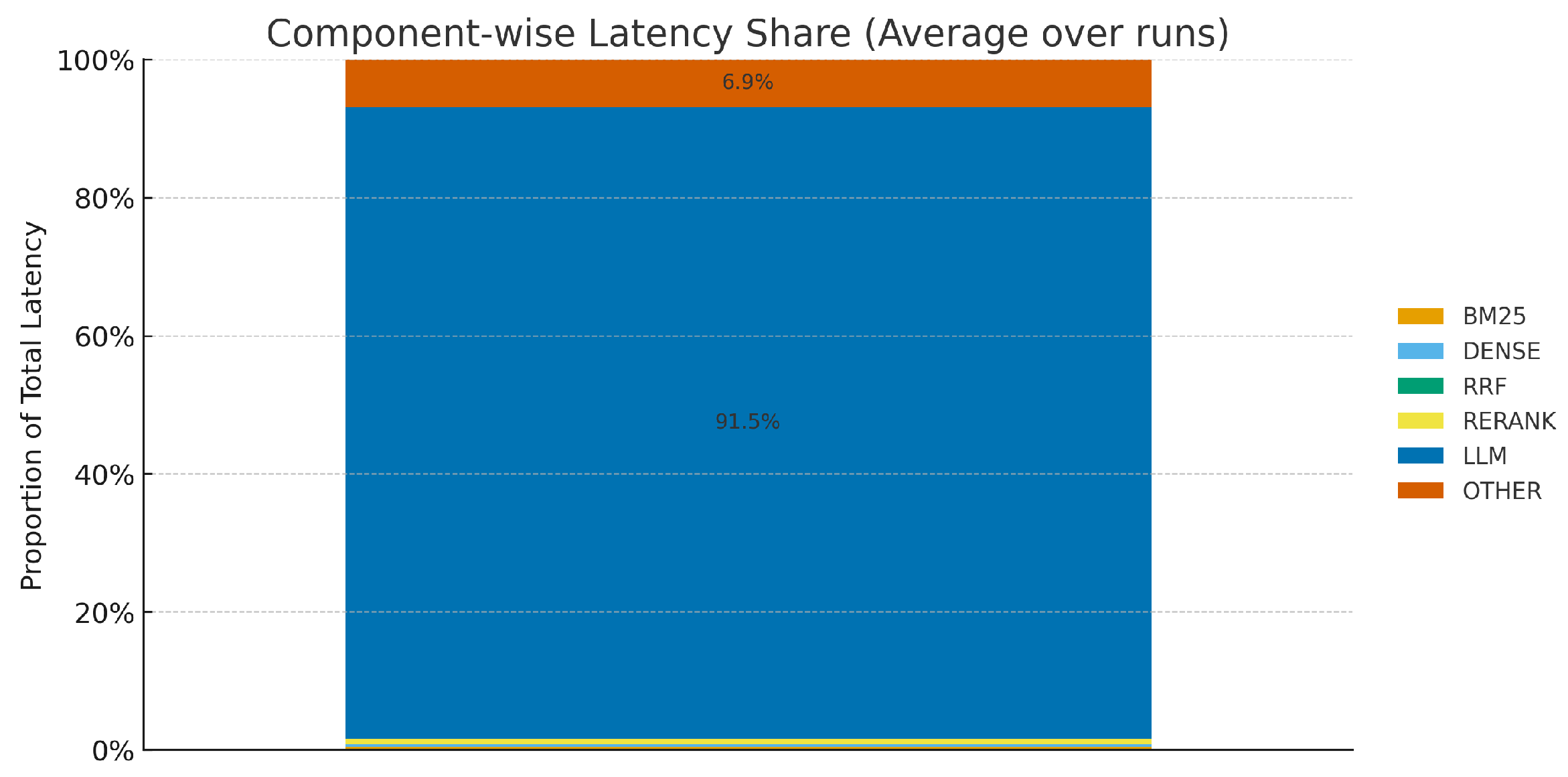

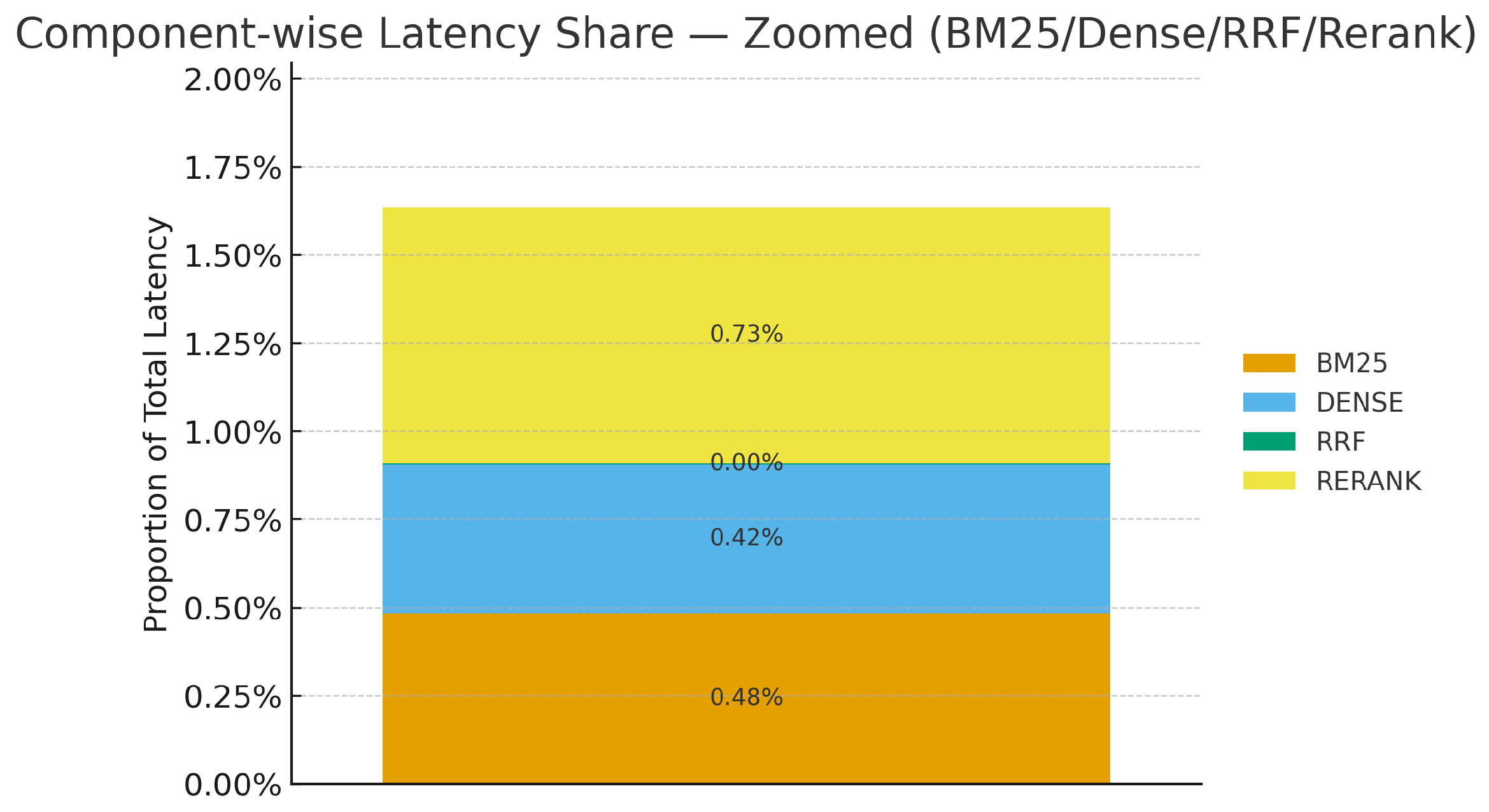

3.5. Latency and Cost Breakdown

4. Discussion

4.1. Restatement of Main Findings and Contributions

4.2. Insights from the Error Structure

4.3. Implications for Latency and Cost

4.4. Limitations of Corpus Scale and Gold Labels

4.5. On HAR/IHI Effectiveness and Improvements

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Implementation Details for Reproducibility

Appendix A.1. Note on Prompt Templates

Appendix A.2. LLM Decoding Hyperparameters

Appendix A.3. Chunking Thresholds (Semantic-Aware Splitting)

Appendix A.4. Retrieval and Reranking Defaults

Appendix A.5. Hardware and Throughput Settings

Appendix A.6. Gold-QA Synthesis Prompts (English Translations)

- You are a domain editor creating evidence-grounded gold QA in Chinese for Yunnan Arabica coffee.

- INSTRUCTIONS:

- INPUT:

- OUTPUT JSON fields:

- id, query, answer, citations, refs, topic, difficulty, type, gold_citations, gold_answer

- (G2) Gold-QA Validator (deterministic fact check)

- Return:

- { "pass": true|false, "reasons": "…" }

- Rules:

  - temperature=0.0; top_p=1.0; do not rewrite the QA.

  - Fail if any criterion is not met.

- Normalize the QA JSON:

  - Canonicalize units (Celsius, mm) and remove redundant punctuation/markdown.

  - Ensure the "answer" stays concise; drop extra wording.

  - Compare against a provided list of existing queries; if semantic similarity >= 0.9, mark as duplicate.

- Return the normalized JSON and a flag:

- { "duplicate": true|false }
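The normalization and duplicate check described in this prompt could also be approximated in code before or after the LLM call. The sketch below is only an illustration under stated assumptions: the unit rewrite rules are a small hypothetical sample, and character-bigram cosine similarity stands in for whatever semantic similarity measure the authors used. Only the 0.9 threshold comes from the prompt.

```python
import math
import re
from collections import Counter

DUPLICATE_THRESHOLD = 0.9  # threshold stated in the normalizer prompt


def canonicalize_units(text):
    """Rewrite a few unit spellings to '°C' and 'mm' (illustrative rules only)."""
    text = re.sub(r"(\d)\s*(?:℃|degrees Celsius|deg C)", r"\1 °C", text)
    text = re.sub(r"(\d)\s*(?:millimeters|millimetres)\b", r"\1 mm", text)
    return text


def bigram_vector(text):
    """Character-bigram counts: a crude stand-in for a sentence embedding."""
    s = re.sub(r"\s+", "", text.lower())
    return Counter(s[i:i + 2] for i in range(len(s) - 1))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def is_duplicate(query, existing_queries, threshold=DUPLICATE_THRESHOLD):
    """Flag the query if it is at least `threshold` similar to any existing one."""
    vec = bigram_vector(query)
    return any(cosine(vec, bigram_vector(q)) >= threshold for q in existing_queries)
```

In practice the bigram vectors would be replaced by embeddings from a sentence encoder; the thresholding logic stays the same.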

Appendix A.7. Gold-QA Decoding Hyperparameters (Overrides)

Appendix A.8. Prompt Templates (English Translations)

- Prompt templates (English translations of the Chinese originals).

- (A) System prompt

- You are an agronomy QA assistant for the "Yunnan Arabica coffee" scenario. Answers must be grounded in the provided evidence, and you must add inline evidence tags [docid#cid] immediately after key factual statements. Do not fabricate facts or cite unseen sources. If no suitable evidence is available, explain the gap and append "References: […]" at the end. Keep answers concise, professional, and traceable. When thresholds/units/conditions are involved, place [docid#cid] after the corresponding sentence.

- (B) HAR (history-aware query rewriting) prompt

- Task: Using the most recent t=2 turns, rewrite the user need into a standalone, retrievable query. Preserve entities, thresholds, units, locations, and time constraints; remove chit-chat and irrelevant content.

- Output: Only the rewritten query, with no explanations.
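The HAR template above can be filled programmatically from the dialogue state. The sketch below keeps only the most recent t turns and appends them to the instruction text. The `History:` and `Current question:` labels, the turn format, and the function name `build_har_prompt` are assumptions made for illustration; the source gives only the task and output instructions.

```python
HAR_TEMPLATE = (
    "Task: Using the most recent t={t} turns, rewrite the user need into a "
    "standalone, retrievable query. Preserve entities, thresholds, units, "
    "locations, and time constraints; remove chit-chat and irrelevant content.\n"
    "Output: Only the rewritten query, with no explanations.\n\n"
    # The two labels below are assumed; the paper does not show how history is attached.
    "History:\n{history}\n\nCurrent question: {question}"
)


def build_har_prompt(turns, question, t=2):
    """Build the rewriting prompt.

    turns: list of (user_text, assistant_text) tuples, oldest first.
    t: number of most recent turns to keep (the template uses t=2).
    """
    recent = turns[-t:] if t > 0 else []
    history = "\n".join(f"User: {u}\nAssistant: {a}" for u, a in recent)
    return HAR_TEMPLATE.format(t=t, history=history, question=question)
```

Truncating to the last t turns keeps the rewriting prompt short and bounds its cost regardless of how long the conversation runs.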

- (C) Generation prompt skeleton

- Task: Answer the user’s question and include inline evidence tags [docid#cid] after key facts.

- Evidence (Top-K corpus slices, each with [docid#cid]):

  - <evidence_1_text> [docid#cid]

  - <evidence_2_text> [docid#cid]

  - …

- Output constraints:

  - (1) Use only the provided evidence; if insufficient, explain what is missing and append "References: […]" at the end.

  - (2) When numbers/thresholds/units/conditions are cited, tag the sentence with [docid#cid].

  - (3) Language: Chinese. Style: practitioner-oriented, concise, accurate, actionable.
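The evidence block in the skeleton above, and a simple check that a generated answer cites only the evidence it was given, can be sketched as follows. This assumes stable IDs shaped like `e76faa05#1` (lowercase hex document ID, `#`, integer chunk ID); the regular expression and both function names are hypothetical helpers, not part of the published pipeline.

```python
import re

# Assumes tags look like [e76faa05#1]: hex docid, '#', integer cid.
TAG_PATTERN = re.compile(r"\[([0-9a-f]+#\d+)\]")


def format_evidence(chunks):
    """Render evidence lines as '<text> [docid#cid]'.

    chunks: list of (stable_id, text) pairs in retrieval order.
    """
    return "\n".join(f"{text} [{stable_id}]" for stable_id, text in chunks)


def check_citations(answer, chunks):
    """Return (cited, unknown).

    cited: every tag found in the answer, in order of appearance.
    unknown: cited tags that do not correspond to any supplied chunk.
    """
    allowed = {stable_id for stable_id, _ in chunks}
    cited = TAG_PATTERN.findall(answer)
    unknown = [tag for tag in cited if tag not in allowed]
    return cited, unknown
```

A non-empty `unknown` list indicates a tag the generator could not have taken from the supplied evidence, which is one way to detect the "cite unseen sources" failure the system prompt forbids.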

References

- Wikipedia Contributors. Yunnan. Wikipedia, the Free Encyclopedia. Available online: https://en.wikipedia.org/wiki/Yunnan (accessed on 16 October 2025).

- Food and Agriculture Organization of the United Nations (FAO). Coffee: Introduction. FAO Corporate Document Repository (X6939e01). Available online: https://www.fao.org/3/x6939e/x6939e01.htm (accessed on 16 October 2025).

- FAO. Arabica Coffee Manual for Lao PDR; FAO: Bangkok, Thailand, 2005; Available online: https://www.fao.org/3/ah833e/ah833e.pdf (accessed on 16 October 2025).

- FAO. Arabica Coffee Manual for Myanmar; FAO: Rome, Italy, 2015; Available online: https://openknowledge.fao.org/items/ba78b670-0947-4e73-ad10-091009c0dfc3 (accessed on 16 October 2025).

- Wang, X.; Ye, T.; Fan, L.; Liu, X.; Zhang, M.; Zhu, Y.; Gole, T.W. Extreme Cold Events Threaten Arabica Coffee in Yunnan, China. npj Nat. Hazards 2025, 2, 32. Available online: https://www.nature.com/articles/s44304-025-00092-5 (accessed on 16 October 2025).

- Liu, X.; Tan, Y.; Dong, J.; Wu, J.; Wang, X.; Sun, Z. Assessing Habitat Selection Parameters of Coffea arabica Using BWM and BCM Methods Based on GIS. Sci. Rep. 2025, 15, 8. Available online: https://www.nature.com/articles/s41598-024-84073-0 (accessed on 16 October 2025).

- International Coffee Organization (ICO). Coffee in China; International Coffee Council, 115th Session, ICC-115-7; ICO: London, UK, 2015; Available online: https://www.ico.org/documents/cy2014-15/icc-115-7e-study-china.pdf (accessed on 16 October 2025).

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-T.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP. Advances in Neural Information Processing Systems 33 (NeurIPS 2020). 2020. Available online: https://arxiv.org/abs/2005.11401 (accessed on 16 October 2025).

- Wintgens, J.N. (Ed.) Coffee: Growing, Processing, Sustainable Production, 2nd ed.; Wiley-VCH: Weinheim, Germany, 2009.

- Shuster, K.; Xu, J.; Komeili, M.; Smith, E.M.; Roller, S.; Boureau, Y.-L.; Weston, J. Retrieval Augmentation Reduces Hallucination in Conversation. Findings of the Association for Computational Linguistics: EMNLP 2021. pp. 3784–3803. 2021. Available online: https://aclanthology.org/2021.findings-emnlp.322 (accessed on 16 October 2025).

- FAO. Digital Technologies in Agriculture and Rural Areas: Status Report; FAO: Rome, Italy, 2019; Available online: https://www.fao.org/3/ca4985en/ca4985en.pdf (accessed on 16 October 2025).

- Clifford, M.N.; Willson, K.C. (Eds.) Coffee: Botany, Biochemistry and Production of Beans and Beverage; Croom Helm: London, UK, 1985.

- Liu, N.F.; Lin, K.; Hewitt, J.; Paranjape, A.; Bevilacqua, M.; Petroni, F.; Liang, P. Lost in the Middle: How Language Models Use Long Contexts. Trans. Assoc. Comput. Linguist. 2024, 12, 157–173.

- Fan, W.; Ding, Y.; Ning, L.; Wang, S.; Li, H.; Yin, D.; Chua, T.S.; Li, Q. A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models. arXiv 2024, arXiv:2405.06211. Available online: https://arxiv.org/abs/2405.06211 (accessed on 3 November 2025).

- Yu, H.; Gan, A.; Zhang, K.; Tong, S.; Liu, Q.; Liu, Z. Evaluation of Retrieval-Augmented Generation: A Survey. arXiv 2024, arXiv:2405.07437. Available online: https://arxiv.org/abs/2405.07437 (accessed on 3 November 2025).

- Asai, A.; Wu, Z.; Wang, Y.; Sil, A.; Hajishirzi, H. Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection. arXiv 2023, arXiv:2310.11511. Available online: https://arxiv.org/abs/2310.11511 (accessed on 1 November 2025).

- Vu, T.; Iyyer, M.; Wang, X.; Constant, N.; Wei, J.; Wei, J.; Tar, C.; Sung, Y.H.; Zhou, D.; Le, Q.; et al. FreshLLMs: Refreshing Large Language Models with Search Engine Augmentation. Findings of the Association for Computational Linguistics: ACL 2024. pp. 13793–13817. 2024. Available online: https://aclanthology.org/2024.findings-acl.813.pdf (accessed on 3 November 2025).

- DeepSeek-AI. DeepSeek-V3 Technical Report. 2024. Available online: https://arxiv.org/abs/2412.19437 (accessed on 16 October 2025).

- LMSYS. Overview—Chatbot Arena Leaderboard. 2025. Available online: https://lmsys.org/blog/2023-05-03-arena/ (accessed on 16 October 2025).

- DeepSeek-AI. DeepSeek-V3.1 (Model Card). 2025. Available online: https://huggingface.co/deepseek-ai/DeepSeek-V3.1 (accessed on 16 October 2025).

- LMSYS. Introducing the Hard Prompts Category in Chatbot Arena. 2024. Available online: https://lmsys.org/blog/2024-05-17-category-hard/ (accessed on 16 October 2025).

- Microsoft. Relevance Scoring in Hybrid Search Using Reciprocal Rank Fusion (RRF). Microsoft Learn Documentation, Updated 28 September 2025. Available online: https://learn.microsoft.com/en-us/azure/search/hybrid-search-ranking (accessed on 16 October 2025).

- Elastic. Reciprocal Rank Fusion—Elasticsearch Reference. Available online: https://www.elastic.co/docs/reference/elasticsearch/rest-apis/reciprocal-rank-fusion (accessed on 16 October 2025).

- Microsoft. Hybrid Search (BM25+Vector) Overview. Microsoft Learn Documentation. Available online: https://learn.microsoft.com/en-us/azure/search/hybrid-search-overview (accessed on 16 October 2025).

- Sarthi, P.; Abdullah, S.; Tuli, A.; Khanna, S.; Goldie, A.; Manning, C.D. RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval. arXiv 2024, arXiv:2401.18059. Available online: https://arxiv.org/abs/2401.18059 (accessed on 3 November 2025).

- Cormack, G.V.; Clarke, C.L.A.; Büttcher, S. Reciprocal Rank Fusion Outperforms Condorcet and Individual Rank Learning Methods. In Proceedings of the 32nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’09), Boston, MA, USA, 19–23 July 2009; ACM: New York, NY, USA, 2009; pp. 758–759.

- Bruch, S.; Gai, S.; Ingber, A. An Analysis of Fusion Functions for Hybrid Retrieval. ACM Trans. Inf. Syst. 2023, 42, 20.

- Bendersky, M.; Zhuang, H.; Ma, J.; Han, S.; Hall, K.; McDonald, R. Meeting the TREC-COVID Challenge with a 100+ Runs: Precision Medicine, Search, and Beyond. arXiv 2020, arXiv:2010.00200. Available online: https://arxiv.org/pdf/2010.00200 (accessed on 2 November 2025).

- Thakur, N.; Reimers, N.; Rücklé, A.; Srivastava, A.; Gurevych, I. BEIR: A Heterogeneous Benchmark for Zero-Shot Evaluation of Information Retrieval Models. arXiv 2021, arXiv:2104.08663. Available online: https://arxiv.org/abs/2104.08663 (accessed on 16 October 2025).

- Xiao, C.; Yang, D.; Gong, Y.; Li, H.; Zhang, M.; Wang, J.; Chen, L.; Zhao, K.; Liu, Y.; Hu, S. C-Pack: Packaged Reranking for Retrieval. arXiv 2023, arXiv:2312.07597. Available online: https://arxiv.org/abs/2312.07597 (accessed on 16 October 2025).

- Nogueira, R.; Cho, K. Passage Re-ranking with BERT. arXiv 2019, arXiv:1901.04085. Available online: https://arxiv.org/abs/1901.04085 (accessed on 16 October 2025).

- Nogueira, R.; Yang, W.; Lin, J.; Cho, K. Multi-Stage Document Ranking with BERT. arXiv 2019, arXiv:1910.14424. Available online: https://arxiv.org/abs/1910.14424 (accessed on 16 October 2025).

- Tseng, Y.-M.; Chen, W.-L.; Chen, C.-C.; Chen, H.H. Evaluating Large Language Models as Expert Annotators. arXiv 2025, arXiv:2508.07827. Available online: https://arxiv.org/abs/2508.07827 (accessed on 16 October 2025).

- Zhang, R.; Li, Y.; Ma, Y.; Zhou, M.; Zou, L. LLMaAA: Making Large Language Models as Active Annotators. Findings of the Association for Computational Linguistics: EMNLP 2023, 2023. Available online: https://aclanthology.org/2023.findings-emnlp.872/ (accessed on 16 October 2025).

- Karim, M.M.; Khan, S.; Van, D.H.; Liu, X.; Wang, C.; Qu, Q. Transforming Data Annotation with AI Agents: A Review of Trends and Best Practices. Future Internet 2025, 17, 353.

- Kazemi, A.; Natarajan Kalaivendan, S.B.; Wagner, J.; Qadeer, H.; Davis, B. Synthetic vs. Gold: The Role of LLM-Generated Labels and Data in Cyberbullying Detection. arXiv 2025, arXiv:2502.15860. Available online: https://arxiv.org/abs/2502.15860 (accessed on 16 October 2025).

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large language models encode clinical knowledge. Nature 2023, 620, 172–180.

- Khattab, O.; Zaharia, M. ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2020), Xi’an, China, 25–30 July 2020; ACM: New York, NY, USA, 2020; pp. 39–48.

- Shumailov, I.; Shumaylov, Z.; Zhao, Y.; Gal, Y.; Papernot, N.; Anderson, R. The Curse of Recursion: Training on Generated Data Makes Models Forget. arXiv 2023, arXiv:2305.17493. Available online: https://arxiv.org/abs/2305.17493 (accessed on 4 November 2025).

- Thakur, N.; Pradeep, R.; Upadhyay, S.; Campos, D.; Craswell, N.; Lin, J. Support Evaluation for the TREC 2024 RAG Track: Comparing Human versus LLM Judges. arXiv 2025, arXiv:2504.15205. Available online: https://www.researchgate.net/publication/390991280 (accessed on 4 November 2025).

- Krippendorff, K. Reliability in Content Analysis: Some Common Misconceptions and Recommendations. Hum. Commun. Res. 2004, 30, 411–433. Available online: https://academic.oup.com/hcr/article/30/3/411/4331534 (accessed on 4 November 2025).

- Dalton, J.; Xiong, C.; Callan, J. TREC CAsT 2019: The Conversational Assistance Track Overview. arXiv 2020, arXiv:2003.13624. Available online: https://arxiv.org/abs/2003.13624 (accessed on 1 November 2025).

- Qian, H.; Xie, Y.; He, C.; Lin, J.; Ma, J. Explicit Query Rewriting for Conversational Dense Retrieval. In Proceedings of the EMNLP2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; ACL: Abu Dhabi, United Arab Emirates, 2022; pp. 10124–10136. Available online: https://aclanthology.org/2022.emnlp-main.311.pdf (accessed on 1 November 2025).

- Elgohary, A.; Peskov, D.; Boyd-Graber, J. Can You Unpack That? Learning to Rewrite Questions in Context. In Proceedings of the EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; ACL: Hong Kong, China, 2019; pp. 5717–5726. Available online: https://aclanthology.org/D19-1605/ (accessed on 1 November 2025).

| Architecture | Primary Strengths | Limitations/Risks |
|---|---|---|
| Closed-book (prompt-only) LLM | Minimal integration; fast iteration | Weak traceability; slow knowledge refresh; higher hallucination risk |
| Domain fine-tuned LLM | Encodes domain priors; stable task behavior | Data/compute cost; version drift; re-training required to update knowledge |
| Long-context (context stuffing) | Direct conditioning on source text; simple pipeline | Position sensitivity; latency/cost grow with window size [13] |
| Classic RAG (retrieve–rerank–generate) | Hot updates; evidence traceability; mature tooling | Depends on chunking/coverage; generator-dominated latency [14,15] |
| On-demand/self-reflective RAG | Adaptive retrieval; better factuality with retrieval economy | Added control complexity; extra prompt budget [16] |
| Tool/function-calling pipeline | Deterministic access to DB/APIs; strong guardrails | Integration/maintenance overhead; limited unstructured reasoning |
| KG-augmented LLM | Structural consistency; query interpretability | KG construction/curation cost; coverage/freshness gaps |
| Freshness-aware/online-search RAG | Up-to-date knowledge; resilience to world drift | Source volatility; caching/compliance; variable latency [17] |

| Benchmark (Metric) | DeepSeek-V2 Base | Qwen2.5 72B | LLaMA-3.1 405B Base | DeepSeek-V3 MoE 671B |
|---|---|---|---|---|
| Architecture | MoE | Dense | Dense | MoE |
| Activated Params | 21B | 72B | 405B | 37B |
| Total Params | 236B | 72B | 405B | 671B |
| English | | | | |
| Pile-test (BPB) | 0.606 | 0.638 | 0.542 | 0.548 |
| BBH (EM, 3-shot) | 78.8 | 79.8 | 82.9 | 87.5 |
| MMLU (EM, 5-shot) | 78.4 | 85.0 | 84.4 | 87.1 |
| MMLU-Redux (EM, 5-shot) | 75.6 | 83.2 | 81.3 | 86.2 |
| MMLU-Pro (EM, 5-shot) | 51.4 | 58.3 | 52.8 | 64.4 |
| DROP (F1, 3-shot) | 80.4 | 80.6 | 86.0 | 89.0 |
| ARC-Easy (EM, 25-shot) | 97.6 | 98.4 | 98.4 | 98.9 |
| ARC-Challenge (EM, 25-shot) | 92.2 | 94.5 | 95.3 | 95.3 |
| HellaSwag (EM, 10-shot) | 87.1 | 84.8 | 89.2 | 88.9 |
| PIQA (EM, 0-shot) | 83.9 | 82.6 | 85.9 | 84.7 |
| WinoGrande (EM, 5-shot) | 86.3 | 82.3 | 85.2 | 84.9 |
| RACE-Middle (EM, 5-shot) | 73.1 | 68.1 | 74.2 | 67.1 |
| RACE-High (EM, 5-shot) | 52.6 | 50.3 | 56.8 | 51.3 |
| TriviaQA (EM, 5-shot) | 80.0 | 71.9 | 82.7 | 82.9 |
| NaturalQuestions (EM, 5-shot) | 38.6 | 33.2 | 41.5 | 40.0 |
| AGIEval (EM, 0-shot) | 57.5 | 75.8 | 60.6 | 79.6 |
| Code | | | | |
| HumanEval (Pass@1, 0-shot) | 43.3 | 53.0 | 54.9 | 65.2 |
| MBPP (Pass@1, 3-shot) | 65.0 | 72.6 | 68.4 | 75.4 |
| LiveCodeBench-Base (Pass@1, 3-shot) | 11.6 | 12.9 | 15.5 | 19.4 |
| CRUXEval-I (EM, 2-shot) | 52.5 | 59.1 | 58.5 | 67.3 |
| CRUXEval-O (EM, 2-shot) | 49.8 | 59.9 | 59.9 | 69.8 |
| Math | | | | |
| GSM8K (EM, 8-shot) | 81.6 | 88.3 | 83.5 | 89.3 |
| MATH (EM, 4-shot) | 43.4 | 54.4 | 49.0 | 61.6 |
| MGSM (EM, 8-shot) | 63.6 | 76.2 | 69.9 | 79.8 |
| CMath (EM, 3-shot) | 78.7 | 84.5 | 77.3 | 90.7 |
| Chinese | | | | |
| CLUEWSC (EM, 5-shot) | 82.0 | 82.5 | 83.0 | 82.7 |
| C-Eval (EM, 5-shot) | 81.4 | 89.2 | 72.5 | 90.1 |
| CMMLU (EM, 5-shot) | 84.0 | 89.5 | 73.7 | 88.8 |
| CMRC (EM, 1-shot) | 77.4 | 75.8 | 76.0 | 76.3 |
| C3 (EM, 0-shot) | 77.4 | 76.7 | 79.7 | 78.6 |
| CCPM (EM, 0-shot) | 93.0 | 88.5 | 78.6 | 92.0 |
| Multilingual | | | | |
| MMMLU-non-English (EM, 5-shot) | 64.0 | 74.8 | 73.8 | 79.4 |

| stable_id | Position | Tokens | Preview |
|---|---|---|---|
| e76faa05#1 | 0 | 12 | Arabica coffee originated in Ethiopia’s high-mountain forests. After entering commercial cultivation, full-sun systems were adopted to pursue high yields. With advances in agronomy and greater attention to plant health, many producing countries began to value shaded cultivation. Whether shade is needed mainly depends on latitude, elevation, and local climate. Coffee is a high-yield, high-nutrient-demand crop; shaded cultivation is lower-cost, lower-risk, and offers good returns, whereas full-sun cultivation requires strong water and fertilizer inputs; if these are insufficient, plants may over-flower and over-fruit, leading to dieback before scaffold branches are established. |
| e76faa05#2 | 1 | 14 | Under global climate change, countries such as Brazil, Indonesia, Vietnam, and India have experienced disease outbreaks and yield loss after drought followed by heavy rainfall. Traditional non-rust-resistant cultivars widely planted in producing countries (e.g., Bourbon, Typica, Caturra, Catuai) are prone to severe coffee leaf-rust epidemics, seriously affecting production. Accordingly, some countries have emphasized shaded cultivation to create a more suitable micro-environment and stabilize yields. Examples with shade include India, Ethiopia, Colombia, Costa Rica, Mexico, Kenya, and Madagascar; regions commonly without shade include Brazil, Venezuela, Hawaii (USA), Indonesia’s Bali and Sumatra, as well as Malaysia and Uganda. |
| e76faa05#3 | 2 | 9 | Because Brazil predominantly grows coffee without shade, Catimor has historically seen limited planting due to concerns about excessive yield, over-fruiting, and early senescence. Recently, Brazil has paid greater attention to shade: studies in the south indicate that, under agroforestry systems, moderate shade can improve production efficiency. In India, coffee is almost universally shaded, often using a two-tier system: short-term lower shade trees intercropped with permanent upper shade trees, creating a shaded yet growth-friendly environment. |
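The identifiers in this table pair an 8-character hexadecimal document ID with a chunk number that is one greater than the 0-based position. One way such IDs could be produced is sketched below. The hashing scheme (SHA-1 over a document key, truncated to 8 hex characters), the function name, and the sample key `shade_manual.txt` are assumptions for illustration; the source does not state how the document ID is derived.

```python
import hashlib


def make_stable_id(doc_key, position):
    """Build a 'docid#cid' identifier.

    doc_key: any string that identifies the document stably (assumed: a file name).
    position: 0-based chunk position within the document.
    docid is the first 8 hex characters of SHA-1 over doc_key (assumed scheme);
    cid is position + 1, matching the table where Position 0 pairs with '#1'.
    """
    docid = hashlib.sha1(doc_key.encode("utf-8")).hexdigest()[:8]
    return f"{docid}#{position + 1}"


# Hypothetical document key; the three chunks share one docid prefix.
ids = [make_stable_id("shade_manual.txt", p) for p in range(3)]
```

Deriving the document ID from a fixed key rather than from an index position means citations such as [docid#cid] stay valid when other documents are added to or removed from the knowledge base.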

| Setting | Precision | Recall | F1 |
|---|---|---|---|
| Our Model (Full RAG) | 0.768 | 0.918 | 0.813 |
| B1: No Reranker | 0.706 | 0.816 | 0.739 |
| B2a: Dense-only | 0.638 | 0.793 | 0.685 |
| B2b: BM25-only | 0.765 | 0.908 | 0.806 |
| B3: Embed Fallback | 0.759 | 0.887 | 0.797 |
| B4: Replace Reranker | 0.638 | 0.773 | 0.679 |
| B5: No Query Rewrite and History (No HAR and IHI) | 0.763 | 0.912 | 0.808 |
| Simple RAG Baseline | 0.534 | 0.665 | 0.573 |
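In this table the F1 column is not the harmonic mean of the Precision and Recall columns: for the full model, 2 × 0.768 × 0.918 / (0.768 + 0.918) ≈ 0.836, whereas the reported F1 is 0.813. That pattern is what one would see if each metric were computed per query and then averaged, although the averaging scheme is an assumption here. The sketch below shows set-based citation precision, recall, and F1 for single queries and their macro average; the function names and the toy data are hypothetical.

```python
def prf(retrieved, gold):
    """Set-based precision, recall, and F1 for one query's citations."""
    retrieved, gold = set(retrieved), set(gold)
    hits = len(retrieved & gold)
    p = hits / len(retrieved) if retrieved else 0.0
    r = hits / len(gold) if gold else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def macro_average(per_query):
    """Average precision, recall, and F1 separately over queries."""
    n = len(per_query)
    return tuple(sum(m[i] for m in per_query) / n for i in range(3))


# Toy (retrieved, gold) citation lists for three queries.
runs = [
    (["a#1", "a#2"], ["a#1"]),   # one extra citation: lower precision
    (["b#1"], ["b#1", "b#2"]),   # one missed citation: lower recall
    (["c#1"], ["c#1"]),          # exact match
]
macro_p, macro_r, macro_f1 = macro_average([prf(r, g) for r, g in runs])
```

On the toy data the macro F1 is lower than the harmonic mean of the macro precision and macro recall, the same direction of gap seen in the table.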

| Setting | Precision | Recall | F1 |
|---|---|---|---|
| Sliding Window (Fixed-length, Overlapped) | 0.750 | 0.847 | 0.780 |
| Our Model (Full RAG) | 0.785 | 0.893 | 0.817 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Z.; Jiang, Z.; Yang, J. A RAG-Augmented LLM for Yunnan Arabica Coffee Cultivation. Agriculture 2025, 15, 2381. https://doi.org/10.3390/agriculture15222381
