Computer Vision for Site-Specific Weed Management in Precision Agriculture: A Review

Abstract

1. Introduction

- Data acquisition using remote sensing,

- Data pre-processing and development of weed detection models,

- Generation of weed density maps,

- Weeding application via actuators, and

- Performance evaluation of the precision operation.

Among these steps, weed detection plays a pivotal role, as it informs subsequent decision-making processes and ensures the effectiveness of SSWM.

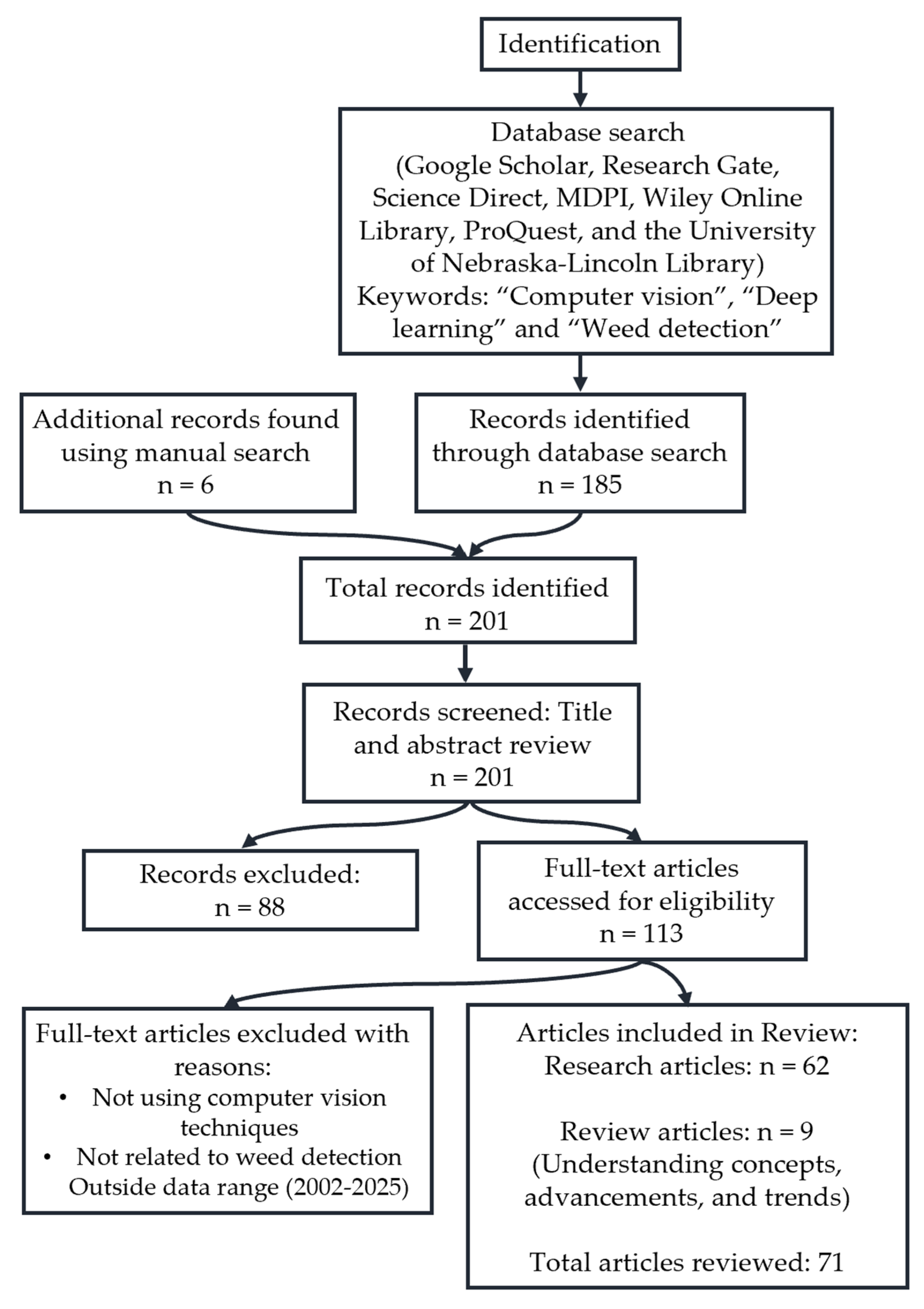

2. Methodology

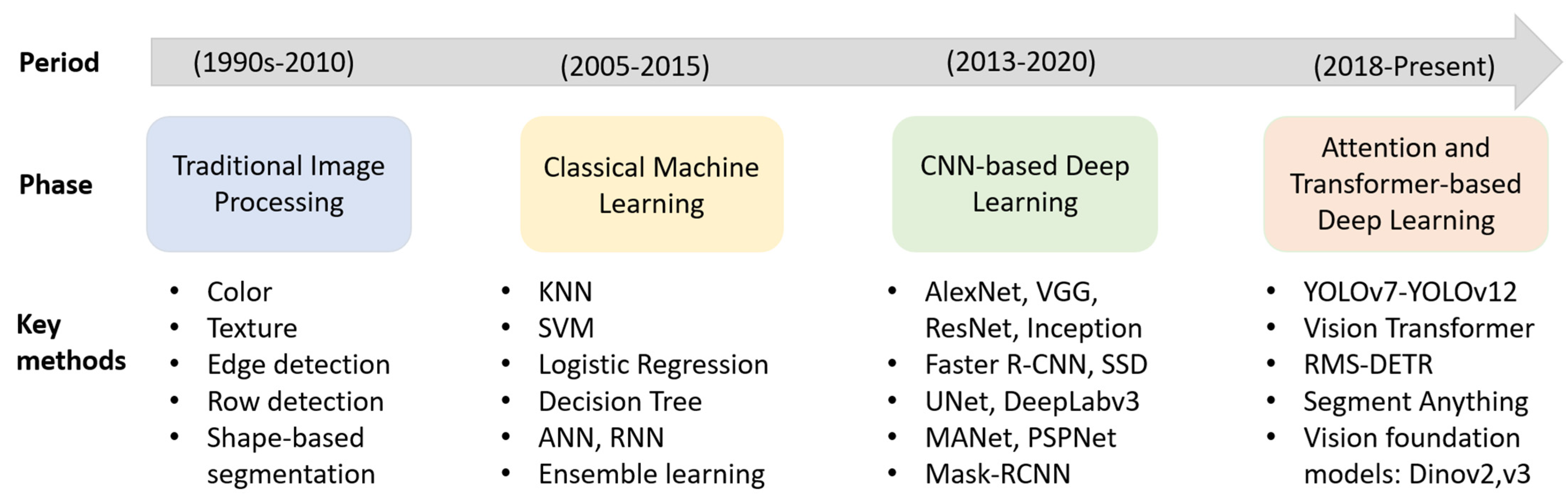

3. Computer Vision for Weed Detection

3.1. Remotely Sensed Images as a Foundation of Data Source

3.2. Traditional Computer Vision Based Approaches for Weed Detection

3.3. Machine Learning-Based Computer Vision Approaches for Weed Detection

3.3.1. Clustering Algorithms

3.3.2. K-Nearest Neighbors Algorithm

3.3.3. Regression Algorithms

3.3.4. Support Vector Machines

3.3.5. Decision Trees

3.3.6. Ensemble Learning

3.4. Deep Learning-Based Computer Vision Approaches for Weed Detection

3.4.1. Object Detection

3.4.2. Semantic Segmentation

3.4.3. Instance Segmentation

3.5. Comparative Evaluation of Classical Machine Learning and Deep Learning Approaches for Weed Detection

4. Challenges and Opportunities of Real-Time Site-Specific Weed Management Applications Using Deep Learning

4.1. Overcome Limited Training Data for SSWM Models

4.1.1. Transfer Learning and Data Augmentation

4.1.2. Publishing and Sharing Data Sets to Develop Powerful Models

4.2. Real-Time Deployment and System Integration of SSWM Models

4.2.1. High-Bandwidth Connectivity and Cloud Computing for SSWM

- (1) Latency: Cloud-based weed detection models require two data transfers to support real-time SSWM applications: the input data generated locally in the field must be sent to the cloud, and the weed maps produced after processing must be returned to the field. This depends on strong, robust, and continuous internet connectivity between the sensors and the cloud. Workloads that rely on shared cloud resources may also face additional queuing. Together, these issues can introduce network latency and delay decision-making in real-time weeding applications.

- (2) Scalability: Data generated by sensors in the field must be shared with the cloud regularly, and transferring large volumes of data in a short time can be a challenge. Uploading high-resolution imagery or streaming video to the cloud would consume excessive bandwidth and could lead to scalability issues. These issues would grow if multiple cameras shared data with the cloud concurrently.

- (3) Privacy and Data Security: This is a major concern, as data stored in the cloud may be leaked or personal data may be compromised. Sensitive information already uploaded to the cloud could also be misused by the cloud provider. Users need to be wary of the privacy implications of the information they share with the cloud.
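The latency and scalability concerns above can be made concrete with a back-of-the-envelope estimate. The following is a minimal sketch, not a model from the reviewed studies: the `UplinkScenario` fields, the function names, and every numeric value are hypothetical assumptions chosen only to illustrate how upload time grows with image size and with the number of cameras sharing one uplink.

```python
from dataclasses import dataclass


@dataclass
class UplinkScenario:
    """Hypothetical field-to-cloud link; all values are illustrative assumptions."""
    image_megabytes: float      # size of one uploaded frame (MB)
    uplink_mbps: float          # sustained uplink throughput (megabits per second)
    round_trip_ms: float        # network round-trip time (ms)
    cloud_inference_ms: float   # model inference time on the cloud side (ms)
    queue_ms: float = 0.0       # extra queuing delay on shared cloud resources (ms)


def _check_cameras(cameras: int) -> None:
    if cameras < 1:
        raise ValueError("cameras must be >= 1")


def per_frame_latency_ms(s: UplinkScenario, cameras: int = 1) -> float:
    """Estimate the delay for one frame when `cameras` share the uplink equally."""
    _check_cameras(cameras)
    megabits = s.image_megabytes * 8.0          # 1 byte = 8 bits
    share_mbps = s.uplink_mbps / cameras        # equal split of the uplink
    upload_ms = megabits / share_mbps * 1000.0
    return upload_ms + s.round_trip_ms + s.cloud_inference_ms + s.queue_ms


def max_sustained_fps(s: UplinkScenario, cameras: int = 1) -> float:
    """Upper bound on frames per second per camera imposed by bandwidth alone."""
    _check_cameras(cameras)
    return (s.uplink_mbps / cameras) / (s.image_megabytes * 8.0)


if __name__ == "__main__":
    # Assumed example: 2 MB frames over a 20 Mbps rural uplink.
    scenario = UplinkScenario(image_megabytes=2.0, uplink_mbps=20.0,
                              round_trip_ms=50.0, cloud_inference_ms=30.0)
    for n in (1, 4):
        print(n, "camera(s):",
              round(per_frame_latency_ms(scenario, n)), "ms per frame,",
              round(max_sustained_fps(scenario, n), 3), "FPS ceiling")
```

Under these assumed numbers the upload term dominates the total delay, which is consistent with the point that high-resolution imagery and concurrent cameras are what strain a cloud-based pipeline.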

4.2.2. Advancing Edge Computing Capabilities and Model Efficiency for Machinery Integration

5. Summary and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SSWM | Site-Specific Weed Management |
| PA | Precision Agriculture |
| ML | Machine Learning |
| DL | Deep Learning |
| RS | Remote Sensing |
| AI | Artificial Intelligence |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| CNN | Convolutional Neural Network |
| UAV | Unmanned Aerial Vehicle |
| UGV | Unmanned Ground Vehicle |
| KNN | K-Nearest Neighbors |
| CART | Classification and Regression Tree |
| SVM | Support Vector Machine |
| ANN | Artificial Neural Network |
| DT | Decision Tree |
| LoR | Logistic Regression |
| RF | Random Forest |
| FFT | Fast Fourier Transform |
| XGBoost | Extreme Gradient Boosting |
| LSTM | Long Short-Term Memory |
| GAN | Generative Adversarial Network |
| FCN | Fully Convolutional Network |
| FPS | Frames per Second |
| RNN | Recurrent Neural Network |
| GPU | Graphics Processing Unit |
| R-CNN | Region-based Convolutional Neural Network |
| YOLO | You Only Look Once |
| RPN | Region Proposal Network |
| IoU | Intersection over Union |
| IoT | Internet of Things |
| SDK | Software Development Kit |
| SSD | Single Shot Detector |
| ViT | Vision Transformer |
| NIR | Near-Infrared |

References

- Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A Review of Deep Learning in Multiscale Agricultural Sensing. Remote Sens. 2022, 14, 559.

- Singh, P. Semantic Segmentation Based Deep Learning Approaches for Weed Detection. Master’s Thesis, University of Nebraska-Lincoln, Lincoln, NE, USA, 16 December 2022. Available online: https://digitalcommons.unl.edu/biosysengdiss/137/ (accessed on 25 September 2025).

- Hasan, A.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067.

- Heap, I. Global perspective of herbicide-resistant weeds. Pest Manag. Sci. 2014, 70, 1306–1315.

- Zhang, N.; Wang, M.; Wang, N. Precision agriculture—A worldwide overview. Comput. Electron. Agric. 2002, 36, 113–132.

- Islam, M.D.; Liu, W.; Izere, P.; Singh, P.; Yu, C.; Riggan, B.; Zhang, K.; Jhala, A.J.; Knezevic, S.; Ge, Y.; et al. Towards real-time weed detection and segmentation with lightweight CNN models on edge devices. Comput. Electron. Agric. 2025, 237, 110600.

- Feyaerts, F.; Van Gool, L. Multi-spectral vision system for weed detection. Pattern Recognit. Lett. 2001, 22, 667–674.

- Okamoto, H.; Murata, T.; Kataoka, T.; Hata, S.I. Plant classification for weed detection using hyperspectral imaging with wavelet analysis. Weed Biol. Manag. 2007, 7, 31–37.

- Piron, A.; Leemans, V.; Lebeau, F.; Destain, M.F. Improving in-row weed detection in multispectral stereoscopic images. Comput. Electron. Agric. 2009, 69, 73–79.

- Ishak, A.J.; Mustafa, M.M.; Tahir, N.M.; Hussain, A. Weed detection system using support vector machine. In Proceedings of the 2008 International Symposium on Information Theory and Its Applications (ISITA), Auckland, New Zealand, 7–10 December 2008; pp. 1–4.

- Zhao, B.; Li, J.; Baenziger, P.S.; Belamkar, V.; Ge, Y.; Zhang, J.; Shi, Y. Automatic wheat lodging detection and mapping in aerial imagery to support high-throughput phenotyping and in-season crop management. Agronomy 2020, 10, 1762.

- Christensen, S.; Søgaard, H.T.; Kudsk, P.; Nørremark, M.; Lund, I.; Nadimi, E.S.; Jørgensen, R. Site-specific weed control technologies. Weed Res. 2009, 49, 233–241.

- Vrindts, E.; De Baerdemaeker, J.; Ramon, H. Weed detection using canopy reflection. Precis. Agric. 2002, 3, 63–80.

- Shankar, R.H.; Veeraraghavan, A.K.; Sivaraman, K.; Ramachandran, S.S. Application of UAV for pest, weeds and disease detection using open computer vision. In Proceedings of the 2018 International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 13–14 December 2018; pp. 287–292.

- Blasco, J.; Aleixos, N.; Roger, J.M.; Rabatel, G.; Moltó, E. Robotic weed control using machine vision. Biosyst. Eng. 2002, 83, 149–157.

- Raja, R.; Nguyen, T.T.; Slaughter, D.C.; Fennimore, S.A. Real-time robotic weed knife control system for tomato and lettuce based on geometric appearance of plant labels. Biosyst. Eng. 2020, 194, 152–164.

- López-Granados, F. Weed detection for site-specific weed management: Mapping and real-time approaches. Weed Res. 2011, 51, 1–11.

- Murad, N.Y.; Mahmood, T.; Forkan, A.R.M.; Morshed, A.; Jayaraman, P.P.; Siddiqui, M.S. Weed detection using deep learning: A systematic literature review. Sensors 2023, 23, 3670.

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674.

- Alchanatis, V.; Ridel, L.; Hetzroni, A.; Yaroslavsky, L. Weed detection in multi-spectral images of cotton fields. Comput. Electron. Agric. 2005, 47, 243–260.

- Sa, I.; Chen, Z.; Popović, M.; Khanna, R.; Liebisch, F.; Nieto, J.; Siegwart, R. WeedNet: Dense semantic weed classification using multispectral images and MAV for smart farming. IEEE Robot. Autom. Lett. 2017, 3, 588–595.

- Shahbazi, N.; Ashworth, M.B.; Callow, J.N.; Mian, A.; Beckie, H.J.; Speidel, S.; Nicholls, E.; Flower, K.C. Assessing the capability and potential of LiDAR for weed detection. Sensors 2021, 21, 2328.

- Forero, M.G.; Herrera-Rivera, S.; Ávila-Navarro, J.; Franco, C.A.; Rasmussen, J.; Nielsen, J. Color classification methods for perennial weed detection in cereal crops. In Proceedings of the Iberoamerican Congress on Pattern Recognition, Madrid, Spain, 14–17 November 2018; Springer: Cham, Switzerland, 2018; pp. 117–123.

- Bak, T.; Jakobsen, H. Agricultural robotic platform with four-wheel steering for weed detection. Biosyst. Eng. 2004, 87, 125–136.

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

- Lin, F.; Zhang, D.; Huang, Y.; Wang, X.; Chen, X. Detection of corn and weed species by the combination of spectral, shape and textural features. Sustainability 2017, 9, 1335.

- Rani, S.V.; Kumar, P.S.; Priyadharsini, R.; Srividya, S.J.; Harshana, S. An automated weed detection system in smart farming for developing sustainable agriculture. Int. J. Environ. Sci. Technol. 2022, 19, 9083–9094.

- Sukumar, P.; Ravi, D.S. Weed detection using image processing by clustering analysis. Int. J. Emerg. Technol. Eng. Res. 2016, 4, 14–18.

- Zhang, X.; Li, X.; Zhang, B.; Zhou, J.; Tian, G.; Xiong, Y.; Gu, B. Automated robust crop-row detection in maize fields based on position clustering algorithm and shortest path method. Comput. Electron. Agric. 2018, 154, 165–175.

- Jensen, S.M.; Akhter, M.J.; Azim, S.; Rasmussen, J. The predictive power of regression models to determine grass weed infestations in cereals based on drone imagery—Statistical and practical aspects. Agronomy 2021, 11, 2277.

- Andújar, D.; Weis, M.; Gerhards, R. An ultrasonic system for weed detection in cereal crops. Sensors 2012, 12, 17343–17357.

- Yano, I.H.; Mesa, N.F.O.; Santiago, W.E.; Aguiar, R.H.; Teruel, B. Weed identification in sugarcane plantations through images taken from remotely piloted aircraft (RPA) and KNN classifier. J. Food Nutr. Sci. 2017, 5, 211.

- Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160.

- Ahmed, F.; Al-Mamun, H.A.; Bari, A.H.; Hossain, E.; Kwan, P. Classification of crops and weeds from digital images: A support vector machine approach. Crop Prot. 2012, 40, 98–104.

- Waheed, T.; Bonnell, R.B.; Prasher, S.O.; Paulet, E. Measuring performance in precision agriculture: CART—A decision tree approach. Agric. Water Manag. 2006, 84, 173–185.

- Sheffield, K.J.; Clements, D.; Clune, D.J.; Constantine, A.; Dugdale, T.M. Detection of Aquatic Alligator Weed (Alternanthera philoxeroides) from aerial imagery using random forest classification. Remote Sens. 2022, 14, 2674.

- Dadashzadeh, M.; Abbaspour-Gilandeh, Y.; Mesri-Gundoshmian, T.; Sabzi, S.; Hernández-Hernández, J.L.; Hernández-Hernández, M.; Arribas, J.I. Weed classification for site-specific weed management using an automated stereo computer-vision machine-learning system in rice fields. Plants 2020, 9, 559.

- Sabzi, S.; Abbaspour-Gilandeh, Y. Using video processing to classify potato plant and three types of weed using hybrid of artificial neural network and particle swarm algorithm. Measurement 2018, 126, 22–36.

- Kamath, R.; Balachandra, M.; Prabhu, S. Crop and weed discrimination using Laws’ texture masks. Int. J. Agric. Biol. Eng. 2020, 13, 191–197.

- Castillejo-González, I.L.; Peña-Barragán, J.M.; Jurado-Expósito, M.; Mesas-Carrascosa, F.J.; López-Granados, F. Evaluation of pixel-and object-based approaches for mapping wild oat (Avena sterilis) weed patches in wheat fields using QuickBird imagery for site-specific management. Eur. J. Agron. 2014, 59, 57–66.

- Jin, X.; Sun, Y.; Che, J.; Bagavathiannan, M.; Yu, J.; Chen, Y. A novel deep learning-based method for detection of weeds in vegetables. Pest Manag. Sci. 2022, 78, 1861–1869.

- Chen, J.; Ran, X. Deep learning with edge computing: A review. Proc. IEEE 2019, 107, 1655–1674.

- Singh, P.; Perez, M.A.; Donald, W.N.; Bao, Y. A comparative study of deep semantic segmentation and UAV-based multispectral imaging for enhanced roadside vegetation composition assessment. Remote Sens. 2025, 17, 1991.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards weeds identification assistance through transfer learning. Comput. Electron. Agric. 2020, 171, 105306.

- Guo, Z.; Cai, D.; Zhou, Y.; Xu, T.; Yu, F. Identifying rice field weeds from unmanned aerial vehicle remote sensing imagery using deep learning. Plant Methods 2024, 20, 105.

- Asad, M.H.; Bais, A. Weed detection in canola fields using maximum likelihood classification and deep convolutional neural network. Inf. Process. Agric. 2020, 7, 535–545.

- Boyina, L.; Sandhya, G.; Vasavi, S.; Koneru, L.; Koushik, V. Weed Detection in Broad Leaves using Invariant U-Net Model. In Proceedings of the 2021 International Conference on Communication, Control and Information Sciences (ICCISc), Chennai, India, 16–18 June 2021; pp. 1–4.

- Wang, A.; Xu, Y.; Wei, X.; Cui, B. Semantic segmentation of crop and weed using an encoder-decoder network and image enhancement method under uncontrolled outdoor illumination. IEEE Access 2020, 8, 81724–81734.

- Sun, C.; Zhang, M.; Zhou, M.; Zhou, X. An improved transformer network with multi-scale convolution for weed identification in sugarcane field. IEEE Access 2024, 12, 31168–31181.

- Mini, G.A.; Sales, D.O.; Luppe, M. Weed segmentation in sugarcane crops using Mask R-CNN through aerial images. In Proceedings of the 2020 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 16–18 December 2020; pp. 485–491.

- Jin, S.; Dai, H.; Peng, J.; He, Y.; Zhu, M.; Yu, W.; Li, Q. An improved mask R-CNN method for weed segmentation. In Proceedings of the 2022 IEEE 17th Conference on Industrial Electronics and Applications (ICIEA), Chengdu, China, 1–4 December 2022; pp. 1430–1435.

- Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of object detection and patch-based classification deep learning models on mid-to-late-season weed detection in UAV imagery. Remote Sens. 2020, 12, 2136.

- Singh, P.; Bao, Y.; Ru, S. Deep learning approaches for yield prediction and maturity assessment in Southern Highbush blueberry cultivation. In Proceedings of the ASA, CSSA, SSSA International Annual Meeting, San Antonio, TX, USA, 10–13 November 2024; Available online: https://scisoc.confex.com/scisoc/2024am/meetingapp.cgi/Paper/161550 (accessed on 28 October 2025).

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting encoder representations for efficient semantic segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Singh, P.; Bao, Y.; Perez, M.A.; Donald, W.N. Image-based assessment of vegetation cover and composition using U-Net-based semantic segmentation. In Proceedings of the 2023 ASABE Annual International Meeting, Omaha, NE, USA, 9–12 July 2023; p. 1. Available online: https://elibrary.asabe.org/abstract.asp?aid=54094 (accessed on 28 October 2025).

- Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer neural network for weed and crop classification of high-resolution UAV images. Remote Sens. 2022, 14, 592.

- Giselsson, T.M.; Jørgensen, R.N.; Jensen, P.K.; Dyrmann, M.; Midtiby, H.S. A public image database for benchmark of plant seedling classification algorithms. arXiv 2017, arXiv:1711.05458.

- Chebrolu, N.; Lottes, P.; Schaefer, A.; Winterhalter, W.; Burgard, W.; Stachniss, C. Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields. Int. J. Robot. Res. 2017, 36, 1045–1052.

- Lameski, P.; Zdravevski, E.; Trajkovik, V.; Kulakov, A. Cloud-based architecture for automated weed control. In Proceedings of the IEEE EUROCON 2017—17th International Conference on Smart Technologies, Ohrid, North Macedonia, 6–8 July 2017; pp. 757–762.

- Haug, S.; Ostermann, J. A crop/weed field image dataset for the evaluation of computer vision based precision agriculture tasks. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 105–116.

- Leminen Madsen, S.; Mathiassen, S.K.; Dyrmann, M.; Laursen, M.S.; Paz, L.C.; Jørgensen, R.N. Open plant phenotype database of common weeds in Denmark. Remote Sens. 2020, 12, 1246.

- Teimouri, N.; Dyrmann, M.; Nielsen, P.R.; Mathiassen, S.K.; Somerville, G.J.; Jørgensen, R.N. Weed growth stage estimator using deep convolutional neural networks. Sensors 2018, 18, 1580.

- Mell, P.; Grance, T. Effectively and securely using the cloud computing paradigm. NIST Inf. Technol. Lab. 2009, 2, 304–311. Available online: https://zxr.io/nsac/ccsw09/slides/mell.pdf (accessed on 25 September 2025).

- Ampatzidis, Y.; Partel, V.; Costa, L. Agroview: Cloud-based application to process, analyze and visualize UAV-collected data for precision agriculture applications utilizing artificial intelligence. Comput. Electron. Agric. 2020, 174, 105457.

- Alrowais, F.; Asiri, M.M.; Alabdan, R.; Marzouk, R.; Hilal, A.M.; Gupta, D. Hybrid leader based optimization with deep learning driven weed detection on internet of things enabled smart agriculture environment. Comput. Electr. Eng. 2022, 104, 108411.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Singh, P.; Niknejad, N.; Spiers, J.D.; Bao, Y.; Ru, S. Development of a smartphone application for rapid blueberry detection and yield estimation. Smart Agric. Technol. 2025, 1, 101361.

- Assunção, E.; Gaspar, P.D.; Mesquita, R.; Simões, M.P.; Alibabaei, K.; Veiros, A.; Proença, H. Real-Time Weed Control Application Using a Jetson Nano Edge Device and a Spray Mechanism. Remote Sens. 2022, 14, 4217.

| Data Collection | ML Model | Results | Reference |
|---|---|---|---|
| Features extracted from Gabor and FFT filters | SVM | Classification of narrow and broad-leaved weeds, Overall accuracy: 100% | [10] |
| Hyper-spectral images | DTs developed with boosting | Global accuracy scores of 95.0% were achieved using spectral and shape features | [26] |
| Bi-spectral images | Unsupervised Clustering | Overall weed detection accuracy of 75.0% | [28] |
| RGB images | Linear Regression | Average balanced accuracy ranged between 75.0–83.0% across 6 different fields | [30] |
| RGB images | Multiple Regression | Classification accuracy of 92.8% between infested and non-infested regions | [31] |
| Pattern recognition features | K-Nearest Neighbors (KNN) classifier | Overall accuracy of 83.1% with a kappa coefficient of 0.775 | [32] |
| Three types of shape features used for training | SVM and ANN | Overall classification accuracy of crops and weeds, ANN: 92.9% and SVM: 92.5% | [33] |
| Optimal features extracted from RGB images | SVM | Overall classification accuracy score of 97.0% | [34] |
| Hyper-spectral images | Classification and Regression Tree (CART) and DT | Classification accuracy of 96.0% for early growth stages of weeds in corn crops | [35] |
| RGB images | Random Forest (RF) | Overall pixel-based classification of 98.2% | [36] |
| RGB images | SVM, Extreme Gradient Boosting (XGBoost), Linear Regression | SVM classifier obtained an F1-score of 99.3% | [37] |
| RGB images | ANN | Overall classification accuracy score of 98.1% | [38] |
| Grayscale and RGB images | RF | Overall classification accuracy of 94.0% | [39] |

| Dataset Collection | DL Model | Results | Reference |
|---|---|---|---|
| RGB images captured at an altitude of 0.6 m | Object detection models: Faster R-CNN, Yolo-v3, and CenterNet | Yolo-v3 achieved the highest F1-score of 0.971 and computational efficiency | [41] |
| RGB imagery from UAV | Semantic Segmentation models: UNet++, MAnet, DeepLab V3+, and PSPNet | Best-performing model achieved mAP of 90.0% on MAnet with mit_b4 backbone | [43] |
| 400 RGB images from UAV | 5 DL models: MobileNetV2, ResNet50 and 3 custom CNNs | 5-layer CNN achieved a detection accuracy of 95.0% | [45] |
| RGB images under natural light conditions | DenseNet model combined with SVM | The proposed model achieved an F1-score of 99.3% | [47] |
| Rice field weed dataset | High-level semantic feature extraction using Transformers | The developed RMS-DETR model achieved an accuracy of 79.2% | [48] |
| RGB imagery from UAV | Semantic Segmentation models: UNet and SegNet | SegNet model performed best with an Intersection over Union (IoU) score of 0.821 | [49] |
| RGB imagery from UAV | UNet model with InceptionV3 as feature extractor | Accuracy of weed detection up to 90.0% | [50] |
| RGB imagery and NIR information | Encoder-decoder based Deep CNN | Mean IoU (mIoU) score of 88.9% for pixel-wise segmentation | [51] |
| RGB imagery from a Nikon Z5 digital camera | Multi-scale feature extraction Residual attention transformer | Best-performing model achieved 97.0% accuracy and 94.1% mIoU | [52] |
| RGB imagery from UAV | Mask R-CNN with ResNet 50 and ResNet 101 backbone | Best-performing model achieved mAP50 of 65.5% using ResNet101 backbone | [53] |
| RGB imagery from UAV | Mask R-CNN: Convolutional Block Attention Module | The improved Mask R-CNN model achieved mAP score of 0.919 | [54] |
| RGB imagery from UAV | Object detection: Faster R-CNN and SSD | Best-performing Faster R-CNN achieved an IoU score of 0.850 on the test dataset | [55] |
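The results above are reported with different metrics (accuracy, F1-score, IoU, mIoU, mAP), which makes direct comparison across studies difficult. As a reminder of what two of these measure, here is a minimal sketch of pixel-wise IoU and F1-score for a binary weed mask. It assumes masks are given as nested lists of 0/1 values; the function names and the toy masks are hypothetical and are not taken from any of the cited studies.

```python
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]  # rows of 0/1 values; 1 marks a weed pixel


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Return (true positives, false positives, false negatives) over all pixels."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1      # predicted weed, actually background
            elif t:
                fn += 1      # missed weed pixel
    return tp, fp, fn


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over Union: TP / (TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, truth)
    union = tp + fp + fn
    return tp / union if union else 1.0  # two empty masks agree perfectly


def f1_score(pred: Mask, truth: Mask) -> float:
    """F1-score (Dice coefficient for binary masks): 2TP / (2TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


if __name__ == "__main__":
    # Toy 2x3 masks, made up for illustration only.
    predicted = [[1, 1, 0],
                 [0, 1, 0]]
    reference = [[1, 0, 0],
                 [0, 1, 1]]
    print("IoU:", iou(predicted, reference))
    print("F1 :", round(f1_score(predicted, reference), 3))
```

For the same prediction, F1 is never lower than IoU, so an F1-score and an IoU value from two different studies should not be read as directly comparable.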

| Dataset Name | Dataset Format | Annotation Type | Total No. of Images | URL |
|---|---|---|---|---|
| WeedNet [21] | Multispectral | Images per class category | 465 | https://github.com/inkyusa/weedNet (accessed on 28 October 2025) |
| Early crop weed [47] | RGB | Images per class category | 508 | https://github.com/AUAgroup/early-crop-weed (accessed on 28 October 2025) |
| Plant Seedling [60] | RGB | Images per class category | 407 | https://www.kaggle.com/competitions/plant-seedlings-classification/ (accessed on 28 October 2025) |
| Sugar Beets [61] | Available in multiple formats | Images per class category | >10,000 | https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/ (accessed on 28 October 2025) |
| Carrot-Weed [62] | RGB | Pixel level | 39 | https://github.com/lameski/rgbweeddetection (accessed on 28 October 2025) |
| CWFI dataset [63] | Multispectral | Pixel level | 60 | https://github.com/cwfid/dataset (accessed on 28 October 2025) |
| Plant Phenotype database [64] | RGB | Bounding box | 7590 | https://gitlab.au.dk/AUENG-Vision/OPPD/-/tree/master/ (accessed on 28 October 2025) |
| Leaf counting [65] | RGB | Images per class category | 9372 | https://www.kaggle.com/code/girgismicheal/plant-s-leaf-counting-using-vgg16 (accessed on 28 October 2025) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Singh, P.; Zhao, B.; Shi, Y. Computer Vision for Site-Specific Weed Management in Precision Agriculture: A Review. Agriculture 2025, 15, 2296. https://doi.org/10.3390/agriculture15212296
