Abstract

Ensuring food safety in fresh-cut vegetables is essential due to the frequent presence of foreign material (FM) that threatens consumer health and product quality. This study presents a real-time FM detection system developed using the YOLO object detection framework to accurately identify diverse FM types in cabbage and green onions. A custom dataset of 14 FM categories, covering various shapes, sizes, and colors, was used to train six YOLO variants. Among them, YOLOv7x demonstrated the highest overall accuracy, effectively detecting challenging objects such as transparent plastic, small stones, and insects. The system, integrated with a conveyor-based inspection setup and a Python graphical interface, maintained stable and high detection accuracy, confirming its robustness for real-time inspection. These results validate the developed system as an alternative intelligent quality-control layer for continuous, automated inspection in fresh-cut vegetable processing lines, and establish a solid foundation for future robotic-based removal systems aimed at fully automated food safety assurance.

1. Introduction

Fresh-cut fruits and vegetables, also known as minimally processed products, undergo sanitation, physical modification, packaging, and refrigeration to ensure their convenience for immediate consumption or cooking [1]. In addition to their ease of use and extended shelf life, these products retain essential nutrients such as vitamins, minerals, and dietary fiber, which play a vital role in promoting overall health. Regular consumption of fresh-cut produce has been associated with long-term health benefits, including a lower risk of chronic diseases such as cardiovascular disorders and certain types of cancer [2]. The global market for fresh-cut fruits and vegetables has expanded significantly in recent years, driven by the growing consumer preference for convenient, healthy, and ready-to-eat food products. This trend reflects a broader societal shift toward healthier lifestyles and increasing demand for time-saving meal solutions, particularly in urban areas [3]. In 2025, the global fresh-cut vegetable market is projected to reach approximately USD 50 billion, with an estimated Compound Annual Growth Rate (CAGR) of around 7% during the 2025–2033 period [4]. In the Republic of Korea, the fresh vegetables market is expected to generate revenues of USD 15.01 billion in 2025, accompanied by a projected CAGR of 5.45% between 2025 and 2030. On a per capita basis, the market is anticipated to achieve revenues of approximately USD 290.42 per person in 2025. In terms of consumption volume, it is forecasted to reach 4.61 billion kilograms by 2030, with a projected volume growth of 3.8% in 2026. The average per capita consumption is estimated at 76.7 kg in 2025, underscoring the strong domestic demand and dietary dependence on fresh vegetable products in the Republic of Korea [5].

Despite the rapid expansion of the fresh-cut produce market, contamination by foreign material (FM) remains a persistent issue and a major challenge for food safety management within the vegetable processing industry. FM is generally defined as unintended non-food items or substances introduced into food products, posing potential health risks to consumers [6]. These contaminants—ranging from metal fragments and plastic particles to stones and other extraneous materials—constitute one of the leading causes of consumer complaints reported by food manufacturers, distributors, and regulatory authorities [7]. In October 2025, Ben’s Original™ voluntarily recalled selected ready-to-heat rice products in the United States due to the possible presence of small stones originating from the rice farm, posing potential injury risks to consumers [8]. Furthermore, according to Société Générale de Surveillance (SGS) Digicomply (2024), incidents of FM contamination—such as plastic, metal, and wood fragments—have tripled globally over the past five years, with more than 700 cases reported in 2024 [9]. Beyond threatening consumer health, FM contamination can also result in substantial financial losses, large-scale product recalls, and long-term reputational damage to manufacturers [10]. In the fresh-cut vegetable production chain, FM detection is a critical control point within quality assurance, accounting for only 8–12% of total processing time yet up to 25% of inspection-related capital costs. Despite its limited duration, this stage directly affects product safety, regulatory compliance, and the economic sustainability of operations [11]. As a safety-critical and labor-intensive process, processors increasingly adopt automated optical and X-ray inspection systems—costing approximately USD 50,000–200,000 per unit—to reduce manual inspection time and ensure consistent food safety performance [12].

Fresh-cut vegetables are among the categories most vulnerable to FM contamination. A recent report on food-related incidents revealed that fruits and vegetables accounted for approximately 23.6% of all contamination events [13]. In Europe, an average of 500 product recalls occur each year, with FM contamination accounting for 20% of these recalls. Fruits and vegetables make up 14% of all food products affected by recalls [14]. These FMs can include hard and sharp objects such as plastic pieces, glass, metal, stone, wood, and insect parts, which may unintentionally end up in fresh-cut vegetables during processing [15]. Throughout 2021–2023, the Rapid Alert System for Food and Feed (RASFF) monitored several recall notifications caused by FM contamination in fruit and vegetable products, as summarized in Table 1. FM contamination not only decreases the value of food and harms human health but also damages the credibility of the company. All reasonable precautions must be taken to prevent physical contaminants from accidentally entering the food at any stage of food processing [10,16]. Therefore, FM detection is the most critical step in the fresh-cut vegetable processing line to ensure the safety of both consumers and suppliers [17].

Table 1.

Notifications of recalls due to FM contamination in fruit and vegetable products.

A wide range of technologies has been developed and evaluated for the detection of FM in food products, including metal detection systems [18], X-ray systems [19], microwave imaging [20], near-infrared imaging [21], UV and fluorescence imaging [22], nuclear magnetic resonance [23], and ultrasonic imaging [24]. Although each of these methods exhibits distinct advantages and detection capabilities, their overall performance is often limited by the physical and chemical characteristics of the target materials. Factors such as temperature sensitivity, variations in moisture content, irregular object shapes, internal cavities, pores, and entrapped air bubbles can significantly affect detection accuracy and reliability [25]. Readers are encouraged to consult the comprehensive reviews presented in [7,25,26] for an in-depth comparative assessment of these detection approaches, including detailed analyses of their cost efficiency, processing speed, detection precision, and suitability for different categories of FM.

Among the existing detection technologies, only metal detectors and X-ray inspection systems have been commercially adopted in production lines, functioning as the primary quality-control tools for identifying FMs before food products leave the processing facility [27]. Metal detectors are known for their rapid response and high efficiency in detecting metallic objects; however, they fail to identify non-metallic materials such as plastics, glass, wood, or paper [7]. In contrast, X-ray inspection systems offer broader detection capabilities, capable of identifying both metallic and certain non-metallic FM. Nevertheless, their effectiveness decreases considerably when dealing with low-density materials such as soft plastics, which exhibit minimal radiographic contrast, achieving only 61.8% detection accuracy [23,28]. Moreover, these systems are typically positioned as the final inspection layer before product packaging and shipment, serving as the last line of defense against FM contamination [7,27].

To overcome the limitations of the aforementioned techniques for detecting FM in food products, a number of researchers have developed online inspection systems that utilize various sensors and imaging technologies. Ref. [29] introduced an electrical resistance sensor (ERS) to distinguish between food and contaminants based on resistance variations, supported by both COMSOL multiphysics software simulations and hardware experiments. The system, equipped with a Bluetooth-based graphical user interface (GUI), offered a low-cost solution for real-time monitoring, though its application remained limited to specific material types and controlled environments due to sensitivity to noise and the need for improved hardware integration. Similarly, Ref. [15] developed a liquid crystal tunable filter (LCTF)-based multispectral fluorescence imaging system that integrated fluorescence and color imaging with optimized acquisition algorithms, achieving over 95% detection accuracy for FMs in fresh-cut vegetables; however, its performance was limited when dealing with transparent or non-fluorescent materials, and maintaining high-speed processing in industrial settings remained challenging. Ref. [30] employed visible near-infrared (VNIR) hyperspectral imaging (400–1000 nm) combined with analysis of variance (ANOVA)-based waveband selection to detect slugs and worms on both sides of fresh-cut lettuce, attaining accuracies of 97.5% and 99.5%, respectively, although the system was constrained to specific biological contaminants and was sensitive to environmental variability and data complexity. In another approach, Ref. [31] utilized infrared thermography (IRT) with image processing to detect hidden contaminants in biscuits, offering a cost-effective solution with a low false detection rate by leveraging the natural cooling process post-baking; nevertheless, its accuracy depended on precise template alignment and emissivity normalization, and was challenged by structural variations in biscuits and the need for frequent template updates.

Recent advancements in machine vision systems integrated with deep learning algorithms—particularly convolutional neural networks (CNNs)—have significantly enhanced object detection performance across various domains. Among these, the YOLO (You Only Look Once) family has garnered substantial attention due to its real-time detection capability and high accuracy. Its architecture is particularly well-suited for detecting small objects, effectively addressing challenges such as low visibility, occlusion, and limited feature representation that often contribute to high false-negative rates. Numerous studies have reported the superior performance of YOLO-based models in food and agricultural applications. For instance, YOLOv5 demonstrated excellent accuracy in detecting mold on food surfaces, with a precision of 98.10%, recall of 100%, and average precision (AP) of 99.60% [32]. It was also successfully adapted for detecting FMs in walnut kernels, resulting in a 1.1% increase in precision, a 38% reduction in memory usage, and a 52% enhancement in CPU processing speed [33]. An improved YOLOv7 model integrated with attention mechanisms achieved 93.1% precision, 91.9% recall, and 96.1% mAP@0.5 for kiwifruit detection in natural orchards, while maintaining a compact size (74.8 MB) and supporting real-time deployment in automated harvesting systems [34]. Similarly, a YOLOv7-based wheat ear counting system combined with DeepSORT and cross-line partitioning achieved 93.8% precision and 94.9% mAP@0.5 under field conditions, with notable improvements in model efficiency and object tracking performance [35]. 
Furthermore, advancements in YOLOv8 have shown promising results; an enhanced YOLOv8 model achieved 98.35% precision in detecting small FMs in Pu-erh tea, outperforming YOLOv8, Faster R-CNN, CornerNet, and SSD [36], while the YOLOv8-MeY variant achieved a 92.25% recognition rate in detecting foreign objects on sugar bag surfaces across 400 manual tests, surpassing both Faster R-CNN and YOLOv8n [37]. Collectively, these findings underscore the growing relevance and effectiveness of YOLO-based frameworks for real-time FM inspection in complex and high-speed industrial processing environments.

Building on this advancement, our previous study demonstrated the feasibility of applying YOLOv5 for FM detection in fresh-cut vegetables, successfully identifying small objects such as plastic fragments and insects in cabbage and green onion with detection accuracies exceeding 98% [38]. However, the system showed limitations in real-time industrial settings: reliability declined at higher conveyor speeds and under FM–produce overlap, and manual coupling between the detector and conveyor control introduced synchronization delays that impaired real-time performance. To address these challenges, we developed a fully integrated FM Detection System that synchronizes image acquisition, object detection, and conveyor control within a Python-based interface, enabling high-speed deployment suitable for production lines. The study sets the following measurable objectives: (i) train and fairly compare six YOLO variants (YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv7x, YOLOv8s, YOLOv8x) on a curated 14-category FM dataset; (ii) attain high mAP at standard IoU thresholds; (iii) demonstrate robust generalization on an unseen test scenario, achieving overall detection accuracy in the upper-percentile range for both cabbage and green onion; and (iv) verify real-time deployability at the operational belt speed and characterize performance at elevated speeds.

2. Materials and Methods

2.1. Sample Preparation

In this study, two types of fresh-cut vegetables, cabbage (Brassica oleracea) and green onion (Allium fistulosum), were purchased from a local market in Daejeon, Republic of Korea. These vegetables were selected due to their distinct morphological and chromatic diversity, which presents a considerable challenge for FM detection. The cabbage samples exhibited a color spectrum ranging from light green to white, whereas the green onion samples showed substantial color variation, extending from dark green to light green and from yellowish-white to white. Prior to cutting, all samples were thoroughly washed, and the roots of the green onions were removed to ensure uniformity. Two sets of fresh-cut vegetable samples, each weighing approximately 3 kg, were prepared, packaged in airtight plastic containers, and stored under controlled refrigeration conditions at 3 ± 1 °C and 79% relative humidity (RH) until further use.

In addition, various types of FMs differing in shape, size, and color commonly found in fresh-cut vegetables, such as plastics [39], insects [40], stones [41], and wood pieces [42], were prepared to simulate realistic contamination scenarios. Additional FM categories that may potentially occur in fresh-cut vegetable processing were also included to enhance the model’s capacity to recognize and generalize across a wider range of FM types. Two distinct FM groups, designated as FM-1 and FM-2, were utilized in this study. FM-1 comprised 14 categories of FMs and was employed to construct the primary dataset, whereas FM-2 contained a separate collection of FM that differed in geometry, size, and color characteristics from those in FM-1. The fourteen FM categories typically found in fresh-cut vegetables are illustrated in Figure 1 and their physical dimensions are listed in Table 2. All experimental procedures and data collection activities were carried out between June 2023 and February 2024.

Figure 1.

Photograph representative of 14 categories of FMs.

Table 2.

The dimensions of FM used in this study.

2.2. Data Collection and Dataset Production

In this study, fresh-cut vegetable samples were evenly spread across the surface of a conveyor belt to ensure uniform scanning conditions. Several FMs from each category in the FM-1 group were randomly selected and manually placed onto the vegetables to simulate contamination events. Contact between the FMs and vegetable samples was established by placing the FMs fully exposed to the vegetable surface, partially covered by overlapping vegetable tissues, or positioned between overlapping leaf layers to emulate realistic contamination conditions. Each scanning session contained approximately 45–50 heterogeneous FMs distributed across the vegetable surface. After each scan, all FMs were carefully separated and manually removed from the fresh-cut vegetables before initiating the next scanning batch using the same procedure. To ensure the consistency and reliability of image acquisition, all environmental parameters—such as illumination intensity, shooting angle, and camera-to-object distance—were maintained under constant conditions during dataset construction. The image acquisition process was conducted at a controlled ambient temperature of 25 ± 1 °C and relative humidity of approximately 75 ± 1%. The detailed setup and control of these parameters are described in Section 2.6.

A total of 3471 images with a resolution of 280 × 1375 pixels were acquired and stored in the Portable Network Graphics (.png) format. To ensure precise identification of objects of interest, all images were manually annotated using the online platform Makesense.ai (http://www.makesense.ai, accessed on 25 July 2023). Each FM in the dataset was individually labeled, with every annotated object assigned the same class label (“Fm”) and the corresponding label information saved in text (.txt) format. As the study employed a single-class labeling scheme, every annotation entry carried the class index “0,” following the zero-based indexing convention commonly used in object detection frameworks. No image augmentation was applied, in order to preserve the original photometric and geometric characteristics of the samples and to avoid synthetic artifacts that could obscure small or low-contrast FMs. All image annotations were manually performed by the first author to maintain consistency and accuracy. A standardized annotation protocol was implemented throughout the dataset creation process, including predefined class naming rules, bounding-box size constraints, and region-of-interest (ROI) definitions for the 14 FM categories. To validate annotation quality, 10% of the labeled images were randomly re-inspected after the initial labeling phase to verify class correctness and bounding-box alignment, confirming consistent accuracy across all annotations.

The dataset was subsequently divided into training and validation subsets at an approximately 8:2 ratio. The training subset comprised 1171 images of cabbage and 1200 of green onion (2371 in total), while the validation subset included 300 images of each (600 in total), giving 2971 annotated images for model learning and optimization. An additional testing dataset containing 500 images (252 from cabbage and 248 from green onion) was prepared exclusively for model inference. Unlike the training and validation sets, the testing dataset was not manually annotated, as it was reserved solely for evaluating model performance under unseen conditions. This structured partitioning ensured systematic dataset organization and provided a reliable foundation for objective model performance assessment.
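
The image counts reported above can be cross-checked with a few lines of arithmetic. The sketch below only restates the numbers given in the text; the variable names are illustrative and not taken from the study's code.

```python
# Image counts as reported in the text, per vegetable type.
train = {"cabbage": 1171, "green_onion": 1200}
val = {"cabbage": 300, "green_onion": 300}
test = {"cabbage": 252, "green_onion": 248}

n_train = sum(train.values())   # images used for training
n_val = sum(val.values())       # images used for validation
n_test = sum(test.values())     # unannotated images kept for inference
n_total = n_train + n_val + n_test

# Share of the annotated images (training + validation) used for training.
train_share = n_train / (n_train + n_val)

print(n_train, n_val, n_test, n_total)
print(f"training share of annotated images: {train_share:.3f}")
```

The three subsets add up to the 3471 acquired images, and the training share of the annotated images is close to the stated 8:2 ratio.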

2.3. YOLO Series Algorithm

2.3.1. YOLOv5

YOLOv5 was released a couple of months after YOLOv4 in 2020 by Glenn Jocher [43]. YOLOv5 has high reliability and stability and is easy to deploy and train. The basic framework of YOLOv5 can be divided into four parts: the input, backbone, neck, and head [44]. YOLOv5 employs practical methods at the input stage, such as mosaic data augmentation and adaptive anchor box optimization. Mosaic data augmentation mixes four images into one, using random scaling, cropping, and layout to splice them together. This process enriches the dataset with low hardware requirements and low computational cost, and improves small object detection [45]. The backbone module extracts features from the input image based on Focus, Bottleneck CSP (Cross Stage Partial Networks), and SPP (Spatial Pyramid Pooling) and transmits them to the neck module [46]. The neck module generates a feature pyramid based on the PANet (Path Aggregation Network). It enhances the ability to detect objects with different scales by fusing low-level spatial features and high-level semantic features bidirectionally. The head module generates detection boxes, indicating the category, coordinates, and confidence by applying anchor boxes to multiscale feature maps from the neck [47]. YOLOv5 is divided into four versions based on the size of the network model: YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. These versions are distinguished by the increasing number of residual structures they incorporate. As the number of residual structures increases, the network’s feature extraction and fusion capabilities are enhanced, leading to improved detection accuracy. However, this improvement in accuracy is accompanied by a decrease in processing speed [44,48].

2.3.2. YOLOv7

YOLOv7, released by Chien-Yao Wang and colleagues in July 2022, is a refined network architecture that integrates advanced modules and optimization techniques. These enhancements are designed to significantly improve accuracy while maintaining the same level of inference efficiency, ensuring that the model remains computationally efficient despite the increased precision [49,50]. In terms of architectural advancements, two significant modifications were implemented: (1) the introduction of Extended Efficient Layer Aggregation Networks (E-ELAN) based on the existing Efficient Layer Aggregation Network (ELAN) architecture, and (2) model scaling. E-ELAN integration enhanced the model’s capacity to learn more diverse features, optimize parameter utilization, and improve computational efficiency. This was achieved without compromising the original gradient pathways of the ELAN architecture, thanks to the application of expansion, shuffling, and cardinality merging techniques. Regarding model scaling, the compound scaling method, as introduced in YOLOv7, was employed to develop larger models while preserving the core characteristics of the base model, such as its original design and structural integrity [51,52,53].

2.3.3. YOLOv8

YOLOv8 was released in January 2023 by Ultralytics, which also developed YOLOv5 [54]. The model improves developer convenience and, because it is anchor-free, produces fewer bounding box predictions, resulting in faster non-max suppression (NMS). The YOLOv8 architecture resembles that of YOLOv5, with modifications in the convolution layers. Specifically, the first 6 × 6 convolution layer in the stem was substituted with a 3 × 3 layer, and in the bottleneck, the first 1 × 1 convolution layer was replaced with a 3 × 3 layer. The success and speed of YOLOv8 training can be attributed to its mosaic augmentation, which stitches four images together during training to encourage the model to learn objects with various locations, partial occlusions, and diverse surrounding pixels. In YOLOv8, mosaic augmentation is stopped during the final 10 training epochs to mitigate performance degradation on the validation and test sets [55,56]. To meet the needs of different scenarios, YOLOv8 provides models at five scales (N/S/M/L/X). Evaluated on the MS COCO test-dev 2017 dataset, YOLOv8x achieved an AP of 53.9% at 283 FPS on an NVIDIA A100 GPU with TensorRT [57].

2.4. Experimental Environment

In this study, the comparative analysis was intentionally limited to models within the YOLO family. This decision was based on the architectural characteristics of YOLO networks, which employ a single-stage detection pipeline that enables real-time inference suitable for industrial applications [52]. In contrast, two-stage detectors such as Faster R-CNN, though highly accurate, rely on region-proposal mechanisms and incur higher computational latency, making them less compatible with real-time production-line requirements [58]. Therefore, the comparative focus on YOLO models ensures that the evaluation remains aligned with the primary objective of achieving high-speed and high-accuracy detection performance applicable to real-world vegetable processing environments.

Training was initialized from the pre-trained weights of YOLOv5, YOLOv7, and YOLOv8 provided by the original YOLO developers, as summarized in Table 3. Six sub-variants, YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv7x, YOLOv8s, and YOLOv8x, were trained on the dataset using the same computer. Training ran for 100 epochs, which was sufficient for the loss to stabilize without overfitting, and a batch size of 16 was selected to balance GPU memory constraints and gradient stability during backpropagation. The initial learning rate (0.01), momentum (0.937), and weight decay (0.0005) followed the default configuration provided in the official YOLO repositories, which keeps optimization behavior consistent and comparable across all model variants; the small weight decay helps prevent overfitting. During model training, the ambient temperature and humidity were maintained at 25 ± 1 °C and 70 ± 5% RH, respectively, to stabilize GPU and CPU performance and prevent thermal fluctuations that could affect computational consistency. These settings collectively offered a robust trade-off between accuracy and training efficiency for real-time detection performance. The hardware and software configuration of the computer and specific information about the training parameters are summarized in Table 4.
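
To make the training budget concrete, the sketch below collects the hyperparameters quoted above and estimates the number of mini-batch iterations. The dictionary keys are illustrative names rather than a configuration file from the study, and the count assumes that the final, partially filled batch of each epoch is kept.

```python
import math

# Hyperparameter values quoted in the text; key names are illustrative only.
train_cfg = {
    "epochs": 100,
    "batch_size": 16,
    "initial_lr": 0.01,
    "momentum": 0.937,
    "weight_decay": 0.0005,
}

# Training images reported in Section 2.2 (cabbage + green onion).
n_train_images = 1171 + 1200

# Mini-batches per epoch, assuming the last partial batch is not dropped.
batches_per_epoch = math.ceil(n_train_images / train_cfg["batch_size"])
total_batches = batches_per_epoch * train_cfg["epochs"]

print(batches_per_epoch, total_batches)
```

Under these assumptions each model processes 149 mini-batches per epoch. Some YOLO implementations accumulate gradients over several mini-batches, so the number of optimizer updates may be lower than the number of mini-batches.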

Table 3.

YOLO developers.

Table 4.

Experimental Environment and Parameter Settings for Model Training.

2.5. Model Evaluation

During the training and testing phases, the performance of each model was quantitatively evaluated using standard object detection metrics, including precision (P), recall (R), F1 score, and mean Average Precision (mAP) [59]. Precision and recall, respectively, measure the accuracy and completeness of positive detections, while the F1 score represents their harmonic mean, providing a balanced assessment of model robustness, particularly under class imbalance conditions. The mAP metric reflects the overall detection accuracy by averaging precision–recall relationships across all FM categories [60,61,62]. The mathematical formulations of these metrics are expressed as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where TP, FP, and FN represent true positives, false positives, and false negatives, respectively; AP_i denotes the average precision of class i; and N indicates the total number of classes. These metrics collectively provide a comprehensive and standardized framework for evaluating object detection performance.
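
As a minimal sketch of how these definitions translate into code, the functions below compute precision, recall, and F1 from TP, FP, and FN counts, and mAP as the mean of per-class AP values. The function names and the example counts are hypothetical and do not come from the study.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_average_precision(ap_per_class):
    """Average the per-class AP values over the N classes."""
    if not ap_per_class:
        raise ValueError("at least one class AP is required")
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical counts, for illustration only.
p, r, f1 = detection_metrics(tp=90, fp=10, fn=30)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")
```

With a single class, as in the labeling scheme used here, mAP reduces to the AP of that one class.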

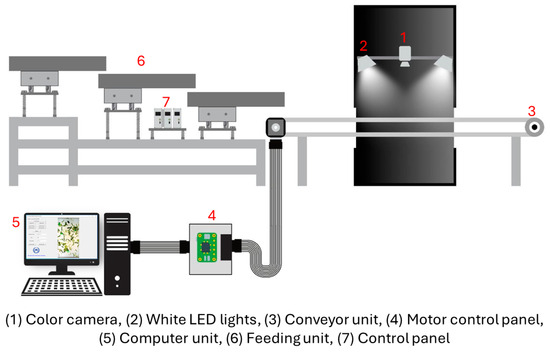

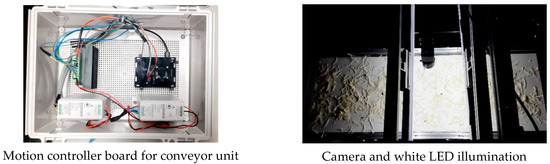

2.6. Inspection System

The inspection system for detecting FMs in fresh-cut vegetables comprised three main units: a feeder unit, a conveyor unit, and a sensor unit, as illustrated in Figure 2. The feeder unit (318 cm × 60 cm × 116 cm) delivered vegetable samples onto a conveyor belt driven by a digital frequency controller. It consisted of three trays of equal length but varying widths (30 cm, 35 cm, and 40 cm), arranged from top to bottom to facilitate uniform distribution of samples. The conveyor unit (150 cm × 30 cm × 35 cm) was powered by a stepper motor (Nema 23, Series E, Jiangsu Novotech Electronic Technology Co., Ltd., Wuxi, China) connected to a motion controller board (TB6600 stepper motor driver with Raspberry Pi 4 Model B, Egoman Technology Co., Ltd., Shenzhen, China). The sensor unit consisted of a 5-megapixel USB 3.0 CMOS color camera (MV-CA050-20UC, Hikvision, Hangzhou, China) equipped with a C-mount lens (focal length = 8 mm, aperture = 1:1.4). The camera was calibrated using a color checker (Spyder Checkr® 24, Datacolor Technology Co., Ltd., Suzhou, China) supported by SpyderCHECKR 1.6 software. It was mounted 30 cm above the conveyor surface and flanked by two white LED lamps (JKLEDT5 60, 10 W, Kwangmin Lighting Co., Ltd., Incheon, Republic of Korea) positioned in parallel at a height of 15 cm and spaced 35 cm apart. The LED lighting arrangement (6500 K, daylight type) was verified before each test to ensure stable light intensity (1200 lux) using a calibrated lux meter (Fluke 941 Light Meter, Fluke, Daegu, Republic of Korea). The linear speed of the conveyor belt was periodically measured with a laser tachometer (Lutron DT-2236C, Lutron, Shenzhen, China) and adjusted to maintain a tolerance of ±1%. Both the camera and lighting assembly were enclosed within a dark chamber to eliminate ambient light interference.

Figure 2.

Schematics of a real-time inspection system for FM detection.

2.7. YOLO Model Implementation

In this study, a custom graphical user interface (GUI) was developed to deploy the YOLO model for real-time FM detection. The interface was created using the Software Development Kit (SDK) provided by the camera manufacturer and implemented with Python 3.8 on a Windows platform. The design process, from initial wireframes to high-fidelity prototypes, used Tkinter as the primary library. Python was selected for its straightforward integration with the YOLO inference model and its extensive array of open-source libraries, which facilitated the GUI development carried out in this study.

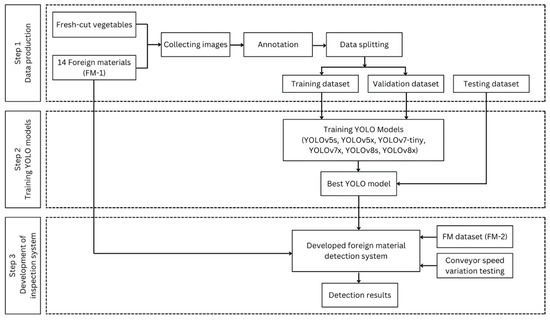

The best-performing YOLO model in .pt format was loaded into the GUI using PyTorch and integrated with OpenCV. OpenCV was employed to process the data buffer from the industrial camera, breaking it down into individual frames and reassembling them into a real-time display within the GUI. This enabled the online identification of FMs in fresh-cut vegetables. Additionally, the GUI was integrated with the conveyor unit using an HTTP client-server protocol, allowing control of the stepper motor driver connected to a Raspberry Pi. Subsequently, the best YOLO model was validated using the FM-1 and FM-2 groups under real-time conditions. The FM-1 evaluation investigated the model’s performance in identifying FMs in each FM category, whereas the FM-2 evaluation focused on the model’s ability to detect objects that it had not seen during training. A critical assessment involved calculating the detection rate by comparing the number of successfully detected FMs against the total number of objects introduced. The model’s reliability in detecting FMs was also tested at varying conveyor speeds to determine the optimal operational speed; high, medium, and low speeds of 69.88, 36.73, and 18.40 cm/s were considered. The methodology and stages of this evaluation are depicted in Figure 3.
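
The detection rate described above is the ratio of detected FMs to introduced FMs, expressed as a percentage. The sketch below shows one way to tabulate it per category and overall; the function name, category names, and counts are hypothetical placeholders, not results from this study.

```python
def detection_rate(detected, introduced):
    """Percentage of introduced FMs that were detected."""
    if introduced <= 0:
        raise ValueError("introduced must be positive")
    if not 0 <= detected <= introduced:
        raise ValueError("detected must lie between 0 and introduced")
    return 100.0 * detected / introduced


# Hypothetical (detected, introduced) counts per FM category, for illustration only.
trial_counts = {
    "plastic": (48, 50),
    "stone": (50, 50),
    "insect": (45, 50),
}

rates = {name: detection_rate(d, n) for name, (d, n) in trial_counts.items()}
overall = detection_rate(
    sum(d for d, _ in trial_counts.values()),
    sum(n for _, n in trial_counts.values()),
)

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")
print(f"overall: {overall:.1f}%")
```

Computing the overall rate from pooled counts, rather than averaging the per-category rates, keeps it correct when categories contain different numbers of objects.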

Figure 3.

The flow diagram of the proposed study.

3. Results

3.1. Training and Comparison Results of Different Detection Algorithms

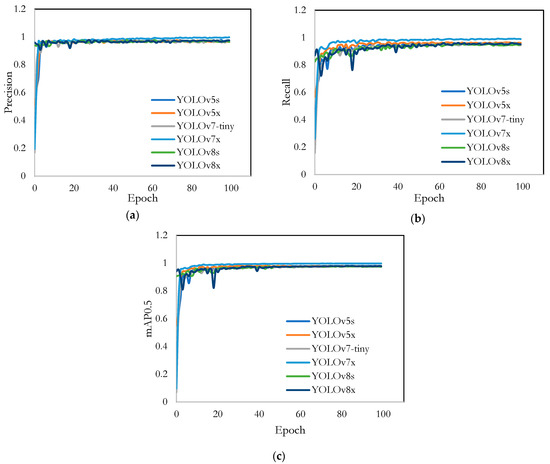

Figure 4 presents the precision, recall, and mAP@0.5 (mean Average Precision at an intersection over union (IoU) threshold of 0.5) curves for all model variants during the training phase, showing how their performance evolved. In the precision curve (Figure 4a), all models demonstrated a rapid increase in precision during the initial epochs, stabilizing at a value close to 1 after approximately 20 epochs. These results indicated that all YOLO models quickly learned to identify positive detections accurately while minimizing false positives. The recall curves (Figure 4b) showed a similar trend, where the recall values for all models increased swiftly in the early epochs and leveled off between 0.9 and 1. This suggested that the models became proficient at identifying the most relevant objects with fewer false negatives. The mAP@0.5 curve (Figure 4c) reflected the combined performance of precision and recall. The mAP@0.5 values for all models increased significantly in the beginning and stabilized near 1, indicating that the models were highly effective in correctly identifying objects and localizing them accurately. Overall, the YOLO models exhibited strong performance across all three metrics, with minimal differences. This demonstrated their robustness and efficacy in detecting FMs in fresh-cut vegetables. The consistency in performance across different YOLO versions highlighted the adaptability and efficiency of these models for the given task.

Figure 4.

Training results of different YOLO models: (a) precision; (b) recall; and (c) mAP0.5.

Table 5 shows that the YOLOv7x model outperformed the other models in terms of precision, recall, and mAP@0.5, suggesting superior detection capabilities. Specifically, the precision of the YOLOv7x model was 99.80%, which was 3.10%, 3.00%, 3.60%, 3.10%, and 3.00% higher than that of the YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv8s, and YOLOv8x models, respectively. A high precision indicates that the objects the model flags as FMs are rarely false alarms. Moreover, the YOLOv7x model achieved a recall of 99.00%, which was 3.10%, 4.00%, 3.30%, 3.80%, and 3.30% higher than that of the YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv8s, and YOLOv8x models, respectively. A high recall indicates that the model misses few of the FMs actually present.

Table 5.

Comparison results of object detection algorithms.

The mAP values measure the precision of object detection in a frame by comparing detected boxes to ground truth bounding boxes at an IoU of 0.5. This IoU threshold (0.5) was adopted as the main evaluation criterion following standard practice in YOLO-based real-time detection systems, where a moderate localization tolerance is acceptable for practical deployment. To check that this choice was reasonable, additional mAP values were computed across IoU thresholds from 0.5 to 0.95 in 0.05 increments (mAP@[0.5:0.95]). The results confirmed consistent model ranking and stable performance trends, indicating that IoU = 0.5 provides a representative and reliable benchmark for evaluating detection quality in this application. Regarding mAP@0.5, the YOLOv7x model achieved a score of 99.80%, outperforming the YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv8s, and YOLOv8x models by 1.90%, 2.60%, 2.30%, 2.30%, and 1.90%, respectively.
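To make the IoU criterion concrete, the following sketch computes the IoU of two axis-aligned boxes and lists the thresholds used for mAP@[0.5:0.95]. It is illustrative only: the box format `(x_min, y_min, x_max, y_max)`, the helper names `iou` and `iou_thresholds`, and the example coordinates are assumptions made here, not details from the study.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_thresholds() -> List[float]:
    """Thresholds 0.50, 0.55, ..., 0.95 used for mAP@[0.5:0.95]."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


# Hypothetical ground-truth and predicted boxes in pixel coordinates.
ground_truth = (10.0, 10.0, 50.0, 50.0)
prediction = (12.0, 12.0, 52.0, 52.0)
score = iou(ground_truth, prediction)
passed = [t for t in iou_thresholds() if score >= t]
print(f"IoU = {score:.3f}; counted as a match at {len(passed)} of 10 thresholds")
```

A prediction counts as a true positive at a given threshold only if its IoU with a ground truth box reaches that threshold, which is why mAP@0.5 tolerates looser localization than the averaged mAP@[0.5:0.95].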

The performance of the YOLOv7x in this study was compared with several representative works in the field of food and agricultural FM detection. Ref. [63] reported an HSI-based detection system for FM in chili peppers using a Random Forest classifier, achieving 96.4% accuracy; however, the system required high-cost hyperspectral equipment and long computation time. Ref. [64] developed a YOLOv4-Taguchi model for PVC powder detection with mAP = 0.9385, outperforming Faster R-CNN (0.7999) but still below the accuracy of YOLOv7x in this study. Ref. [65] implemented YOLOX for tobacco cabinet inspection, achieving 99.1% accuracy but with an inference speed below 1 frame/s, limiting real-time applicability. Ref. [66] achieved 98.1% precision using YOLOv5 for mold detection on food surfaces; however, their dataset consisted of only 2050 images with limited background variation. Compared with these studies, the present YOLOv7x model benefits from its enhanced E-ELAN backbone, which enables deeper gradient flow and adaptive feature reuse, leading to more robust detection of low-contrast and transparent objects in fresh-cut vegetables under real-time conditions [50,67].

In real-world, high-precision applications, model performance should not be judged on precision, recall, and mAP alone. In this study, the F1 score was also used to compare model performance across varying confidence levels. As shown in Table 5, YOLOv7x achieved the highest F1 score at 99.40%, outperforming YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv8s, and YOLOv8x by 3.10%, 3.51%, 3.45%, 3.46%, and 3.15%, respectively. In addition to its accuracy, YOLOv7x had the shortest inference time, just 1.2 milliseconds, making it the fastest among the tested models. This low latency is particularly advantageous for real-time FM detection in fresh-cut vegetables, where rapid processing is essential to maintain throughput. The combination of high speed and high F1 score identifies YOLOv7x as the most suitable of the tested models for applications requiring fast and reliable detection.
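The F1 score is the harmonic mean of precision and recall, so the reported value can be checked directly from the YOLOv7x precision and recall given in the text. The snippet below is a small sketch of that check; the function name `f1_score` is chosen here for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given as fractions)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for YOLOv7x in the text (99.80% and 99.00%).
precision, recall = 0.9980, 0.9900
print(f"F1 = {f1_score(precision, recall):.4f}")  # prints F1 = 0.9940
```

The result agrees with the reported F1 score of 99.40%.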

Table 6.

Real-time detection accuracy of YOLOv7x for each FM type in cabbage.

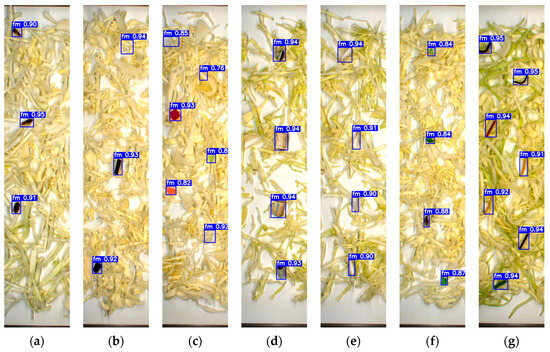

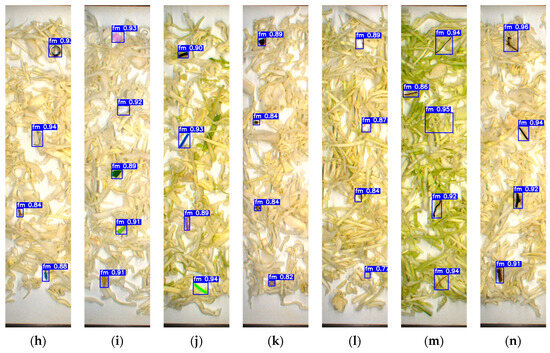

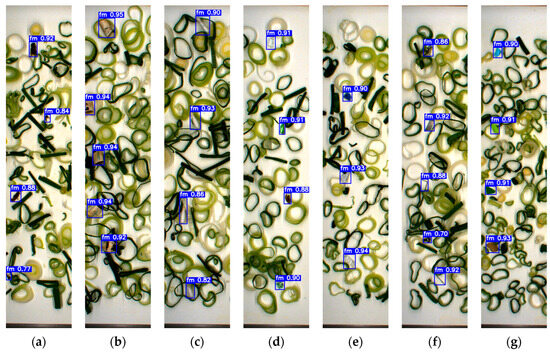

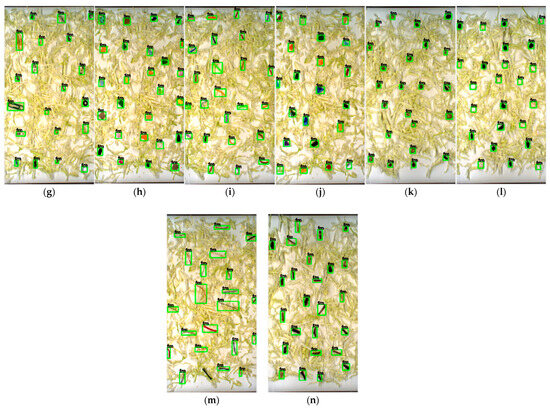

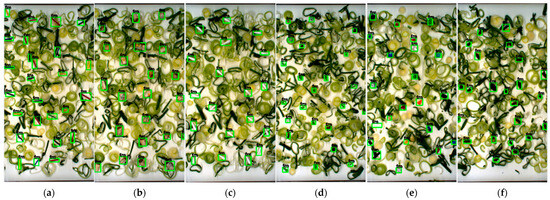

To evaluate the model’s reliability, a separate testing dataset of 500 unannotated images containing FMs and fresh-cut vegetables was prepared and excluded from the training phase to maintain data integrity. As shown in Figure 5 and Figure 6, YOLOv7x accurately classified various FMs based on type, shape, color, and size. The model successfully detected FMs that closely resembled the sample and conveyor background, including transparent plastic, green plastic, paper, insects, stones, Styrofoam, and glass fragments. Notably, it also identified very small FMs such as staples, bolts (Figure 5h), thread (Figure 5m and Figure 6l), and mosquitoes (Figure 6a), despite the detection challenges they presented.

Figure 5.

Detection results based on the testing dataset of cabbage: (a) insects; (b) hard plastic; (c) tiny plastic; (d) cigarette butt; (e) cotton bud; (f) glass; (g) cable; (h) metal; (i) paper; (j) rubber; (k) stone; (l) Styrofoam; (m) thread; (n) wood.

Figure 6.

Detection results based on the testing dataset of green onion: (a) insect; (b) cigarette butt; (c) cotton bud; (d) glass; (e) hard plastic; (f) metal; (g) paper; (h) rubber; (i) stone; (j) Styrofoam; (k) tiny plastic; (l) thread; (m) cable; (n) wood.

3.2. Performance of YOLOv7x in Detecting Each Category of FMs

The robustness of the YOLOv7x model in detecting FMs was evaluated across 14 FM categories (FM-1) under real-time conditions, with detection results presented in Table 6 and Table 7 for cabbage and green onion samples, respectively. Overall, the model exhibited high detection accuracy across both food matrices, though performance varied depending on sample complexity. For cabbage, the model achieved detection rates exceeding 95% in nearly all categories. Particularly high accuracies were observed for hard plastic (98.78%), soft plastic (98.41%), insects (98.25%), and glass (97.33%), with flawless detection (100%) achieved for cigarette butts, metal, wood, and stones. This consistent performance can be attributed to the relatively uniform texture and color of cabbage, which likely enhanced object-background contrast and minimized false detections. In contrast, detection accuracy in green onion samples was slightly lower for certain FM types. While the model maintained perfect detection (100%) for cigarette butts, threads, metal, and wood, reduced accuracy was observed for Styrofoam (88.46%), insects (94.59%), and soft plastic (93.75%). The more complex visual structure of green onions—characterized by overlapping layers, glossy reflections, and broader chromatic variations—introduced additional visual noise, increasing the likelihood of missed detections or partial bounding-box localization.

Table 7.

Real-time detection accuracy of YOLOv7x for each FM type in green onion.

Furthermore, the detection accuracy across different FM categories varied according to their intrinsic visual properties. Materials with high color contrast and distinct textures, such as metal, wood, and paper, achieved near-perfect accuracy. Conversely, translucent or color-similar materials, including Styrofoam, soft plastic, and glass, exhibited slightly lower accuracy due to weak edge gradients and background blending. These observations highlight that color contrast, surface reflectance, and object size strongly influence detection reliability.

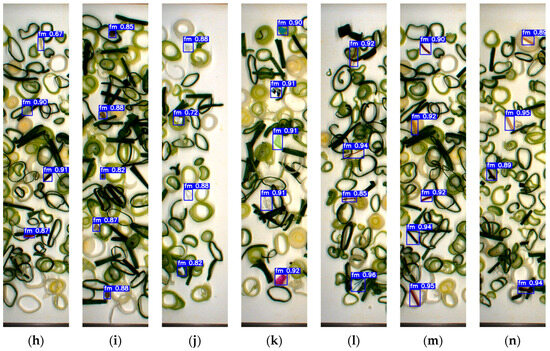

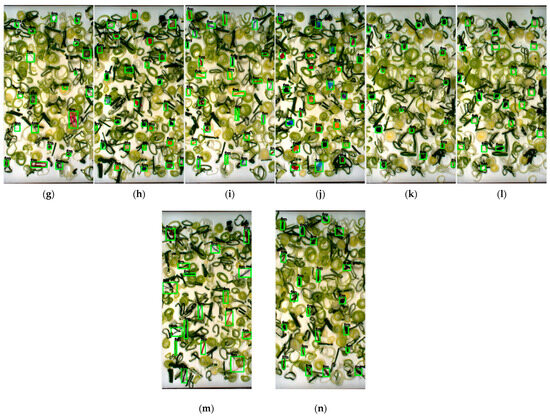

Despite these challenges, the YOLOv7x model consistently demonstrated strong detection capability across both vegetable types, maintaining accuracy above 95% in most FM categories. Notably, it also succeeded in identifying very small FMs, as illustrated in Figure 7 and Figure 8, a task that typically challenges real-time detection algorithms. It should be noted that the label ‘fm’ appearing within the green bounding boxes represents the single class of FM detected in this study. The label is automatically generated during real-time detection, while the green bounding boxes visually indicate the presence of detected FMs. This robustness confirms the model’s suitability for practical, real-time FM inspection in fresh-cut vegetable production environments.

Figure 7.

Detecting each category of FM based on YOLOv7x in cabbage sample: (a) cable; (b) cigarette butt; (c) cotton bud; (d) glass; (e) hard plastic; (f) insect; (g) metal; (h) paper; (i) rubber; (j) tiny plastic; (k) stone; (l) Styrofoam; (m) thread; (n) wood.

Figure 8.

Detecting each category of FM based on YOLOv7x in green onion sample: (a) cable; (b) cigarette butt; (c) cotton bud; (d) glass; (e) hard plastic; (f) insect; (g) metal; (h) paper; (i) rubber; (j) tiny-plastic; (k) stone; (l) Styrofoam; (m) thread; (n) wood.

3.3. Performance of the YOLOv7x Model on an Unrecognized FM Dataset

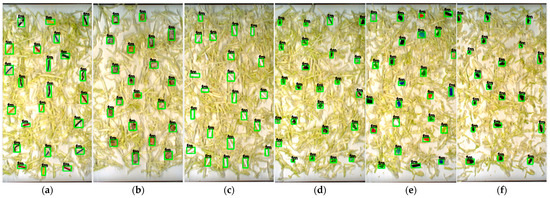

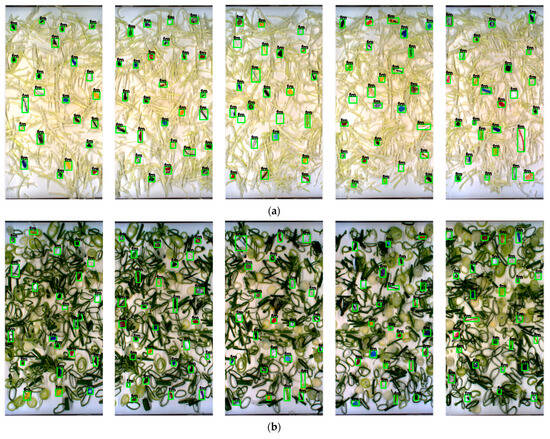

The robustness of the YOLOv7x model was evaluated under real-time conditions using a separate set of FMs (FM-2) that were not included in the training dataset. This evaluation aimed to examine the model’s generalization capability in detecting previously unseen objects with varying shapes, sizes, and colors across different vegetable matrices. A total of 247 FMs were randomly introduced into cabbage and green onion samples. As presented in Table 8, the YOLOv7x model achieved a detection accuracy of 99.18% for cabbage and 98.40% for green onion, confirming its ability to identify and classify objects not encountered during training. Representative detection results of the YOLOv7x model for the FM-2 dataset are illustrated in Figure 9. In these visual results, the label ‘fm’ shown within the green bounding boxes denotes the sole class of FM identified in this study. This label is automatically produced by the real-time detection system, whereas the green bounding boxes distinctly mark the locations of detected FMs. These results demonstrate the model’s stable detection of unfamiliar FMs across both vegetable types under real-time operational conditions.

Table 8.

Accuracy of unrecognized FMs (FM-2) detection in cabbage and green onion samples.

Figure 9.

Detection of unrecognized FMs by the YOLOv7x model: (a) Cabbage; (b) Green onion.

3.4. Performance of the Developed Inspection System in Real-Time

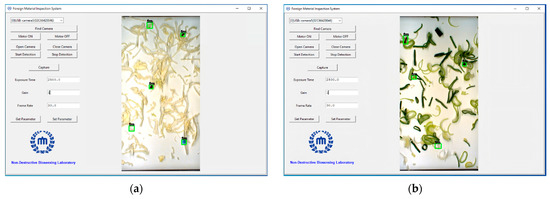

To facilitate the practical deployment of the YOLOv7x model for real-time FM detection in fresh-cut vegetables, a user-friendly software interface was developed, seamlessly integrated with the conveyor system and synchronized sensing units. As depicted in Figure 10, the software interface was designed to be intuitive and efficient, allowing operators to control and monitor the detection process easily. Key parameters, such as the exposure time, gain, and camera frame rate, were stabilized to ensure a consistent detection performance across different operating conditions.

Figure 10.

User interface for system operation and real-time visualization of FM detection in fresh-cut vegetables: (a) cabbage; (b) green onion.

The software was equipped with several critical functionalities to enhance the user experience. The “Find Camera” button allowed the system to verify camera connectivity, ensuring the detection process could begin without technical issues. Upon activation of the motor and opening of the camera, the conveyor belt was synchronized with the camera’s operation, enabling smooth real-time detection. The system displayed a field of view (FOV) of 15 × 30 cm, where the detection process occurred at a rapid speed of 0.025 s per frame, ensuring that even high-throughput operations could be handled effectively. To mitigate potential interference from external light sources, the detection setup, illustrated in Figure 11, included strategically placed white LED lights and cameras within a controlled darkroom environment. This setup ensured that the detection was not compromised by varying light conditions, a critical consideration in ensuring consistent performance in diverse processing environments.

Figure 11.

Photograph of an online detection system based on the YOLOv7x algorithm installed in a fresh-cut vegetable processing unit to detect FMs.

The hardware and software specifications of the proposed detection system are presented in Table 9.

Table 9.

Developed inspection system environment.

The system is configured with a high-performance setup comprising an NVIDIA GeForce RTX 3060 GPU, which, in combination with the PyTorch deep learning framework, enables efficient execution of the YOLOv7x model. Software synchronization with the conveyor unit, along with the implementation of CUDA 10.6 for computational acceleration, allows the system to meet real-time processing requirements with minimal latency.

To evaluate the performance of the YOLOv7x model under varying operational conditions, its detection accuracy was tested at three different conveyor belt speeds: high (69.88 cm/s), medium (36.73 cm/s), and low (18.40 cm/s). In each trial, FMs were randomly inserted into fresh-cut cabbage and green onion samples using a feeder unit designed to minimize overlapping between FMs and vegetable pieces. The samples were then dropped onto the conveyor belt and passed through the camera’s field of view, where the YOLOv7x model detected and marked the FMs using green bounding boxes displayed in the output window (Figure 10). The detection results for each speed level are summarized in Table 10.

Table 10.

Conveyor belt speed variation on FM detection accuracy on fresh-cut vegetables.
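A rough way to relate belt speed to detection opportunity is to estimate how far an object travels between processed frames and how many frames it spends inside the field of view. The sketch below uses the three tested speeds and the reported 0.025 s per frame. It assumes, without confirmation from the source, that the 30 cm side of the 15 × 30 cm FOV lies along the belt direction and that frames are processed back to back; all function and constant names are illustrative.

```python
FRAME_TIME_S = 0.025   # per-frame detection time reported in the text
FOV_LENGTH_CM = 30.0   # ASSUMPTION: the 30 cm side of the FOV runs along the belt

# Conveyor speeds tested in the study (cm/s).
SPEEDS_CM_S = {"high": 69.88, "medium": 36.73, "low": 18.40}


def travel_per_frame_cm(speed_cm_s: float, frame_time_s: float = FRAME_TIME_S) -> float:
    """Distance the belt moves during one processed frame."""
    return speed_cm_s * frame_time_s


def frames_in_fov(speed_cm_s: float,
                  fov_length_cm: float = FOV_LENGTH_CM,
                  frame_time_s: float = FRAME_TIME_S) -> float:
    """Approximate number of processed frames while an object crosses the FOV."""
    return fov_length_cm / travel_per_frame_cm(speed_cm_s, frame_time_s)


for label, speed in SPEEDS_CM_S.items():
    print(f"{label:>6}: {travel_per_frame_cm(speed):.2f} cm per frame, "
          f"about {frames_in_fov(speed):.0f} frames in the FOV")
```

Under these assumptions an object is seen in roughly 17, 33, and 65 frames at the high, medium, and low speeds, respectively, so the number of chances to detect each FM roughly halves with every doubling of belt speed.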

4. Discussion

This study demonstrated the effectiveness of the YOLOv7x algorithm for real-time detection of FMs in fresh-cut vegetables. Among the six YOLO variants evaluated, YOLOv7x consistently achieved the highest overall performance in terms of precision, recall, F1-score, and mAP, confirming its suitability for developing an automated inspection system. The superior performance of YOLOv7x can be attributed to its architectural design, which includes a deeper and wider backbone–neck structure and the E-ELAN module that enhances gradient flow and parameter utilization. This configuration strengthens multi-scale feature extraction and fusion, enabling the model to capture fine-grained object details that lighter architectures (such as YOLOv5 or YOLOv8) might miss under identical training settings. Additionally, the spatially enriched path aggregation and re-parameterized convolution layers further enhance contextual learning, allowing YOLOv7x to effectively detect small, irregular, and partially occluded FMs commonly found in vegetable products.

The real-time integration of the YOLOv7x model within a Python-based interface successfully synchronized the camera and conveyor belt systems. This configuration enabled stable online operation, achieving detection accuracies exceeding 95% in most FM categories, including challenging samples such as transparent plastics, stones, insects, and metallic fragments. The model also maintained high performance when tested with previously unseen samples (FM-2), yielding detection accuracies of 99.18% in cabbage and 98.40% in green onion. These results confirm the generalization ability of the proposed system and its robustness under practical operating conditions.

Conveyor speed was found to significantly affect detection reliability. The model achieved optimal performance at a medium belt speed of 36.73 cm/s, with accuracies of 97.92% for cabbage and 95.00% for green onion. At higher speeds (69.88 cm/s), accuracy declined to 87.88% and 86.67%, respectively, mainly due to motion blur, partial occlusion, and incomplete feature capture. Conversely, although accuracy remained high at the lowest speed (18.40 cm/s), object overlap occasionally reduced image clarity. These results indicate that medium conveyor speed provides the best balance between throughput and accuracy. The decline in accuracy at higher speeds can be attributed to limited light capture and shorter exposure time (2.5 ms), which reduce image brightness and affect transparent or small objects such as thin plastic films and Styrofoam fragments. This observation is consistent with previous studies, which report operational speeds including ≥30 cm/s for seaweed inspection [62], 1 m/s for fresh-cut lettuce [30], 25 cm/s for fresh-cut vegetables [15], 5 cm/s for sweet potatoes [68], and 33 cm/s for detecting defects in Angelica dahurica tablets [69], depending on the material characteristics and system design. Therefore, optimal operating speed should be carefully tuned based on the physical properties of the product and the response capabilities of the inspection system.
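The contribution of motion blur can be estimated from the distance an object moves while the shutter is open, which is the belt speed multiplied by the exposure time. The sketch below applies this to the three tested speeds and the 2.5 ms exposure mentioned above. The function name `blur_mm` is illustrative, and converting the result to pixels would require the camera resolution, which is not given in this section.

```python
EXPOSURE_S = 0.0025  # 2.5 ms exposure time stated in the text

# Conveyor speeds tested in the study (cm/s).
SPEEDS_CM_S = {"high": 69.88, "medium": 36.73, "low": 18.40}


def blur_mm(speed_cm_s: float, exposure_s: float = EXPOSURE_S) -> float:
    """Object displacement during one exposure, in millimetres."""
    return speed_cm_s * exposure_s * 10.0  # cm -> mm


for label, speed in SPEEDS_CM_S.items():
    print(f"{label:>6}: {blur_mm(speed):.2f} mm of travel during exposure")
```

At the high speed the object moves about 1.75 mm during a single exposure, compared with about 0.46 mm at the low speed. Smear of this size is comparable to the dimensions of very small FMs such as thread or staples, which is consistent with the accuracy drop observed at 69.88 cm/s.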

While the YOLOv7x-based inspection system demonstrated strong real-time performance, several limitations remain before large-scale industrial implementation can be achieved. The reported real-time capability primarily reflects inference time, excluding other latency factors such as image acquisition, data transmission, and feedback display. Moreover, system-level delays that may affect overall throughput were not explicitly measured. Long-term stability under continuous operation has also not been evaluated, which is essential for assessing durability and fault tolerance. Furthermore, detection reliability under extreme illumination variations and object overlaps remains a challenge that warrants further optimization.

Future research should focus on addressing these limitations through the development of an end-to-end real-time framework encompassing all processing stages—from image acquisition to decision feedback. Optimization strategies may include dynamic adjustment of camera shutter speed and frame rate, image compression, parallel data handling, and hardware–software synchronization to enhance responsiveness and stability. Additionally, incorporating adaptive illumination modules or multispectral imaging (e.g., hyperspectral, NIR, or 3D imaging) could improve the visibility of low-contrast or semi-transparent materials. Evaluating model performance across different vegetable varieties, maturity levels, and production batches would also strengthen generalization for industrial deployment.
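One simple starting point for end-to-end latency profiling is to time each pipeline stage separately and report the mean per stage. The sketch below is a generic, standard-library illustration under stated assumptions: the `StageTimer` class, the stage names, and the `time.sleep` calls that stand in for real acquisition, inference, and display work are all hypothetical and do not describe the authors' software.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulates wall-clock time per named pipeline stage."""

    def __init__(self):
        self.totals = defaultdict(float)  # total seconds per stage
        self.counts = defaultdict(int)    # number of timed runs per stage

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.totals[name] += time.perf_counter() - start
            self.counts[name] += 1

    def mean_ms(self, name):
        """Mean duration of a stage in milliseconds."""
        return 1000.0 * self.totals[name] / self.counts[name]


def process_frame(timer):
    # Placeholder stages: time.sleep stands in for real work (hypothetical durations).
    with timer.stage("acquire"):
        time.sleep(0.002)
    with timer.stage("inference"):
        time.sleep(0.003)
    with timer.stage("display"):
        time.sleep(0.001)


timer = StageTimer()
for _ in range(5):
    process_frame(timer)

for name in ("acquire", "inference", "display"):
    print(f"{name:>9}: {timer.mean_ms(name):.2f} ms on average")
```

Summing the per-stage means gives an estimate of the full per-frame latency, which can then be compared with the inference-only figure to see how much acquisition, transmission, and display add.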

The implications of this work extend beyond vegetable inspection. The YOLOv7x-based system offers strong potential as a real-time, high-speed, and high-accuracy inspection layer that can be integrated into broader food production or precision agriculture pipelines. Coupling the detection system with robotic manipulators for automated contaminant removal could form a closed-loop quality assurance framework, thereby enhancing operational efficiency and ensuring product safety and consistency in large-scale food processing environments.

5. Conclusions

This study compared six YOLO variants (YOLOv5s, YOLOv5x, YOLOv7-tiny, YOLOv7x, YOLOv8s, YOLOv8x) for FM detection in fresh-cut vegetables using a dataset of 2971 images. YOLOv7x delivered the highest overall performance (precision 99.80%, recall 99.00%, mAP@0.5 99.80%, F1-score 99.40%) and, when integrated via a Python-based interface, enabled real-time operation on a conveyor–camera setup. The system achieved >95% detection accuracy in most FM categories (including transparent plastics, stones, insects, and staples), generalized well to previously unseen FMs (FM-2: 99.18% in cabbage; 98.40% in green onion), and reached its best throughput–accuracy trade-off at a conveyor speed of 36.73 cm/s. These findings confirm YOLOv7x as a strong candidate for deployment in automated inspection of fresh-cut produce.

Notwithstanding these strengths, several factors may limit immediate industrial adoption. Performance degrades under partial embedding or overlaps of FMs and under strong illumination fluctuations; system-level latencies (image acquisition, transmission, display/actuation) and long-term operational stability were not quantified. Practical rollout will also require attention to economic and operational constraints (imaging hardware, operator training, and sufficiently diverse datasets per commodity). Future work should therefore prioritize robustness and adaptability—through improved feature learning for occlusion/low-contrast scenes, illumination control or multispectral integration, end-to-end latency profiling, and expanded, batch-diverse datasets—and explore closed-loop integration with robotic manipulators for automatic contaminant removal. Collectively, these efforts can translate the demonstrated detector-level performance into a reliable, scalable, and cost-effective industrial inspection solution that enhances food safety and efficiency.

Author Contributions

Conceptualization, methodology, investigation, formal analysis, data curation, writing—original draft, H.K. (Hary Kurniawan); formal analysis, data curation, software, M.A.A.A.; formal analysis, visualization, writing-review and editing, B.M.; formal analysis, data curation, H.K. (Hangi Kim) and S.L.; validation, writing—review and editing, M.S.K. and I.B.; validation, writing—review and editing, funding acquisition, supervision, B.-K.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by Chungnam National University, Republic of Korea.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tunny, S.S.; Amanah, H.Z.; Faqeerzada, M.A.; Wakholi, C.; Kim, M.S.; Baek, I.; Cho, B.K. Multispectral Wavebands Selection for the Detection of Potential Foreign Materials in Fresh-Cut Vegetables. Sensors 2022, 22, 1775.

- Raffo, A.; Paoletti, F. Fresh-Cut Vegetables Processing: Environmental Sustainability and Food Safety Issues in a Comprehensive Perspective. Front. Sustain. Food Syst. 2022, 5, 681459.

- Sucheta; Singla, G.; Chaturvedi, K.; Sandhu, P.P. Status and Recent Trends in Fresh-Cut Fruits and Vegetables. In Fresh-Cut Fruits and Vegetables; Elsevier: Amsterdam, The Netherlands, 2020; pp. 17–49.

- Archive Market Research. Fresh-Cut Vegetables Market Size, Share, Trends, and Forecast, 2025–2033; Archive Market Research: Pune, India, 2024.

- Statista Research Department. Fresh Vegetables-South Korea; Statista: Hamburg, Germany, 2025.

- Čapla, J.; Zajác, P.; Fikselová, M.; Bobková, A.; Belej, Ľ.; Janeková, V. Analysis of the incidence of foreign bodies in European foods. J. Microbiol. Biotechnol. Food Sci. 2019, 9, 370–375.

- Payne, K.; O’Bryan, C.A.; Marcy, J.A.; Crandall, P.G. Detection and Prevention of Foreign Material in Food: A Review. Heliyon 2023, 9, e19574.

- U.S. Food and Drug Administration. Ben’s Original™ Issues Voluntary Recall of Select Ben’s Original Long Grain White, Whole Grain Brown, and Ready Rice Products; U.S. Food and Drug Administration: Silver Spring, MD, USA, 2025.

- SGS Digicomply (FoodChain ID). Foreign Objects on the Rise: Wood, Metal, and Plastic in Your Food—What’s Going Wrong? SGS Digicomply: Geneva, Switzerland, 2024.

- Urazoe, K.; Kuroki, N.; Maenaka, A.; Tsutsumi, H.; Iwabuchi, M.; Fuchuya, K.; Hirose, T.; Numa, M. Automated Fish Bone Detection in X-Ray Images with Convolutional Neural Network and Synthetic Image Generation. IEEJ Trans. Electr. Electron. Eng. 2021, 16, 1510–1517.

- Key Technology. Industry Report: Sorting Fresh-Cut Produce; Key Technology: Walla Walla, WA, USA, 2023.

- Astute Analytica. X-Ray Food Inspection Equipment Market Report, 2024–2032; Astute Analytica: New Delhi, India, 2024.

- Pigłowski, M. Food Hazards on the European Union Market: The Data Analysis of the Rapid Alert System for Food and Feed. Food Sci. Nutr. 2020, 8, 1603–1627.

- RSA Group. Contamination and Product Recall: Food & Drink; RSA Insurance Group: London, UK, 2016; Available online: http://ec.europa.eu/food/safety/ (accessed on 12 June 2022).

- Lohumi, S.; Cho, B.-K.; Hong, S. LCTF-Based Multispectral Fluorescence Imaging: System Development and Potential for Real-Time Foreign Object Detection in Fresh-Cut Vegetable Processing. Comput. Electron. Agric. 2021, 180, 105912.

- Patel, K.K.; Kar, A.; Jha, S.N.; Khan, M.A. Machine Vision System: A Tool for Quality Inspection of Food and Agricultural Products. J. Food Sci. Technol. 2012, 49, 123–141.

- Everard, C.D.; Kim, M.S.; Lee, H. A Comparison of Hyperspectral Reflectance and Fluorescence Imaging Techniques for Detection of Contaminants on Spinach Leaves. J. Food Eng. 2014, 143, 139–145.

- He, D.F.; Yoshizawa, M. Metal Detector Based on High-Tc RF SQUID. Phys. C Supercond. Appl. 2002, 378–381, 1404–1407.

- Morita, K.; Ogawa, Y.; Thai, C.N.; Tanaka, F. Soft X-Ray Image Analysis to Detect Foreign Materials in Foods. Food Sci. Technol. Res. 2003, 9, 137–141.

- Ricci, M.; Crocco, L.; Vipiana, F. Microwave Imaging Device for In-Line Food Inspection. In Proceedings of the 14th European Conference on Antennas and Propagation, EuCAP 2020, Copenhagen, Denmark, 15–20 March 2020.

- Sugiyama, T.; Sugiyama, J.; Tsuta, M.; Fujita, K.; Shibata, M.; Kokawa, M.; Araki, T.; Nabetani, H.; Sagara, Y. NIR Spectral Imaging with Discriminant Analysis for Detecting Foreign Materials among Blueberries. J. Food Eng. 2010, 101, 244–252.

- Pallav, P.; Diamond, G.G.; Hutchins, D.A.; Green, R.J.; Gan, T.H. A Near-Infrared (NIR) Technique for Imaging Food Materials. J. Food Sci. 2009, 74, 23–33.

- Voss, J.O.; Doll, C.; Raguse, J.D.; Beck-Broichsitter, B.; Walter-Rittel, T.; Kahn, J.; Böning, G.; Maier, C.; Thieme, N. Detectability of Foreign Body Materials Using X-Ray, Computed Tomography and Magnetic Resonance Imaging: A Phantom Study. Eur. J. Radiol. 2021, 135, 109505.

- Khairi, M.T.M.; Ibrahim, S.; Yunus, M.A.M.; Wahap, A.R. Detection of Foreign Objects in Milk Using an Ultrasonic System. Indones. J. Electr. Eng. Comput. Sci. 2019, 15, 1241–1249.

- Tang, T.; Zhang, M.; Mujumdar, A.S. Intelligent Detection for Fresh-cut Fruit and Vegetable Processing: Imaging Technology. Compr. Rev. Food Sci. Food Saf. 2022, 21, 5171–5198.

- Mohd Khairi, M.T.; Ibrahim, S.; Md Yunus, M.A.; Faramarzi, M. Noninvasive Techniques for Detection of Foreign Bodies in Food: A Review. J. Food Process. Eng. 2018, 41, 12808.

- Graves, M.; Smith, A.; Batchelor, B. Approaches to Foreign Body Detection in Foods. Trends Food Sci. Technol. 1998, 9, 21–27.

- Einarsdóttir, H.; Emerson, M.J.; Clemmensen, L.H.; Scherer, K.; Willer, K.; Bech, M.; Larsen, R.; Ersbøll, B.K.; Pfeiffer, F. Novelty Detection of Foreign Objects in Food Using Multi-Modal X-Ray Imaging. Food Control 2016, 67, 39–47.

- Rizalman, N.F.I.S.; Rashid, W.N.A.; Lokmanulhakim, N.; Mohamad, E.J. Development of Foreign Material Detection in Food Sensor Using Electrical Resistance Technique. Int. J. Integr. Eng. 2019, 11, 277–285.

- Mo, C.; Kim, G.; Kim, M.S.; Lim, J.; Cho, H.; Barnaby, J.Y.; Cho, B.-K. Fluorescence Hyperspectral Imaging Technique for Foreign Substance Detection on Fresh-Cut Lettuce. J. Sci. Food Agric. 2017, 97, 3985–3993.

- Senni, L.; Ricci, M.; Palazzi, A.; Burrascano, P.; Pennisi, P.; Ghirelli, F. On-Line Automatic Detection of Foreign Bodies in Biscuits by Infrared Thermography and Image Processing. J. Food Eng. 2014, 128, 146–156.

- Sharma, A.K.; Nguyen, H.H.C.; Bui, T.X.; Bhardwa, S.; Van Thang, D. An Approach to Ripening of Pineapple Fruit with Model Yolo V5. In Proceedings of the 2022 IEEE 7th International conference for Convergence in Technology (I2CT), Mumbai, India, 7–9 April 2022; pp. 1–5.

- Wang, Y.; Zhang, C.; Wang, Z.; Liu, M.; Zhou, D.; Li, J. Application of Lightweight YOLOv5 for Walnut Kernel Grade Classification and Endogenous Foreign Body Detection. J. Food Compos. Anal. 2024, 127, 105964.

- Xia, Y.; Nguyen, M.; Yan, W.Q. A Real-Time Kiwifruit Detection Based on Improved YOLOv7. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2023; Volume 13836 LNCS, pp. 48–61. ISBN 9783031258244.

- Li, Z.; Zhu, Y.; Sui, S.; Zhao, Y.; Liu, P.; Li, X. Real-Time Detection and Counting of Wheat Ears Based on Improved YOLOv7. Comput. Electron. Agric. 2024, 218, 108670.

- Wang, Z.; Zhang, S.; Chen, Y.; Xia, Y.; Wang, H.; Jin, R.; Wang, C.; Fan, Z.; Wang, Y.; Wang, B. Detection of Small Foreign Objects in Pu-Erh Sun-Dried Green Tea: An Enhanced YOLOv8 Neural Network Model Based on Deep Learning. Food Control 2025, 168, 110890.

- Lu, J.; Lee, S.-H.; Kim, I.-W.; Kim, W.-J.; Lee, M.-S. Small Foreign Object Detection in Automated Sugar Dispensing Processes Based on Lightweight Deep Learning Networks. Electronics 2023, 12, 4621.

- Kurniawan, H.; Andi Arief, M.A.; Manggala, B.; Lee, S.; Kim, H.; Cho, B.K. Advanced Detection of Foreign Objects in Fresh-Cut Vegetables Using YOLOv5. LWT 2024, 212, 116989.

- Edwards, M.C.; Stringer, M.F. Observations on Patterns in Foreign Material Investigations. Food Control 2007, 18, 773–782.

- Caparros Megido, R.; Sablon, L.; Geuens, M.; Brostaux, Y.; Alabi, T.; Blecker, C.; Drugmand, D.; Haubruge, É.; Francis, F. Edible Insects Acceptance by Belgian Consumers: Promising Attitude for Entomophagy Development. J. Sens. Stud. 2014, 29, 14–20.

- Choi, J.; Lee, S.I.; Rackerby, B.; Moppert, I.; McGorrin, R.; Ha, S.-D.; Park, S.H. Potential Contamination Sources on Fresh Produce Associated with Food Safety. J. Food Hyg. Saf. 2019, 34, 1–12. [Google Scholar] [CrossRef]

- Gil, M.I.; Selma, M.V.; López-Gálvez, F.; Allende, A. Fresh-Cut Product Sanitation and Wash Water Disinfection: Problems and Solutions. Int. J. Food Microbiol. 2009, 134, 37–45. [Google Scholar] [CrossRef]

- Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; ChristopherSTAN; Liu, C.; Laughing; Hogan, A.; Lorenzomammana; Tkianai; et al. ultralytics/yolov5: v3.0; Zenodo: Geneva, Switzerland, 2020. [Google Scholar] [CrossRef]

- Fan, Y.; Zhang, S.; Feng, K.; Qian, K.; Wang, Y.; Qin, S. Strawberry Maturity Recognition Algorithm Combining Dark Channel Enhancement and YOLOv5. Sensors 2022, 22, 419. [Google Scholar] [CrossRef]

- Wang, J.; Chen, Y.; Dong, Z.; Gao, M. Improved YOLOv5 Network for Real-Time Multi-Scale Traffic Sign Detection. Neural. Comput. Appl. 2023, 35, 7853–7865. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, X.; Yan, J.; Qiu, X.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W. A Wheat Spike Detection Method in UAV Images Based on Improved YOLOv5. Remote Sens. 2021, 13, 3095. [Google Scholar] [CrossRef]

- Wang, H.; Shang, S.; Wang, D.; He, X.; Feng, K.; Zhu, H. Plant Disease Detection and Classification Method Based on the Optimized Lightweight YOLOv5 Model. Agriculture 2022, 12, 931. [Google Scholar] [CrossRef]

- Zhao, Z.; Yang, X.; Zhou, Y.; Sun, Q.; Ge, Z.; Liu, D. Real-Time Detection of Particleboard Surface Defects Based on Improved YOLOV5 Target Detection. Sci. Rep. 2021, 11, 21777. [Google Scholar] [CrossRef] [PubMed]

- Sun, Y.; Zhang, D.; Guo, X.; Yang, H. Lightweight Algorithm for Apple Detection Based on an Improved YOLOv5 Model. Plants 2023, 12, 3032.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Thakuria, A.; Erkinbaev, C. Improving the Network Architecture of YOLOv7 to Achieve Real-Time Grading of Canola Based on Kernel Health. Smart Agric. Technol. 2023, 5, 100300.

- Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Jabed, M.R.; Shamsuzzaman, M. YOLObin: Non-Decomposable Garbage Identification and Classification Based on YOLOv7. J. Comput. Commun. 2022, 10, 104–121.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8; Ultralytics: London, UK, 2023; Available online: https://github.com/ultralytics/ultralytics (accessed on 22 July 2025).

- Gašparović, B.; Mauša, G.; Rukavina, J.; Lerga, J. Evaluating YOLOV5, YOLOV6, YOLOV7, and YOLOV8 in Underwater Environment: Is There Real Improvement? In Proceedings of the 2023 8th International Conference on Smart and Sustainable Technologies (SpliTech), Split/Bol, Croatia, 20–23 June 2023; pp. 1–4.

- Mahboob, Z.; Zeb, A.; Khan, U.S. YOLO v5, v7 and v8: A Performance Comparison for Tobacco Detection in Field. In Proceedings of the 2023 3rd International Conference on Digital Futures and Transformative Technologies (ICoDT2), Islamabad, Pakistan, 3–4 October 2023; pp. 1–6.

- Wang, X.; Li, H.; Yue, X.; Meng, L. A Comprehensive Survey on Object Detection YOLO. In Proceedings of the 5th International Symposium on Advanced Technologies and Applications in the Internet of Things (ATAIT 2023), Kusatsu, Japan, 28–29 August 2023; Available online: http://ceur-ws.org (accessed on 6 March 2024).

- Sharma, A.; Kumar, V.; Longchamps, L. Comparative Performance of YOLOv8, YOLOv9, YOLOv10, YOLOv11 and Faster R-CNN Models for Detection of Multiple Weed Species. Smart Agric. Technol. 2024, 9, 100648.

- Nkuzo, L.; Sibiya, M.; Markus, E.D. A Comprehensive Analysis of Real-Time Car Safety Belt Detection Using the YOLOv7 Algorithm. Algorithms 2023, 16, 400.

- Gai, R.; Liu, Y.; Xu, G. TL-YOLOv8: A Blueberry Fruit Detection Algorithm Based on Improved YOLOv8 and Transfer Learning. IEEE Access 2024, 12, 86378–86390.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object Detection Using YOLO: Challenges, Architectural Successors, Datasets and Applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

- Kwak, D.H.; Son, G.J.; Park, M.K.; Kim, Y.D. Rapid Foreign Object Detection System on Seaweed Using VNIR Hyperspectral Imaging. Sensors 2021, 21, 5279.

- Jiang, J.; Cen, H.; Zhang, C.; Lyu, X.; Weng, H.; Xu, H.; He, Y. Nondestructive Quality Assessment of Chili Peppers Using Near-Infrared Hyperspectral Imaging Combined with Multivariate Analysis. Postharvest Biol. Technol. 2018, 146, 147–154.

- Chen, S.-H.; Jang, J.-H.; Chang, Y.-R.; Kang, C.-H.; Chen, H.-Y.; Liu, K.F.-R.; Lee, F.-L.; Hsueh, Y.-S.; Youh, M.-J. An Automatic Foreign Matter Detection and Sorting System for PVC Powder. Appl. Sci. 2022, 12, 6276.

- Wang, C.; Zhao, J.; Yu, Z.; Xie, S.; Ji, X.; Wan, Z. Real-Time Foreign Object and Production Status Detection of Tobacco Cabinets Based on Deep Learning. Appl. Sci. 2022, 12, 10347.

- Jubayer, F.; Soeb, J.A.; Mojumder, A.N.; Paul, M.K.; Barua, P.; Kayshar, S.; Akter, S.S.; Rahman, M.; Islam, A. Detection of Mold on the Food Surface Using YOLOv5. Curr. Res. Food Sci. 2021, 4, 724–728.

- Meng, J.; Kang, F.; Wang, Y.; Tong, S.; Zhang, C.; Chen, C. Tea Buds Detection in Complex Background Based on Improved YOLOv7. IEEE Access 2023, 11, 88295–88304.

- Xu, J.; Lu, Y. Prototyping and Evaluation of a Novel Machine Vision System for Real-Time, Automated Quality Grading of Sweetpotatoes. Comput. Electron. Agric. 2024, 219, 108826.

- Qin, Z.; Li, X.; Yan, L.; Cheng, P.; Huang, Y. Real-time Detection of Angelica Dahurica Tablet Using YOLOX_am. J. Food Process Eng. 2023, 46, e14480.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).