1. Introduction

Kiwiberry (Actinidia arguta (Siebold & Zucc.) Planch. ex Miq.), commonly referred to as hardy or baby kiwi, is a dioecious climbing woody plant and one of the most broadly distributed members of the genus Actinidia [1]. Its natural range extends across Northeast China and Shandong Province, as well as Russia, Japan, and Korea [2]. Compared to kiwifruit, kiwiberry is smaller, has a smooth skin, and can be consumed whole. It has a higher nutritional value, being rich in over twenty essential nutrients and a range of vitamins, and holds a significant position among “superfoods”. Moreover, kiwiberry possesses anti-tumor and antioxidant properties, further enhancing its health benefits [3]. In recent years, kiwiberry has gained widespread popularity among global consumers due to its rich nutritional profile and health benefits, demonstrating tremendous market potential [4].

Kiwiberry is a dioecious plant, making effective pollination crucial for fruit set and final fruit quality [5]. Under natural conditions, kiwiberry is pollinated by insects and wind. The short flowering period of kiwiberry, lasting only 4 to 5 days, coupled with adverse weather conditions and competition from nearby crops that are more attractive to pollinators, often results in insufficient pollination. This negatively impacts fruit size, shape, and yield, ultimately reducing market value [6]. Selecting a more efficient and stable pollination method is therefore the main issue at present.

Artificial pollination can compensate for the limitations of insect- and wind-mediated pollination, serving as a primary method to improve the pollination rate of kiwiberry. However, traditional artificial pollination exhibits limited operational efficiency, requires substantial labor input, and entails relatively high labor expenses [7]. In recent years, the advancement of smart agriculture has promoted the emergence of intelligent pollination robots, which are anticipated to serve as alternatives to conventional manual pollination. They not only improve pollination efficiency but also reduce labor intensity and save pollen, making them highly significant in practical applications [8]. Because kiwiberry flowers open asynchronously, flowers and buds are present on the vine at the same time. An intelligent pollination robot therefore needs to distinguish between flowers and buds in order to maximize pollination efficiency and quality while avoiding unnecessary pollen waste. For this reason, this study detects kiwiberry flowers and buds simultaneously, which not only facilitates precise pollination but also enables prediction of the flowering peak by monitoring the number of flowers and buds, thereby estimating the optimal pollination timing.

Detecting kiwiberry flowers and buds in natural orchard environments remains challenging due to dense clustering, variable morphology, and frequent occlusions. Traditional object detection methods relying on manually designed features such as color, shape, and edge often perform poorly under these conditions, resulting in reduced detection accuracy, weak robustness, and limited real-time applicability [9]. With the advancement of computer vision techniques, deep learning-based object detection methods have been increasingly introduced into agricultural image analysis. Compared with conventional detection approaches, deep learning models typically provide higher detection accuracy, better adaptability to complex orchard environments, and faster inference speed [10]. Currently, deep learning-based detection frameworks can be generally categorized into two-stage and one-stage paradigms. Two-stage detectors (e.g., Faster R-CNN [11]) first generate region proposals before performing classification and regression. Although this design enhances recognition performance, it also brings considerable computational overhead and slower inference, making such models less suitable for real-time detection of kiwiberry flowers and buds [12]. In contrast, one-stage detectors represented by the You Only Look Once (YOLO) [13] family directly perform classification and localization on dense prediction maps without intermediate region proposal generation. This architectural simplicity improves inference speed while maintaining competitive accuracy, making one-stage models more appropriate for real-time orchard detection tasks.

In recent years, numerous YOLO-based architectures have been tailored to address the challenges of flower and bud detection in complex orchard environments. Zhao et al. [14] developed the CR-YOLOv5s model to enhance the detection accuracy of chrysanthemum flowers and buds in cluttered backgrounds. By substituting the convolutional layers in the YOLOv5s backbone with RepVGG [15] modules and integrating the Coordinate Attention (CA) [16] mechanism, the model achieved enhanced feature representation and spatial focus, reaching a mean average precision (mAP) of 93.9%, 4.5% higher than the baseline YOLOv5s. Bai et al. [17] proposed a YOLOv7-based model to address the detection of small and overlapping strawberry flowers and fruits with high color similarity. A high-resolution detection head based on the Swin Transformer [18] captured fine spatial details, while a GS-ELAN neck optimization module reduced computational cost without compromising accuracy, achieving an mAP of 92.1% and demonstrating robustness and real-time performance. Ren et al. [19] presented FPG-YOLO for detecting stamens of ‘Yuluxiang’ pear flowers to enable low-cost, high-precision automated pollination. Ghost Convolution (GhostConv) [20] layers replaced most convolutional blocks in YOLOv5n to reduce parameters and computation, while the parameter-free average attention mechanism (PfAAM) [21] was integrated into the C3 module to enhance feature extraction, improving mAP by 1.2% and reducing model size and computational cost by 0.65 MB and 0.53 GFLOPs, respectively. Wang et al. [22] introduced GhP2-YOLO for detecting small objects such as rapeseed flowers and buds. By adding a P2 detection head and GhostConv modules to YOLOv8m and designing a lightweight C3Ghost module, the model enhanced sensitivity to small targets while maintaining compactness, achieving an mAP of 95.5%. Xia et al. [23] proposed a hybrid model, MTYOLOX, integrating YOLOX [24] with the Deformable Attention Transformer (DAT) [25] and Swin Transformer to improve apple inflorescence detection. The newly designed DATCSP and Swin-TCSP layers enhanced multi-scale semantic representation and contextual learning, resulting in higher accuracy and robustness under complex orchard conditions. Overall, these studies demonstrate that integrating transformer or attention mechanisms into YOLO frameworks substantially improves the detection of small and occluded targets in agricultural imagery. Nevertheless, such enhancements often increase model complexity and computational cost, thereby limiting deployment on resource-constrained devices. Moreover, the cross-species generalizability of current YOLO-based flower detection models remains insufficiently explored, particularly for crops with dense and heterogeneous floral distributions.

In summary, although deep learning-based YOLO models have been extensively utilized for flower detection, studies specifically targeting kiwiberry flowers are still scarce and encounter several challenges. These challenges primarily arise from the asynchronous blooming period of kiwiberry flowers and the dense distribution of flowers and buds with similar colors and varying sizes. Furthermore, complex natural backgrounds, illumination differences, and frequent occlusions in orchard environments further complicate the detection task. Therefore, to address these issues, this study introduces an efficient real-time detection model for kiwiberry flowers, YOLO11s-RFBS, developed based on improvements to YOLO11s [26]. The main contributions of this work are summarized as follows:

- (1)

A kiwiberry flower dataset was established, encompassing multiple illumination conditions, different flower density distributions, and varied occlusion levels, thereby offering diverse and representative data samples for kiwiberry flower detection.

- (2)

Based on the YOLO11s model, four targeted improvements were introduced to enhance the accuracy of detecting kiwiberry flowers and buds. First, the P2 detection head was incorporated to replace the original P5 head, enabling the model to better capture more detailed features of small flowers and buds. Second, Receptive-field attention convolution (RFAConv) was integrated into the backbone to substitute standard convolutional blocks, allowing the model to emphasize feature importance under different receptive field positions and to alleviate parameter sharing issues in convolutional kernels. Third, the C3k2-Faster module was adopted to reduce redundant computation and memory access, thereby improving the efficiency of feature extraction. Finally, a weighted bidirectional feature pyramid slim neck network (BIFPSNN) was employed to enhance multi-scale feature fusion and reduce overall model complexity. Experimental results on the kiwiberry flower dataset demonstrate that the YOLO11s-RFBS model achieves superior performance compared with other mainstream object detection models.

- (3)

The YOLO11s-RFBS model was implemented on the Jetson Orin Nano platform (NVIDIA Corporation, Santa Clara, CA, USA), where it achieved a detection speed exceeding 21 FPS, thus satisfying real-time detection requirements. Even under constrained computational and memory conditions, the model maintained accurate and efficient detection of kiwiberry flowers and buds, laying a technical foundation for the development of intelligent pollination robots.

2. Materials and Methods

2.1. Image Data Acquisition

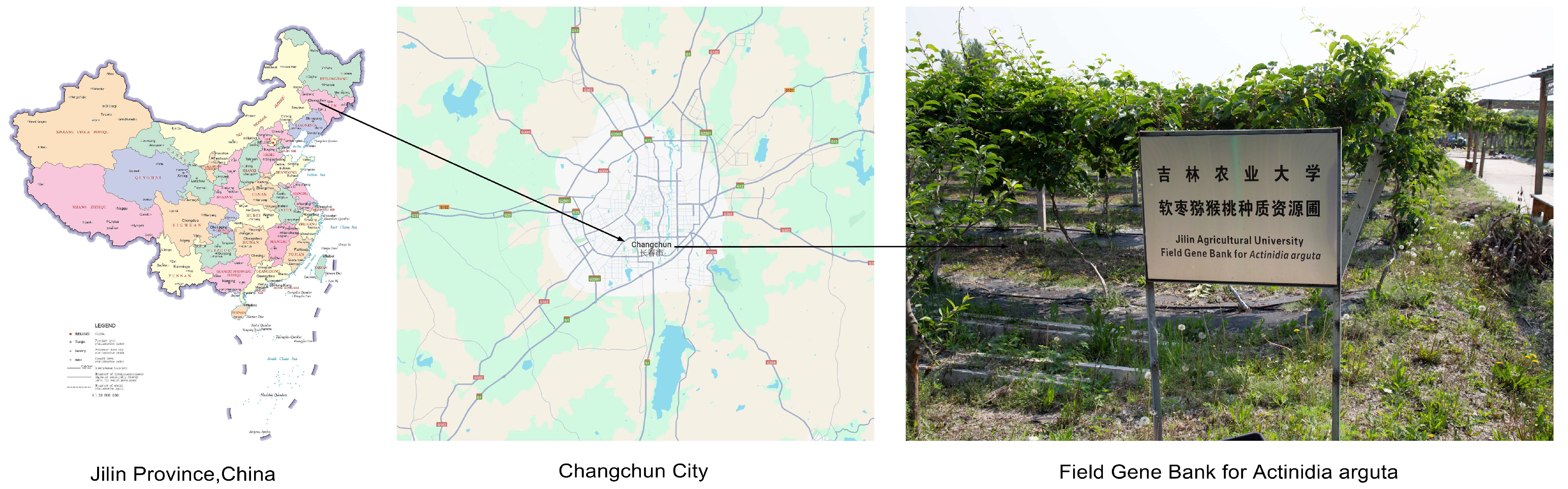

The kiwiberry flower and bud image data used in this study were collected in June 2024 at the Field Gene Bank for Actinidia arguta of Jilin Agricultural University, located in Changchun, Jilin Province, China, as illustrated in Figure 1.

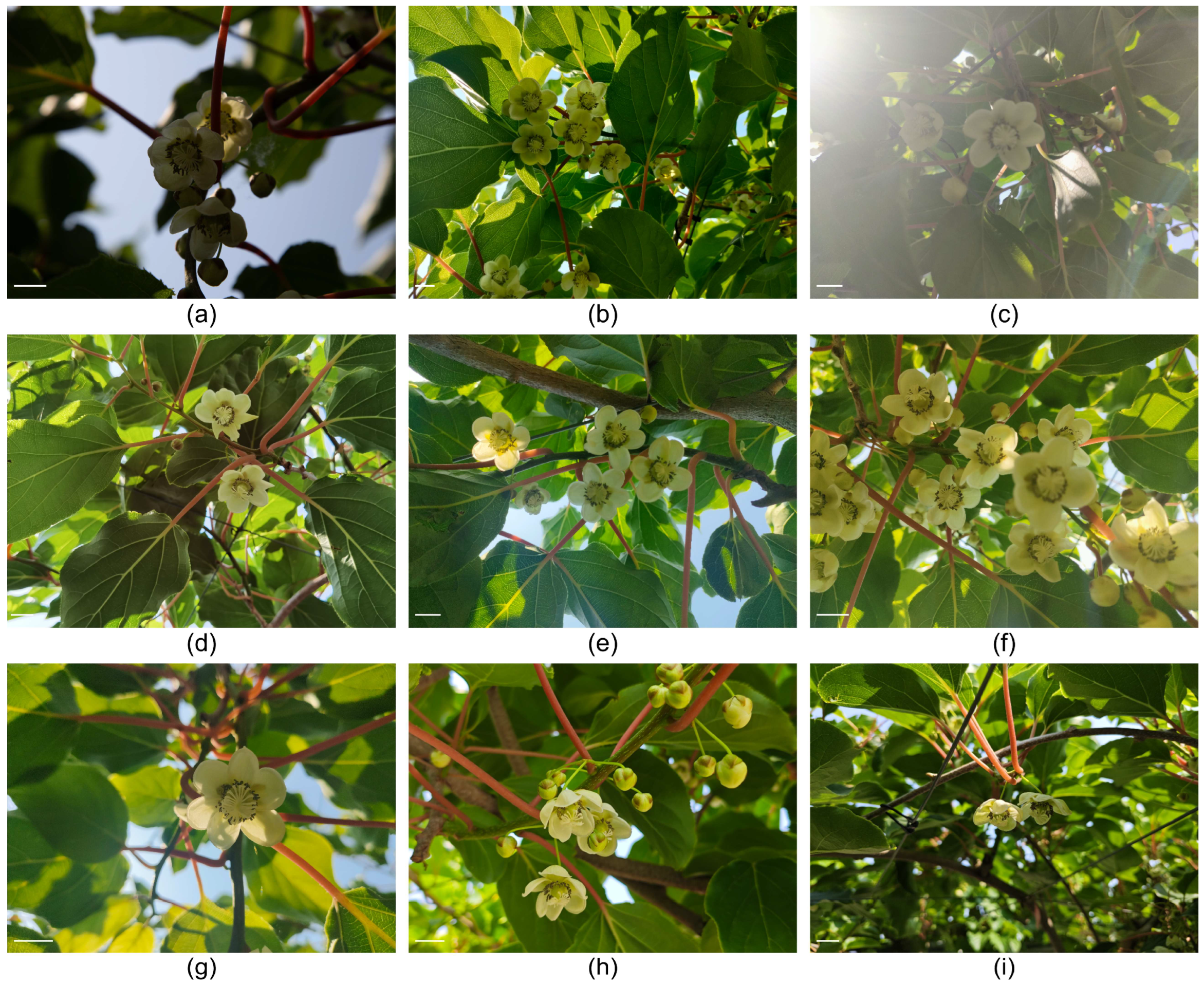

In kiwiberry orchards, a T-frame cultivation structure is typically adopted, causing the flowers and buds to hang downward from the canopy. A digital single-lens reflex camera (Canon EOS 6D; Canon Inc., Tokyo, Japan) with a resolution of 5472 × 3648 was used to capture the images from an upward-facing viewpoint beneath the flowers. To ensure the model’s applicability in real orchard environments, the dataset includes images under diverse conditions, such as different illumination levels, flower densities, and degrees of occlusion. After manual screening, 1492 original images were retained, with representative examples presented in Figure 2.

2.2. Dataset Establishment and Image Data Augmentation

In this study, 1492 original images were selected and partitioned into three subsets: a training set containing 1194 images, a validation set with 149 images, and a test set with 149 images, corresponding to an 8:1:1 split ratio. The flowers and buds in the training and validation sets were manually annotated using the LabelImg 1.8.6 tool (https://github.com/tzutalin/labelImg, accessed on 11 June 2024). The annotations for flowers and flower buds (hereafter referred to as “flower” and “bud”, respectively) were labeled accordingly. For clarity, the term “bud” in this study exclusively denotes flower buds, excluding other types of buds such as leaf or shoot buds. The annotation files were exported in XML format to support subsequent image data augmentation. The overall annotation workflow is shown in Figure 3.
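The 8:1:1 partition described above can be sketched as follows. This is only an illustration of how the reported subset sizes arise from the ratio; the function names, the rounding rule, and the fixed random seed are assumptions, not the authors' actual procedure.

```python
import random


def split_sizes(total, ratios=(0.8, 0.1, 0.1)):
    """Return (train, val, test) counts for the given split ratios."""
    train = round(total * ratios[0])
    val = round(total * ratios[1])
    test = total - train - val  # the remainder goes to the test set
    return train, val, test


def split_dataset(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle items reproducibly and cut them into three disjoint subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seed value is a hypothetical choice
    n_train, n_val, _ = split_sizes(len(items), ratios)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


print(split_sizes(1492))  # (1194, 149, 149)
```

With 1492 images, the rounded counts match the 1194/149/149 subsets reported in the text.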

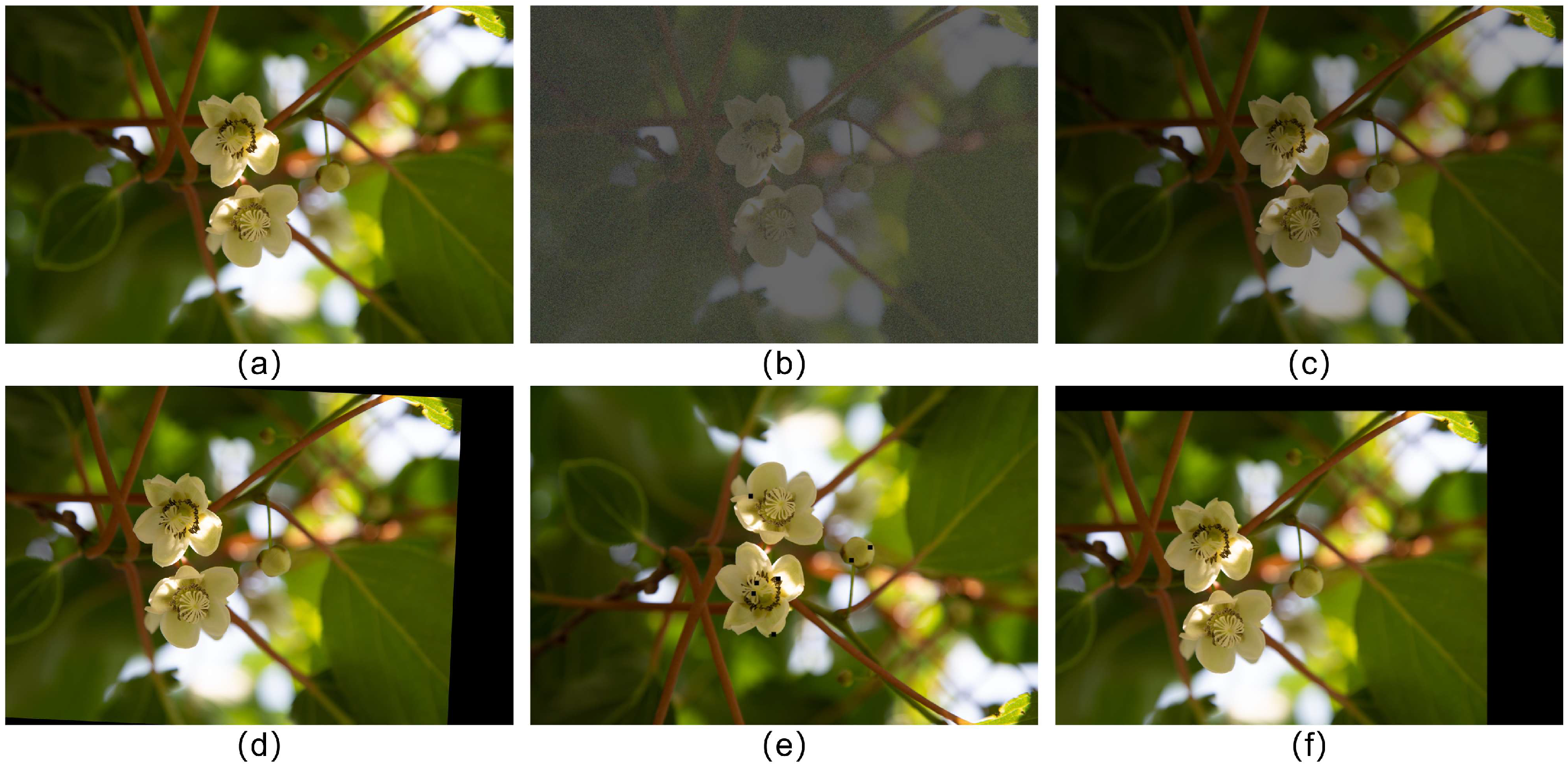

To mitigate overfitting arising from the limited dataset size and to enhance the model’s generalization and robustness, data augmentation operations were applied solely to the training set images [27]. These operations included adding noise, rotation, translation, flipping, brightness adjustment, cutout, and their combinations. Figure 4 illustrates examples of the original image and some of the augmented images. The addition of noise and the cutout method simulate common disturbances and object occlusions in orchards. Image rotation, flipping, and translation simulate the diverse conditions encountered by detection devices in orchard environments. In addition, brightness adjustment was used to reduce the influence of illumination variability on detection performance [28]. Following these data augmentation procedures, the training set increased from 1194 labeled images to 4776 images, resulting in a total of 5074 images in the complete dataset. The validation and test sets were kept unaltered and did not undergo any augmentation, ensuring an objective and unbiased assessment of the model’s generalization performance on unseen data. Furthermore, all annotation files were converted from XML to the TXT format required by the YOLO model.
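The XML-to-TXT conversion mentioned above can be sketched as below, assuming the XML files follow the Pascal VOC layout that LabelImg writes (pixel corner coordinates) and that the target is the usual YOLO text layout (class index followed by normalized center x, center y, width, and height). The class-index mapping and function names are hypothetical; the authors' actual script is not described in the text.

```python
import xml.etree.ElementTree as ET

# Hypothetical class-index mapping; the actual ordering is not given in the text.
CLASS_IDS = {"flower": 0, "bud": 1}


def voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert pixel corner coordinates to a normalized (xc, yc, w, h) box."""
    x_c = (xmin + xmax) / 2.0 / img_w
    y_c = (ymin + ymax) / 2.0 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return x_c, y_c, w, h


def convert_annotation(xml_text):
    """Return one YOLO-format text line per annotated object in a VOC XML string."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = int(size.find("width").text)
    img_h = int(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        label = obj.find("name").text
        bb = obj.find("bndbox")
        box = [float(bb.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")]
        x_c, y_c, w, h = voc_to_yolo(*box, img_w, img_h)
        lines.append(f"{CLASS_IDS[label]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}")
    return lines


SAMPLE = """<annotation>
  <size><width>1000</width><height>800</height><depth>3</depth></size>
  <object><name>bud</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>300</xmax><ymax>400</ymax></bndbox>
  </object>
</annotation>"""

print(convert_annotation(SAMPLE))  # ['1 0.200000 0.375000 0.200000 0.250000']
```

Because the YOLO coordinates are normalized by image size, the same label line stays valid if an image is uniformly resized.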

2.3. Overview of the YOLO11 Model

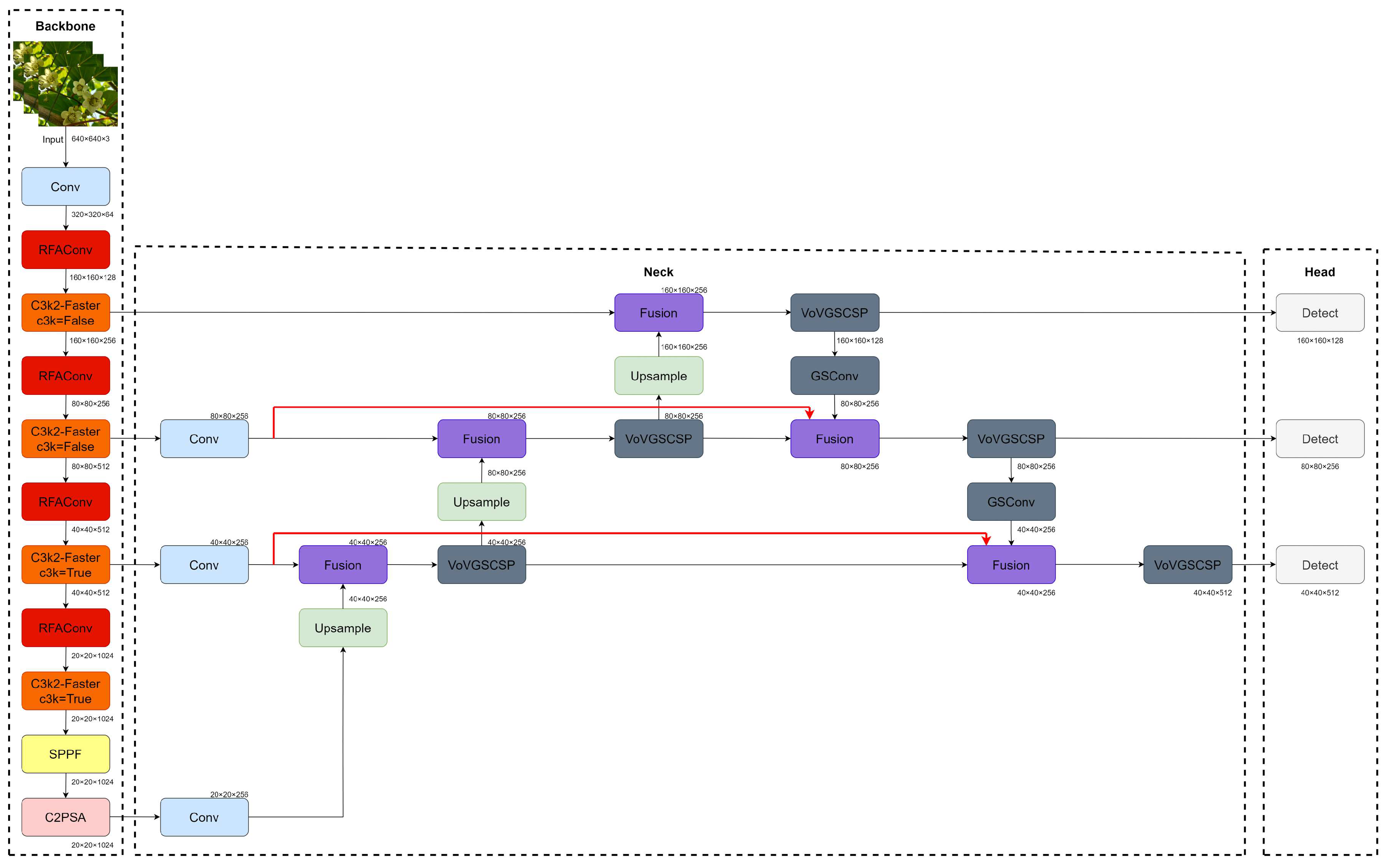

YOLO11, the latest efficient model released by Ultralytics (Frederick, MD, USA), inherits the strengths of previous YOLO versions while incorporating new architectural refinements. These improvements enhance inference speed and reduce model complexity without compromising detection accuracy, making YOLO11 well-suited for real-time application scenarios. It supports multiple computer vision tasks, including object detection, instance segmentation, image classification, and pose estimation, placing it among current state-of-the-art models. Its overall architecture remains consistent with earlier YOLO versions, consisting of a backbone, neck, and detection head, as shown in Figure 5. YOLO11 introduces several architectural improvements over YOLOv8, which are summarized as follows:

- (1)

Firstly, YOLO11 introduces the C3k2 module to replace the original C2f module. The C3k2 module is derived from the Cross Stage Partial (CSP) bottleneck design, providing improved flexibility and computational efficiency compared to C2f. Its structural adaptability is controlled by the c3k parameter: when c3k = False, the structure of C3k2 is similar to that of C2f; when c3k = True, the bottleneck within C3k2 is replaced by the C3k structure, enabling the extraction of more complex features [29].

- (2)

Secondly, YOLO11 retains the Spatial Pyramid Pooling-Fast (SPPF) module and integrates the Cross Stage Partial Bottleneck with 2 Convolutions and Position-Sensitive Attention (C2PSA) module. In this structure, multiple Position-Sensitive Attention (PSA) units are stacked to enable the network to more effectively focus on critical spatial regions in the image, thereby enhancing the ability to learn and distinguish informative features.

- (3)

Finally, YOLO11 continues to employ a decoupled head architecture, in which classification and localization are processed separately. Within the classification branch, depthwise separable convolutions are used instead of standard convolutional layers, which reduces parameter count and computational overhead while maintaining effective cross-channel feature interaction. As a result, the overall model becomes more lightweight. Therefore, YOLO11 is adopted as the baseline model in this study.
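To make the parameter saving of depthwise separable convolution in item (3) concrete, the following sketch compares weight counts for a standard convolution and its depthwise separable counterpart. The channel count of 128 and the 3 × 3 kernel are illustrative assumptions rather than the actual head configuration of YOLO11, and bias terms are ignored.

```python
def conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def dw_separable_params(c_in, c_out, k):
    """Weights of a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixes channels
    return depthwise + pointwise


standard = conv_params(128, 128, 3)
separable = dw_separable_params(128, 128, 3)
print(standard, separable)              # 147456 17536
print(round(separable / standard, 3))   # 0.119
```

The pointwise 1 × 1 step is what keeps cross-channel interaction, which is why the reduction does not isolate channels from one another.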

2.4. YOLO11s-RFBS

Although YOLO11 performs effectively in general object detection applications, its performance is affected in kiwiberry orchard environments due to the flowers and buds exhibiting variations in size, highly similar color tones, dense clustering, and frequent occlusions. These factors lead to reduced detection accuracy, indicating the need for further optimization. Moreover, since the model is expected to be deployed on edge devices, it is essential to balance model complexity, computational efficiency, and detection accuracy.

Based on a comprehensive evaluation, YOLO11s was selected as the baseline model and further improved to form the enhanced YOLO11s-RFBS. The principal improvements are summarized as follows:

- (1)

The original P5 detection head in YOLO11s is replaced with a P2 detection head, while the P3 and P4 detection heads remain unchanged. Notably, the P5 layer continues to participate in the feature fusion process but is no longer directly connected to the detection branch. Since kiwiberry flowers and buds are small and densely distributed, the low-resolution P5 feature map may result in the loss of fine-grained details. Employing the higher-resolution P2 detection head allows the model to capture more subtle features while simultaneously reducing model parameters and overall size, making it more suitable for the detection task in this study.

- (2)

The standard convolution blocks in the backbone are replaced with RFAConv. By integrating receptive-field-aware spatial attention, RFAConv dynamically reweights spatial features, effectively addressing parameter-sharing limitations in convolution kernels and improving the network’s capacity to represent complex visual information.

- (3)

A lightweight feature extraction module, C3k2-Faster, based on partial convolution (PConv), is introduced to replace the original C3k2 module. This modification reduces redundant computation and memory access while maintaining effective feature extraction capability, contributing to a more efficient backbone.

- (4)

Furthermore, BIFPSNN is adopted as the neck module, enabling adaptive multi-scale feature fusion through learnable weighting while maintaining a lightweight architecture.

Figure 6 illustrates the overall architecture of the proposed YOLO11s-RFBS model, in which four major improvements are incorporated: RFAConv in the backbone, the lightweight C3k2-Faster module, the BIFPSNN structure in the neck, and a revised detection head configuration where a higher-resolution P2 head replaces the original P5 head while the P3 and P4 heads are preserved. The internal composition and operational principles of these modules are further shown in Figures 7, 8, and 10–15 (Figure 9 shows the visualization of feature maps at an intermediate stage of the YOLO11s backbone).

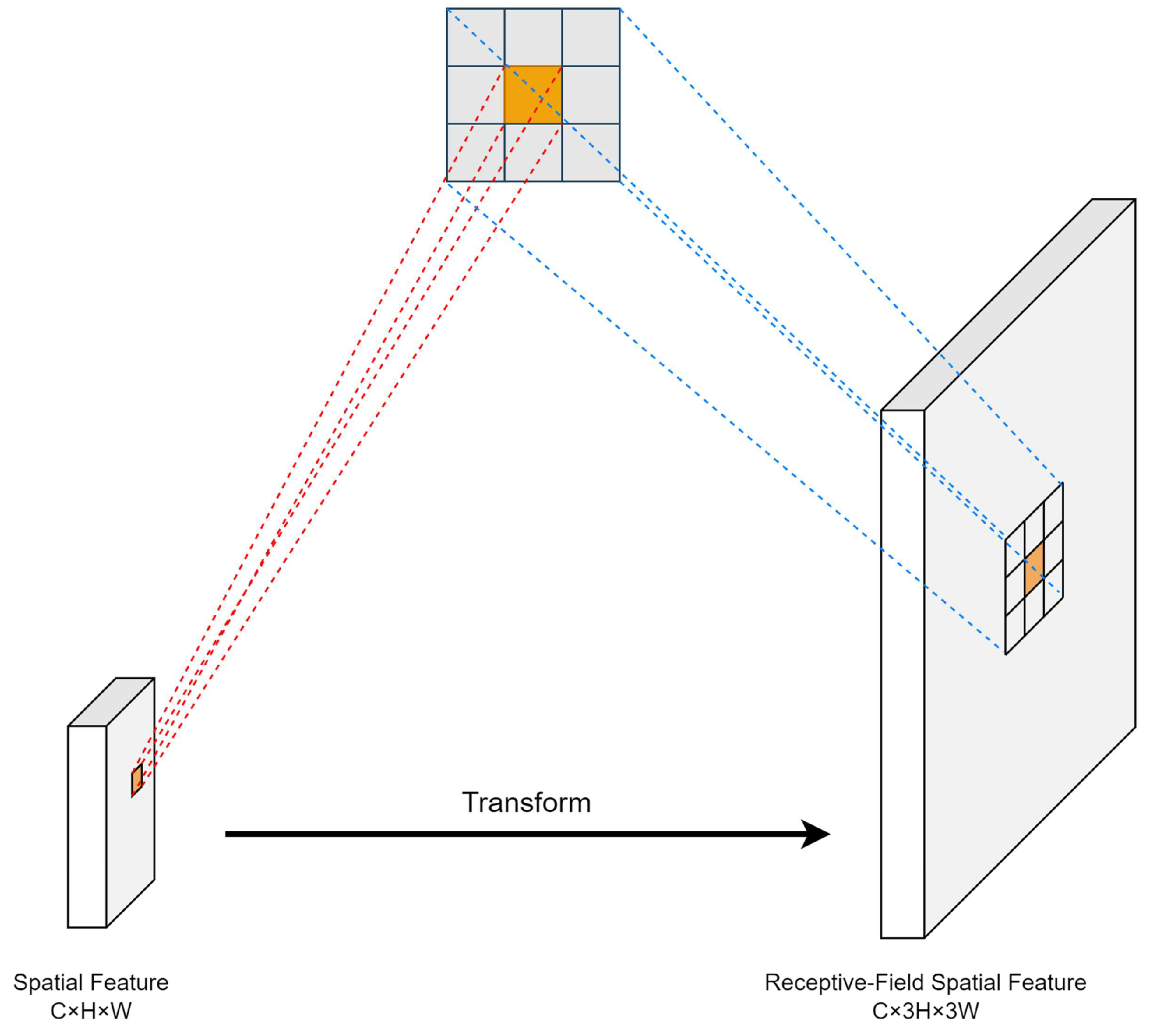

2.5. RFAConv

The detection of kiwiberry flowers and buds is challenging due to their dense clustering, variation in blossom size, high similarity to the background, and frequent occlusions, which collectively increase the complexity of the detection task. In conventional convolutional neural networks (CNNs), the use of shared convolution kernels across spatial positions does not sufficiently model position-dependent feature differences, thereby limiting the network’s ability to capture subtle and fine-grained details in densely distributed floral regions. To mitigate this issue, RFAConv [30] is introduced in this study. By incorporating receptive-field attention (RFA), RFAConv refines feature weighting across different spatial locations, enabling more effective feature discrimination while requiring only minimal additional parameters and computational overhead. The operating mechanism of RFAConv is shown in Figure 7.

The primary advantage of RFAConv lies in its ability to embed RFA into the convolution process. The RFA mechanism evaluates spatial context within the receptive field and dynamically assigns weights to different spatial regions, enabling selective feature enhancement during convolution. This mechanism effectively alleviates the parameter-sharing limitation in conventional convolution operations and addresses the difficulty of modeling spatial variability when using large convolution kernels (e.g., 3 × 3). To further clarify the concept of receptive-field spatial features, a detailed explanation is provided in Figure 8. Considering a 3 × 3 convolution kernel, the spatial features correspond to the features extracted from the original input feature map, while the receptive-field spatial features represent the features obtained after applying receptive-field-aware attention. These transformed features are derived through multiple non-overlapping sliding windows, each corresponding to a 3 × 3 region, aligned with the receptive field utilized during convolution.

In RFAConv, grouped convolutions are employed to efficiently extract receptive-field spatial features, mapping the original feature representation into a new feature domain. Performance is enhanced by learning attention mappings that describe the interaction among receptive-field features. To reduce computational overhead and parameter count, average pooling (AvgPool) is used to aggregate global information within each receptive-field region, followed by 1 × 1 grouped convolutions to integrate information across receptive fields. A Softmax function is then applied to highlight the relative importance of different spatial regions within the receptive field. The computation of RFAConv is defined in Equation (1):

$$F = \mathrm{Softmax}\left(g^{1 \times 1}\left(\mathrm{AvgPool}(X)\right)\right) \times \mathrm{ReLU}\left(\mathrm{Norm}\left(g^{k \times k}(X)\right)\right) = A_{rf} \times F_{rf} \tag{1}$$

where $g^{i \times i}$ denotes a grouped convolution of kernel size $i \times i$, $k$ is the size of the convolution kernel, and Norm represents normalization. $X$ is the input feature map, $A_{rf}$ is the learned attention map, and $F_{rf}$ is the transformed receptive-field spatial feature. The resulting feature map consists of non-overlapping receptive-field regions, with size $3H \times 3W$ for an $H \times W$ input when $k = 3$, where the height and width are three times those of the input feature map. Thus, a 3 × 3 convolution kernel with a stride of 3 is applied to extract receptive-field features efficiently. The attention map learned by RFAConv adaptively determines the contribution of each receptive-field region and dynamically reweights feature responses. This mechanism enables the model to better capture fine-scale flower and bud details, thereby improving detection accuracy.
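The unfold, reweight, and stride-3 convolution steps can be illustrated with a deliberately simplified, single-channel sketch in plain Python. It is not the RFAConv implementation: the learned grouped convolutions, normalization, and ReLU are omitted, and `logits_fn` is a hypothetical stand-in for the learned attention branch (AvgPool followed by a 1 × 1 grouped convolution). It only shows how an H × W map becomes a 3H × 3W map of non-overlapping 3 × 3 blocks, and how Softmax weights inside each block act before the stride-3 kernel is applied.

```python
import math


def unfold_3x3(x):
    """Rearrange an H x W map into a 3H x 3W map of non-overlapping 3 x 3 blocks.

    Block (i, j) holds the zero-padded 3 x 3 receptive field centered on x[i][j].
    """
    h, w = len(x), len(x[0])
    out = [[0.0] * (3 * w) for _ in range(3 * h)]
    for i in range(h):
        for j in range(w):
            for di in range(3):
                for dj in range(3):
                    r, c = i + di - 1, j + dj - 1
                    if 0 <= r < h and 0 <= c < w:
                        out[3 * i + di][3 * j + dj] = float(x[r][c])
    return out


def softmax(values):
    """Numerically stable softmax over a flat list."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def rfa_conv(x, kernel, logits_fn):
    """Apply per-block attention, then a 3 x 3 kernel with stride 3."""
    h, w = len(x), len(x[0])
    f_rf = unfold_3x3(x)  # receptive-field spatial features (identity transform here)
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            block = [f_rf[3 * i + di][3 * j + dj] for di in range(3) for dj in range(3)]
            a_rf = softmax(logits_fn(block))  # attention over the 9 positions
            out[i][j] = sum(
                a * f * kernel[n // 3][n % 3]
                for n, (a, f) in enumerate(zip(a_rf, block))
            )
    return out
```

With constant logits, every position in a block receives weight 1/9, so the result reduces to an ordinary zero-padded 3 × 3 convolution scaled by 1/9. Non-uniform logits are what let different positions inside the same receptive field contribute differently, which is the departure from plain parameter sharing described in the text.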

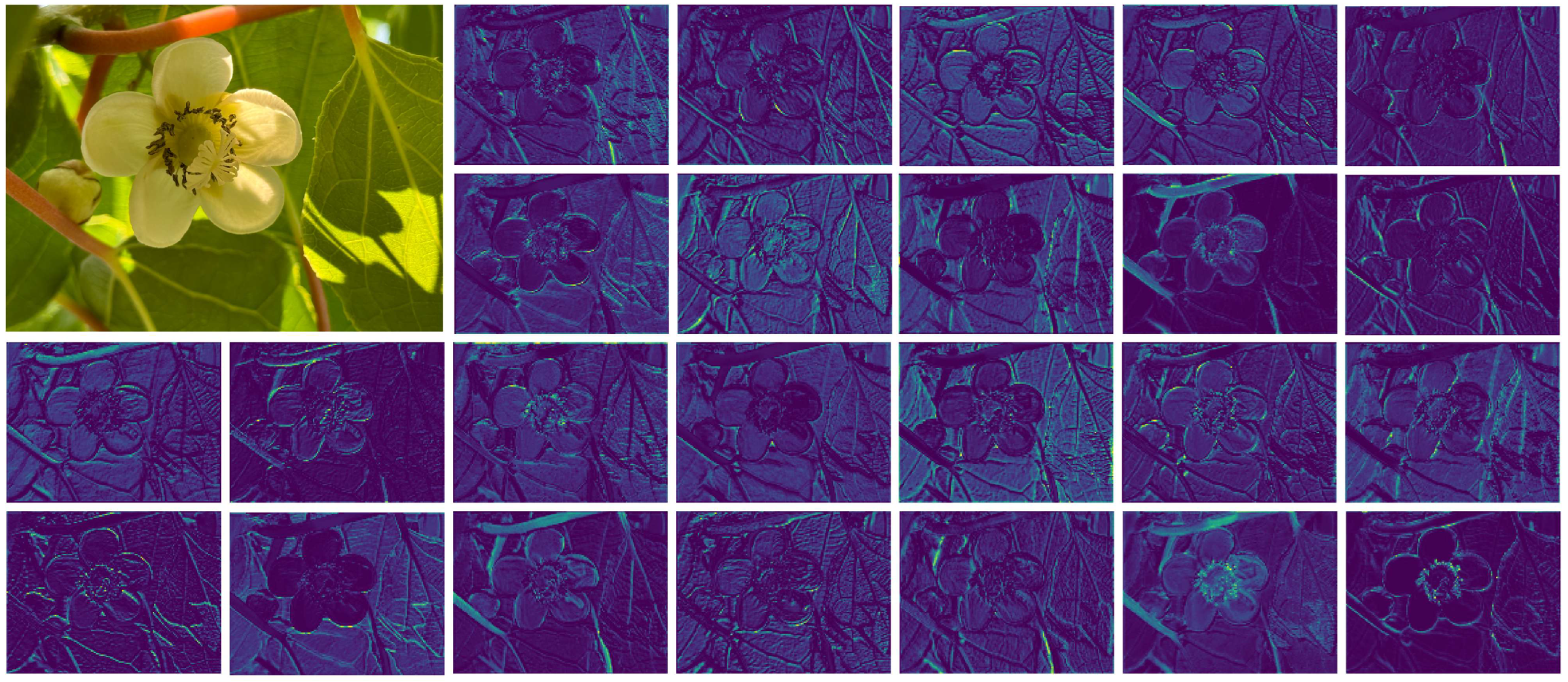

2.6. C3k2-Faster

In the intermediate layers of the YOLO11s backbone, the feature maps of kiwiberry flowers and buds were examined across different channels. The analysis shows that many channels produce highly similar feature responses, as illustrated in Figure 9. Such channel-wise similarity indicates feature redundancy, which is undesirable for the task of detecting kiwiberry flowers and buds.

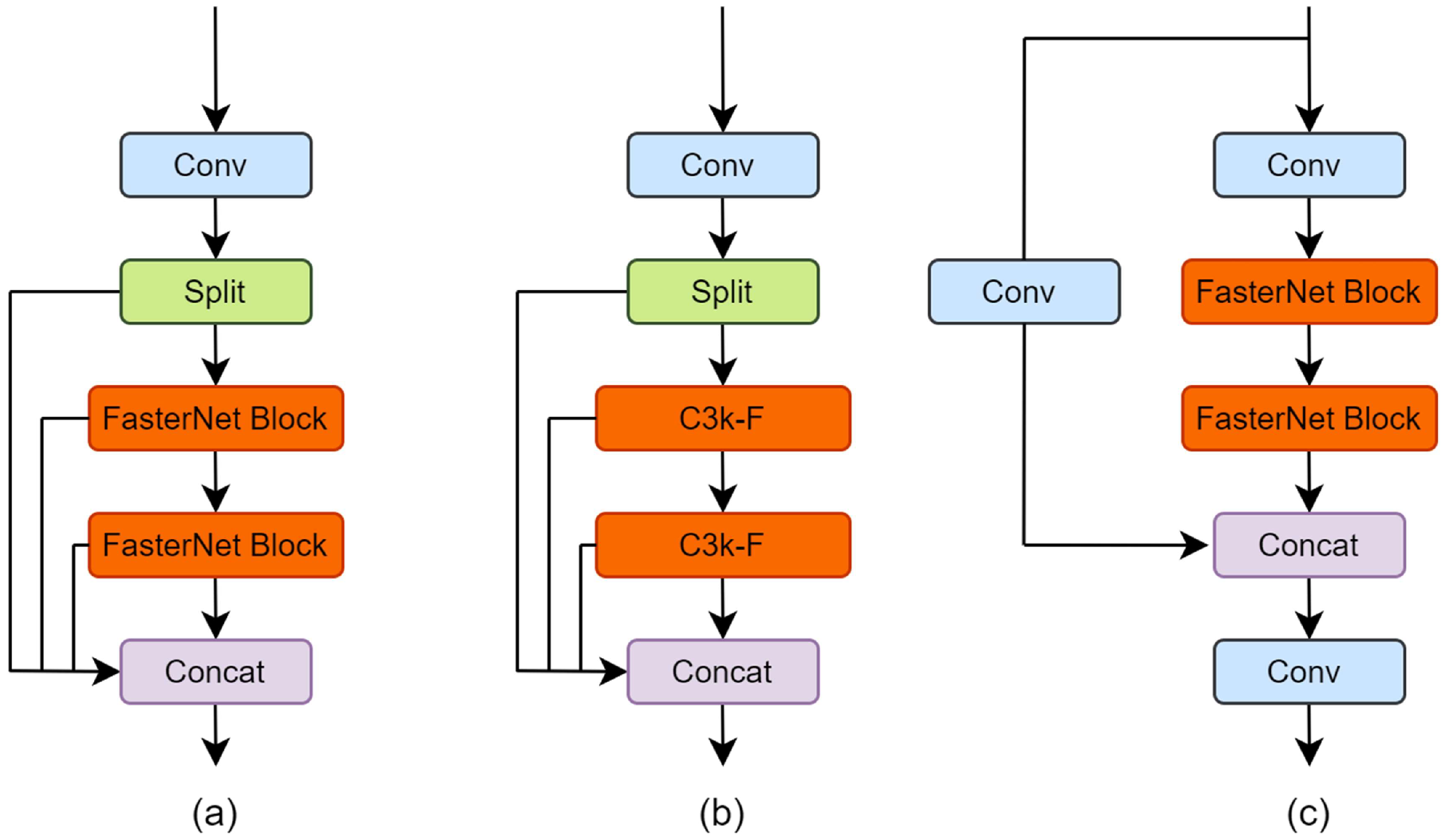

To address the channel redundancy and further reduce computational overhead, this study applies PConv [31], as illustrated in Figure 10a. PConv selects only a subset of input channels to represent the feature information and applies standard convolution exclusively to these selected channels, while the remaining channels are passed through unchanged. This strategy significantly lowers the number of floating-point operations (FLOPs) and memory access requirements compared to conventional convolution. When the input and output feature maps both have $c$ channels and spatial size $h \times w$, and a $k \times k$ convolution is applied to $c_p$ of these channels, the FLOPs and memory access of PConv are given in Equations (2) and (3).

$$\mathrm{FLOPs}_{\mathrm{PConv}} = h \times w \times k^2 \times c_p^2 \tag{2}$$

$$\mathrm{MAC}_{\mathrm{PConv}} = h \times w \times 2c_p + k^2 \times c_p^2 \approx h \times w \times 2c_p \tag{3}$$

When the partial channel proportion $r = c_p / c$ is configured to $1/4$, the computation required by PConv is compressed to approximately $1/16$ of that of a full standard convolution, and the corresponding memory access overhead is similarly reduced to about $1/4$ of the standard convolution operation.
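The reduction factors can be checked numerically with a small sketch based on the FasterNet cost model for PConv (FLOPs proportional to the square of the convolved channel count, memory access dominated by reading and writing the convolved channels). The feature-map size of 80 × 80 and the 64-channel width are arbitrary illustrative values, not figures from this study.

```python
def conv_flops(h, w, k, c):
    """FLOPs of a k x k convolution over c input and c output channels."""
    return h * w * k * k * c * c


def conv_mem_access(h, w, k, c):
    """Approximate memory access: read input, write output, read weights."""
    return h * w * 2 * c + k * k * c * c


h, w, k, c = 80, 80, 3, 64   # illustrative sizes (assumed)
c_p = c // 4                 # partial ratio r = 1/4

# PConv is a regular convolution restricted to c_p channels.
flops_ratio = conv_flops(h, w, k, c_p) / conv_flops(h, w, k, c)
mem_ratio = conv_mem_access(h, w, k, c_p) / conv_mem_access(h, w, k, c)

print(flops_ratio)           # 0.0625
print(round(mem_ratio, 3))   # 0.242
```

The FLOPs ratio is exactly r² = 1/16, while the memory access ratio is only approximately r = 1/4 because the weight term shrinks quadratically.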

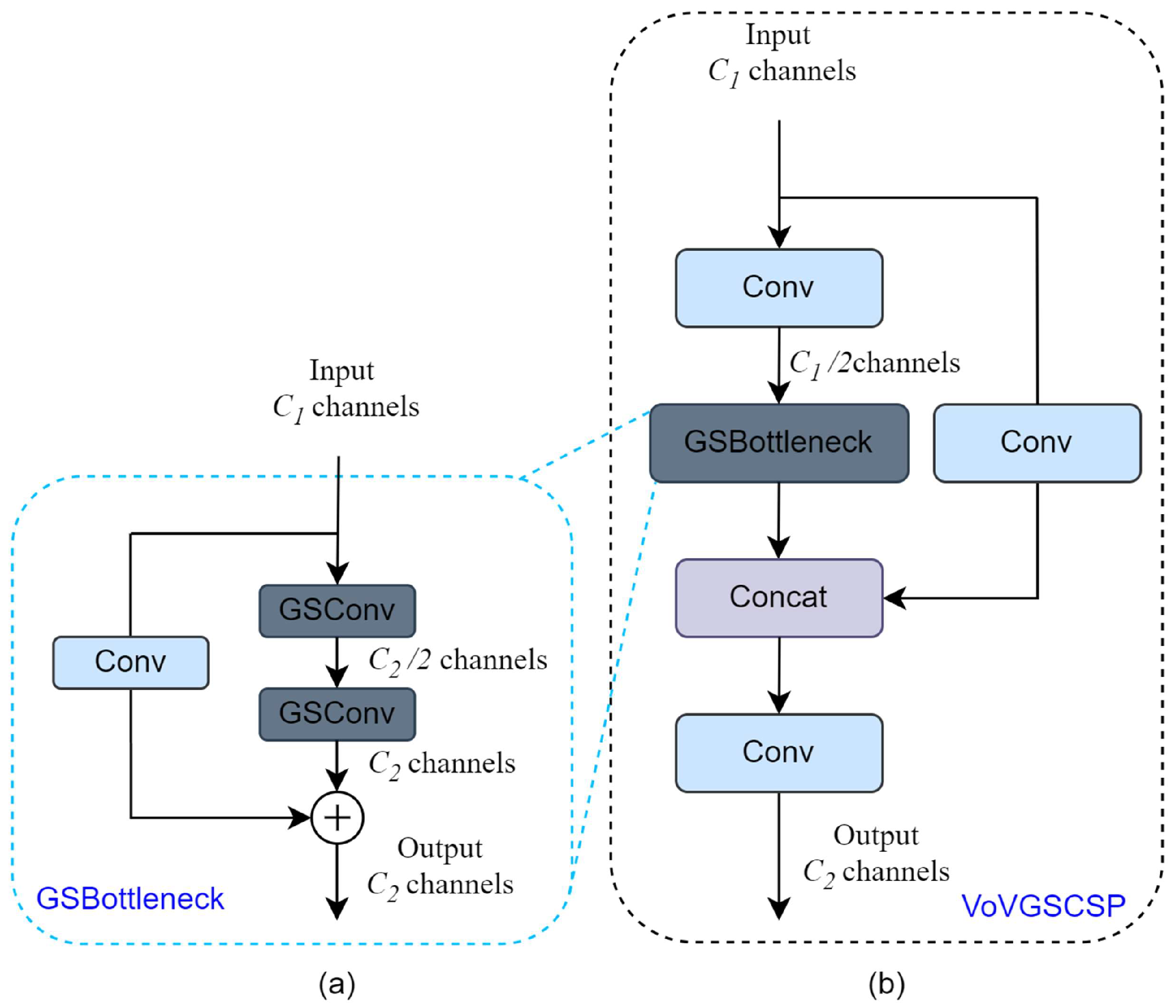

To further improve the feature extraction efficiency of the C3k2 module while maintaining its feature extraction capability, this study replaces the bottleneck module in C3k2 with the FasterNet Block integrated with PConv. The FasterNet Block structure, shown in Figure 10b, consists of a PConv and two 1 × 1 standard convolutions. It also employs a shortcut connection to facilitate the reuse of input features. Normalization and activation layers are used only after the middle 1 × 1 standard convolution, which both preserves feature diversity and lowers latency. The proposed feature extraction module is named C3k2-Faster, and its structure is shown in Figure 11. The C3k2-Faster module retains the flexibility of the original C3k2 module: its structure varies with the c3k value, allowing it to adapt to feature extraction tasks of varying complexity. By reducing FLOPs and memory access, C3k2-Faster not only lowers the model’s complexity but also enables more efficient extraction of features relevant to kiwiberry flowers and buds.


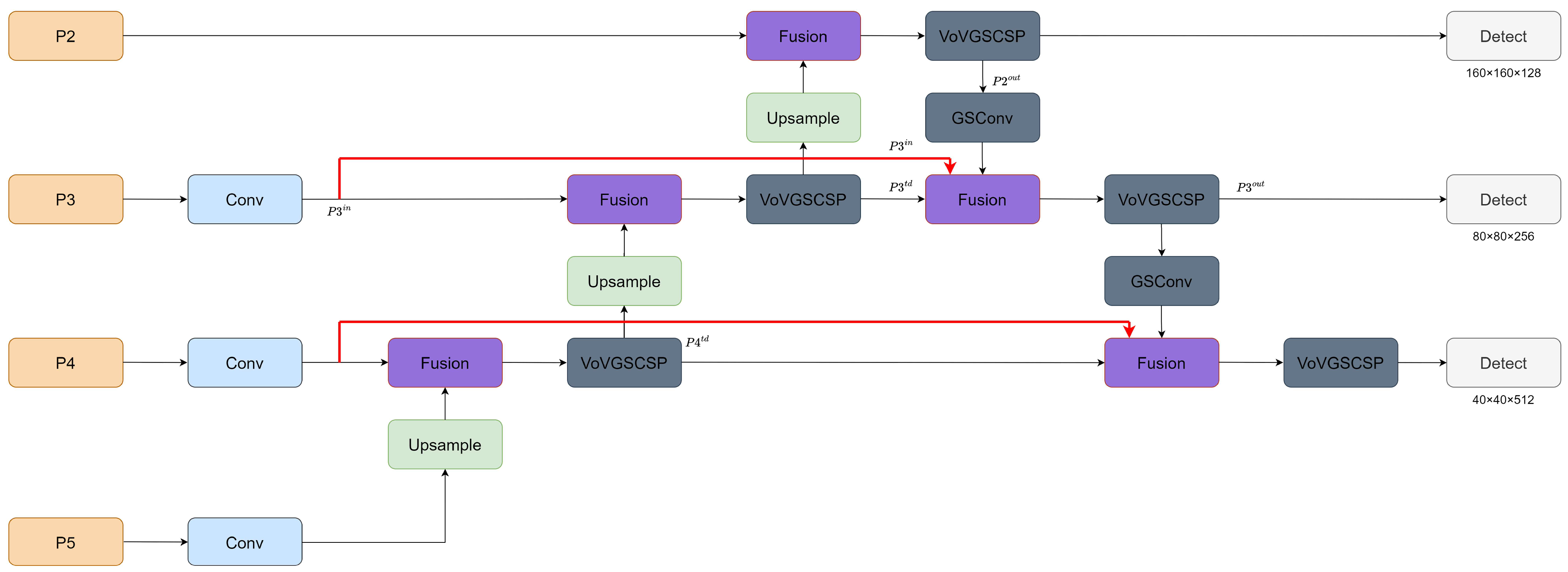

2.7. BIFPSNN

In the baseline YOLO11s architecture, the neck utilizes the Path Aggregation Network (PAN) [32] to enhance information interaction between low-level and high-level features through top-down and bottom-up pathways.

However, due to the differences in spatial resolution among the input feature maps, their contributions to the fused output are not evenly balanced [33]. Analysis indicates that the traditional linear aggregation strategy in PAN often causes high-level features to dominate the fusion process, while low-level details are suppressed. This imbalance can result in missed or inaccurate detection of small and densely distributed kiwiberry flowers and buds. In addition, considering the computational limits and deployment constraints of real orchard pollination systems, the neck network must also maintain a lightweight structure. To overcome these issues, this study introduces the BIFPSNN. The design is inspired by the bidirectional feature aggregation mechanism of BiFPN proposed by Tan et al. in EfficientDet [34], and incorporates the lightweight network design strategy of Slim-neck. This structure enhances multi-scale feature fusion and improves detection accuracy, while also reducing computational overhead, making it better aligned with practical deployment in orchard environments. The structural layout of the designed neck module is illustrated in Figure 12.

2.7.1. Improved BiFPN

BiFPN is built upon the PAN framework and provides a more efficient bidirectional multi-scale feature aggregation pathway. Unlike PAN, BiFPN introduces learnable fusion weights, allowing the network to dynamically regulate the contribution of each input feature map. Through this adaptive weighting mechanism, the network can appropriately emphasize features at different scales, thereby alleviating the imbalance problem commonly encountered in traditional PAN-based fusion. In addition, BiFPN constructs extra lateral connections between input and output nodes, enabling the integration of a greater number of feature maps without causing a notable rise in computational overhead. In this study, a fast normalization-based weighted fusion strategy is adopted to perform the feature aggregation, as expressed in Equation (4).

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i \tag{4}$$

In this formulation, $w_i$ and $w_j$ denote learnable fusion weights, $I_i$ corresponds to the input feature maps, and $O$ denotes the resulting fused output. To guarantee that the fusion weights remain non-negative ($w_i \geq 0$), a ReLU activation is applied to each learnable weight. Furthermore, a small constant $\epsilon$ is included in the denominator to prevent numerical instability.
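A minimal sketch of the fast normalized fusion rule follows, with feature maps flattened to plain lists for brevity. The default value of `eps` is an assumption (the text only states that a small constant is used), and the function name is hypothetical.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature maps using ReLU-clipped, sum-normalized weights.

    features: list of flat lists, one per input feature map (same length).
    weights:  one learnable scalar per input feature map.
    """
    clipped = [max(0.0, w) for w in weights]  # ReLU keeps weights non-negative
    denom = eps + sum(clipped)                # eps avoids division by zero
    length = len(features[0])
    return [
        sum(w * feat[p] for w, feat in zip(clipped, features)) / denom
        for p in range(length)
    ]


low_level = [1.0, 1.0]
high_level = [3.0, 3.0]
print(fast_normalized_fusion([low_level, high_level], [1.0, 1.0], eps=0.0))   # [2.0, 2.0]
print(fast_normalized_fusion([low_level, high_level], [-1.0, 2.0], eps=0.0))  # [3.0, 3.0]
```

Unlike a Softmax over the weights, this normalization needs no exponentials, which is the main reason it is cheaper at inference time.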

In the standard neck design of YOLO11s, feature fusion is performed using feature maps from the P5 layer down to the P3 layer, while the P6 and P7 layers are not involved. Specifically, in this study, the P2, P3, P4, and P5 layers correspond to feature outputs from different stages of the backbone. Their spatial resolutions are approximately 1/4, 1/8, 1/16, and 1/32 of the input image, respectively. After input images are resized to 640 × 640, the spatial resolutions of these layers become approximately 160 × 160 for P2, 80 × 80 for P3, 40 × 40 for P4, and 20 × 20 for P5. As the network depth increases from P2 to P5, spatial resolution progressively decreases while semantic abstraction strengthens. Lower-level features (e.g., P2) retain more detailed spatial texture information, whereas higher-level features (e.g., P5) contain richer semantic context.
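The resolutions quoted above follow directly from the strides of each pyramid level. The short sketch below reproduces them and also counts the prediction grid cells for the original head set (P3–P5) and the modified one (P2–P4); the helper names are hypothetical.

```python
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def feature_map_sizes(input_size=640, levels=("P2", "P3", "P4", "P5")):
    """Side length of each (square) feature map for a square input."""
    return {level: input_size // STRIDES[level] for level in levels}


def grid_cells(input_size, levels):
    """Total number of spatial prediction locations over the given levels."""
    return sum(side * side for side in feature_map_sizes(input_size, levels).values())


print(feature_map_sizes())                   # {'P2': 160, 'P3': 80, 'P4': 40, 'P5': 20}
print(grid_cells(640, ("P3", "P4", "P5")))   # 8400
print(grid_cells(640, ("P2", "P3", "P4")))   # 33600
```

Swapping the P5 head for a P2 head therefore quadruples the number of prediction locations, which helps explain why the change favors small, densely packed targets.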

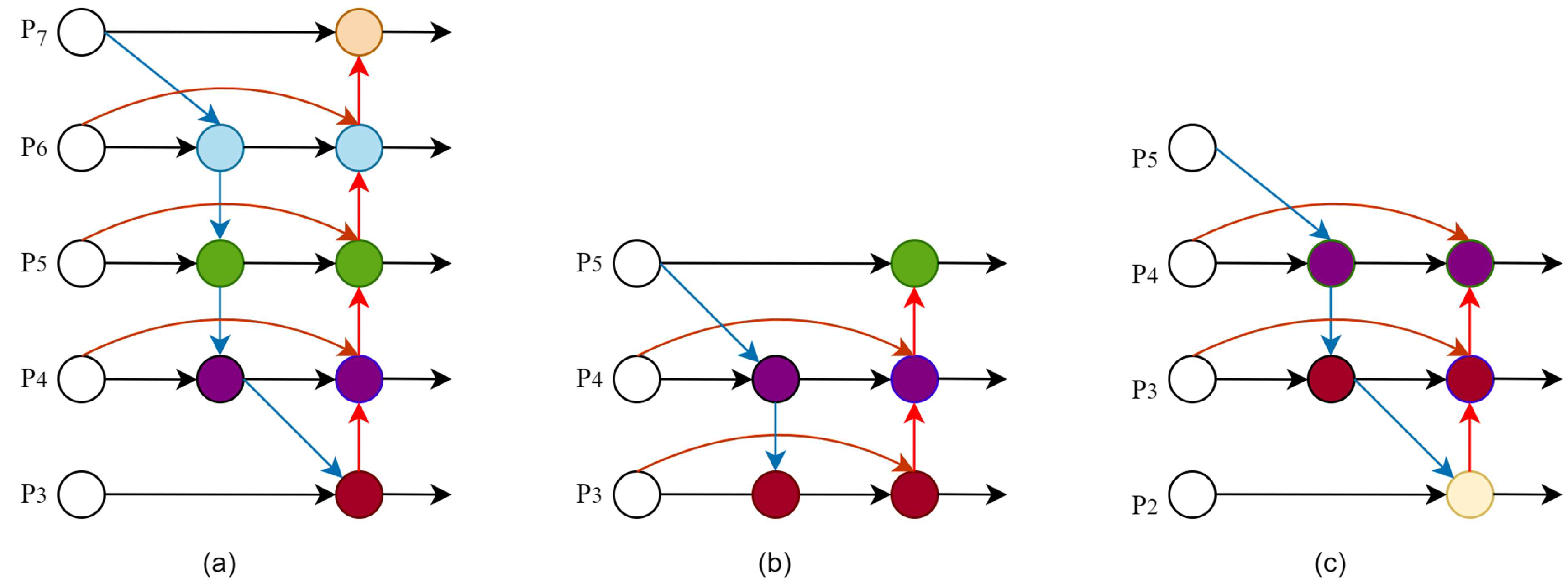

The BiFPN structure constructed on the YOLO11s neck is shown in Figure 13b. Feature fusion among the P3–P5 layers generally meets the requirements of large-scale object detection. However, for kiwiberry flower and bud detection, the targets are small, densely distributed, and structurally subtle, making P3–P5 fusion alone insufficient for capturing fine spatial details. To alleviate this limitation, an improved BiFPN is introduced, which extends the fusion pathway to include the P2 layer while retaining multi-scale semantic integration from P2–P5. The P5 layer remains part of the feature fusion process but is no longer directly connected to the detection head. The architecture of the improved BiFPN is illustrated in Figure 13c. This modification enhances the ability to utilize high-resolution spatial detail, thereby improving detection performance for small and densely clustered targets such as kiwiberry flowers and buds. Taking the third BIFPSNN layer (Figure 12) as an example, the weighted feature fusion process is described in detail, and the fused output features are formulated in Equations (5) and (6).

$$P_3^{td} = \mathrm{Block}\left(\frac{w_1 \cdot P_3^{in} + w_2 \cdot \mathrm{Resize}\left(P_4^{td}\right)}{w_1 + w_2 + \epsilon}\right) \tag{5}$$

$$P_3^{out} = \mathrm{Block}\left(\frac{w_1' \cdot P_3^{in} + w_2' \cdot P_3^{td} + w_3' \cdot \mathrm{Resize}\left(P_2^{out}\right)}{w_1' + w_2' + w_3' + \epsilon}\right) \tag{6}$$

where $P_3^{in}$ is the input feature of the third layer, $P_3^{td}$ denotes the intermediate feature of the third layer in the top-down path, and $P_3^{out}$ is the corresponding output feature in the bottom-up path. The term “Resize” refers to the upsampling or downsampling operation used to align spatial resolutions across feature maps from different layers. The term “Block” represents the convolutional module used for feature extraction; in this study, the VoVGSCSP module is utilized for this purpose. The improved BiFPN employed here integrates the P2, P3, P4, and P5 layers. By performing multi-scale weighted feature fusion, the network achieves a more balanced contribution across feature levels. As a result, fine-grained spatial information from lower-level features is better preserved, while semantic abstraction from higher-level features is effectively incorporated. This enhances the model’s capability to detect small targets and manage scenes with complex backgrounds, particularly in the detection of kiwiberry flowers and buds, ensuring that subtle details are not overlooked.

2.7.2. Construction of the Slim-Neck Lightweight Neck Using GSConv and VoVGSCSP

After improving detection performance with the enhanced BiFPN, the lightweight capability of the neck network was further strengthened by introducing Group Shuffle Convolution (GSConv) [35]. This adjustment enables the complete BIFPSNN neck architecture to better satisfy the deployment demands of edge devices and the computational resource constraints typical of practical orchard pollination scenarios.

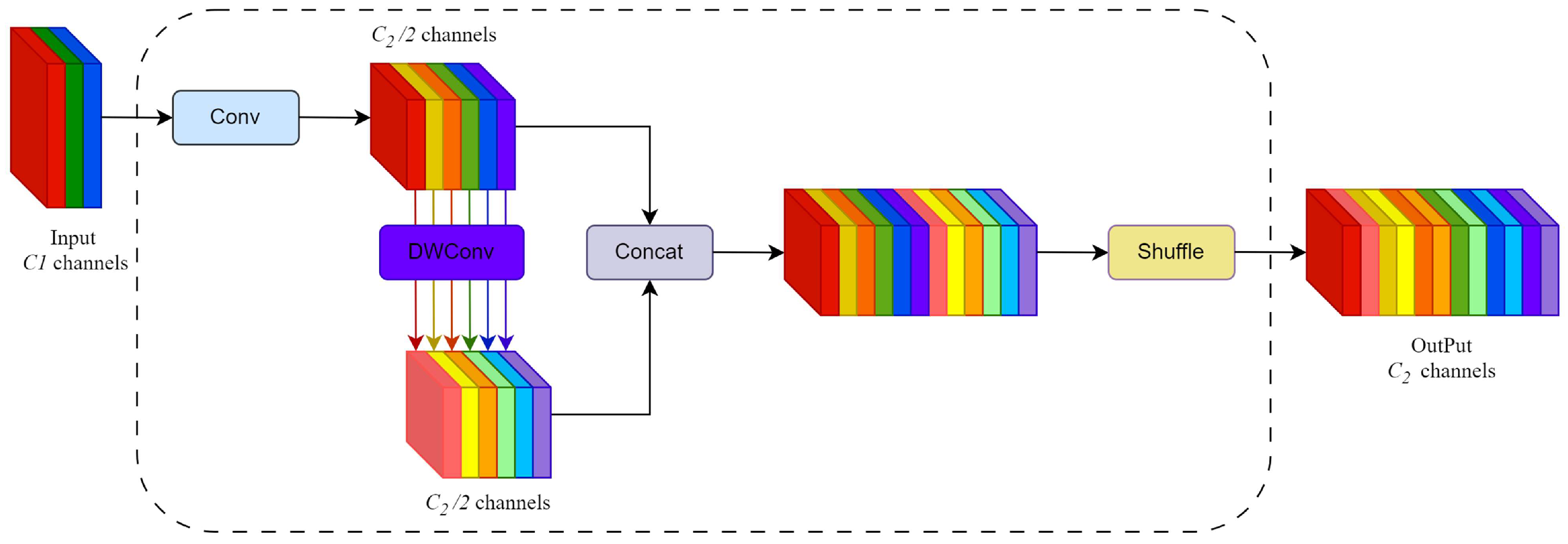

The GSConv module is depicted in Figure 14. First, an input feature map with C1 channels is processed by a standard convolution, generating an intermediate feature map with C2/2 channels. Concurrently, a depthwise convolution (DWConv) is applied to produce another feature map that maintains the same channel count. The two resulting feature maps are then concatenated and passed through a channel shuffle operation to reorganize channel information. Ultimately, the output feature map possesses C2 channels. By leveraging the representational strength of standard convolution together with the efficiency of DWConv, GSConv effectively alleviates the channel information isolation problem commonly encountered in depthwise convolution. Consequently, it achieves reduced model complexity while retaining robust feature representation and detection accuracy.
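The concatenate-then-shuffle step can be illustrated by tracking channel labels only. The sketch below uses a ShuffleNet-style interleaving with two groups; this layout is an assumption for illustration, and the exact channel ordering in the authors' GSConv implementation may differ. The function names are hypothetical.

```python
def channel_shuffle(channels, groups=2):
    """Interleave channels so that each group contributes to every output slice."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


def gsconv_channel_flow(c2):
    """Label the C2 output channels of a GSConv-like block by their origin."""
    half = c2 // 2
    dense = [f"conv{i}" for i in range(half)]    # from the standard convolution
    depthwise = [f"dw{i}" for i in range(half)]  # from the depthwise convolution
    return channel_shuffle(dense + depthwise, groups=2)


print(gsconv_channel_flow(4))  # ['conv0', 'dw0', 'conv1', 'dw1']
```

After shuffling, neighboring channels alternate between the two branches, so any later grouped or depthwise operation sees information from both the dense and the depthwise paths.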

However, GSConv is not suitable for use across every stage of the network, as excessive deployment would significantly increase network depth and computational overhead, resulting in longer inference time. When feature maps are propagated to the neck, their spatial resolution is already reduced, and the number of channels is compressed, meaning that the amount of redundant information is relatively small. Therefore, GSConv is selectively applied only within the neck to substitute the standard convolution block. Additionally, a GSBottleneck module was constructed based on GSConv, and the VoVGSCSP module was further introduced to replace the original C3k2 feature extraction module at the neck. The structural configuration is illustrated in Figure 15. According to the architectural characteristics of the YOLO11 neck, a Slim-neck structure composed of GSConv and VoVGSCSP modules was designed, forming a lightweight neck with minimal computational burden and without compromising representational capacity. This Slim-neck structure was integrated into the improved BiFPN, enabling the network to reduce model complexity while maintaining high detection accuracy for kiwiberry flower and bud recognition.

In summary, to enhance the detection of small flower buds of kiwiberry, this study systematically improves YOLO11s from four aspects: feature preservation, enhancement, representation, and fusion. Specifically, the P improvement (P2 detection head) introduces a high-resolution detection head to retain fine-grained details; the R improvement (RFAConv) emphasizes key regions under complex backgrounds; the F improvement (C3k2-Faster) streamlines feature extraction for clearer representations; and the BS improvement (BIFPSNN) balances shallow detail with deep semantic fusion. Through the synergistic interaction of these improvements, the YOLO11s-RFBS model forms a continuous enhancement process across “detail retention–region attention–effective feature representation–multi-scale fusion”. While maintaining a lightweight architecture, the model achieves more stable perception, extraction, and integration of key information from small targets, thereby significantly improving the detection precision and robustness of flowers and buds of kiwiberry in complex orchard environments.

2.8. Experimental Environment

To maintain experimental consistency, all evaluations were performed under an identical hardware configuration and software environment, as detailed in Table 1.

In addition, the training process did not utilize any pre-trained weights.

The input images were uniformly resized to 640 × 640, and the models were trained for 200 epochs with a batch size of 16. The SGD optimizer was employed, while all other hyperparameters followed the default settings of the official YOLO11 implementation. For fairness, the comparative models were also trained using their respective default parameter settings.
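The settings listed above map onto the training interface of the Ultralytics package roughly as follows. This is a minimal sketch under stated assumptions: the dataset file name `kiwiberry.yaml` is hypothetical, and building the model from an architecture file such as `yolo11s.yaml` (instead of a `.pt` checkpoint) is assumed to be how training without pre-trained weights was arranged.

```python
# Hyperparameters stated in the text; everything else is left at library defaults.
TRAIN_ARGS = {
    "imgsz": 640,         # input images resized to 640 x 640
    "epochs": 200,
    "batch": 16,
    "optimizer": "SGD",
    "pretrained": False,  # no pre-trained weights
}


def train_from_scratch(model_yaml, data_yaml):
    """Build a randomly initialized model from a YAML file and train it."""
    # Imported lazily so the settings above can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(model_yaml)
    return model.train(data=data_yaml, **TRAIN_ARGS)


# Example call (hypothetical dataset file):
# train_from_scratch("yolo11s.yaml", "kiwiberry.yaml")
```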

2.9. Evaluation Metrics

To evaluate model performance in kiwiberry flower and bud detection, widely adopted metrics in object detection were used. Model complexity was assessed based on parameter count, GFLOPs, and model size. Inference speed was measured in frames per second (FPS). For detection accuracy, precision (P), recall (R), average precision (AP), and mean average precision (mAP) were employed as quantitative evaluation indicators, with their formulations provided in Equations (7)–(10).
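In their standard form, and written with the TP, FP, and FN counts used in this section, the four accuracy metrics are as follows. The symbols $N$ (number of classes) and $AP_i$ (AP of class $i$) are notation assumed here, and the assignment of equation numbers to individual formulas is inferred from the cross-reference in the text.

```latex
\begin{align}
P   &= \frac{TP}{TP + FP} \tag{7}\\
R   &= \frac{TP}{TP + FN} \tag{8}\\
AP  &= \int_{0}^{1} P(R)\,\mathrm{d}R \tag{9}\\
mAP &= \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{10}
\end{align}
```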

Here, True Positives (TP) refer to correctly recognized targets, False Negatives (FN) indicate actual targets that are not detected, and False Positives (FP) represent non-targets that are mistakenly identified as targets. Average Precision (AP) reflects the detection accuracy for an individual class, whereas mean Average Precision (mAP) represents the average AP across all classes. The metric mAP@0.5 denotes the mAP calculated using an Intersection over Union (IoU) threshold of 0.5.
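A compact sketch of how these quantities can be computed is given below. It assumes boxes in `(x1, y1, x2, y2)` format and approximates AP as the area under a monotone precision envelope; evaluation toolkits differ in their interpolation scheme, so the values are not expected to match the reported results exactly.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision and recall from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(recalls, precisions):
    """Area under the PR curve; `recalls` must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (precision envelope).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# A prediction counts as a true positive at mAP@0.5 when its IoU is at least 0.5.
is_match = iou((0, 0, 2, 2), (0, 0, 2, 3)) >= 0.5
```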

3. Experiments and Results Discussion

3.1. Ablation Experiment

In this study, four improvements were introduced to the YOLO11s baseline model: (P) replacing the original P5 detection head with a P2 head while keeping the P3 and P4 heads unchanged; (R) substituting the conventional backbone convolution with RFAConv; (F) introducing the lightweight C3k2-Faster module instead of the original C3k2 structure; and (BS) integrating BIFPSNN into the neck.

To evaluate both the independent effect of each improvement and the synergistic performance gains achieved through their combination, ablation experiments were conducted by applying the improvements individually and in combination under identical datasets and experimental settings. The corresponding results are summarized in Table 2.

As shown in Table 2, substituting the P5 detection head with the P2 head while keeping the P3 and P4 heads increases the feature map resolution at the detection stage, enabling the network to capture finer structural details. Although this adjustment results in a 6.6 GFLOPs increase in computation and a 13.7% rise in the number of parameters, the model size is reduced by 20.8%. Meanwhile, precision, recall, and mAP@0.5 improve by 0.8%, 1.6%, and 1.7%, respectively, indicating that the P2 head provides better performance for identifying small and densely distributed kiwiberry flowers and buds than the P5 head. Replacing the standard convolution units in the backbone with RFAConv maintains the parameter count, computational cost, and model size, while enhancing precision, recall, and mAP@0.5 by 1.8%, 1.3%, and 1.3%, respectively. This improvement is attributed to the ability of RFAConv to leverage spatial contextual cues and strengthen discriminative feature extraction. Introducing the C3k2-Faster module reduces model parameters by 6.3%, lowers computational cost by 5.5%, and decreases model size by 1%, while precision, recall, and mAP@0.5 increase by 1.3%, 1.2%, and 1%, respectively. This demonstrates that C3k2-Faster effectively balances feature representation efficiency and network compactness. Incorporating BIFPSNN into the neck yields performance gains of 0.7%, 1.4%, and 1.2% in precision, recall, and mAP@0.5, respectively. Simultaneously, the number of parameters, computational cost, and model size are reduced by 8%, 9%, and 8%, respectively, highlighting the module’s ability to enhance multi-scale feature fusion while limiting redundancy.

The fully enhanced YOLO11s-RFBS model, formed by integrating all four improvements, yields performance gains of 0.8%, 3.4%, and 2.7% in precision, recall, and mAP@0.5, respectively. Although the computational cost increases slightly by 1.7 GFLOPs, the parameter count and model size are reduced by 33.3% and 31.8%, respectively. Overall, this integrated design achieves a notable improvement in detection accuracy while maintaining a controllable computational burden, indicating that the optimization strategy is both effective and efficient for identifying kiwiberry flowers and buds. Furthermore, the progression of performance enhancement obtained through the stepwise addition of each module is shown in Figure 16.

To further analyze the effects of each improvement module under complex scenarios, two representative scenes were selected for visual comparison: a small-object scenario and an occluded-object scenario. In the visualizations, white circles indicate false or duplicate detections, while yellow triangles denote missed detections. As shown in Figure 17, the introduction of different modules provides targeted improvements to the model’s detection performance.

In the small-object scenario shown in Figure 17a, all models exhibit a certain degree of missed detections, but their performances vary significantly. The baseline YOLO11s model shows unstable detection for small targets, with multiple missed and duplicate detections, whereas the YOLO11s-BS and YOLO11s-P models perform better, detecting more small-sized targets without false detections. The P improvement (detection head modification) enhances the model’s perception and localization of small targets by strengthening the utilization of shallow high-resolution features. The BS improvement (BIFPSNN) introduces a learnable weighting mechanism and optimizes multi-scale feature fusion, thereby enhancing the feature representation and detection stability for small-sized targets. Together, these improvements substantially enhance detection performance in small-object scenes.

In the occluded-object scenario shown in Figure 17b, all models experience varying degrees of missed detections, but the YOLO11s-R model achieves the best performance, exhibiting the fewest missed detections without false positives. The R improvement (RFAConv) introduces a receptive field attention mechanism that dynamically allocates feature weights across spatial positions, strengthening the model’s focus on key information regions and effectively improving detection robustness under occlusion and complex background conditions. Consequently, the model can accurately recognize kiwiberry flowers and buds even when partially occluded by leaves, branches, or overlapping floral structures.

In summary, the R improvement provides the most significant enhancement in detection performance under occluded scenes, while the P and BS improvements primarily improve the detection of small targets. The F improvement (C3k2-Faster) mainly contributes to overall model lightweighting and feature extraction efficiency. Each module demonstrates complementary advantages under different complex scenarios, collectively enhancing the model’s detection robustness and overall performance.

3.2. Comparison of Detection Head Configurations

To assess the performance of the proposed detection head design for kiwiberry flower and bud recognition, multiple head configurations were tested based on the YOLO11s baseline network. Specifically, YOLO11s (P3, P4, P5) represents the original detection head layout of the baseline model; YOLO11s (P2, P3, P4) corresponds to a revised setup in which the P2 head replaces P5; and YOLO11s (P2, P3, P4, P5) is an extended configuration used to explore the influence of using all four heads concurrently. The comparative findings are reported in Table 3.

The comparison results show that replacing the P5 head with the higher-resolution P2 head leads to clear improvements in precision, recall, and mAP@0.5, while reducing the number of parameters and size by 17.8% and 20.8%. Although the GFLOPs increase slightly, the additional computational load remains within an acceptable range and is compensated for by improved detection reliability, with fewer missed and false detections. Additionally, a configuration using four detection heads (P2, P3, P4, P5) was evaluated. However, incorporating the P5 head again did not produce further accuracy gains and instead increased the number of parameters, GFLOPs, and size by 26.9%, 7.0%, and 3.0%, respectively. Therefore, the P2–P3–P4 detection head configuration achieves a more favorable balance of performance and efficiency for kiwiberry flower and bud detection.
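The reason a P2 head raises computation even as parameters fall can be seen by counting prediction locations per configuration. The sketch below assumes the conventional YOLO strides of 4, 8, 16, and 32 for P2 to P5 and a 640 × 640 input; these stride values are a standard convention and are not stated in this section.

```python
# Assumed downsampling stride of each pyramid level (conventional YOLO values).
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def prediction_cells(heads, imgsz=640):
    """Total number of grid cells on which the given detection heads predict."""
    return sum((imgsz // STRIDES[h]) ** 2 for h in heads)


baseline = prediction_cells(["P3", "P4", "P5"])          # original layout
proposed = prediction_cells(["P2", "P3", "P4"])          # P2 replaces P5
all_four = prediction_cells(["P2", "P3", "P4", "P5"])    # extended layout
```

Under these assumptions the P2–P3–P4 layout predicts on four times as many grid cells as the baseline, which is consistent with higher GFLOPs, while dropping the channel-heavy P5 branch is consistent with fewer parameters. Adding P5 back contributes only 400 further cells.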

To further verify the effectiveness of the P2, P3, and P4 detection head configuration, comparative experiments were carried out by applying different detection head combinations to the YOLO11s-RFBS model. The corresponding findings are reported in Table 4.

The comparative results indicate that the P2–P3–P4 detection head configuration yields the best overall performance, with precision, recall, and mAP@0.5 increasing by 1.7%, 1.4%, and 1.7%, respectively. Meanwhile, the number of parameters and model size are reduced by 23% and 24.2%, and the additional computational cost remains minimal. This demonstrates that replacing the P5 head with P2 enhances the ability to capture high-resolution spatial detail, thereby improving the detection of small and densely clustered kiwiberry flowers and buds. By contrast, enabling all four detection heads (P2, P3, P4, P5) does not lead to further performance benefits. Instead, adding the P5 head increases parameters, computation, and size by 26.9%, 7%, and 3%, respectively, while precision increases by only 0.5% and mAP@0.5 by 0.2%, and recall remains nearly unchanged. This indicates that reintroducing P5 adds computational burden without producing meaningful accuracy gains.

Therefore, the P2–P3–P4 detection head configuration achieves a balanced compromise between recognition accuracy and computational cost, making it the most suitable setup for kiwiberry flower and bud detection.

3.3. Comparative Experiment of Object Detection Models

To more comprehensively evaluate the detection capability of the improved YOLO11s-RFBS model for kiwiberry flowers and buds, this subsection compares it with several representative object detection models, and the performance outcomes are reported in Table 5. All comparisons were carried out under identical hardware conditions, using an Intel Core i5-13600KF CPU and an NVIDIA GeForce RTX 4070 SUPER GPU. The inference speed (FPS) was measured on the GPU, ensuring the fairness and consistency of the speed evaluation across different models.

As shown in Table 5, the YOLO11s-RFBS model achieves an mAP@0.5 of 91.7%, which is slightly below Faster R-CNN (92.0%), but exceeds the performance of the other models, demonstrating a notable advantage in overall detection capability. However, Faster R-CNN relies on a highly complex architecture with 137,098,724 parameters, 370.2 GFLOPs, and an inference speed of only 13.2 FPS, which limits its suitability for real-time deployment in practical orchard environments. In contrast, YOLO11s-RFBS maintains competitive mAP@0.5 performance while substantially reducing model complexity, containing only 6,282,114 parameters, requiring 23.0 GFLOPs, and occupying 13.1 MB of storage. Furthermore, it supports a high inference speed of 90.5 FPS. These results confirm that YOLO11s-RFBS achieves a favorable trade-off between accuracy, efficiency, and model size, making it well-suited for resource-limited and real-time agricultural detection applications.
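The size of this trade-off can be made concrete with a short calculation using only the figures quoted above; the dictionary layout and variable names are illustrative.

```python
# Values quoted in the text for the two models.
faster_rcnn = {"params": 137_098_724, "gflops": 370.2, "fps": 13.2}
rfbs = {"params": 6_282_114, "gflops": 23.0, "fps": 90.5}

# How many times larger or slower Faster R-CNN is than YOLO11s-RFBS.
param_ratio = faster_rcnn["params"] / rfbs["params"]
gflops_ratio = faster_rcnn["gflops"] / rfbs["gflops"]
speedup = rfbs["fps"] / faster_rcnn["fps"]

# Accuracy given up in exchange, in percentage points of mAP@0.5.
map_gap = 92.0 - 91.7
```

Faster R-CNN therefore uses about 21.8 times the parameters and 16.1 times the GFLOPs of YOLO11s-RFBS, which in turn runs about 6.9 times faster, for a difference of roughly 0.3 percentage points in mAP@0.5.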

For precision, the SSD model attains the highest value of 96.1%, but its recall is only 68.0%, indicating that although SSD produces few false positives, it misses a substantial share of the targets. In contrast, the YOLO11s-RFBS model achieves a precision of 91.8% and a recall of 84.6%, the latter being markedly higher than that of SSD. This demonstrates that YOLO11s-RFBS can provide more stable and comprehensive target detection, effectively balancing recognition accuracy and missed-detection control. Moreover, the precision of YOLO11s-RFBS surpasses that of YOLOv7 [36], YOLOv8s, YOLOv9s [37], and EfficientDet, further confirming its advantage in detection performance among these compared models.

Regarding inference speed, the YOLO11s-RFBS model attains a speed of 90.5 FPS, which is markedly faster than models such as EfficientDet (22.4 FPS) and YOLOv5s (49.6 FPS), indicating strong real-time detection capability. Although its speed is slightly lower than that of the baseline YOLO11s, the difference remains within an acceptable range, as the improvement in detection precision and feature representation compensates for the marginal reduction. Moreover, the YOLO11s-RFBS model features the lowest parameter count and smallest model size among the compared approaches, making it highly advantageous for deployment on edge devices with limited computational and memory resources.

In addition, several models were evaluated on the test set, and representative detection outcomes are illustrated in Figure 18.

Figure 18a–d present the detection performance of kiwiberry flowers and buds under different scenarios, where erroneous and duplicate detections are marked with white circles, and missed detections are indicated by yellow triangles. In the overlapping scenario shown in Figure 18a, YOLOv7, Faster R-CNN, and YOLO11s-RFBS successfully identified the bud positioned in front of the flower, whereas the other models exhibited missed detections. Additionally, both YOLOv5s and Faster R-CNN generated erroneous detections. For the distant-target scenario in Figure 18b, only YOLOv7, YOLOv9s, Faster R-CNN, EfficientDet, and YOLO11s-RFBS were able to accurately detect the bud. In the low-light environment, all models experienced varying levels of missed detections, but the YOLO11s-RFBS model achieved the best overall accuracy, with only two targets undetected. In the dense-distribution case shown in Figure 18c, RT-DETR-l [38], YOLOv8s, YOLOv9s, Faster R-CNN, and YOLOv10s [39] all exhibited erroneous detections, with YOLOv9s additionally producing duplicate detections. In contrast, the YOLO11s-RFBS model avoided these problems entirely. For the distant-scene test in Figure 18d, all models presented both false and missed detections; however, YOLO11s-RFBS achieved the fewest errors in both aspects, confirming its superior robustness and stability in complex environments. Overall, YOLO11s-RFBS demonstrated the most reliable and accurate detection performance for kiwiberry flowers and buds across a range of environmental and visual conditions.

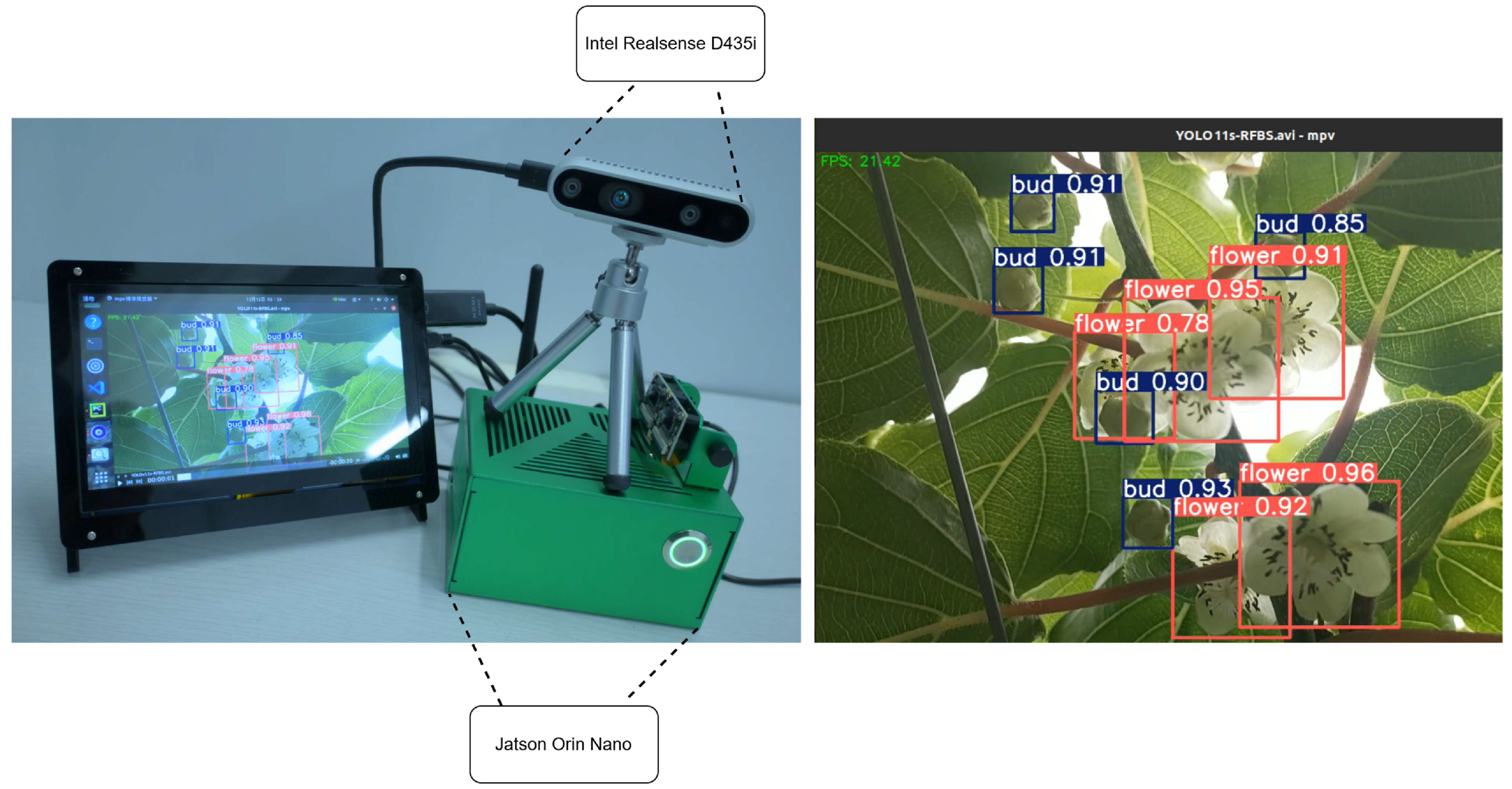

3.4. Edge Deployment Evaluation of the Improved Model

To evaluate the practical deployability of the optimized model on edge hardware, YOLO11s-RFBS was executed on a Jetson Orin Nano platform, with inference running on the device’s onboard GPU. Image data were captured using an Intel RealSense D435i depth camera (Intel Corporation, Santa Clara, CA, USA), and the detection output was visualized on a 7-inch touchscreen. The deployment setup and corresponding results are shown in Figure 19. The observations indicate that YOLO11s-RFBS can reliably complete the identification of kiwiberry flowers and buds on the Jetson Orin Nano device, maintaining stable accuracy and consistent inference performance. Moreover, the detection speed remains above 21 FPS, meeting real-time operational requirements for intelligent orchard applications.
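A throughput figure of this kind can be obtained with a simple timing loop. The sketch below is a generic harness, not the measurement procedure used in this study: `infer` stands for any callable that processes one frame, and the warm-up count is an assumed value.

```python
import time


def measure_fps(infer, frames, warmup=5):
    """Average frames per second of `infer` over `frames`, after a short warm-up."""
    for frame in frames[:warmup]:
        infer(frame)  # warm-up runs are excluded from timing
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Per-frame time implied by the reported edge and desktop speeds.
edge_ms = frame_budget_ms(21)
desktop_ms = frame_budget_ms(90.5)

# Dummy workload standing in for a detector call.
dummy_fps = measure_fps(lambda frame: sum(frame), [[1, 2, 3]] * 50)
```

At 21 FPS each frame has a budget of about 47.6 ms on the edge device, compared with about 11.0 ms per frame at the 90.5 FPS measured on the desktop GPU.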

On-device detection accuracy was also measured on the Jetson Orin Nano platform using mAP@0.5, precision, and recall. As presented in Table 6, the model reached 91.6% mAP@0.5, 91.7% precision, and 84.3% recall on the edge device. These values lie within 0.3 percentage points of the main experimental results (91.7%, 91.8%, and 84.6%, respectively), indicating that the model sustains both high inference efficiency and reliable detection accuracy when deployed on resource-limited hardware and confirming its applicability and robustness in real agricultural scenarios.

4. Discussion

4.1. Comparative Analysis with Existing YOLO-Based Architectures for Lightweight and Small-Object Detection

Within agricultural vision-based detection tasks, designing models that are both lightweight and capable of reliably identifying small targets continues to be a key research focus. Existing studies, such as YOLO-Granada [40] and ASD-YOLO [41], have introduced structural optimizations that achieved a certain balance between model complexity and detection precision.

YOLO-Granada substitutes the YOLOv5s backbone with ShuffleNetv2 and integrates the CBAM attention module, which effectively decreases parameter count and model size while accelerating inference. This design highlights the feasibility of employing YOLO frameworks in lightweight model construction. However, this model primarily targeted medium and large fruit detection tasks (e.g., pomegranates) and lacked high-resolution feature layers and cross-layer information interaction mechanisms, resulting in limited adaptability to small targets and complex backgrounds. Although the model achieved over 50% parameter compression, its mAP@0.5 decreased by approximately 0.7% compared to the baseline, indicating a loss of feature representation capability during lightweight optimization. Overall, while YOLO-Granada exhibits strong performance in lightweight design, its improvement in small-object detection and robustness under complex scenarios remains limited. ASD-YOLO, built upon the YOLOv7 framework, introduced SPD-Conv, DySample, and the L-Norm attention mechanism to enhance small-object detection under complex backgrounds. Although SPD-Conv expands the receptive field and mitigates feature loss, its optimization remains confined to the convolutional receptive field level. Meanwhile, the model still exhibits high computational complexity (59.2 GFLOPs) and parameter count (36.3 M), resulting in limited lightweight effectiveness. Moreover, the L-Norm attention mechanism is based on static normalization and lacks dynamic adaptability to illumination variations and background interference.

In contrast, the proposed YOLO11s-RFBS model emphasizes both lightweight architecture and accurate detection of small targets. The introduction of the P2 high-resolution detection head, together with the BIFPSNN structure, enhances the extraction of fine spatial cues and strengthens the interaction among multi-scale features. RFAConv incorporates receptive-field-aware spatial modulation into the backbone, enabling the network to adaptively emphasize informative regions while suppressing background noise, particularly under dense and occluded orchard conditions. Meanwhile, the C3k2-Faster module reduces unnecessary computation by employing partial convolution. Taken together, the four improvements yield a 33.3% reduction in parameters, a 31.8% decrease in model size, and a 2.7% gain in mAP@0.5 relative to the YOLO11s baseline. Overall, YOLO11s-RFBS achieves reliable recognition of small, densely distributed, and morphologically similar targets while preserving a compact model design, thereby providing a well-balanced solution in terms of detection accuracy and computational efficiency and improving its suitability for practical agricultural deployment.

4.2. Challenge-Oriented Structural Design of the YOLO11s-RFBS Model

To address the key challenges in detecting kiwiberry flowers and buds—including small object size, dense distribution, occlusion, and complex background interference—this study introduces four targeted structural optimizations based on the baseline YOLO11s model.

The detection head modification enhances the model’s ability to identify small and densely clustered targets by introducing a higher-resolution detection layer at an earlier stage. This allows the network to extract finer spatial cues from shallow features, thereby improving localization accuracy and detection reliability in dense regions. The BIFPSNN module employs a learnable feature-weighting strategy to optimize multi-scale feature fusion while maintaining a lightweight neck structure. This design facilitates the adaptive integration of low-level, detailed features with high-level semantic information, improving scene adaptability. RFAConv integrates receptive-field-aware spatial attention, enabling adaptive emphasis on informative regions and effective suppression of background clutter, which strengthens feature discrimination in visually complex orchard environments. The C3k2-Faster module reduces redundant computation through partial convolution, decreasing FLOPs and memory usage while preserving strong feature extraction capacity. This leads to more efficient representation and stable detection performance.
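The saving from partial convolution can be estimated with a short calculation. The sketch assumes that the convolution is applied to one quarter of the channels while the rest pass through unchanged (the default ratio in the FasterNet formulation of partial convolution); the feature-map size and channel count are illustrative values, and the counts are multiply-accumulate operations rather than measured FLOPs.

```python
def conv_macs(h, w, k, c_in, c_out):
    """Multiply-accumulate count of a k x k convolution on an h x w feature map."""
    return h * w * k * k * c_in * c_out


def pconv_macs(h, w, k, c, ratio=0.25):
    """Partial convolution: convolve only `ratio` of the channels, keep the rest."""
    c_p = int(c * ratio)  # channels that are actually convolved
    return conv_macs(h, w, k, c_p, c_p)


# Illustrative layer: 40 x 40 feature map, 256 channels, 3 x 3 kernel.
full = conv_macs(40, 40, 3, 256, 256)
partial = pconv_macs(40, 40, 3, 256)
saving = full // partial
```

With a channel ratio of one quarter, the convolved part costs one sixteenth of the full convolution, which illustrates how the module can lower computation while the untouched channels continue to carry their features forward.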

Collectively, these four modules work synergistically at different levels to address the major challenges in the detection task, significantly enhancing the accuracy, robustness, and lightweight efficiency of the proposed model for kiwiberry flower and bud detection, thus confirming the practical value and broad applicability of the structural enhancements introduced herein.

4.3. Effect of Imaging Distance on Detection Performance and Future Improvements

The relative spatial distance between the imaging device and the target is a key factor influencing the YOLO11s-RFBS model’s detection performance for flowers and buds. When the shooting distance is short, flowers and buds occupy a larger portion of the image, and their fine details become clearer, enabling the model to effectively extract key texture and morphological information and achieve higher detection accuracy. In contrast, when the shooting distance increases, the pixel scale of the targets decreases, and the edge and texture features become weaker, which may lead to reduced detection precision.

To alleviate the adverse effects of distance variation on detection performance, this study introduces several targeted structural optimizations based on the YOLO11s baseline model. Specifically, the high-resolution detection head (P2) enhances the utilization of shallow spatial detail features, allowing the model to effectively capture small target characteristics even under long-distance imaging conditions. Meanwhile, the BIFPSNN structure employs a learnable multi-scale feature fusion mechanism, which strengthens the interaction and representation between shallow and deep features, thereby improving detection accuracy and robustness across different target distances and scales. In addition, the constructed dataset includes samples captured at multiple shooting distances, enabling the model to learn richer scale variation features during training and further enhancing its adaptability and generalization capability under various imaging conditions.

Future research will consider integrating depth information to more accurately characterize the spatial distance between the camera and the target. Introducing depth cues into the feature extraction or multi-scale fusion stages can enable the model to adaptively adjust feature weighting according to target distance, thereby enhancing detection accuracy and stability under varying imaging distances.

4.4. Practical Applications, Limitations, and Future Directions

The proposed YOLO11s-RFBS model demonstrates strong application potential in the visual perception module of kiwiberry pollination robots. It offers a lightweight architecture while retaining high detection precision, enabling stable real-time identification of kiwiberry flowers and buds. This provides reliable visual information for robotic arm path planning and pose control, supporting an efficient and practical solution for automated kiwiberry pollination and orchard management.

Although the model performs well in controlled experimental environments, several practical challenges still exist during real-world deployment. Pollination robots typically rely on embedded or industrial edge devices, where computing power, memory, and energy supply are limited. These constraints place higher demands on model compactness and inference speed. To mitigate these issues, future research may consider incorporating model compression strategies, such as network pruning and knowledge distillation, to further decrease computational overhead. Moreover, combining edge inference with multi-sensor fusion techniques is expected to enhance the model’s real-time responsiveness and operational robustness in complex orchard scenarios.

In the practical implementation of commercial orchard systems, the use of depth cameras for image acquisition may encounter potential challenges. The installation, positioning, angle, and field of view of the camera are critical; improper configuration may result in unstable image quality, thereby affecting subsequent data processing. Depth cameras also require periodic calibration to maintain long-term operational stability. With regard to device integration, the compatibility and data transmission efficiency between the YOLO11s-RFBS model and hardware components such as robotic arms and sensors may pose challenges, particularly on resource-constrained edge devices. As the deployment scale expands, long-term maintenance, updates, and system debugging may become bottlenecks. Therefore, reducing maintenance costs while ensuring stable system operation represents a critical issue that warrants further investigation.

Given that the training data were mainly collected from a single kiwiberry cultivar within a fixed orchard layout, the current model still has limitations in generalization. When applied to different flower varieties or orchard structures, its detection performance may decline. In addition, due to the short blooming period of kiwiberry, the data collection process largely occurred during continuous sunny weather, resulting in insufficient samples from cloudy and rainy environments. This study has considered the potential impact of varying weather conditions on model performance and employed data augmentation techniques during model development to compensate for the limited weather variation, thereby improving the model’s adaptability to different lighting and environmental conditions. Furthermore, as kiwiberry is primarily cultivated in northern China, where the blooming period is typically characterized by stable, sunny weather, the dataset collected reflects the natural environment of the local region well, giving the model a certain level of transferability. Nevertheless, future research should consider expanding the dataset to include multiple plant varieties, orchard layouts, and climatic conditions, which will help further improve the model’s robustness, cross-scenario generalization ability, and overall stability in real-world orchard environments.

Overall, the YOLO11s-RFBS model establishes a solid visual perception foundation for intelligent kiwiberry pollination systems. Continued optimization in model architecture, data diversity, and practical deployment strategies is expected to further enhance its adaptability and reliability, thereby promoting its broader application in vision-guided intelligent pollination within modern agricultural production.

5. Conclusions

To facilitate precise and efficient identification of kiwiberry flowers and buds in complex orchard environments and to improve the overall effectiveness of pollination operations, this study develops the YOLO11s-RFBS model. The main contributions and findings are outlined below:

This study enhances the baseline YOLO11s model through four targeted improvements, resulting in a notable boost in detection performance. First, while retaining the P3 and P4 detection heads, the original P5 detection head is replaced with a P2 head. The P5 layer remains involved in feature fusion but no longer connects directly to the detection branch. The introduction of the P2 head increases spatial resolution, allowing the model to better capture fine-grained details during object localization. Second, RFAConv is incorporated into the backbone. By leveraging receptive-field-aware attention, it adaptively adjusts feature weights across different spatial regions, reinforcing key features while suppressing irrelevant background information. Third, the C3k2-Faster feature extraction module is introduced to replace the original C3k2 structure. This module reduces redundant computation and memory access while still ensuring effective feature representation, thereby improving efficiency. Finally, the BIFPSNN neck structure is designed to achieve more balanced multi-scale feature fusion. This adjustment not only enhances the utilization of feature information across scales but also decreases the overall complexity of the neck, further contributing to a more lightweight model architecture.

The experimental results show that YOLO11s-RFBS achieves a precision of 91.8%, a recall of 84.6%, and an mAP@0.5 of 91.7%. Compared with YOLO11s, these indicators increase by 0.8, 3.4, and 2.7 percentage points, respectively, while the parameter count and model size decrease by 33.3% and 31.8%. In comparison with other representative detectors, YOLO11s-RFBS attains the highest mAP@0.5 while maintaining the smallest parameter count and storage footprint, demonstrating comprehensive performance advantages. The final network comprises 6,282,114 parameters, requires 23 GFLOPs of computation, and occupies 13.1 MB of storage. Moreover, when deployed on the Jetson Orin Nano platform, YOLO11s-RFBS sustains an inference speed of over 21 FPS while preserving stable precision and recall. This verifies that the improved model can deliver reliable detection performance under limited computational resources, thereby meeting real-time operational requirements in practical orchard scenarios.
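As a quick consistency check on the figures above, the following sketch derives the baseline values implied by the reported relative reductions and the per-frame time budget implied by the reported frame rate. The helper names are hypothetical, and the derived numbers are back-of-the-envelope estimates rather than measurements reported in this study.

```python
# Back-of-the-envelope checks on the reported figures.
# Helper names are hypothetical; derived values are estimates only.


def implied_baseline(reduced_value, reduction_pct):
    """Value before a relative reduction of `reduction_pct` percent."""
    return reduced_value / (1.0 - reduction_pct / 100.0)


def frame_budget_ms(fps):
    """Average time available per frame, in milliseconds."""
    return 1000.0 / fps


PARAMS = 6_282_114      # reported parameter count of YOLO11s-RFBS
MODEL_SIZE_MB = 13.1    # reported model size
FPS = 21                # reported lower bound on Jetson Orin Nano

baseline_params = implied_baseline(PARAMS, 33.3)
baseline_size_mb = implied_baseline(MODEL_SIZE_MB, 31.8)
budget_ms = frame_budget_ms(FPS)

if __name__ == "__main__":
    print(f"implied baseline parameters: {baseline_params / 1e6:.2f} M")
    print(f"implied baseline model size: {baseline_size_mb:.1f} MB")
    print(f"per-frame budget at {FPS} FPS: {budget_ms:.1f} ms")
```

The implied baseline of roughly 9.4 million parameters agrees with the size of a standard YOLO11s network, and a rate above 21 FPS corresponds to less than about 48 ms of processing per frame.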

To conclude, the YOLO11s-RFBS model achieves an effective trade-off between detection precision and real-time inference efficiency, indicating its strong potential for deployment in the visual perception module of intelligent kiwiberry pollination equipment. The proposed model provides an efficient and feasible visual recognition approach for the automation and intelligence of kiwiberry pollination operations. It can significantly improve pollination efficiency and operational quality, thereby increasing fruit yield and reducing labor costs. More importantly, the application of intelligent pollination robots has potential environmental and economic benefits: by reducing labor input and energy consumption, and by improving resource utilization efficiency and yield stability, such systems not only contribute to the green transformation of agriculture but also offer new pathways toward the sustainable development of smart farming [42]. The model proposed in this study lays a solid technical and theoretical foundation for the broader adoption and practical implementation of agricultural automation and intelligent equipment in the future.