Death Detection and Removal in High-Density Animal Farming: Technologies, Integration, Challenges, and Prospects

Abstract

1. Introduction

2. Death Detection Methods

2.1. Data Acquisition Methods

2.1.1. Manual Data Collection

2.1.2. Fixed Imaging Systems

2.1.3. Mobile Imaging Devices

2.2. Imaging Sensors

2.2.1. RGB Camera

2.2.2. Thermal Infrared Camera

2.2.3. Multi-Sensor Image Fusion

2.3. Dataset Establishment

2.4. Data Processing Methods

2.4.1. Traditional Death Detecting Methods

2.4.2. Novel Death Detecting Methods

2.4.3. Summary of the Death Detecting Methods

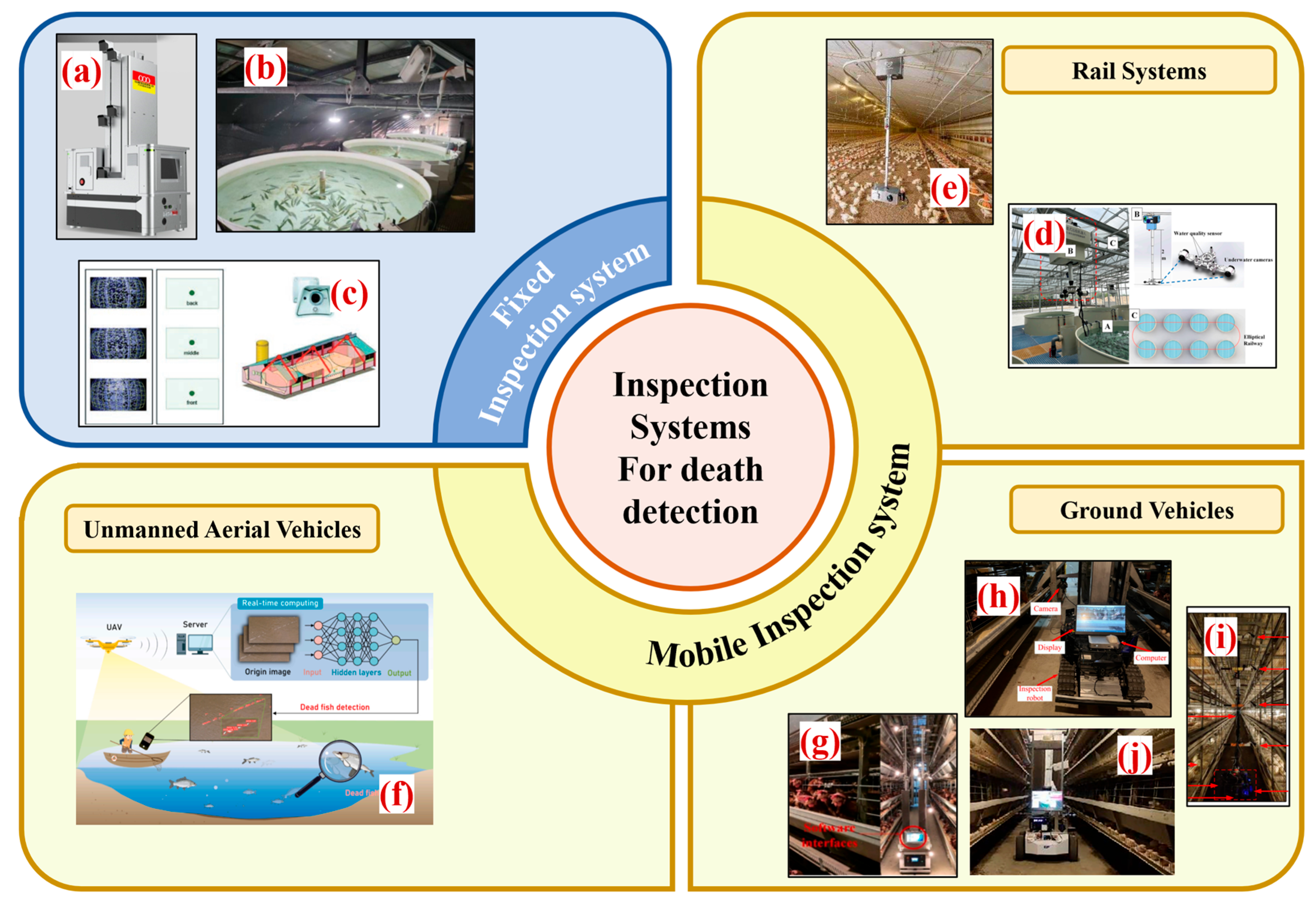

3. Inspection System

3.1. Fixed Inspection System

3.2. Mobile Inspection System

3.3. Summary of Existing Inspection Systems

4. Automated Removal System

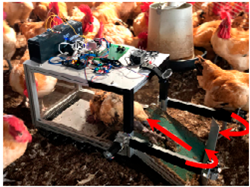

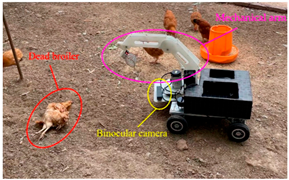

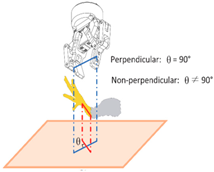

4.1. Removal Systems for Floor-Raised Poultry Houses

4.2. Removal Systems for Cage-Raised Poultry Houses

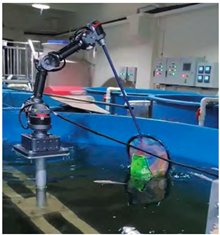

4.3. Dead Fish Removal System in Aquaculture

4.4. Summary of Current Removal Systems and Limitations

| Reference | Target Farm Animal | Robot | Walking (Moving) Speed | Average Removal Time | Average Success Rate |
|---|---|---|---|---|---|
| Liu et al., 2021 [2] | Floor-raised broilers |  | 3.3 cm/s on the litter | Approximately 1 min per operation, maximum of 2 chickens per operation | N/A |
| Xin et al., 2024 [35] | Floor-raised broilers |  | N/A | N/A | 81.30% |
| Li et al., 2022 [26] | Cage-raised broilers |  | N/A | 70.5 to 77.8 s per round | 90% at 1000 lux light intensity |
| Hu, 2021 [63] | Cage-raised broilers |  | N/A | 32 s | 96.7% (deceased more than 30 min); 88.3% (deceased within 30 min) |
| Wang, 2023 [9] | Cage-raised broilers |  | N/A | Around 85 s | 83% (for chickens 4–7 weeks old) |
| Li et al., 2023 [21] | Recirculating aquaculture system |  | 0.3 m/s | N/A | 77.14% (one-time removal success rate) |

5. Discussion

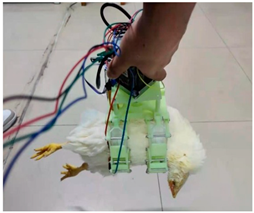

5.1. Wearable-Sensor-Based Death Detection Methods

5.2. Ethical and Animal Welfare Considerations

5.3. Limitations of Current Works and Future Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food Security: The Challenge of Feeding 9 Billion People. Science 2010, 327, 812–818.

2. Liu, H.W.; Chen, C.H.; Tsai, Y.C.; Hsieh, K.W.; Lin, H.T. Identifying Images of Dead Chickens with a Chicken Removal System Integrated with a Deep Learning Algorithm. Sensors 2021, 21, 3579.

3. Ren, G.; Lin, T.; Ying, Y.; Chowdhary, G.; Ting, K.C. Agricultural robotics research applicable to poultry production: A review. Comput. Electron. Agric. 2020, 169, 105216.

4. Ojo, R.O.; Ajayi, A.O.; Owolabi, H.A.; Oyedele, L.O.; Akanbi, L.A. Internet of Things and Machine Learning techniques in poultry health and welfare management: A systematic literature review. Comput. Electron. Agric. 2022, 200, 107266.

5. Özentürk, U.; Chen, Z.; Jamone, L.; Versace, E. Robotics for poultry farming: Challenges and opportunities. Comput. Electron. Agric. 2024, 226, 109411.

6. Li, D.; Wang, Q.; Li, X.; Niu, M.; Wang, H.; Liu, C. Recent advances of machine vision technology in fish classification. ICES J. Mar. Sci. 2022, 79, 263–284.

7. Bumbálek, R.; Umurungi, S.N.; Ufitikirezi, J.D.D.M.; Zoubek, T.; Kuneš, R.; Stehlík, R.; Lin, H.; Bartoš, P. Deep learning in poultry farming: Comparative analysis of Yolov8, Yolov9, Yolov10, and Yolov11 for dead chickens detection. Poult. Sci. 2025, 104, 105440.

8. Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

9. Wang, L. Study on Health Monitoring of Broiler Flocks and Removal Test of Dead Broilers. Master’s Thesis, Shandong University of Technology, Zibo, China, 2023.

10. Luo, S.; Ma, Y.; Jiang, F.; Wang, H.; Tong, Q.; Wang, L. Dead Laying Hens Detection Using TIR-NIR-Depth Images and Deep Learning on a Commercial Farm. Animals 2023, 13, 1861.

11. Hao, H.; Fang, P.; Duan, E.; Yang, Z.; Wang, L.; Wang, H. A Dead Broiler Inspection System for Large-Scale Breeding Farms Based on Deep Learning. Agriculture 2022, 12, 1176.

12. Ma, W.; Wang, X.; Yang, S.X.; Xue, X.; Li, M.; Wang, R.; Yu, L.; Song, L.; Li, Q. Autonomous inspection robot for dead laying hens in caged layer house. Comput. Electron. Agric. 2024, 227, 109595.

13. Jia, Y.; Xue, H.; Zhou, Z.; Zhao, X.; Huo, X.; Li, L. Automatic identification method for dead chicken in cage based on infrared thermal imaging technology. J. Hebei Agric. Univ. 2023, 46, 105–112.

14. Peng, X.; He, X.; Sun, Y.; Liu, R.; Liang, Y.; Zhong, Y.; Pang, J.; Xiong, K. Identification and 3D localization of dead pig head based on improved YOLOv5. Acta Agric. Univ. Jiangxiensis 2024, 46, 763–773.

15. Khanal, R.; Wu, W.; Lee, J. Automated Dead Chicken Detection in Poultry Farms Using Knowledge Distillation and Vision Transformers. Appl. Sci. 2025, 15, 136.

16. Muvva, V.V.R.M.; Zhao, Y.; Parajuli, P.; Zhang, S.; Tabler, T.; Purswell, J. Early detection of mortality in poultry production using high resolution thermography. In Proceedings of the 10th International Livestock Environment Symposium (ILES X), Omaha, NE, USA, 25–27 September 2018; pp. 1499–1504.

17. Heng, X.; Shen, M.X.; Liu, L.S.; Yao, W.; Li, P. The detection method of lactating dead pigs based on improved YOLOv7 and image fusion. J. Nanjing Agric. Univ. 2024, 48, 464–475.

18. Zhao, Y.; Shen, M.; Liu, L.; Chen, J.; Zhu, W. Study on the method of detecting dead chickens in caged chicken based on improved YOLO v5s and image fusion. J. Nanjing Agric. Univ. 2024, 47, 369–382.

19. Zhao, W.; Cheng, Y.; Cao, T.; Zhang, X.; Wu, A.; Xu, B. Design and kinematics analysis of robotic arm used for picking up dead chickens. J. Chin. Agric. Mech. 2023, 44, 131–136.

20. Zhang, P.; Zheng, J.; Gao, L.; Li, P.; Long, H.; Liu, H.; Li, D. A novel detection model and platform for dead juvenile fish from the perspective of multi-task. Multimed. Tools Appl. 2024, 83, 24961–24981.

21. Li, J.; Zhang, Y.; Ni, Q.; Huang, D. Research on intelligent salvaging technology of floating dead fish in circulating water aquaculture based on DeepSORT algorithm. Mar. Fish. 2023, 45, 749–758.

22. Zhao, S.; Zhang, S.; Lu, J.; Wang, H.; Feng, Y.; Shi, C.; Li, D.; Zhao, R. A lightweight dead fish detection method based on deformable convolution and YOLOV4. Comput. Electron. Agric. 2022, 198, 107098.

23. Zhu, W.; Lu, C.; Li, X.; Kong, L. Dead Birds Detection in Modern Chicken Farm Based on SVM. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–5.

24. Duan, E.; Wang, L.; Lei, Y.; Hao, H.; Wang, H. Dead Rabbit Recognition Model Based on Instance Segmentation and Optical Flow Computing. Trans. Chin. Soc. Agric. Mach. 2022, 53, 256–264, 273.

25. Bist, R.B.; Subedi, S.; Yang, X.; Chai, L. Automatic Detection of Cage-Free Dead Hens with Deep Learning Methods. AgriEngineering 2023, 5, 1020–1038.

26. Li, G.; Chesser, G.D.; Purswell, J.L.; Magee, C.L.; Gates, R.S.; Xiong, Y. Design and Development of a Broiler Mortality Removal Robot. Appl. Eng. Agric. 2022, 38, 853–863.

27. Jiang, L.; Wang, W.; Huo, X.; Wang, H.; Tang, J.; Li, L. Design and experiment of dead chicken recognition robot system. J. Chin. Agric. Mech. 2023, 44, 81–87.

28. Qu, Z. Study on Detection Method of Dead Chicken in Unmanned Chicken Farm. Master’s Thesis, Jilin University, Changchun, China, 2019.

29. Wu, D.; Ying, Y.; Zhou, M.; Pan, J.; Cui, D. DCDNet: A deep neural network for dead chicken detection in layer farms. Comput. Electron. Agric. 2025, 237, 110492.

30. Yang, J.; Zhang, T.; Fang, C.; Zheng, H.; Ma, C.; Wu, Z. A detection method for dead caged hens based on improved YOLOv7. Comput. Electron. Agric. 2024, 226, 109388.

31. Xue, H. Design and Implementation of Dead Broiler Identification System Based on Infrared Thermal Imaging Technology. Master’s Thesis, Nanjing Agricultural University, Nanjing, China, 2020.

32. Zhou, C.; Wang, C.; Sun, D.; Hu, J.; Ye, H. An automated lightweight approach for detecting dead fish in a recirculating aquaculture system. Aquaculture 2025, 594, 741433.

33. Zhang, H.; Tian, Z.; Liu, L.; Liang, H.; Feng, J.; Zeng, L. Real-time detection of dead fish for unmanned aquaculture by yolov8-based UAV. Aquaculture 2025, 595, 741551.

34. Tian, Q.; Huo, Y.; Yao, M.; Wang, H. A method for detecting dead fish on large water surfaces based on improved YOLOv10. arXiv 2024, arXiv:2409.00388.

35. Xin, C.; Li, H.; Li, Y.; Wang, M.; Lin, W.; Wang, S.; Zhang, W.; Xiao, M.; Zou, X. Research on an Identification and Grasping Device for Dead Yellow-Feather Broilers in Flat Houses Based on Deep Learning. Agriculture 2024, 14, 1614.

36. Zheng, J.; Fu, Y.; Zhao, R.; Lu, J.; Liu, S. Dead Fish Detection Model Based on DD-IYOLOv8. Fishes 2024, 9, 356.

37. Zhao, R.; Wang, Y.; Zhao, S.; Zhang, S.; Duan, Y. Detection and positioning system of dead fish in factory farming. China Agric. Inform. 2024, 36, 31–46.

38. Lu, C.F. Study on Dead Birds Detection System Based on Machine Vision in Modern Chicken Farm. Master’s Thesis, Jiangsu University, Zhenjiang, China, 2009.

39. Peng, Y. Study on Detecting Dead Birds in Modern Chicken Farm Based on SVM. Master’s Thesis, Jiangsu University, Zhenjiang, China, 2010.

40. Bai, Z.; Lv, Y.; Zhu, Y.; Ma, Y.; Duan, E. Dead Duck Recognition Algorithm Based on Improved Mask R-CNN. Trans. Chin. Soc. Agric. Mach. 2024, 55, 305–314.

41. Zaninelli, M.; Redaelli, V.; Luzi, F.; Bronzo, V.; Mitchell, M.; Dell Orto, V.; Bontempo, V.; Cattaneo, D.; Savoini, G. First Evaluation of Infrared Thermography as a Tool for the Monitoring of Udder Health Status in Farms of Dairy Cows. Sensors 2018, 18, 862.

42. McManus, C.; Bianchini, E.; Paim, T.; De Lima, F.; Neto, J.; Castanheira, M.; Esteves, G.; Cardoso, C.; Dalcin, V. Infrared Thermography to Evaluate Heat Tolerance in Different Genetic Groups of Lambs. Sensors 2015, 15, 17258–17273.

43. Cai, Z.; Cui, J.; Yuan, H.; Cheng, M. Application and research progress of infrared thermography in temperature measurement of livestock and poultry animals: A review. Comput. Electron. Agric. 2023, 205, 107586.

44. Li, D.; Song, Z.; Quan, C.; Xu, X.; Liu, C. Recent advances in image fusion technology in agriculture. Comput. Electron. Agric. 2021, 191, 106491.

45. Hao, H.; Jiang, W.; Luo, S.; Sun, X.; Wang, L.; Wang, H. Detection of Dead Broilers Based on Fusion of Color and Thermal Infrared Image Information. Trans. Chin. Soc. Agric. Mach. 2025, 56, 47–64.

46. Depuru, B.K.; Putsala, S.; Mishra, P. Automating poultry farm management with artificial intelligence: Real-time detection and tracking of broiler chickens for enhanced and efficient health monitoring. Trop. Anim. Health Prod. 2024, 56, 75.

47. Hao, H.; Zou, F.; Duan, E.; Lei, X.; Wang, L.; Wang, H. Research on Broiler Mortality Identification Methods Based on Video and Broiler Historical Movement. Agriculture 2025, 15, 225.

48. Wang, L. Research and Application of Dead Fish Recognition Technology Based on Deep Learning. Master’s Thesis, Guangdong Ocean University, Zhanjiang, China, 2022.

49. Fu, T.; Feng, D.; Ma, P.; Hu, W.; Yang, X.; Li, S.; Zhou, C. DF-DETR: Dead fish-detection transformer in recirculating aquaculture system. Aquacult. Int. 2025, 33, 43.

50. Tong, C.; Li, B.; Wu, J.; Xu, X. Developing a Dead Fish Recognition Model Based on an Improved YOLOv5s Model. Appl. Sci. 2025, 15, 3463.

51. Zhao, W.; Wang, H.; Pei, R.; Pang, C. Thermal infrared dead rabbit identification method based on improved YOLOF. Heilongjiang Anim. Sci. Veter. Med. 2023, 2023, 118–122.

52. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

53. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

54. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

55. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

56. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

57. Soeb, M.J.A.; Jubayer, M.F.; Tarin, T.A.; Al Mamun, M.R.; Ruhad, F.M.; Parven, A.; Mubarak, N.M.; Karri, S.L.; Meftaul, I.M. Tea leaf disease detection and identification based on YOLOv7 (YOLO-T). Sci. Rep. 2023, 13, 6078.

58. Rai, N.; Zhang, Y.; Villamil, M.; Howatt, K.; Ostlie, M.; Sun, X. Agricultural weed identification in images and videos by integrating optimized deep learning architecture on an edge computing technology. Comput. Electron. Agric. 2024, 216, 108442.

59. Wang, J.; Wang, N.; Li, L.; Ren, Z. Real-time behavior detection and judgment of egg breeders based on YOLO v3. Neural Comput. Appl. 2020, 32, 5471–5481.

60. Nasiri, A.; Amirivojdan, A.; Zhao, Y.; Gan, H. Estimating the Feeding Time of Individual Broilers via Convolutional Neural Network and Image Processing. Animals 2023, 13, 2428.

61. De Montis, A.; Pinna, A.; Barra, M.; Vranken, E. Analysis of poultry eating and drinking behavior by software eYeNamic. J. Agric. Eng. 2013, 44, e33.

62. Jiang, W.; Hao, H.; Wang, H.; Wang, L. Possible application of agricultural robotics in rabbit farming under smart animal husbandry. J. Clean. Prod. 2025, 501, 145301.

63. Hu, Z. Research on Underactuated End Effector of Dead Chicken Picking Robot. Master’s Thesis, Hebei Agricultural University, Baoding, China, 2021.

64. Bao, Y.; Lu, H.; Zhao, Q.; Yang, Z.; Xu, W. Detection system of dead and sick chickens in large scale farms based on artificial intelligence. Math. Biosci. Eng. 2021, 18, 6117–6135.

65. Alves, A.A.C.; Fernandes, A.F.A.; Breen, V.; Hawken, R.; Rosa, G.J.M. Monitoring mortality events in floor-raised broilers using machine learning algorithms trained with feeding behavior time-series data. Comput. Electron. Agric. 2024, 224, 109124.

| Farming Type | Reference | Species | Breeding Mode | Collection Method | Imaging Sensor | Data Type | Dataset Size | Raw Image Resolution | Annotation Tool |
|---|---|---|---|---|---|---|---|---|---|
| Poultry farming | Lu, 2009 [38] | Chicken | Cage-raised | N/A | ZC301 CMOS chip camera | RGB image | N/A | N/A | N/A |
| Poultry farming | Zhu et al., 2009 [23] | Chicken | Cage-raised | Fixed camera | ZC301 CMOS chip camera | RGB image | 160 sets (2 images each) | N/A | N/A |
| Poultry farming | Peng, 2010 [39] | Chicken | Cage-raised | Fixed camera | MV-VS140FM/FC camera | RGB image | 120 sets (2 images each) | 1392 × 1040 | N/A |
| Poultry farming | Muvva et al., 2018 [16] | Broiler | Floor-raised | Fixed camera | Duo R camera | Thermal infrared and RGB image | 120 sets (1 thermal infrared image and 1 RGB image each) | 1920 × 1080 | N/A |
| Poultry farming | Xue, 2020 [31] | Chicken | Floor-raised | Mobile platform-vehicle | FLIR TAU2 630 | Thermal infrared image | 8050 (10,000 after data augmentation) | 640 × 512 | LabelImg |
| Poultry farming | Liu et al., 2021 [2] | Chicken | Floor-raised | Mobile platform-vehicle | Logitech C922 Pro Stream Camera | RGB image | 150 | 1920 × 1080 | N/A |
| Poultry farming | Li et al., 2022 [26] | Broiler | Lab simulation | Fixed on a designed robotic arm | Intel RealSense D435 | RGB image; depth image | 14,288 images under 8 different lighting conditions | 1920 × 1080 (RGB); 1080 × 720 (depth) | N/A |
| Poultry farming | Hao et al., 2022 [11] | Broiler | Cage-raised | Mobile platform-vehicle; manual collection | Sony XCG-CG240C | RGB image | 1310 | 1920 × 2000 | LabelImg |
| Poultry farming | Luo et al., 2023 [10] | Hen | Floor-raised | Mobile platform-vehicle | RealSense L515; IRay P2 | NIR, depth images (RealSense L515); TIR images (IRay P2) | 2052 sets (living hens) and 1937 sets (dead hens) (1 TIR, 1 NIR, 1 depth image each) | 640 × 480 (RealSense L515); 256 × 192 (IRay P2) | LabelImg |
| Poultry farming | Bist et al., 2023 [25] | Hen | Floor-raised | Fixed camera | PRO-1080MSB camera; DVR-4580 | Thermal infrared image | 9000 images after data augmentation | 1920 × 1080 | Makesense.AI (image-labeling website) |
| Poultry farming | Jiang et al., 2023 [27] | Chicken | Cage-raised | Mobile platform-vehicle | Lepton3.5 | Thermal infrared image | N/A | 160 × 120 | N/A |
| Poultry farming | Wang, 2023 [9] | Broiler | Cage-raised | Mobile platform-vehicle | HIKMICRO H16 | Thermal infrared image | 1820 | 160 × 120 | LabelImg |
| Poultry farming | Jia et al., 2023 [13] | Chicken | Cage-raised | Manual collection | FLIR | Thermal infrared image | 700 | 320 × 240 | N/A |
| Poultry farming | Zhao et al., 2024 [18] | Chicken | Cage-raised | Fixed camera | FLIR A6 | Thermal infrared image; RGB image | 9060 | 640 × 512 (thermal infrared); 1920 × 1080 (RGB) | LabelImg |
| Poultry farming | Yang et al., 2024 [30] | Hen | Cage-raised | Mobile platform-vehicle | SIYI A8 mini zoom gimbal camera | RGB image | 5000 | 1280 × 720 | LabelImg |
| Poultry farming | Bai et al., 2024 [40] | Duck | Cage-raised | Mobile platform-vehicle | IMX camera module | RGB image | 1057 | N/A | SAM-Tool |
| Poultry farming | Xin et al., 2024 [35] | Chicken | Floor-raised |  | Binocular camera | RGB image | 1565 | 1920 × 1080 | LabelMe |
| Poultry farming | Ma et al., 2024 [12] | Hen | Cage-raised | Manual collection; mobile platform-vehicle | Xiaomi 13 smartphone (manual collection); eight LRCP20680_1080P cameras (mobile platform-vehicle) | RGB image | 17,758 annotated images | 1920 × 1080 | LabelMe (manual collection); N/A (mobile platform-vehicle) |
| Poultry farming | Depuru et al., 2024 [46] | Broiler | Floor-raised | N/A | Lenovo USB camera (3.5-inch, 2.1 MP, 90-degree ultrawide, 360-degree rotation, 30 fps); HP High-Definition W100 wide-range camera | RGB image | N/A | N/A (Lenovo); 640 × 480 (HP W100) | N/A |
| Poultry farming | Hao et al., 2025 [47] | Broiler | Cage-raised | Fixed camera | HIKROBOT MV-CA023-10UC (Hangzhou, China) | RGB image | 770 | N/A | LabelMe |
| Poultry farming | Hao et al., 2025 [45] | Broiler | Cage-raised | Fixed camera | RealSense L515; IRay P2 | RGB, near-infrared, depth image (RealSense L515); thermal infrared image (IRay P2) | 991 sets with 3 images each (RealSense L515); N/A (IRay P2) | 1024 × 768 (depth); 1920 × 1080 (RGB); 256 × 192 (thermal infrared) | N/A |
| Poultry farming | Wu et al., 2025 [29] | Chicken | Cage-raised | Mobile platform-vehicle | N/A | RGB image | 3000 | 1920 × 1080 | LabelImg |
| Poultry farming | Khanal et al., 2025 [15] | Chicken | Floor-raised | Fixed camera | IPC675LFW-AX4DUPKC-VG camera | RGB image | 1462 | N/A | No explicit labels |
| Fishery farming | Zhao et al., 2022 [22] | Fish | Lab simulation | Fixed camera | N/A | RGB image | 1150 | 1920 × 1080 | LabelImg |
| Fishery farming | Wang, 2022 [48] | Fish | Deep and far sea aquaculture | Manual collection | NIKKOR 42X | RGB image | 1312 | N/A | LabelImg |
| Fishery farming | Li et al., 2023 [21] | Fish | Recirculating aquaculture system | Fixed camera | Logitech C920e | RGB image | 8970 | 1280 × 720 | roLabelImg |
| Fishery farming | Zhao et al., 2024 [37] | Fish | Lab simulation | Fixed camera | ZED 2 stereo camera | RGB image | 1172 | 1280 × 720 | LabelImg |
| Fishery farming | Zheng et al., 2024 [36] | Fish | Aquaculture base | N/A | N/A | RGB image | 958 | N/A | LabelMe |
| Fishery farming | Tian et al., 2024 [34] | Fish | Pond | Mobile platform-UAV | N/A | RGB image | 500 | 3840 × 2160 | X-AnyLabeling |
| Fishery farming | Zhang et al., 2024 [20] | Fish | Breeding pond (fish-vegetable symbiosis factory) | Fixed camera | N/A | RGB image | 2500 | 1920 × 1080 | LabelImg |
| Fishery farming | Zhang et al., 2025 [33] | Fish | Experimental base | Mobile platform-UAV | DJI M30T | RGB image | 4766 | 4000 × 3000 | DarkLabel (software) |
| Fishery farming | Zhou et al., 2025 [32] | Fish | Recirculating aquaculture system | Mobile platform-railway | DCY01 | RGB image | 9530 | 1920 × 1080 | LabelImg |
| Fishery farming | Fu et al., 2025 [49] | Fish | Recirculating aquaculture system | Fixed camera | Hikvision surveillance camera | RGB image | 1635 | 2560 × 1920 | LabelImg |
| Fishery farming | Tong et al., 2025 [50] | Fish | Fish farm | Manual collection | OPPO A96, Huawei Nova 7 Plus, Yingshi CS-H8 camera | RGB image | 670 | 640 × 640 | LabelImg |
| Livestock farming | Duan et al., 2022 [24] | Rabbit | Cage-raised | Fixed camera | Hikrobot MV CA020 10GC | RGB videos | 40 locations (1 min each) | 1624 × 1240 | LabelMe |
| Livestock farming | Zhao et al., 2023 [51] | Rabbit | Cage-raised | Fixed camera | InfiRay T2L-6L | Thermal infrared image | 3443 | 256 × 192 | LabelImg |
| Livestock farming | Peng et al., 2024 [14] | Pig | Breeding farm | Manual collection | Mobile phone; Intel RealSense D435 | RGB image (mobile phone); RGB, infrared, depth image (D435) | 2000 (mobile phone); 24 (D435) | 1792 × 828 (mobile phone); 1280 × 720 (D435) | LabelImg |
| Livestock farming | Heng et al., 2024 [17] | Piglet | Breeding farm | Fixed camera | FLIR A6 stereo camera | Thermal infrared image; RGB image | 2000 sets (1 thermal infrared image, 1 RGB image each) | 640 × 512 (thermal infrared); 1920 × 1080 (RGB) | LabelImg |

| Reference | Animal | Deep Learning Model | Network (Backbone) | Modification | Accuracy | Average Precision (AP) | Mean Average Precision (mAP) | Precision | Recall | F1 Score | Speed |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Xue, 2020 [31] | Chicken | YOLOv3 | Darknet-v53 | N/A | N/A | N/A | N/A | 90.8% | 98.9% | 94.7% | 43.6 fps |
| Xue, 2020 [31] | Chicken | Faster R-CNN | VGG16 | N/A | N/A | N/A | N/A | 98.1% | 98.7% | 98.4% | 8.8 fps |
| Liu et al., 2021 [2] | Chicken | YOLOv4 | CSPDarknet53 | N/A | 97.5% | N/A | 100% | 95.24% | 100% | N/A | N/A |
| Li et al., 2022 [26] | Broiler (Chicken) | YOLOv4 | CSPDarknet53 | N/A | N/A | N/A | N/A | 95.1% | 86.3% | 90.5% | 7 fps |
| Hao et al., 2022 [11] | Broiler (Chicken) | improved-YOLOv3 | Darknet-v53 | (1) mosaic enhancement: enrich the dataset, reduce GPU consumption; (2) Swish activation function: achieve stronger activation performance; (3) SPP module: reduce information loss and integrate features of different sizes; (4) CIoU loss function: faster convergence and better performance | N/A | N/A | 98.6% | 95.0% | 96.8% | N/A | 0.09 s/frame |
| Bist et al., 2023 [25] | Hen (Chicken) | YOLOv5s-MD | a variant of EfficientNet | (1) improved EfficientNet (backbone): achieve good feature extraction while remaining lightweight and efficient; (2) "YOLO Loss", a custom combination of objectness loss, classification loss, and regression loss: encourage accurate predictions and penalize incorrect ones | N/A | N/A | 99.5% (mAP@0.50); 82.3% (mAP@0.50:0.95) | N/A | 98.4% | N/A | 55.6 fps |
| Wang, 2023 [9] | Broiler (Chicken) | YOLOv5 | Improved CSPDarknet53 | N/A | N/A | 97.4% (live); 93.1% (dead) | N/A | 97.2% (live); 97.6% (dead) | 93.0% (live); 87.8% (dead) | N/A | 69.4 fps |
| Zhao et al., 2024 [18] | Chicken | YOLOv5s-SE | Improved CSPDarknet53 | (1) CSP module (backbone): reduce computational load; (2) Focus module (backbone): expand the input channels and preserve as much information as possible; (3) SE module (attention module): improve characterization ability; (4) CIoU (loss function): better assess the quality of the box; (5) DIoU_NMS (NMS process): help address occlusions | N/A | N/A | 98.2% (mAP@0.50) | 97.70% | 97.90% | N/A | 5.4 ms/frame |
| Yang et al., 2024 [30] | Hen (Chicken) | improved-YOLOv7 | MobileNetv3 | (1) CBAM (attention module): make the network concentrate on valuable feature layers; (2) Repulsion loss (loss function): mitigate occlusion among crowded hens; (3) DIoU-NMS (NMS process): filter out more accurate prediction boxes without increasing the computational load | N/A | 86.2% (live); 85.1% (dead) | N/A | 95.7% (live); 95.1% (dead) | 86.8% (live); 86.8% (dead) | 91.0% (live); 90.8% (dead) | 103 fps |
| Bai et al., 2024 [40] | Duck | Mask R-CNN + Swin Transformer | Swin Transformer | (1) Swin Transformer (backbone): improve feature extraction ability; (2) cross-entropy loss (loss function): improve classification performance, perform better in high-density scenarios | N/A | 95.8% (mAP@0.90) | N/A | N/A | N/A | N/A | N/A |
| Xin et al., 2024 [35] | Chicken | improved-YOLOv6 + SE | EfficientRep | (1) ASPP module: reduce missed detections in complex backgrounds, improve the iteration speed; (2) SE attention mechanism: improve characterization ability | N/A | N/A | 92% | 86% | 89% | 87% | N/A |
| Ma et al., 2024 [12] | Hen (Chicken) | RTMDet | CSPNeXt | N/A | N/A | N/A | 92.8% (mAP@0.50) | 90.61% | 84.4% | N/A | N/A |
| Depuru et al., 2024 [46] | Broiler (Chicken) | YOLOv5s | CSPDarknet53 | N/A | 95% | N/A | mAP@0.50: 98.1% (1WOC); 93.3% (2WOC); 90.7% (3WOC); 96.5% (4WOC) | 94.7% (1WOC); 88.4% (2WOC); 91.2% (3WOC); 95.2% (4WOC) | 95.9% (1WOC); 83.4% (2WOC); 80.1% (3WOC); 90.1% (4WOC) | N/A | N/A |
| Hao et al., 2025 [45] | Broiler (Chicken) | YOLOv11s | N/A | N/A | 91.7% | N/A | N/A | 93.0% | 87.2% | 89.4% | 6.1 ms/frame |
| Zhao et al., 2022 [22] | Fish | DM-YOLOv4 | MobileNetV3 | (1) MobileNetV3 (backbone): achieve a lightweight feature extraction network; (2) depthwise separable convolution (replacing standard convolution): reduce the number of parameters of the overall network | N/A | 92.43% | N/A | 95.47% | 77.52% | N/A | 64 fps |
| Wang, 2022 [48] | Fish | improved-YOLOv5 | GhostNet | (1) GhostNet (backbone); (2) CIoU (loss function); (3) SE module | N/A | 97.5% (live); 99% (dead) | 98.40% | N/A | N/A | N/A | 333.3 fps |
| Li et al., 2023 [21] | Fish | DeepSORT | N/A | (1) rotated appearance features and rotated IoU: obtain more robust information on the target dead fish | 79.6% | 68.4% | N/A | N/A | N/A | N/A | N/A |
| Zhao et al., 2024 [37] | Fish | YOLOv7-PC | improved EfficientNet | (1) PConv module (convolutional module): improve detection speed; (2) Coordinate Attention module (neck network): improve feature extraction, reduce false detections caused by occlusion | N/A | N/A | 96.6% | 97.9% | 85.3% | N/A | 49 fps |
| Zheng et al., 2024 [36] | Fish | DD-IYOLOv8 | Improved CSPDarknet53 | (1) DySnakeConv (neck network): adaptively adjust the receptive field, improving the network’s capability to extract features; (2) added a layer for detecting small objects, increasing the number of YOLOv8 detection heads to 4: better focus on small targets and occluded dead fish; (3) Hybrid Attention Mechanism (later stages of the backbone network): refine global feature extraction | N/A | 91.7% | N/A | 92.8% | 89.4% | 91.0% | N/A |
| Tian et al., 2024 [34] | Fish | improved-YOLOv10 | FasterNet | (1) FasterNet (backbone): reduce model complexity; (2) enhanced connectivity methods and CSPStage (neck network): improve feature fusion; (3) added compact target detection head: enhance the detection performance for smaller objects | N/A | 97.50% | N/A | 95.70% | 94.50% | N/A | 36 fps |
| Zhang et al., 2024 [20] | Fish | E-YOLOv4 | Improved CSPDarknet53 | (1) Efficient Channel Attention module (backbone network): strengthen the feature auxiliary branch | N/A | N/A | 96.91% | 94.85% | 94.96% | 95% | N/A |
| Zhang et al., 2024 [20] | Fish | D-YOLOv4 | DenseNet169 | (1) DenseNet169 (backbone): strengthen the transmission and utilization of dead fish target area features, alleviate vanishing gradients during training | N/A | N/A | 95.93% | 94.81% | 93.44% | 94% | N/A |
| Zhang et al., 2025 [33] | Fish | improved-YOLOv8 | Improved CSPDarknet53 | (1) Attention scale sequence fusion: enhance the extraction of multi-scale information, fuse feature maps at different scales; (2) Triple feature encoding: ensure the details of feature layers of different sizes are fully considered; (3) Channel and position attention mechanism: better allocate the weights for learning information under different environmental conditions; (4) P2 detection head: better capture smaller and finer features | N/A | N/A | 98.10% | 95.60% | 95.20% | N/A | 124 fps |
| Zhou et al., 2025 [32] | Fish | Deadfish-YOLO | improved lightweight CSPDarknet | (1) lightweight backbone network: ensure fast computation; (2) attention mechanism: suppress unimportant features; (3) ReLU-memristor-like activation function: improve neural-network performance | N/A | N/A | 94.6% | N/A | N/A | N/A | 85 fps |
| Fu et al., 2025 [49] | Fish | DF-DETR | RepNCSPELAN | (1) RepNCSPELAN (backbone): better extract multi-scale features, reduce the number of model parameters; (2) CascadedGroupAttention (feature fusion method): capture more target features; (3) CCFM_CSP module: fuse important features using parallel dilated convolutions with different dilation rates | N/A | N/A | N/A | 94.8% | 94.1% | 94.4% | N/A |
| Tong et al., 2025 [50] | Fish | YOLO-DWM | Improved CSPDarknet53 | (1) DWMConv: better extract features; (2) C3-EMA module: enhance the feature processing capabilities; (3) C3-Light module: reduce the model’s parameters and FLOPs | N/A | N/A | 87.5% (mAP@0.50) | 93.6% | 77.5% | 84.8% | N/A |
| Zhao et al., 2023 [51] | Rabbit | improved-YOLOF | MobileNetv3 | (1) MobileNetv3 (backbone): reduce the number of parameters, making the model lightweight; (2) SRM-ASPP structure (field enhancement network): assist feature extraction | N/A | 95.0% | N/A | 89.2% | 93.1% | N/A | 79.2 fps |
| Peng et al., 2024 [14] | Pig | YOLOv5-MobileNetV2 | MobileNetV2 | (1) MobileNetV2 (backbone): reduce the number of parameters, making the model lightweight | N/A | N/A | 99.5% | 99.2% | 99.6% | N/A | 10.8 ms/frame |
| Peng et al., 2024 [14] | Pig | YOLOv5-CBAM | CBAM | (1) CBAM attention mechanism (backbone): improve the model’s focus on the pig head | N/A | N/A | 99.5% | 99.6% | 99.8% | N/A | 11.6 ms/frame |
| Heng et al., 2024 [17] | Piglet (Pig) | YOLOv7-SE | improved EfficientNet | (1) SE attention mechanism (backbone): better extract features | N/A | N/A | 92.1% | 93.8% | 90.3% | N/A | 6.8 fps |
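The table above reports F1 scores alongside precision and recall, and it mixes three speed units (fps, ms/frame, s/frame). As a reading aid, the following minimal Python sketch shows how an F1 score follows from a precision/recall pair and how the speed entries could be put on a common fps scale. The function names `f1_score` and `to_fps` are illustrative assumptions and do not come from any of the cited studies; the input values are taken from the YOLOv3 row of Xue, 2020 [31], the Zhao et al., 2024 [18] row, and the Hao et al., 2022 [11] row.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def to_fps(value: float, unit: str) -> float:
    """Convert a reported inference speed to frames per second.

    `unit` is one of the three unit strings used in the table:
    "fps", "ms/frame", or "s/frame".
    """
    if unit == "fps":
        return value
    if unit == "ms/frame":
        return 1000.0 / value
    if unit == "s/frame":
        return 1.0 / value
    raise ValueError(f"unsupported unit: {unit}")


if __name__ == "__main__":
    # Xue, 2020 [31], YOLOv3 row: precision 90.8%, recall 98.9%
    print(round(100 * f1_score(0.908, 0.989), 1))   # 94.7, matching the table
    # Zhao et al., 2024 [18]: 5.4 ms/frame
    print(round(to_fps(5.4, "ms/frame"), 1))        # 185.2
    # Hao et al., 2022 [11]: 0.09 s/frame
    print(round(to_fps(0.09, "s/frame"), 1))        # 11.1
```

Converted speeds are only a rough basis for comparison, since the studies ran on different hardware and input resolutions.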

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, Y.; Wang, L.; Jiang, W.; Wang, H. Death Detection and Removal in High-Density Animal Farming: Technologies, Integration, Challenges, and Prospects. Agriculture 2025, 15, 2249. https://doi.org/10.3390/agriculture15212249

Han Y, Wang L, Jiang W, Wang H. Death Detection and Removal in High-Density Animal Farming: Technologies, Integration, Challenges, and Prospects. Agriculture. 2025; 15(21):2249. https://doi.org/10.3390/agriculture15212249

Chicago/Turabian Style: Han, Yutong, Liangju Wang, Wei Jiang, and Hongying Wang. 2025. "Death Detection and Removal in High-Density Animal Farming: Technologies, Integration, Challenges, and Prospects" Agriculture 15, no. 21: 2249. https://doi.org/10.3390/agriculture15212249

APA Style: Han, Y., Wang, L., Jiang, W., & Wang, H. (2025). Death Detection and Removal in High-Density Animal Farming: Technologies, Integration, Challenges, and Prospects. Agriculture, 15(21), 2249. https://doi.org/10.3390/agriculture15212249