Abstract

Cotton is one of the world’s most important economic crops, and its yield and quality have a significant impact on the agricultural economy. However, Verticillium wilt of cotton, as a widely spread disease, severely affects the growth and yield of cotton. Due to the typically small and densely distributed characteristics of this disease, its identification poses considerable challenges. In this study, we introduce YOLO-MSPM, a lightweight and accurate detection framework, designed on the YOLOv11 architecture to efficiently identify cotton Verticillium wilt. In order to achieve a lightweight model, MobileNetV4 is introduced into the backbone network. Moreover, a single-head self-attention (SHSA) mechanism is integrated into the C2PSA block, allowing the network to emphasize critical areas of the feature maps and thus enhance its ability to represent features effectively. Furthermore, the PC3k2 module combines pinwheel-shaped convolution (PConv) with C3k2, and the mobile inverted bottleneck convolution (MBConv) module is incorporated into the detection head of YOLOv11. Such adjustments improve multi-scale information integration, enhance small-target recognition, and effectively reduce computation costs. According to the evaluation, YOLO-MSPM achieves precision (0.933), recall (0.920), mAP50 (0.970), and mAP50-95 (0.797), each exceeding the corresponding performance of YOLOv11n. In terms of model lightweighting, the YOLO-MSPM model has 1.773 M parameters, which is a 31.332% reduction compared to YOLOv11n. Its GFLOPs and model size are 5.4 and 4.0 MB, respectively, representing reductions of 14.286% and 27.273%. The study delivers a lightweight yet accurate solution to support the identification and monitoring of cotton Verticillium wilt in environments with limited resources.

1. Introduction

As a major economic crop, cotton plays a crucial role in supplying raw material for the textile industry [1,2]. Verticillium wilt, caused by Verticillium dahliae Kleb., is a common and highly damaging disease during cotton growth, causing wilting, yellowing, and death of the leaves, which leads to reduced cotton yield and quality [3,4]. Members of the genus Verticillium are soil-borne plant pathogens that cause wilt in temperate and subtropical regions and are capable of infecting over 200 plant species [5]. Traditional detection of Verticillium wilt mainly relies on manual visual inspection, which is subjective and inefficient. Establishing an efficient and accurate monitoring system is therefore essential for large-scale detection and for improving the efficiency of disease prevention and control [6,7,8].

In recent years, plant disease recognition based on RGB images and deep learning has become a key direction in agricultural intelligence research. RGB images are easy to obtain and cost-effective, especially when captured rapidly through mobile devices such as smartphones, making this method highly practical and promising for field applications [9,10,11]. The widespread application of machine learning and deep learning technologies has significantly improved the accuracy and efficiency of plant physiological status assessment and disease recognition [12,13,14,15]. Combined with deep learning algorithms, inexpensive and readily available RGB images have yielded high recognition accuracy on various standard datasets [16,17,18,19]. A variety of deep learning paradigms have been explored, including Transformer-based architectures (e.g., DETR and its variants) and segmentation-based methods (e.g., U-Net, DeepLab, Mask R-CNN) [20,21,22,23]. While Transformer models excel at capturing global contextual information and segmentation methods provide pixel-level delineation of lesions, both approaches usually require large training datasets and high computational resources, which constrain their applicability in real-time field scenarios. By contrast, YOLO-based object detection achieves a favorable balance between accuracy, speed, and computational efficiency, making it more suitable for lightweight, real-time applications in agricultural scenarios.

With the development of deep learning, YOLO-based object detection algorithms have demonstrated high accuracy and robustness in disease recognition, offering fast detection speeds and ease of optimization [24,25,26,27,28]. Sun et al. [29] constructed a pest recognition model, YOLO-PEST, based on YOLOv8n, which outperforms the original YOLOv8n model in detection accuracy, with a 3.46% increase in mAP50 and a 7.81% improvement in recall (R). In recent years, researchers have made various improvements to the YOLO model to tackle small-scale or overlapping disease spot recognition tasks in complex environments, such as incorporating attention mechanisms, dynamic convolutional structures, and optimizing loss functions. These optimizations have significantly enhanced the model’s detection accuracy [30,31,32]. Baek et al. [33] introduced an improved AppleStem-YOLO model that incorporates ghost bottlenecks together with global attention to achieve apple stem segmentation. This modification decreased the overall model parameters while boosting efficiency in computation. Xue et al. [34] introduced the PEW-YOLO lightweight detection model to address the issue of low detection efficiency for citrus pests and diseases. By optimizing the PP-LCNet backbone network, introducing the lightweight PGNet backbone, replacing the original C2f module with an integrated multi-scale attention-enhanced C2f_EMA module, and using the Wise-IoU loss function, they improved mAP50 by 1.8% and reduced the model’s parameters by 32.2%, meeting real-time detection requirements. Meng et al. [35] proposed the YOLO-MSM maize leaf disease detection algorithm, which integrates multi-scale variable kernel convolutions, develops the C2F-SK module for optimized feature extraction and representation, and uses MPDIOU to optimize the loss function, achieving increases of 0.66% in precision (P) and 1.61% in R compared to the baseline algorithm.

Cotton Verticillium wilt exhibits a variety of visual features, such as leaf curling, yellowing, and spot distribution, at different growth stages of cotton, making it a typical small-object disease. Therefore, the recognition of cotton Verticillium wilt is easily affected by factors such as light variation, leaf occlusion, and background interference [6,36]. Although recently emerging YOLO variants (such as LCDDN-YOLO [37] and CDDLite-YOLO [38]) have demonstrated promising performance in cotton disease detection, their application for automated detection of cotton Verticillium wilt still faces numerous challenges. Furthermore, most existing studies have not adequately addressed the trade-off between detection accuracy and lightweight deployment, which is crucial for large-scale practical applications.

To address the aforementioned challenges, this study proposes an intelligent disease recognition method based on RGB images of cotton Verticillium wilt. By employing an enhanced YOLO-MSPM model, it achieves precise lesion localization and lightweight deployment under complex background conditions. The YOLO-MSPM model incorporates the MobileNetV4 architecture into its backbone network, enhancing multi-scale feature extraction capabilities while meeting lightweight requirements. To address the diversity of disease features, this study incorporates the single-head self-attention (SHSA) mechanism and pinwheel-shaped convolution (PConv). This leads to the development of novel cross stage partial with single-head self-attention (C2PSHSA) and PC3k2 modules, which enhance the model’s ability to capture disease features, thereby ensuring precise lesion localization. Additionally, mobile inverted bottleneck convolution (MBConv) is introduced in the detection head to further improve the model’s accuracy in predicting lesion bounding boxes. To validate the model’s effectiveness, this study compares YOLO-MSPM with multiple YOLO series models, RetinaNet, and EfficientDet in terms of performance. Through ablation experiments, the contributions of each module are analyzed. With these innovative designs, the YOLO-MSPM model provides an effective solution for efficient identification and lightweight deployment in cotton Verticillium wilt detection.

2. Materials and Methods

2.1. Experimental Dataset

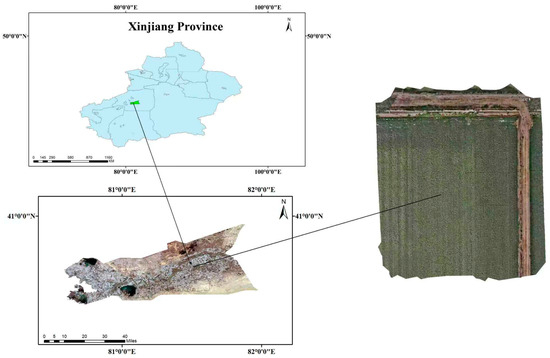

This study’s experimental data were collected from a cotton cultivation base in Shituan, Alar City, Xinjiang, China, as shown in Figure 1. This region is an important cotton-growing area with a typical temperate continental arid climate. In recent years, the local climate has exhibited trends of rising average daily temperatures and increased precipitation [39]. These climatic conditions not only provide a favorable environment for cotton growth but also create conditions conducive to the occurrence of Verticillium wilt. Between August and September 2024, cotton plants infected with Verticillium wilt were photographed from different angles and distances, yielding 694 raw images.

Figure 1.

Geographic location and satellite imagery of the experimental site in Aral City, Xinjiang, China.

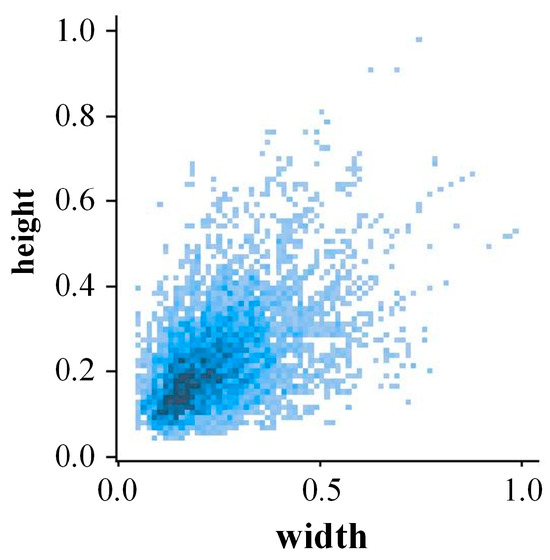

In order to improve the adaptability of the cotton Verticillium wilt recognition and detection model under different environmental conditions, data augmentation was applied to the collected raw images. The data augmentation techniques included random adjustment of lighting intensity, random flipping, translation, and rotation of the images, adding noise, random masking, and image cropping. This resulted in a total of 3470 augmented images, providing rich and comprehensive data support for training the model. Figure 2 shows the distribution of bounding box sizes in the images. From the figure, it is evident that most of the bounding boxes have widths and heights concentrated within a smaller range, indicating the presence of numerous small objects in the image. Additionally, the figure also shows some larger bounding boxes, indicating that the model needs to be capable of handling targets of varying sizes in practical applications.

Figure 2.

Distribution of target bounding box widths and heights.
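The augmentation operations listed above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' pipeline: the function names, the parameter values (brightness factor, noise level, mask size), and the normalized `(x_center, y_center, width, height)` box format are assumptions made for the example. Note that 3470 is exactly five times the 694 raw images, and that geometric operations such as flipping must also transform the bounding boxes.

```python
import numpy as np

def adjust_brightness(img, factor):
    """Scale pixel intensities to mimic brighter or dimmer lighting."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)

def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation `sigma`."""
    noisy = img.astype(np.float32) + rng.normal(0.0, sigma, img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

def random_mask(img, size, rng):
    """Black out one randomly placed size x size square."""
    out = img.copy()
    h, w = img.shape[:2]
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    out[top:top + size, left:left + size] = 0
    return out

def horizontal_flip(img, boxes):
    """Mirror the image and its normalized (x_center, y_center, w, h) boxes."""
    flipped_boxes = boxes.copy()
    flipped_boxes[:, 0] = 1.0 - boxes[:, 0]  # only x_center changes
    return img[:, ::-1].copy(), flipped_boxes

# Synthetic stand-in for a field photograph (hypothetical data).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
boxes = np.array([[0.25, 0.50, 0.10, 0.08]])  # one small lesion box

flipped, flipped_boxes = horizontal_flip(image, boxes)
variants = [
    adjust_brightness(image, 1.3),
    add_gaussian_noise(image, 10.0, rng),
    random_mask(image, 16, rng),
    flipped,
]
print(len(variants), flipped_boxes)
```

Brightness, noise, and masking leave the labels untouched, whereas flipping, translation, rotation, and cropping each need a matching box transform like the one in `horizontal_flip`.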

2.2. YOLO-MSPM Model Construction

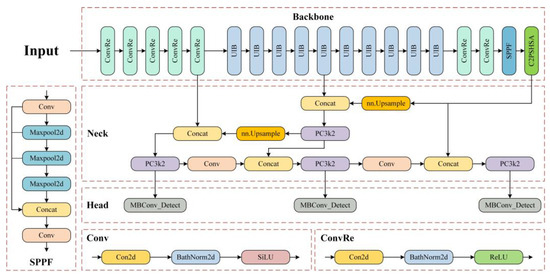

Leveraging the strengths of earlier YOLO versions, YOLOv11 attains higher accuracy and speed in object detection through multiple architectural improvements [40,41]. This study proposes a novel cotton Verticillium wilt detection model, YOLO-MSPM, based on the YOLOv11 framework. The model has undergone a series of structural optimizations to achieve high accuracy and low complexity in the detection of cotton Verticillium wilt. First, the MobileNetV4 architecture is introduced into the backbone network of YOLOv11 to reduce the model’s computational cost while preserving feature extraction efficiency, thus providing a solid foundation for subsequent processing modules. Second, the SHSA mechanism is incorporated into the C2PSA module of YOLOv11. Through single-head attention, the model captures long-range dependencies between features and emphasizes critical regions in the image, which enhances the global semantic features extracted by MobileNetV4. Third, within the neck, the conventional convolution in C3k2 is replaced with PConv. PConv extracts multi-directional feature information through its pinwheel-shaped structure, which enhances the model’s ability to detect complex textures and shapes. The synergy between PConv and SHSA further strengthens the model’s spatial understanding, enabling it to retain long-range feature dependencies while accurately capturing fine-grained local details. Finally, the MBConv module is introduced into the detection head of YOLOv11 to refine the object detection output, which improves the model’s localization accuracy. These four modules optimize the network architecture from complementary directions. The overall structure of the YOLO-MSPM model is shown in Figure 3.

Figure 3.

The structure of the YOLO-MSPM model.

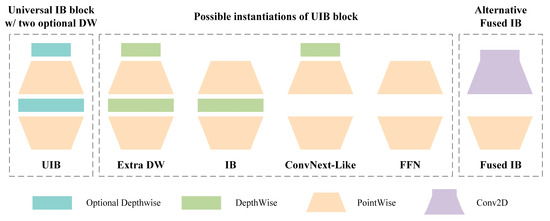

2.3. Lightweight Backbone Network MobileNetV4

MobileNetV4 is the latest generation of lightweight neural network architectures, specifically optimized for mobile devices [42]. The core module of this architecture, the universal inverted bottleneck (UIB), is an extension of the traditional inverted bottleneck (IB). It enhances network flexibility and adaptability by introducing two optional depthwise operations [43,44]. This design allows the network to choose whether to include additional depthwise operations at different stages, thereby improving feature extraction capability while maintaining computational efficiency. The UIB module, depicted in Figure 4, supports multiple instantiations such as Extra DW, IB, ConvNext-Like, and FFN. Such a design allows the backbone network to flexibly adapt to various optimization tasks, thus enabling efficient feature extraction. Furthermore, to improve the accuracy of object detection, the MobileNetV4 architecture in this study incorporates a multi-scale feature extraction mechanism. This mechanism extracts features at different levels of the backbone network and fuses them in the neck, enabling precise detection of targets.

Figure 4.

The structure of the UIB module in MobileNetV4.
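The four UIB instantiations differ only in which of the two optional depthwise convolutions are switched on: one placed before the expansion layer and one placed between the expansion and projection layers. The sketch below encodes that choice and counts convolution weights for each variant. The channel width, expansion ratio, and kernel size are illustrative assumptions, and normalization and bias terms are ignored.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class UIBVariant:
    name: str
    start_dw: bool   # depthwise conv before the 1x1 expansion
    middle_dw: bool  # depthwise conv between expansion and projection

VARIANTS = [
    UIBVariant("Extra DW", start_dw=True, middle_dw=True),
    UIBVariant("IB", start_dw=False, middle_dw=True),
    UIBVariant("ConvNext-Like", start_dw=True, middle_dw=False),
    UIBVariant("FFN", start_dw=False, middle_dw=False),
]

def uib_weights(variant, c_in, c_out, expand=4, k=3):
    """Count convolution weights of one UIB block (no norm layers, no biases)."""
    c_mid = c_in * expand
    total = c_in * c_mid + c_mid * c_out  # 1x1 expansion + 1x1 projection
    if variant.start_dw:
        total += k * k * c_in             # depthwise on the narrow input
    if variant.middle_dw:
        total += k * k * c_mid            # depthwise on the expanded features
    return total

for v in VARIANTS:
    print(f"{v.name:14s} {uib_weights(v, c_in=32, c_out=32)}")
```

Under these assumed sizes the pointwise layers dominate the weight count, so enabling either depthwise operation adds spatial mixing at a comparatively small cost.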

2.4. Improved C2PSA Module

The C2PSA module combines convolutional layers and spatial attention mechanisms to perform parallel extraction of local detail features and global spatial information. The convolutional layers capture local details within the feature maps, while the spatial attention mechanism dynamically adjusts the weight of each position in the feature map, directing the model’s focus toward target regions [45]. This parallel processing approach not only retains the fine details of local features but also enhances the model’s understanding of global context through the spatial attention mechanism, thereby improving the accuracy of object detection.

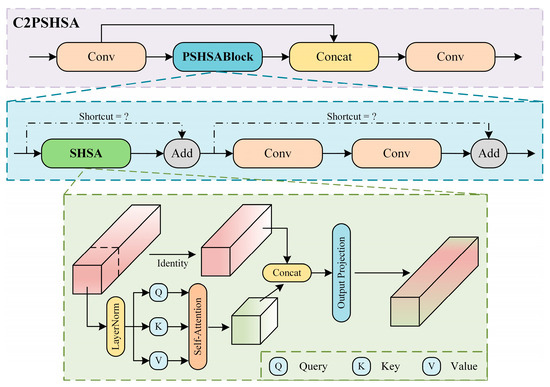

In this study, the SHSA mechanism is introduced into the C2PSA module, replacing the original spatial attention mechanism and resulting in the proposed C2PSHSA module. The structure of the C2PSHSA module is shown in Figure 5. The SHSA mechanism employs a single-head attention strategy, which avoids the redundant computation often associated with multi-head attention, and further reduces computational complexity by applying attention to only a subset of the channels [46]. By integrating the SHSA mechanism into the C2PSA module, the model can achieve higher computational efficiency while maintaining high accuracy. Additionally, it reduces memory usage and is better able to capture global dependencies within the feature map, thereby improving feature representation quality. These properties make the C2PSHSA module well suited to resource-constrained devices while preserving performance in complex visual tasks.

Figure 5.

Structure diagram of the C2PSHSA module.
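The idea of single-head attention applied to only part of the channels can be sketched in NumPy as follows. This is a simplified illustration rather than the C2PSHSA implementation: the channel split (8 of 32 channels), the query/key dimension, the random weights, and the omission of normalization and activation layers are all assumptions made for the example.

```python
import numpy as np

def softmax(a, axis=-1):
    """Numerically stable softmax."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def single_head_partial_attention(x, wq, wk, wv, wo, attn_channels):
    """x: (N, C) tokens, i.e. the H*W positions of a flattened feature map.

    Only the first `attn_channels` channels pass through attention;
    the remaining channels are carried over unchanged.
    """
    x_attn, x_rest = x[:, :attn_channels], x[:, attn_channels:]
    q, k, v = x_attn @ wq, x_attn @ wk, x_attn @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (N, N), one head only
    mixed = attn @ v                                # (N, attn_channels)
    merged = np.concatenate([mixed, x_rest], axis=1)
    return merged @ wo, attn                        # final channel projection

rng = np.random.default_rng(0)
n_tokens, channels, attn_channels, qk_dim = 16, 32, 8, 4
x = rng.standard_normal((n_tokens, channels))
wq = rng.standard_normal((attn_channels, qk_dim))
wk = rng.standard_normal((attn_channels, qk_dim))
wv = rng.standard_normal((attn_channels, attn_channels))
wo = rng.standard_normal((channels, channels))

y, attn = single_head_partial_attention(x, wq, wk, wv, wo, attn_channels)
print(y.shape, attn.shape)
```

Because the N x N attention map is computed once, from a narrow slice of the channels, its cost is small compared with multi-head attention over all channels, while every position can still attend to every other position.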

2.5. Improved C3k2 Module

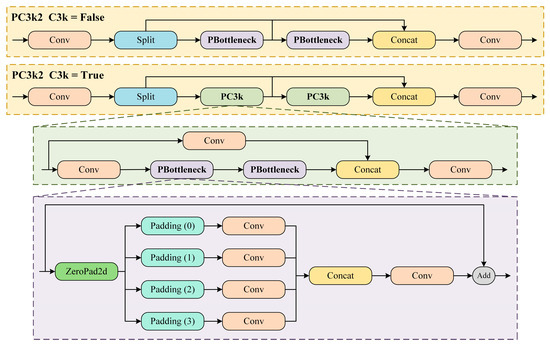

The C3k2 module, based on the CSP structure, replaces the traditional larger convolution kernels with two smaller ones, effectively reducing model complexity and computational demands without compromising feature extraction performance [47]. This design not only improves the efficiency of feature extraction but also enhances the model’s ability to detect targets of different scales, particularly excelling in small target detection.

In this study, the PConv is introduced to replace the original regular convolution in C3k2, resulting in the new PC3k2 module, as shown in Figure 6. The PConv, with its unique convolution kernel design, can better capture the underlying features of targets, which strengthens the model’s performance on small object detection [48]. Additionally, the design of PConv significantly enlarges the receptive field while adding only a small number of parameters. This allows the PC3k2 module to further optimize the detection performance for targets of various scales, especially small targets, by capturing the global information of the target more comprehensively. The PC3k2 also retains the flexibility of the C3k2 module, making it adjustable and optimized for different detection task requirements, ensuring effective detection and processing of targets at various scales.

Figure 6.

Structure diagram of the PC3k2 module.

2.6. Improved Detection Head

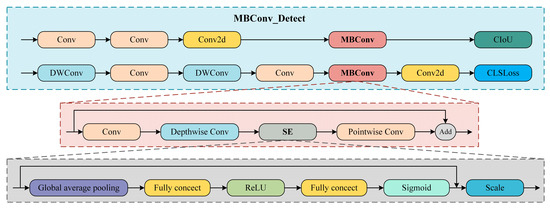

YOLOv11 introduces two depthwise separable convolution (DWConv) layers into the detection head. Specifically, the regression head employs conventional convolution operations, while the classification head utilizes DWConv [49]. The regression head is mainly responsible for predicting the bounding box coordinates of objects, which requires high localization accuracy, so conventional convolutions are used to capture finer feature information. The classification head, on the other hand, focuses on identifying object categories, and DWConv is well suited to this task because of its computational efficiency and lower parameter count, which help improve the model’s speed and reduce its complexity. This design enables YOLOv11 to optimize computational efficiency while maintaining high detection accuracy, particularly when handling large-scale datasets.

To further optimize the model’s detection performance, this study incorporates the MBConv structure into the classification and regression heads of the YOLOv11 detection head, thereby constructing a new MBConv_Detect module, whose architecture is shown in Figure 7. MBConv enhances computational efficiency by combining depthwise convolutions with pointwise convolutions. The structure first expands the channel numbers of the feature map, then applies depthwise convolution, and finally reduces the channel numbers through pointwise convolution, thereby reducing computation while maintaining network performance [50]. Furthermore, the MBConv architecture incorporates the squeeze-and-excitation (SE) module, which enhances the network’s expressiveness by adaptively recalibrating the channel responses of feature maps. The SE module performs global average pooling on feature maps and produces channel attention weights via two fully connected layers, thereby enhancing the representational power of key features [51]. By integrating MBConv into the YOLOv11 detection head to form the MBConv_Detect module, the model becomes more capable of adapting to input of varying sizes and effectively predicting the location of target bounding boxes, thus improving the model’s generalization ability and the accuracy of object detection.

Figure 7.

Structure diagram of the MBConv_Detect module.
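The squeeze-and-excitation step described above (global average pooling followed by two fully connected layers that produce per-channel weights) can be sketched in NumPy as follows. The channel count, the reduction ratio of 4, the ReLU and sigmoid activations, and the random weights are assumptions for illustration. The sketch covers only the SE recalibration, not the full MBConv_Detect head.

```python
import numpy as np

def squeeze_excite(x, w1, w2):
    """x: (C, H, W) feature map; w1: (C // r, C); w2: (C, C // r)."""
    z = x.mean(axis=(1, 2))                      # squeeze: global average pool -> (C,)
    h = np.maximum(w1 @ z, 0.0)                  # first FC layer + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h)))          # second FC layer + sigmoid -> (C,)
    return x * s[:, None, None], s               # rescale every channel by its weight

rng = np.random.default_rng(0)
channels, reduction = 8, 4
x = rng.standard_normal((channels, 4, 4))
w1 = rng.standard_normal((channels // reduction, channels))
w2 = rng.standard_normal((channels, channels // reduction))

y, weights = squeeze_excite(x, w1, w2)
print(weights.round(3))
```

Each channel is multiplied by a single scalar between 0 and 1, so the module can suppress uninformative channels and emphasize informative ones at the cost of two small matrix products.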

2.7. Experimental Setup

2.7.1. Experimental Environment Setup

The hardware used for the experiments in this study includes a computer equipped with an NVIDIA RTX A2000 GPU, which has a memory capacity of 12 GB, and is paired with an Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz processor and 30GB of RAM. For the software setup, Ubuntu 20.04 served as the operating system, and the experimental framework was implemented using PyTorch 2.1.1, Python 3.10, along with CUDA 11.8 and cuDNN 8.

During model training, the number of iterations was set to 300. The initial learning rate was 0.01, with a learning rate decay factor of 0.01. This factor gradually reduces the learning rate during training to prevent overfitting caused by excessively high learning rates in the later stages. Additionally, the momentum coefficient was set to 0.937 to accelerate model convergence and enhance training stability. The weight decay coefficient was set to 0.0005, serving as a regularization method to constrain the model’s weights and prevent overfitting. The experiment also included 3 warm-up epochs to ensure a smooth start for the model during the initial stages of training.
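To make the effect of these hyperparameters concrete, the sketch below computes a learning rate per epoch. It rests on several assumptions that the text does not confirm: that the 300 "iterations" are epochs, that the decay factor of 0.01 is the ratio of final to initial learning rate, that the decay is linear, and that warm-up is a simple linear ramp over the first 3 epochs. The dictionary keys are hypothetical names chosen for this example.

```python
# Values taken from the text; the interpretation of each is an assumption.
CONFIG = {
    "epochs": 300,
    "initial_lr": 0.01,
    "final_lr_fraction": 0.01,  # assumed meaning of the "decay factor"
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "warmup_epochs": 3,
}

def learning_rate(epoch, cfg=CONFIG):
    """Assumed schedule: linear warm-up, then linear decay to initial_lr * final_lr_fraction."""
    progress = min(epoch / cfg["epochs"], 1.0)
    frac = cfg["final_lr_fraction"]
    lr = cfg["initial_lr"] * ((1.0 - progress) * (1.0 - frac) + frac)
    if epoch < cfg["warmup_epochs"]:
        lr *= (epoch + 1) / cfg["warmup_epochs"]  # ramp up during warm-up
    return lr

for epoch in (0, 1, 2, 3, 150, 300):
    print(epoch, f"{learning_rate(epoch):.6f}")
```

Under these assumptions the learning rate ends at 0.01 × 0.01 = 0.0001, two orders of magnitude below its starting value.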

2.7.2. Evaluation Metrics

To evaluate the model’s performance in recognition and detection, this study used P, R, mAP50, and mAP50-95 as metrics to measure detection effectiveness. Specifically, P is the proportion of predicted positive samples that are correct, while R is the proportion of actual positive samples that the model correctly detects. mAP50 refers to the mean average precision (AP) at an intersection over union (IoU) threshold of 0.5, while mAP50-95 is the mean AP averaged across IoU thresholds ranging from 0.5 to 0.95. The formulas for calculating the different evaluation metrics are as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\,dR$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $TP$ represents true positives, $FP$ represents false positives, $FN$ represents false negatives, and $N$ denotes the number of categories.
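As an illustration of how these metrics fit together, the sketch below computes the IoU of two boxes and the AP of a ranked list of detections as the area under the precision-recall curve. It is a generic, simplified version: the `(x1, y1, x2, y2)` box format, the all-point interpolation, and the toy inputs are assumptions, and the evaluation code used in the experiments may differ in detail.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(is_true_positive, num_ground_truth):
    """AP for one class.

    `is_true_positive` lists the detections in descending confidence order;
    an entry is True when the detection matches a ground-truth box at the
    chosen IoU threshold (0.5 for mAP50).
    """
    tp = fp = 0
    precisions, recalls = [], []
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the resulting step-shaped P(R) curve.
    ap, previous_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - previous_recall) * p
        previous_recall = r
    return ap

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(average_precision([True, True, False, True], num_ground_truth=4))
```

mAP50 averages this AP over all categories at an IoU threshold of 0.5, and mAP50-95 additionally averages it over the range of IoU thresholds.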

3. Results and Discussion

3.1. Performance Comparison of the Model Before and After Improvements

This study aimed to improve the detection performance of the YOLOv11 model for cotton Verticillium wilt recognition by enhancing its ability to detect targets at different scales. Table 1 compares the detection accuracy and computational complexity of the model before and after the improvements. The improved YOLO-MSPM model achieves a P of 0.933 and an R of 0.920, and improves on YOLOv11n by 1.891% in mAP50 and 3.238% in mAP50-95. In terms of model complexity, the YOLO-MSPM model has only 1.773 M parameters, 5.4 GFLOPs, and a model size of 4.0 MB, all smaller than those of the YOLOv11n model. Therefore, the YOLO-MSPM model outperforms the YOLOv11n model in both detection accuracy and model size.

Table 1.

Comparison of detection performance before and after model improvements.

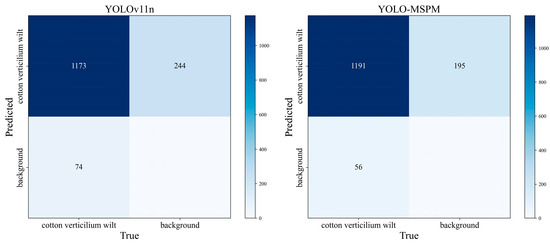

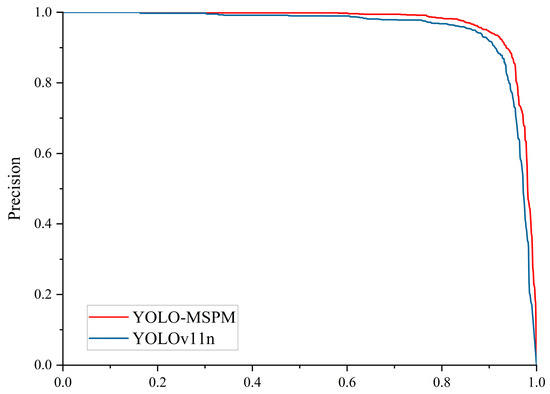

Figure 8 shows the confusion matrix results for the YOLOv11n and YOLO-MSPM models. In the confusion matrix, the values on the diagonal represent the number of correctly predicted samples, where higher values indicate better recognition accuracy. The off-diagonal values indicate misclassified samples, and lower values indicate higher detection accuracy. From Figure 8, it can be seen that the YOLO-MSPM model correctly identified 1191 cotton Verticillium wilt samples, missed 56 samples, and falsely detected 195 samples. In comparison, the YOLOv11n model correctly predicted 1173 samples, with 74 missed samples and 244 false positives. Figure 9 compares the PR curves of the models before and after improvements. At the same R, the YOLO-MSPM model outperformed the YOLOv11n model in terms of P. Overall, the YOLO-MSPM model demonstrates higher detection accuracy with fewer misclassifications than the YOLOv11n model.

Figure 8.

Confusion matrix of the models before and after improvements.

Figure 9.

PR Curve comparison of the models before and after improvements.
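The confusion-matrix counts quoted above can be turned into precision and recall directly, which makes the comparison between the two models explicit. The sketch treats correctly identified samples as true positives, missed samples as false negatives, and false detections as false positives. These values describe the single confidence threshold at which the confusion matrix was drawn (an assumption about how it was generated), so they need not coincide with the P and R reported in Table 1.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from raw detection counts."""
    return tp / (tp + fp), tp / (tp + fn)

# Counts quoted in the text for Figure 8.
counts = {
    "YOLO-MSPM": {"tp": 1191, "fn": 56, "fp": 195},
    "YOLOv11n": {"tp": 1173, "fn": 74, "fp": 244},
}

results = {name: precision_recall(**c) for name, c in counts.items()}
for name, (p, r) in results.items():
    print(f"{name}: precision={p:.3f}, recall={r:.3f}")
```

Both rows sum to the same 1247 ground-truth lesions (true positives plus misses), and YOLO-MSPM comes out ahead on both quantities at this operating point.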

3.2. Comparison of Detection Performance Across Different Models

In order to evaluate the detection performance of the YOLO-MSPM model comprehensively, this study compared its performance with various n-scale YOLO series models, as well as RetinaNet and EfficientDet models. The YOLO series models include YOLOv5n, YOLOv6n, YOLOv8n, and YOLOv10n. These YOLO models are widely used in the field of object detection and are considered representative in terms of performance and practical deployment. Additionally, RetinaNet and EfficientDet, as widely applied single-stage detection models, excel in balancing accuracy and speed. By conducting a comparative analysis with these established models, the performance advantages of YOLO-MSPM can be more thoroughly demonstrated.

Table 2 presents a comparison of detection accuracy and computational complexity across different models. The results indicate that YOLOv5n has a relatively small parameter count, GFLOPs, and model size (2.503 M, 7.1, and 5.3 MB, respectively), but its detection accuracy is relatively low. Specifically, its mAP50-95 is only 0.689, which is 13.551% lower than that of YOLO-MSPM. While YOLOv6n shows an improvement in accuracy over YOLOv5n, it also incurs higher computational costs. YOLOv10n strikes a balance between accuracy and computational cost, achieving an R of 0.891 and an mAP50 of 0.947, which are only 3.152% and 2.371% lower than those of YOLO-MSPM, respectively. However, its parameter count and GFLOPs are 52.002% and 51.852% higher than those of YOLO-MSPM, so it remains considerably less efficient. Furthermore, the detection performance of the RetinaNet model lags significantly behind YOLO-MSPM, with an mAP50-95 of only 0.423, considerably lower than that of the YOLO models and YOLO-MSPM. While the EfficientDet model achieves P and mAP50 values of 0.868, its parameter count is 3.853 M and its model size is 8.2 MB, which are 2.08 M and 4.2 MB larger than those of YOLO-MSPM, respectively.
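The relative gaps quoted in this comparison are expressed as a percentage of the YOLO-MSPM value. The short check below reproduces them from the figures given in the text; the helper name is hypothetical.

```python
def percent_lower(value, reference):
    """How far `value` falls below `reference`, as a percentage of `reference`."""
    return (reference - value) / reference * 100.0

# YOLO-MSPM results reported in the paper.
YOLO_MSPM = {"R": 0.920, "mAP50": 0.970, "mAP50-95": 0.797}

gaps = {
    "YOLOv5n mAP50-95": percent_lower(0.689, YOLO_MSPM["mAP50-95"]),
    "YOLOv10n R": percent_lower(0.891, YOLO_MSPM["R"]),
    "YOLOv10n mAP50": percent_lower(0.947, YOLO_MSPM["mAP50"]),
}
for name, gap in gaps.items():
    print(f"{name}: {gap:.3f}% lower than YOLO-MSPM")

# Absolute differences quoted for EfficientDet (parameters in M, size in MB).
extra_params = round(3.853 - 1.773, 3)
extra_size = round(8.2 - 4.0, 1)
print(extra_params, extra_size)
```

The same convention (difference divided by the reference model's value) applies to the percentage reductions in parameters, GFLOPs, and model size reported elsewhere in the paper.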

Table 2.

Comparison of detection performance across different models.

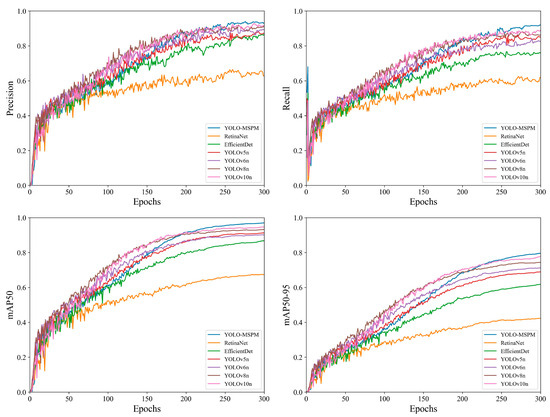

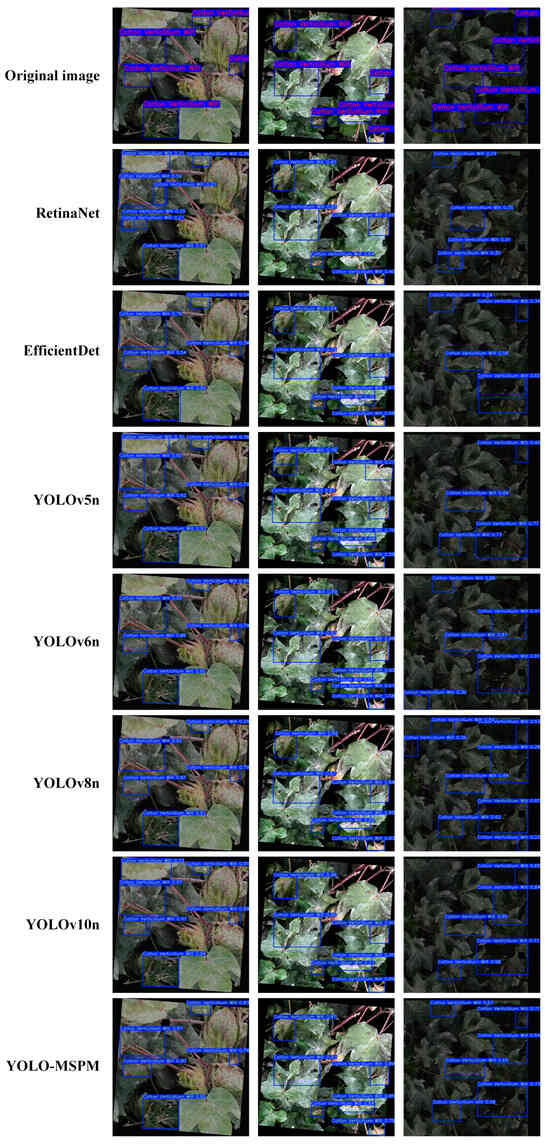

To further compare the detection effectiveness of the different models, the study plotted the precision iteration curves for each model, as shown in Figure 10. Furthermore, three image samples were randomly selected to qualitatively compare the detection outcomes of the models, as illustrated in Figure 11. As shown in Figure 10, the accuracy of all models exhibits an upward trend as the number of training iterations increases. Specifically, the YOLO-MSPM model demonstrates relatively low performance across all metrics during the initial training phase but ultimately achieves the highest level as training iterations progress. This “initially low, then high” trend is primarily attributed to the deeper architecture and the incorporation of attention mechanisms within the YOLO-MSPM model, which require a longer period during early training to complete parameter optimization and effective feature learning. In contrast, the performances of YOLOv5n and YOLOv6n are quite similar, while YOLOv8n and YOLOv10n show better performance than YOLOv5n and YOLOv6n but still have lower final detection accuracy than YOLO-MSPM. The precision iteration curves of RetinaNet and EfficientDet models consistently fall below those of the YOLO series models and YOLO-MSPM, indicating relatively lower detection performance. Furthermore, as shown in Figure 11, YOLOv5n, YOLOv6n, YOLOv8n, YOLOv10n, as well as RetinaNet and EfficientDet, exhibit some false positives and missed detections, whereas the YOLO-MSPM model has relatively fewer errors. In summary, the YOLO-MSPM model outperforms other comparative models in both detection accuracy and computational cost, providing more accurate identification of cotton Verticillium wilt and reducing false positives and false negatives.

Figure 10.

Comparison of precision iteration curves across different models.

Figure 11.

Comparison of detection performance among different models.

3.3. Validation of the Effectiveness of Different Modules

3.3.1. Validation of the MobileNetV4 Architecture

In this study, the YOLOv11n model was made more lightweight by introducing the MobileNetV4 architecture into its backbone network. Table 3 shows the changes in accuracy and computational costs before and after the introduction of the MobileNetV4 architecture. The results show that after incorporating the MobileNetV4 architecture, the model’s recognition accuracy decreased. Specifically, P decreased from 0.920 to 0.905, R decreased from 0.900 to 0.877, and mAP50 and mAP50-95 decreased to 0.947 and 0.736, respectively. However, in terms of computational cost, after introducing MobileNetV4, the model’s parameters decreased by 28.854%, GFLOPs decreased by 19.048%, and model size was reduced by 27.273%. These changes indicate that while the MobileNetV4 architecture offers significant advantages in reducing computational costs, it negatively impacts the model’s detection accuracy. MobileNetV4 is designed for efficient computation in resource-constrained environments: it reduces both computational load and parameter count, but this weakens the model’s ability to extract features and therefore lowers accuracy.

Table 3.

Experimental results before and after introducing the MobileNetV4 architecture.

3.3.2. Validation of the C2PSHSA Module

In order to improve the detection accuracy of YOLOv11n, the SHSA mechanism was integrated into the C2PSA structure. Table 4 presents the model’s accuracy and computational cost before and after the introduction of the C2PSHSA module. The data from the table show that after incorporating the C2PSHSA module, the model’s P increased by 3.804%, R improved by 3.889%, and the mAP50 and mAP50-95 increased by 2.521% and 6.606%, respectively. Additionally, the parameters, GFLOPs, and model size did not show significant changes. These results suggest that the C2PSHSA module enhances detection accuracy without a substantial increase in computational cost. The C2PSHSA module effectively leverages the feature information capture and fusion capabilities of the SHSA mechanism to guide the model toward focusing on key target regions. This enables efficient extraction of detailed information from objects at different scales while reducing the interference of background noise on detection results, thereby enhancing the model’s detection accuracy for objects of varying sizes.

Table 4.

Experimental results before and after introducing the C2PSHSA module.

3.3.3. Validation of the PC3k2 Module

Table 5 displays the experimental results before and after the introduction of the PC3k2 module. The experimental data indicate that the inclusion of the PC3k2 module resulted in a significant improvement in the model’s accuracy, particularly in R and mAP50-95, which increased by 5.000% and 7.642%, respectively. The parameters are 2.496 M, GFLOPs are 6.3, and the model size is 5.3 MB, which shows no significant change compared to YOLOv11n. These results suggest that the PC3k2 module not only enhances detection accuracy but also ensures that the computational cost of the model remains largely unaffected.

Table 5.

Experimental results before and after introducing the PC3k2 module.

The PC3k2 module combines the advantages of C3k2 and PConv, allowing it to more accurately extract central features of the target and background information, significantly enhancing the model’s feature extraction capabilities while avoiding a noticeable increase in computational cost. PConv, with its unique pinwheel-shaped structure, is more effective at enhancing the contrast between the target and background. This design achieves a larger receptive field with fewer parameters, while also suppressing cluttered background signals, ultimately improving detection accuracy.

3.3.4. Validation of the MBConv Detect Module

Table 6 presents a comparison of the YOLOv11n model’s recognition performance before and after incorporating the MBConv Detect module. The results show that after introducing the MBConv Detect module, the P of the YOLOv11n model increased to 0.946, mAP50 improved by 0.027, and R and mAP50-95 increased by 5.444% and 7.254%, respectively. These results indicate that the MBConv Detect module provides a significant advantage in enhancing the model’s recognition capabilities. However, the inclusion of the MBConv module also led to an increase in computational cost. Specifically, the parameter size increased by 0.055 M, GFLOPs increased by 0.3, and the model size increased by 0.2 MB. The MBConv module employs DWConv and the SE mechanism, which helps capture complex features in images more efficiently. However, since the MBConv module was added to both the classification and regression heads in the detection head, this contributed to an increase in computational costs. Despite this, considering the significant accuracy improvements brought by the MBConv module, this increase in cost is deemed acceptable.

Table 6. Experimental results before and after introducing the MBConv Detect module.
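The cost trade-off described above can be illustrated with a rough weight count. The sketch below compares a standard k x k convolution with a depthwise-separable one (a depthwise k x k convolution followed by a pointwise 1 x 1 convolution) and adds the two fully connected layers of an SE block. The channel width (64), kernel size (3), and SE reduction ratio (4) are illustrative assumptions, not values taken from the YOLO-MSPM detection head, and biases and normalization layers are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k conv followed by a pointwise 1 x 1 conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


def se_params(channels: int, reduction: int = 4) -> int:
    """Weights in the SE bottleneck (two fully connected layers, bias ignored)."""
    hidden = max(1, channels // reduction)
    return channels * hidden + hidden * channels


# Illustrative sizes only; NOT the actual YOLO-MSPM head configuration.
C_IN, C_OUT, K = 64, 64, 3

std = standard_conv_params(C_IN, C_OUT, K)
dws = depthwise_separable_params(C_IN, C_OUT, K)
se = se_params(C_OUT)

print(f"standard conv:            {std} weights")
print(f"depthwise-separable conv: {dws} weights")
print(f"SE block:                 {se} weights")
```

Under these assumed sizes, the depthwise-separable layer needs far fewer weights than a standard convolution of the same width. This is consistent with the explanation that the added cost comes from placing blocks in both branches of the head, not from DWConv itself.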

3.3.5. Ablation Experiment

To comprehensively evaluate the synergistic effects of the modules in the YOLO-MSPM model, this study conducted an ablation experiment with different combinations of modules. Table 7 presents the detection results. In the two-module experiments, every pairwise combination of the C2PSHSA, PC3k2, and MBConv_Detect modules improved the accuracy of the YOLOv11n model. For example, when both the C2PSHSA and MBConv_Detect modules were introduced, the model’s R increased to 0.958 and mAP50-95 increased to 0.845, while the parameter count increased by 0.023 M and GFLOPs by 0.3. When the C2PSHSA and PC3k2 modules were used together, the model’s P was 0.955 and mAP50-95 was 0.835, with no increase in computational cost. When all three modules (C2PSHSA, PC3k2, and MBConv_Detect) were used together, the model’s performance reached its peak: relative to YOLOv11n, P and R increased by 5.217% and 5.889%, respectively, and mAP50 and mAP50-95 increased by 3.571% and 10.363%.

Table 7. Ablation experiment results.
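An ablation grid over the four components named in the text can be enumerated as in the following sketch. It assumes that every subset of modules is of interest; the study reports selected combinations, so the runs behind Table 7 do not necessarily cover all of the configurations generated here.

```python
from itertools import combinations

# Module names as used in the text; the exact set of runs in Table 7 may differ.
MODULES = ("MobileNetV4", "C2PSHSA", "PC3k2", "MBConv_Detect")


def ablation_configs(modules):
    """Yield every subset of modules, from the bare baseline to the full model."""
    for size in range(len(modules) + 1):
        for combo in combinations(modules, size):
            yield combo


configs = list(ablation_configs(MODULES))
for combo in configs:
    label = " + ".join(combo) if combo else "YOLOv11n (baseline)"
    print(label)
```

The empty tuple stands for the unmodified YOLOv11n baseline, and the final tuple, containing all four modules, corresponds to the full YOLO-MSPM configuration.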

The introduction of the MobileNetV4 architecture significantly enhanced the model’s lightweight characteristics. In the three-module combination experiments, MobileNetV4 effectively reduced the model’s parameters and computational complexity. For example, when MobileNetV4 was combined with the C2PSHSA and PC3k2 modules, accuracy dropped slightly: P and R decreased by 0.019 and 0.026, respectively, while mAP50 was 0.968 and mAP50-95 was 0.778. In exchange, the model’s parameter count was reduced to 1.718 M, a decrease of 30.276%, while GFLOPs decreased by 1.2 and the model size decreased by 1.5 MB.

When MobileNetV4 was combined with the C2PSHSA, PC3k2, and MBConv_Detect modules, the resulting YOLO-MSPM model achieved a balance between accuracy and computational cost. Compared to using only the C2PSHSA, PC3k2, and MBConv_Detect modules, R decreased slightly by 3.463% and mAP50 by 1.623%. However, the parameter count was reduced to 1.773 M, a decrease of 29.615%, while GFLOPs and model size were reduced by 18.182% and 25.926%, respectively. In conclusion, through these structural adjustments and optimizations, the YOLO-MSPM model trades a small loss in accuracy for a substantial gain in efficiency in the task of cotton Verticillium wilt detection. It demonstrates strong adaptability and can accurately identify the disease, providing an efficient and precise technological approach for its prevention and control.

4. Conclusions

This study developed a lightweight cotton Verticillium wilt detection model, YOLO-MSPM, based on the YOLOv11n model. The model integrates a lightweight feature extraction network, MobileNetV4, into the backbone network and replaces the original attention mechanism in the C2PSA module with the SHSA mechanism. In addition, PConv was introduced into the C3k2 module, and the MBConv module was added to both the classification and regression branches of the YOLOv11n detection head. These optimizations improved the model’s accuracy in detecting cotton Verticillium wilt and significantly reduced the computational burden. The improved YOLO-MSPM model achieved a P of 0.933, R of 0.920, mAP50 of 0.970, and mAP50-95 of 0.797. Compared to YOLOv11n, these values represent improvements of 1.413%, 2.222%, 1.891%, and 3.238%, respectively, and the model also outperformed the YOLOv5n, YOLOv6n, YOLOv8n, and YOLOv10n models, as well as the RetinaNet and EfficientDet models. YOLO-MSPM has only 1.773 M parameters, a 31.332% reduction compared to YOLOv11n, with GFLOPs of 5.4 and a model size of 4.0 MB.
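The percentages quoted above are relative changes, so the YOLOv11n values they imply can be recovered by simple arithmetic. The following sketch does this for the figures stated in this section. The helper name `value_before_change` is hypothetical, and the implied baselines are derived values, not numbers quoted from the comparison tables.

```python
def value_before_change(after: float, pct_change: float) -> float:
    """Return the value that, changed by pct_change percent, gives `after`.

    Use a positive pct_change for an increase and a negative one for a reduction.
    """
    return after / (1.0 + pct_change / 100.0)


# (YOLO-MSPM value, percent change vs. YOLOv11n) as quoted in the conclusions.
reported = {
    "P": (0.933, 1.413),
    "R": (0.920, 2.222),
    "mAP50": (0.970, 1.891),
    "mAP50-95": (0.797, 3.238),
    "Params (M)": (1.773, -31.332),
}

# Implied YOLOv11n baselines, rounded to three decimals.
implied_baseline = {
    name: round(value_before_change(after, pct), 3)
    for name, (after, pct) in reported.items()
}

for name, value in implied_baseline.items():
    print(f"{name}: {value}")
```

A check of this kind helps confirm that the absolute values and the percentage changes reported for the two models are mutually consistent.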

The results of this study demonstrate that the YOLO-MSPM model performs well in identifying cotton Verticillium wilt. Future research will explore the adaptability and performance of the YOLO-MSPM model on datasets collected from different regions with varying climate and soil conditions, diverse shooting environments, and multiple types of acquisition devices. Additionally, the model architecture will be further optimized to enhance its target recognition capabilities in complex environments, enabling stable and efficient operation in diverse and dynamic natural settings. Furthermore, the model will be continuously improved with reference to current state-of-the-art plant disease detection methods and deployed in real-world field environments to systematically evaluate its detection performance and applicability under practical conditions.

Author Contributions

Conceptualization, X.Z. and Y.S.; methodology, L.X., F.W. and J.C.; software, F.W. and Y.S.; validation, X.Z., F.W. and J.C.; formal analysis, J.C. and Y.S.; investigation, L.X., F.W., X.L. and T.L.; resources, F.W., X.L. and X.Z.; data curation, X.L., X.Y. and T.L.; writing—original draft preparation, X.Z. and J.C.; writing—review and editing, Y.S. and L.X.; visualization, L.X., F.W. and X.Y.; supervision, X.Z., J.C. and Y.S.; project administration, L.X., Y.S. and J.C.; funding acquisition, L.X. and Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Laboratory of Tarim Oasis Agriculture (Tarim University), Ministry of Education (No: TOlab2025001), the Fundamental Research Funds for the Central Universities (No. KYLH2025019), the Henan University of Science and Technology Young Backbone Teacher Project (No. 4004-13450010), and Key R&D and Promotion Projects in Henan Province (Science and Technology Development) (No. 222102110452).

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

We would like to express our gratitude to the cotton plantation base located in Shituan, Alar City, Xinjiang Province, for providing the data used in this study.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).