RFA-YOLOv8: A Robust Tea Bud Detection Model with Adaptive Illumination Enhancement for Complex Orchard Environments

Abstract

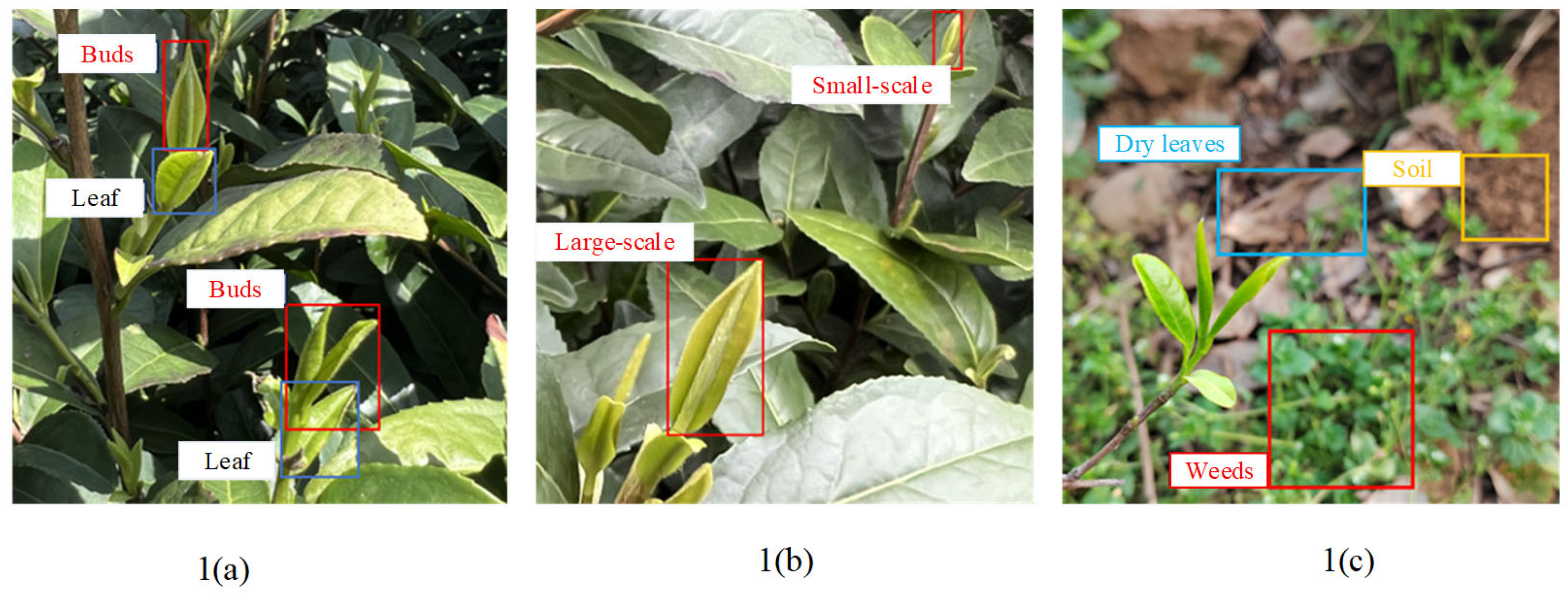

1. Introduction

2. Related Works

3. Proposed Methods

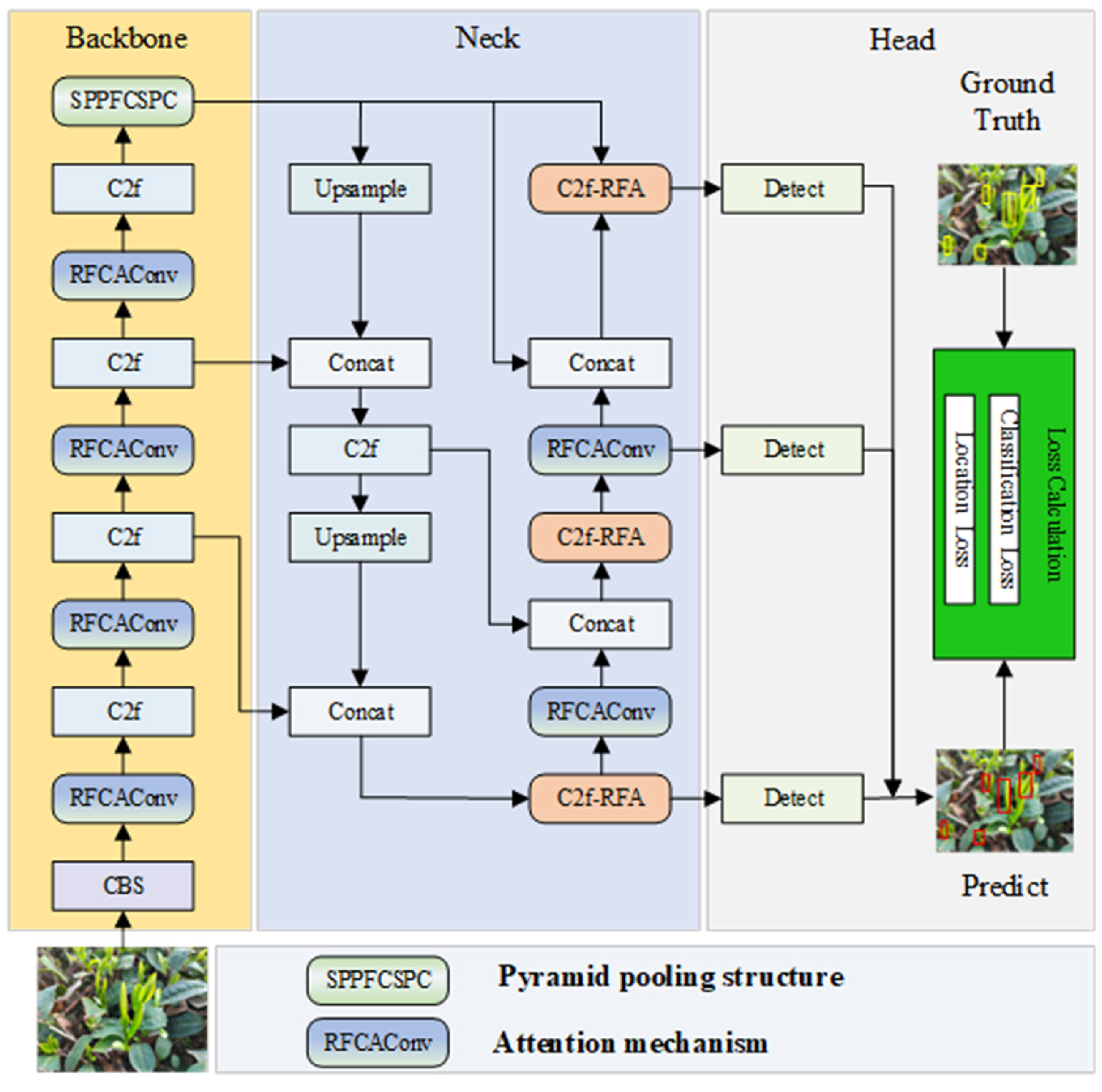

3.1. Overall Model Structure of RFA-YOLOv8

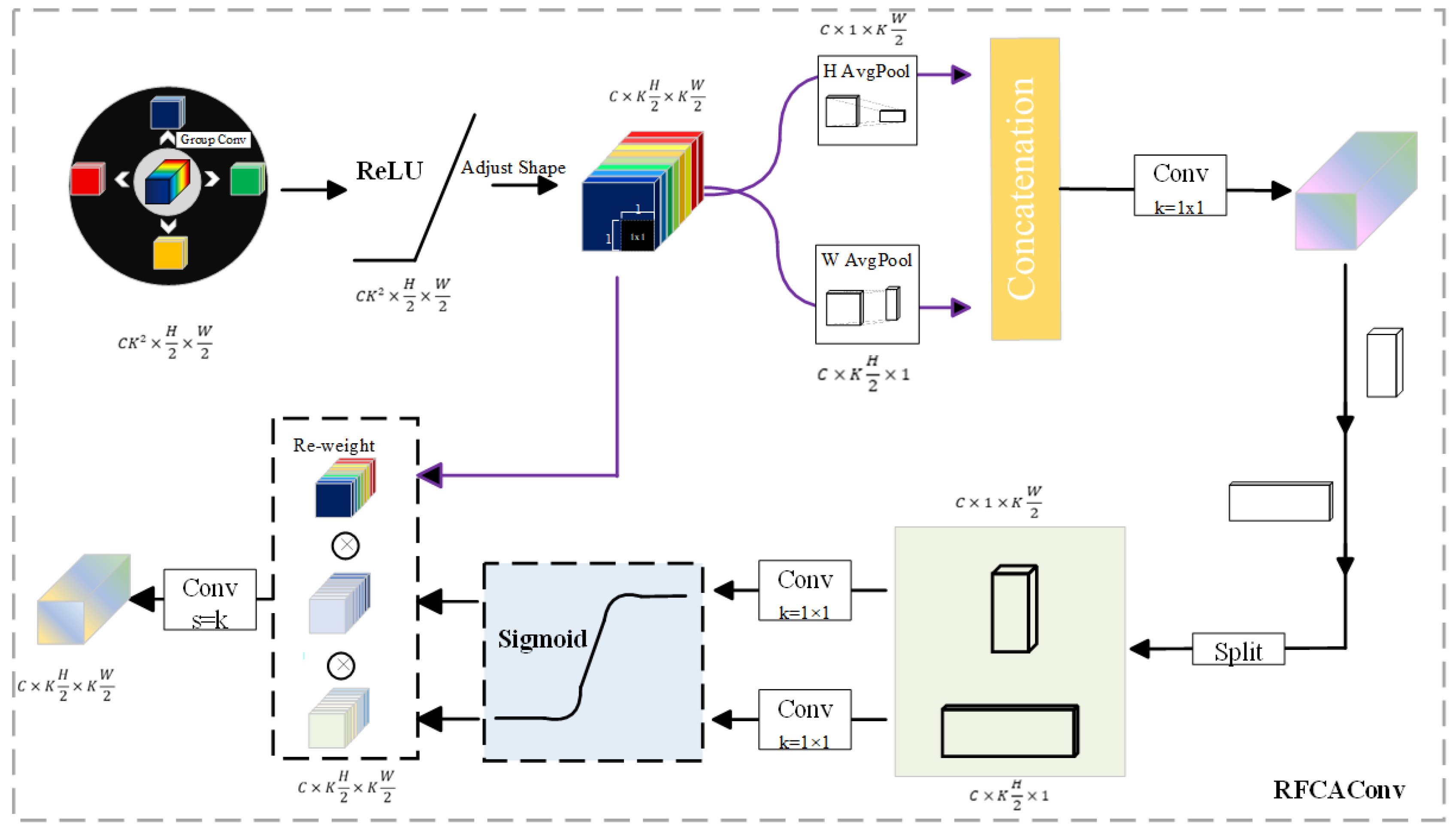

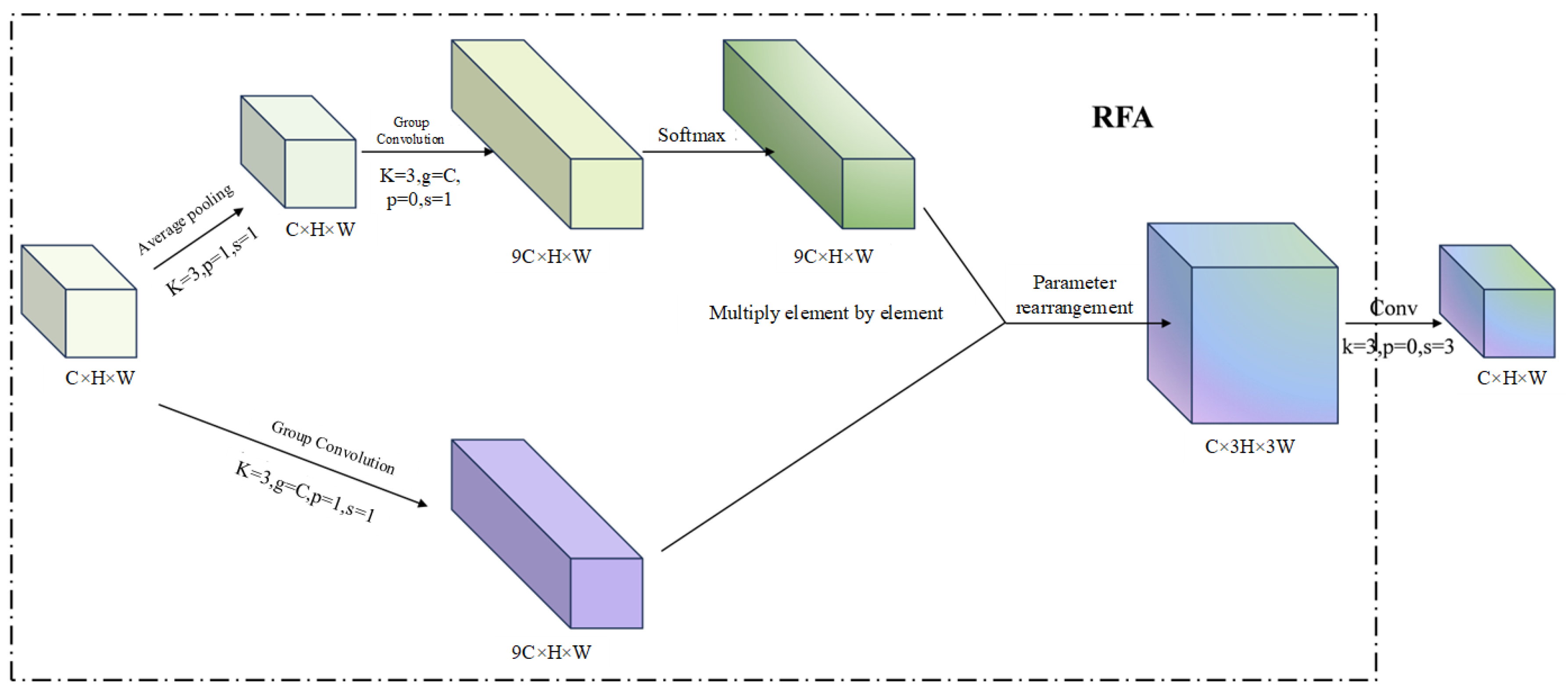

3.2. RFCAConv Module

3.2.1. Parameter-Free Attention Module

3.2.2. SPPFCSPC Module

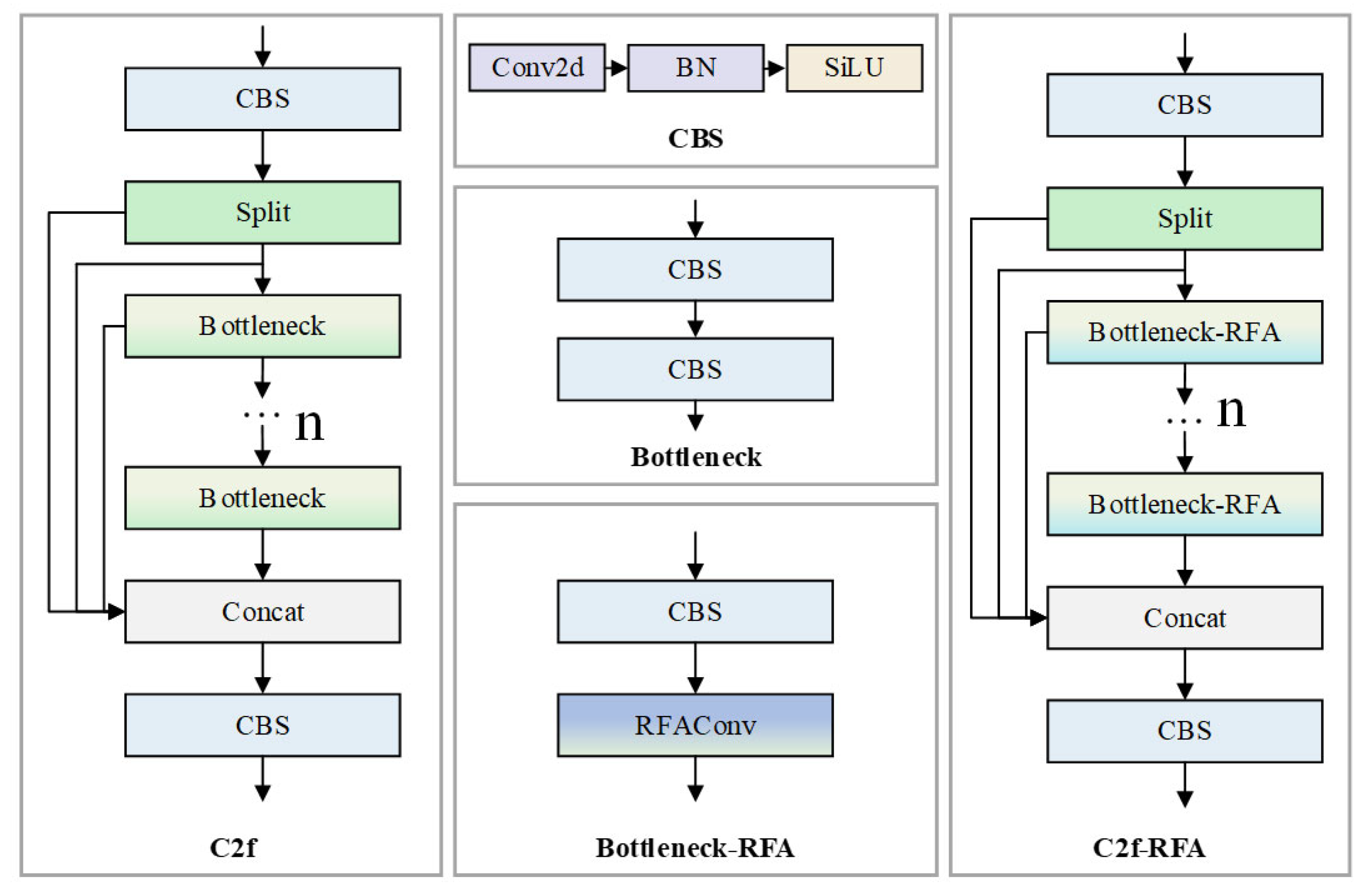

3.3. C2f-RFA Module

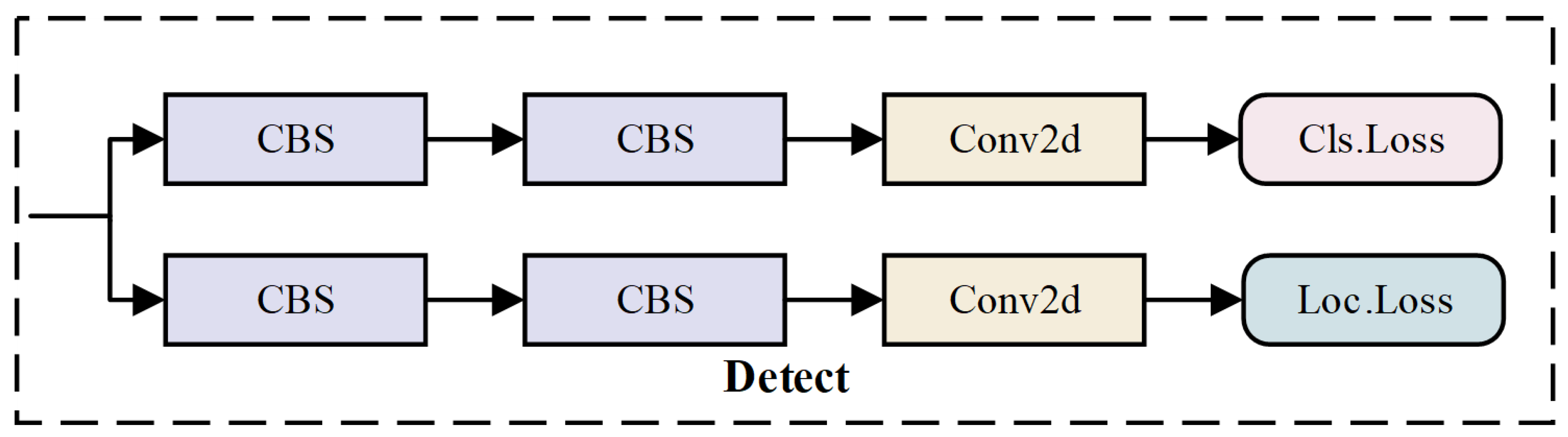

3.4. Decoupled Detection Head with EIoU Loss Function

3.4.1. Decoupling Detection Heads

3.4.2. EIoU Loss Function
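
As a minimal sketch of the commonly published EIoU formulation (an assumption here; the exact variant used in RFA-YOLOv8 may differ), the loss adds three penalties to the IoU term: the normalized distance between box centres, and the normalized width and height differences measured against the smallest enclosing box. The function name `eiou_loss`, the `(x1, y1, x2, y2)` box layout, and the `eps` stabilizer are illustrative choices, not taken from the paper.

```python
def eiou_loss(box, gt, eps=1e-9):
    """EIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2).

    Illustrative sketch of the standard definition:
    L = 1 - IoU + d_center^2 / c^2 + (w - w_gt)^2 / C_w^2 + (h - h_gt)^2 / C_h^2
    """
    # Intersection and union areas.
    inter_w = max(0.0, min(box[2], gt[2]) - max(box[0], gt[0]))
    inter_h = max(0.0, min(box[3], gt[3]) - max(box[1], gt[1]))
    inter = inter_w * inter_h
    w, h = box[2] - box[0], box[3] - box[1]
    w_gt, h_gt = gt[2] - gt[0], gt[3] - gt[1]
    union = w * h + w_gt * h_gt - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both inputs.
    enc_w = max(box[2], gt[2]) - min(box[0], gt[0])
    enc_h = max(box[3], gt[3]) - min(box[1], gt[1])

    # Squared distance between the two box centres.
    dx = (box[0] + box[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (box[1] + box[3]) / 2 - (gt[1] + gt[3]) / 2

    center_term = (dx * dx + dy * dy) / (enc_w ** 2 + enc_h ** 2 + eps)
    width_term = (w - w_gt) ** 2 / (enc_w ** 2 + eps)
    height_term = (h - h_gt) ** 2 / (enc_h ** 2 + eps)
    return 1.0 - iou + center_term + width_term + height_term


if __name__ == "__main__":
    print(eiou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
    print(eiou_loss((0, 0, 1, 1), (2, 2, 3, 3)))  # disjoint boxes
```

Unlike plain IoU loss, the centre, width, and height terms stay informative even when the boxes do not overlap, which is the usual motivation for this family of losses.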

4. Experiments

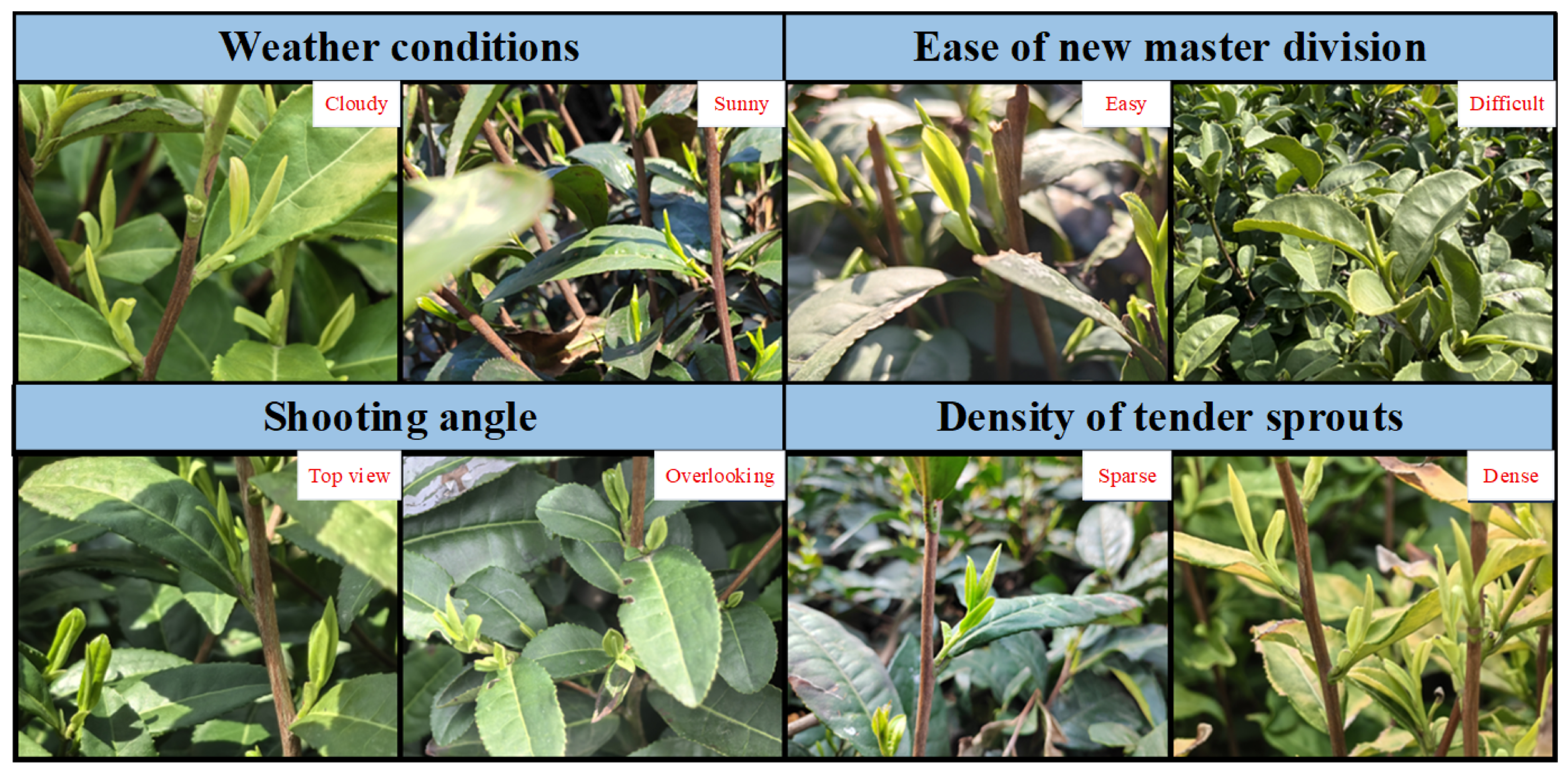

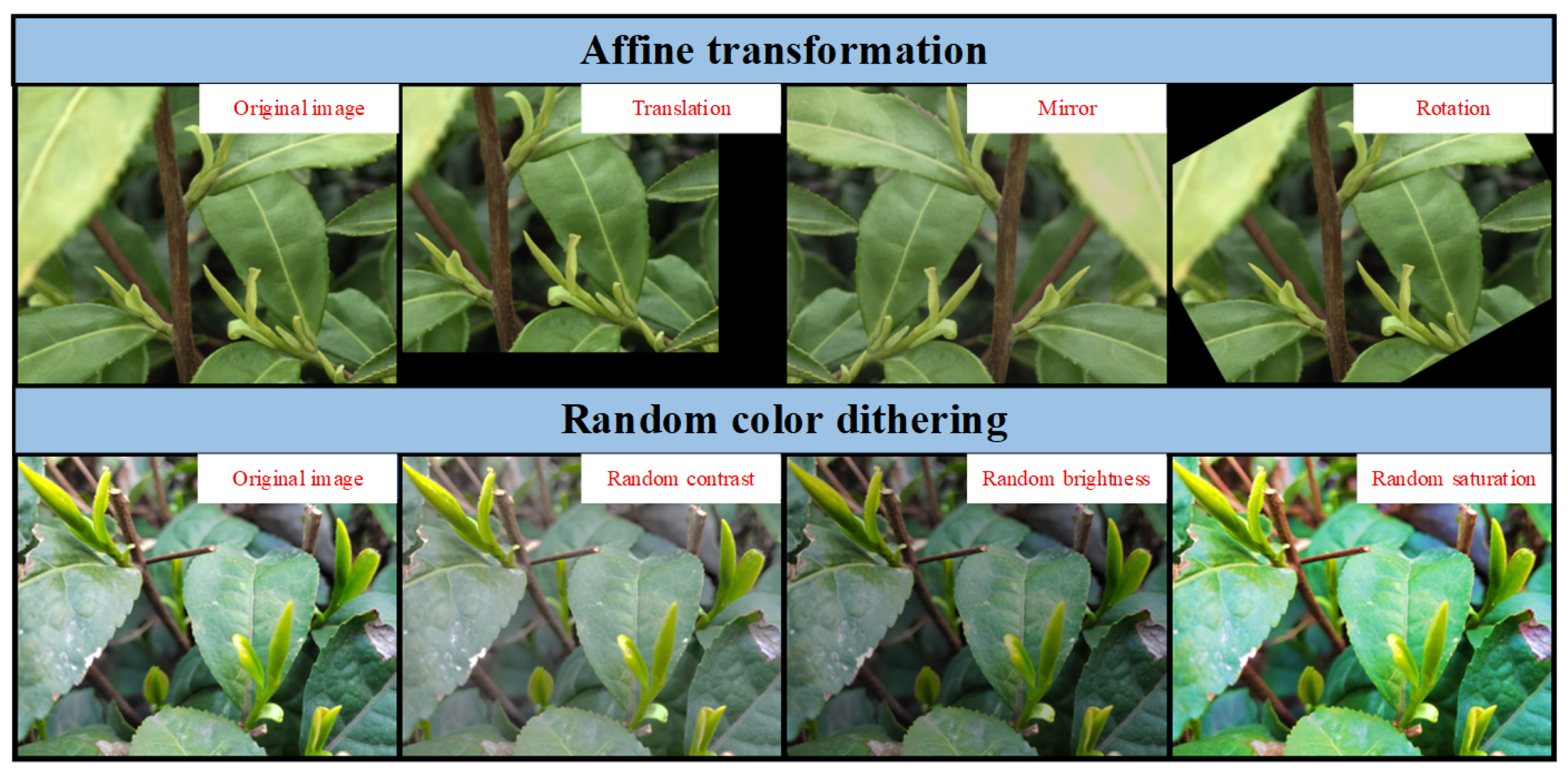

4.1. Datasets and Evaluation Metrics
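
The result tables report precision (P), recall (R), mAP@0.5, and mAP@0.5:0.95. The sketch below shows how these quantities are conventionally computed for a single class. It is a simplification under stated assumptions: detections are assumed to be already matched to ground truth at a fixed IoU threshold, and the area under the precision-recall curve uses all-point interpolation, whereas detection toolkits often use a fixed-point sampling of the curve. The function names are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(is_tp, num_gt):
    """AP for one class at one IoU threshold.

    is_tp:  one bool per detection, sorted by descending confidence;
            True if the detection matched a ground-truth box.
    num_gt: number of ground-truth boxes for the class.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))

    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the rectangles under the enveloped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    print(precision_recall(tp=8, fp=2, fn=2))
    print(average_precision([True, False, True], num_gt=2))
```

mAP@0.5 is the mean of this AP over all classes at an IoU threshold of 0.5, and mAP@0.5:0.95 further averages over IoU thresholds from 0.5 to 0.95 in steps of 0.05.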

4.2. Experimental Environment and Training Parameter Settings

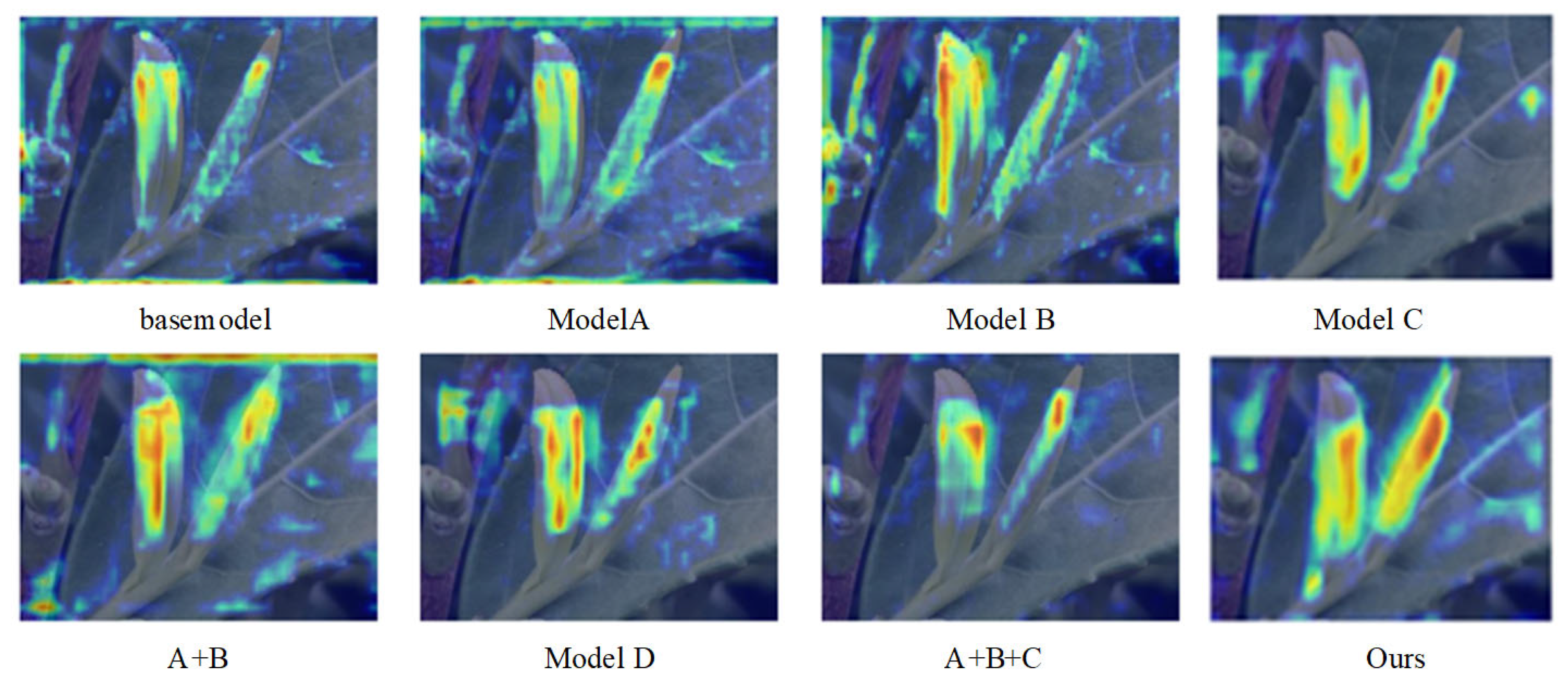

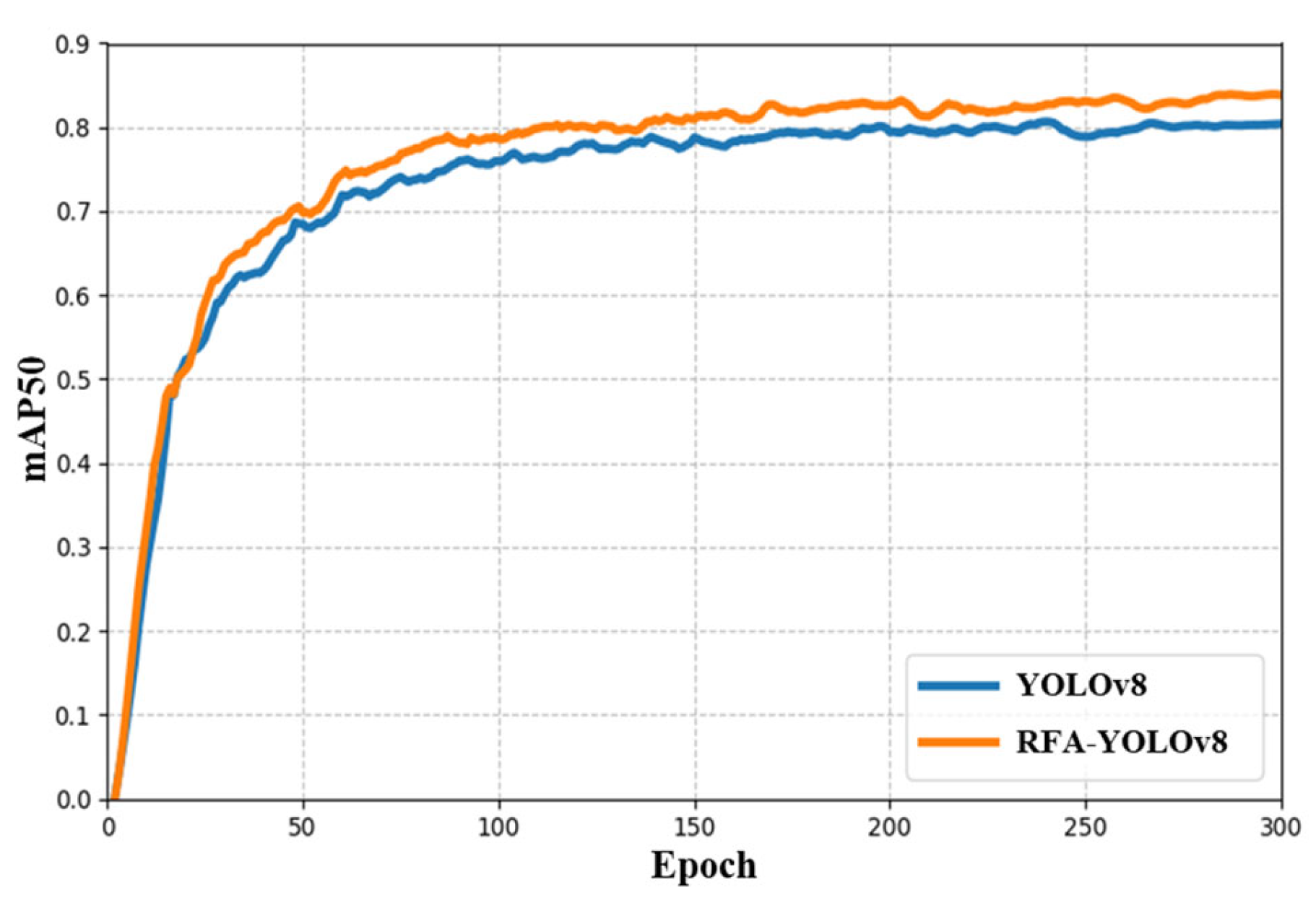

4.3. Ablation Experiment

4.4. Comparative Experiments

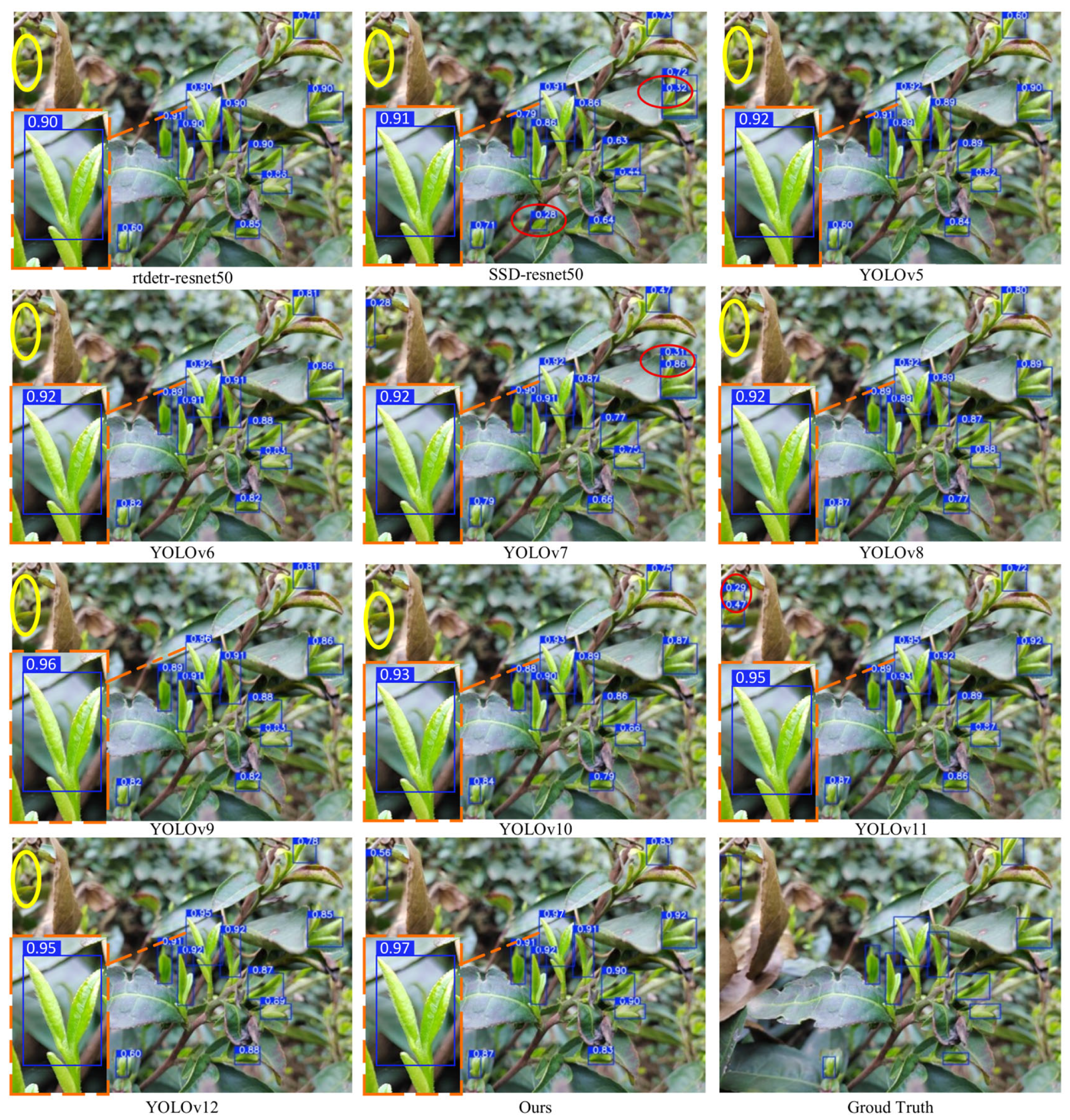

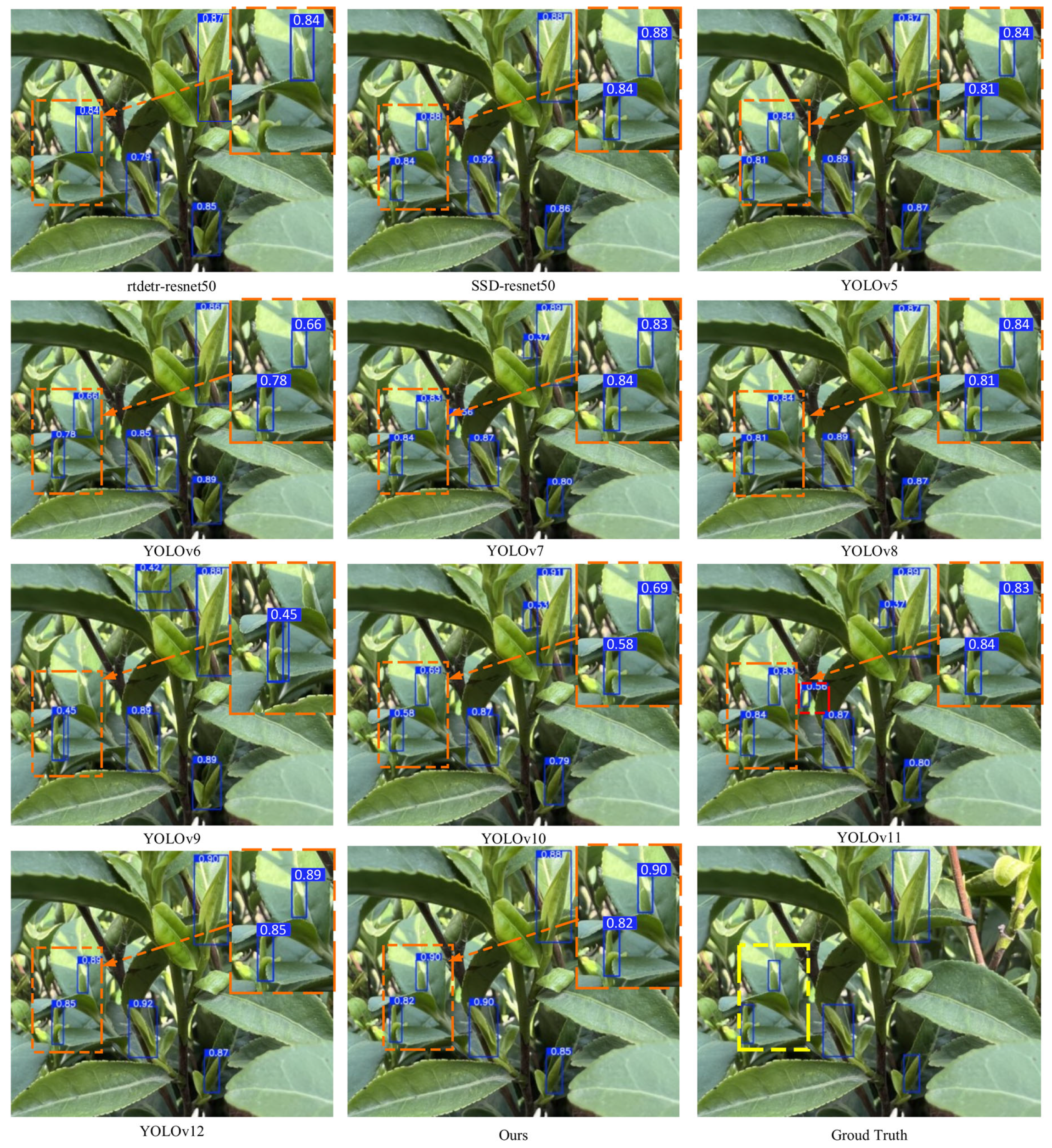

4.4.1. Comparative Experiments with Other Methods

4.4.2. Validation Experiments for Robustness

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Experimental Environment | Model/Parameter |
|---|---|
| CPU | 12th Gen Intel(R) Core(TM) i3-12100F |
| GPU | NVIDIA RTX 3060 12 GB |
| Operating system | Ubuntu 20.04 |
| CUDA version | CUDA 11.8 |
| Programming language | Python 3.8.19 |
| Deep learning framework | PyTorch 2.4.0 |
| IDE | PyCharm Community Edition 2024.2.0.1 |

| Dataset | mAP@0.5/% | mAP@0.5:0.95/% |
|---|---|---|
| Original dataset | 79.7 | 52.1 |
| Enhanced dataset | 80.5 | 53.2 |

| Model | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | FPS/(f·s−1) |
|---|---|---|---|---|---|
| Baseline | 77.7 | 74.5 | 80.5 | 53.2 | 68.5 |
| A | 79.1 | 75.7 | 81.7 | 55.6 | 51.0 |
| B | 77.9 | 77.0 | 81.5 | 55.3 | 66.5 |
| C | 81.6 | 71.9 | 80.9 | 54.5 | 57.5 |
| D | 80.1 | 75.1 | 81.3 | 55.6 | 70.2 |
| A + B | 80.7 | 76.9 | 82.4 | 55.9 | 44.5 |
| A + B + C | 82.0 | 76.3 | 83.5 | 57.5 | 40.0 |
| Ours | 82.5 | 77.1 | 84.1 (↑ 3.6) | 58.7 (↑ 5.5) | 42.4 |
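
The gain of the full model over the baseline can be recomputed directly from the first and last rows of the ablation table. The short sketch below does this arithmetic; the dictionary layout and the helper name `gains` are illustrative, and the numbers are copied from the table rather than measured independently.

```python
# Values copied from the "baseline" and "Ours" rows of the ablation table.
baseline = {"mAP@0.5": 80.5, "mAP@0.5:0.95": 53.2, "FPS": 68.5}
ours = {"mAP@0.5": 84.1, "mAP@0.5:0.95": 58.7, "FPS": 42.4}


def gains(model, reference):
    """Absolute difference per metric, rounded to one decimal place."""
    return {key: round(model[key] - reference[key], 1) for key in reference}


delta = gains(ours, baseline)

if __name__ == "__main__":
    for metric, value in delta.items():
        print(f"{metric}: {value:+.1f}")
```

The accuracy gains come at a cost in throughput: the FPS difference is negative, so the full model trades inference speed for detection quality.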

| Model | mAP@0.5/% | mAP@0.5:0.95/% | Parameters/M | FLOPs/G | FPS/(f·s−1) |
|---|---|---|---|---|---|
| Baseline YOLOv8m | 80.5 | 53.2 | 24.6 | 78.7 | 68.5 |
| YOLOv8m + DWConv | 78.4 | 55.2 | 18.7 | 18.7 | 28.8 |
| YOLOv8m + GhostConv | 77.6 | 54.6 | 23.6 | 73.8 | 86.2 |
| RFA-YOLOv8 | 84.1 | 58.7 | 34.3 | 86.7 | 42.4 |

| Model | mAP@0.5/% | mAP@0.5:0.95/% | Parameters/M | FLOPs/G | FPS/(f·s−1) |
|---|---|---|---|---|---|
| RT-DETR-ResNet50 | 72.9 | 49.1 | 41.9 | 125.6 | 83.3 |
| SSD-ResNet50 | 74.3 | 48.3 | 24.4 | 87.7 | 55.6 |
| YOLOv5 (m) | 78.7 | 52.1 | 23.9 | 64.0 | 75.8 |
| YOLOv6 (m) | 79.2 | 51.3 | 49.6 | 161.1 | 52.4 |
| YOLOv7 | 78.5 | 50.9 | 35.5 | 105.1 | 59.9 |
| YOLOv8 (n) | 76.2 | 51.1 | 3 | 8.1 | 17.4 |
| YOLOv8 (s) | 79.6 | 49.8 | 11.1 | 28.4 | 15.7 |
| YOLOv8 (m) | 80.5 | 53.2 | 24.6 | 78.7 | 68.5 |
| YOLOv8m-MobileNetV4 | 75.8 | 49.4 | 8.6 | 21.7 | 18.9 |
| YOLOv9 (m) | 80.2 | 53.3 | 19.1 | 76.5 | 58.8 |
| YOLOv10 (m) | 79.7 | 52.1 | 15.7 | 63.4 | 77.5 |
| YOLOv11 (m) | 79.0 | 52.4 | 19.1 | 67.6 | 73.5 |
| YOLOv12 (m) | 79.4 | 57.6 | 20.1 | 67.1 | 119.1 |
| Ours | 84.1 | 58.7 | 34.3 | 86.7 | 42.4 |

| Luminous Intensity | Model | mAP@0.5/% | mAP@0.5:0.95/% |
|---|---|---|---|
| High intensity | YOLOv8 | 78.9 | 50.9 |
| High intensity | Ours | 82.5 | 56.5 |
| Moderate intensity | YOLOv8 | 87.3 | 59.6 |
| Moderate intensity | Ours | 88.2 | 63.4 |
| Low intensity | YOLOv8 | 75.3 | 49.1 |
| Low intensity | Ours | 81.6 | 56.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Q.; Gu, J.; Xiong, T.; Wang, Q.; Huang, J.; Xi, Y.; Shen, Z. RFA-YOLOv8: A Robust Tea Bud Detection Model with Adaptive Illumination Enhancement for Complex Orchard Environments. Agriculture 2025, 15, 1982. https://doi.org/10.3390/agriculture15181982
