Abstract

In this paper, we propose a knowledge distillation framework specifically designed for semantic segmentation in agricultural scenarios. The framework addresses several prevalent challenges in smart agriculture, including limited computational resources, strict real-time constraints, and suboptimal segmentation accuracy on crop images. Traditional single-level feature distillation methods often suffer from insufficient knowledge transfer and inefficient use of multi-scale features, which significantly limits their ability to accurately segment complex crop structures in dynamic field environments. To overcome these issues, we propose a multi-level distillation strategy that combines feature distillation and embedding patch distillation, jointly distilling high-level semantic features and low-level texture details. This approach enables the precise capture of fine-grained agricultural elements, such as crop boundaries, stems, petioles, and weed clusters, which are critical for robust segmentation. Additionally, we integrate an enhanced attention mechanism into the framework, which strengthens and fuses key crop-related features during distillation, further improving the model’s performance and image understanding. Extensive experiments on two agricultural datasets (sweet pepper and sugar) show that our method improves segmentation accuracy by 7.59% and 6.79%, respectively, without significantly increasing model complexity. Further validation on two widely used public datasets shows that our approach generalizes well, indicating its applicability beyond the agricultural domain.

1. Introduction

In recent years, smart agriculture has rapidly emerged as a key driver of agricultural modernization worldwide. By integrating advanced technologies, such as artificial intelligence, computer vision, remote sensing, and the Internet of Things (IoT), smart agriculture enables real-time environmental monitoring, precise field management, and data-driven decision-making [1]. Intelligent applications—including automated pest control, smart irrigation systems, crop disease recognition, and UAV-based spraying—have been widely implemented in agricultural production [2,3]. For example, deep learning models have been successfully applied to analyze multispectral UAV imagery and field sensor data for crop disease classification and yield prediction [4]. These advancements have significantly accelerated the digital transformation of agriculture and laid a solid foundation for building more intelligent, efficient, and autonomous farming systems.

Despite these achievements, smart agricultural systems still face considerable challenges in practical deployments, particularly in field-based vision applications. The agricultural environment is inherently complex and dynamic, often involving factors such as overlapping vegetation, varying lighting conditions, occlusions from weeds, and small-scale target objects like stems, petioles, and early-stage crops [1,5,6]. These issues significantly affect the accuracy and robustness of vision models used for crop recognition and segmentation. Moreover, the need for real-time decision-making in large-scale farmland scenarios imposes strict latency and hardware constraints on algorithmic performance, limiting the practical value of many state-of-the-art deep learning models [7,8].

To address these challenges, deep learning techniques have been widely applied to semantic segmentation tasks in agricultural vision applications. Deep neural network architectures, such as U-Net [9], DeepLabV3+ [10], and PSPNet [11], have been extensively used for tasks like crop type segmentation, weed identification, disease region annotation, and fruit tree contour extraction, significantly improving the accuracy and efficiency of agricultural image analysis. Studies have shown that DeepLabV3+ achieves a mean intersection over union of approximately 89.82% and an overall accuracy exceeding 94.8% when performing farmland segmentation on multispectral remote sensing images [12]. Additionally, in turmeric crop images, DeepLabV3+ successfully distinguished between diseased areas, crops, and background, demonstrating its practicality in precision agriculture scenarios [13]. Other studies have proposed models that integrate PointRend with contrastive learning strategies, achieving the precise segmentation of irregular diseased regions in aerial multispectral images. Semantic segmentation methods have also been employed for extracting green plant areas in farmland, crop–weed recognition, and similar tasks, showing great potential in enhancing the intelligence of automated agricultural machinery operations [14]. However, the high computational complexity and large model sizes of these approaches hinder their deployment on edge devices, making it challenging to achieve real-time performance in practical applications [15,16]. While lightweight models improve deployability, they usually sacrifice segmentation accuracy, especially for small or complex targets [17]. Thus, balancing real-time efficiency with high-precision segmentation remains a major challenge in agricultural vision system development [18].

Lightweight model design is considered an effective approach to balancing performance and deployment feasibility in agricultural vision tasks. Researchers have proposed a variety of lightweight architectures, such as MobileNet [19], Lite Transformer [20], and improved versions of SegFormer [21], to reduce parameter counts and accelerate inference. For example, Mehta et al. introduced dynamic convolution and reparameterization in MobileViT, reducing the number of parameters to one-fifth of those in standard Transformers while maintaining high accuracy [22]. Lite Transformer achieved real-time processing at 88.4 FPS in high-resolution agricultural segmentation tasks [23]. However, most of these models are designed for general-purpose vision applications and have limited capacity for fine-grained feature representation. As a result, they tend to overlook subtle differences between crops and weeds and often perform poorly on tasks such as segmenting crop stems and other fine structures.

To address these limitations, knowledge distillation has attracted attention as a powerful model compression technique. It enables a compact student model to effectively inherit the representations learned by a larger teacher model. Most existing distillation strategies are either response-based or feature-based, relying on supervision from single layers, and are not optimized for multi-scale, texture-rich agricultural imagery [24,25,26,27,28].

This study proposes a SegFormer-based embedding patch knowledge distillation (SEKD) method, which efficiently leverages the texture and structural information in embedding patches to achieve fine-grained segmentation of crops and weeds under complex farmland conditions. To address the need for multi-scale target recognition in agricultural images, we design feature reconstruction distillation and feature relationship distillation, which are integrated with embedding patch distillation into a multi-dimensional combined distillation strategy that enhances the model’s perception accuracy in challenging field environments. Moreover, by transferring knowledge from the teacher model, this method enables the lightweight student model to achieve high segmentation accuracy while keeping computational resource consumption low, making it particularly suitable for real-time deployment in smart agriculture scenarios. The main contributions of this work are as follows:

- We apply SegFormer-specific patch embeddings for the first time in crop segmentation scenarios, extracting structural and statistical texture information from crop images as the knowledge source for distillation, aiming to improve both the accuracy and the generalization of the model in crop segmentation tasks.

- We propose a feature fusion and enhancement method based on attention mechanisms, which effectively alleviates the information loss caused by blurred crop boundaries in agricultural images.

- We propose feature relation distillation and feature reconstruction distillation, integrating them into the embedding distillation framework to address its limitations in modeling fine-grained local information and spatial relationships between features.

- Experimental results demonstrate that the proposed distillation framework significantly improves the segmentation performance across multiple crop datasets, highlighting its practical effectiveness in agricultural scenarios.

2. Methods

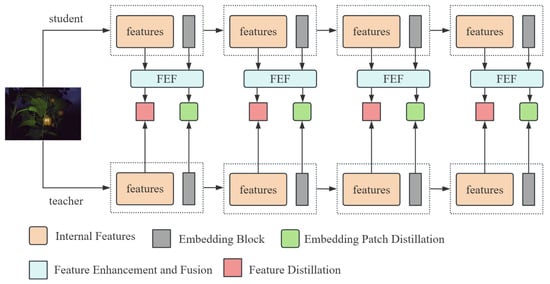

This section describes the proposed embedding distillation scheme in detail. The scheme distills the semantic features of each layer of SegFormer, and the calculation of each distillation loss is introduced in turn. The overall distillation framework is shown in Figure 1.

Figure 1.

The block framework of the SEKD. This framework includes the feature distillation process between the teacher network and the student network.

In this study, we propose a Feature Enhancement and Fusion (FEF) module. We also design three distillation methods that optimize the knowledge transfer process and thereby enhance the performance of the student model: feature relationship distillation [29], feature reconstruction distillation, and embedding patch distillation. They are designed to address several key limitations of existing knowledge distillation approaches, with a particular focus on the practical challenges of agricultural semantic segmentation. First, the failure to fuse multi-scale information makes it difficult for the model to simultaneously recognize large background areas and small crop structures [25,26]. Second, insufficient knowledge transfer leads to weak generalization when the student model is applied to complex field environments, such as those with crop occlusion and weed overlap [30]. Third, the underutilization of low-level features results in poor representation of critical texture details, such as crop edges and stems, which degrades overall segmentation accuracy and stability [25].

As shown in Figure 1, feature distillation in this study mainly comprises two components: feature relationship distillation and feature reconstruction distillation. To address practical challenges in agricultural segmentation—such as complex crop structures and diverse target distributions—feature relationship distillation constructs a feature relationship matrix to fully capture contextual dependencies among features. This enables the student model to learn richer structural and spatial information, thereby improving its ability to distinguish complex regions. Feature reconstruction distillation introduces a self-supervised mechanism that feeds back the feature loss from the teacher model to the student model, encouraging the student to actively restore and supplement critical features. This effectively enhances the completeness of knowledge transfer and mitigates information loss caused by small objects or noise. The synergy of these two distillation strategies significantly strengthens the student model’s perception of fine-grained features and its overall segmentation performance.

2.1. Feature Enhancement and Fusion Module

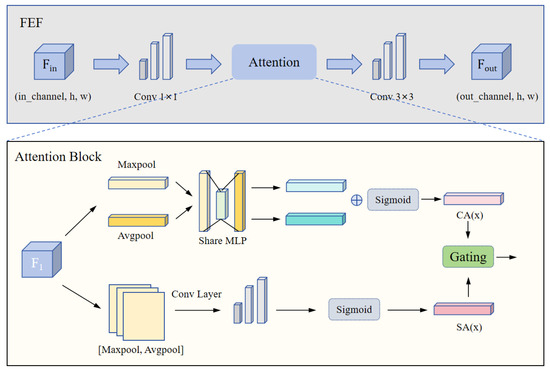

In agricultural semantic segmentation tasks, the extraction and accurate recognition of fine-grained features are severely hampered by complex crop structures, large variations in scale, and strong background interference [31,32]. To address these challenges, this paper proposes a Feature Enhancement and Fusion (FEF) module, which adaptively highlights key information in images and integrates multi-scale semantic features. By introducing a dynamic gated attention mechanism, the FEF module effectively enhances the representation of small-scale and occluded crop components (such as stems and leaves), significantly improving the model’s segmentation accuracy in complex field environments.

In the attention block, the channel attention (CA) module extracts the importance of each channel in the feature map through global average pooling and global max pooling, thereby enhancing information-rich channels [33,34]. Suppose $F \in \mathbb{R}^{C \times H \times W}$ is the input feature map; the channel attention weight is calculated as follows:

$$
M_c(F) = \sigma\left(W_1\left(\delta\left(W_0\left(\mathrm{AvgPool}(F)\right)\right)\right) + W_1\left(\delta\left(W_0\left(\mathrm{MaxPool}(F)\right)\right)\right)\right)
$$

where $W_0$ is the fully connected layer for dimensionality reduction, $W_1$ is the fully connected layer for dimensionality increase, $\delta$ is the ReLU activation function, and $\sigma$ is the Sigmoid activation function. $\mathrm{AvgPool}(F)$ and $\mathrm{MaxPool}(F)$ denote global average pooling and global max pooling on the input feature map $F$, respectively.
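As an illustration only, the channel attention weights above can be sketched in NumPy. The sketch assumes a CBAM-style formulation with bias-free fully connected layers given as plain matrices `w0` and `w1`, a hypothetical reduction ratio of 2, and a single unbatched feature map; it is not the authors' implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(feat, w0, w1):
    """CBAM-style channel attention weights M_c for a feature map of shape (C, H, W).

    Assumption: w0 has shape (C // r, C) (reduction FC) and w1 has shape (C, C // r)
    (expansion FC); both branches share the same two layers and no biases are used.
    """
    avg = feat.mean(axis=(1, 2))            # global average pooling -> (C,)
    mx = feat.max(axis=(1, 2))              # global max pooling -> (C,)
    relu = lambda z: np.maximum(z, 0.0)
    mlp = lambda v: w1 @ relu(w0 @ v)       # FC (reduce) -> ReLU -> FC (expand)
    return sigmoid(mlp(avg) + mlp(mx))      # (C,) weights in [0, 1]

rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 2                     # hypothetical sizes and reduction ratio
feat = rng.standard_normal((C, H, W))
w0 = rng.standard_normal((C // r, C))
w1 = rng.standard_normal((C, C // r))
m_c = channel_attention(feat, w0, w1)
refined = feat * m_c[:, None, None]         # reweight each channel of the input
```

With all-zero FC weights every channel receives a weight of exactly 0.5, which is a quick sanity check of the Sigmoid output.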

The spatial attention (SA) module is used to highlight the important spatial positions in the feature map. It performs average pooling and max pooling operations on the input feature map along the channel dimension to obtain two single-channel feature maps. These two maps are concatenated along the channel dimension, and then a convolution operation is applied to obtain the spatial attention weights. The calculation can be expressed as follows:

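$$
M_s(F) = \sigma\left(f^{k \times k}\left(\left[\mathrm{AvgPool}_{c}(F);\ \mathrm{MaxPool}_{c}(F)\right]\right)\right)
$$

where $\mathrm{AvgPool}_{c}$ and $\mathrm{MaxPool}_{c}$ denote average and max pooling along the channel dimension, $[\cdot\,;\cdot]$ denotes concatenation along the channel dimension, and $f^{k \times k}$ represents a convolution with a kernel of size $k \times k$.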
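A matching NumPy sketch of the spatial attention step is shown below. The kernel size is not given here, so the example uses a hypothetical 3 × 3 kernel; the convolution is written as an explicit loop for readability and assumes zero ("same") padding, a single output channel, and no bias.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_attention(feat, kernel):
    """Spatial attention weights M_s for feat of shape (C, H, W).

    Assumption: kernel has shape (2, k, k) with odd k; it is the single-output
    convolution applied to the stacked [channel-mean, channel-max] maps.
    """
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])   # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    _, H, W = feat.shape
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            # cross-correlation, as implemented by deep learning conv layers
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)                                         # (H, W) weights in [0, 1]

rng = np.random.default_rng(1)
feat = rng.standard_normal((4, 6, 6))
kernel = np.zeros((2, 3, 3))
kernel[0, 1, 1] = 1.0        # identity on the channel-mean map, for checking
m_s = spatial_attention(feat, kernel)
refined = feat * m_s[None]   # reweight every spatial position
```

With the identity-like kernel used here, `m_s` reduces to the Sigmoid of the channel-wise mean map; a learned kernel would mix both pooled maps over a neighborhood.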

To further refine the attention mechanism, we introduce a dynamic gating mechanism that adaptively adjusts the contributions of the channel and spatial attention based on the input [35]. The final output is computed as a weighted combination of the channel and spatial attention:

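$$
F_{out} = \alpha \odot \left(M_c(F) \otimes F\right) + \beta \odot \left(M_s(F) \otimes F\right)
$$

where $\alpha$ and $\beta$ are dynamic gating weights generated from the input features, $M_c(F)$ and $M_s(F)$ are the channel and spatial attention weights, and $\otimes$ and $\odot$ denote element-wise multiplication (with broadcasting).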

Specifically, the combined features from the channel and spatial attention are processed through a 1 × 1 convolution and a Sigmoid activation to generate the gating weights:

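$$
[\alpha, \beta] = \sigma\left(\mathrm{Conv}_{1 \times 1}\left(\left[M_c(F) \otimes F;\ M_s(F) \otimes F\right];\ \theta\right)\right)
$$

where $[\cdot\,;\cdot]$ denotes channel-wise concatenation of the channel-refined and spatially refined features, and $\theta$ is the learnable parameter of the gating network.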
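The gated fusion can be sketched as follows, assuming per-pixel gates produced by a hypothetical 1 × 1 convolution `theta` (shape `(2, 2C)`, no bias) over the concatenated channel-refined and spatially refined features. Setting `theta` to zero gives α = β = 0.5, which reduces the fusion to a plain average and serves as a sanity check.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_fusion(f_c, f_s, theta):
    """Fuse channel- and spatial-refined features with dynamic per-pixel gates.

    f_c, f_s: (C, H, W) features after channel / spatial attention.
    theta:    (2, 2C) weights of a hypothetical 1x1 gating convolution.
    """
    stacked = np.concatenate([f_c, f_s], axis=0)               # (2C, H, W)
    logits = np.einsum('oc,chw->ohw', theta, stacked)          # 1x1 conv == per-pixel matmul
    gates = sigmoid(logits)                                    # (2, H, W)
    alpha, beta = gates[0], gates[1]
    fused = alpha[None] * f_c + beta[None] * f_s               # broadcast gates over channels
    return fused, alpha, beta

rng = np.random.default_rng(2)
f_c = rng.standard_normal((4, 5, 5))
f_s = rng.standard_normal((4, 5, 5))
fused, alpha, beta = gated_fusion(f_c, f_s, np.zeros((2, 8)))
```

Because the gates are computed per pixel, the module can favor channel attention in some regions and spatial attention in others.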

As shown in Figure 2, the FEF module adopts a unified attention mechanism that adaptively fuses channel and spatial information from the input features. Through a lightweight structure, the FEF module enhances key regions and prominent crop structures, enabling more precise feature alignment with the teacher model. This design significantly improves the representation of small-scale and occluded crop components, which are particularly challenging in agricultural segmentation tasks.

Figure 2.

The block structure of the FEF module. FEF introduces a dynamic gating mechanism to adaptively adjust the contributions of channel and spatial attention.

2.2. Feature Relationship Distillation

In agricultural segmentation tasks, accurately distinguishing crop structures relies not only on global feature relationships but also on fine-grained local features. Traditional pixel-level difference calculations are insufficient to comprehensively capture the structural and semantic distributions among features, resulting in limited model performance in complex field environments. To address this issue, we propose a feature relation loss that compares the relation matrices between the teacher and student models, guiding the student model to learn crop distributions and spatial patterns from the perspective of the overall structural relationships.

When calculating the feature relation loss, we flatten the spatial dimensions of each feature map so that every spatial position becomes a row vector of channel responses. We then multiply the flattened feature map by its transpose to obtain the autocorrelation matrix:

$$
R_S = F_S F_S^{\top}, \qquad R_T = F_T F_T^{\top}
$$

where $F_S$ and $F_T$ represent the flattened original features of the student and the teacher, respectively, and $F_S^{\top}$ and $F_T^{\top}$ are the corresponding transposes.

The feature relation loss is defined as the MSE between the normalized student relation matrix $R_S$ and the normalized teacher relation matrix $R_T$:

$$
\mathcal{L}_{rel} = \frac{1}{N^2}\left\| \frac{R_S}{\|R_S\|_F} - \frac{R_T}{\|R_T\|_F} \right\|_F^2
$$

where $N = H \times W$ is the spatial dimension of the flattened feature map; $R_S$ and $R_T$ are the relation matrices of the student and teacher models, respectively, each computed as the product of the flattened feature map and its transpose; and $\|R_S\|_F$ and $\|R_T\|_F$ denote their Frobenius norms, which normalize the matrices so that their values lie within a comparable range [36,37]. The loss minimizes the MSE between the normalized relation matrices.

The relation matrix encodes the context relation information within the feature map, and such information is of vital importance. It not only focuses on the similarity of individual features but also has the ability to capture the overall structure and patterns.

We calculate the feature relation loss and utilize its gradient information to optimize the student model. This guiding mechanism enables the student network to better learn and replicate the complex behaviors and patterns of the teacher network, thus enhancing the effect of knowledge distillation.
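The relation loss can be sketched in NumPy as follows. The sketch assumes one row per spatial position, so the relation matrix is N × N; under that assumption the student and teacher may have different channel widths as long as their spatial resolutions match. Function names are hypothetical.

```python
import numpy as np

def relation_matrix(feat):
    """feat: (C, H, W) -> (N, N) autocorrelation matrix with N = H * W."""
    C = feat.shape[0]
    f = feat.reshape(C, -1).T            # (N, C): one row per spatial position
    return f @ f.T                       # (N, N) pairwise position similarities

def relation_loss(feat_s, feat_t):
    """MSE between Frobenius-normalized student and teacher relation matrices."""
    r_s = relation_matrix(feat_s)
    r_t = relation_matrix(feat_t)
    n = r_s.shape[0]
    diff = r_s / np.linalg.norm(r_s, 'fro') - r_t / np.linalg.norm(r_t, 'fro')
    return float(np.sum(diff ** 2) / n ** 2)   # mean over the N x N entries

rng = np.random.default_rng(0)
f_student = rng.standard_normal((16, 8, 8))    # narrower student features
f_teacher = rng.standard_normal((64, 8, 8))    # wider teacher, same 8 x 8 resolution
loss = relation_loss(f_student, f_teacher)
```

The Frobenius normalization makes the loss invariant to a global rescaling of either feature map, so only the relational structure is transferred.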

2.3. Feature Reconstruction Distillation

Insufficient knowledge transfer can cause critical information in crop images to be lost during distillation, which degrades the accuracy of the student model in crop segmentation and other agricultural image tasks. To address this issue, we introduce a feature reconstruction mechanism into the knowledge distillation framework, enabling the student model to more effectively replicate the high-quality feature representations extracted by the teacher model and thereby improving the completeness and effectiveness of knowledge transfer.

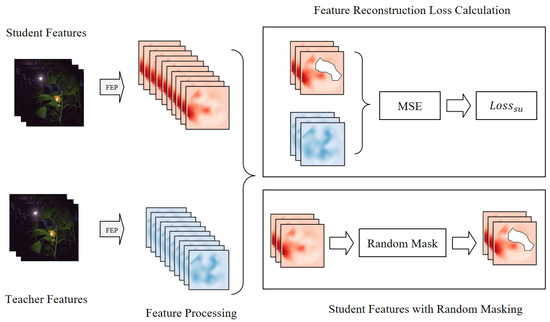

As depicted in Figure 3, we apply a random mask to partially cover the original features, replacing the masked regions with zeros. This mask simulates information loss and compels the student model to infer the complete feature representation from partial information. We randomly mask a region spanning 30% of the height and width of the feature map. The masked region is selected by randomly generating the starting positions $h_0$ and $w_0$ in each batch and applying the mask from these positions. The generated mask is a tensor of the same size as the student features, in which the masked region is set to 0 and all other regions are set to 1.
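The mask generation described above can be sketched as follows. This assumes one rectangular hole per batch whose side lengths are 30% of the feature height and width, shared by all samples and channels; the function name and this reading of "30%" are assumptions.

```python
import numpy as np

def random_region_mask(shape, ratio=0.3, rng=None):
    """Binary mask (1 = kept, 0 = masked) with one zeroed rectangle per batch.

    shape: (B, C, H, W) of the student features. Assumption: the rectangle covers
    `ratio` of the height and `ratio` of the width and starts at a random (h0, w0).
    """
    rng = np.random.default_rng() if rng is None else rng
    _, _, H, W = shape
    mh = max(1, int(round(ratio * H)))
    mw = max(1, int(round(ratio * W)))
    h0 = rng.integers(0, H - mh + 1)     # random starting row
    w0 = rng.integers(0, W - mw + 1)     # random starting column
    mask = np.ones(shape)
    mask[:, :, h0:h0 + mh, w0:w0 + mw] = 0.0
    return mask

mask = random_region_mask((2, 3, 10, 10), ratio=0.3, rng=np.random.default_rng(0))
student_feat = np.random.default_rng(1).standard_normal((2, 3, 10, 10))
masked_feat = student_feat * mask        # masked positions become zero
```

For a 10 × 10 map, the hole is 3 × 3 in every channel of every sample.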

Figure 3.

The structure of regional feature reconstruction distillation. The teacher guides the student using extracted features and a mask. The loss is computed using MSE. FEP denotes the process by which the model processes image features.

The reconstruction loss is the MSE between the two tensors, that is, their mean squared Euclidean distance:

$$
\mathcal{L}_{rec} = \frac{1}{CHW}\left\| \tilde{F}_S - F_T \right\|_2^2
$$

where $\tilde{F}_S$ represents the student feature matrix that has already been masked, $F_T$ represents the teacher’s feature matrix, and $C$, $H$, and $W$ are the channel, height, and width dimensions of the features.

For the masked regions, the MSE between the student and the teacher features is used as the reconstruction loss, encouraging the student model to recover missing information and more closely match the teacher’s feature representations. To comprehensively transfer knowledge, this reconstruction loss is computed at each layer of the network, and the final feature reconstruction loss is obtained by averaging the reconstruction losses across all the feature layers. This approach not only enhances the feature learning ability of the student model but also improves the segmentation performance for challenging structures while reducing the reliance on annotated data.
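Following the masked-region description above, the per-layer loss and its average over feature levels can be sketched as below. The reconstruction head that maps the masked student features to `recon_s` (the FEP step in Figure 3) is not specified here, so it is left outside the sketch; restricting the MSE to masked positions is an assumption based on the text.

```python
import numpy as np

def masked_mse(recon_s, feat_t, mask):
    """MSE between reconstructed student and teacher features at masked positions.

    recon_s: output of a hypothetical reconstruction head applied to masked student features.
    feat_t:  teacher features of the same shape; mask: 1 = kept, 0 = masked.
    """
    hole = mask == 0
    if not hole.any():
        return 0.0
    return float(np.mean((recon_s[hole] - feat_t[hole]) ** 2))

def reconstruction_loss(recon_list, teacher_list, mask_list):
    """Average the per-layer reconstruction losses over all feature levels."""
    losses = [masked_mse(s, t, m) for s, t, m in zip(recon_list, teacher_list, mask_list)]
    return sum(losses) / len(losses)

# Toy check: one 4x4 feature level with a 2x2 masked corner.
t = np.zeros((1, 1, 4, 4))
m = np.ones_like(t)
m[..., :2, :2] = 0.0
perfect = masked_mse(t.copy(), t, m)            # reconstruction matches the teacher
off_by_one = masked_mse(np.ones_like(t), t, m)  # error of 1 at every masked position
total = reconstruction_loss([t.copy(), np.ones_like(t)], [t, t], [m, m])
```

Unmasked positions do not contribute, so the loss focuses the student on recovering the missing information.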

2.4. Embedding Patch Distillation

Most existing knowledge distillation methods for crop segmentation focus on high-level features and often neglect low-level structures and edge information, which limits performance on small-object segmentation and complex boundary recognition [38,39]. To address this issue, this study leverages the patch embedding mechanism of SegFormer and proposes a new low-level feature distillation approach that fully exploits the rich texture information contained in embedding patches.

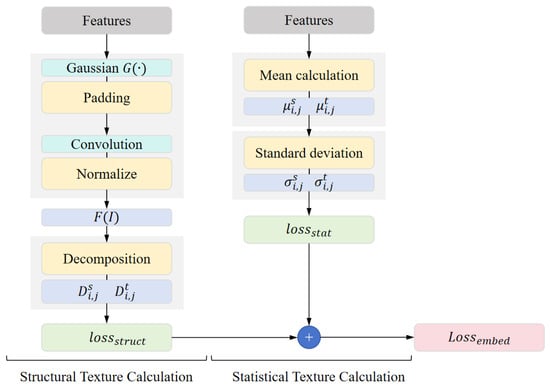

Specifically, we design a dual-texture distillation loss based on embedding patches, which effectively integrates structural texture and statistical texture information. The structural texture loss captures local geometric details—such as crop contours and edges—through multi-scale convolution and decomposition, while the statistical texture loss is based on the mean and the standard deviation, enhancing the model’s perception of global texture features, such as the regional intensity distribution. The combination of these two losses significantly improves the student model’s ability to model and segment fine details in complex agricultural images. The calculation process is illustrated in Figure 4.

Figure 4.

The structure of the embedding patch loss calculation. The left branch computes the structural texture loss using Gaussian kernel-based convolution, normalization, and decomposition, followed by an MSE calculation ($\mathcal{L}_{str}$). The right branch computes the statistical texture loss based on the mean and the standard deviation, followed by an MSE calculation ($\mathcal{L}_{sta}$). The final embedding loss ($\mathcal{L}_{emb}$) is obtained by combining both losses.

Structural Texture Calculation: The core of structural texture knowledge distillation lies in enhancing the student network’s understanding of the geometric features of images. These features include boundaries, shapes, and directions, which play a crucial role in capturing the details and contours of images [40,41]. To achieve this goal, we adopt a multi-scale decomposition method based on the Laplacian pyramid and the directional filter bank [42].

The embedding patches are processed and reshaped into image format $X \in \mathbb{R}^{C \times H \times W}$. A smoothed version is obtained through the Gaussian blur operation $G(\cdot)$, and the Laplacian pyramid layer is obtained as the difference between the original image and the blurred image:

$$
L_i = X_i - G(X_i)
$$

where $X_i$ represents the reshaped image at the $i$-th layer, $G(\cdot)$ is the Gaussian blur operation, and $L_i$ is the output of the Laplacian pyramid at the $i$-th layer, that is, its edge and detail features.
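The blurring-and-differencing step can be sketched in NumPy as follows. The Gaussian width (`sigma=1`, radius 2), the reflect padding, and the factor-2 downsampling between levels are assumptions in the style of a standard Laplacian pyramid; the text itself only specifies repeated blurring and differencing.

```python
import numpy as np

def gaussian_kernel1d(sigma=1.0, radius=2):
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()                         # normalized so constants are preserved

def gaussian_blur(img, sigma=1.0, radius=2):
    """Separable Gaussian blur G(.) of every channel of img (C, H, W), reflect padding."""
    k = gaussian_kernel1d(sigma, radius)
    p = np.pad(img, ((0, 0), (radius, radius), (radius, radius)), mode='reflect')
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode='valid'), 2, p)   # along width
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode='valid'), 1, rows)  # along height

def laplacian_pyramid(img, levels=3):
    """Return [L_0, ..., L_{levels-1}] with L_i = X_i - G(X_i).

    Assumption: X_{i+1} is G(X_i) downsampled by 2, as in a standard Laplacian pyramid.
    """
    out, x = [], img
    for _ in range(levels):
        blurred = gaussian_blur(x)
        out.append(x - blurred)                # band-pass detail: edges and texture
        x = blurred[:, ::2, ::2]               # coarser scale for the next level
    return out

img = np.random.default_rng(0).standard_normal((3, 16, 16))   # stand-in for reshaped patches
pyr = laplacian_pyramid(img, levels=3)
```

A constant input yields all-zero pyramid levels, since blurring a constant leaves it unchanged.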

By repeatedly performing this blurring and differencing operation multiple times, we generate a pyramid of multi-scale image representations. To more effectively capture the directional features of the image, we introduce a directional filter bank. This filter bank is capable of decomposing the image into sub-bands in multiple directions, thereby enhancing the representation ability of structural textures. For the output of each layer of the Laplacian pyramid, we further apply a set of directional filters to extract directional information. The calculation formula is as follows:

$$
D_{i,k} = f_k(L_i)
$$

where $D_{i,k}$ represents the sub-band in the $k$-th direction of the $i$-th layer, and $f_k(\cdot)$ represents the $k$-th directional filter function.

Through this multi-directional decomposition, we are able to capture the edge and texture features of the image in multiple directions. Finally, we calculate the differences between the teacher network and the student network on each directional sub-band and quantify these differences using the MSE. The calculation formula is as follows:

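$$
\mathcal{L}_{str} = \frac{1}{K}\sum_{j=1}^{K}\mathrm{MSE}\left(D_j^{S}, D_j^{T}\right)
$$

where $D_j^{S}$ and $D_j^{T}$ denote the $j$-th directional sub-band (taken over all pyramid layers and directions) of the student and teacher networks, respectively, and $K$ represents the total number of directional sub-bands.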

$\mathcal{L}_{str}$ is the structural texture loss. Minimizing it drives the student network toward the teacher network in terms of structural texture, thereby enhancing its sensitivity to and understanding of the geometric features of the image.
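The directional decomposition and the structural texture loss can be sketched as below. The directional filter bank is not specified in detail here, so this sketch substitutes four hypothetical oriented 3 × 3 difference kernels; it also assumes the student and teacher pyramid levels already have matching shapes (for example, after projecting student patches to the teacher's channel width).

```python
import numpy as np

# Hypothetical stand-in for a directional filter bank: four oriented 3x3 difference kernels.
DIRECTIONAL_KERNELS = [
    np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], float),   # vertical edges
    np.array([[-1, -1, -1], [0, 0, 0], [1, 1, 1]], float),   # horizontal edges
    np.array([[0, 1, 1], [-1, 0, 1], [-1, -1, 0]], float),   # diagonal edges
    np.array([[1, 1, 0], [1, 0, -1], [0, -1, -1]], float),   # anti-diagonal edges
]

def filter2d(img, kernel):
    """Same-size 2D cross-correlation of each channel of img (C, H, W) with a 3x3 kernel."""
    p = np.pad(img, ((0, 0), (1, 1), (1, 1)))                # zero padding
    _, H, W = img.shape
    out = np.zeros_like(img, dtype=float)
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * p[:, di:di + H, dj:dj + W]
    return out

def directional_subbands(levels):
    """Apply every directional filter to every Laplacian level -> flat list of K sub-bands."""
    return [filter2d(lvl, k) for lvl in levels for k in DIRECTIONAL_KERNELS]

def structural_texture_loss(levels_s, levels_t):
    """Mean over the K sub-bands of the MSE between student and teacher responses."""
    d_s = directional_subbands(levels_s)
    d_t = directional_subbands(levels_t)
    return sum(np.mean((a - b) ** 2) for a, b in zip(d_s, d_t)) / len(d_s)

rng = np.random.default_rng(0)
levels_t = [rng.standard_normal((2, 8, 8)), rng.standard_normal((2, 4, 4))]
levels_s = [lvl + 0.1 * rng.standard_normal(lvl.shape) for lvl in levels_t]
loss_str = structural_texture_loss(levels_s, levels_t)
```

With two pyramid levels and four directions, K = 8 sub-bands contribute equally to the loss.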

The Laplacian pyramid enables the multi-scale representation of image details, allowing the model to capture both coarse structures and fine edges in crop images. The directional filter bank supports multi-directional decomposition, enhancing the ability to extract complex crop contours and geometric features. Unlike Gabor filters, which rely on fixed parameters [43], our proposed method can adaptively model diverse shapes and textures in farmland imagery, providing more expressive and robust texture representation, especially in challenging scenarios, such as overlapping leaves, irregular crop shapes, and blurred boundaries.

Statistical Texture Calculation: To enhance the student network’s ability to recognize texture features in crop images, we incorporate the computation of statistical texture loss into the loss function. The core objective is to strengthen the model’s understanding of the pixel intensity distribution in crop images, thereby enabling a more accurate representation of texture variations, disease-affected regions, and edge structures. By modeling the global statistical properties of image pixels, such as the mean and the standard deviation, we aim to capture the overall texture patterns present in the images.

To extract statistical texture features, we divide the feature map into local blocks and calculate the mean and standard deviation of each block to describe the intensity distribution of that local area:

$$
\mu_i = \frac{1}{n}\sum_{j=1}^{n} x_{ij}, \qquad \sigma_i = \sqrt{\frac{1}{n}\sum_{j=1}^{n}\left(x_{ij} - \mu_i\right)^2}
$$

where $\mu_i$ and $\sigma_i$ are the mean and the standard deviation of the $i$-th block, respectively, $x_{ij}$ represents the $j$-th data point in the $i$-th block, and $n$ is the number of pixels within the block.

Based on the aforementioned method, we extract the statistical features of each local block. To enable the student network to effectively learn the statistical texture features of the teacher network, we calculate the differences in their statistical features and use the MSE for quantification. The specific calculation is as follows:

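$$
\mathcal{L}_{sta} = \frac{1}{B}\sum_{i=1}^{B}\left[\left(\mu_i^{S} - \mu_i^{T}\right)^2 + \left(\sigma_i^{S} - \sigma_i^{T}\right)^2\right]
$$

where $B$ is the number of blocks and the superscripts $S$ and $T$ denote the student and teacher networks. $\mathcal{L}_{sta}$ is the statistical texture loss; by minimizing it, the student network better approximates the teacher network in terms of statistical texture.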
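The block statistics and the statistical texture loss can be sketched as follows. The block size (4 × 4), non-overlapping blocks, and per-channel treatment are assumptions; the population standard deviation (1/n) is used to match the formula for $\sigma_i$.

```python
import numpy as np

def block_stats(feat, block=4):
    """Per-block mean and standard deviation of feat (C, H, W).

    Assumptions: non-overlapping block x block regions, H and W divisible by `block`,
    and each channel's blocks treated separately.
    """
    C, H, W = feat.shape
    blocks = feat.reshape(C, H // block, block, W // block, block)
    mu = blocks.mean(axis=(2, 4))      # (C, H/block, W/block)
    sigma = blocks.std(axis=(2, 4))    # population std (divides by n)
    return mu, sigma

def statistical_texture_loss(feat_s, feat_t, block=4):
    """Mean squared difference of block means plus that of block standard deviations."""
    mu_s, sd_s = block_stats(feat_s, block)
    mu_t, sd_t = block_stats(feat_t, block)
    return float(np.mean((mu_s - mu_t) ** 2) + np.mean((sd_s - sd_t) ** 2))

x = np.random.default_rng(0).standard_normal((2, 8, 8))
mu, sd = block_stats(np.full((1, 8, 8), 3.0))
shifted = statistical_texture_loss(x + 1.0, x)   # a uniform shift changes only the means
```

Adding a constant to every pixel changes each block mean by that constant while leaving the standard deviations unchanged, so the loss equals the squared shift.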

Based on the analysis of the low-level features of the embedding patches, the structural texture loss can effectively capture the edge and detail features of the image, thereby improving the ability to recognize the contours of objects in complex scenes. At the same time, the statistical texture loss promotes the student network’s learning of the global intensity distribution, making it consistent with the teacher network. The combination of the two enhances the overall texture consistency and visual effects, which can be formulated as

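$$
\mathcal{L}_{emb} = \lambda_1 \mathcal{L}_{str} + \lambda_2 \mathcal{L}_{sta}
$$

where $\lambda_1$ and $\lambda_2$ are the weighting hyperparameters of the structural texture loss and the statistical texture loss, respectively. In the experiments, $\lambda_1$ and $\lambda_2$ are set to 0.6 and 0.4.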

We perform a weighted fusion of the two losses to construct the embedding loss ($\mathcal{L}_{emb}$) for knowledge distillation, thereby improving the efficiency of distillation learning at both the local and global levels. This method overcomes the shortcomings of single-feature learning and provides a more balanced and comprehensive distillation framework.

3. Results

3.1. Experimental Setup

3.1.1. Dataset

In this experiment, we used two widely recognized crop semantic segmentation datasets. The rationale for selecting these two datasets is as follows:

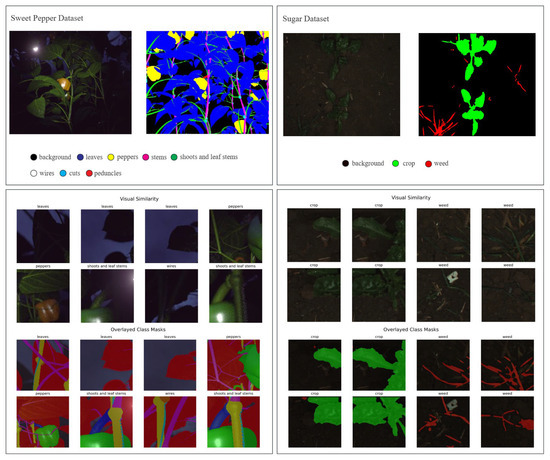

As shown in Figure 5, the sweet pepper dataset [44] contains eight categories and incorporates various complex interference factors. In real agricultural production environments, occlusion between crops is a common phenomenon, posing significant challenges for semantic segmentation tasks. This dataset is used to evaluate the adaptability and effectiveness of our proposed distillation method in complex and realistic farmland scenarios. The sugar dataset [45] includes three categories: background, crop, and weed. It simulates a realistic farmland environment, where one of the main challenges lies in the high color similarity between crops and weeds, which significantly affects the segmentation accuracy. This dataset is primarily used to validate the effectiveness of our proposed distillation method in modeling fine-grained differences between crops and weeds, preserving edge contours, and recognizing low-contrast regions. Figure 5 presents sample images from both datasets, along with representative examples that illustrate the segmentation challenges inherent to each.

Figure 5.

Experimental training dataset display. The sweet pepper dataset reflects the real-world complexity of agricultural field environments, where severe occlusion occurs between crops. In contrast, the sugar dataset highlights the segmentation challenges in real farmland caused by the color similarity between crops and weeds.

We partitioned the sweet pepper and sugar datasets according to a 7:1:2 (training:validation:test) ratio, permanently reserving 20% of the data as a test set for the final evaluation of generalization performance. The remaining 80% was divided evenly into eight subsets, each accounting for 10% of the total data (labeled subset 1 through subset 8). To balance computational cost against the intended proportions of training and validation samples (70% and 10%, respectively), we conducted five repeated hold-out experiments. In each experiment, one subset was randomly selected as the validation set, and the other seven subsets were combined to form the training set. A different random seed was used for each repetition, producing five independent data splits, and the final results were obtained by averaging the outcomes of these five runs.
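The splitting protocol can be sketched with the Python standard library as follows. The seed values and the interleaved assignment of samples to the eight subsets are hypothetical choices; only the 20% fixed test set, the eight 10% subsets, and the five seeded repetitions come from the text.

```python
import random

def make_splits(n_samples, n_repeats=5, base_seed=0):
    """7:1:2 protocol: a fixed 20% test set; the remaining 80% is split into 8 subsets
    of 10% each, and every repetition draws one subset (with its own seed) as validation."""
    idx = list(range(n_samples))
    random.Random(base_seed).shuffle(idx)
    n_test = n_samples // 5
    test, rest = idx[:n_test], idx[n_test:]
    subsets = [rest[i::8] for i in range(8)]            # eight near-equal subsets
    splits = []
    for r in range(n_repeats):
        rng = random.Random(base_seed + 1 + r)           # different seed per repetition
        v = rng.randrange(8)                             # index of the validation subset
        train = [i for k, s in enumerate(subsets) if k != v for i in s]
        splits.append({"train": train, "val": subsets[v], "test": test})
    return splits

splits = make_splits(1000)
```

Because the validation subset is drawn at random in each repetition, two repetitions can select the same subset; an exhaustive eight-fold rotation would avoid this at higher cost.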

In addition, we introduced two non-agricultural datasets to evaluate the generalization and transferability of the proposed distillation method. Experiments on all of these datasets demonstrate the generalization and superiority of our distillation method in both complex agricultural conditions and more general segmentation scenarios.

3.1.2. Model Evaluation Metrics

For quantitative evaluation, we mainly use the mean intersection over union (mIoU) as the metric of semantic segmentation performance; it is calculated as shown in Equation (14). To evaluate the complexity of the distilled models, we additionally report the computational workload (FLOPs) and the number of parameters (Params), as detailed in Equations (15) and (16). Together, these metrics allow an objective comparison of the accuracy and efficiency of different methods.

$$
\mathrm{mIoU} = \frac{1}{k+1}\sum_{i=0}^{k}\frac{p_{ii}}{\sum_{j=0}^{k}p_{ij} + \sum_{j=0}^{k}p_{ji} - p_{ii}} \tag{14}
$$

where the set of categories is indexed from $0$ to $k$ and includes the background (blank) category; $p_{ii}$ represents the number of pixels that belong to class $i$ and are correctly predicted as class $i$; and $p_{ij}$ represents the number of pixels that belong to class $i$ but are incorrectly predicted as class $j$.
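Equation (14) can be computed from a confusion matrix as sketched below. One assumption differs from a literal reading of the formula: classes that appear in neither the ground truth nor the prediction (zero denominator) are excluded from the mean, a common convention.

```python
import numpy as np

def mean_iou(conf):
    """mIoU from a (k+1) x (k+1) confusion matrix, conf[i, j] = pixels of class i predicted as j."""
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)                                  # p_ii
    denom = conf.sum(axis=1) + conf.sum(axis=0) - tp    # sum_j p_ij + sum_j p_ji - p_ii
    with np.errstate(divide='ignore', invalid='ignore'):
        iou = tp / denom                                # NaN for classes absent everywhere
    return float(np.nanmean(iou)), iou

m, iou = mean_iou([[3, 1],
                   [1, 5]])
m_absent, _ = mean_iou([[2, 0, 0],
                        [0, 0, 0],
                        [0, 0, 2]])   # class 1 never occurs and is never predicted
```

In the two-class example, class 0 has IoU 3/5 and class 1 has IoU 5/7.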

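$$
\mathrm{FLOPs} = \sum_{l} C_{in}^{l}\, C_{out}^{l}\, K_h^{l}\, K_w^{l}\, H_{out}^{l}\, W_{out}^{l} \tag{15}
$$

where $C_{in}^{l}$ and $C_{out}^{l}$ represent the number of input and output channels in the $l$-th layer, $K_h^{l}$ and $K_w^{l}$ are the height and width of the convolutional kernel, and $H_{out}^{l}$ and $W_{out}^{l}$ represent the spatial dimensions of the output feature map.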

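$$
\mathrm{Params} = \sum_{l}\left(C_{in}^{l}\, K_h^{l}\, K_w^{l} + b\right) C_{out}^{l} \tag{16}
$$

where $b \in \{0, 1\}$ denotes the presence of a bias term.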
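As a worked example of the per-layer terms above, the sketch below counts FLOPs and parameters for a hypothetical two-layer convolutional network. It assumes the plain product form for FLOPs (one multiply-accumulate counted once, no factor of 2); some tools report twice this value.

```python
def conv_flops(c_in, c_out, k_h, k_w, h_out, w_out):
    """Per-layer FLOPs as the plain product of the symbols above (no factor of 2 assumed)."""
    return c_in * c_out * k_h * k_w * h_out * w_out

def conv_params(c_in, c_out, k_h, k_w, bias=True):
    """Per-layer parameters: kernel weights plus an optional bias per output channel."""
    return (c_in * k_h * k_w + (1 if bias else 0)) * c_out

# Hypothetical layers: (c_in, c_out, k_h, k_w, h_out, w_out)
layers = [(3, 32, 7, 7, 128, 128),
          (32, 64, 3, 3, 64, 64)]
total_flops = sum(conv_flops(*layer) for layer in layers)
total_params = sum(conv_params(*layer[:4]) for layer in layers)
```

Here the 7 × 7 stem contributes 77,070,336 FLOPs and 4,736 parameters, and the 3 × 3 layer contributes 75,497,472 FLOPs and 18,496 parameters.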

3.1.3. Training Configuration

The network models used in this study are based on the SegFormer architecture, with both the backbone and decoder structures consistent with the original paper. Specifically, the backbone adopts a hierarchical Mix Transformer as the feature extractor, which consists of four stages with overlapping patch embedding modules and multi-head self-attention mechanisms. In the experiments, SegFormer-B0, B1, B2, and B3 were used as backbone networks for comparison and validation, while all other hyperparameters (including the feature dimensions of each stage, the number of attention heads, network depths, activation functions, and normalization methods) strictly followed the default settings in the original SegFormer implementation. The experimental code has been made openly available on GitHub to facilitate reproducibility. The detailed experimental parameters are presented in Table 1.

Table 1.

Training configuration and hyper-parameter settings for the distillation experiments.

To mitigate model overfitting and enhance the generalization capability, this study incorporates multiple regularization strategies during training. Specifically, at the data level, data augmentation techniques, such as random flipping and random cropping, are employed to improve the model’s robustness to input variations. In terms of optimizer configuration, the AdamW optimizer is used with its built-in weight decay mechanism, and a polynomial learning rate decay strategy is adopted to effectively control the convergence process.
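The polynomial decay and the joint image/label augmentation can be sketched as follows. The base learning rate, decay power, and crop size below are hypothetical placeholders (the actual values belong in Table 1), and in a PyTorch training loop the schedule would typically be paired with `torch.optim.AdamW`. The key detail in the augmentation is that the same flip and crop must be applied to the image and its label mask.

```python
import numpy as np


def poly_lr(base_lr, step, max_steps, power=1.0, min_lr=0.0):
    """Polynomial decay from base_lr at step 0 down to min_lr at max_steps."""
    progress = min(step, max_steps) / max_steps
    return (base_lr - min_lr) * (1.0 - progress) ** power + min_lr


def random_flip_crop(image, mask, crop_hw, rng):
    """Apply one random horizontal flip and one random crop jointly to image (H, W, C) and mask (H, W)."""
    if rng.random() < 0.5:
        image, mask = image[:, ::-1], mask[:, ::-1]
    crop_h, crop_w = crop_hw
    h, w = mask.shape
    top = int(rng.integers(0, h - crop_h + 1))   # assumes the crop fits inside the image
    left = int(rng.integers(0, w - crop_w + 1))
    return (image[top:top + crop_h, left:left + crop_w],
            mask[top:top + crop_h, left:left + crop_w])


rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))
mask = rng.integers(0, 3, size=(64, 64))
img_c, mask_c = random_flip_crop(image, mask, (32, 32), rng)
print(img_c.shape, mask_c.shape)  # (32, 32, 3) (32, 32)
print([round(poly_lr(6e-5, s, 1000), 8) for s in (0, 500, 1000)])  # hypothetical base_lr
```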

3.1.4. Distillation Loss Combination Strategies

Our combined distillation strategy is designed around three complementary types of knowledge: embedding-level semantic representations, structural relations between features, and semantic feature reconstruction. Xie et al. [21] pointed out in SegFormer that the patch embedding module extracts rich texture and semantic information from images, effectively capturing their overall structure and content distribution. Inspired by this, we introduce the embedding features as a knowledge source in the distillation process, so that the lightweight student model can better inherit the teacher model’s semantic representation capabilities.

However, the embedding-only distillation method has two main limitations: first, it struggles to capture fine-grained local information; second, it overlooks the modeling of spatial relationships between features, which constrains its representation capability in complex scenarios. To address these issues, we propose two complementary distillation strategies to enhance the comprehensiveness and effectiveness of the knowledge transfer.

Feature reconstruction distillation draws inspiration from the concept of reconstruction error in anomaly detection, using it as a supervisory signal to guide the student model in better recovering the deep semantic features of the teacher model, especially enhancing the recognition of small objects and boundary regions [46]. In contrast, feature relation distillation focuses on modeling the relative relationships and spatial structures among features, compensating for the lack of structural modeling in embedding distillation and further strengthening the student model’s understanding of object shapes, structural continuity, and contextual dependencies.
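The three knowledge sources can be illustrated with generic loss forms. The sketch below is not the paper’s formulation (those losses are defined in the methodology section); it assumes features flattened to N spatial positions by C channels, a linear projection for channel alignment, random masking of student positions for the reconstruction branch (our assumption, motivated by Section 3.3.2 calling it a self-supervised loss), and matching of pairwise cosine-similarity matrices for the relation branch. All function names are hypothetical.

```python
import numpy as np


def mse(a, b):
    return float(np.mean((a - b) ** 2))


def embedding_loss(student_tokens, teacher_tokens, proj):
    """Match student patch embeddings (N x Cs) to teacher ones (N x Ct) through a linear map proj (Cs x Ct)."""
    return mse(student_tokens @ proj, teacher_tokens)


def reconstruction_loss(student_feat, teacher_feat, decoder, mask_ratio, rng):
    """Hide a random subset of student positions, then ask a decoder to regenerate the full teacher features."""
    keep = rng.random(student_feat.shape[0]) >= mask_ratio   # True = position kept
    return mse(decoder(student_feat * keep[:, None]), teacher_feat)


def relation_loss(student_feat, teacher_feat):
    """Match the N x N cosine-similarity matrices between spatial positions of student and teacher."""
    def affinity(f):
        f = f / (np.linalg.norm(f, axis=1, keepdims=True) + 1e-8)
        return f @ f.T
    return mse(affinity(student_feat), affinity(teacher_feat))


rng = np.random.default_rng(0)
n_pos, c_s, c_t = 16, 32, 64                    # e.g. a 4 x 4 map, B0-like vs. B3-like widths
student = rng.standard_normal((n_pos, c_s))
teacher = rng.standard_normal((n_pos, c_t))
proj = rng.standard_normal((c_s, c_t)) * 0.1    # would be learned in practice
print(embedding_loss(student, teacher, proj))
print(reconstruction_loss(student, teacher, lambda x: x @ proj, 0.5, rng))
print(relation_loss(student, teacher))          # works even though c_s != c_t
```

One design consequence is visible here: the relation loss compares N × N matrices, so it needs no channel projection, whereas the embedding and reconstruction terms do.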

To validate the complementarity and synergy of the proposed distillation strategies, and to further improve the overall distillation effectiveness, we design three distillation loss configurations, described in Table 2. The embedding, feature reconstruction, and feature relation losses used in these configurations, including their calculation processes, are described in detail in the methodology section.
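The configurations can be summarized as which distillation terms are added to the segmentation loss. In the sketch below, the mapping of SEKD-SU to the reconstruction loss and SEKD-CT to the relation loss is inferred from the ablation description in Section 3.3.2, the (-EM) variants are the ablated models defined there, and the loss weights and example values are hypothetical.

```python
# Which distillation terms each configuration adds to the task loss. The SU/CT mapping
# is inferred from the ablation description in Section 3.3.2; the weights are hypothetical.
CONFIGS = {
    "SEKD": ("embedding",),
    "SEKD-SU": ("embedding", "reconstruction"),
    "SEKD-CT": ("embedding", "relation"),
    "SEKD-SU(-EM)": ("reconstruction",),
    "SEKD-CT(-EM)": ("relation",),
}


def total_loss(config, task_loss, distill_losses, weights):
    """Segmentation loss plus the weighted distillation terms enabled by `config`."""
    return task_loss + sum(weights[name] * distill_losses[name] for name in CONFIGS[config])


losses = {"embedding": 0.40, "reconstruction": 0.25, "relation": 0.10}   # example values
weights = {"embedding": 1.0, "reconstruction": 1.0, "relation": 1.0}     # hypothetical
for cfg in CONFIGS:
    print(cfg, round(total_loss(cfg, 1.2, losses, weights), 2))
```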

Table 2.

Description of distillation loss combinations.

3.2. Analysis of Experimental Results

3.2.1. The Analysis of the Distillation Results on the Sweet Pepper Dataset

The sweet pepper dataset involves relatively complex interference between categories; for example, small objects such as the nodes of the plants are challenging to segment. As shown in Table 3, we systematically evaluated SEKD and the combined distillation methods on the sweet pepper dataset and analyzed their effect on the student model.

Table 3.

The results of distillation on the sweet pepper dataset.

The experimental results show that SEKD is effective for agricultural image segmentation. On the sweet pepper dataset, SEKD improved the mIoU by 2.73% over the undistilled student model, confirming that the proposed embedding distillation mechanism improves performance in complex agricultural scenarios.

To further exploit embedding distillation, we introduced two combined strategies, SEKD-SU and SEKD-CT. Compared with the undistilled student model, SEKD-SU increased the mIoU on this dataset by 7.59% and SEKD-CT by 6.57%, both well above the 2.73% gain of SEKD alone. These comparisons confirm that the combined strategies effectively complement the embedding method and highlight the synergy of multi-level knowledge transfer.

Table 3 also compares two representative distillation methods: KD, a traditional knowledge distillation method, and TKD, a more recent approach. Although both improve the student model to some extent (KD raises the mIoU to 38.41% and TKD to 41.96%), their gains on the sweet pepper dataset remain clearly smaller than those of our combined distillation methods. This supports the advantage of the proposed embedding distillation and its multi-level optimization strategy for agricultural segmentation models.

In terms of model complexity, the distilled SegFormer-B0 student achieves a large gain in segmentation performance at a limited cost: the number of parameters increases by only 0.87 M (from 3.72 M to 4.59 M) and the FLOPs by only 2.82 G (from 13.59 G to 16.41 G), while the mIoU increases by 7.59% (from 37.83% to 45.42%). This favorable trade-off between a moderate increase in model size and a substantial performance gain indicates the practical value of the proposed method for intelligent agricultural applications.
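The mIoU gains in this section are absolute differences between two percentages (45.42 − 37.83 = 7.59), whereas the parameter and FLOP increases are easiest to judge relative to the baseline. The short calculation below, using only the values quoted above, puts all three on the same footing.

```python
def change(before, after):
    """Absolute change and change relative to the starting value, in percent."""
    delta = after - before
    return delta, 100.0 * delta / before


# Undistilled vs. distilled SegFormer-B0 on the sweet pepper dataset (values quoted above).
reported = {
    "Params (M)": (3.72, 4.59),
    "FLOPs (G)": (13.59, 16.41),
    "mIoU (%)": (37.83, 45.42),
}
for name, (before, after) in reported.items():
    delta, rel = change(before, after)
    print(f"{name}: {before} -> {after}, +{delta:.2f} absolute, +{rel:.2f}% relative")
```

Relative to the undistilled student, parameters grow by about 23% and FLOPs by about 21%, while mIoU rises by about 20% in relative terms (7.59 points in absolute terms).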

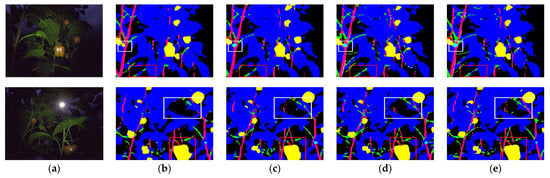

Visual analysis of the segmentation results on the sweet pepper dataset: Figure 6 shows example segmentation results on sweet pepper images for qualitative comparison. The white and red lines mark regions where the segmentation results of the methods differ.

Figure 6.

An example image of the segmentation results of sweet peppers. (a) Original image. (b) Segmentation result without distillation. (c) SEKD segmentation result. (d) SEKD-SU segmentation result. (e) SEKD-CT segmentation result.

As Figure 6 shows, the embedding distillation SEKD (c) segments the crop nodes, stems, and leaves noticeably better, whereas the undistilled student model (b) produces blurry crop segmentation and fails to correctly identify the nodes, stems, and leaves. This indicates that SEKD embedding distillation effectively improves the model’s recognition accuracy for small objects. Panels (d) and (e) show the results of the combined distillation methods, which segment the crop images more clearly than the basic SEKD method.

The above segmentation results indicate that SEKD can effectively improve the segmentation accuracy of the original model in farmland environments with complex interference factors. The further introduction of combined distillation methods (SEKD-SU and SEKD-CT) compensates for the shortcomings of the basic SEKD distillation, such as blurry crop recognition and incomplete boundary segmentation. These methods demonstrate higher accuracy and precision in the recognition and segmentation of multi-class crops. These results validate the important role of the combined distillation strategy in enhancing the model’s ability to identify fine-grained features in agricultural scenarios.

3.2.2. The Analysis of the Distillation Results on the Sugar Dataset

The sugar dataset has three segmentation categories (crop, weed, and background) and contains no interference from complex environments. As shown in Table 4, the SEKD series of distillation models also substantially improves segmentation performance on the sugar dataset.

Table 4.

The results of distillation on the sugar dataset.

The mIoU of SegFormer-B3 (87.38%) is considerably higher than that of SegFormer-B0 (74.44%), owing to its much larger number of parameters (47.22 M) and FLOPs (142.75 G). In contrast, SEKD, with only 4.58 M parameters and 16.37 GFLOPs, achieves an mIoU of 76.73%, a 2.29% improvement over SegFormer-B0. The combined distillation models SEKD-SU and SEKD-CT further improve performance at essentially the same resource cost, reaching mIoUs of 79.77% and 81.23%, respectively. This trend indicates that the SEKD series improves segmentation accuracy through structural optimization rather than by simply increasing the model size.

As shown in Table 4, both KD and TKD keep parameter counts and computational costs relatively low. They improve on the baseline SegFormer-B0 (74.44% mIoU), reaching 75.55% and 76.68%, respectively; TKD is roughly on par with SEKD (76.73%), but both remain clearly below the combined SEKD-SU and SEKD-CT methods. A likely reason for the lower efficiency of KD and TKD is their reliance on a single source of knowledge: their knowledge transfer is based only on the final output or on specific feature information, which limits how much the student can gain. In contrast, SEKD combined distillation adopts a multi-level, multi-perspective strategy that integrates several knowledge sources, such as structural information and embedding features, thereby substantially improving segmentation performance.

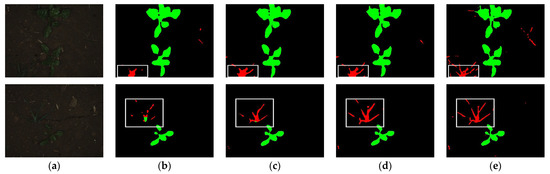

The SEKD series of distillation methods markedly improves the contour segmentation accuracy of the target categories (crop and weeds) and of the background in the sugar dataset, achieving efficient segmentation under limited resources and showing that the method balances lightweight design and accuracy. As shown in Figure 7, SEKD delineates boundaries more distinctly on complex leaf edges and small weed areas. Compared with SEKD, SEKD-CT produces noticeably less noise in the transition area where the crop plants meet the background, and markedly fewer weed pixels are mis-segmented. We attribute this improvement to the adaptive fusion of multi-scale contextual information in this distillation method, which captures both local detail features and global semantic correlations, enabling precise delineation of fine-grained contours under limited computational resources. These results show that the structural optimization strategy of the SEKD series not only improves overall segmentation performance but also, by enhancing contour consistency, can provide reliable technical support for automated weeding in agricultural scenarios.

Figure 7.

An example image of the segmentation results of sugar. The white boxes highlight the regions with differences in the model segmentation performance. (a) Original image. (b) Segmentation result without distillation. (c) SEKD segmentation result. (d) SEKD-SU segmentation result. (e) SEKD-CT segmentation result.

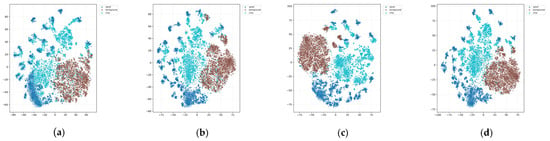

Analyses of t-SNE visualizations: To examine feature representations, we use t-SNE visualization to evaluate the separability of features in the latent space under the embedding distillation and combined distillation strategies.

As shown in Figure 8, under identical t-SNE settings, the combined distillation in SEKD-CT (Sil = 0.27, DBI = 1.83) yields a feature distribution in the student model with tighter intra-class clusters and clearer inter-class separation, strengthening cohesion within each class and widening the gaps between semantic categories. In contrast, the features of the student model without distillation (Sil = 0.17, DBI = 2.58) remain dispersed within classes and overlap noticeably between classes. This visual evidence agrees with the clustering metrics (a higher silhouette coefficient and a lower Davies–Bouldin index indicate better-separated clusters) and confirms, at the feature-space level, that the joint distillation strategy enhances feature discriminability, consistent with the observed performance improvements.

Figure 8.

t-SNE visualizations of feature distributions on the sugar dataset for (a) SegFormerB0 (Sil = 0.17, DBI = 2.58); (b) SEKD (Sil = 0.21, DBI = 2.13); (c) SEKD-SU (Sil = 0.27, DBI = 2.05); and (d) SEKD-CT (Sil = 0.27, DBI = 1.83).
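The two clustering metrics reported in Figure 8 can be computed as follows. This NumPy sketch follows the standard definitions (scikit-learn provides equivalent `sklearn.metrics.silhouette_score` and `sklearn.metrics.davies_bouldin_score`); this section does not state whether the metrics were computed on the 2-D t-SNE coordinates or on the original features, so the input `x` could be either. The pairwise distance matrix is O(N²) in memory, so pixel features would normally be subsampled first.

```python
import numpy as np


def pairwise_dist(x):
    """Euclidean distance matrix between the rows of x."""
    diff = x[:, None, :] - x[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def silhouette(x, labels):
    """Mean silhouette s(i) = (b - a) / max(a, b); higher means tighter, better-separated clusters."""
    d = pairwise_dist(x)
    classes = np.unique(labels)
    scores = np.zeros(len(x))
    for i in range(len(x)):
        same = labels == labels[i]
        n_other = same.sum() - 1                 # same-cluster points excluding i itself
        if n_other == 0:
            continue                             # singleton cluster: s(i) = 0 by convention
        a = d[i, same].sum() / n_other           # d[i, i] = 0, so i adds nothing to the sum
        b = min(d[i, labels == c].mean() for c in classes if c != labels[i])
        scores[i] = (b - a) / max(a, b)
    return float(scores.mean())


def davies_bouldin(x, labels):
    """Mean over clusters of the worst (s_k + s_j) / d(c_k, c_j) ratio; lower is better."""
    classes = np.unique(labels)
    cents = np.stack([x[labels == c].mean(axis=0) for c in classes])
    scatter = np.array([np.linalg.norm(x[labels == c] - cents[k], axis=1).mean()
                        for k, c in enumerate(classes)])
    sep = pairwise_dist(cents)
    np.fill_diagonal(sep, np.inf)                # exclude k == j from the maximum
    ratios = (scatter[:, None] + scatter[None, :]) / sep
    return float(ratios.max(axis=1).mean())


x = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
labels = np.array([0, 0, 1, 1])
print(silhouette(x, labels), davies_bouldin(x, labels))  # about 0.90 and 0.10
```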

3.2.3. Comparison with Other Advanced Knowledge Distillation Methods

To investigate the generalization and transferability of the proposed distillation method across different domains, we conducted experiments on two non-agricultural semantic segmentation datasets: Cityscapes [47] and Pascal VOC2012 [48]. By comparing our method with several mainstream distillation strategies, we aim to validate its adaptability and effectiveness in complex urban street scenes and general object segmentation tasks, thereby further demonstrating its broad application potential in cross-domain semantic segmentation.

As shown in Table 5, we compared various knowledge distillation methods on the Cityscapes dataset. The baseline model SegFormer-B0 achieved an mIoU of 53.40%, and traditional knowledge distillation (KD) improved it by only 3.49%. Other methods with stronger performance, such as RKD and VID, achieved improvements of 3.61% and 5.23%, respectively, but still fall short of the proposed SEKD method.

Table 5.

The distillation results on the Cityscapes dataset.

SEKD and its combined strategies (SEKD-SU and SEKD-CT) achieved larger performance improvements while keeping parameter counts and computational complexity low. For example, the student model distilled by SEKD-SU has only about 20% of the parameters of the teacher model SegFormer-B2, with an mIoU gap of 12.41%. These results suggest that the SEKD series is not limited to agricultural scenarios but also adapts well across domains and is practical for engineering use.

As shown in Table 6, we conducted systematic knowledge distillation experiments on the augmented VOC2012 dataset. CD distillation, based on channel-level knowledge distillation, normalizes each channel’s activation map to strengthen attention to salient regions, increasing the mIoU of the student model by 9.32% (from 44.62% to 53.94%). KR distillation introduces a cross-stage knowledge review mechanism in which low-level features supervise high-level features, increasing the mIoU by 17.02% (from 44.62% to 61.64%).
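The activation-map normalization used by channel-level distillation can be sketched as follows: each channel’s map is turned into a distribution over spatial positions with a temperature softmax, and the student is penalized by the KL divergence from the teacher’s distribution, averaged over channels and scaled by the squared temperature. This is a sketch of the general channel-wise distillation idea, not the exact CD configuration used in Table 6; the temperature value and the assumption that student and teacher maps already have the same number of channels are ours.

```python
import numpy as np


def spatial_softmax(feat, tau):
    """Turn each channel's H x W activation map of a (C, H, W) tensor into a distribution over positions."""
    logits = feat.reshape(feat.shape[0], -1) / tau
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


def channel_wise_kd(student, teacher, tau=4.0):
    """Per-channel KL(teacher || student) over spatial positions, averaged over channels, scaled by tau**2."""
    p_t = spatial_softmax(teacher, tau)
    p_s = spatial_softmax(student, tau)
    kl = np.sum(p_t * (np.log(p_t + 1e-12) - np.log(p_s + 1e-12)), axis=1)
    return float(tau ** 2 * kl.mean())


rng = np.random.default_rng(0)
teacher = rng.standard_normal((21, 16, 16))   # e.g. 21 Pascal VOC class-score maps
student = rng.standard_normal((21, 16, 16))
print(channel_wise_kd(student, teacher))
print(channel_wise_kd(teacher, teacher))      # 0.0 for identical maps
```

Because the softmax runs over positions within each channel, the loss emphasizes where each channel is most active rather than the absolute activation values.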

Table 6.

The distillation results on the augmented VOC2012 dataset.

The proposed SEKD series achieves a better balance between model complexity and performance gains. With embedding patch distillation, SEKD increases the mIoU by 17.29% (from 44.62% to 61.91%) at a moderate cost of 1.75 M additional parameters and 4.26 GFLOPs. Its combined variants, SEKD-SU and SEKD-CT, further raise the mIoU to 64.13% and 66.26%, respectively, corresponding to gains of 19.51% and 21.64% over the undistilled student model. These results show that embedding patch feature distillation effectively extracts structured knowledge and that the combined strategy, by integrating multi-level knowledge, substantially improves the model’s transferability.

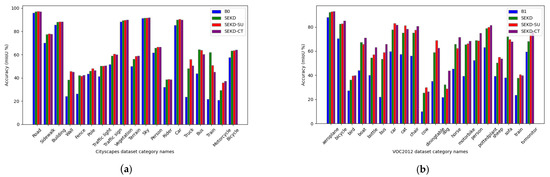

Per-Class Analysis of Distillation Results on Cityscapes and VOC2012 Datasets: Figure 9 visualizes the per-class IoU on Cityscapes and VOC2012. The SEKD series of models shows clear improvements in multiple categories.

Figure 9.

Per-class IoU of the SEKD series of distillation methods on the Cityscapes and VOC2012 datasets. (a) Per-class IoU on the Cityscapes dataset; (b) per-class IoU on the VOC2012 dataset.

As shown in Figure 9, on the Cityscapes dataset the SEKD series outperforms the baseline model (B0) in most categories, with higher accuracy especially on harder categories such as “fence”, “pole”, and “traffic light”. This indicates stronger feature extraction in complex scenes. Similarly, on the VOC2012 dataset, the SEKD series shows clear advantages in categories such as “bird”, “dog”, and “person”. In particular, SEKD-CT exceeds the student model’s IoU in every category and improves substantially on the basic SEKD method. These results indicate that SEKD and the combined distillation methods effectively enhance the model’s generalization and fine-grained classification abilities, supporting their potential for complex visual tasks.

3.3. Ablation Experiments

3.3.1. The Verification of the Effectiveness of the Feature Enhancement and Fusion Module

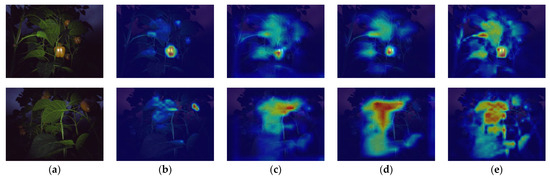

We conducted ablation experiments on the effectiveness of the proposed Feature Enhancement and Fusion Module (FEF) to verify its contribution to improving the performance of knowledge distillation. In order to comprehensively evaluate the impact of the FEF module, we designed a series of experiments, observing the performance changes by gradually removing or replacing the key functions within the module.

First, we set up a basic fusion module (Base), which contains no feature enhancement mechanism and relies only on basic convolutional operations to increase the feature dimension. On this basis, we introduced the sub-components of the FEF module one at a time, implementing fusion with channel attention only (CF) and with spatial attention only (SF). Comparing these models on the sweet pepper dataset identifies the contribution of each sub-module to the overall performance.
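To make the CF and SF variants concrete, the sketch below uses CBAM-style channel and spatial gates as a stand-in. This is an assumption: the internal design of FEF is given in the methodology section, not here. The spatial gate is further simplified to a scalar-weighted mix of the channel-mean and channel-max maps, where CBAM itself applies a 7 × 7 convolution; all names and weights are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """CBAM-style channel gate for a (C, H, W) map: a shared two-layer MLP on avg- and max-pooled vectors."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)          # C -> C/r -> C with ReLU in between
    gate = sigmoid(mlp(feat.mean(axis=(1, 2))) + mlp(feat.max(axis=(1, 2))))
    return feat * gate[:, None, None]                # reweight each channel


def spatial_attention(feat, a, b, bias=0.0):
    """Simplified spatial gate: per-pixel mix of channel-mean and channel-max maps.
    CBAM applies a 7x7 convolution to these two maps; scalar weights keep the sketch short."""
    gate = sigmoid(a * feat.mean(axis=0) + b * feat.max(axis=0) + bias)
    return feat * gate[None, :, :]                   # reweight each spatial position


def enhance_and_fuse(feat, w1, w2, a, b):
    """Channel gate followed by spatial gate (CBAM's sequential order)."""
    return spatial_attention(channel_attention(feat, w1, w2), a, b)


rng = np.random.default_rng(0)
c, r = 64, 16                                        # channels and reduction ratio (hypothetical)
feat = rng.standard_normal((c, 32, 32))
w1 = rng.standard_normal((c // r, c)) * 0.1
w2 = rng.standard_normal((c, c // r)) * 0.1
out = enhance_and_fuse(feat, w1, w2, a=1.0, b=1.0)
print(out.shape)  # (64, 32, 32)
```

In this picture, CF corresponds to applying only `channel_attention`, SF to applying only `spatial_attention`, and the full module to applying both.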

According to the results in Table 7, adding the channel attention mechanism markedly improved the accuracy of the distillation model. Introducing the spatial attention mechanism further enhanced performance, indicating the importance of spatial attention for capturing key spatial information. Finally, the distillation model achieved its best performance with the complete FEF module, demonstrating its effectiveness for feature fusion and for enhancing feature expressiveness.

Table 7.

The experimental results of gradually introducing spatial attention and channel attention into the FEF module, where the sweet pepper dataset was used in the experiment.

In Figure 10, we visualized the attention regions of the model on crop images under different attention mechanisms within the FEF module. (a) shows the original image, while (b) represents the baseline model without any attention mechanism. In this case, the attention distribution is relatively scattered, and the model fails to focus on key parts of the crop, especially the edges of the fruit and leaf boundaries, which may lead to blurred target recognition or broken boundaries. In (c) and (d), the channel attention mechanism and the spatial attention mechanism are applied separately. The results show that the model significantly enhances its focus on key crop regions, such as fruits and young leaves, with more concentrated attention on edges, thus improving its ability to perceive structural and fine-grained features. (e) shows the attention heatmap of the model after integrating the complete FEF module. The model with the FEF module demonstrates a strong ability to capture both the overall shape of the crop target and the detailed features of local areas, such as fruit edges and leaf tips. This global–local fusion attention strategy is particularly suitable for crop recognition and localization in agricultural images with complex backgrounds, such as overlapping leaves, uneven lighting, and occlusions.

Figure 10.

Visualization of attention maps. The darker colors represent areas where the model’s attention is more intensely focused. (a) Original image. (b) Base. (c) +CF (Channel Fusion). (d) +SF (Spatial Fusion). (e) FEF (Feature Enhancement and Fusion).

Through the feature enhancement strategy, the activation of meaningful regions is strengthened. This fusion strategy not only enhances the model’s expressive capability but also improves its ability to distinguish between similar objects and handle complex backgrounds more effectively.

3.3.2. Verifying the Effectiveness of Individual Distillation Modules

To verify the distillation effect of each module, we conducted the comparative experiment shown in Table 8, using SegFormer-B0 and SegFormer-B3 as the student and teacher models, respectively, whose mIoU values on the sugar dataset are 74.44% and 87.38%. We designed two comparison models, SEKD-SU(-EM) and SEKD-CT(-EM), which are SEKD-SU and SEKD-CT with the embedding distillation loss removed; that is, the feature reconstruction loss or the feature relation loss, respectively, is used alone as the distillation loss during training.

Table 8.

The distillation results with and without the embedding patch loss, where the sugar dataset was used in the experiment.

As Table 8 shows, SEKD reaches an mIoU of 76.73% on the sugar dataset, 2.29% higher than SegFormer-B0 (74.44%), indicating that embedding module distillation clearly improves model performance.

We also evaluated different combinations of loss functions. Removing the embedding loss from SEKD-CT reduces the mIoU from 81.23% to 78.20%, a decrease of 3.03%, showing that the embedding loss contributes substantially to the combined model. At the same time, the feature relation loss alone still exceeds the undistilled student by 3.76% (78.20% vs. 74.44%), indicating that the proposed feature relation distillation is effective on its own. Similarly, removing the embedding loss from SEKD-SU and retaining only the self-supervised feature reconstruction loss lowers the mIoU from 79.77% to 78.27%, a decline of 1.50%, while the reconstruction loss alone still gains 3.83% over the student, confirming the contribution of feature reconstruction distillation.

These results validate the effectiveness of the feature relationship distillation, feature reconstruction distillation, and embedding distillation methods we proposed. When different types of distillation methods are used in combination, the segmentation performance is superior to that of a single distillation method. This further demonstrates the potential of the combined distillation strategy we proposed in enhancing the model’s understanding of agricultural images. By integrating multi-level knowledge, this strategy consistently enhances the model’s ability to recognize fine-grained features, such as crops and weeds.

4. Discussion

4.1. Research Contributions and Advantages

This paper systematically analyzed the key factors affecting the effectiveness of knowledge distillation in agricultural domains and proposed a multi-level knowledge distillation method for enhanced crop segmentation in precision agriculture. Specifically, by combining SegFormer-based embedding patch knowledge distillation (SEKD) with feature relation distillation and feature reconstruction distillation, our method substantially enhances the student network’s ability to perceive intricate features and structural information in complex agricultural environments. The experimental results show notable performance improvements on the sweet pepper and sugar datasets, particularly in detecting small targets (such as the nodes of sweet pepper plants) and suppressing interference from complex backgrounds (overlapping leaves and fruits). Moreover, the method adds only modest computational and resource overheads to the student model, which makes it well suited for deployment on resource-constrained edge computing devices, such as agricultural robots and drone platforms, and indicates promising practical applicability and scalability.

In practice, when stitching and processing the knowledge patches, we limited the image size to mitigate potential boundary effects and feature blurring. Alternatives such as annealing, nonlinear image compression, and explicit boundary-effect handling offer greater flexibility in principle and could improve performance through more refined local feature fusion, but they typically depend on specific model architectures and require complex parameter tuning, which reduces their transferability across segmentation models and hampers general deployment. In contrast, limiting the image size is simple to implement, integrates easily with existing segmentation architectures, and requires no substantial changes to the algorithmic framework, which makes it practical from an engineering standpoint. Its adaptability and deployment flexibility also allow it to fit well into knowledge distillation frameworks and to meet the efficiency and resource constraints of real-world systems.

4.2. Limitations and Weaknesses of the Proposed Method

Although the proposed distillation framework demonstrates promising effectiveness in agricultural segmentation tasks, several notable limitations remain in this study. First, the additional distillation steps inevitably introduce some model complexity; for example, the multi-level feature alignment between the teacher and the student networks during training increases the computational load and the memory consumption (leading to increased training computation and parameter counts). Second, the types of datasets used in this study are relatively limited, covering only sweet pepper and sugar crops, without involving a broader range of crop types or more complex environmental variations (such as intense lighting, occlusion, or extreme weather). Therefore, the generalization ability and robustness of the proposed method in other agricultural scenarios still need to be further validated. Finally, although our method emphasizes lightweight design and is suitable for deployment on resource-constrained devices, there is always a trade-off between model compression and segmentation accuracy. The excessive simplification of the model structure may compromise the richness of feature representation, thereby affecting model performance in more complex agricultural scenarios.

4.3. Future Directions and Prospects for Improvement

In future work, we will focus on exploring more efficient distillation strategies, such as dynamic or adaptive distillation methods, to further reduce additional computational overheads and training costs. At the same time, we plan to expand the scope of our datasets to include a wider variety of crop types and more complex environmental conditions, thereby comprehensively validating and enhancing the generalization ability and robustness of our model. Through these further studies and optimizations, the proposed method will become better aligned with the practical needs of smart agriculture and contribute to the widespread and robust application of agricultural visual perception technologies.

5. Conclusions

In this paper, we proposed a SegFormer-based embedding patch knowledge distillation framework (SEKD). By integrating feature relation distillation and feature reconstruction distillation, we constructed a multi-level, fine-grained knowledge transfer mechanism that addresses common challenges in agricultural imagery, such as indistinct boundaries between crops and weeds, inaccurate recognition of small targets, and insufficient representation of structural information in complex farmland scenes. Because the distilled student is far smaller than the teacher model, SEKD lowers the cost of deploying segmentation models on agricultural equipment, improving their feasibility in real-world smart agriculture. Systematic experiments on two high-quality agricultural image segmentation datasets (sweet pepper and sugar) show that, compared with other mainstream knowledge distillation methods, SEKD achieves stronger boundary awareness and structural consistency in crop image segmentation. This study provides a lightweight, accurate, and deployable visual solution for tasks such as crop recognition and weed detection in smart agriculture, laying a foundation for the practical application of intelligent agricultural perception systems.

Author Contributions

Conceptualization, Z.L.; methodology, L.X. (Lan Xiang) and J.S.; software, Z.L. and L.X. (Lan Xiang); validation, J.S.; formal analysis, L.X. (Lijia Xu); investigation, L.X. (Lan Xiang); resources, J.S.; data curation, M.W.; writing—original draft preparation, Z.L. and L.X. (Lan Xiang); writing—review and editing, J.S.; visualization, D.L.; supervision, M.W.; project administration, J.S.; funding acquisition, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

The study was supported by the Open Fund Project of the Observation and Research Station of Land Ecology and Land Use in Chengdu Plain, Ministry of Natural Resources (Grant No. CDORS-2024-06); this research was funded by the Sichuan Provincial Science and Technology Department Regional Innovation Cooperation Project: Research on Key Technological Equipment for AI-Based Rice Pest and Disease Identification and Control, grant number 2024YFHZ0177. This research was funded by the Sichuan Provincial Natural Science Foundation Project, grant numbers 2024NSFSC0337 and 2025ZNSFSC0162.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author. The code can be obtained at https://github.com/xl-alt/SEKD (accessed on 28 April 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| mIoU | mean intersection over union |
| FLOPs | floating point operations |
| Params | parameters |
| Sil | Silhouette Coefficient |
| DBI | Davies–Bouldin Index |

References

1. Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.-L.; He, Y. Intelligent agriculture: Deep learning in UAV-based remote sensing imagery for crop diseases and pests detection. Front. Plant Sci. 2024, 15, 1435016.

2. Burdett, H.; Wellen, C. Statistical and machine learning methods for crop yield prediction in the context of precision agriculture. Precis. Agric. 2022, 23, 1553–1574.

3. Palani, H.K.; Ilangovan, S.; Senthilvel, P.G.; Thirupurasundari, D.; Kumar, R. AI-Powered Predictive Analysis for Pest and Disease Forecasting in Crops. In Proceedings of the 2023 International Conference on Communication, Security and Artificial Intelligence (ICCSAI), Greater Noida, India, 23–25 November 2023; pp. 950–954.

4. Sharma, A.K.; Rajawat, A.S. Crop yield prediction using hybrid deep learning algorithm for smart agriculture. In Proceedings of the 2022 Second International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 23–25 February 2022; pp. 330–335.

5. Johnson, N.; Kumar, M.S.; Dhannia, T. A survey on deep learning architectures for effective crop data analytics. In Proceedings of the 2021 International Conference on Advances in Computing and Communications (ICACC), Kochi, India, 21–23 October 2021; pp. 1–10.

6. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

7. Anand, T.; Sinha, S.; Mandal, M.; Chamola, V.; Yu, F.R. AgriSegNet: Deep aerial semantic segmentation framework for IoT-assisted precision agriculture. IEEE Sens. J. 2021, 21, 17581–17590.

8. Istiak, M.A.; Syeed, M.M.; Hossain, M.S.; Uddin, M.F.; Hasan, M.; Khan, R.H.; Azad, N.S. Adoption of Unmanned Aerial Vehicle (UAV) imagery in agricultural management: A systematic literature review. Ecol. Inform. 2023, 78, 102305.

9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Part III; pp. 234–241.

10. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

11. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

12. Sun, W.; Zhou, R.; Nie, C.; Wang, L.; Sun, J. Farmland segmentation from remote sensing images using deep learning methods. In Proceedings of the Remote Sensing for Agriculture, Ecosystems, and Hydrology XXII, Online, 21–25 September 2020; pp. 51–57.

13. Xu, B.; Fan, J.; Chao, J.; Arsenijevic, N.; Werle, R.; Zhang, Z. Instance segmentation method for weed detection using UAV imagery in soybean fields. Comput. Electron. Agric. 2023, 211, 107994.

14. Zhang, J.; Gong, J.; Zhang, Y.; Mostafa, K.; Yuan, G. Weed identification in maize fields based on improved Swin-Unet. Agronomy 2023, 13, 1846.

15. Wang, J.; Gou, C.; Wu, Q.; Feng, H.; Han, J.; Ding, E.; Wang, J. RTFormer: Efficient design for real-time semantic segmentation with transformer. Adv. Neural Inf. Process. Syst. 2022, 35, 7423–7436.

16. Yu, J.; Zhang, J.; Shu, A.; Chen, Y.; Chen, J.; Yang, Y.; Tang, W.; Zhang, Y. Study of convolutional neural network-based semantic segmentation methods on edge intelligence devices for field agricultural robot navigation line extraction. Comput. Electron. Agric. 2023, 209, 107811.

17. Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 108740.

18. Zhang, P.; Sun, X.; Zhang, D.; Yang, Y.; Wang, Z. Lightweight deep learning models for high-precision rice seedling segmentation from UAV-based multispectral images. Plant Phenomics 2023, 5, 0123.

19. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

20. Wu, Z.; Liu, Z.; Lin, J.; Lin, Y.; Han, S. Lite transformer with long-short range attention. arXiv 2020, arXiv:2004.11886.

21. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

22. Mehta, S.; Rastegari, M. MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

23. Tian, Y.; Chen, F.; Wang, H.; Zhang, S. Real-time semantic segmentation network based on lite reduced atrous spatial pyramid pooling module group. In Proceedings of the 2020 5th International Conference on Control, Robotics and Cybernetics (CRC), Wuhan, China, 16–18 October 2020; pp. 139–143.

24. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

25. Ji, D.; Wang, H.; Tao, M.; Huang, J.; Hua, X.-S.; Lu, H. Structural and statistical texture knowledge distillation for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16876–16885.

26. Liu, R.; Yang, K.; Roitberg, A.; Zhang, J.; Peng, K.; Liu, H.; Wang, Y.; Stiefelhagen, R. TransKD: Transformer knowledge distillation for efficient semantic segmentation. IEEE Trans. Intell. Transp. Syst. 2024; arXiv:2202.13393.

27. Liu, Y.; Chen, K.; Liu, C.; Qin, Z.; Luo, Z.; Wang, J. Structured knowledge distillation for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2604–2613.

28. Shu, C.; Liu, Y.; Gao, J.; Yan, Z.; Shen, C. Channel-wise knowledge distillation for dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 5311–5320.

29. Lee, S.; Song, B.C. Graph-based knowledge distillation by multi-head attention network. arXiv 2019, arXiv:1907.02226.

30. Amirkhani, A.; Khosravian, A.; Masih-Tehrani, M.; Kashiani, H. Robust semantic segmentation with multi-teacher knowledge distillation. IEEE Access 2021, 9, 119049–119066.

31. Milioto, A.; Lottes, P.; Stachniss, C. Real-time semantic segmentation of crop and weed for precision agriculture robots leveraging background knowledge in CNNs. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2229–2235.

32. Tian, Z.; Chen, P.; Lai, X.; Jiang, L.; Liu, S.; Zhao, H.; Yu, B.; Yang, M.-C.; Jia, J. Adaptive perspective distillation for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1372–1387.

33. Chen, L.; Yao, H.; Fu, J.; Ng, C.T. The classification and localization of crack using lightweight convolutional neural network with CBAM. Eng. Struct. 2023, 275, 115291.

34. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

35. Li, F.; Li, G.; He, X.; Cheng, J. Dynamic dual gating neural networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 5330–5339.

36. Böttcher, A.; Wenzel, D. The Frobenius norm and the commutator. Linear Algebra Its Appl. 2008, 429, 1864–1885.

37. Pillai, S.U.; Suel, T.; Cha, S. The Perron-Frobenius theorem: Some of its applications. IEEE Signal Process. Mag. 2005, 22, 62–75.

38. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

39. Zhao, J.-X.; Liu, J.-J.; Fan, D.-P.; Cao, Y.; Yang, J.; Cheng, M.-M. EGNet: Edge guidance network for salient object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8779–8788.

40. Lin, F.; Liang, Z.; Wu, S.; He, J.; Chen, K.; Tian, S. StructToken: Rethinking semantic segmentation with structural prior. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 5655–5663.

41. Zhu, L.; Ji, D.; Zhu, S.; Gan, W.; Wu, W.; Yan, J. Learning statistical texture for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12537–12546.

42. Hu, X.; Bao, M.; Zhang, X.-P.; Wen, S.; Li, X.; Hu, Y.-H. Quantized Kalman filter tracking in directional sensor networks. IEEE Trans. Mob. Comput. 2017, 17, 871–883.

43. Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Learning Gabor texture features for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 1621–1631.

44. Barth, R.; IJsselmuiden, J.; Hemming, J.; Van Henten, E.J. Data synthesis methods for semantic segmentation in agriculture: A Capsicum annuum dataset. Comput. Electron. Agric. 2018, 144, 284–296.

45. Chebrolu, N.; Lottes, P.; Schaefer, A.; Winterhalter, W.; Burgard, W.; Stachniss, C. Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields. Int. J. Robot. Res. 2017, 36, 1045–1052.

46. Yang, J.; Shi, Y.; Qi, Z. DFR: Deep Feature Reconstruction for Unsupervised Anomaly Segmentation. arXiv 2020, arXiv:2012.07122.

47. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

48. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

49. Park, W.; Kim, D.; Lu, Y.; Cho, M. Relational knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3967–3976.

50. Ahn, S.; Hu, S.X.; Damianou, A.; Lawrence, N.D.; Dai, Z. Variational information distillation for knowledge transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9163–9171.

51. Chen, P.; Liu, S.; Zhao, H.; Jia, J. Distilling knowledge via knowledge review. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5008–5017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).