Driving by a Publicly Available RGB Image Dataset for Rice Planthopper Detection and Counting by Fusing Swin Transformer and YOLOv8-p2 Architectures in Field Landscapes

Abstract

1. Introduction

2. Materials and Methods

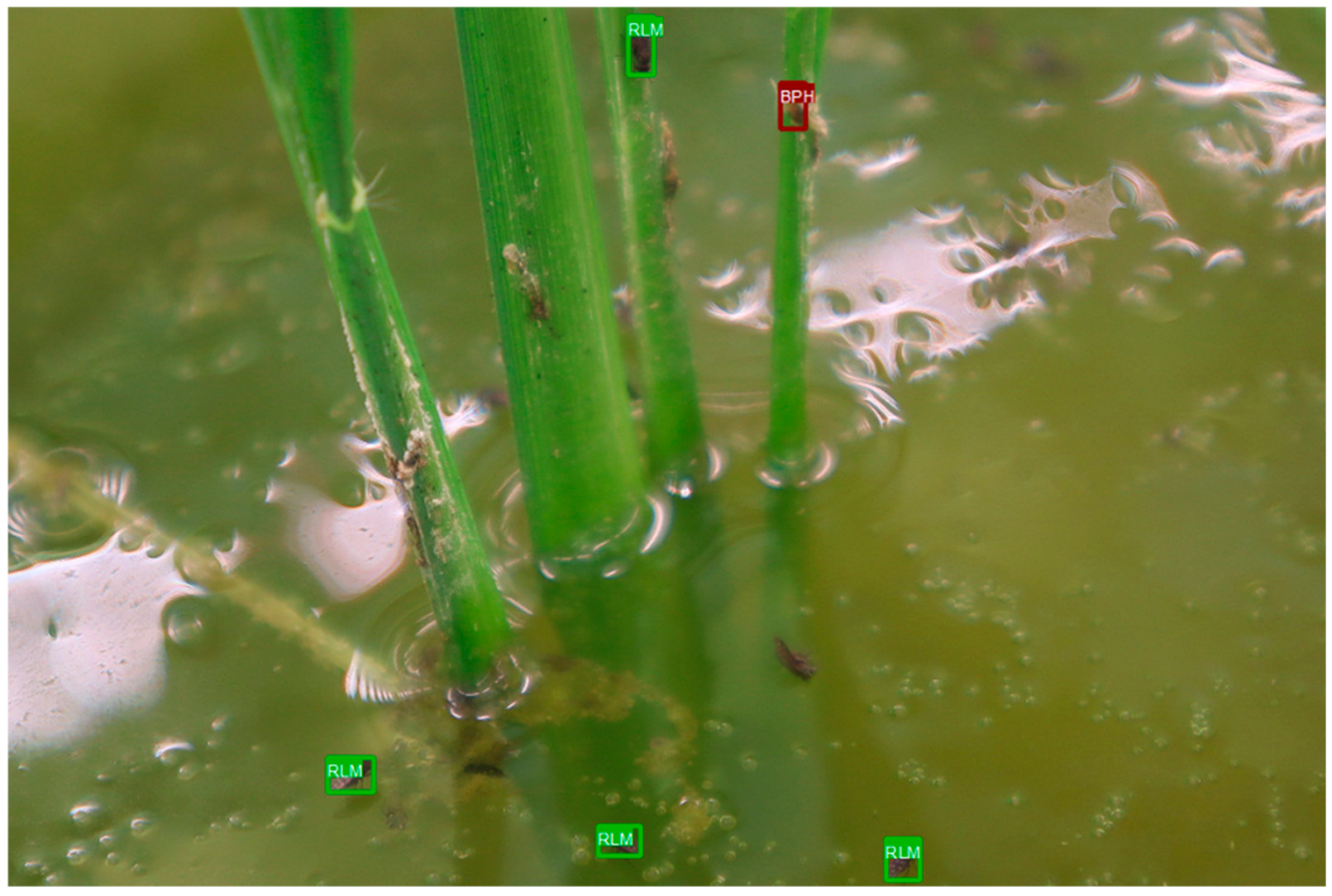

2.1. RGB Digital Imagery Collection from the Field Landscapes

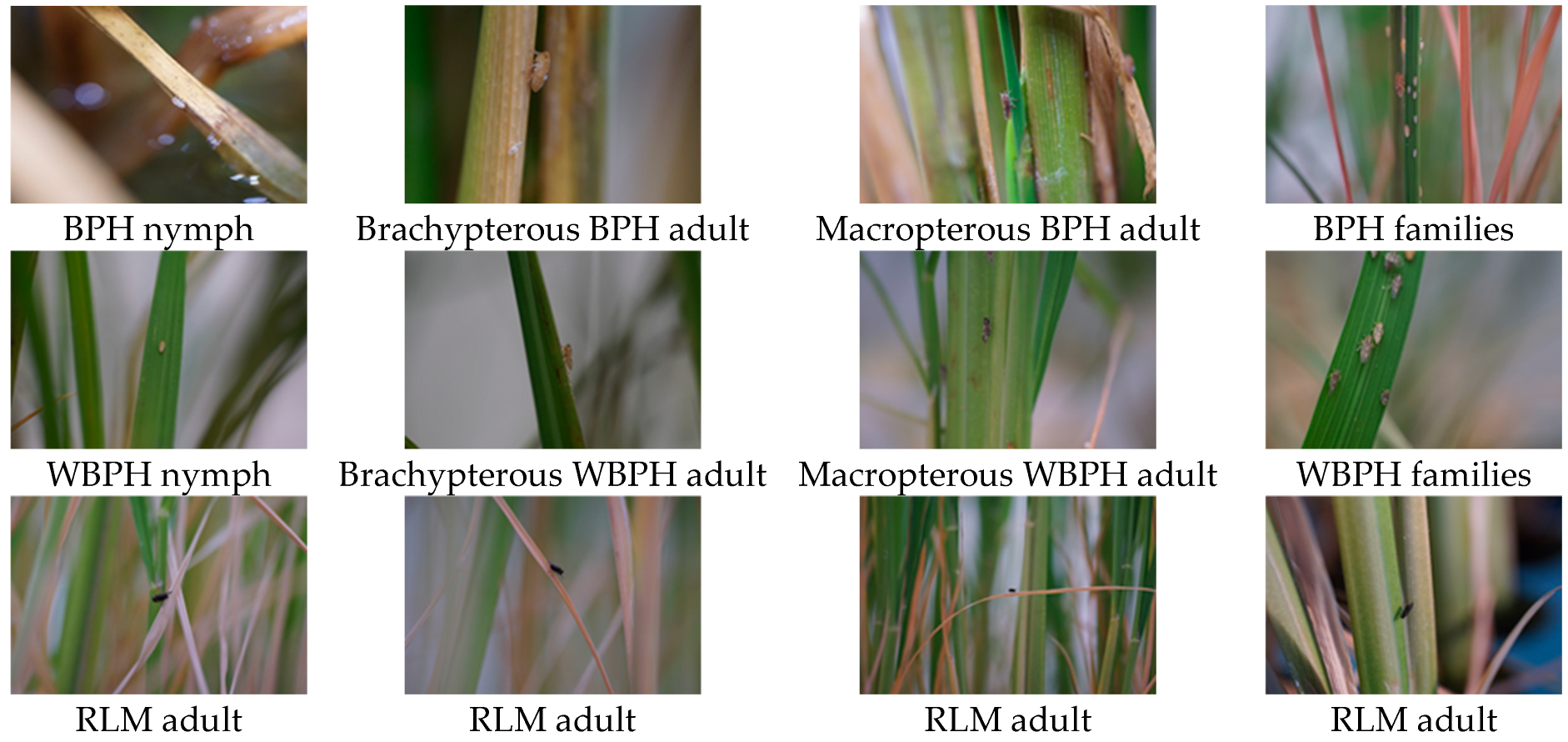

2.2. Planthopper Object Annotation and Counting Based on X-AnyLabeling

2.3. Constructing the High-Quality Publicly Available Rice Planthopper Image Dataset

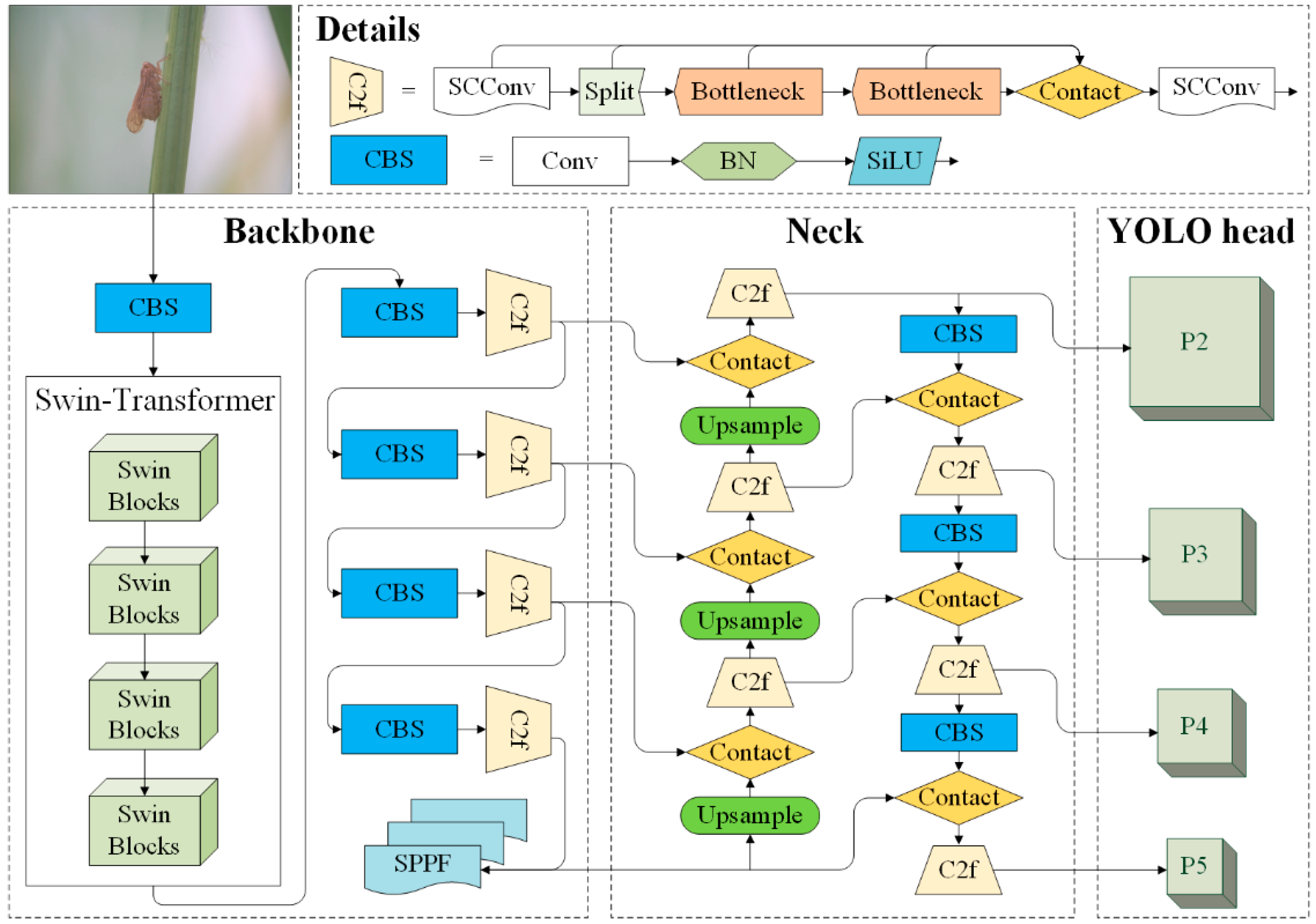

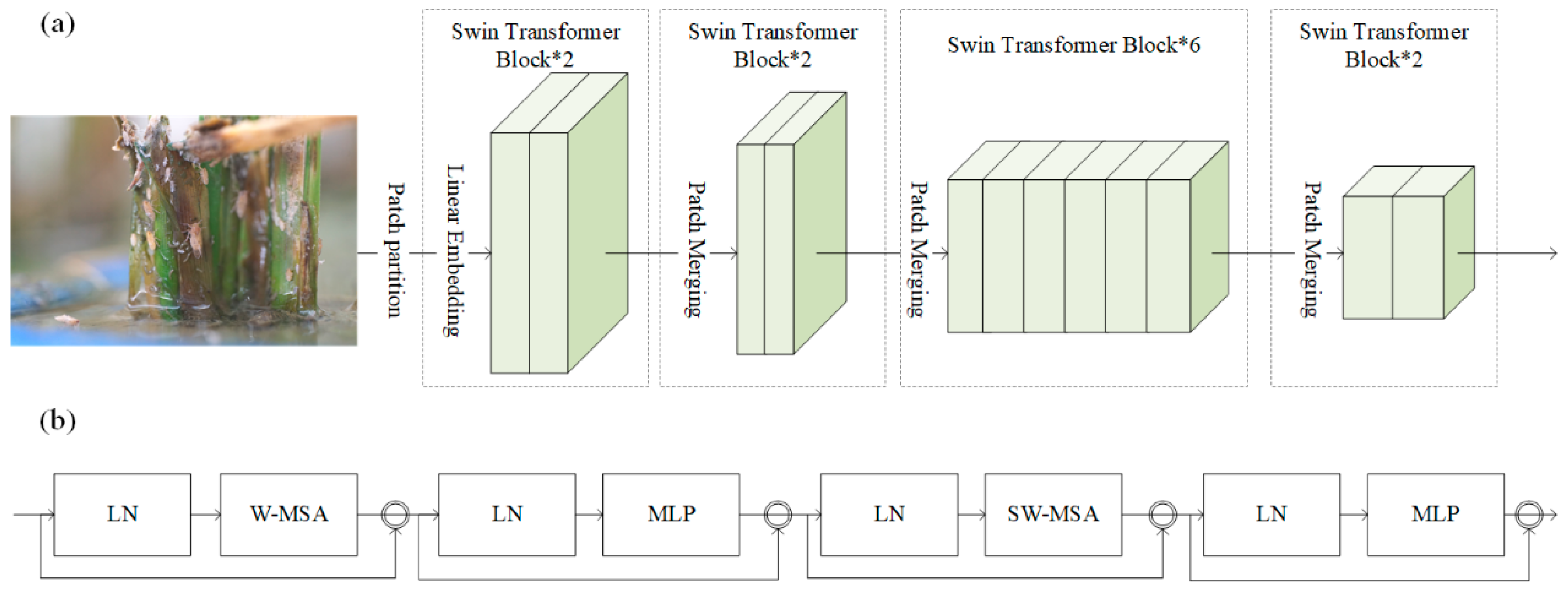

2.4. Fusing Swin Transformer-Based Module with YOLOv8 Backbone for Extracting Features

2.5. Optimizing Computation Efficiency of the SwinT YOLOv8-p2 Architecture

2.6. The Evaluation Metrics and Benchmark Methods Involved in Detection and Counting Tasks

3. Results

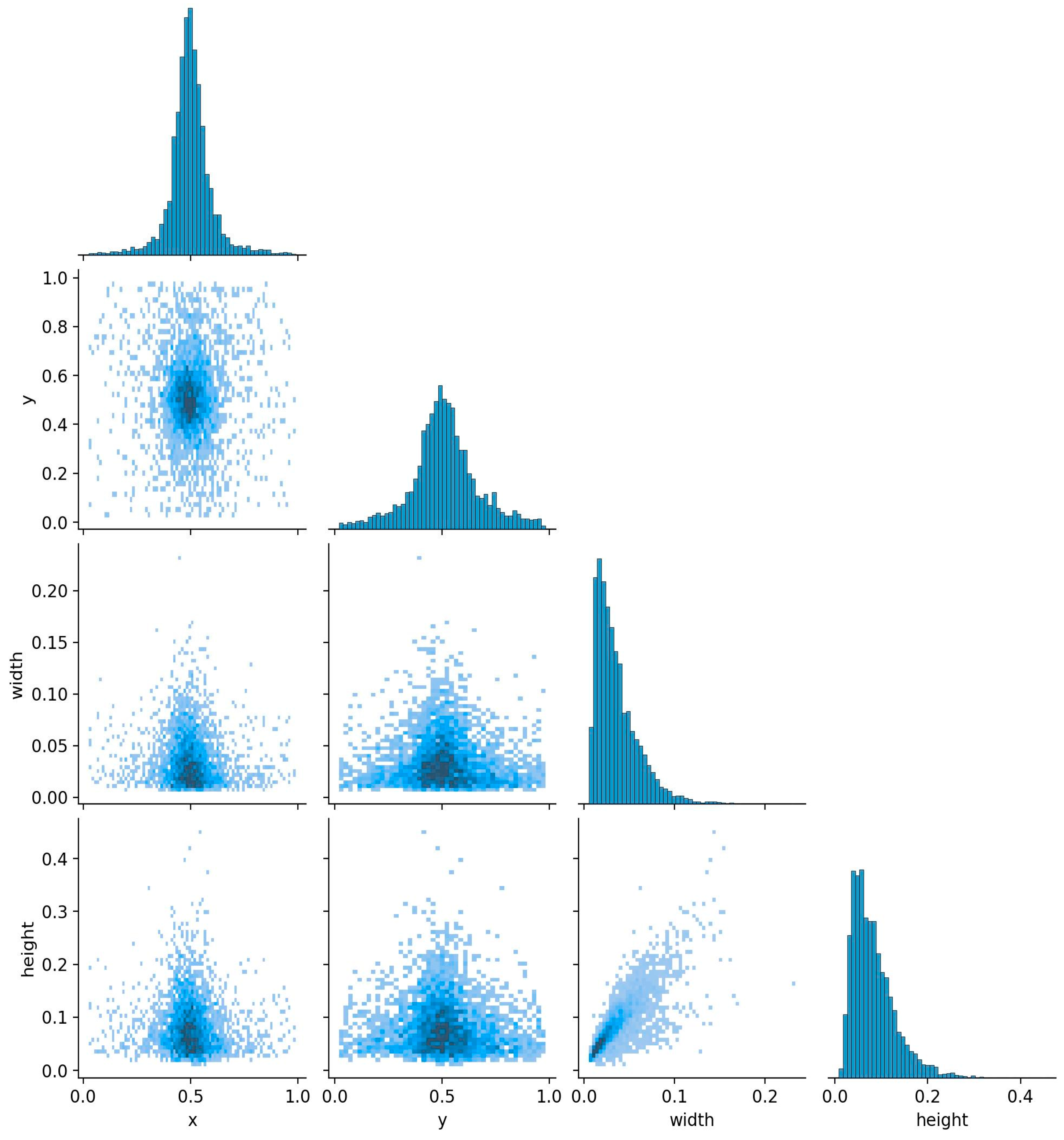

3.1. The Size and Spatial Distribution of the Target Box in the Rice Planthopper Dataset

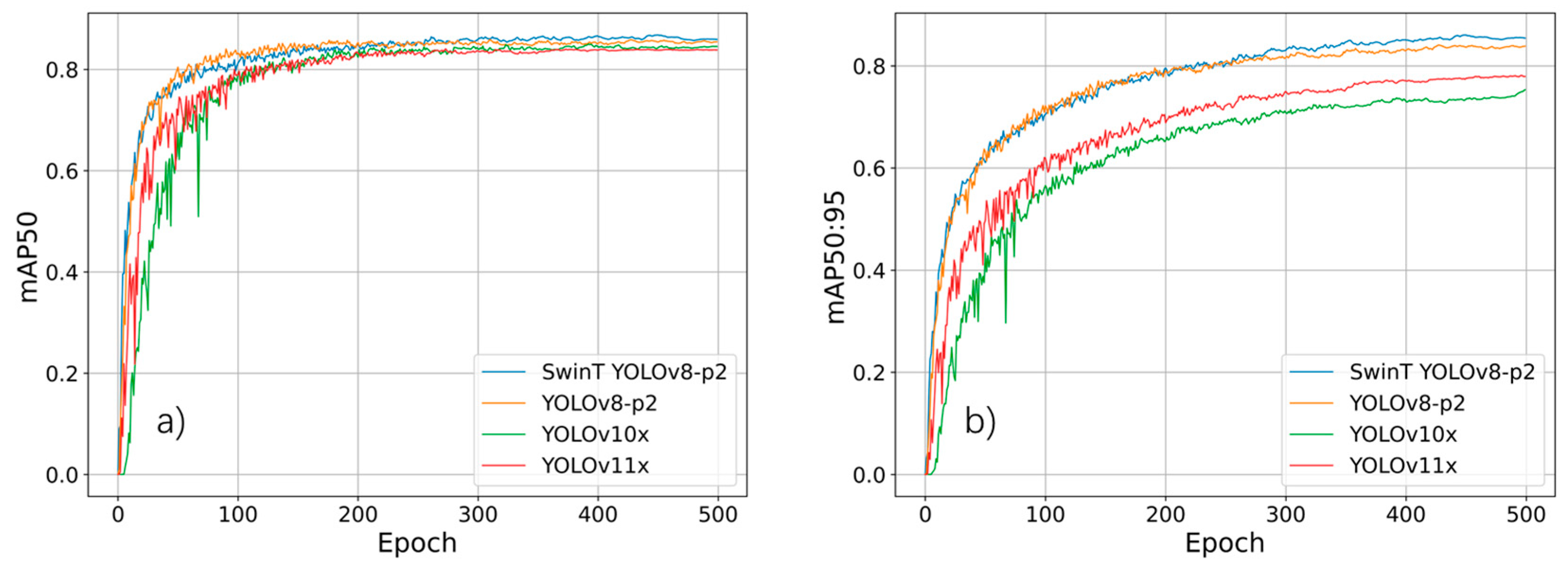

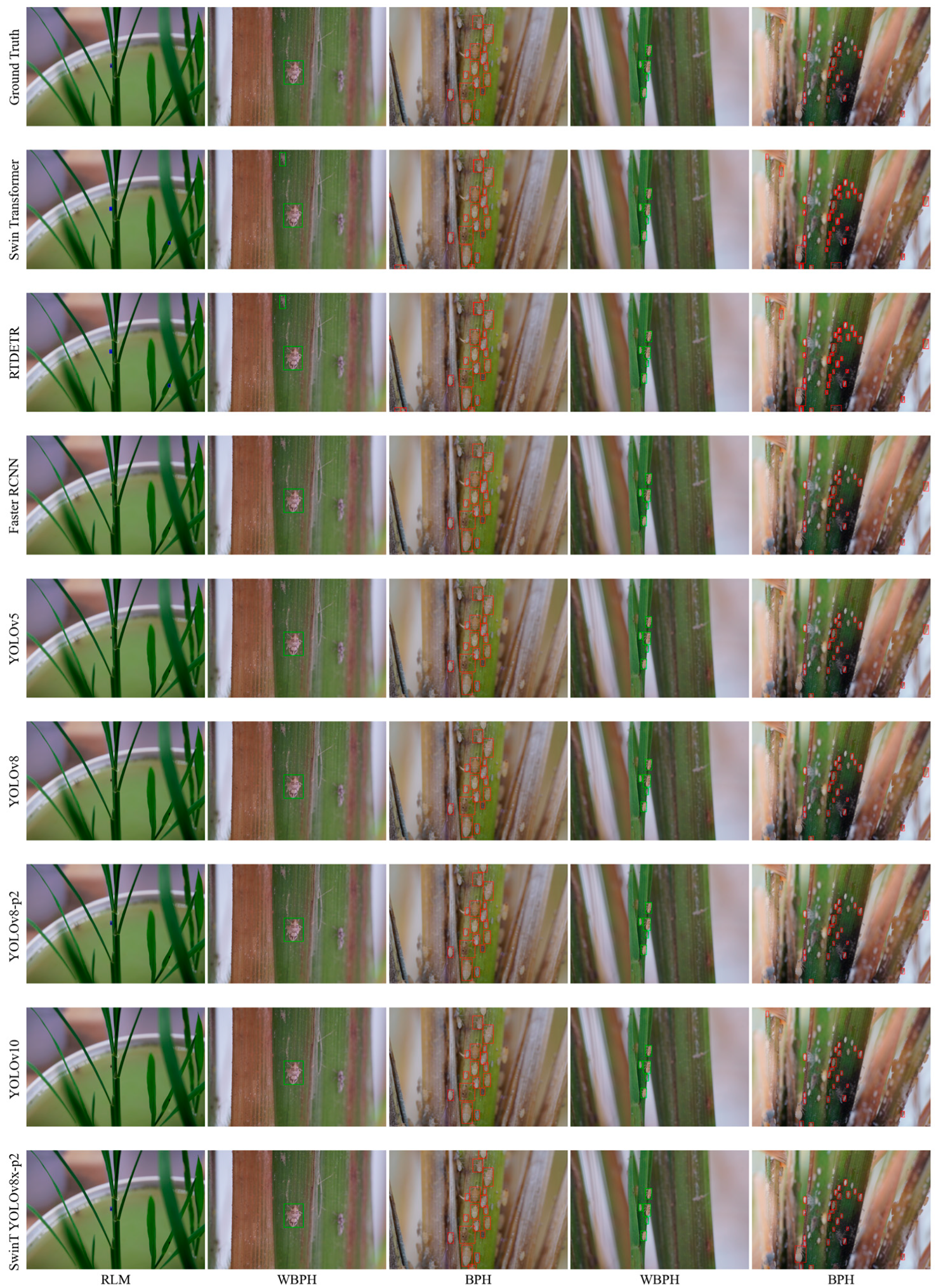

3.2. Performances of Different Deep Learning Methods for Detecting Planthoppers

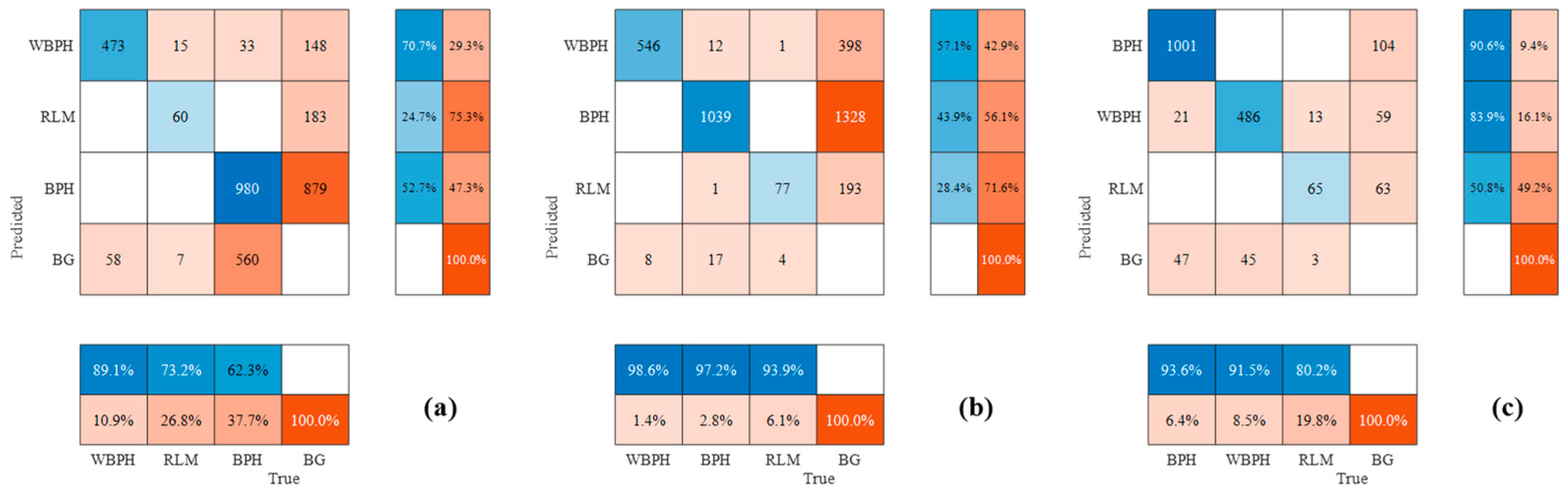

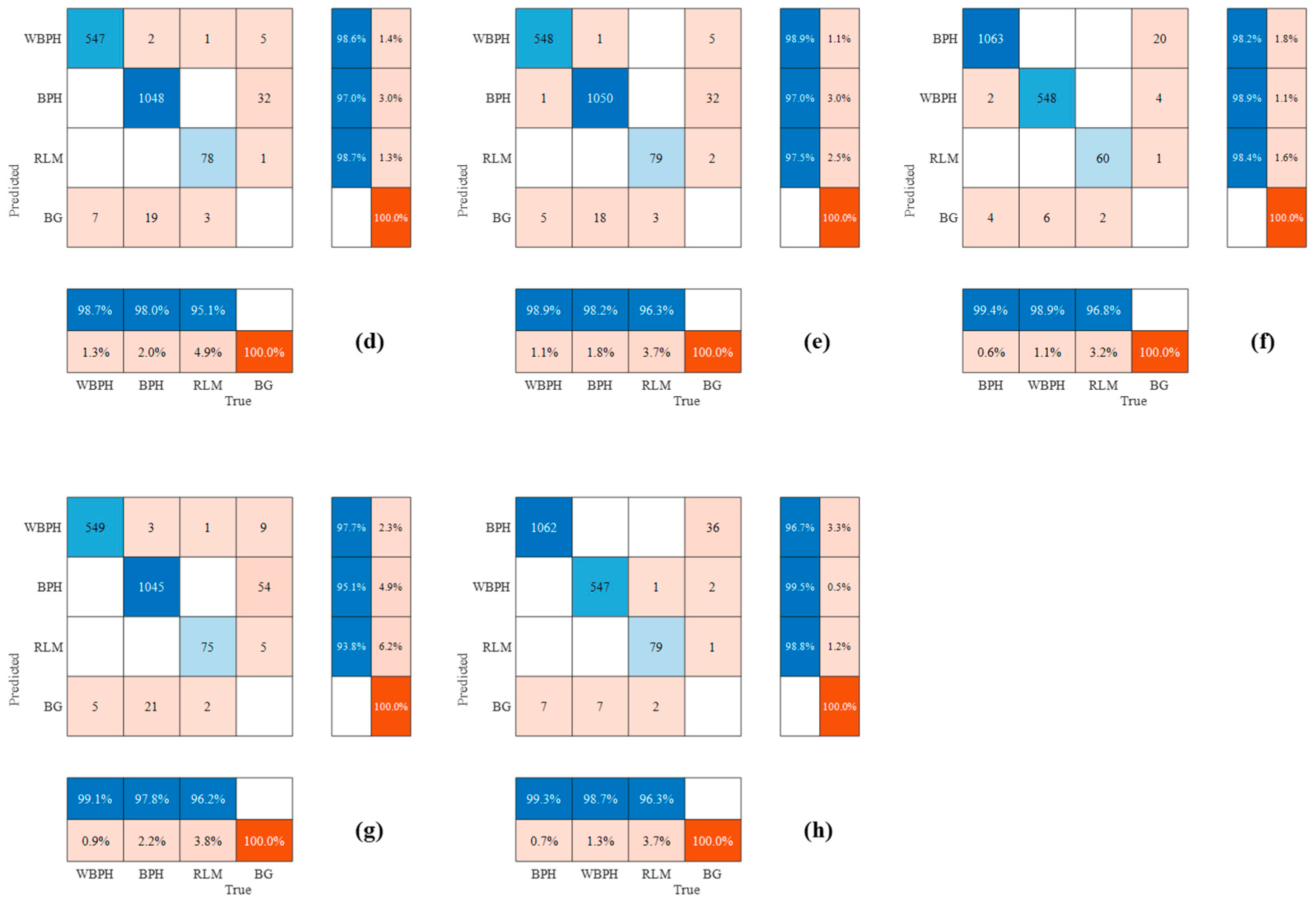

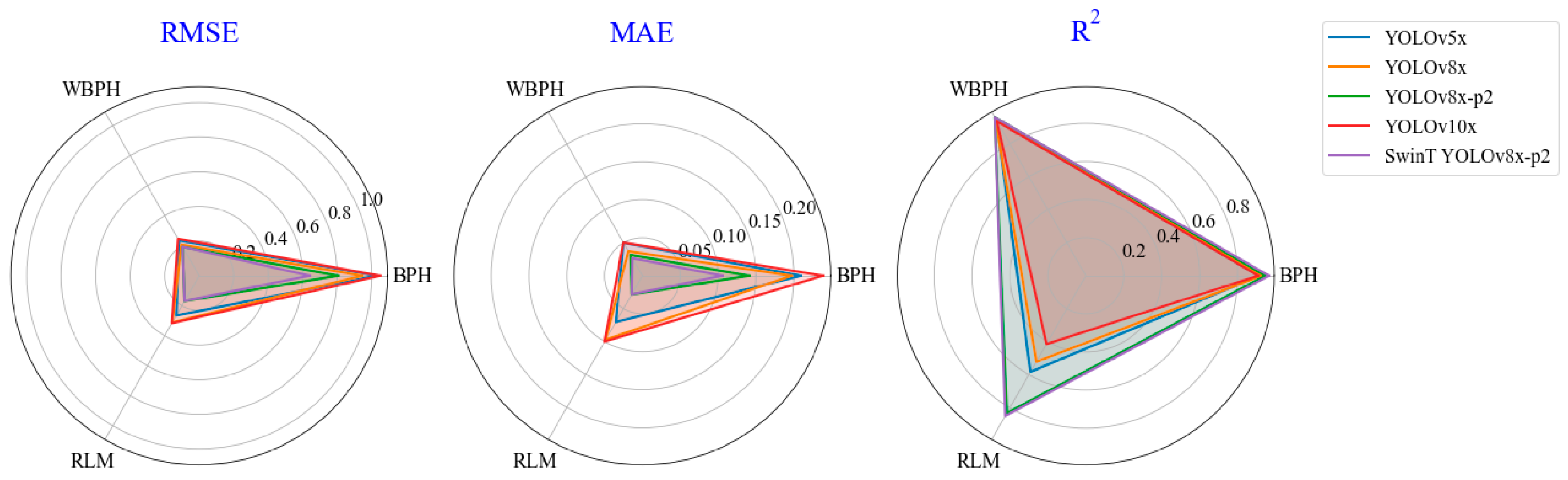

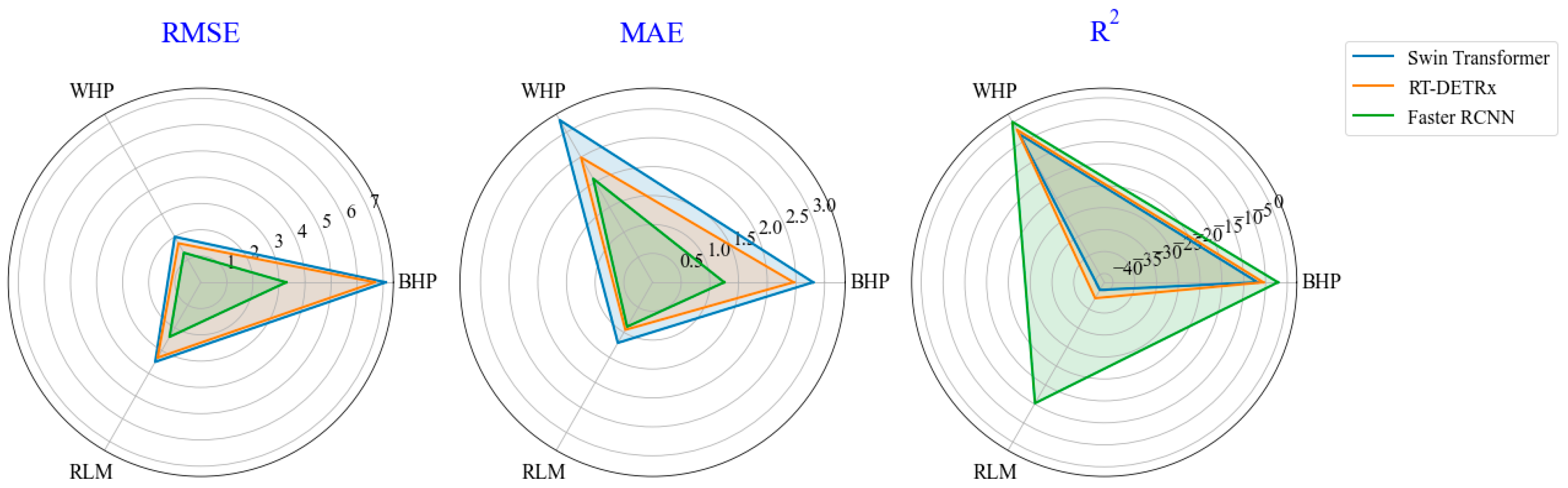

3.3. Differences in Planthopper Counting Based on Involved Methods

4. Discussion

5. Conclusions

- (1) A high-definition, publicly accessible planthopper image dataset has been created; small targets against complex backgrounds dominate this dataset.

- (2) YOLOv8-p2 detects the pests robustly, reaching mAP50, mAP50:95, F1-score, Recall, Precision and FPS values of 0.847, 0.835, 0.899, 0.985, 0.826 and 16.69, respectively.

- (3) By integrating the Swin Transformer module into the YOLOv8-p2 architecture, SwinT YOLOv8-p2 improves markedly on the YOLOv8-p2 and YOLOv10 methods, with increases in mAP50 and mAP50:95 ranging from 1.9% to 61.8%.

- (4) The manually counted and detected pest numbers were strongly correlated across the YOLO methods, especially for the SwinT YOLOv8x-p2 method, with R² above 0.85 and RMSE and MAE below 0.64 and 0.11, respectively, across the different pests.
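
The counting metrics named in conclusion (4) can be illustrated with a minimal sketch. It assumes that R² is the coefficient of determination of detected counts against manual counts (it may instead be the squared correlation of a fitted line) and that RMSE and MAE are computed per image. The function name `counting_metrics` and the example counts are hypothetical and do not come from the dataset.

```python
import math


def counting_metrics(manual, detected):
    """Return (R2, RMSE, MAE) for paired per-image pest counts.

    Assumption: R2 is 1 - SS_res / SS_tot with the manual counts as reference.
    """
    n = len(manual)
    if n == 0 or n != len(detected):
        raise ValueError("manual and detected must be non-empty and equally long")

    errors = [d - m for m, d in zip(manual, detected)]
    ss_res = sum(e * e for e in errors)            # residual sum of squares
    mae = sum(abs(e) for e in errors) / n          # mean absolute error
    rmse = math.sqrt(ss_res / n)                   # root mean squared error

    mean_manual = sum(manual) / n
    ss_tot = sum((m - mean_manual) ** 2 for m in manual)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return r2, rmse, mae


# Hypothetical counts for five images (not taken from the paper).
manual_counts = [2, 0, 3, 1, 4]
detected_counts = [2, 0, 3, 2, 4]
r2, rmse, mae = counting_metrics(manual_counts, detected_counts)
print(f"R2={r2:.3f}  RMSE={rmse:.3f}  MAE={mae:.3f}")
```

A single miscounted image out of five already moves all three metrics, which is why low RMSE and MAE alongside a high R² indicate close agreement between manual and detected counts.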

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Seck, P.A.; Diagne, A.; Mohanty, S.; Wopereis, M.C. Crops that feed the world 7: Rice. Food Secur. 2012, 4, 7–24.

2. Chowdhury, P.R.; Medhi, H.; Bhattacharyya, K.G.; Hussain, C.M. Severe deterioration in food-energy-ecosystem nexus due to ongoing Russia-Ukraine war: A critical review. Sci. Total Environ. 2023, 902, 166131.

3. Heong, K.L.; Wong, L.; Delos Reyes, J.H. Addressing planthopper threats to Asian rice farming and food security: Fixing insecticide misuse. In Rice Planthoppers: Ecology, Management, Socio Economics and Policy; Asian Development Bank: Manila, Philippines, 2015; pp. 65–76.

4. Dale, D. Insect pests of the rice plant–their biology and ecology. Biol. Manag. Rice Insects 1994, 438, 363–487.

5. Sun, G.; Liu, S.; Luo, H.; Feng, Z.; Yang, B.; Luo, J.; Tang, J.; Yao, Q.; Xu, J. Intelligent monitoring system of migratory pests based on searchlight trap and machine vision. Front. Plant Sci. 2022, 13, 897739.

6. Zhou, X.; Zhang, H.; Pan, Y.; Li, X.; Jia, H.; Wu, K. Comigration of the predatory bug Cyrtorhinus lividipennis (Hemiptera: Miridae) with two species of rice planthopper across the South China Sea. Biol. Control 2023, 179, 105167.

7. Sheng, H.; Yao, Q.; Luo, J.; Liu, Y.; Chen, X.; Ye, Z.; Zhao, T.; Ling, H.; Tang, J.; Liu, S. Automatic detection and counting of planthoppers on white flat plate images captured by AR glasses for planthopper field survey. Comput. Electron. Agric. 2024, 218, 108639.

8. Hanson, P.E. Insects and Other Arthropods of Tropical America; Cornell University Press: Ithaca, NY, USA, 2016.

9. Qing, Y.; Chen, G.-T.; Zheng, W.; Zhang, C.; Yang, B.-J.; Jian, T. Automated detection and identification of white-backed planthoppers in paddy fields using image processing. J. Integr. Agric. 2017, 16, 1547–1557.

10. He, Y.; Zhou, Z.; Tian, L.; Liu, Y.; Luo, X. Brown rice planthopper (Nilaparvata lugens Stal) detection based on deep learning. Precis. Agric. 2020, 21, 1385–1402.

11. Ibrahim, M.F.; Khairunniza-Bejo, S.; Hanafi, M.; Jahari, M.; Ahmad Saad, F.S.; Mhd Bookeri, M.A. Deep CNN-Based Planthopper Classification Using a High-Density Image Dataset. Agriculture 2023, 13, 1155.

12. Zhang, Z.; Zhan, W.; Sun, K.; Zhang, Y.; Guo, Y.; He, Z.; Hua, D.; Sun, Y.; Zhang, X.; Tong, S.; et al. RPH-Counter: Field detection and counting of rice planthoppers using a fully convolutional network with object-level supervision. Comput. Electron. Agric. 2024, 225, 109242.

13. Khairunniza-Bejo, S.; Ibrahim, M.F.; Hanafi, M.; Jahari, M.; Ahmad Saad, F.S.; Mhd Bookeri, M.A. Automatic Paddy Planthopper Detection and Counting Using Faster R-CNN. Agriculture 2024, 14, 1567.

14. Guo, Q.; Wang, C.; Xiao, D.; Huang, Q. An Enhanced Insect Pest Counter Based on Saliency Map and Improved Non-Maximum Suppression. Insects 2021, 12, 705.

15. Cong, S.; Zhou, Y. A review of convolutional neural network architectures and their optimizations. Artif. Intell. Rev. 2023, 56, 1905–1969.

16. Wang, H.; Li, Y.; Dang, L.M.; Moon, H. An efficient attention module for instance segmentation network in pest monitoring. Comput. Electron. Agric. 2022, 195, 106853.

17. Islam, S.; Elmekki, H.; Elsebai, A.; Bentahar, J.; Drawel, N.; Rjoub, G.; Pedrycz, W. A comprehensive survey on applications of transformers for deep learning tasks. Expert Syst. Appl. 2024, 241, 122666.

18. Yang, J.; Li, C.; Zhang, P.; Dai, X.; Xiao, B.; Yuan, L.; Gao, J. Focal self-attention for local-global interactions in vision transformers. arXiv 2021, arXiv:2107.00641.

19. He, J.; Zhang, S.; Yang, C.; Wang, H.; Gao, J.; Huang, W.; Wang, Q.; Wang, X.; Yuan, W.; Wu, Y. Pest recognition in microstates state: An improvement of YOLOv7 based on Spatial and Channel Reconstruction Convolution for feature redundancy and vision transformer with Bi-Level Routing Attention. Front. Plant Sci. 2024, 15, 1327237.

20. Tabani, H.; Balasubramaniam, A.; Marzban, S.; Arani, E.; Zonooz, B. Improving the efficiency of transformers for resource-constrained devices. In Proceedings of the 2021 24th Euromicro Conference on Digital System Design (DSD), Palermo, Italy, 1–3 September 2021; pp. 449–456.

21. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

22. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

23. Qi, F.; Chen, G.; Liu, J.; Tang, Z. End-to-end pest detection on an improved deformable DETR with multihead criss cross attention. Ecol. Inform. 2022, 72, 101902.

24. Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 108740.

25. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

26. Li, C.; Luo, C.; Zhou, Z.; Wang, R.; Ling, F.; Xiao, L.; Lin, Y.; Chen, H. Gene expression and plant hormone levels in two contrasting rice genotypes responding to brown planthopper infestation. BMC Plant Biol. 2017, 17, 57.

27. Wang, W. Advanced Auto Labeling Solution with Added Features. Available online: https://github.com/CVHub520/X-AnyLabeling (accessed on 3 November 2023).

28. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026.

29. Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A review on yolov8 and its advancements. In Proceedings of the International Conference on Data Intelligence and Cognitive Informatics, Tirunelveli, India, 18–20 November 2024; pp. 529–545.

30. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. Yolov10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

31. Yang, Y.; Jiao, L.; Liu, X.; Liu, F.; Yang, S.; Feng, Z.; Tang, X. Transformers meet visual learning understanding: A comprehensive review. arXiv 2022, arXiv:2203.12944.

32. Chen, J.; He, T.; Zhuo, W.; Ma, L.; Ha, S.; Chan, S.-H.G. Tvconv: Efficient translation variant convolution for layout-aware visual processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12548–12558.

33. Li, J.; Wen, Y.; He, L. Scconv: Spatial and channel reconstruction convolution for feature redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.

34. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

36. Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Poznanski, J.; Yu, L.; Rai, P.; Ferriday, R. ultralytics/yolov5: v3.0. Zenodo 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 20 December 2020).

37. Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

38. Kurmi, Y.; Gangwar, S. A leaf image localization based algorithm for different crops disease classification. Inf. Process. Agric. 2022, 9, 456–474.

39. Rana, S.; Crimaldi, M.; Barretta, D.; Carillo, P.; Cirillo, V.; Maggio, A.; Sarghini, F.; Gerbino, S. GobhiSet: Dataset of raw, manually, and automatically annotated RGB images across phenology of Brassica oleracea var. Botrytis. Data Brief 2024, 54, 110506.

40. Li, W.; Zheng, T.; Yang, Z.; Li, M.; Sun, C.; Yang, X. Classification and detection of insects from field images using deep learning for smart pest management: A systematic review. Ecol. Inform. 2021, 66, 101460.

41. Wu, X.; Zhan, C.; Lai, Y.-K.; Cheng, M.-M.; Yang, J. Ip102: A large-scale benchmark dataset for insect pest recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8787–8796.

42. Liu, J.; Wang, X. Plant diseases and pests detection based on deep learning: A review. Plant Methods 2021, 17, 22.

43. Farkaš, L. Object Tracking and Detection with YOLOv8 and StrongSORT Algorithms Captured by Drone; University of Split, Faculty of Science, Department of Informatics: Split, Croatia, 2023.

44. Touvron, H.; Cord, M.; El-Nouby, A.; Verbeek, J.; Jégou, H. Three things everyone should know about vision transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 497–515.

45. Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

46. Lakmal, D.; Kugathasan, K.; Nanayakkara, V.; Jayasena, S.; Perera, A.S.; Fernando, L. Brown planthopper damage detection using remote sensing and machine learning. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 97–104.

47. Mahapatra, A.; Roy, S.S.; Pratihar, D.K. Multi-legged robots—A review. In Multi-Body Dynamic Modeling of Multi-Legged Robots; Springer: Berlin/Heidelberg, Germany, 2020; pp. 11–32.

48. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

| Equipment/Software | Name | Company | Country |
|---|---|---|---|
| CPU | Core i5-13600K | Intel | USA |
| GPU | GeForce RTX 3080 Ti | NVIDIA | USA |
| Operating System | Windows 10 | Microsoft | USA |
| Deep learning framework | PyTorch 2.2.2 | Meta | USA |

| Index | Pest Names | Images | Instances |
|---|---|---|---|
| 1 | Brown planthopper | 3000 | 5335 |
| 2 | Whitebacked planthopper | 1800 | 2770 |
| 3 | Rice leaf miner | 200 | 410 |

| Model Features | Architectures | Models | References |
|---|---|---|---|
| Transformer-based | Swin Transformer-based | Cascade-Mask R-CNN-Swin-Based | [25] |
| Transformer-based | RT-DETR | RT-DETRx | [34] |
| CNN-based | Faster R-CNN | Faster R-CNN-ResNet50-FPN | [35] |
| CNN-based | YOLOv5 | YOLOv5x | [36] |
| CNN-based | YOLOv8 | YOLOv8x | [29] |
| CNN-based | YOLOv8-p2 | YOLOv8x-p2 | [29] |
| CNN-based | YOLOv10 | YOLOv10x | [30] |
| CNN-based | YOLOv11 | YOLOv11x | [37] |

| Model Features | Detection Models | mAP50 | mAP50:95 | F1-Score | Recall | Precision | FPS | FLOPs (G) | Parameters |
|---|---|---|---|---|---|---|---|---|---|
| Transformer-based | Cascade-Mask R-CNN-Swin-based | 0.705 | 0.526 | 0.661 | 0.778 | 0.574 | 20.19 | 738.6 | 258.9M |
| Transformer-based | RT-DETRx | 0.755 | 0.543 | 0.730 | 0.845 | 0.643 | 69.44 | 279.3 | 120M |
| CNN-based | Faster R-CNN-ResNet50-FPN | 0.812 | 0.591 | 0.775 | 0.876 | 0.695 | 8.33 | 245.6 | 45.2M |
| CNN-based | YOLOv5x | 0.844 | 0.763 | 0.887 | 0.969 | 0.817 | 84.75 | 236.0 | 86.7M |
| CNN-based | YOLOv8x | 0.840 | 0.759 | 0.889 | 0.971 | 0.820 | 86.96 | 257.4 | 68.2M |
| CNN-based | YOLOv8x-p2 | 0.847 | 0.835 | 0.899 | 0.985 | 0.826 | 16.69 | 316.1 | 67.1M |
| CNN-based | YOLOv10x | 0.845 | 0.752 | 0.879 | 0.962 | 0.809 | 97.09 | 171.3 | 61.2M |
| CNN-based | YOLOv11x | 0.842 | 0.781 | 0.891 | 0.976 | 0.821 | 90.65 | 194.4 | 56.9M |
| CNN-based | YOLOv8x-p2 (SCConv) | 0.851 | 0.840 | 0.901 | 0.985 | 0.828 | 25.85 | 261.2 | 60.1M |
| Hybrid | SwinT YOLOv8x-p2 (Non-SCConv) | 0.860 | 0.848 | 0.903 | 0.986 | 0.832 | 6.48 | 360.8 | 76.8M |
| Hybrid | SwinT YOLOv8x-p2 | 0.868 | 0.851 | 0.905 | 0.989 | 0.835 | 17.42 | 307.4 | 65.2M |
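
The F1-Score column of the detection comparison can be related to the Precision and Recall columns through the harmonic mean, F1 = 2PR / (P + R). The sketch below assumes that all three values were taken at the same confidence threshold, which the table does not state; the helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from three rows of the table above.
reported = {
    "YOLOv8x": (0.820, 0.971, 0.889),
    "YOLOv8x-p2": (0.826, 0.985, 0.899),
    "SwinT YOLOv8x-p2": (0.835, 0.989, 0.905),
}

for model, (p, r, f1_reported) in reported.items():
    f1 = f1_score(p, r)
    # Agreement within 0.001 is expected because the inputs are rounded.
    print(f"{model}: computed F1={f1:.4f}, reported F1={f1_reported:.3f}")
```

Under that assumption the recomputed values agree with the reported F1-scores to within rounding of the three-decimal inputs.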

| Number of Tests | SwinT YOLOv8-p2 mAP50 | SwinT YOLOv8-p2 mAP50:95 | YOLOv8-p2 mAP50 | YOLOv8-p2 mAP50:95 | YOLOv11 mAP50 | YOLOv11 mAP50:95 |
|---|---|---|---|---|---|---|
| 1 | 0.865 | 0.850 | 0.847 | 0.835 | 0.842 | 0.781 |
| 2 | 0.869 | 0.852 | 0.845 | 0.832 | 0.838 | 0.780 |
| 3 | 0.860 | 0.849 | 0.849 | 0.837 | 0.837 | 0.782 |
| 4 | 0.864 | 0.851 | 0.842 | 0.830 | 0.839 | 0.779 |
| 5 | 0.868 | 0.852 | 0.846 | 0.833 | 0.840 | 0.781 |
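
One way to read the five repeated tests is to average each model's mAP50:95 and express the difference as a relative gain over a baseline. The sketch below uses the mAP50:95 columns of the repeated-test table; treating "improvement" as (new − baseline) / baseline is an assumption, and the helper name `relative_gain` is illustrative.

```python
from statistics import mean

# mAP50:95 over the five repeated tests, copied from the table above.
map50_95_runs = {
    "SwinT YOLOv8-p2": [0.850, 0.852, 0.849, 0.851, 0.852],
    "YOLOv8-p2": [0.835, 0.832, 0.837, 0.830, 0.833],
    "YOLOv11": [0.781, 0.780, 0.782, 0.779, 0.781],
}


def relative_gain(new, baseline):
    """Relative improvement of `new` over `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


means = {model: mean(values) for model, values in map50_95_runs.items()}
for baseline in ("YOLOv8-p2", "YOLOv11"):
    gain = relative_gain(means["SwinT YOLOv8-p2"], means[baseline])
    print(f"SwinT YOLOv8-p2 vs {baseline}: {gain:+.2f}% mAP50:95")
```

Averaging before comparing keeps a single favourable or unfavourable run from dominating the reported gain.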

SwinT YOLOv8-p2 (Detection Task)

| Number of Tests | mAP50 | mAP50:95 |
|---|---|---|
| 1 | 0.865 | 0.835 |
| 2 | 0.869 | 0.832 |
| 3 | 0.860 | 0.837 |
| 4 | 0.864 | 0.830 |
| 5 | 0.868 | 0.833 |
| Mean | 0.865 | 0.833 |
| Standard Deviation | 0.00356 | 0.00270 |
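
The mean and standard deviation reported for the detection task can be recomputed from the five per-test values. The sketch below uses the sample standard deviation (n − 1 denominator), which appears to match the reported figures; the choice of estimator is inferred from the numbers rather than stated in the text, and the helper name `summarize` is illustrative.

```python
from statistics import mean, stdev

# Per-test values copied from the detection-task table above.
map50_runs = [0.865, 0.869, 0.860, 0.864, 0.868]
map50_95_runs = [0.835, 0.832, 0.837, 0.830, 0.833]


def summarize(values):
    """Return (mean, sample standard deviation) of repeated test results."""
    return mean(values), stdev(values)  # stdev uses the n - 1 denominator


for name, runs in (("mAP50", map50_runs), ("mAP50:95", map50_95_runs)):
    avg, sd = summarize(runs)
    print(f"{name}: mean={avg:.3f}, standard deviation={sd:.5f}")
```

With only five repetitions, the n − 1 estimator gives a noticeably larger spread than the population formula, so stating which one is used matters when comparing against other studies.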

SwinT YOLOv8-p2 (Counting Task)

| Number of Tests | R² | RMSE | MAE |
|---|---|---|---|
| 1 | 0.928 | 0.269 | 0.0525 |
| 2 | 0.931 | 0.264 | 0.0514 |
| 3 | 0.925 | 0.272 | 0.0548 |
| 4 | 0.927 | 0.270 | 0.0530 |
| 5 | 0.931 | 0.265 | 0.0516 |
| Mean | 0.928 | 0.268 | 0.0526 |
| Standard Deviation | 0.00260 | 0.00339 | 0.00136 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ji, X.; Li, J.; Cai, X.; Ye, X.; Gouda, M.; He, Y.; Ye, G.; Li, X. Driving by a Publicly Available RGB Image Dataset for Rice Planthopper Detection and Counting by Fusing Swin Transformer and YOLOv8-p2 Architectures in Field Landscapes. Agriculture 2025, 15, 1366. https://doi.org/10.3390/agriculture15131366
