DAENet: A Deep Attention-Enhanced Network for Cropland Extraction in Complex Terrain from High-Resolution Satellite Imagery

Abstract

1. Introduction

2. Materials and Methods

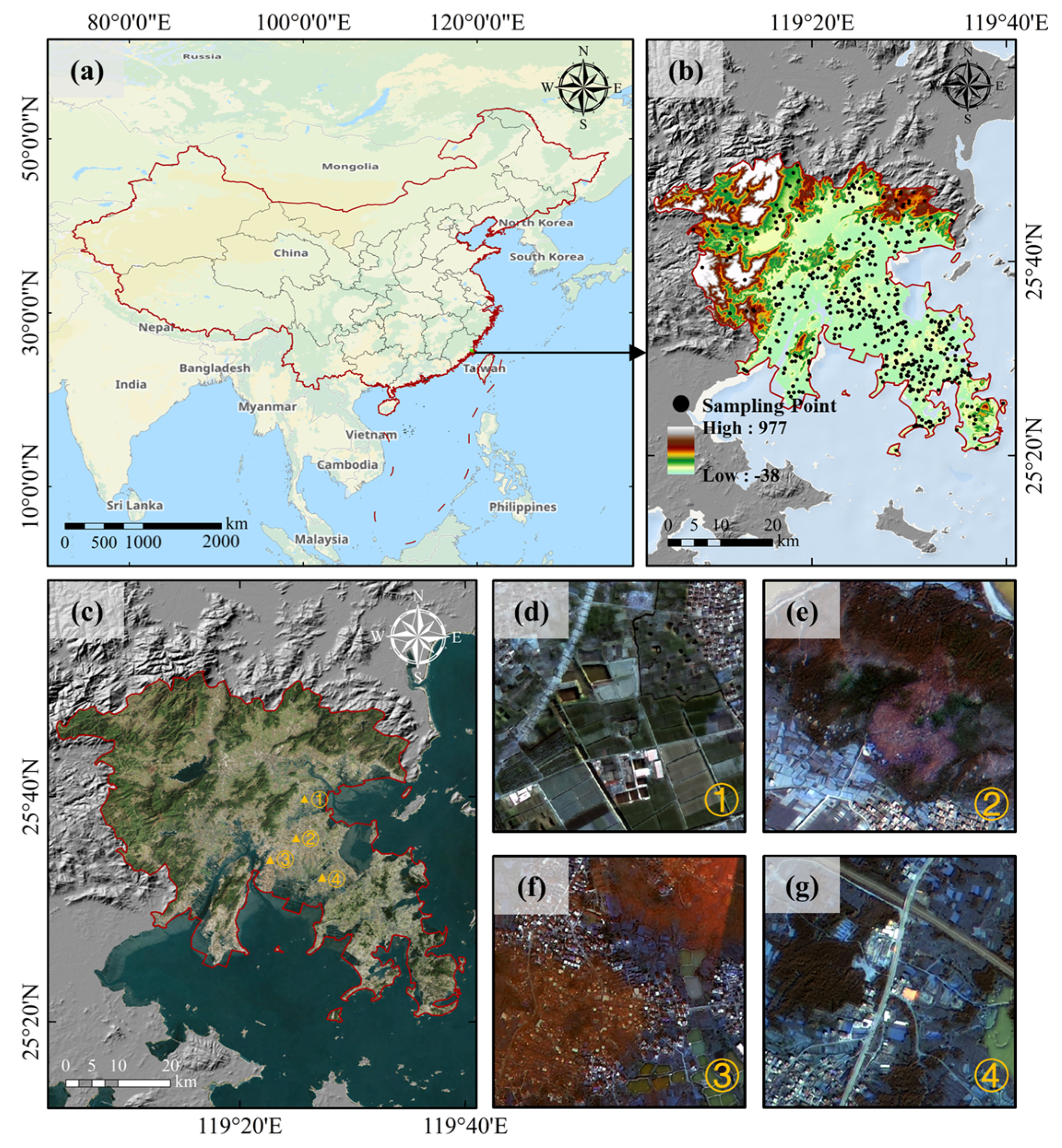

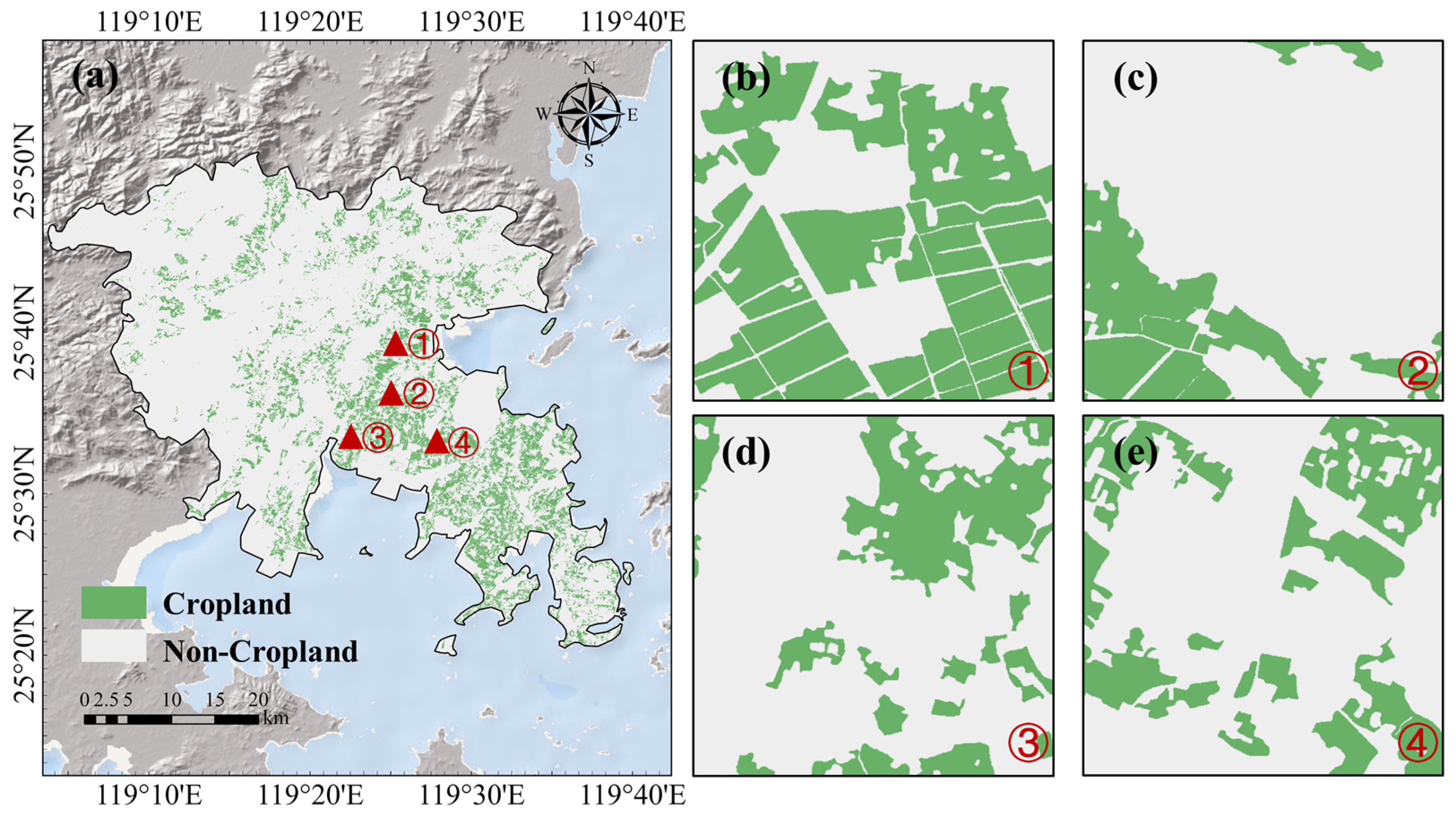

2.1. Study Area

2.2. Data

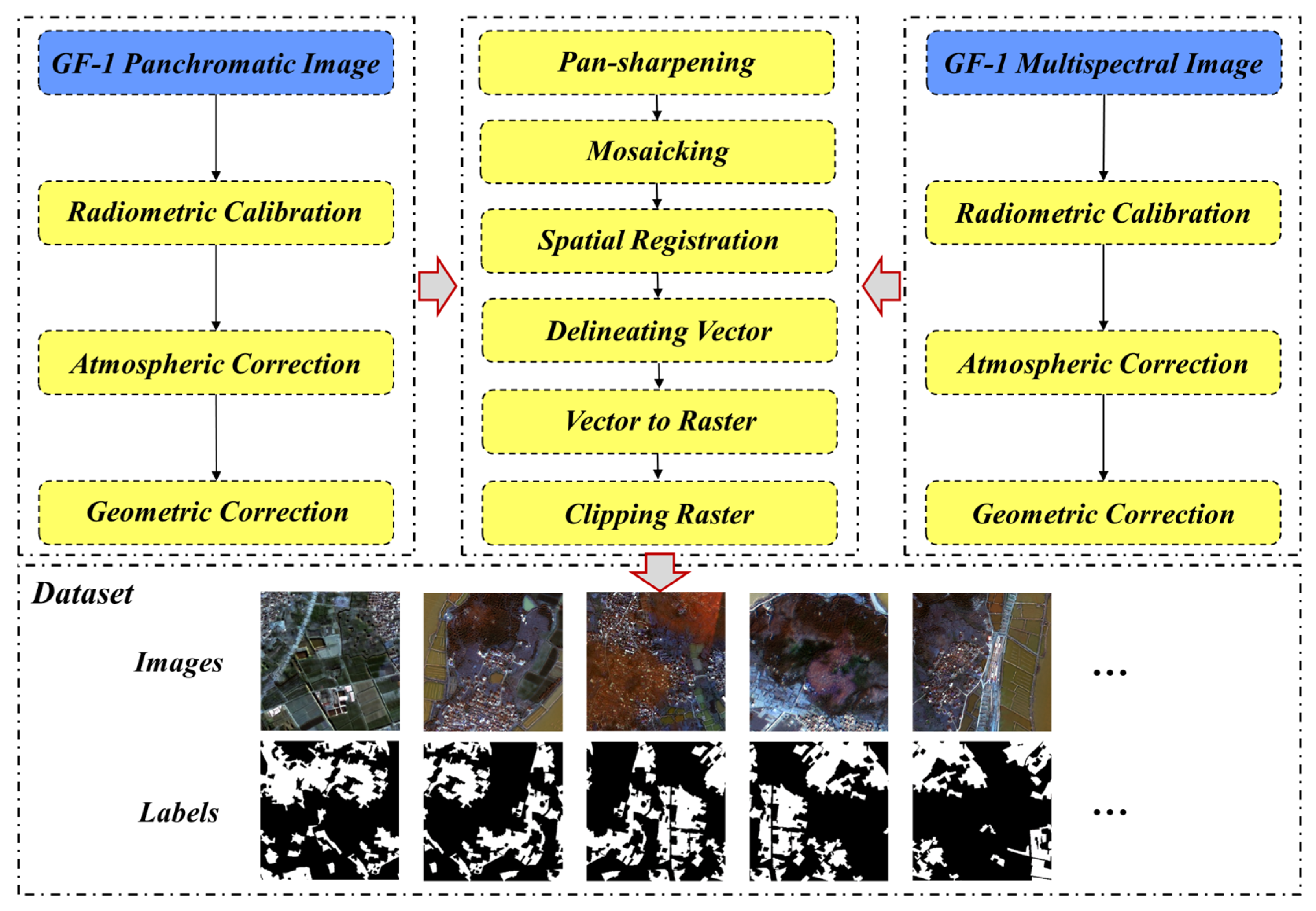

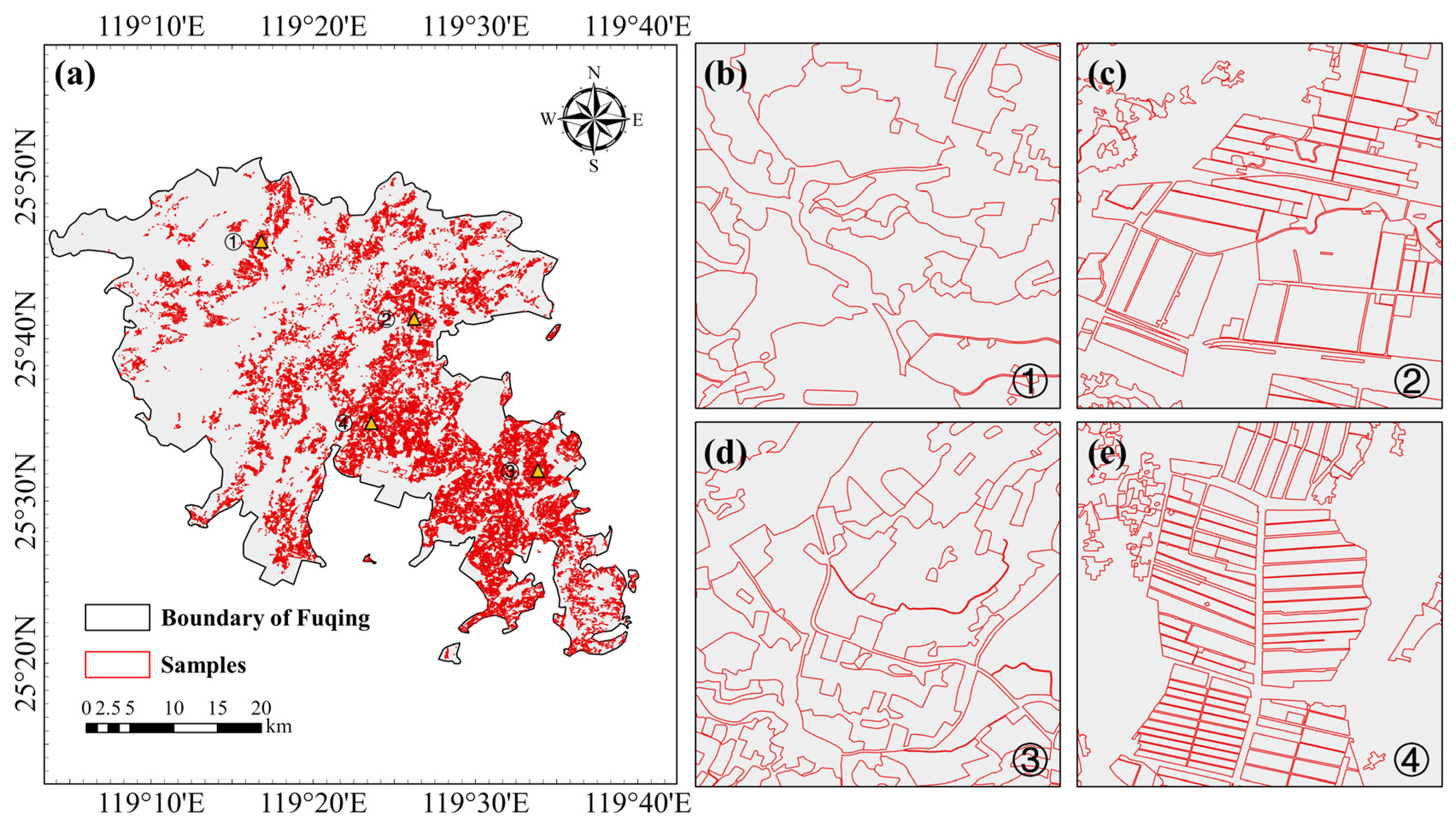

2.2.1. Remote Sensing Image Pre-Processing and Dataset Construction

2.2.2. Ground Verification Data

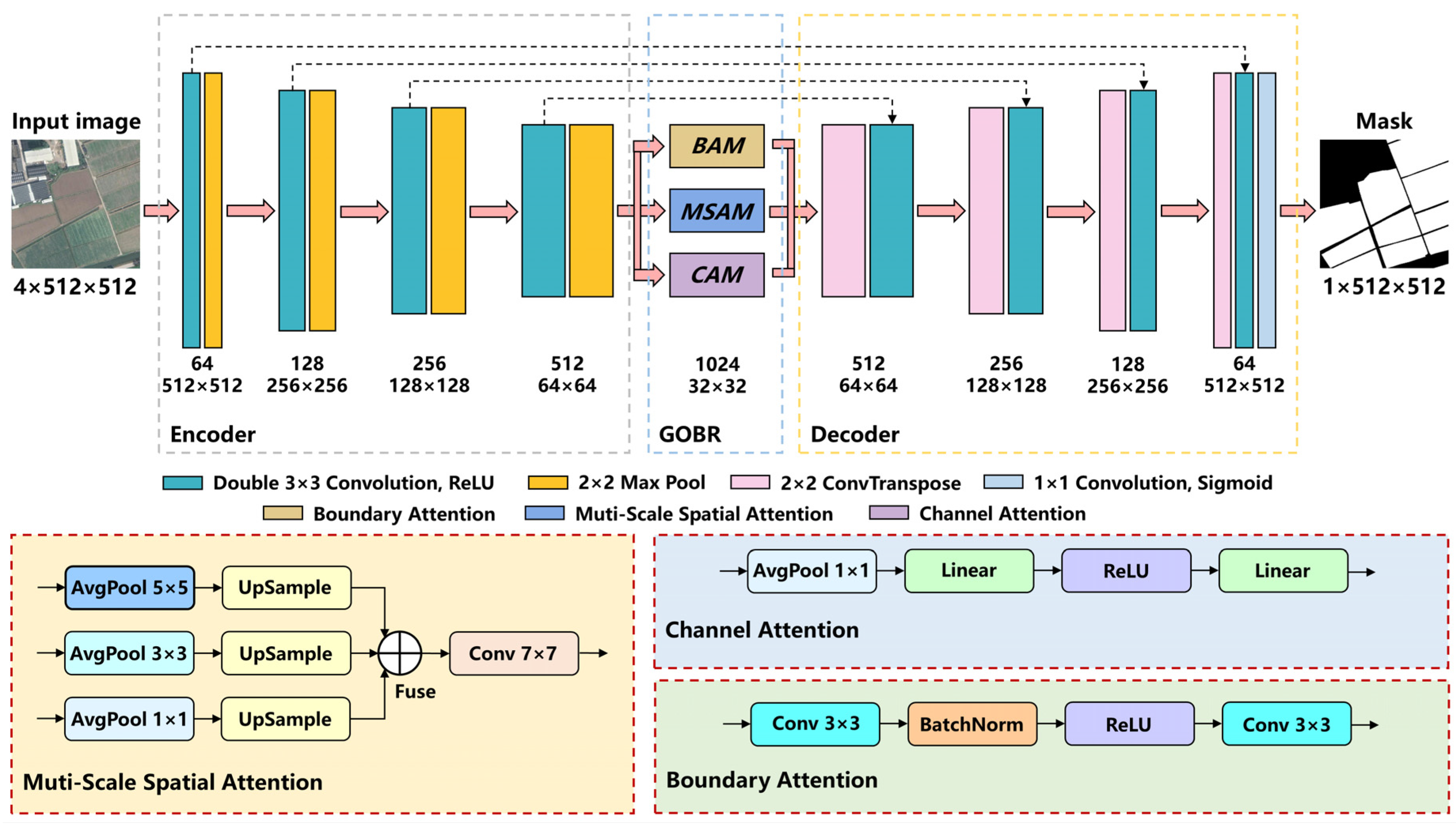

2.3. DAENet Architecture

2.4. Geometric-Optimized and Boundary-Restrained Block

2.4.1. Channel Attention Module

2.4.2. Multi-Scale Spatial Attention Module

2.4.3. Boundary Attention Module

2.4.4. Loss Function

2.5. Accuracy Assessment

2.6. Experimental Settings

2.7. The Use of AI Tools

3. Results

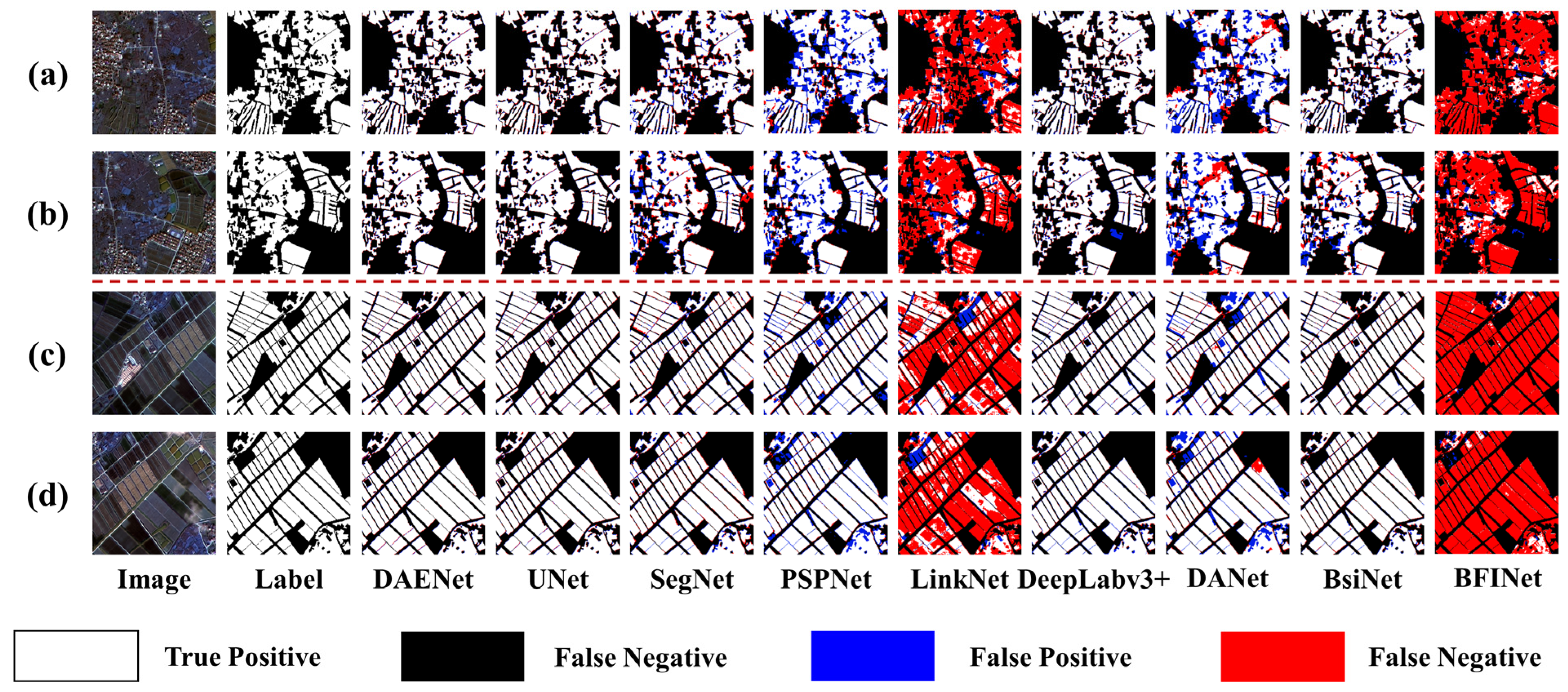

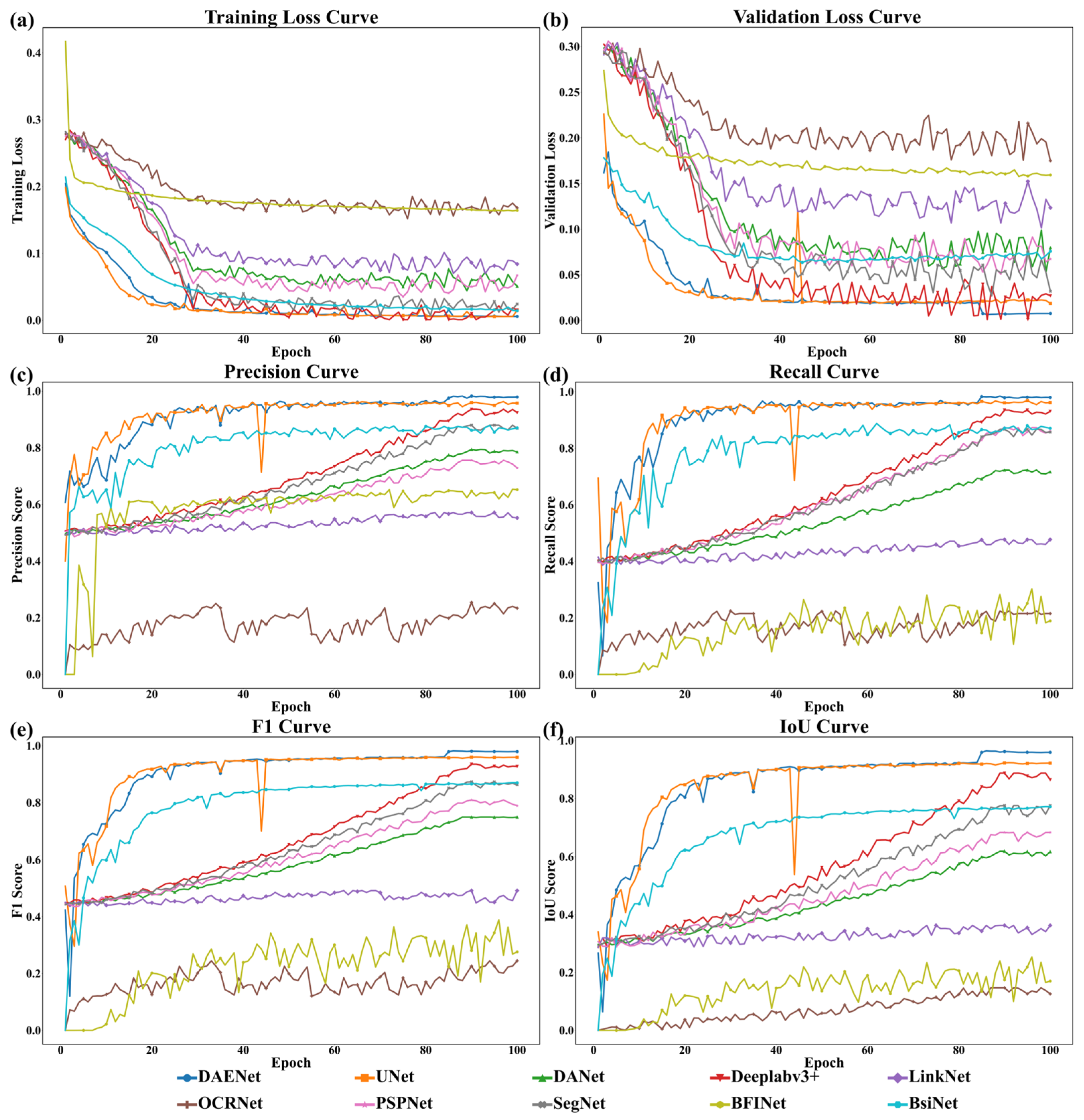

3.1. Comparison with Other Networks

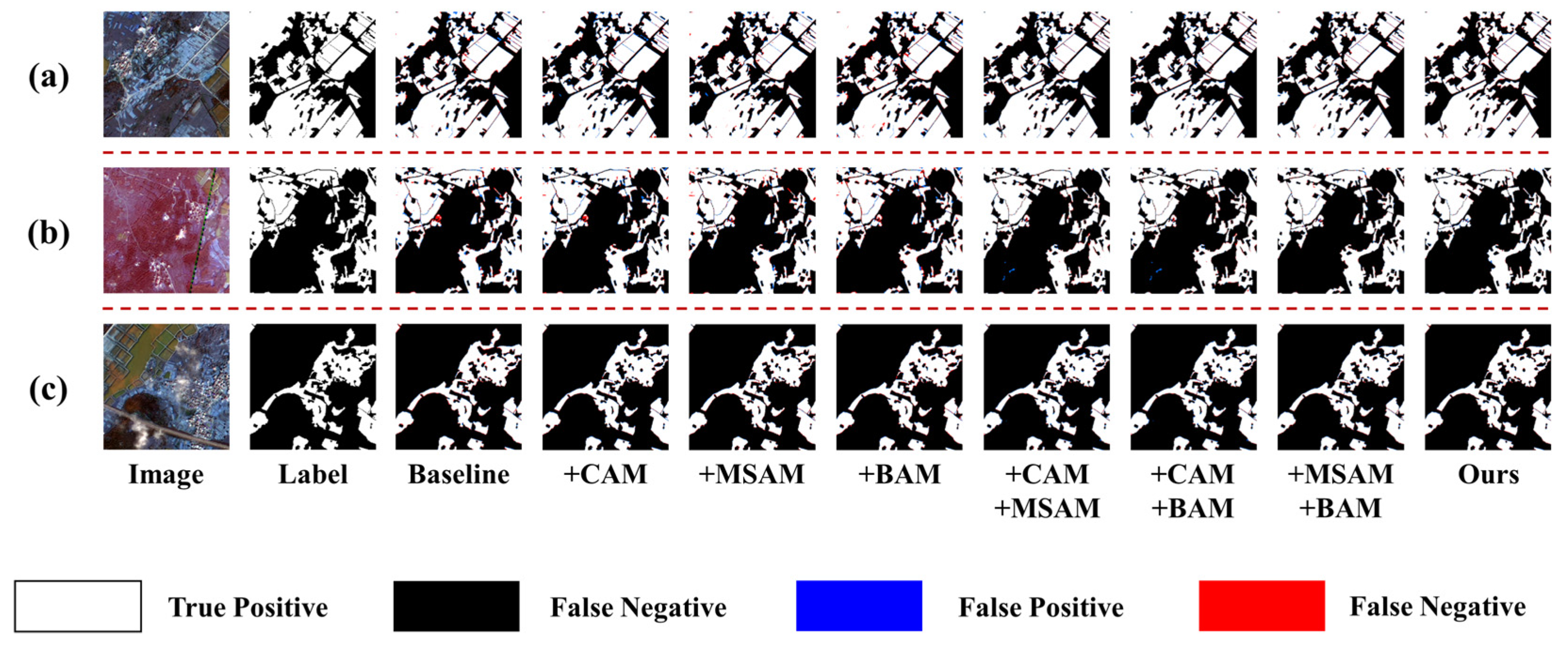

3.2. Ablation Study

3.3. Mapping of Cropland in Study Area

4. Discussion

4.1. Performance Superiority of DAENet in Complex Terrain

4.2. Comparison of Model Parameters

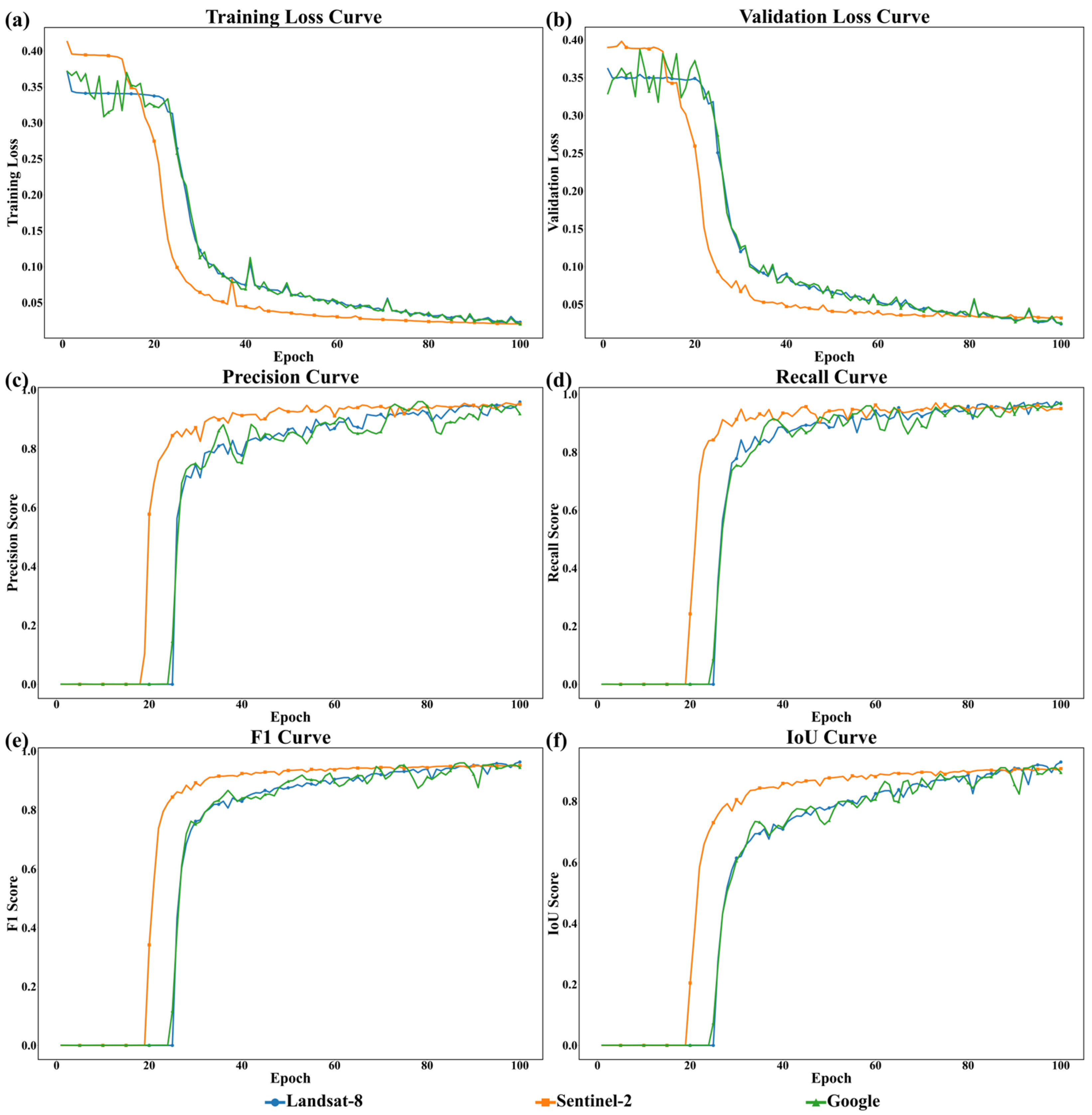

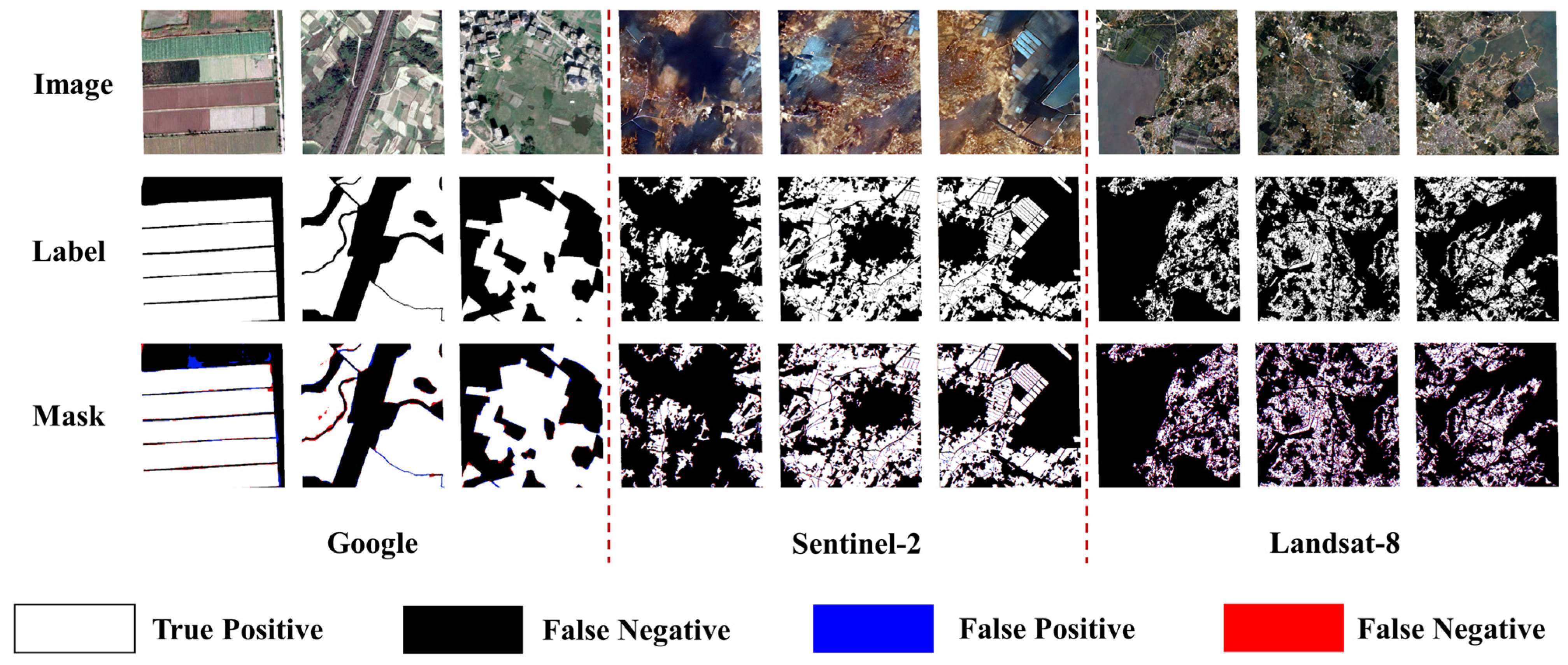

4.3. Generalization Performance on Multi-Resolution Satellite Data

4.4. Potential Improvements

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Network | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|
| DANet | 0.7943 | 0.7231 | 0.7492 | 0.6186 |
| DeepLabv3Plus | 0.9362 | 0.9352 | 0.9354 | 0.8879 |
| LinkNet | 0.5713 | 0.4772 | 0.4917 | 0.3626 |
| OCRNet | 0.2545 | 0.2154 | 0.2261 | 0.1469 |
| PSPNet | 0.7559 | 0.8734 | 0.8094 | 0.6828 |
| SegNet | 0.8797 | 0.8672 | 0.8731 | 0.7758 |
| UNet | 0.9605 | 0.9603 | 0.9604 | 0.9239 |
| BFINet | 0.6239 | 0.2834 | 0.3715 | 0.2404 |
| BsiNet | 0.8672 | 0.8742 | 0.8700 | 0.7720 |
| Ours | 0.9826 | 0.9797 | 0.9811 | 0.9636 |
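
The four columns in the table above are standard pixel-wise segmentation scores. As a minimal sketch of how such scores are commonly computed for a single positive (cropland) class, the snippet below counts true positives, false positives, and false negatives over flattened 0/1 label sequences. The function name `binary_metrics` and the toy labels are illustrative assumptions; the averaging scheme actually used for the reported values is not stated in this extract and may differ.

```python
def binary_metrics(pred, truth):
    """Precision, recall, F1 and IoU for the positive class (label 1).

    `pred` and `truth` are equal-length sequences of 0/1 pixel labels.
    This is an illustrative helper, not the paper's evaluation code.
    """
    tp = fp = fn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1          # predicted cropland, truly cropland
        elif p and not t:
            fp += 1          # predicted cropland, truly background
        elif t and not p:
            fn += 1          # missed cropland pixel
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Toy example: six pixels, hypothetical labels.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
scores = binary_metrics(pred, truth)
print(scores)
```

With these toy labels there are two true positives, one false positive, and one false negative, so precision, recall, and F1 all equal 2/3 while IoU equals 0.5. IoU is never higher than F1 for the same class, which matches the pattern in every row of the table.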

| Variant | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|
| Baseline | 0.9563 | 0.9583 | 0.9571 | 0.9234 |
| +CAM | 0.9650 | 0.9567 | 0.9652 | 0.9361 |
| +MSAM | 0.9668 | 0.9675 | 0.9671 | 0.9392 |
| +BAM | 0.9687 | 0.9691 | 0.9688 | 0.9410 |
| +CAM + MSAM | 0.9723 | 0.9740 | 0.9731 | 0.9503 |
| +CAM + BAM | 0.9740 | 0.9752 | 0.9747 | 0.9532 |
| +MSAM + BAM | 0.9731 | 0.9758 | 0.9752 | 0.9540 |
| Ours | 0.9826 | 0.9797 | 0.9811 | 0.9636 |

| Network | Params (M) | FLOPs (T) | MACs (G) | Avg Time/Epoch (s) |
|---|---|---|---|---|
| DANet | 32.36 | 0.2204 | 110.22 | 919.36 |
| DeepLabv3Plus | 57.68 | 0.2410 | 120.49 | 583.25 |
| LinkNet | 7.36 | 1.9299 | 964.97 | 1484.71 |
| OCRNet | 25.06 | 0.0218 | 10.90 | 622.48 |
| PSPNet | 4.40 | 0.0294 | 14.68 | 349.89 |
| SegNet | 16.50 | 0.0952 | 47.61 | 535.16 |
| UNet | 30.86 | 0.2075 | 108.79 | 573.03 |
| BFINet | 5.33 | 0.0584 | 29.21 | 383.21 |
| BsiNet | 7.85 | 0.1933 | 96.65 | 427.95 |
| Ours | 31.03 | 0.2186 | 109.30 | 575.72 |
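
The FLOPs and MACs columns above use different unit prefixes (tera and giga), which makes them awkward to compare by eye. A small sketch, assuming the common convention that one multiply-accumulate counts as two floating-point operations, converts both columns to the same scale and prints their ratio for each network. The dictionary simply copies values from the table; the helper name `flops_per_mac` is an illustrative choice.

```python
# (FLOPs in units of 1e12, MACs in units of 1e9), copied from the table above.
rows = {
    "DANet": (0.2204, 110.22),
    "DeepLabv3Plus": (0.2410, 120.49),
    "LinkNet": (1.9299, 964.97),
    "OCRNet": (0.0218, 10.90),
    "PSPNet": (0.0294, 14.68),
    "SegNet": (0.0952, 47.61),
    "UNet": (0.2075, 108.79),
    "BFINet": (0.0584, 29.21),
    "BsiNet": (0.1933, 96.65),
    "Ours": (0.2186, 109.30),
}


def flops_per_mac(flops_tera, macs_giga):
    """Ratio of FLOPs to MACs after converting tera to giga (x1000)."""
    return flops_tera * 1000.0 / macs_giga


ratios = {name: flops_per_mac(*vals) for name, vals in rows.items()}
for name, ratio in ratios.items():
    print(f"{name:14s} {ratio:.3f}")
```

Under that convention the ratio should sit close to 2. Most rows do, including the proposed network; the UNet row comes out near 1.91, which may reflect a counting or transcription difference in the source values rather than a property of the model.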

| Data | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|
| Landsat-8 | 0.9589 | 0.9674 | 0.9631 | 0.9289 |
| Sentinel-2 | 0.9517 | 0.9507 | 0.9512 | 0.9070 |
|  | 0.9514 | 0.9607 | 0.9560 | 0.9171 |
| GaoFen-1 | 0.9826 | 0.9797 | 0.9811 | 0.9636 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Yang, M.; Zhang, T.; Hu, S.; Zhuang, Q. DAENet: A Deep Attention-Enhanced Network for Cropland Extraction in Complex Terrain from High-Resolution Satellite Imagery. Agriculture 2025, 15, 1318. https://doi.org/10.3390/agriculture15121318
