YOLOv8-LSW: A Lightweight Bitter Melon Leaf Disease Detection Model

Abstract

1. Introduction

- (1) A bitter melon leaf disease dataset was constructed that covers varying light intensities and leaf densities, reflecting real production environments.

- (2) Based on the CSP (Cross-Stage Partial) concept, the backbone network was replaced with the LeYOLO-small structure, which achieves a lightweight design through depthwise separable convolutions and cross-stage feature reuse modules. This reduced the number of parameters and the model size while also improving detection performance.

- (3) The ShuffleAttention module was embedded before the feature pyramid network. It combines channel attention and spatial attention to better extract important image features and suppress irrelevant background information, while keeping the added computational overhead low.

- (4) The WIoUv3 loss function, which uses a dynamic non-monotonic focusing mechanism (FM), was adopted. It adjusts the gradient gain according to the outlier degree of each anchor box, mitigating the negative impact of low-quality anchor boxes on training. While preserving the regression quality of high-quality anchor boxes, it improved the model's convergence speed and localization accuracy.

2. Materials and Methods

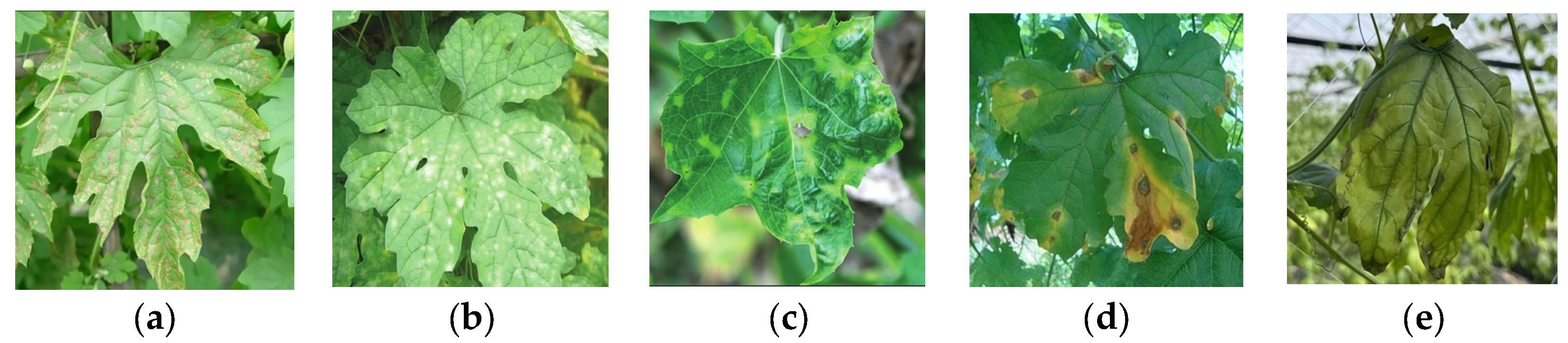

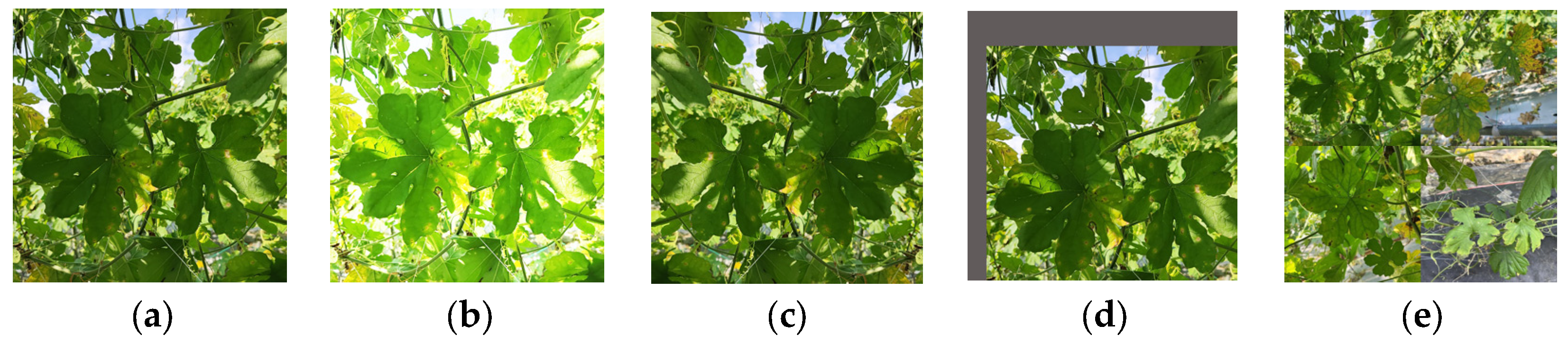

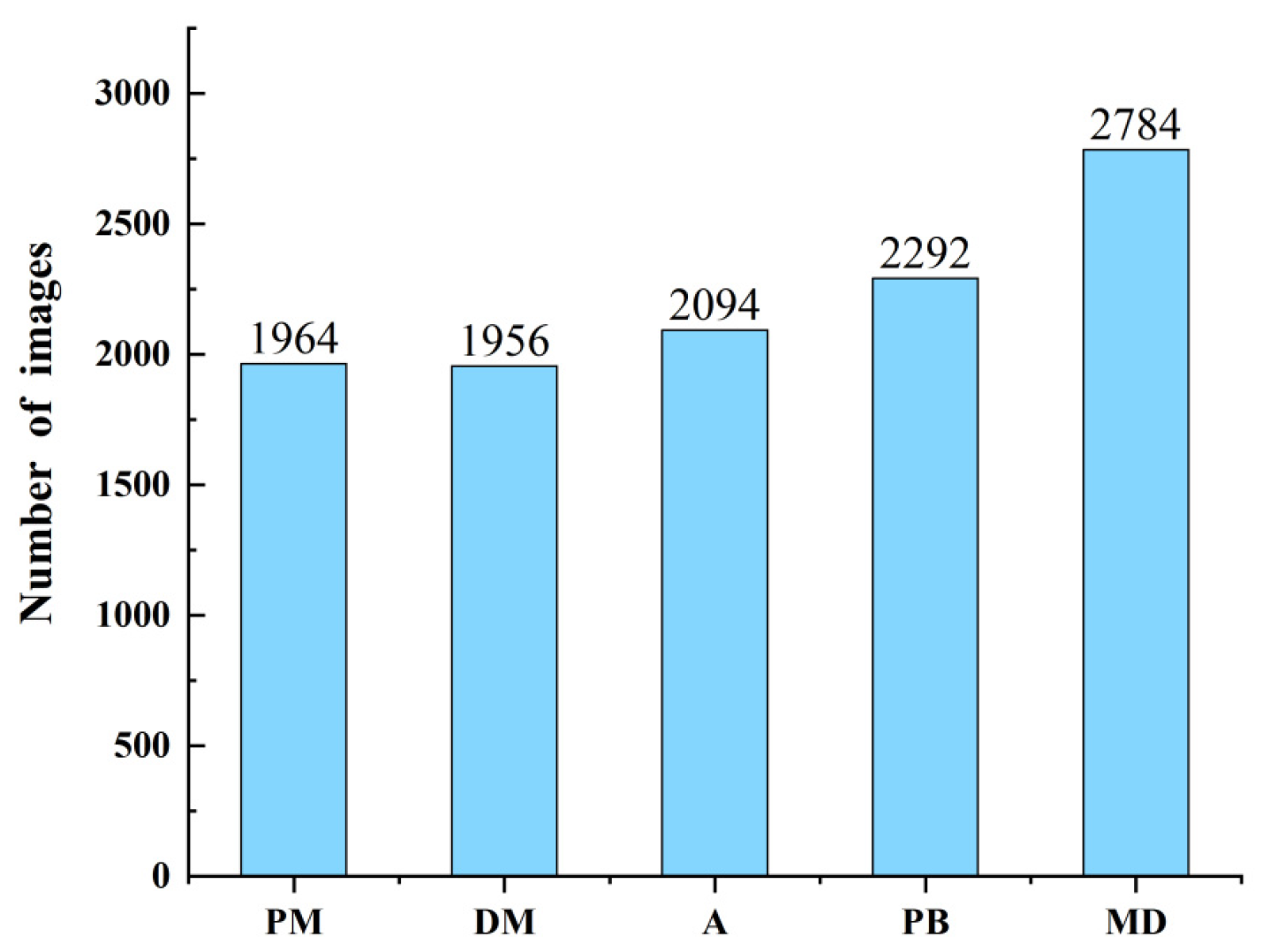

2.1. Data Collection and Dataset Construction

2.1.1. Data Collection

2.1.2. Dataset Construction

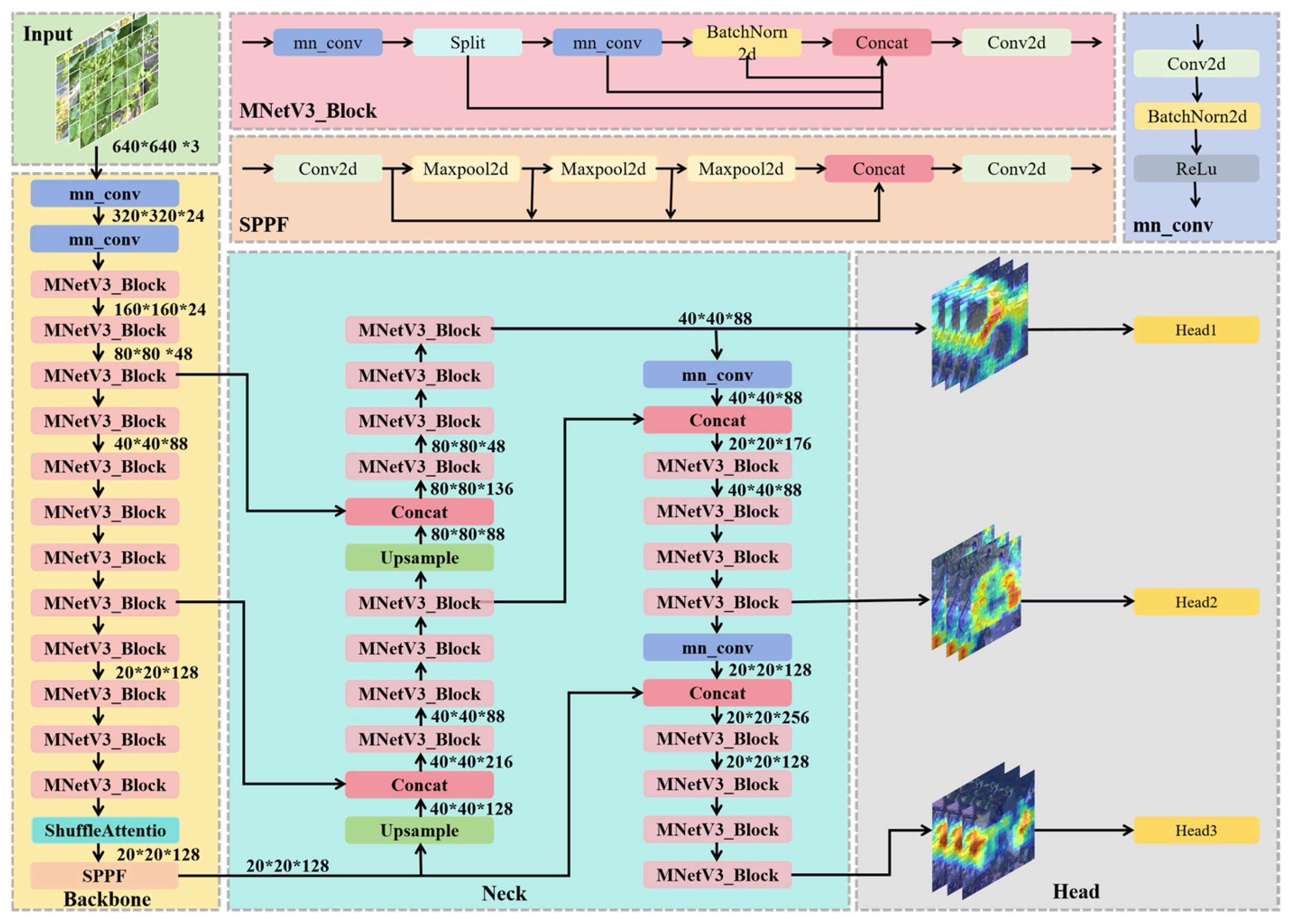

2.2. YOLOv8-LSW Model

2.2.1. LeYOLO-Small Backbone Structure

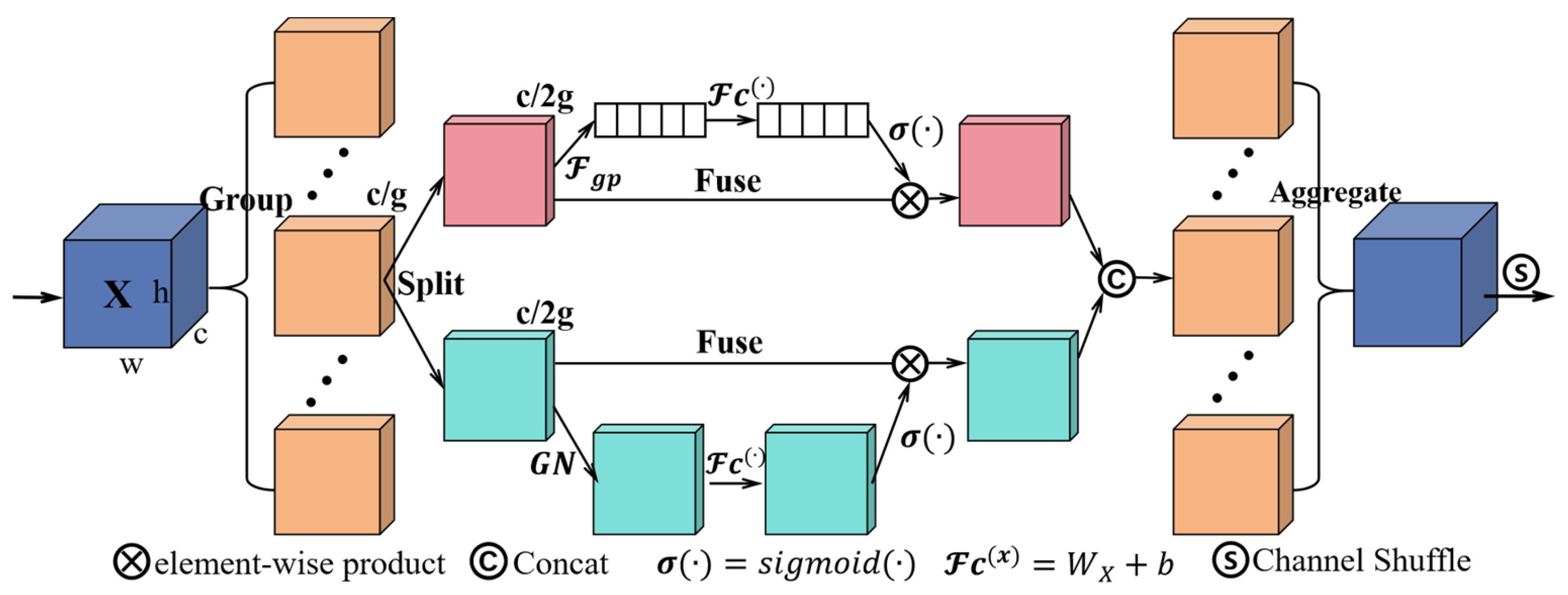
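
The parameter savings attributed to depthwise separable convolutions and to the cross-stage partial (CSP) split in contribution (2) follow from simple weight counting. The sketch below is an illustrative calculation only, not the actual LeYOLO-small layer configuration (which is not given here): it counts the weights of a standard k×k convolution and of a depthwise k×k plus pointwise 1×1 pair, ignoring biases and BatchNorm parameters. The function names are hypothetical.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (bias and BatchNorm ignored)."""
    return k * k * c_in * c_out


def dw_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a depthwise k x k conv followed by a pointwise 1 x 1 conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv mixes information across channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, c = 3, 128
    std = conv_params(k, c, c)
    dws = dw_separable_params(k, c, c)
    print(f"standard 3x3 conv:   {std} weights")
    print(f"depthwise separable: {dws} weights ({dws / std:.3f} of standard)")
    # CSP idea: route only half of the channels through the heavy block,
    # so a (c/2 -> c/2) conv has a quarter of the weights of a (c -> c) conv.
    print(f"3x3 conv on half the channels: {conv_params(k, c // 2, c // 2)} weights")
```

For k = 3 and 128 input and output channels the ratio is 1/c_out + 1/k², about 0.119, i.e. roughly an 8× reduction for that layer; how much of this carries over to the whole network depends on the real layer mix.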

2.2.2. ShuffleAttention Mechanism

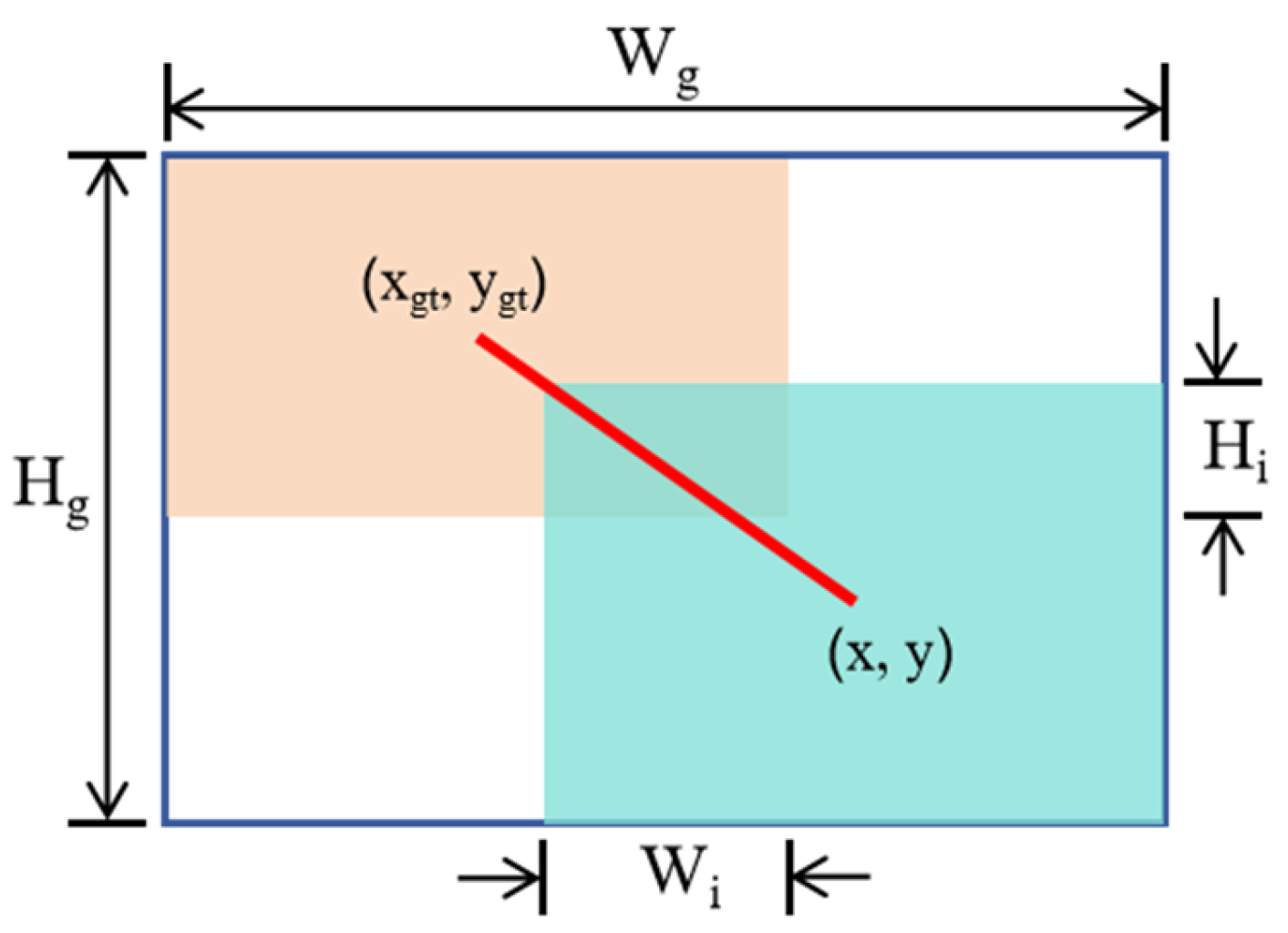
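
ShuffleAttention (SA-Net, Zhang and Yang, 2021, listed in the references) splits the channels into G groups and divides each group into two halves: a channel-attention half (global average pooling, a per-channel scale and shift, then a sigmoid gate) and a spatial-attention half (GroupNorm with one channel per group, a per-channel scale and shift, then a sigmoid gate per position). The halves are concatenated and a channel shuffle mixes information across groups. The pure-Python sketch below follows that published design for a single image stored as a list of channels, each a flat list of H×W values. It omits GroupNorm's own affine parameters (identity at initialization), and the group count and parameter values are illustrative assumptions, not the configuration used in YOLOv8-LSW.

```python
import math
from typing import List

Channel = List[float]  # one feature-map channel, flattened H*W values


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def channel_shuffle(channels: List[Channel], groups: int) -> List[Channel]:
    """Reshape channels to (groups, n), transpose to (n, groups), flatten."""
    n = len(channels) // groups
    return [channels[g * n + i] for i in range(n) for g in range(groups)]


def shuffle_attention(x: List[Channel], groups: int,
                      cweight: List[float], cbias: List[float],
                      sweight: List[float], sbias: List[float],
                      eps: float = 1e-5) -> List[Channel]:
    c = len(x)
    assert c % (2 * groups) == 0, "C must be divisible by 2 * groups"
    half = c // (2 * groups)  # channels per branch inside one group
    assert len(cweight) == len(cbias) == len(sweight) == len(sbias) == half
    out: List[Channel] = []
    for g in range(groups):
        group = x[g * 2 * half:(g + 1) * 2 * half]
        x0, x1 = group[:half], group[half:]
        # Channel branch: global average pool -> scale/shift -> sigmoid gate.
        for j, ch in enumerate(x0):
            s = sum(ch) / len(ch)
            gate = sigmoid(cweight[j] * s + cbias[j])
            out.append([v * gate for v in ch])
        # Spatial branch: normalise each channel over its positions
        # (GroupNorm with one channel per group), scale/shift, sigmoid per position.
        for j, ch in enumerate(x1):
            mu = sum(ch) / len(ch)
            var = sum((v - mu) ** 2 for v in ch) / len(ch)
            std = math.sqrt(var + eps)
            out.append([v * sigmoid(sweight[j] * (v - mu) / std + sbias[j]) for v in ch])
    # Shuffle with 2 groups so the two branches exchange information.
    return channel_shuffle(out, 2)


if __name__ == "__main__":
    x = [[float(i)] * 4 for i in range(8)]  # C = 8 channels, 2 x 2 spatial
    zeros = [0.0, 0.0]                       # G = 2 -> 2 sub-channels per branch
    y = shuffle_attention(x, 2, zeros, zeros, zeros, zeros)
    print([ch[0] for ch in y])  # [0.0, 2.0, 0.5, 2.5, 1.0, 3.0, 1.5, 3.5]
```

With all scale and shift parameters at zero every gate equals 0.5, so the output is the input halved and reordered by the shuffle, which makes a convenient sanity check. The learnable parameters are a few scalars per sub-channel, shared across all groups, which is why the module adds little computation.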

2.2.3. WIoUv3 Loss Function
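
Contribution (4) describes WIoUv3 only qualitatively. Following the Wise-IoU paper (Tong et al., listed in the references), WIoUv1 scales the IoU loss L_IoU = 1 - IoU by R = exp(d² / (W_g² + H_g²)), where d is the distance between the box centres and W_g, H_g are the width and height of the smallest enclosing box (treated as a constant, i.e. detached, during backpropagation). WIoUv3 multiplies this by a non-monotonic focusing coefficient r = β / (δ·α^(β - δ)), where the outlier degree β = L_IoU / mean(L_IoU) compares each box with a running mean of the IoU loss. The pure-Python sketch below evaluates the loss value for a single box pair. The defaults α = 1.9 and δ = 3 are values commonly used in public implementations and are assumptions here, as is the momentum of the hypothetical `update_mean` helper; the settings used for YOLOv8-LSW are not stated in this section.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def wiou_v1(pred: Box, gt: Box) -> Tuple[float, float]:
    """Return (WIoUv1 loss, plain IoU loss) for one predicted/ground-truth pair."""
    l_iou = 1.0 - iou(pred, gt)
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    # Smallest enclosing box; during training this term is detached from the graph.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))
    return r_wiou * l_iou, l_iou


def focusing_coefficient(beta: float, alpha: float = 1.9, delta: float = 3.0) -> float:
    """Non-monotonic focusing coefficient r; equals 1 when beta == delta."""
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3(pred: Box, gt: Box, l_iou_mean: float,
            alpha: float = 1.9, delta: float = 3.0) -> float:
    loss_v1, l_iou = wiou_v1(pred, gt)
    beta = l_iou / l_iou_mean  # outlier degree relative to the running mean
    return focusing_coefficient(beta, alpha, delta) * loss_v1


def update_mean(mean: float, batch_l_iou: float, momentum: float = 0.01) -> float:
    """Hypothetical exponential running mean of L_IoU (momentum is an assumption)."""
    return (1.0 - momentum) * mean + momentum * batch_l_iou


if __name__ == "__main__":
    pred, gt = (0.0, 0.0, 10.0, 10.0), (2.0, 2.0, 12.0, 12.0)
    print(wiou_v1(pred, gt))
    for beta in (0.5, 1.5, 3.0, 6.0, 10.0):
        print(beta, round(focusing_coefficient(beta), 3))
```

Running the loop shows the non-monotonic shape: r rises above 1 for moderately poor boxes (β around 1.5), equals 1 at β = δ, and falls towards 0 for strong outliers (β = 10), so extreme low-quality boxes receive small gradient gains.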

2.3. Evaluation Metrics
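
The tables report precision (P), recall (R), F1-score, mAP50 and mAP50-95. Under the usual definitions, P = TP/(TP + FP), R = TP/(TP + FN), F1 = 2PR/(P + R), AP is the area under the precision-recall curve of one class at a fixed IoU threshold, mAP50 averages AP over classes at IoU = 0.5, and mAP50-95 additionally averages over the IoU thresholds 0.50, 0.55, ..., 0.95. The sketch below computes AP with all-point interpolation, which is one common convention; the exact interpolation used by the authors' evaluation toolchain is an assumption.

```python
from typing import List, Tuple


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (works for fractions or percentages)."""
    return 2 * p * r / (p + r) if p + r else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r, f1_score(p, r)


def average_precision(recalls: List[float], precisions: List[float]) -> float:
    """All-point interpolated AP; inputs are cumulative values for detections
    sorted by descending confidence (so recall is non-decreasing)."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [1.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


# IoU thresholds over which AP is averaged for mAP50-95.
IOU_THRESHOLDS_50_95 = [round(0.50 + 0.05 * k, 2) for k in range(10)]

if __name__ == "__main__":
    # Baseline YOLOv8n row of the tables: P = 93.10 %, R = 91.60 %.
    print(round(f1_score(93.10, 91.60), 2))  # 92.34
    # Toy PR curve: 2 ground truths, detections ranked TP, FP, TP.
    print(average_precision([0.5, 0.5, 1.0], [1.0, 0.5, 2 / 3]))  # 5/6, about 0.833
```

Applying `f1_score` to the P and R columns reproduces the reported F1-scores, e.g. 92.34% for YOLOv8n and 94.80% for YOLOv8-LSW.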

3. Results

3.1. Experimental Environment

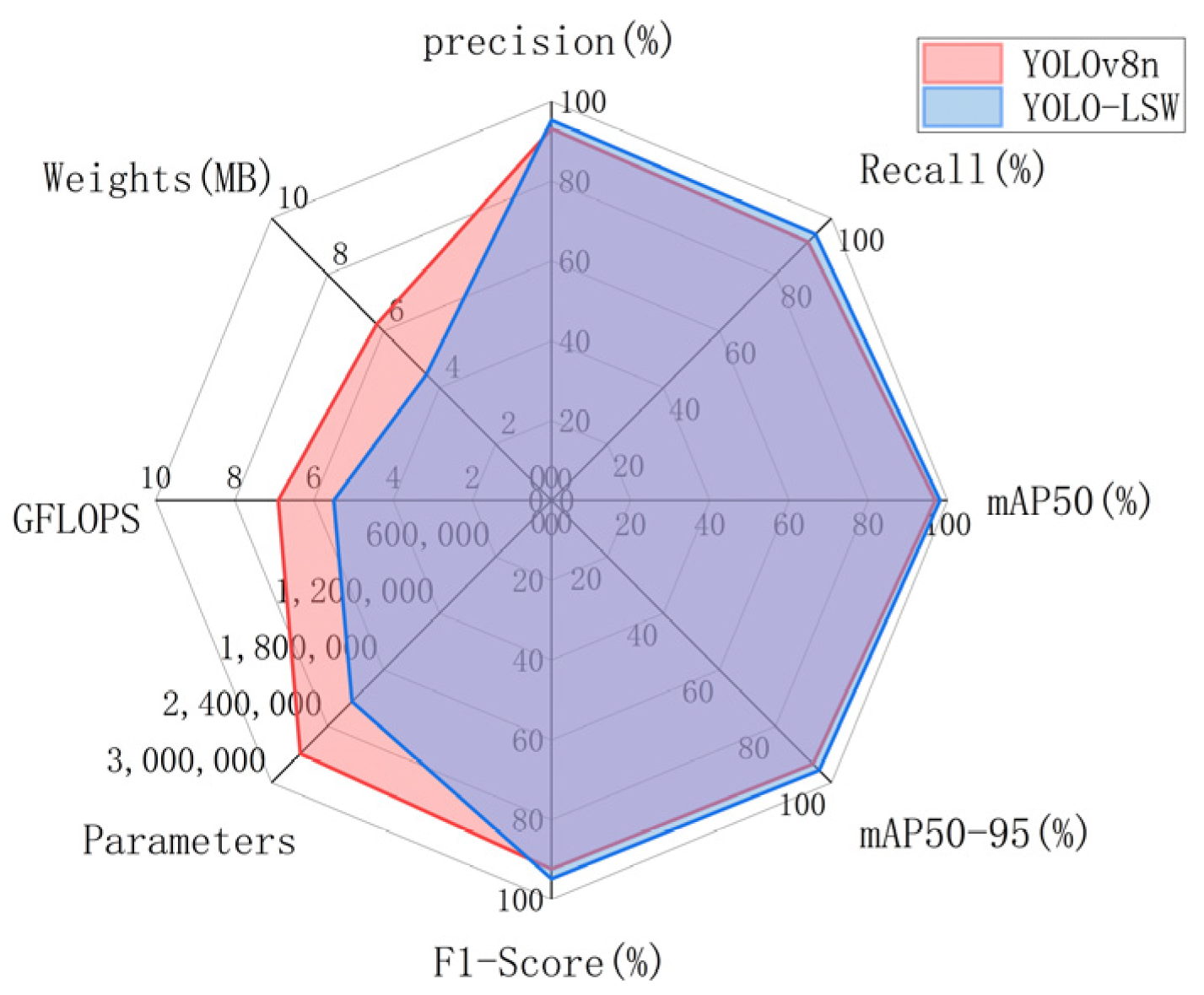

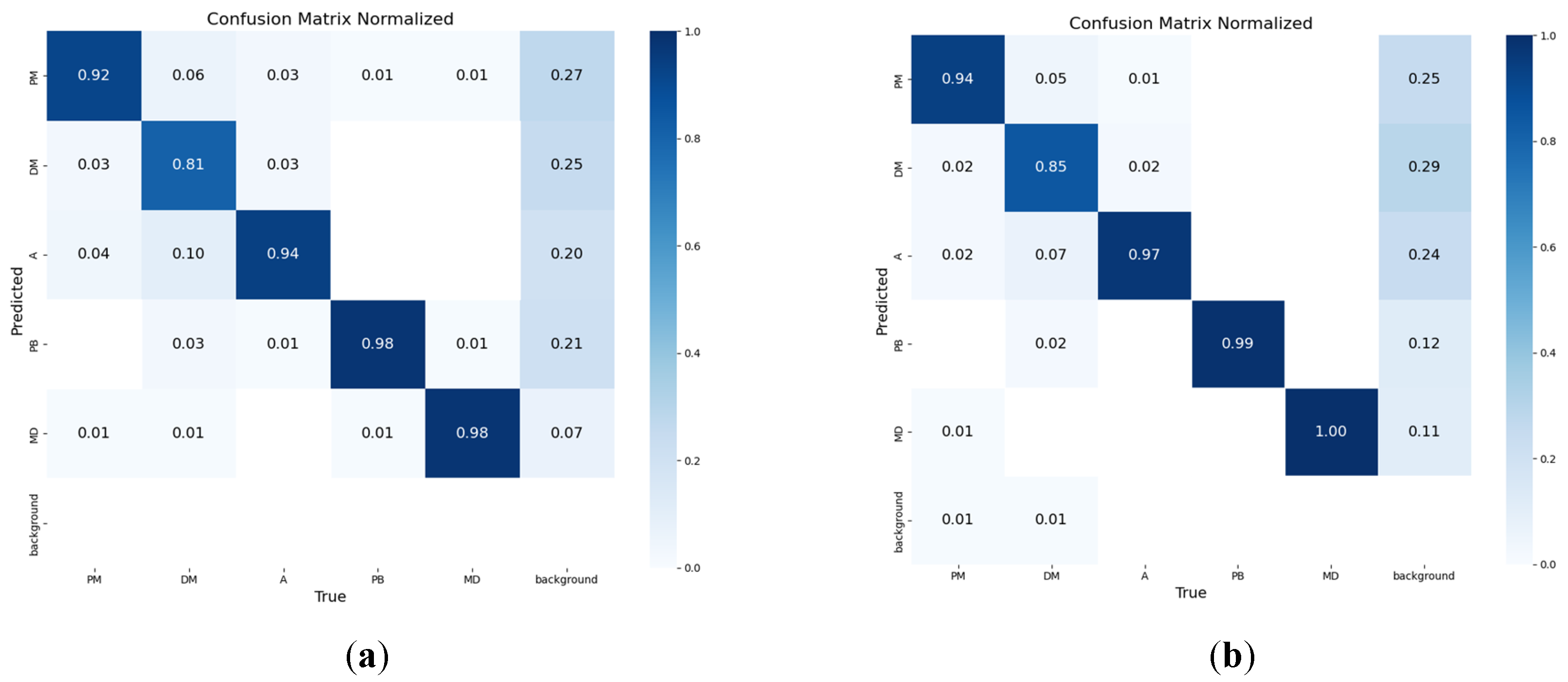

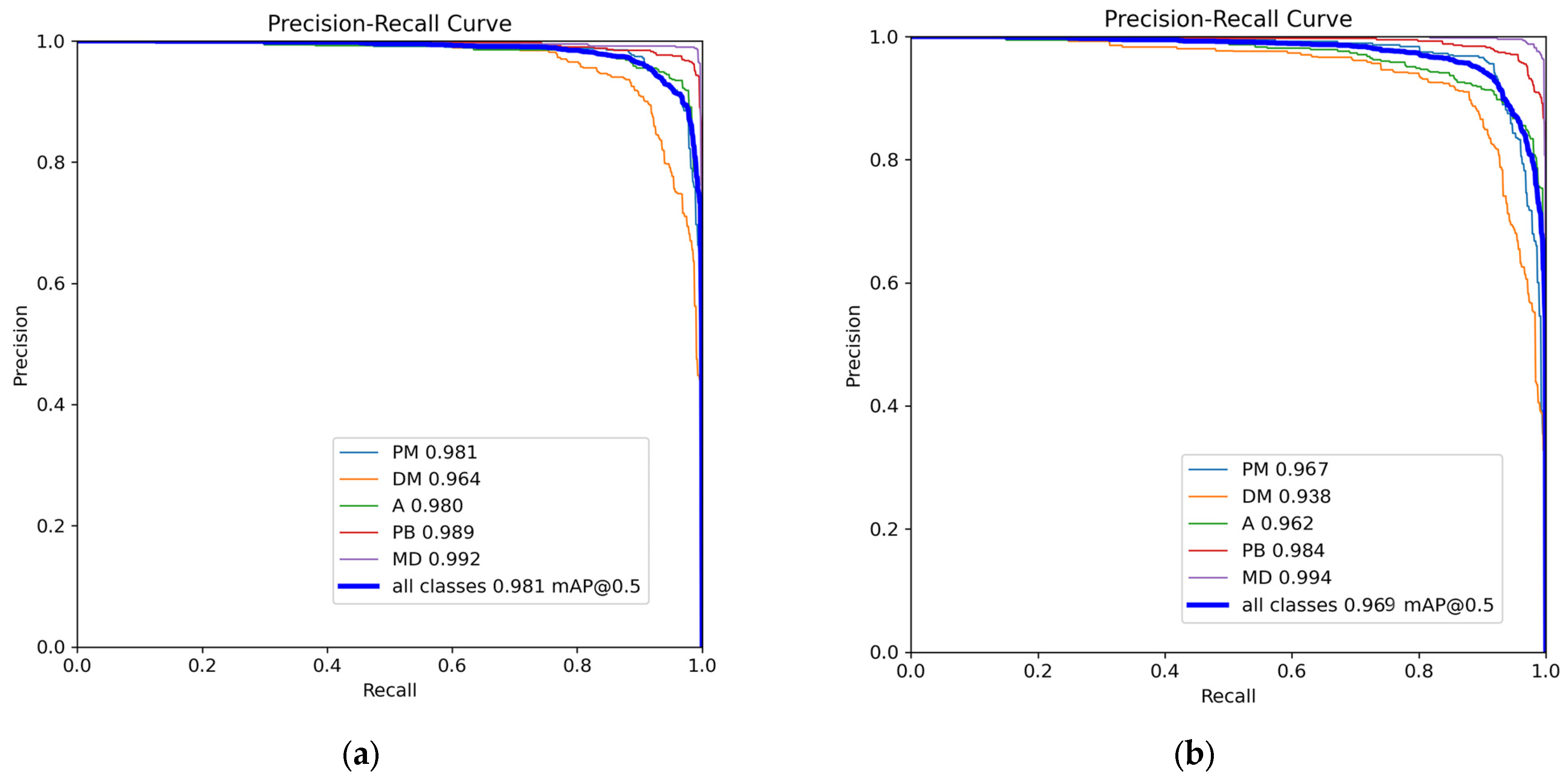

3.2. Model Training Results

3.3. Ablation Study

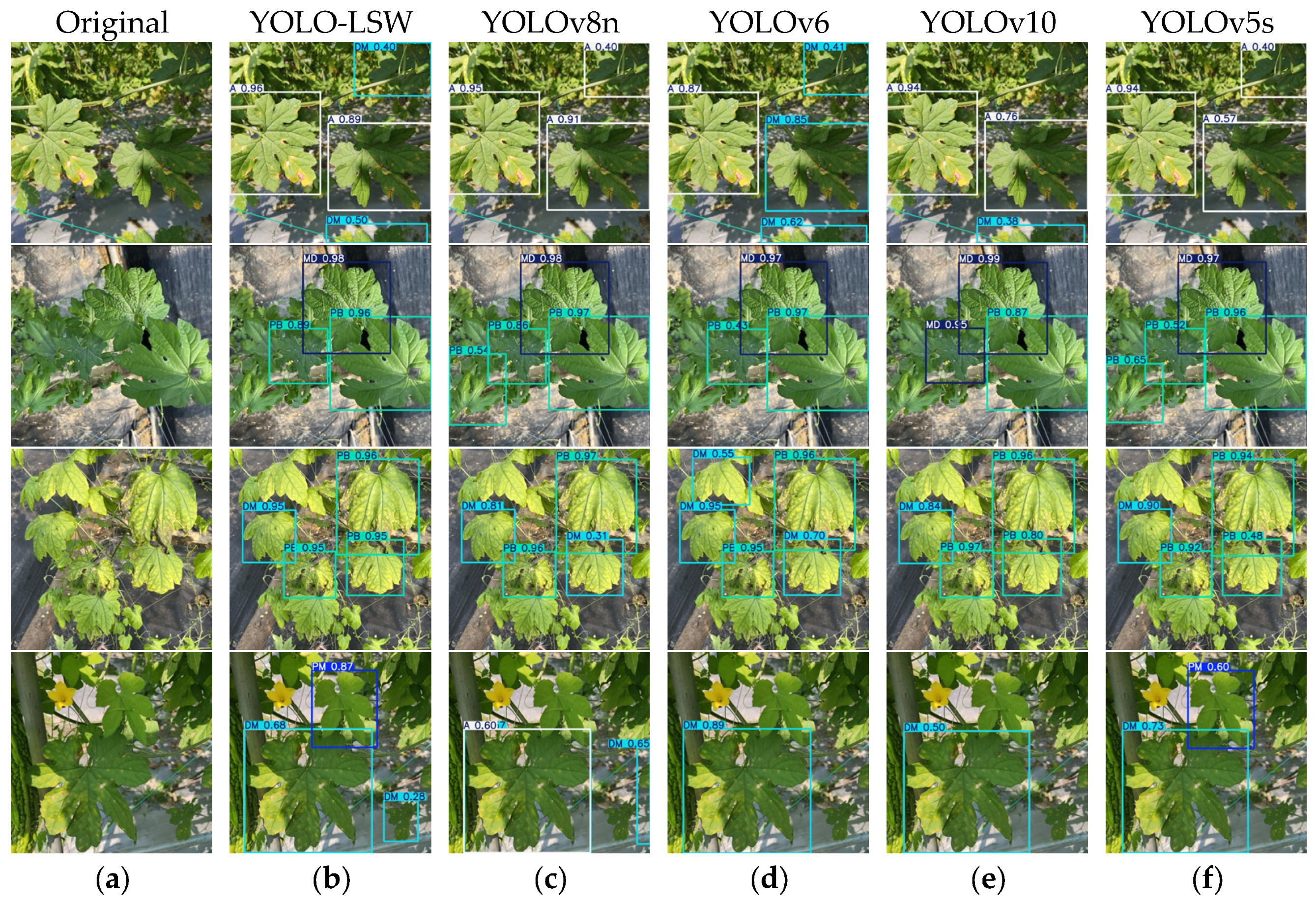

3.4. Comparative Experiments

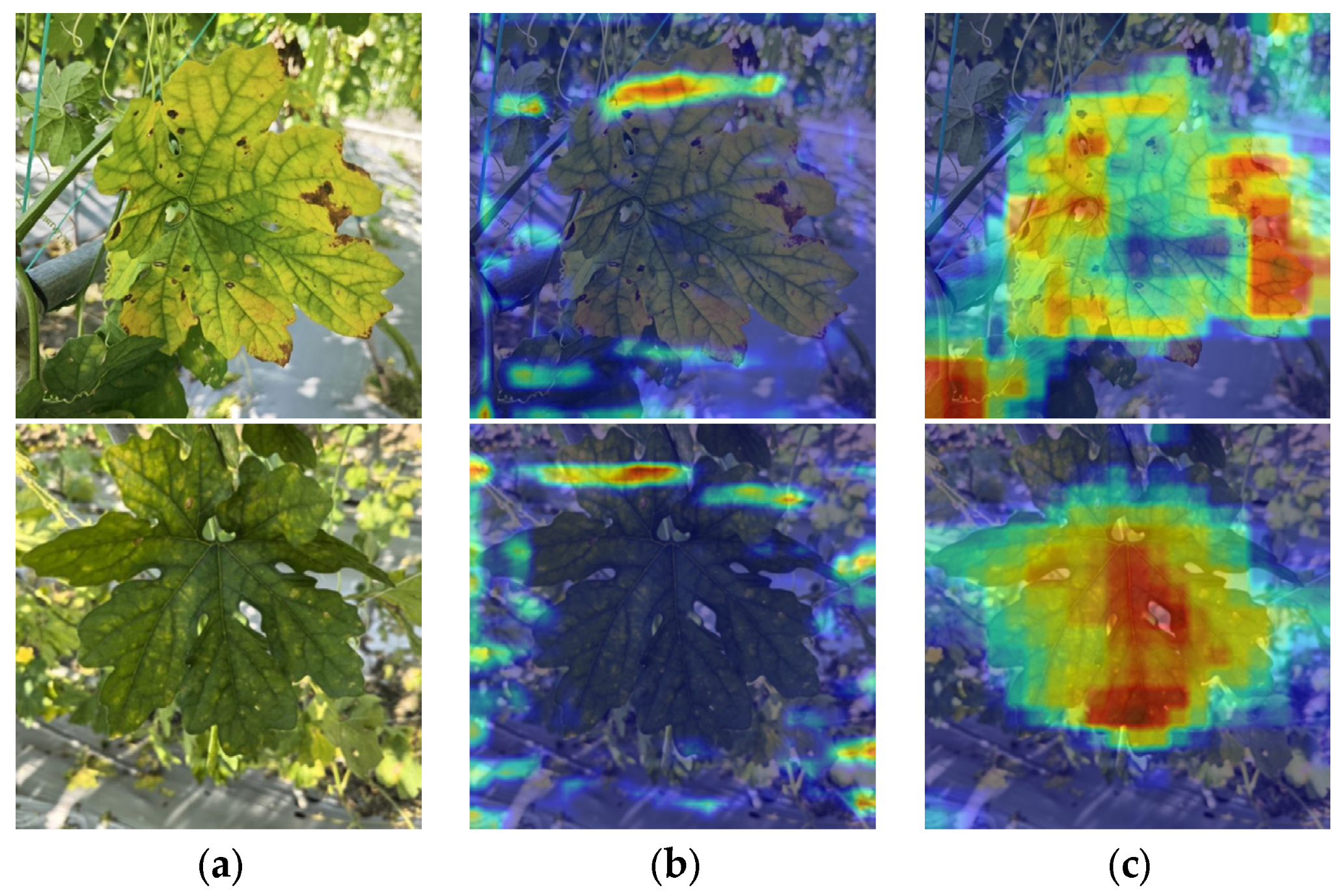

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ge, H.; Liu, S.; Zheng, H.; Chang, P.; Huang, W.; Lin, S.; Zheng, J.; Li, H.; Huang, Z.; Jia, Q.; et al. Identification and Expression Analysis of Lipoxygenase Gene in Bitter Gourd (Momordica charantia). Genes 2024, 15, 1557.

- Tan, M.J.; Ye, J.M.; Turner, N.; Hohnen-Behrens, C.; Ke, C.Q.; Tang, C.P.; Chen, T.; Weiss, H.C.; Gesing, E.R.; Rowland, A. Antidiabetic activities of triterpenoids isolated from bitter melon associated with activation of the AMPK pathway. Chem. Biol. 2008, 15, 263–273.

- Akihisa, T.; Higo, N.; Tokuda, H.; Ukiya, M.; Akazawa, H.; Tochigi, Y.; Kimura, Y.; Suzuki, T.; Nishino, H. Cucurbitane-type triterpenoids from the fruits of Momordica charantia and their cancer chemopreventive effects. J. Nat. Prod. 2007, 70, 1233–1239.

- Zhang, S.; Zhang, C. Corn disease identification method based on local discriminant mapping algorithm. J. Agric. Eng. 2014, 30, 167–172.

- Morbekar, A.; Parihar, A.; Jadhav, R. Crop disease detection using YOLO. In Proceedings of the 2020 International Conference for Emerging Technology (INCET), Belgaum, India, 5–7 June 2020; IEEE: Piscataway, NJ, USA; pp. 1–5.

- Barbedo, J.G.A. Digital image processing techniques for detecting, quantifying and classifying plant diseases. SpringerPlus 2013, 2, 660.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1418.

- Sharif, M.A.; Raza, M.; Yasmin, M.; Satapathy, S.; Chandra, S. An integrated design of particle swarm optimization (PSO) with fusion of features for detection of brain tumor. Pattern Recognit. Lett. 2020, 129, 150–157.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Gong, A.; Jing, X.M. Image Recognition of Crop Diseases Based on Multi-convolution Neural Network Model Ensemble. Comput. Technol. Dev. 2020, 30, 134–139.

- Wang, Y.L.; Zhang, H.L.; Liu, Q.F.; Zhang, Y.S. Image classification of tomato leaf diseases based on transfer learning. J. China Agric. Univ. 2019, 24, 124–130.

- Liu, Y.J.; He, Y.K.; Wu, X.M.; Wang, W.J.; Zhang, L.N.; Lyu, H.Z. Potato Sprouting and Surface Damage Detection Method Based on Improved Faster R-CNN. Trans. Chin. Soc. Agric. Mach. 2024, 55, 371–378.

- Wang, C.S.; Zhou, J.; Wu, H.R.; Teng, G.F.; Zhao, C.J.; Li, J.X. Identification of vegetable leaf diseases based on improved Multi-scale ResNet. Trans. Chin. Soc. Agric. Eng. 2020, 36, 209–217.

- Li, L.L.; Zhang, S.J.; Wang, B. Plant disease detection and classification by deep learning: A review. IEEE Access 2021, 9, 56683–56698.

- Liu, J.; Wang, X.W. Tomato diseases and pests detection based on improved YOLO v3 convolutional neural network. Front. Plant Sci. 2020, 11, 898.

- Li, X.; Chen, X.; Yang, J.; Li, Y. Transformer helps identify kiwifruit diseases in complex natural environments. Comput. Electron. Agric. 2022, 200, 107258.

- Zeng, T.H.; Li, S.Y.; Song, Q.M.; Zhong, F.L.; Wei, X. Lightweight Tomato Real-Time Detection Method Based on Improved YOLO and Mobile Deployment. Comput. Electron. Agric. 2023, 205, 107625.

- Zhou, Q.; Li, H.C.; Cai, Z.L.; Zhong, Y.W.; Zhong, F.L.; Lin, X.Y.; Wang, L.J. YOLO-ACE: Enhancing YOLO with Augmented Contextual Efficiency for Precision Cotton Weed Detection. Sensors 2025, 25, 1635.

- Zhang, Y.; Zhai, J.; Wang, L.; Song, B.; Chen, L. Recognition algorithm of agricultural diseases and insect pests based on PP-YOLO. Trans. Chin. Soc. Agric. Eng. 2024, 40, 80–87.

- Hu, Y.; Liu, G.; Chen, Z.; Liu, J.; Guo, J. Lightweight one-stage maize leaf disease detection model with knowledge distillation. Agriculture 2023, 13, 1664.

- Wei, H.B.; Zhang, D.J.; Du, G.M.; Xiao, W.F. Vegetable recognition algorithm based on improved YOLO v3. J. Zhengzhou Univ. (Eng. Sci.) 2020, 41, 7–12+31.

- He, Y.; Peng, Y.; Wei, C.; Liu, H. Automatic disease detection from strawberry leaf based on improved YOLOv8. Plants 2024, 13, 2556.

- Yuan, Y.; Fu, X.L.; Li, H.H. Potato Disease Recognition and Classification Based on Improved YOLOv8. J. Inn. Mong. Agric. Univ. (Nat. Sci. Ed.) 2024, 45, 56–65.

- Yang, F.; Yao, X.T. Lightweighted Wheat Leaf Diseases and Pests Detection Model Based on Improved YOLOv8. Smart Agric. 2024, 6, 147–157.

- Jiang, T.; Du, X.; Zhang, N.; Sun, X.; Li, X.; Tian, S.; Liang, Q. YOLOv8-GO: A Lightweight Model for Prompt Detection of Foliar Maize Diseases. Appl. Sci. 2024, 14, 10004.

- Xu, H.; Fu, L.; Li, J.; Lin, X.; Chen, L.; Zhong, F.; Hou, M. A method for analyzing the phenotypes of nonheading Chinese cabbage leaves based on deep learning and OpenCV phenotype extraction. Agronomy 2024, 14, 699.

- Li, B.; Fan, J. Rice pest classification based on YOLOv5. Jiangsu Agric. Sci. 2024, 52, 175–182.

- Zhang, L.; Bayin, T.; Zeng, Q. Early disease detection method for grapevine leaves based on StyleGAN2-ADA and improved YOLOv7. Trans. Chin. Soc. Agric. Mach. 2024, 55, 241–252.

- Han, X.; Xu, Y.; Feng, R.; Liu, T.; Bai, J.; Lan, Y. Early identification of crop diseases based on infrared thermography and improved YOLOv5. Trans. Chin. Soc. Agric. Mach. 2023, 54, 300–307+375. Available online: https://link.cnki.net/urlid/11.1964.S.20231016.1159.011 (accessed on 10 June 2025).

- Sekharamantry, P.K.; Melgani, F.; Malacarne, J. Deep learning-based apple detection with attention module and improved loss function in YOLO. Remote Sens. 2023, 15, 1516.

- Zhang, Q.L.; Yang, Y.B. SA-NET: Shuffle attention for deep convolutional neural networks. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA; pp. 2235–2239.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA; pp. 770–778.

- Sandler, M.; Howard, A.; Zhu, M.L.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Piscataway, NJ, USA; pp. 4510–4520.

- Yang, R.J.; Zhang, H.; Ye, J. Improved lightweight military aircraft detection algorithm for remote sensing images with YOLOv8n. Electron. Meas. Technol. 2025, 48, 154–165.

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

- Xu, H.; Zhang, X.; Shen, W.; Lin, Z.; Liu, S.; Jia, Q.; Li, H.; Zheng, J.; Zhong, F. Improved CSW-YOLO model for bitter melon phenotype detection. Plants 2024, 13, 3329.

- Liu, K.; Lin, S.L.; Shi, X.Y.; Wang, T. Few-shot wildlife detection based on multi-scale context extraction. Chin. J. Liq. Cryst. Disp. 2025, 40, 516–526.

- Chen, Y.; Xu, H.; Chang, P.; Huang, Y.; Zhong, F.; Jia, Q.; Chen, L.; Zhong, H.; Liu, S. CES-YOLOv8: Strawberry maturity detection based on the improved YOLOv8. Agronomy 2024, 14, 1353.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding box regression loss with dynamic focusing mechanism. arXiv 2023, arXiv:2301.10051.

- Wang, S.; Yao, L.; Xu, L.; Zhang, Y. An improved YOLOv7-Tiny method for the segmentation of images of vegetable fields. Agriculture 2024, 14, 856.

- Zhao, Y.; Wang, J.K.; Lin, Z.Y.; Li, Q. Steel surface defect detection algorithm based on improved YOLOv8n. Electron. Meas. Technol. 2024, 47, 191–198.

- Kumar, S.; Abdelhamid, A.A.; Zaki, T. Visualizing the unseen: Exploring GRAD-CAM for interpreting convolutional image classifiers. Full Length Artic. 2023, 4, 34–42.

- Hussain, T.; Shouno, H. Explainable deep learning approach for multi-class brain magnetic resonance imaging tumor classification and localization using gradient-weighted class activation mapping. Information 2023, 14, 642.

- Zuo, Z.; Gao, S.; Peng, H.; Liu, Y. Lightweight detection of broccoli heads in complex field environments based on LBDC-YOLO. Agronomy 2024, 14, 2359.

- Deng, W.; Chen, L.P.; Zhang, R.R.; Tang, Q.; Xu, G.; Li, L.L.; Xu, M. Review on Key Technologies for UAV Precision Agro-chemical Application. Agric. Eng. 2020, 10, 1–10.

- Sayed, M.; Brostow, G. Improved handling of motion blur in online object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA; pp. 1706–1716.

- Ashar, A.A.K.; Abrar, A.; Liu, J.A. Survey on Object Detection and Recognition for Blurred and Low-Quality Images: Handling, Deblurring, and Reconstruction. In Proceedings of the 2024 8th International Conference on Information System and Data Mining, Los Angeles, CA, USA, 24–26 June 2024; IEEE: Piscataway, NJ, USA; pp. 95–100.

| Model | Dataset | P (Baseline) | P (Improved) | R (Baseline) | R (Improved) |
|---|---|---|---|---|---|
| KTD-YOLOv8 | Strawberry Leaf Disease | 89.10% | 90.00% | 77.60% | 81.30% |
| YOLOv8-DCN | Potato Disease | 88.78% | 96.50% | 87.32% | 94.36% |
| YOLOv8-SS | Wheat Leaf Disease | 79.30% | 89.41% | 87.32% | 94.36% |
| YOLOv8-GO | Corn Leaf Disease | 87.00% | 90.00% | 76.60% | 77.40% |

| Parameter | Configuration |
|---|---|
| CPU | Intel(R) Xeon(R) Platinum 8481C |
| RAM | 80 GB |
| GPU | RTX 4090D |
| GPU memory | 24 GB |
| CUDA version | CUDA 11.3 |
| Operating system | Ubuntu 20.04 (64-bit) |
| Development environment | PyTorch 1.11.0, Python 3.8.18 |

| ID | Backbone | Attention | Loss | Precision | Recall | mAP50 | mAP50-95 | F1-Score |
|---|---|---|---|---|---|---|---|---|
| 1 | – | – | – | 93.10% | 91.60% | 96.90% | 93.40% | 92.34% |
| 2 | – | – | WIoUv3 | 93.90% | 93.40% | 97.30% | 93.70% | 93.65% |
| 3 | – | ShuffleAttention | – | 93.30% | 92.80% | 97.50% | 94.20% | 93.05% |
| 4 | LeYOLO-small | – | – | 94.10% | 93.80% | 97.80% | 95.60% | 93.95% |
| 5 | – | ShuffleAttention | WIoUv3 | 93.80% | 93.90% | 97.40% | 93.60% | 93.85% |
| 6 | LeYOLO-small | ShuffleAttention | – | 95.00% | 93.90% | 97.80% | 95.60% | 94.45% |
| 7 | LeYOLO-small | – | WIoUv3 | 94.10% | 93.90% | 97.80% | 95.60% | 94.00% |
| 8 | LeYOLO-small | ShuffleAttention | WIoUv3 | 95.30% | 94.30% | 98.10% | 95.60% | 94.80% |
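
The effect of each module can be read off the ablation table as percentage-point (pp) changes relative to the baseline (ID 1). The short script below only transcribes the table and computes those differences; it adds no new measurements. It shows, for example, that the LeYOLO-small backbone alone accounts for the full mAP50-95 gain (+2.2 pp), since every configuration that includes it reaches 95.60%.

```python
# (precision, recall, mAP50, mAP50-95, F1) in %, transcribed from the ablation table.
ABLATION = {
    1: ("baseline", (93.10, 91.60, 96.90, 93.40, 92.34)),
    2: ("WIoUv3", (93.90, 93.40, 97.30, 93.70, 93.65)),
    3: ("ShuffleAttention", (93.30, 92.80, 97.50, 94.20, 93.05)),
    4: ("LeYOLO-small", (94.10, 93.80, 97.80, 95.60, 93.95)),
    5: ("ShuffleAttention + WIoUv3", (93.80, 93.90, 97.40, 93.60, 93.85)),
    6: ("LeYOLO-small + ShuffleAttention", (95.00, 93.90, 97.80, 95.60, 94.45)),
    7: ("LeYOLO-small + WIoUv3", (94.10, 93.90, 97.80, 95.60, 94.00)),
    8: ("LeYOLO-small + ShuffleAttention + WIoUv3", (95.30, 94.30, 98.10, 95.60, 94.80)),
}
METRICS = ("P", "R", "mAP50", "mAP50-95", "F1")


def deltas_vs_baseline(table=ABLATION, baseline_id=1):
    """Percentage-point change of every metric relative to the baseline row."""
    base = table[baseline_id][1]
    return {
        row_id: tuple(round(v - b, 2) for v, b in zip(values, base))
        for row_id, (_, values) in table.items()
    }


if __name__ == "__main__":
    for row_id, diff in deltas_vs_baseline().items():
        print(row_id, ABLATION[row_id][0], dict(zip(METRICS, diff)))
```

For the full model (ID 8) this gives +2.2 pp precision, +2.7 pp recall, +1.2 pp mAP50, +2.2 pp mAP50-95 and +2.46 pp F1.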

| Model | Precision | Recall | mAP50 | mAP50-95 | F1-Score | Parameters | GFLOPs |
|---|---|---|---|---|---|---|---|
| YOLOv8n | 93.10% | 91.60% | 96.90% | 93.40% | 92.34% | 2,691,183 | 6.9 |
| YOLOv3-tiny | 87.10% | 91.60% | 94.20% | 82.30% | 89.29% | 9,521,594 | 14.3 |
| YOLOv5 | 92.40% | 92.80% | 96.70% | 91.90% | 92.60% | 2,182,639 | 5.8 |
| YOLOv5s | 94.90% | 93.70% | 97.50% | 94.40% | 94.30% | 7,815,551 | 18.7 |
| YOLOv6s | 94.20% | 92.80% | 97.10% | 95.70% | 93.49% | 15,977,119 | 42.8 |
| YOLOv6 | 91.20% | 92.20% | 96.10% | 93.50% | 91.70% | 4,155,519 | 11.5 |
| YOLOv10n | 93.20% | 93.50% | 96.90% | 94.00% | 93.35% | 2,696,366 | 8.2 |
| YOLOv8-LSW | 95.30% | 94.30% | 98.10% | 95.60% | 94.80% | 2,137,199 | 5.5 |
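
Relative to the YOLOv8n baseline in the comparison table, the reported figures imply the reductions and gains below. The script only re-derives them from the table values; it performs no new measurement.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return (before - after) / before * 100.0


# Values transcribed from the comparison table.
YOLOV8N = {"params": 2_691_183, "gflops": 6.9, "map50": 96.90, "map50_95": 93.40}
YOLOV8_LSW = {"params": 2_137_199, "gflops": 5.5, "map50": 98.10, "map50_95": 95.60}

if __name__ == "__main__":
    print(f"parameters: -{relative_reduction(YOLOV8N['params'], YOLOV8_LSW['params']):.1f}%")  # -20.6%
    print(f"GFLOPs:     -{relative_reduction(YOLOV8N['gflops'], YOLOV8_LSW['gflops']):.1f}%")  # -20.3%
    print(f"mAP50:      +{YOLOV8_LSW['map50'] - YOLOV8N['map50']:.1f} pp")  # +1.2 pp
    print(f"mAP50-95:   +{YOLOV8_LSW['map50_95'] - YOLOV8N['map50_95']:.1f} pp")  # +2.2 pp
```

In other words, YOLOv8-LSW uses about a fifth fewer parameters and GFLOPs than YOLOv8n while scoring higher on both mAP metrics.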

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, S.; Xu, H.; Deng, Y.; Cai, Y.; Wu, Y.; Zhong, X.; Zheng, J.; Lin, Z.; Ruan, M.; Chen, J.; et al. YOLOv8-LSW: A Lightweight Bitter Melon Leaf Disease Detection Model. Agriculture 2025, 15, 1281. https://doi.org/10.3390/agriculture15121281

Liu S, Xu H, Deng Y, Cai Y, Wu Y, Zhong X, Zheng J, Lin Z, Ruan M, Chen J, et al. YOLOv8-LSW: A Lightweight Bitter Melon Leaf Disease Detection Model. Agriculture. 2025; 15(12):1281. https://doi.org/10.3390/agriculture15121281

Chicago/Turabian Style: Liu, Shuang, Haobin Xu, Ying Deng, Yixin Cai, Yongjie Wu, Xiaohao Zhong, Jingyuan Zheng, Zhiqiang Lin, Miaohong Ruan, Jianqing Chen, et al. 2025. "YOLOv8-LSW: A Lightweight Bitter Melon Leaf Disease Detection Model" Agriculture 15, no. 12: 1281. https://doi.org/10.3390/agriculture15121281

APA Style: Liu, S., Xu, H., Deng, Y., Cai, Y., Wu, Y., Zhong, X., Zheng, J., Lin, Z., Ruan, M., Chen, J., Zhang, F., Li, H., & Zhong, F. (2025). YOLOv8-LSW: A Lightweight Bitter Melon Leaf Disease Detection Model. Agriculture, 15(12), 1281. https://doi.org/10.3390/agriculture15121281