Precision Weeding in Agriculture: A Comprehensive Review of Intelligent Laser Robots Leveraging Deep Learning Techniques

Abstract

1. Introduction

- (1) A content analysis method is provided to organize the literature and present the research process.

- (2) The key technologies of intelligent laser robots in the weed-control process are presented.

- (3) Challenges and open problems are analyzed, and emerging trends and future directions in intelligent laser robot weeding are identified.

2. Content Analysis Method and Research Process Design

2.1. Sample Extraction

2.2. Content Analysis Coding

2.3. Research Steps

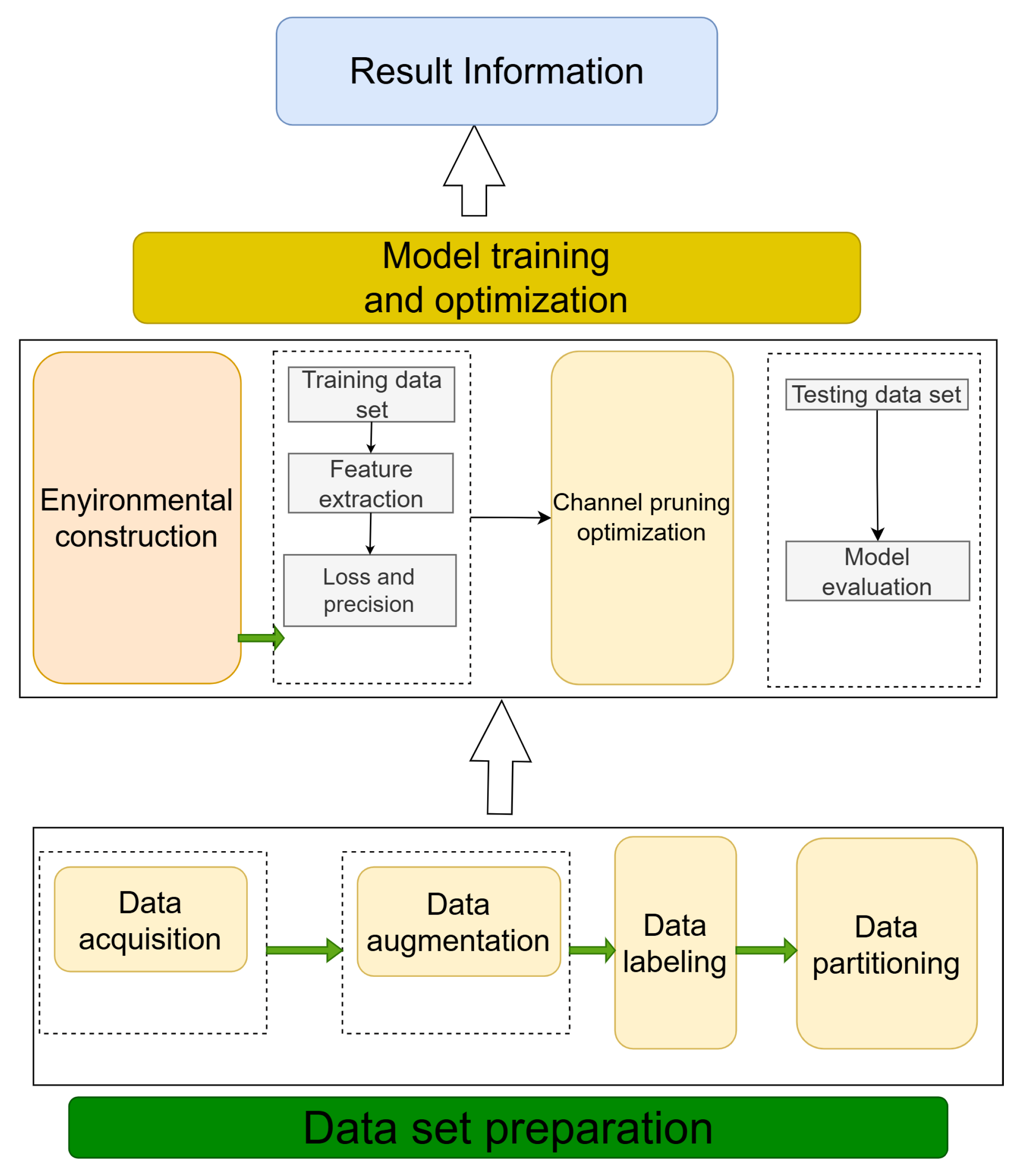

3. Weed-Control System

3.1. Perception Layer

3.1.1. USB Camera

3.1.2. Binocular Camera

3.2. Decision-Making Layer

3.3. Execution Layer

3.3.1. Laser Weeding Device—Working Principle

3.3.2. Laser Control Mechanism

3.3.3. Weeding Performance of Existing Laser Weeding Robots

3.3.4. Robot Body Structure

3.4. Weeding Process

3.4.1. Weed-Control Standards

3.4.2. Weed Target Detection

4. Deep Learning Algorithms

4.1. Introduction to Deep Learning Detection Algorithms

4.1.1. Convolutional Neural Network

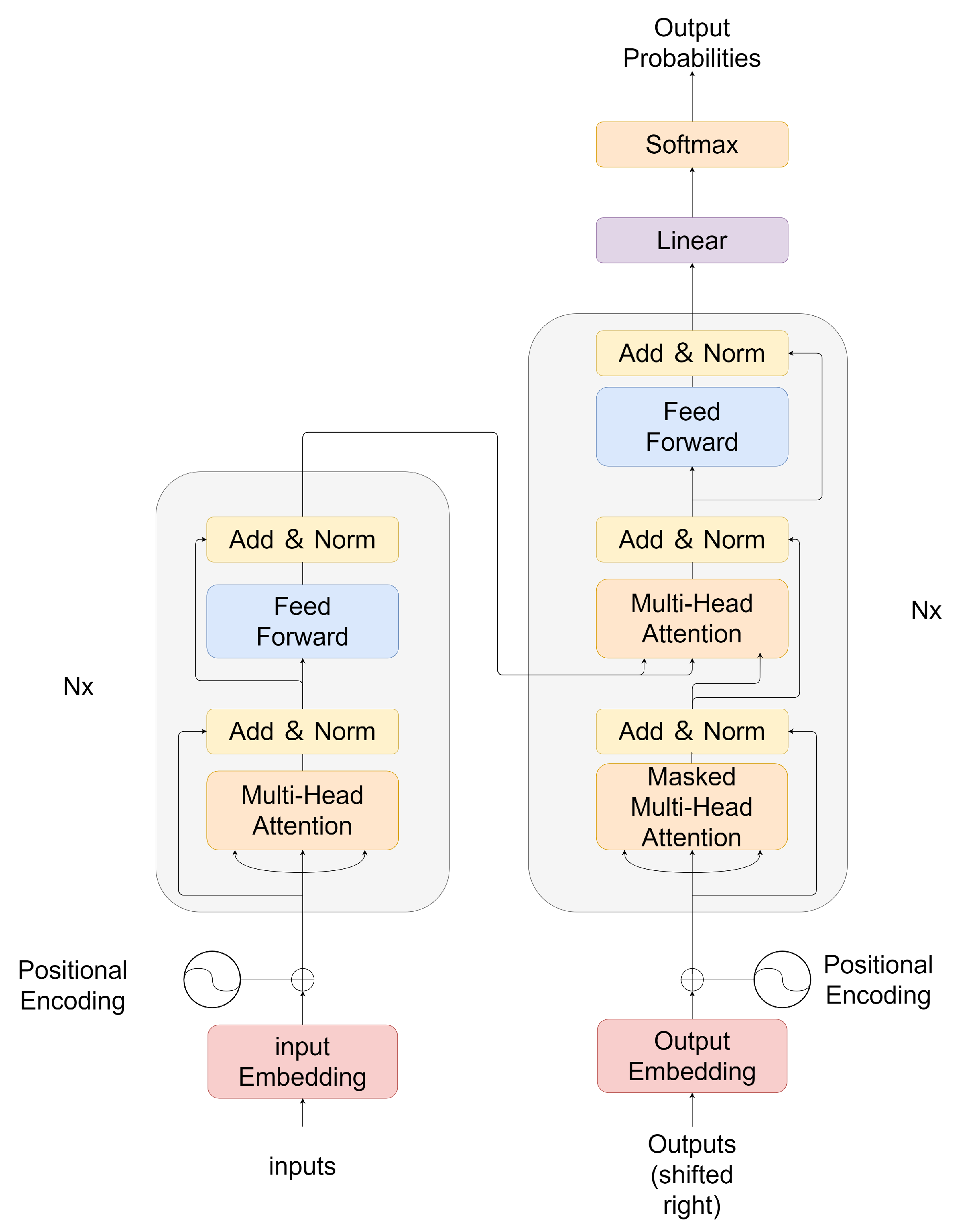

4.1.2. Transformer-Based Convolutional Neural Network

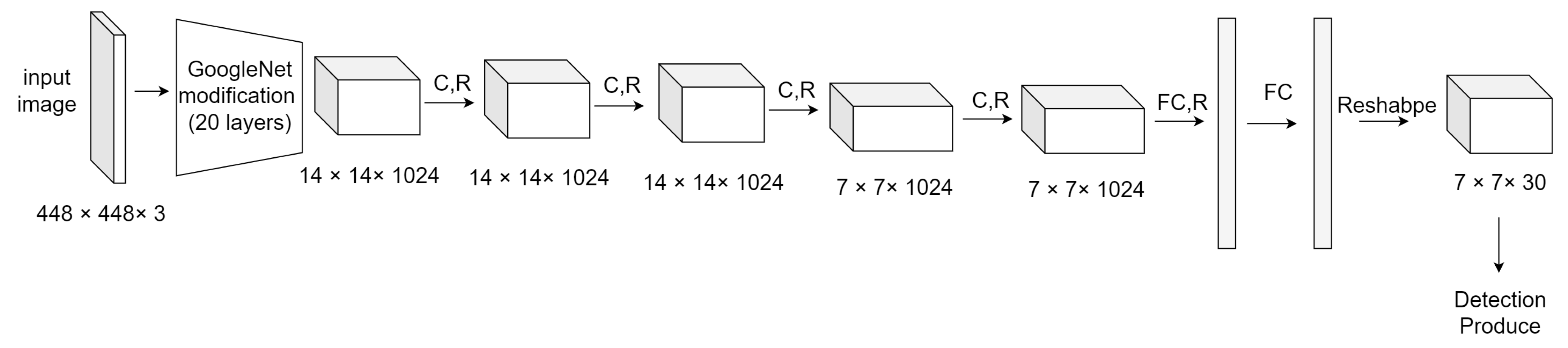

4.1.3. YOLO Target-Detection Algorithm

4.2. Other Object-Detection Algorithms Related to Deep Learning

4.3. Object-Detection Algorithm Evaluation Method

4.3.1. Comparative Analysis of Popular Deep Learning Algorithms

4.3.2. Evaluation Metrics for Deep Learning Algorithms

| Evaluation Metric | Meaning | Application Scenarios |
|---|---|---|
| Accuracy | The proportion of correct predictions among all samples, that is, the ratio of correctly predicted samples to the total number of samples. | A basic indicator of model performance that measures how well the model classifies the data overall. |
| Precision | The proportion of samples that are actually positive among all samples predicted to be positive. | Used when the reliability of the model’s positive predictions is the main concern. |
| Recall | The proportion of actually positive samples that the model correctly predicts as positive. | Measures the model’s ability to detect positive samples. |
| mean Average Precision (mAP) | A commonly used indicator in target-detection tasks: the mean of the average precision (AP) over multiple categories. | Evaluates the detection accuracy of the model across different target categories. |
| Intersection over Union (IoU) | The degree of overlap between two bounding boxes, computed as the ratio of their intersection to their union. | In object detection, measures how well the predicted bounding box matches the true bounding box, and thereby the localization accuracy of the model. |
| Frames Per Second (FPS) | The number of frames processed per second. | Reflects the processing speed of the model. |
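
The metrics in the table above can be written down in a few lines. The following is a minimal Python sketch, not code from any of the reviewed works: the function names, the `(x_min, y_min, x_max, y_max)` box layout, and the all-point interpolation used for AP are assumptions chosen for illustration.

```python
from typing import Sequence, Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Correct predictions divided by all samples."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


def precision(tp: int, fp: int) -> float:
    """True positives divided by everything predicted positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """True positives divided by everything actually positive."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(hits: Sequence[bool], num_ground_truth: int) -> float:
    """Area under the precision-recall curve for one class.

    `hits` marks each detection as a true positive or not, with
    detections already sorted by descending confidence.
    """
    if num_ground_truth <= 0 or not hits:
        return 0.0
    tp = fp = 0
    precisions, recalls = [], []
    for hit in hits:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Make precision non-increasing as recall grows (all-point interpolation).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP: the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class) if ap_per_class else 0.0


def frames_per_second(num_frames: int, elapsed_seconds: float) -> float:
    """Frames processed divided by wall-clock time."""
    return num_frames / elapsed_seconds if elapsed_seconds > 0 else 0.0
```

In a detection setting, a prediction is usually counted as a true positive when its IoU with a ground-truth box exceeds a chosen threshold, so `iou` feeds the `hits` list that `average_precision` consumes.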

4.4. Key Issues to Be Addressed for Deep Learning Detection Systems

4.4.1. Small Object-Detection Problem

4.4.2. The Balance Between Speed and Accuracy

5. Applications of Deep Learning Algorithms in Weed Control

5.1. Application of YOLO-Based Target-Detection Algorithm in Weed Control

5.2. Application of Object-Detection Algorithm Based on Faster R-CNN in Weed Control

5.3. Application of Object-Detection Algorithm Based on Convolutional Neural Network in Weed Control

5.4. Application of Other Detection Algorithms in Weed Control

6. Future Trends

6.1. Weeding System Based on Multimodal Data Fusion

6.2. Intelligent Decision Making

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ahmadi, A.; Halstead, M.; Smitt, C.; McCool, C. BonnBot-I Plus: A Bio-Diversity Aware Precise Weed Management Robotic Platform. IEEE Robot. Autom. Lett. 2024, 9, 6560–6567. [Google Scholar] [CrossRef]

- Alcantara, A.; Magwili, G.V. CROPBot: Customized Rigid Organic Plantation Robot. In Proceedings of the 2022 International Conference on Emerging Technologies in Electronics, Computing and Communication (ICETECC), Jamshoro, Sindh, Pakistan, 7–9 December 2022; pp. 1–7. [Google Scholar]

- Andreasen, C.; Scholle, K.; Saberi, M. Laser Weeding With Small Autonomous Vehicles: Friends or Foes? Front. Agron. 2022, 4, 841086. [Google Scholar] [CrossRef]

- Krupanek, J.; de Santos, P.G.; Emmi, L.; Wollweber, M.; Sandmann, H.; Scholle, K.; Di Minh Tran, D.; Schouteten, J.J.; Andreasen, C. Environmental performance of an autonomous laser weeding robot—A case study. Int. J. Life Cycle Assess. 2024, 29, 1021–1052. [Google Scholar] [CrossRef]

- Arakeri, M.P.; Vijaya Kumar, B.P.; Barsaiya, S.; Sairam, H.V. Computer vision based robotic weed control system for precision agriculture. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 1201–1205. [Google Scholar]

- Aoki, T.; Inada, S.; Shimizu, D. Development of a Snake Robot to Weed in Rice Paddy Field and Trial of Field Test. In Proceedings of the 2024 IEEE/SICE International Symposium on System Integration (SII), Ha Long, Vietnam, 8–11 January 2024; pp. 1537–1542. [Google Scholar]

- Zhao, P.; Chen, J.; Li, J.; Ning, J.; Chang, Y.; Yang, S. Design and Testing of an autonomous laser weeding robot for strawberry fields based on DIN-LW-YOLO. Comput. Electron. Agric. 2025, 229, 109808. [Google Scholar] [CrossRef]

- Banu, E.A.; Chidambaranathan, S.; Jose, N.N.; Kadiri, P.; Abed, R.E.; Al-Hilali, A. A System to Track the Behaviour or Pattern of Mobile Robot Through RNN Technique. In Proceedings of the 2024 4th International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 14–15 May 2024; pp. 2003–2005. [Google Scholar]

- Fossum, E.R. CMOS image sensors: Electronic camera-on-a-chip. IEEE Trans. Electron. Dev. 1997, 44, 1689–1698. [Google Scholar] [CrossRef]

- Litwiller, D. CCD vs. CMOS. Photonic Spectra 2001, 35, 154–158. [Google Scholar]

- Shung, K.K.; Smith, M.; Tsui, B.M. Principles of Medical Imaging; Academic Press: Cambridge, MA, USA, 2012. [Google Scholar]

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Hu, X.; Chang, Y.; Qin, H.; Xiao, J.; Cheng, H. Binocular ranging method based on improved YOLOv8 and GMM image point set matching. J. Graph. 2024, 45, 714. [Google Scholar]

- Jiang, M.; Tian, Z.; Yu, C.; Shi, Y.; Liu, L.; Peng, T.; Hu, X.; Yu, F. Intelligent 3D garment system of the human body based on deep spiking neural network. Virtual Real. Intell. Hardw. 2024, 6, 43–55. [Google Scholar] [CrossRef]

- Moldvai, L.; Mesterhazi, P.A.; Teschner, G.; Nyeeki, A. Weed Detection and Classification with Computer Vision Using a Limited Image Dataset. Appl. Sci. 2024, 14, 4839. [Google Scholar] [CrossRef]

- Verma, A.; Al-Jawahry, H.M.; Alsailawi, H.A.; Kirubanantham, P.; SherinEliyas; Saadoun, O.N.; Manalmoradkarim; Zaidan, D.T. The Gm System for the use of AGMR System. In Proceedings of the 2024 4th International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 14–15 May 2024; pp. 1114–1119. [Google Scholar]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, Sardinia, Italy, 13–15 May 2010; pp. 249–256. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. arXiv 2019, arXiv:1904.01355. [Google Scholar]

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578. [Google Scholar]

- Sirikunkitti, S.; Chongcharoen, K.; Yoongsuntia, P.; Ratanavis, A. Progress in a Development of a Laser-Based Weed Control System. In Proceedings of the 2019 Research, Invention, and Innovation Congress (RI2C), Bangkok, Thailand, 11–13 December 2019; pp. 1–4. [Google Scholar]

- Zhang, H.; Cao, D.; Zhou, W.; Currie, K. Laser and optical radiation weed control: A critical review. Precis. Agric. 2024, 25, 2033–2057. [Google Scholar] [CrossRef]

- Kashyap, P.K.; Kumar, S.; Jaiswal, A.; Prasad, M.; Gandomi, A.H. Towards precision agriculture: IoT-enabled intelligent irrigation systems using deep learning neural network. IEEE Sens. J. 2021, 21, 17479–17491. [Google Scholar] [CrossRef]

- Yaseen, M.U.; Long, J.M. Laser Weeding Technology in Cropping Systems: A Comprehensive Review. Agronomy 2024, 14, 2253. [Google Scholar] [CrossRef]

- Andreasen, C.; Vlassi, E.; Salehan, N. Laser weeding of common weed species. Front. Plant Sci. 2024, 15, 1375164. [Google Scholar] [CrossRef]

- Ji, Y. Theoretical and Experimental Research on Weed Removal by 1064 nm Laser. Master’s Thesis, Changchun University of Science and Technology, Changchun, China, 2024. [Google Scholar]

- Lameski, P.; Zdravevski, E.; Kulakov, A. Review of automated weed control approaches: An environmental impact perspective. In Proceedings of the ICT Innovations 2018. Engineering and Life Sciences: 10th International Conference, ICT Innovations 2018, Ohrid, Macedonia, 17–19 September 2018; Proceedings 10. Springer: Berlin/Heidelberg, Germany, 2018; pp. 132–147. [Google Scholar]

- Ju, M.R.; Luo, H.B.; Wang, Z.B.; He, M.; Chang, Z.; Hui, B. Improved YOLOV3 Algorithm and Its Application in Small Target Detection. Acta Opt. Sin. 2019, 39, 0715004. [Google Scholar]

- Xu, Y.; Jiang, M.; Li, Y.; Wu, Y.; Lu, G. Fruit target detection based on improved YOLO and NMS. J. Electron. Meas. Instrum. 2023, 36, 114–123. [Google Scholar]

- Li, C.Y.; Yao, J.M.; Lin, Z.X.; Yan, Q.; Fan, B.Q. Object Detection Method Based on Improved YOLO Light weight Network. Laser Optoelectron. Prog. 2020, 57, 141003. [Google Scholar]

- Jia, S.; Dabo, G.; Yang, T. Real Time Object Detection Based on Improved YOLOv3 Network. Laser Optoelectron. Prog. 2020, 57, 221505. [Google Scholar]

- Chen, X.; Gupta, A. An implementation of faster rcnn with study for region sampling. arXiv 2017, arXiv:1702.02138. [Google Scholar]

- Johnson, J.W. Adapting mask-rcnn for automatic nucleus segmentation. arXiv 2018, arXiv:1805.00500. [Google Scholar]

- Pei, W.; Xu, Y.; Zhu, Y.; Wang, P.; Lu, M.; Li, F. The Target Detection Method of Aerial Photography Images with Improved SSD. J. Softw. 2019, 30, 738–758. [Google Scholar]

- Zhen, Z.; Li, M.; Liu, H.; Ma, J. Improved SSD algorithm and its application in target detection. Comput. Appl. Softw. 2021, 38, 226–231. [Google Scholar]

- Wu, T.; Zhang, Z.; Liu, Y.; Pei, W.; Chen, H. A Lightweight small target detection algorithm based on improved SSD. Infrared Laser Eng. 2018, 47, 37–43. [Google Scholar] [CrossRef]

- Ali, M.A.M.; Aly, T.; Raslan, A.T.; Gheith, M.; Amin, E.A. Advancing Crowd Object Detection: A Review of YOLO, CNN and ViTs Hybrid Approach. J. Intell. Learn. Syst. Appl. 2024, 16, 175–221. [Google Scholar] [CrossRef]

- Rashid, Y.; Bhat, J.I. OlapGN: A multi-layered graph convolution network-based model for locating influential nodes in graph networks. Knowl.-Based Syst. 2024, 283, 111163. [Google Scholar] [CrossRef]

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vision 2020, 128, 261–318. [Google Scholar] [CrossRef]

- Zhao, L.; Zhang, Z. A improved pooling method for convolutional neural networks. Sci. Rep. 2024, 14, 1589. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Katona, T.; Tóth, G.; Petró, M.; Harangi, B. Developing New Fully Connected Layers for Convolutional Neural Networks with Hyperparameter Optimization for Improved Multi-Label Image Classification. Mathematics 2024, 12, 806. [Google Scholar] [CrossRef]

- Dalm, S.; Offergeld, J.; Ahmad, N.; van Gerven, M. Efficient deep learning with decorrelated backpropagation. arXiv 2024, arXiv:2405.02385. [Google Scholar]

- Qin, H.; Wang, J.; Mao, X.; Zhao, Z.; Gao, X.; Lu, W. An improved faster R-CNN method for landslide detection in remote sensing images. J. Geovis. Spat. Anal. 2024, 8, 2. [Google Scholar] [CrossRef]

- Yao, X.; Chen, H.; Li, Y.; Sun, J.; Wei, J. Lightweight image super-resolution based on stepwise feedback mechanism and multi-feature maps fusion. Multimed. Syst. 2024, 30, 39. [Google Scholar] [CrossRef]

- Zhang, J. Rpn: Reconciled polynomial network towards unifying pgms, kernel svms, mlp and kan. arXiv 2024, arXiv:2407.04819. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Jiao, L.; Abdullah, M.I. YOLO series algorithms in object detection of unmanned aerial vehicles: A survey. Serv. Oriented. Comput. Appl. 2024, 18, 269–298. [Google Scholar] [CrossRef]

- Parfenov, A.; Parfenov, D. Creating an Image Recognition Model to Optimize Technological Processes. In Proceedings of the 2024 IEEE International Multi-Conference on Engineering, Computer and Information Sciences (SIBIRCON), Novosibirsk, Russia, 30 September–2 October 2024; pp. 421–424. [Google Scholar]

- Mohammed, K. Reviewing Mask R-CNN: An In-depth Analysis of Models and Applications. Easychair Prepr. 2024, 2024, 11838. [Google Scholar]

- Michael, J.J.; Thenmozhi, M. Survey on Weeding Tools, Equipment, AI-IoT Robots with Recent Advancements. In Proceedings of the 2023 International Conference on Integration of Computational Intelligent System (ICICIS), Pune, India, 1–4 November 2023; pp. 1–6. [Google Scholar]

- Islam, S.; Elmekki, H.; Elsebai, A.; Bentahar, J.; Drawel, N.; Rjoub, G.; Pedrycz, W. A comprehensive survey on applications of transformers for deep learning tasks. Expert Syst. Appl. 2024, 241, 122666. [Google Scholar] [CrossRef]

- Tang, Y.; Wang, Y.; Guo, J.; Tu, Z.; Han, K.; Hu, H.; Tao, D. A survey on transformer compression. arXiv 2024, arXiv:2402.05964. [Google Scholar]

- Han, X.; Chang, J.; Wang, K. You only look once: Unified, real-time object detection. Procedia Comput. Sci. 2021, 183, 61–72. [Google Scholar] [CrossRef]

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276. [Google Scholar] [CrossRef]

- Savinainen, O. Uncertainty Estimation and Confidence Calibration in YOLO5Face. Master’s Thesis, Department of Electrical Engineering, Linköping University, Linköping, Sweden, 2024. Available online: https://www.diva-portal.org/smash/get/diva2:1871866/FULLTEXT01.pdf (accessed on 26 May 2025).

- Russell, M.; Fischaber, S. OpenCV based road sign recognition on Zynq. In Proceedings of the 2013 11th IEEE International Conference on Industrial Informatics (INDIN), Bochum, Germany, 29–31 July 2013; pp. 596–601. [Google Scholar]

- Chandrika, R.R.; Vanitha, K.; Thahaseen, A.; Chandramma, R.; Neerugatti, V.; Mahesh, T. Number Plate Recognition Using OpenCV. In Proceedings of the 2024 International Conference on Emerging Smart Computing and Informatics (ESCI), Pune, India, 5–7 March 2024; pp. 1–4. [Google Scholar]

- Mentzingen, H.; Antonio, N.; Lobo, V. Joining metadata and textual features to advise administrative courts decisions: A cascading classifier approach. Artif. Intell. Law 2024, 32, 201–230. [Google Scholar] [CrossRef]

- Lai, Z.; Liang, G.; Zhou, J.; Kong, H.; Lu, Y. A joint learning framework for optimal feature extraction and multi-class SVM. Inf. Sci. 2024, 671, 120656. [Google Scholar] [CrossRef]

- Chandan, G.; Jain, A.; Jain, H. Real time object detection and tracking using Deep Learning and OpenCV. In Proceedings of the 2018 International Conference on inventive research in computing applications (ICIRCA), Coimbatore, India, 11–12 July 2018; pp. 1305–1308. [Google Scholar]

- Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868. [Google Scholar] [CrossRef]

- Wu, X.; Sahoo, D.; Hoi, S.C. Recent advances in deep learning for object detection. Neurocomputing 2020, 396, 39–64. [Google Scholar] [CrossRef]

- Hui, Y.; You, S.; Hu, X.; Yang, P.; Zhao, J. SEB-YOLO: An Improved YOLOv5 Model for Remote Sensing Small Target Detection. Sensors 2024, 24, 2193. [Google Scholar] [CrossRef]

- Hanley, J.A.; McNeil, B.J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 1982, 143, 29–36. [Google Scholar] [CrossRef] [PubMed]

- Rosebrock, A. Intersection over Union (IoU) for Object Detection. 2016. Available online: https://www.pyimagesearch.com/2016/11/07/intersection-over-union-iou-for-object-detection/ (accessed on 18 May 2021).

- Rane, N.; Kaya, O.; Rane, J. Advancing the Sustainable Development Goals (SDGs) through artificial intelligence, machine learning, and deep learning. Artif. Intell. Mach. Learn. Deep. Learn. Sustain. Ind. 2024, 5, 2–74. [Google Scholar]

- Zhang, W.; Sun, H.; Chen, X.; Li, X.; Yao, L.; Dong, H. Research on weed detection in vegetable seedling fields based on the improved YOLOv5 intelligent weeding robot. J. Graph. 2023, 44, 346. [Google Scholar]

- Yang, Z.; Li, H. Improved weed recognition algorithm based on YOLOv5-SPD. J. Shanghai Univ. Eng. Sci. Gongcheng Jishu Daxue Xuebao 2024, 38, 75–82. [Google Scholar]

- Jiawei, H.; Xia, L.; Fangtao, D.; Mengchao, H.; Xiwang, D.; Tengfei, T. Research on weed recognition and positioning method of laser weeding robot based on deep learning. J. Tianjin Univ. Technol. 2024, 40, 1–9. [Google Scholar]

- Chu, B.; Shao, R.; Fang, Y.; Lu, Y. Weed Detection Method Based on Improved YOLOv8 with Neck-Slim. In Proceedings of the 2023 China Automation Congress (CAC), Chongqing, China, 17–19 November 2023; pp. 9378–9382. [Google Scholar]

- Tesema, S.N.; Bourennane, E.B. Denseyolo: Yet faster, lighter and more accurate yolo. In Proceedings of the 2020 11th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 4–7 November 2020; pp. 0534–0539. [Google Scholar]

- Chen, H.; Jin, H.; Lv, S. YOLO-DSD: A YOLO-Based Detector Optimized for Better Balance between Accuracy, Deployability and Inference Time in Optical Remote Sensing Object Detection. Appl. Sci. 2022, 12, 7622. [Google Scholar] [CrossRef]

- Hammad, A.; Moretti, S.; Nojiri, M. Multi-scale cross-attention transformer encoder for event classification. J. High Energy Phys. 2024, 2024, 144. [Google Scholar] [CrossRef]

- Li, R.; Li, Y.; Qin, W.; Abbas, A.; Li, S.; Ji, R.; Wu, Y.; He, Y.; Yang, J. Lightweight Network for Corn Leaf Disease Identification Based on Improved YOLO v8s. Agriculture 2024, 14, 220. [Google Scholar] [CrossRef]

- Min, X.; Zhou, W.; Hu, R.; Wu, Y.; Pang, Y.; Yi, J. Lwuavdet: A lightweight uav object detection network on edge devices. IEEE Internet Things J. 2024, 11, 24013–24023. [Google Scholar] [CrossRef]

- Selmy, H.A.; Mohamed, H.K.; Medhat, W. Big data analytics deep learning techniques and applications: A survey. Inf. Syst. 2024, 120, 102318. [Google Scholar] [CrossRef]

- Raja, R.; Nguyen, T.T.; Slaughter, D.C.; Fennimore, S.A. Real-time weed-crop classification and localisation technique for robotic weed control in lettuce. Biosyst. Eng. 2020, 192, 257–274. [Google Scholar] [CrossRef]

- Qin, L.; Xu, Z.; Wang, W.; Wu, X. YOLOv7-Based Intelligent Weed Detection and Laser Weeding System Research: Targeting Veronica didyma in Winter Rapeseed Fields. Agriculture 2024, 14, 910. [Google Scholar] [CrossRef]

- Rakhmatulin, I.; Andreasen, C. A Concept of a Compact and Inexpensive Device for Controlling Weeds with Laser Beams. Agronomy 2020, 10, 1616. [Google Scholar] [CrossRef]

- Yu, Z.; He, X.; Qi, P.; Wang, Z.; Liu, L.; Han, L.; Huang, Z.; Wang, C. A Static Laser Weeding Device and System Based on Fiber Laser: Development, Experimentation, and Evaluation. Agronomy 2024, 14, 1426. [Google Scholar] [CrossRef]

- Xiong, Y.; Ge, Y.; Liang, Y.; Blackmore, S. Development of a prototype robot and fast path-planning algorithm for static laser weeding. Comput. Electron. Agric. 2017, 142, 494–503. [Google Scholar] [CrossRef]

- Chen, B.; Tojo, S.; Watanabe, K. Machine Vision for a Micro Weeding Robot in a Paddy Field. Biosyst. Eng. 2003, 85, 393–404. [Google Scholar] [CrossRef]

- Coleman, G.; Betters, C.; Squires, C.; Leon-Saval, S.; Walsh, M. Low Energy Laser Treatments Control Annual Ryegrass (Lolium rigidum). Front. Agron. 2021, 2, 601542. [Google Scholar] [CrossRef]

- Wangwang, W.; Zhenyang, G.; Yingjie, Y.; Huaifeng, Y.; Haoran, Z. Research on the application of laser weed control technology in upland rice fields. Agric. Eng. 2013, 3, 5–7. [Google Scholar]

- Kim, G.H.; Kim, S.C.; Hong, Y.K.; Han, K.S.; Lee, S.G. A robot platform for unmanned weeding in a paddy field using sensor fusion. In Proceedings of the 2012 IEEE International Conference on Automation Science and Engineering (CASE), Seoul, Republic of Korea, 20–24 August 2012; pp. 904–907. [Google Scholar]

- Kameyama, K.; Umeda, Y.; Hashimoto, Y. Simulation and experiment study for the navigation of the small autonomous weeding robot in paddy fields. In Proceedings of the Society of Instrument and Control Engineers-SICE, Nagoya, Japan, 14–17 September 2013; pp. 1612–1617. [Google Scholar]

- Zhu, H.; Zhang, Y.; Mu, D.; Bai, L.; Zhuang, H.; Li, H. YOLOX-based blue laser weeding robot in corn field. Front. Plant Sci. 2022, 13, 1017803. [Google Scholar] [CrossRef]

- Xingye, M.; Bing, Y.; Jiayi, R.; Zhouchao, L.; He, Y. Intelligent laser weeding device based on STM32 microcontroller. J. Tianjin Univ. Technol. 2023, 39, 1–9. [Google Scholar]

- Danlei, M. Research on the Actuator and Recognition Algorithm of Laser Weeding Robot. Master’s Thesis, Kunming University of Science and Technology, Kunming, China, 2022. [Google Scholar]

- Chen, D.; Xiangping, F.; Yongke, L.; Shihao, Z.; Qin, S.; Junjie, Z. Research on cotton seedling and weed recognition method in Xinjiang based on improved YOLOv5. Comput. Digit. Eng. 2023, 51, 1144–1149. [Google Scholar]

- Panboonyuen, T.; Thongbai, S.; Wongweeranimit, W.; Santitamnont, P.; Suphan, K.; Charoenphon, C. Object detection of road assets using transformer-based YOLOX with feature pyramid decoder on thai highway panorama. Information 2021, 13, 5. [Google Scholar] [CrossRef]

- Debiao, M.; Dengyong, T.; Pan, L.; Yun, W.; Mingyu, H. Intelligent perception and precise control system design of laser weeding robot. China Sci. Technol. Inf. 2023, 29, 81–85. [Google Scholar]

- Maram, B.; Das, S.; Daniya, T.; Cristin, R. A Framework for Weed Detection in Agricultural Fields Using Image Processing and Machine Learning Algorithms. In Proceedings of the 2022 International Conference on Intelligent Controller and Computing for Smart Power (ICICCSP), Hyderabad, India, 21–23 July 2022; pp. 1–6. [Google Scholar]

- Ge, Z.Y.; Wu, W.W.; Yu, Y.J.; Zhang, R.Q. Design of mechanical arm for laser weeding robot. Appl. Mech. Mater. 2013, 347, 834–838. [Google Scholar] [CrossRef]

- Wangwang, W. Research on the Actuator of Laser Weeding Robot. Ph.D. Thesis, Kunming University of Science and Technology, Kunming, China, 2014. [Google Scholar]

- Andreasen, C.; Vlassi, E.; Salehan, N. Laser weeding: Opportunities and challenges for couch grass (Elymus repens (L.) Gould) control. Sci. Rep. 2024, 14, 11173. [Google Scholar] [CrossRef]

- Sethia, G.; Guragol, H.K.S.; Sandhya, S.; Shruthi, J.; Rashmi, N. Automated Computer Vision based Weed Removal Bot. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020; pp. 1–6. [Google Scholar]

- Fatima, H.S.; Ul Hassan, I.; Hasan, S.; Khurram, M.; Stricker, D.; Afzal, M.Z. Formation of a Lightweight, Deep Learning-Based Weed Detection System for a Commercial Autonomous Laser Weeding Robot. Appl. Sci. 2023, 13, 3997. [Google Scholar] [CrossRef]

- Dhinesh, S.; Nagarajan, P.; Raghunath, M.; Sundar, S.; Dhanushree, N.; Pugazharasu, S. AI Based Weed Locating and Deweeding using Agri-Bot. In Proceedings of the 2023 Third International Conference on Smart Technologies, Communication and Robotics (STCR), Suryamangalam, India, 9–10 December 2023; Volume 1, pp. 1–6. [Google Scholar]

- Raffik, R.; Mayukha, S.; Hemchander, J.; Abishek, D.; Tharun, R.; Kumar, S.D. Autonomous weeding robot for organic farming fields. In Proceedings of the 2021 International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA), Coimbatore, India, 8–9 October 2021; pp. 1–4. [Google Scholar]

- Patnaik, A.; Narayanamoorthi, R. Weed removal in cultivated field by autonomous robot using LABVIEW. In Proceedings of the 2015 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 19–20 March 2015; pp. 1–5. [Google Scholar]

- Zhang, H.; Zhong, J.X.; Zhou, W. Precision optical weed removal evaluation with laser. In Proceedings of the CLEO: Applications and Technology, San Jose, CA, USA, 7–12 May 2023; Optica Publishing Group: Washington, DC, USA, 2023; p. JW2A.145. [Google Scholar]

- Jian, W.; Yuguang, Q. Farmland grass seedling detection method based on improved YOLO v5. Jiangsu Agric. Sci. 2024, 52, 197–204. [Google Scholar]

- Zatari, A.; Hoor, B.; Nasereddin, N. Intelligent Weeding Robot Using Deep-Learning. 2022. Available online: https://scholar.ppu.edu/handle/123456789/8955 (accessed on 1 June 2022).

- Zhang, J.L.; Su, W.H.; Zhang, H.Y.; Peng, Y. SE-YOLOv5x: An Optimized Model Based on Transfer Learning and Visual Attention Mechanism for Identifying and Localizing Weeds and Vegetables. Agronomy 2022, 12, 2061. [Google Scholar] [CrossRef]

- Khalid, N.; Elkhiri, H.; Oumaima, E.; ElFahsi, N.; Zahra, Z.F.; Abdellatif, K. Revolutionizing Weed Detection in Agriculture through the Integration of IoT, Big Data, and Deep Learning with Robotic Technology. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Canary Islands, Spain, 19–21 July 2023. [Google Scholar]

- Wang, Z.; Yang, Z.; Song, X.; Zhang, H.; Sun, B.; Zhai, J.; Yang, S.; Xie, Y.; Liang, P. Raman spectrum model transfer method based on Cycle-GAN. Spectrochim. Acta A 2024, 304, 123416. [Google Scholar] [CrossRef]

- Aravind, R.; Daman, M.; Kariyappa, B.S. Design and development of automatic weed detection and smart herbicide sprayer robot. In Proceedings of the 2015 IEEE Recent Advances in Intelligent Computational Systems (RAICS), Trivandrum, India, 10–12 December 2015; pp. 257–261. [Google Scholar]

- Qiao, H.; Yang, X.; Liang, Z.; Liu, Y.; Ge, Z.; Zhou, J. A Method for Extracting Joints on Mountain Tunnel Faces Based on Mask R-CNN Image Segmentation Algorithm. Appl. Sci. 2024, 14, 6403. [Google Scholar] [CrossRef]

| Layer | Description | Methods |
|---|---|---|
| Perception Layer | Obtains image data of fields and other areas as the raw material for subsequent weed identification. | Binocular camera, USB camera |
| Decision Layer | Analyzes and processes the image data transmitted from the perception layer to identify the location and characteristics of weeds. | BP, CNN, ANN, ResNet, GoogleNet, Transformer, YOLO |
| Execution Layer | Emits lasers in a targeted manner, based on the weed information identified by the decision layer, to achieve precise weeding. | Semiconductor laser, carbon dioxide laser |
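
The hand-off between the three layers in the table above can be sketched as a single control cycle. This is an illustrative Python sketch only: `Detection`, `run_weeding_cycle`, the callables passed in, and the confidence threshold are hypothetical names and values, not an interface taken from any reviewed robot.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class Detection:
    """One object reported by the decision layer (hypothetical structure)."""
    label: str                      # e.g. "weed" or "crop"
    confidence: float               # detector score in [0, 1]
    center_xy: Tuple[float, float]  # target point in image coordinates


def run_weeding_cycle(
    capture: Callable[[], Any],                       # perception layer
    detect: Callable[[Any], List[Detection]],         # decision layer
    fire_laser: Callable[[Tuple[float, float]], None],  # execution layer
    min_confidence: float = 0.5,                      # assumed threshold
) -> int:
    """Run one perceive -> decide -> execute cycle; return targets treated."""
    frame = capture()
    detections = detect(frame)
    # Only confident weed detections are passed on, so crops are never targeted.
    targets = [
        d for d in detections
        if d.label == "weed" and d.confidence >= min_confidence
    ]
    for target in targets:
        fire_laser(target.center_xy)
    return len(targets)
```

Passing the layers in as callables keeps the sketch independent of any particular camera, detector, or laser driver; a real system would also need a calibration step that maps image coordinates to the laser's aiming coordinates.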

| Model | Deep Learning Technology | Method | Advantage |
|---|---|---|---|
| YOLO + ResNet [29] | YOLO | Add two residual units to the second block; modify six DBL units before detection layer | Improve feature reuse, enhance small target understanding and accuracy |
| YOLO + SPP5 [30] | YOLO | Design SPP5 module with refined pooling kernels; create YOLOv4-SPP2-5 model | Replace first SPP of YOLOv4 with SPP5, add second SPP for multi-scale feature capture |
| YOLO + DenseNet [31] | YOLO | Merge channels across layers; add DenseNet layer | Reduce calculations, speed up training, optimize resource use |
| YOLO Network Branch [32] | YOLO | Add network branch, adjust anchor box, filter samples by mask | Balance positive and negative samples, improve sample learning |
| Faster R-CNN [33] | CNN | Migrate Faster RCNN to TensorFlow, simplify | Optimize training and testing speeds on COCO dataset |
| Mask R-CNN [34] | CNN | Replace ROI-Pooling with ROIAlign, add FCN head | Create accurate masks, avoid feature misalignment |
| SSD + Anti-residual Module [35] | SSD | Use a series of convolutions and sum layer | Enhance image perception, improve detection accuracy |
| SSD + Hierarchical feature fusion [36] | SSD | Sum and concatenate hole convolution outputs | Utilize feature-scale differences, complement features |
| Efnet-1 [37] | EfficientNet | Comprise normal and MB convolution modules, connect to classifier | Extract multi-scale features, enhance feature representation |

| Key Issues | Solutions | Reference |
|---|---|---|
| Small object-detection problem | (1) Introduce the Convolutional Block Attention Module (CBAM) into the backbone feature-extraction network of the YOLOv5 target-detection algorithm and add the Transformer module. | [69] |
| | (2) Combine the collaborative attention (CA) and the receptive field block (RFB) module to improve the backbone network, introduce the CA attention mechanism, use the CARAFE upsampling method, and adopt WIoU v3 to replace the CIoU loss function. | [70] |
| | (3) Optimize the YOLOX algorithm by adding lightweight attention modules, adding deconvolution layers, and using GIoU instead of IoU, to improve detection accuracy, enhance the extraction of small-size features, and improve the accuracy of the predicted box position. | [71] |
| The balance between speed and accuracy | (1) Optimize the Neck layer of YOLOv8 using GSConv and VoV-GSCSP to improve the accuracy and inference speed of the model. | [72] |
| | (2) Reshape the subsequent layers so that the new output tensor corresponds to a different pixel grid cell size instead of the 32 × 32 used in YOLOv2. Add two blocks, each consisting of a convolutional layer, a batch normalization layer, and a leaky ReLU activation layer, after the reshape, and finally add an output convolutional layer. | [73] |
| | (3) Replace the CSP Block in the YOLOv4 backbone network CSP DarkNet with a new feature-extraction module, the DenseRes Block. It consists of several series residual structures and shortcut connections with the same topology, which extract features better while reducing the amount of calculation and the inference time. | [74] |
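
One of the solutions listed above replaces IoU with GIoU in the box-regression objective. The sketch below shows the standard GIoU definition (IoU minus the fraction of the smallest enclosing box not covered by the union) in plain Python. It is a generic illustration, not the exact variant used in the cited work; the function names and the `(x_min, y_min, x_max, y_max)` box layout are assumptions.

```python
from typing import Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def giou(a: Box, b: Box) -> float:
    """Generalized IoU in [-1, 1]; equals IoU when the boxes fill their hull."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter

    # Smallest axis-aligned box enclosing both inputs.
    hull_w = max(a[2], b[2]) - min(a[0], b[0])
    hull_h = max(a[3], b[3]) - min(a[1], b[1])
    hull = hull_w * hull_h
    if union <= 0 or hull <= 0:
        return 0.0  # degenerate boxes: no meaningful overlap measure

    return inter / union - (hull - union) / hull


def giou_loss(pred: Box, target: Box) -> float:
    """Loss form commonly used for box regression: 1 - GIoU."""
    return 1.0 - giou(pred, target)
```

Unlike plain IoU, which is zero for every pair of non-overlapping boxes, GIoU becomes more negative as the boxes move apart, so the loss still distinguishes a near miss from a distant one. That property is one reason it is often considered for small targets, where predicted and true boxes frequently do not overlap at all.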

| Applications | Goal | Method | Reference |

|---|---|---|---|

| Vegetable plot | • Avoid the intensive labor of manual weeding and reduce food production costs | (1) Intelligent weed detection and laser weeding system to achieve the accurate positioning and removal of weeds | [79] |

| | • Achieve the accurate detection of weeds in vegetable seedling fields, which has potential practical value in the research and development of smart agricultural equipment, etc. | (2) Use crop-marking technology and a machine-vision system | [80] |

| Laboratory environment | • Demonstrate the feasibility of laser weeding equipment | Designed and tested a laser-based weed control device that controls weeds by irradiating the weed stems with lasers | [81] |

| | • Provide technical support for the realization of automated agricultural machinery precision fertilization, pesticide application and weeding | | |

| Orchard | • Improve weed-control efficiency and reduce environmental impact | (1) Developed a static, movable, liftable and adjustable enclosed fiber laser weeding equipment and system | [82] |

| | • Improve weed-control efficiency and accuracy and reduce costs | (2) Designed and produced a prototype of a laser weeding robot based on STM32, using color training and morphological feature recognition algorithms to improve weed recognition accuracy | [83] |

| Rice fields | • Increase rice yield and reduce labor input • Reduce herbicide use and reduce environmental impact | (1) Use machine vision systems to identify weeds and crops in rice fields and guide robots to perform precise weeding. | [84] |

| | • Improve the accuracy of crop and associated weed identification and detection under complex backgrounds | (2) Use low-energy laser processing to control the growth of weeds by irradiating specific parts of the weeds. | [85] |

| | | (3) Use image processing to identify weeds, determine the amount of laser required for weeding, and use a fixed step length to perform weeding operations. | [86] |

| | | (4) Develop an unmanned weeding robot platform and achieve stable autonomous navigation and weeding operations through sensor fusion. | [87] |

| | | (5) Develop a small two-wheeled autonomous weeding robot that uses GPS and directional sensors for navigation, taking into account the effects of soil and GPS errors to achieve precise weeding operations. | [88] |

| Cornfield | • Increase corn yield, reduce the impact of weeds on corn, and reduce labor intensity | (1) Identify crops and weeds through the YOLOX network and calculate the coordinates of weeds using the triangular similarity principle. | [89] |

| | • Achieve the rapid identification and positioning of weed meristems based on laser weeding | (2) Upload images and send control signals through the WiFi module. | [90] |

| | • Improve the accuracy and efficiency of weed identification and provide accurate targeting for laser weeding | (3) Optimize the YOLOX algorithm and use self-made data sets for training and testing. | [87] |

| | | (4) Test a new static weeding path-planning algorithm, using an image-processing algorithm based on the color and size differences of crops and weeds to separate crops and weeds from the field background, detect the type of foreground and output the location information of weeds. | [91] |

| | | (5) Design and trial-produce a laser weeding robot prototype, conduct field trials, and optimize the weed recognition algorithm. | [92] |

| | | (6) Introduce a feature-extraction method based on wavelet transform to classify and identify weeds, and accurately control the sprayer to spray herbicides according to the weed location. | [86] |

| Cotton field | • Reduce the use of chemical herbicides | Use the YOLOX network to identify weeds, calculate the weed coordinates through monocular ranging, and direct the end of the robotic arm to fire the laser at the weeds. | [93] |

| Lawn | • Achieve efficient, accurate and environmentally friendly weed-control operations | (1) Designed and manufactured a laser weeding device based on a single-chip microcomputer, which senses and identifies weeds through sensors or cameras | [94] |

| | • Reduce dependence on chemical agents and reduce pollution to the environment | (2) Designed and trained a CNN model for weed detection and classification, combining a laser range finder (LRF) and an inertial measurement unit (IMU) to detect rice seedlings and obstacles, and achieve automatic weeding | [95] |
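
Two of the methods in the table above locate weeds by the triangular similarity principle or by monocular ranging, both of which rest on the pinhole camera relation between image size and real size. The sketch below illustrates that relation only. It assumes a calibrated camera pointing straight down at flat ground; the `Camera` class, the function names, and any numbers passed to them are hypothetical and are not the cited authors' implementations.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Camera:
    """Pinhole intrinsics in pixels (assumed known from calibration)."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, x
    cy: float  # principal point, y


def depth_from_known_width(cam: Camera, real_width_m: float,
                           pixel_width: float) -> float:
    """Similar triangles: real_width / depth = pixel_width / fx."""
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return cam.fx * real_width_m / pixel_width


def pixel_to_ground(cam: Camera, u: float, v: float,
                    depth_m: float) -> Tuple[float, float]:
    """Offset (x, y) in metres of pixel (u, v) from the optical axis,
    for a point lying on a plane depth_m in front of the camera."""
    x = (u - cam.cx) * depth_m / cam.fx
    y = (v - cam.cy) * depth_m / cam.fy
    return x, y
```

In this simplified geometry, a detection box centre from the recognition network would be passed as `(u, v)`, and the returned offsets would then be transformed into the coordinate frame of the laser actuator. A tilted camera or uneven ground would need a full extrinsic calibration instead.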

| Problem | Solution | Reference |

|---|---|---|

| Traditional weed control methods are labor intensive | (1) Designed and manufactured a laser weeding robot based on a robotic arm, which achieves weeding through the cooperation of the robotic arm and laser. | [96] |

| | (2) Used the blue laser as the weeding actuator to design an intelligent laser weeding device. | [90] |

| | (3) Designed and studied the actuator of the laser weeding robot, including laser control, weed recognition, robot field positioning and navigation, etc. | [97] |

| Mechanical weeding may damage crop roots, harm beneficial organisms, and affect soil structure. Chemical weeding is harmful to humans and the environment. | (1) Use low-energy laser treatment to control the growth of weeds by irradiating specific parts of the weeds. | [85] |

| | (2) Experimentally study the effects of the laser on the growth and development of weeds at different growth stages and determine the optimal timing and dosage for weed control. | [26] |

| | (3) Study the effect of laser on weed control and create a weed damage model. | [84] |

| | (4) Study the effect of laser on Elymus repens and experimentally determine the laser dose and number of irradiations required to kill this weed. | [98] |

| Traditional herbicides have resistance problems | (1) Use small autonomous laser weeding vehicles to reduce the use of chemical herbicides and reduce the impact on the environment and organisms. | [3] |

| | (2) Use CO2 laser cutting systems and laser-pointer triangulation systems to replace pesticides for weeding. | [22] |

| | (3) Develop an automatic weeding robot that detects the location of weeds in real time and removes them through image recognition and processing technology, avoiding the use of harmful chemicals. | [99] |

| Traditional computer-vision methods have difficulty detecting weeds in natural scenes | (1) Develop a deep learning-based weed detection model | [100] |

| | (2) Design and manufacture Agri-Bot, which uses image processing and AI technology to identify and locate weeds | [101] |

| | (3) Use advanced sensors and AI technology to accurately identify and remove weeds | [102] |

| Large weeding robots are not suitable for the farmland environment in southwest China | (1) Design a small blue laser weeding robot | [89] |

| | (2) Use a small autonomous robot to automatically detect and remove weeds in farmland | [103] |

| Traditional detection algorithms have low recognition accuracy for small-sized weeds and obscured weeds | (1) Use laser reflection to identify changes in weeds, and use the Cascade RCNN deep learning method to detect and locate weeds after laser irradiation. | [104] |

| | (2) Use GSConv and VoV-GSCSP to optimize the Neck layer of YOLOv8 to improve the accuracy and inference speed of the model and achieve weed detection. | [72] |

| | (3) Combine the collaborative attention (CA) and the receptive field block (RFB) module to improve the backbone network and introduce the CA attention mechanism. | [105] |

| Wheeled mobile robots are susceptible to uncertainty and interference during operation | (1) Control the robot’s movement and weeding operations through a smartphone to achieve automated weeding | [106] |

| | (2) Use a dual-servo system to adjust the laser emission angle to achieve precise weeding | [98] |

| | (3) Propose an RNN-based tracking system to coordinate multiple controllers to achieve predetermined path tracking | [8] |

| | (4) Improve the planning method and use a rolling-view observation model and biodiversity-aware weeding method | [1] |
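
A dual-servo stage that adjusts the laser emission angle, as listed in the table above, can be pictured as a pan-tilt mount. The following is a minimal geometric sketch under stated assumptions: the laser pivot sits at a known height directly above the origin of a flat ground plane, tilt is measured from straight down, and the 60° tilt limit is an arbitrary placeholder. The function name and all values are hypothetical and do not describe any of the cited robots.

```python
import math
from typing import Tuple


def aim_angles(x: float, y: float, height: float,
               max_tilt_deg: float = 60.0) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees to hit ground point (x, y).

    pan  : rotation about the vertical axis, 0 degrees along +x.
    tilt : deflection away from straight down, 0 degrees = vertical beam.
    """
    if height <= 0:
        raise ValueError("height must be positive")
    # Horizontal bearing of the target as seen from above.
    pan = math.degrees(math.atan2(y, x))
    # Right triangle: ground distance opposite, mount height adjacent.
    tilt = math.degrees(math.atan2(math.hypot(x, y), height))
    if tilt > max_tilt_deg:
        # Assumed mechanical limit; a real system might reposition instead.
        raise ValueError("target outside the assumed tilt range")
    return pan, tilt
```

The ground coordinates passed in would come from the vision system after conversion into the laser frame. Steep tilt angles also stretch the beam spot on the ground, which is one reason a sketch like this rejects distant targets rather than aiming at them.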

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, C.; Song, C.; Xu, T.; Jiang, R. Precision Weeding in Agriculture: A Comprehensive Review of Intelligent Laser Robots Leveraging Deep Learning Techniques. Agriculture 2025, 15, 1213. https://doi.org/10.3390/agriculture15111213
