3.3. DMN-YOLO Model Performance

The detection performance metrics of the DMN-YOLO model for each disease category are shown in Table 3. The results indicate that DMN-YOLO recognizes the different apple leaf diseases reliably. For brown spot, the model achieved a precision of 86.7%, a recall of 86.5%, and a mAP@50 of 98.9%. For frogeye leaf spot, the precision reached 88.3%, the recall 94.2%, and the mAP@50 97.9%. These results indicate that DMN-YOLO maintains high detection accuracy across multiple disease types, supporting its use for precise identification and monitoring in agricultural disease management.

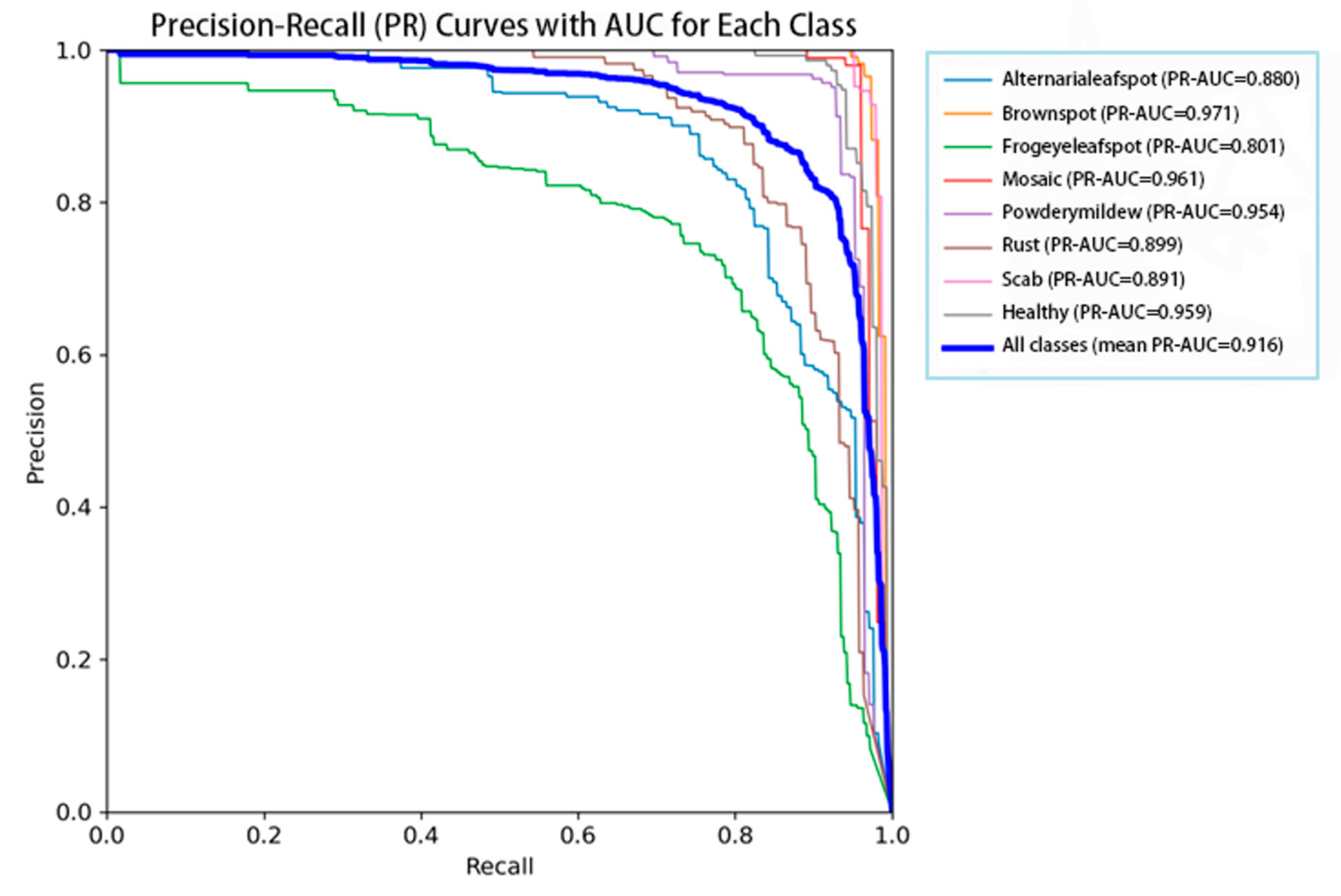

To assess the per-class performance of the proposed model, the area under the precision–recall curve (PR-AUC) was calculated for seven apple leaf disease categories and for healthy leaves. As shown in Figure 9, the average PR-AUC reached 91.6%, indicating that the model balances precision and recall well across all classes. The highest PR-AUC values were obtained for brown spot (97.1%) and mosaic (96.1%), possibly because these categories have more distinctive visual features or are better represented in the training dataset. In contrast, the lowest PR-AUC was recorded for frogeye leaf spot (80.1%), which may be attributed to inter-class similarity or class imbalance. For the healthy class, the PR-AUC reached 95.9%, showing that the model reliably distinguishes healthy from diseased leaves, an essential requirement for practical agricultural applications. Overall, these results show that DMN-YOLO achieves robust and reliable performance in multi-class apple leaf disease recognition.
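The PR-AUC values above summarize each class's precision–recall curve in a single number. The section does not state which interpolation scheme was used, so the following is only a minimal Python sketch of one common choice, all-point interpolated average precision (as in PASCAL VOC); some toolkits instead sample the curve at 101 recall points and can give slightly different values. It assumes each detection of a class has already been labeled as a true or false positive (for example, by IoU matching), and the function name `average_precision` is illustrative.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP (area under the precision-recall curve) for one class.

    scores: confidence of each detection; is_tp: True/False per detection;
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt <= 0:
        return 0.0
    # Rank detections by decreasing confidence.
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Add sentinel points, then take the upper envelope so that precision
    # never increases as recall grows.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas at the recall values where the curve steps.
    steps = np.flatnonzero(r[1:] != r[:-1])
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


# Illustrative detections for one class (not taken from the paper's data).
print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))  # about 0.833
```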

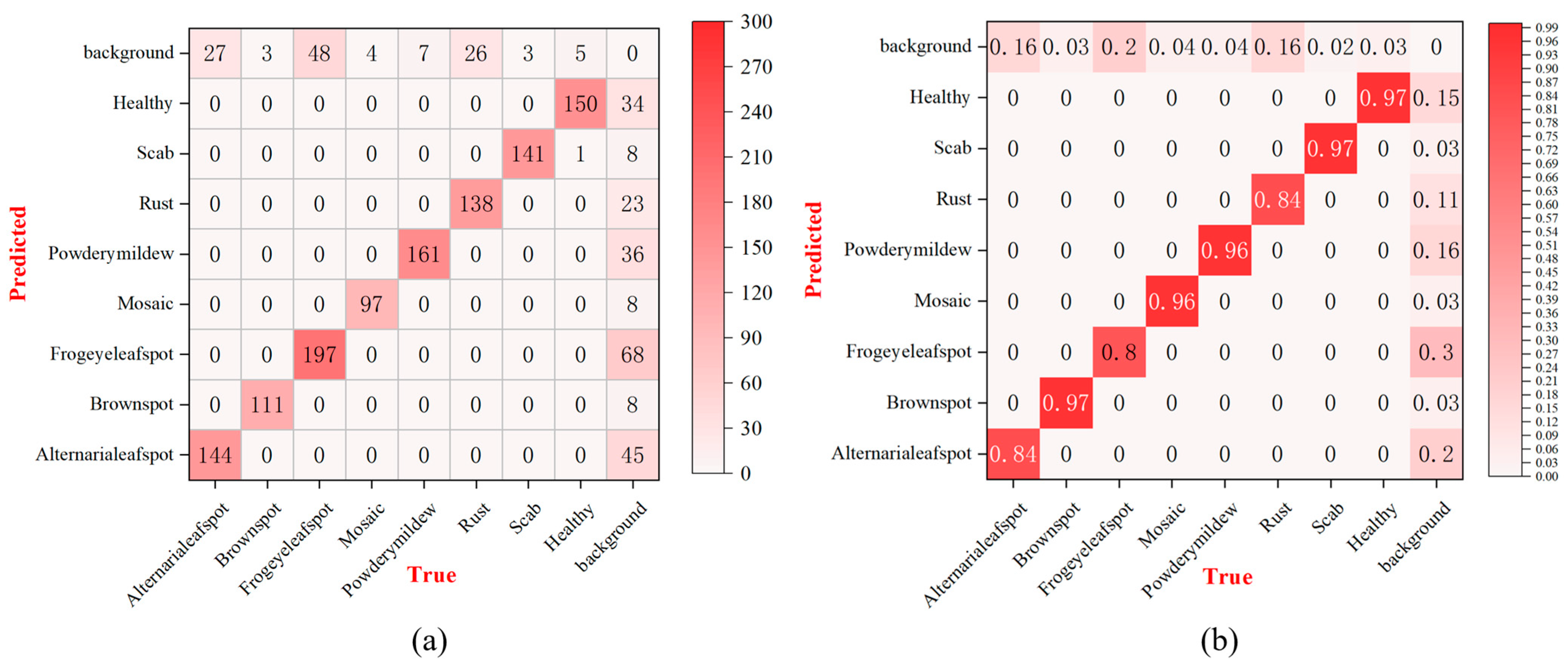

Figure 10 presents the confusion matrices of the proposed DMN-YOLO model for apple leaf disease classification.

Figure 10a, which displays absolute prediction counts, shows large numbers of correct predictions for powdery mildew (161), scab (141), and frogeye leaf spot (197), demonstrating the model’s ability to distinguish these categories accurately. However, some misclassifications occur for Alternaria leaf spot and healthy leaves, whose samples are occasionally confused with the background or with other diseases because of visual similarities and complex image backgrounds.

Figure 10b, the normalized confusion matrix, shows that the diagonal entries (the fraction of each true class that is predicted correctly, i.e., the per-class recall) are high for most categories: 96.0% for powdery mildew, 97.0% for scab, and 97.0% for brown spot. Alternaria leaf spot has a lower recall of 84.0%, but the model still performs robustly overall. These results confirm the effectiveness and reliability of DMN-YOLO and highlight its potential for accurate, practical apple leaf disease identification in intelligent agricultural systems.
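A normalized confusion matrix such as Figure 10b is obtained by dividing each count by the total number of samples of its ground-truth class, so the diagonal holds the per-class recall. The minimal sketch below assumes the rows index the true class; some YOLO toolkits place the true class on the columns (and add a background row and column for missed or spurious detections), which the hypothetical `true_on_rows` flag accommodates.

```python
import numpy as np


def normalize_confusion(cm, true_on_rows=True):
    """Normalize counts so that each ground-truth class sums to 1.

    With true_on_rows=True, rows index the true class and columns the predicted
    class; the diagonal of the result is then the per-class recall.
    """
    cm = np.asarray(cm, dtype=float)
    if not true_on_rows:
        cm = cm.T  # bring the ground-truth class onto the rows
    totals = cm.sum(axis=1, keepdims=True)
    # Classes with no samples keep an all-zero row instead of dividing by zero.
    norm = np.divide(cm, totals, out=np.zeros_like(cm), where=totals > 0)
    return norm if true_on_rows else norm.T


# Illustrative counts only (not from Figure 10): two classes, true class on rows.
counts = [[8, 2], [1, 9]]
print(np.diag(normalize_confusion(counts)))  # per-class recall 0.8 and 0.9
```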

3.4. Ablation Experiment

An ablation study was conducted to assess the individual contribution of each proposed module to the overall detection performance.

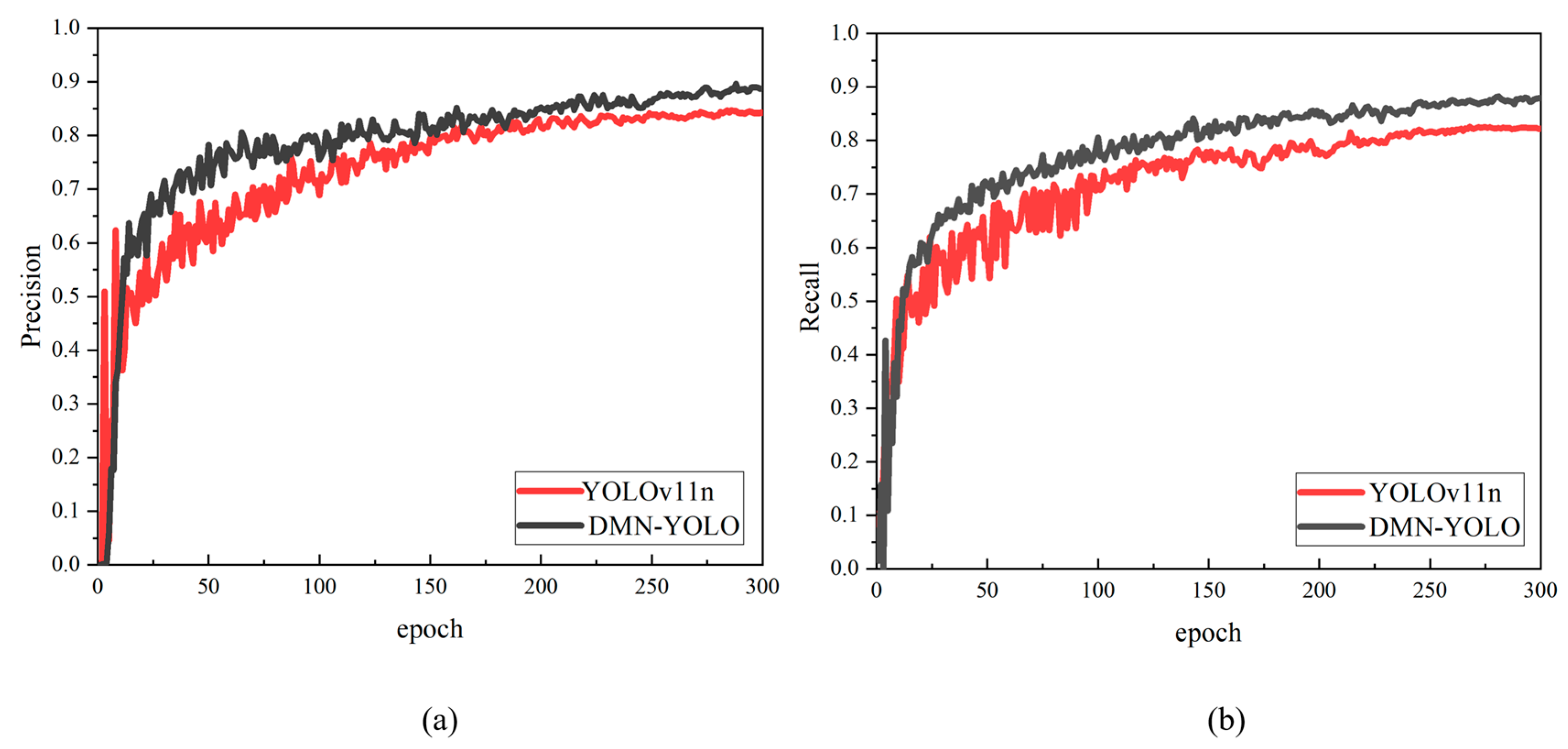

Table 4 shows that integrating the MAFPN module into the baseline model increased precision by 2.1%, recall by 1.6%, mAP@50 by 3.4%, and the F1-score by 1.8%. These results indicate that MAFPN strengthens multi-scale feature aggregation and substantially improves the model’s ability to recognize targets of varying scales. After the RepHDWConv module was integrated into the neck, precision declined slightly (by 0.2%), whereas recall and the F1-score increased by 2.1% and 1.0%, respectively. This indicates that, while keeping the architecture lightweight, the module enhances local feature extraction and better represents object boundaries and texture details. Replacing the original detection head with the RT-DETR detection head (DetrHead) yielded a precision of 86.9%, a mAP@50 of 91.8%, and an F1-score of 86.6%. These gains suggest that this head models global contextual relationships more effectively and improves localization accuracy, particularly in complex scenes. In addition, replacing the conventional bounding box regression loss with the normalized Wasserstein distance (NWD) loss increased precision, recall, and the F1-score by 3.5%, 2.1%, and 3.0%, respectively, indicating that this change improves the detection of small and densely distributed objects.

Overall, the optimized DMN-YOLO model achieved a precision of 88.8%, a recall of 87.9%, a mAP@50 of 93.2%, and an F1-score of 88.1%. Compared with YOLOv11n, these represent improvements of 5.5% in precision, 3.4% in recall, 5.0% in mAP@50, and 4.2% in the F1-score. These ablation results validate the effectiveness of each proposed enhancement. As illustrated in Figure 11, a comparison of the precision–recall curves of DMN-YOLO and YOLOv11n further confirms the stronger performance of DMN-YOLO in apple leaf disease detection.
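The F1-score is the harmonic mean of precision and recall, F1 = 2PR/(P + R). As a quick arithmetic check, the Python sketch below applies this formula to aggregate precision and recall pairs reported in this section. Reported F1-scores may differ from this aggregate by a few tenths of a point if they are computed per class and then averaged, or from unrounded precision and recall; for DMN-YOLO on the training set, the formula gives about 88.3%.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Aggregate precision/recall pairs reported in this section (percent).
reported = {
    "DMN-YOLO, training set": (88.8, 87.9),
    "DMN-YOLO, validation set": (90.0, 89.0),
    "YOLOv12n, validation set": (87.6, 85.5),
}
for name, (p, r) in reported.items():
    print(f"{name}: F1 = {f1_score(p, r):.1f}%")
# Prints 88.3%, 89.5%, and 86.5%, respectively.
```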

Figure 12 presents XGrad-CAM [28,29] heatmaps of the model before and after the enhancements, giving an intuitive view of the regions the model attends to. Areas with higher heat intensity correspond to regions that contribute most to the model’s predictions. YOLOv11n exhibits a relatively diffuse attention distribution, which may lead to inaccurate localization of diseased areas. In contrast, DMN-YOLO focuses more tightly and precisely on pathological regions, which helps it detect the various types of apple leaf disease. Together with the quantitative improvements, these visual results support the higher detection accuracy of the enhanced model and its effectiveness for practical agricultural disease monitoring.
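XGrad-CAM builds the heatmap as a weighted sum of a layer's feature maps A_k, where each channel weight averages the gradient of the target score y over the map, weighted by the normalized activation: w_k = Σ_{i,j} [A_k(i,j) / Σ_{i,j} A_k(i,j)] · ∂y/∂A_k(i,j). The minimal NumPy sketch below assumes the activations and gradients of the chosen layer have already been extracted for one image; which layer and which detection score serve as the target are not specified in this section, and the function name `xgrad_cam` is illustrative.

```python
import numpy as np


def xgrad_cam(activations: np.ndarray, gradients: np.ndarray, eps: float = 1e-7) -> np.ndarray:
    """XGrad-CAM heatmap for one image.

    activations: feature maps A of shape (K, H, W) from the chosen layer.
    gradients:   dy/dA for the target score y, same shape.
    Returns an (H, W) map scaled to [0, 1].
    """
    A = np.asarray(activations, dtype=float)
    G = np.asarray(gradients, dtype=float)
    # Channel weights: gradients averaged with activation-proportional weights.
    norm = A.sum(axis=(1, 2), keepdims=True) + eps
    weights = (G * A / norm).sum(axis=(1, 2))  # shape (K,)
    # Weighted sum of feature maps, then ReLU to keep only positive evidence.
    cam = np.maximum(np.tensordot(weights, A, axes=1), 0.0)  # shape (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```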

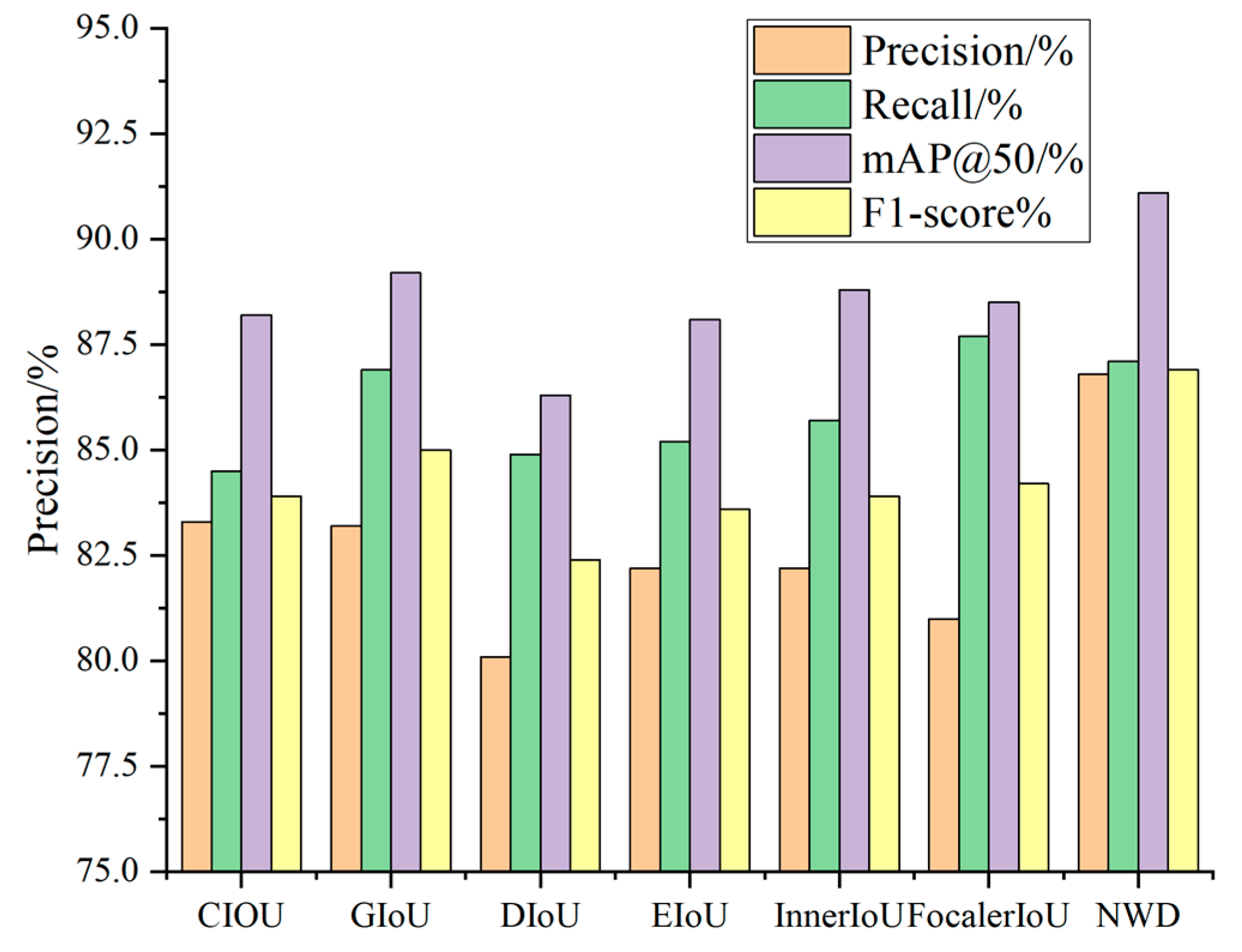

3.6. Comparison of YOLOv11n Models Using Different Loss Functions

To investigate the impact of various loss functions on the performance of the YOLOv11n model and identify the most effective one, this study replaces the original CIoU loss function in YOLOv11n with GIoU, DIoU, EIoU, InnerIoU, FocalerIoU, and NWD loss functions. Comparative experiments are conducted to evaluate their effects.

Table 6 presents the detection results for each loss function, while Figure 13 provides a visual comparison of how the different loss functions influence model performance.

As shown in Table 6, the NWD loss function outperforms the other widely used loss functions across multiple key metrics. Compared with the original CIoU loss, NWD improves precision by 3.5%, recall by 2.6%, mAP@50 by 2.9%, and the F1-score by 3.0%. Compared with GIoU, NWD improves precision by 3.6%. Compared with DIoU, it improves precision by 6.7% and mAP@50 by 4.8%. Compared with EIoU, it increases precision by 4.6% and the F1-score by 3.3%, and compared with InnerIoU, it increases precision by 4.6% and the F1-score by 3.0%. Although NWD slightly lags behind FocalerIoU in recall, it surpasses FocalerIoU by 5.8% in precision. As shown in Figure 13, the NWD loss achieves a good balance between precision and recall while improving precision, mAP@50, and the F1-score, confirming its effectiveness for apple leaf disease detection.
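NWD models each bounding box (cx, cy, w, h) as a two-dimensional Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4). The second-order Wasserstein distance W2 between two such Gaussians reduces to the Euclidean distance between the vectors (cx, cy, w/2, h/2), and NWD = exp(-W2/C), with the regression loss taken as 1 - NWD. The Python sketch below compares this with IoU for a small 4 × 4 box shifted horizontally: IoU drops to zero as soon as the boxes stop overlapping, whereas NWD decreases smoothly, which is the usual argument for NWD on small objects. The constant C is dataset-dependent; the value 12.8 used here is only a placeholder, since the value used for DMN-YOLO is not given in this section.

```python
import math


def iou(a, b):
    """Intersection over union of two (cx, cy, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2] / 2, b[0] + b[2] / 2) - max(a[0] - a[2] / 2, b[0] - b[2] / 2))
    ih = max(0.0, min(a[1] + a[3] / 2, b[1] + b[3] / 2) - max(a[1] - a[3] / 2, b[1] - b[3] / 2))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nwd(a, b, c=12.8):
    """Normalized Gaussian Wasserstein distance; c is a placeholder constant."""
    w2 = math.sqrt((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
                   + ((a[2] - b[2]) / 2) ** 2 + ((a[3] - b[3]) / 2) ** 2)
    return math.exp(-w2 / c)


def nwd_loss(pred, target, c=12.8):
    """Loss built from NWD: 0 for identical boxes, approaching 1 as they diverge."""
    return 1.0 - nwd(pred, target, c)


target = (10.0, 10.0, 4.0, 4.0)
for shift in (0, 2, 4, 6, 8):
    pred = (10.0 + shift, 10.0, 4.0, 4.0)
    print(f"shift={shift}: IoU={iou(pred, target):.3f}  NWD={nwd(pred, target):.3f}")
```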

3.7. Comparative Experiments on Different Models

This study conducted a comprehensive comparison between DMN-YOLO and several mainstream object detection models, including YOLOv12n [35], RT-DETR [36], YOLOv10n [37], YOLOv9s [38], YOLOv9t [39], YOLOv8n [40], and YOLOv5n [41]. The comparison was based on multiple performance metrics, including precision, recall, mAP@50 (mean average precision at an IoU threshold of 0.5), the F1-score, and FPS (frames per second, indicating inference speed), as summarized in Table 7.
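Precision, recall, and mAP@50 all depend on deciding which predictions count as true positives at an IoU threshold of 0.5. The section does not describe the matching procedure, so the following Python sketch shows one common greedy scheme, assumed for illustration: for a single class in a single image, predictions are visited in order of decreasing confidence, and each is matched to the still-unmatched ground-truth box with the highest IoU of at least 0.5; unmatched predictions, including duplicates, are false positives. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

BoxXYXY = Tuple[float, float, float, float]


def box_iou(a: BoxXYXY, b: BoxXYXY) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_at_iou(preds: Sequence[Tuple[float, BoxXYXY]], gts: Sequence[BoxXYXY],
                 thr: float = 0.5) -> List[Tuple[float, bool]]:
    """Greedily label predictions of one class in one image as TP (True) or FP (False)."""
    matched = [False] * len(gts)
    results = []
    for score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if matched[j]:
                continue  # each ground-truth box can be matched only once
            v = box_iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched[best_j] = True
        results.append((score, best_j >= 0))
    return results


def precision_recall(results: Sequence[Tuple[float, bool]], num_gt: int) -> Tuple[float, float]:
    """Precision and recall from labeled detections and the ground-truth count."""
    tp = sum(1 for _, is_tp in results if is_tp)
    precision = tp / len(results) if results else 0.0
    recall = tp / num_gt if num_gt else 0.0
    return precision, recall
```

Pooled over all test images, these labeled detections trace out the precision–recall curve of each class; the area under that curve is the class AP, and mAP@50 is its mean over classes.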

The proposed DMN-YOLO model outperforms all the compared mainstream models across the evaluation indicators. On the training set, DMN-YOLO achieved a precision of 88.8%, a recall of 87.9%, a mAP@50 of 93.2%, and an F1-score of 88.3%, outperforming all other models in detection accuracy. It also reached an inference speed of 235.3 FPS, well above that of models such as YOLOv9s (58.2 FPS) and YOLOv10n (140.7 FPS), which highlights its efficiency and suitability for real-time processing. On the validation set, DMN-YOLO continued to lead, with a precision of 90.0%, a recall of 89.0%, a mAP@50 of 93.7%, and an F1-score of 89.4%. These values clearly exceed those of YOLOv12n, a strong recent competitor (87.6% precision, 85.5% recall, 91.5% mAP@50, and 86.5% F1-score), further supporting the improved generalization ability of the proposed model. In summary, DMN-YOLO offers comprehensive improvements in detection accuracy, recall, inference speed, and robustness, making it the most effective and reliable of the object detection models evaluated in this study.
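FPS figures depend heavily on the measurement protocol (hardware, batch size, input resolution, and whether pre- and post-processing are timed), none of which is specified in this section. For scale, 235.3 FPS corresponds to roughly 4.25 ms per frame. The following Python sketch shows a minimal timing loop under these caveats; `infer` is a hypothetical callable standing in for a model's per-frame inference, and on a GPU the device would also need to be synchronized before each clock reading for the timing to be meaningful.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], frames: Sequence[object],
                warmup: int = 10) -> float:
    """Estimate throughput in frames per second for a per-frame inference callable."""
    if not frames:
        raise ValueError("need at least one frame")
    # Warm-up runs (lazy initialization, caching) are excluded from timing.
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Stand-in "model" that takes about 4 ms per frame.
    fake_infer = lambda frame: time.sleep(0.004)
    print(f"{measure_fps(fake_infer, list(range(50))):.1f} FPS")
```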

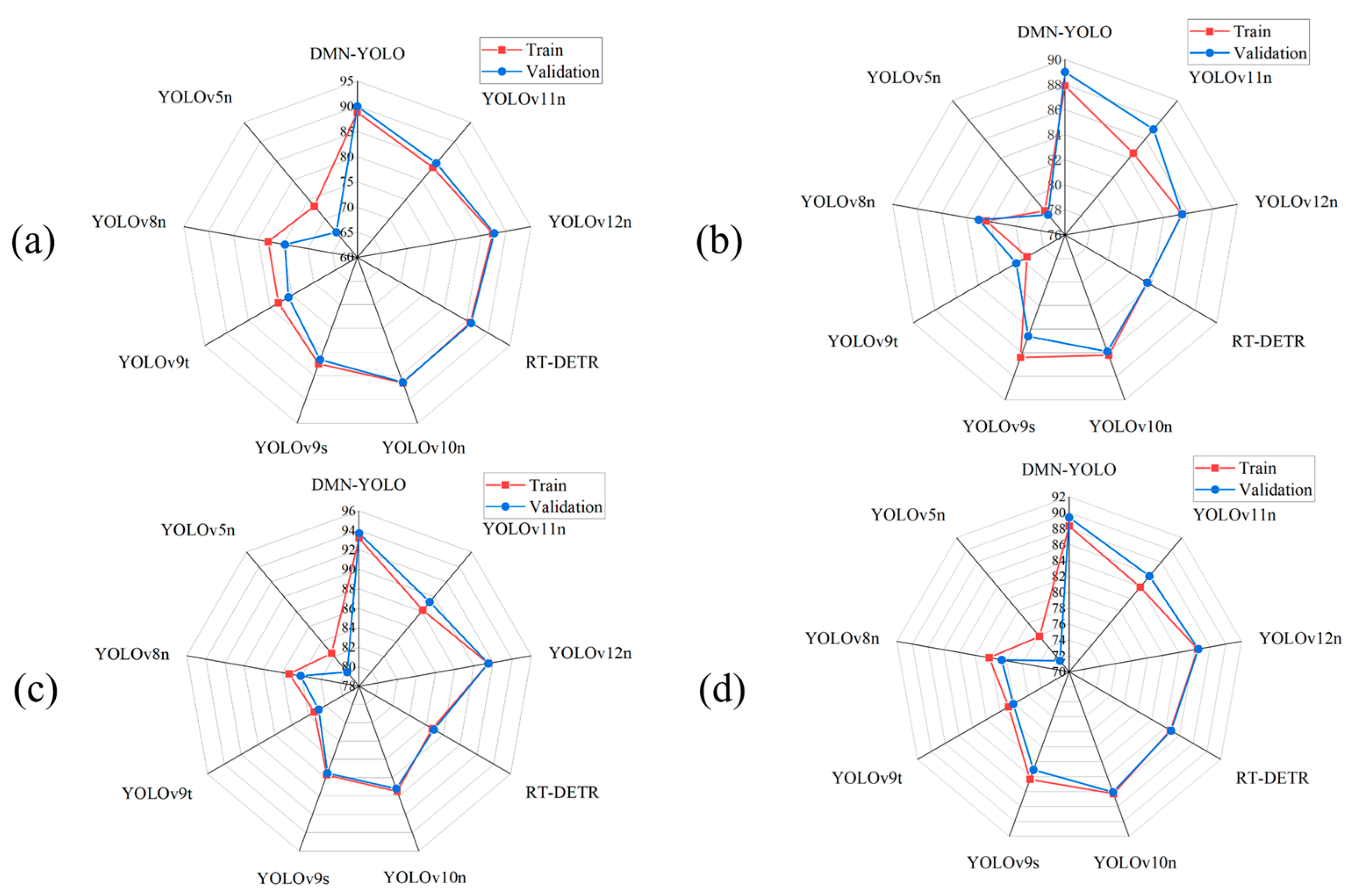

Figure 14 shows radar charts comparing the performance of DMN-YOLO with that of other mainstream object detection models. Although advanced models such as RT-DETR and the latest YOLO versions (e.g., YOLOv10n and YOLOv12n) are competitive on certain indicators, their overall detection effectiveness falls short of that of DMN-YOLO. Notably, the small difference between the training-set and validation-set radar curves suggests that DMN-YOLO generalizes well and has a low risk of overfitting. Overall, DMN-YOLO shows superior performance, robustness, and stability across multiple key evaluation metrics, underscoring its applicability to practical apple leaf disease detection.

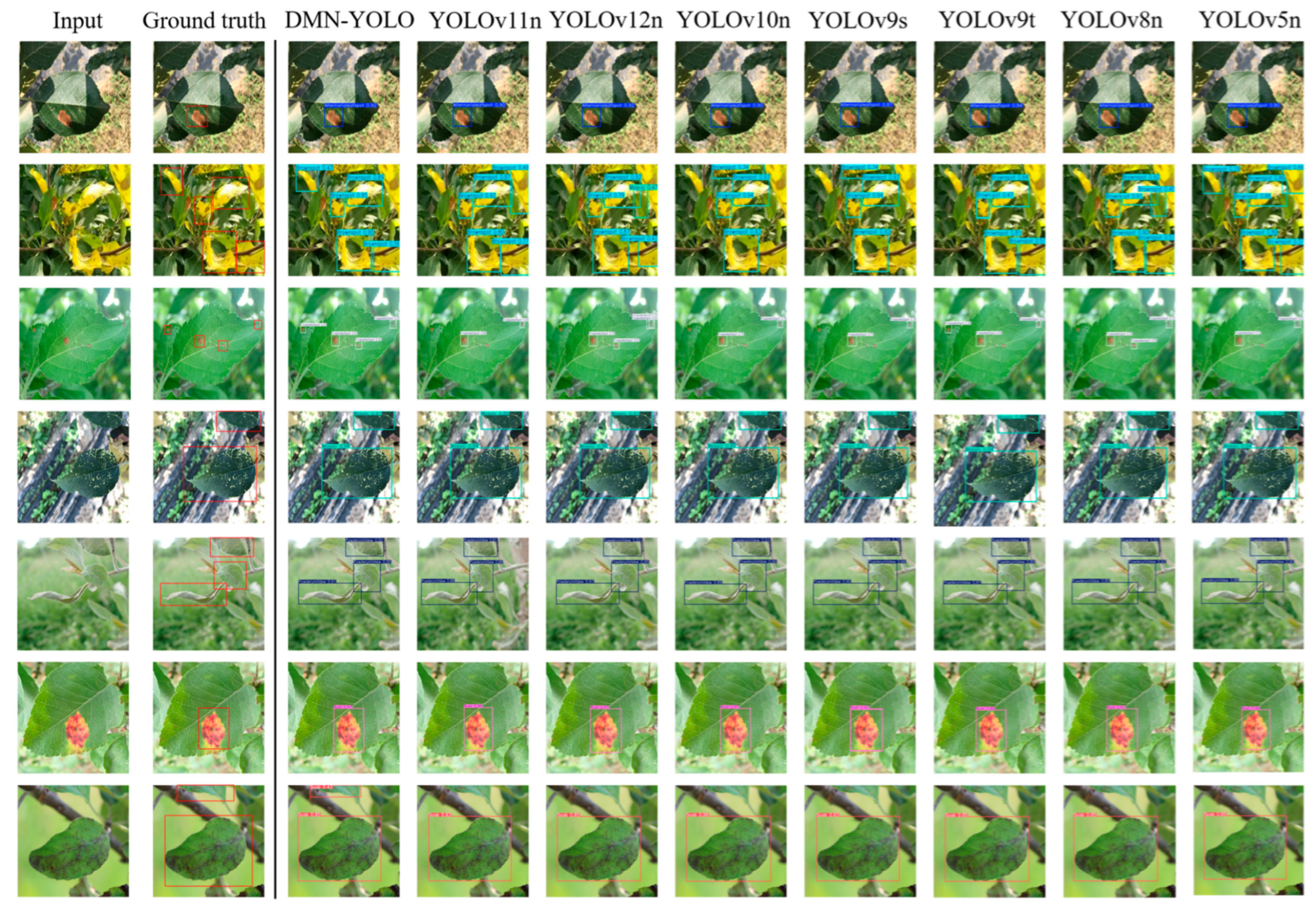

To illustrate the detection effectiveness of the proposed DMN-YOLO model intuitively, Figure 15 provides a visual comparison with several representative YOLO-based models. One image was randomly selected from each disease category in the test dataset. As shown in Figure 15, the first column displays the input test images, the second column shows the ground-truth annotations, the third column shows the detection results of DMN-YOLO, and columns 4 through 10 show the results of the other detection models: YOLOv11n, YOLOv12n, YOLOv10n, YOLOv9s, YOLOv9t, YOLOv8n, and YOLOv5n. Each row corresponds to a specific apple leaf disease category.

For leaf spot disease in the first row, the bounding boxes predicted by DMN-YOLO are closest to the ground truth, whereas models such as YOLOv10n and YOLOv5n produce oversized or misaligned bounding boxes. For dense brown spot lesions (second row), DMN-YOLO detects all lesion areas and separates adjacent diseased regions, whereas YOLOv11n, YOLOv9s, and YOLOv9t suffer from missed detections or overlapping bounding boxes. The third row shows frogeye leaf spot, whose lesions are small and subtle. DMN-YOLO recognizes all lesion areas without confusing them with leaf texture or shadows, whereas other models, including YOLOv9t and YOLOv8n, produce false positives. Under complex background conditions (rows 4 to 6), DMN-YOLO consistently localizes disease regions accurately, whereas several YOLO variants are misled by background interference, producing false detections or misaligned bounding boxes. Overall, among all the compared models, DMN-YOLO yields the detection results most consistent with the ground-truth annotations, demonstrating its strong performance, robustness, and practical potential for apple leaf disease detection.

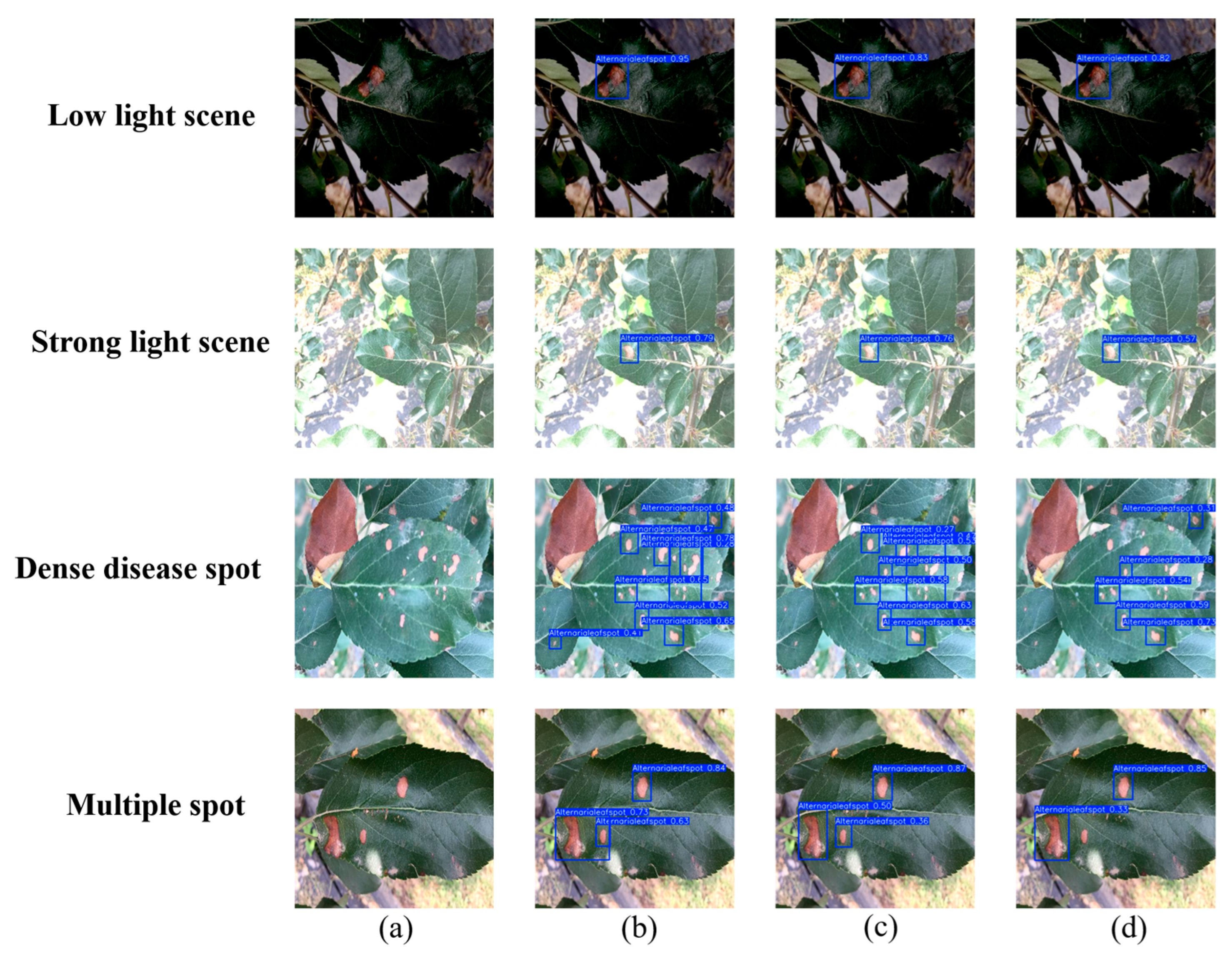

Figure 16 compares the detection performance of DMN-YOLO, YOLOv12n, and YOLOv11n on apple leaf disease images across four challenging conditions. The experimental results reveal that both YOLOv12n and YOLOv11n are prone to missing dark lesion areas, particularly in cases with strong background interference, and often suffer from inaccurate bounding box localization. In contrast, DMN-YOLO effectively identifies and localizes the lesion regions with high accuracy.

Under strong illumination, where background overexposure severely degrades image quality, YOLOv12n and YOLOv11n miss many lesions, whereas DMN-YOLO still detects disease spots reliably in overexposed images. Where lesions are densely clustered, YOLOv12n tends to merge adjacent lesions into a single detection, failing to capture the subtle differences between them, and YOLOv11n misses some lesions. In contrast, DMN-YOLO accurately distinguishes and detects multiple closely spaced small lesions and separates them clearly. Furthermore, in multi-spot scenarios, DMN-YOLO detects more lesion areas and delineates their boundaries more precisely, indicating a superior ability to handle complex disease distributions. These results highlight the robustness, precision, and fine-grained detection advantages of DMN-YOLO in diverse and challenging environments.

The detection results of the different models under various scenarios, illustrated in Figure 15 and Figure 16, are consistent with the quantitative evaluation metrics presented in Table 7. This comprehensive comparison demonstrates that DMN-YOLO outperforms other mainstream models under complex environmental conditions, with superior detection performance and robustness.

3.8. Generalization Experiment

To evaluate the generalization capability of the proposed model on other crop disease recognition tasks, a generalization experiment was conducted on a grape leaf disease dataset. The disease composition of this dataset is presented in Table 8, and representative disease images are shown in Figure 17. Because grapes are a widely cultivated fruit crop, this experiment provides a meaningful test of the model’s robustness and adaptability to a different data distribution, and thus of its applicability and versatility in real-world agricultural scenarios.

Table 9 shows the generalization performance of DMN-YOLO on the grape leaf disease dataset. On the training set, DMN-YOLO outperformed YOLOv11n on all evaluation indicators, achieving a precision of 85.8%, a recall of 86.4%, a mAP@50 of 91.3%, and an F1-score of 86.0%, improvements of 3.6%, 3.5%, 2.8%, and 3.5%, respectively, over the baseline. On the validation set, DMN-YOLO maintained its advantage, with a precision of 85.4%, a recall of 85.9%, a mAP@50 of 91.6%, and an F1-score of 85.6%, corresponding to increases of 3.5%, 3.2%, 3.1%, and 4.4%, respectively. These results indicate that the architectural improvements in DMN-YOLO enhance feature representation and detection accuracy, and they further confirm the model’s robustness and adaptability, supporting its practical application to diverse plant disease detection tasks.

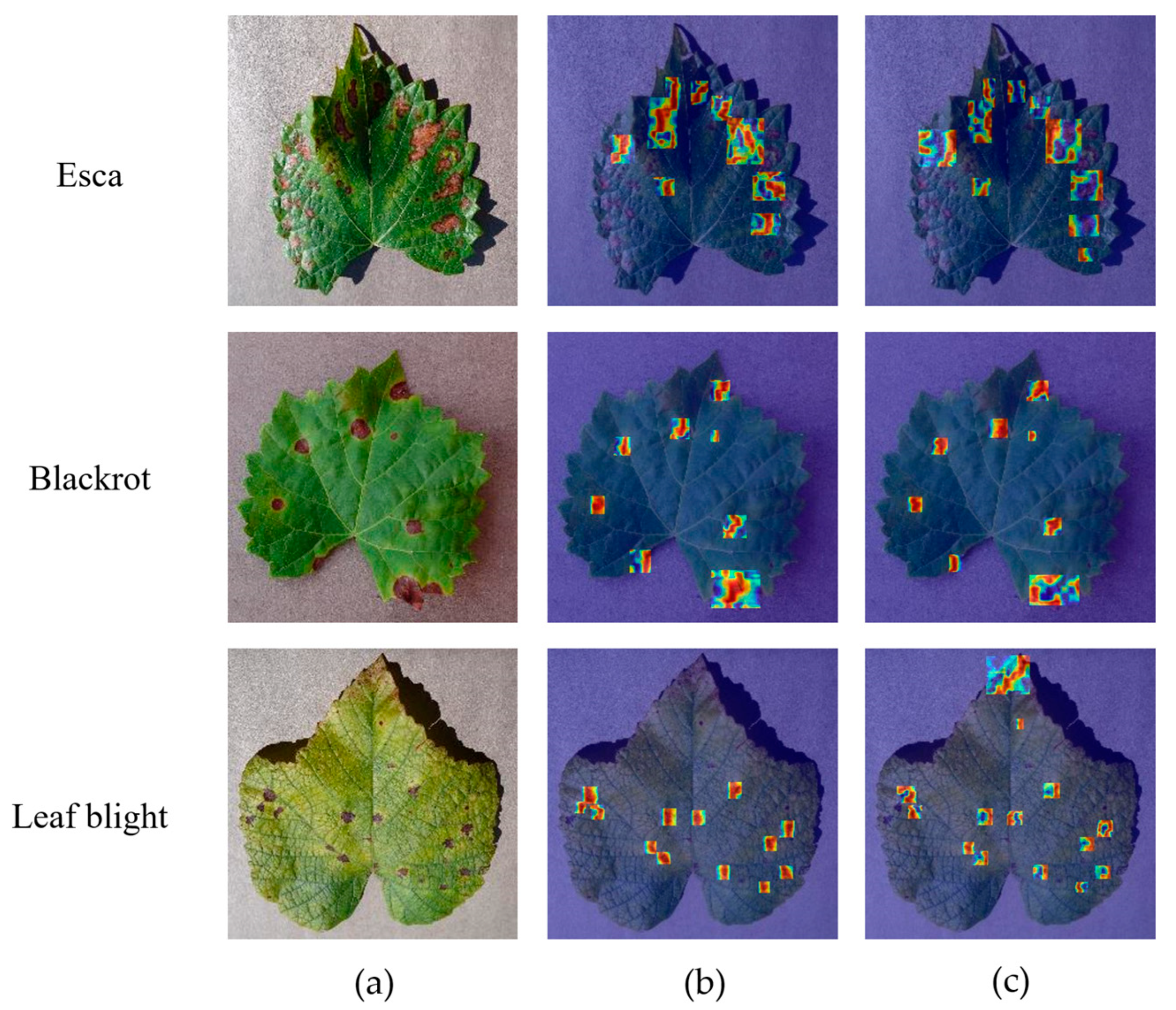

Grad-CAM++ heatmaps were used to verify the model’s ability to locate disease regions in images of grape leaves affected by several diseases. As shown in Figure 18, YOLOv11n responds to diseased areas in some samples, but misalignment and background interference are evident. For grape esca and grape black rot, the YOLOv11n heatmaps fail to focus on the actual diseased areas, and some responses deviate from the key regions, reducing the model’s discriminative performance. For the grape leaf blight sample, the response is more scattered and lacks a clear focus on the diseased area. In contrast, DMN-YOLO localizes and focuses better across all three disease types: its responses are more concentrated and effectively cover the diseased regions. Notably, in the grape black rot and grape leaf blight images, its heatmaps accurately highlight multiple characteristic diseased areas, reflecting the enhanced feature extraction and region recognition capabilities of DMN-YOLO.
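Grad-CAM++ differs from Grad-CAM in how it weights each feature map: it uses pixel-wise coefficients derived from higher-order derivatives of the target score, which is intended to help when an image contains several instances of the target, such as the multiple lesions noted here. The minimal NumPy sketch below uses the widely used closed-form simplification in which, assuming the score passes through an exponential, the second- and third-order derivatives are expressed as powers of the first-order gradient. As with any CAM method, it assumes the layer activations and gradients for one image have already been extracted; the function name `grad_cam_pp` is illustrative.

```python
import numpy as np


def grad_cam_pp(activations: np.ndarray, gradients: np.ndarray, eps: float = 1e-7) -> np.ndarray:
    """Grad-CAM++ heatmap for one image, using the closed-form weights.

    activations, gradients: arrays of shape (K, H, W) for the chosen layer.
    Returns an (H, W) map scaled to [0, 1].
    """
    A = np.asarray(activations, dtype=float)
    G = np.asarray(gradients, dtype=float)
    g2, g3 = G ** 2, G ** 3
    sum_a = A.sum(axis=(1, 2), keepdims=True)
    # Pixel-wise coefficients alpha; zero wherever the gradient vanishes.
    alpha = g2 / (2 * g2 + sum_a * g3 + eps)
    alpha = np.where(G != 0, alpha, 0.0)
    # Channel weights: alpha-weighted sum of the positive gradients.
    weights = (alpha * np.maximum(G, 0.0)).sum(axis=(1, 2))  # shape (K,)
    cam = np.maximum(np.tensordot(weights, A, axes=1), 0.0)  # shape (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```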