Abstract

To address the multi-target detection problem in the automatic seedling-feeding procedure of vegetable-grafting robots from dual perspectives (top-view and side-view), this paper proposes YOLOv8-SDC, an improved detection and segmentation model based on YOLOv8n-seg. The model improves the detection and segmentation accuracy of rootstock seedlings by replacing the original Conv with SAConv, replacing the c2f module with the c2f_DWRSeg module, and adding the CA mechanism. Specifically, the SAConv module dynamically adjusts the receptive field of convolutional kernels to enhance the model’s capability in extracting seedling shape features. Additionally, the DWR module enables the network to perceive the edges and contours of different cotyledons, growth points, and stems more flexibly and accurately. Furthermore, the incorporated CA mechanism helps the model eliminate background interference for better localization and identification of seedling grafting characteristics. The improved model was trained and validated using preprocessed data. The experimental results show that YOLOv8-SDC achieves significant accuracy improvements over the original YOLOv8n-seg model, YOLACT, Mask R-CNN, YOLOv5, and YOLOv11 in both object detection and instance segmentation tasks under top-view and side-view conditions. The mAP of Box and Mask for the cotyledon (leaf1, leaf2, leaf), growing point (pot), and seedling stem (stem) classes reached 98.6% and 99.1%, respectively. The processing speed reached 200 FPS. The feasibility of the proposed method was further validated through grafting features, such as cotyledon deflection angles and stem–cotyledon separation points. These findings provide robust technical support for developing an automatic seedling-feeding mechanism in grafting robotics.

1. Introduction

China is the global leader in vegetable production and consumption. In 2022, global vegetable production totaled 1.173 billion tons, with China accounting for 618 million tons, representing 52.69% of the total output [1]. The annual demand for vegetable seedlings in China reaches 680 billion plants, including 50 billion grafted seedlings [2]. Grafting is a technique that involves attaching a branch or bud from one plant to the stem or root of another so that the two parts grow into a complete plant. Widely adopted in modern vegetable production, grafting effectively mitigates issues such as continuous cropping obstacles, pests, and diseases [3,4,5,6] while enhancing crop resistance and yield [7,8,9]. Common vegetable-grafting methods include splice, side, approach, and cleft grafting.

Manual grafting is inefficient, labor-intensive, and suffers from a shortage of skilled grafters, creating an urgent demand for automation in grafting in the vegetable industry. Grafting robots can precisely execute tasks such as seedling clamping, cutting, alignment, and clipping, significantly improving automation levels and production efficiency. However, morphological variations among vegetable seedlings often necessitate manual assistance during seedling feeding, resulting in reduced grafting machine efficiency. Consequently, automated seedling-feeding has emerged as a critical technical bottleneck in grafting technology. In recent years, deep learning has found extensive applications in agricultural domains, including crop growth monitoring, fruit harvesting, and pest and disease early warning systems [10,11]. Nevertheless, complex background interference during detection often reduces recognition accuracy and segmentation precision. Leveraging advanced machine learning and deep learning algorithms for melon seedling feature detection and segmentation can provide crucial information guidance for fully automated grafting machines, particularly in automated seedling-feeding.

In the field of seedling feature segmentation, researchers have developed various innovative methods tailored to diverse application scenarios. Lai et al. [12] addressed the need for automatic scion detection in vegetable-grafting machines by proposing a lightweight segmentation network based on an improved UNet. They employed MobileNetV2 as the backbone network and incorporated the Ghost module. The refined Mobile-UNet model demonstrated improvements of 5.69%, 1.32%, 4.73%, and 3.12% in mIoU, precision, recall, and Dice coefficient, respectively, achieving precise segmentation of the scion cotyledons in the clamping mechanism. Deng et al. [13] proposed a CPHNet-based stem segmentation algorithm for seedlings, which demonstrated enhanced precision and stability in stem segmentation compared to alternative models. On a self-constructed dataset, the model achieved an mIoU of 90.4%, mAP of 93.0%, mR of 95.9%, and an F1 score of 94.4%. Zi et al. [14] proposed a semantic segmentation network model based on an optimized ResNet to accurately segment corn seedlings and weeds in complex field environments. By adjusting the backbone network and integrating an atrous spatial pyramid pooling module and a strip pooling module, the model showed enhanced capability to capture multi-scale contextual information and global semantic features. The experimental results revealed that the model achieved an mIoU of 85.3% on a self-built dataset, showcasing robust segmentation performance and generalization ability, thereby offering valuable insights for developing intelligent weeding devices.

In segmentation and localization research, investigators are committed to achieving enhanced precision while maintaining low complexity and computational demands. Jiang et al. [15] proposed an improved YOLOv5-seg instance segmentation algorithm for bitter gourd-harvesting robots, integrating a CA mechanism and a refinement algorithm. The model achieved mean precision values of 98.15% and 99.29% for recognition and segmentation, respectively, representing improvements of 1.39% and 5.12% over the original model. The localization errors for the three-dimensional coordinates (X, Y, Z) were 7.025 mm, 5.6135 mm, and 11.535 mm, respectively. This method offers valuable insights for accurately recognizing and localizing bitter gourd harvesting points. Zhang et al. [16] proposed a lightweight weed localization algorithm based on YOLOv8n-seg, incorporating FasterNet and CDA. The model reduced computational costs by 26.7%, model size by 38.2%, and floating-point operations to 8.9 GFLOPs while achieving a weed segmentation accuracy of 97.2%. Fan et al. [17] proposed an improved weed recognition and localization method based on an optimized Faster R-CNN framework with data augmentation techniques. The enhanced model achieved an average inference time of 0.261 s per image with an mAP of 94.21%. Paul et al. [18] compared the performance of YOLO algorithms for pepper detection and peduncle segmentation. In the pepper detection task, the YOLOv8s model achieved an mAP of 0.967 at an IoU threshold of 0.5. For peduncle detection, the YOLOv8s-seg model achieved mAP values of 0.790 and 0.771 for Box and Mask detection, respectively. Using a RealSense D455 RGB-D camera, the laboratory tests achieved target point localization with maximum errors of 8 mm, 9 mm, and 12 mm in the longitudinal, vertical, and lateral directions, respectively.

Researchers have proposed various innovative solutions in the field of detection and segmentation algorithms for complex problems. Wu et al. [19] addressed the challenge of apple detection and segmentation in occluded scenarios by proposing the SCW-YOLOv8n model. By integrating the SPD-Conv module, GAM, and Wise-IoU loss function, the model significantly improved apple detection and segmentation accuracy. The detection mAP and segmentation mAP of the original YOLOv8n model were increased to 75.9% and 75.7%, respectively, while maintaining a real-time processing speed of 44.37 FPS. Zuo et al. [20] tackled the challenges of varying scales and irregular edges in the branches and leaves of fruits and vegetables by proposing a segmentation network model that integrates target region semantics and edge information. They combined the UNET backbone network with an edge-aware module and added an ASPP module between the encoder and decoder to extract feature maps with different receptive fields. The results show that the average pixel accuracy of the test set reached 90.54%.

In summary, there has been limited research on feature detection and segmentation of melon-grafting seedlings, and while existing studies exhibit certain limitations, they provide valuable references for this work. To address the need for the rapid and high-precision detection and segmentation of seedling features in automated melon-grafting machines, this study employs an improved YOLOv8-SDC model to process individual seedlings’ dual-view (top and side) image data. The proposed approach enhances the speed and accuracy of seedling detection and segmentation. Extracting stem–cotyledon separation points and cotyledon deflection angles establishes the necessary prerequisites for precisely adjusting cotyledon direction and seedling height.

The main contributions of this study are as follows: (1) In the Backbone layer, SAConv replaces Conv and dynamically adjusts the receptive field of the convolutional kernels. This enhances the model’s ability to extract multi-scale features, which is particularly useful for target detection in complex scenarios, and improves detection accuracy and robustness without significantly increasing computational cost. (2) In the Neck layer, the c2f_DWRSeg module replaces the c2f module, enabling the network to adapt flexibly to segmentation targets of varying shapes. This enhances the model’s precision in perceiving edges and contours and improves its ability to capture irregular shapes and detailed features. (3) The CA mechanism is introduced to strengthen the model’s focus on key features by capturing spatial and inter-channel relationships. This reduces the influence of irrelevant regions, such as the background, and improves target recognition and localization accuracy in complex backgrounds.

2. Materials

2.1. Data Collection

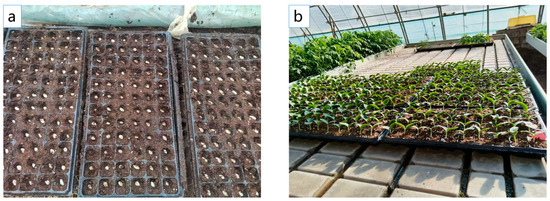

The experiment was conducted on 25 October 2024, in the Beijing Academy of Agriculture and Forestry Sciences seedling greenhouse. White-seeded pumpkin ‘Jingxin Rootstock No. 3’ was selected, and standard 50-cell (5 × 10) trays with dimensions (length × width × height) of 540 mm × 280 mm × 55 mm were used. A mixture of peat, vermiculite, and perlite in a 1:1:1 ratio was evenly blended, moistened with water, and filled into the trays. The sowing direction was set with the bud tip oriented at a 45° angle to the upper left corner, and the bud tip was positioned at the center of the cell. The sowing depth was 10 mm to 15 mm. The greenhouse temperature was maintained at 25 °C to 30 °C, and the relative humidity was kept at 60% to 80%. After 10 days of growth, 1000 white-seeded pumpkin seedlings were cultivated, as shown in Figure 1.

Figure 1. Seedling preparation. (a) Directional sowing; (b) white-seeded pumpkin rootstock seedlings.

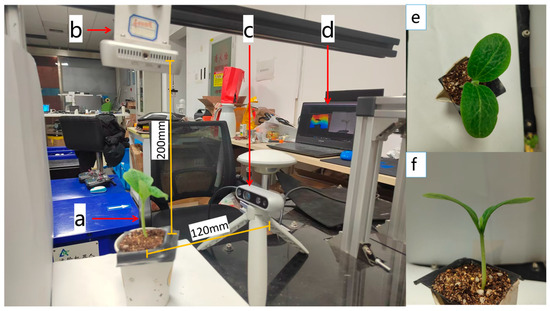

The image acquisition setup consisted of two RealSense D435i depth cameras, a laptop, a support frame, and seedlings, as shown in Figure 2. Single seedlings were cut from the trays using scissors, and seedling (a) was placed on the platform. The top-view camera (b) was vertically installed 200 mm above the seedling, while the side-view camera (c) was horizontally installed 120 mm away from the seedling. The depth cameras were connected to the laptop (d) via Type-C USB 3.0 cables. The cameras were programmatically controlled to capture images at a resolution of 640 × 480, which were saved in JPG format.

Figure 2. Image acquisition setup. (a) White-seeded pumpkin seedling; (b) top-view RealSense camera; (c) side-view RealSense camera; (d) laptop; (e) top-view perspective; (f) side-view perspective.

Image acquisition began on 4 November 2024, with data collected during three time periods: 9:00 a.m.–11:30 a.m., 2:00 p.m.–5:30 p.m., and 7:00 p.m.–11:00 p.m. Collecting data at different times covered the seedlings under varying lighting and temperature conditions and ensured image diversity. After samples with improper handling or poor quality were excluded, a total of 2000 qualified images were retained, comprising 1000 top-view and 1000 side-view images. The dataset was randomly partitioned into training, validation, and test sets at a ratio of 8:1:1.
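The 8:1:1 partition can be illustrated with a short script. This is a minimal sketch only: the function name, the fixed random seed, and the file-name pattern are assumptions made for illustration and are not details reported in this study.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Randomly partition items into train/val/test subsets by ratio."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed (assumed) so the split is repeatable
    rng.shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Hypothetical file names standing in for the 2000 retained images.
images = [f"img_{i:04d}.jpg" for i in range(2000)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 1600 200 200
```

With 2000 images, this yields 1600 training, 200 validation, and 200 test images.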

2.2. Data Processing

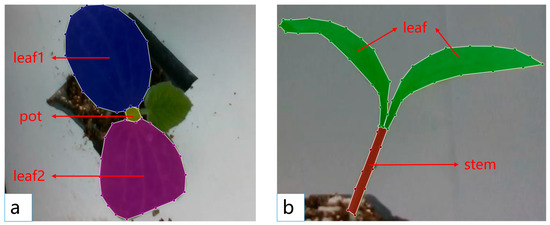

LabelMe was used for image annotation in the detection and segmentation tasks [21], with the ultimate goal of identifying the deflection angle of the cotyledons and the separation point between the stem and leaves in the images. In the top view, the cotyledon 1 (leaf1), cotyledon 2 (leaf2), and growing point (pot) regions of the seedlings were annotated. By comparing the sizes of leaf1 and leaf2, the cotyledon with the larger pixel area was designated as the reference cotyledon. The geometric centers of the reference cotyledon and the growing point region were obtained, and the angle between the line connecting these two points and the vertical direction was defined as the cotyledon deflection angle. In the side view, the two cotyledons (leaf) and the seedling stem (stem) regions were annotated. The overlapping point between the seedling stem region and the two cotyledon regions was identified as the stem–cotyledon separation point. The image annotation is illustrated in Figure 3. The annotated files were saved in JSON format and subsequently converted into the TXT format required for YOLOv8 training through a program.

Figure 3. Seedling image annotation. (a) Top-view image; (b) side-view image.
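The JSON-to-TXT conversion can be sketched as follows. The sketch assumes the usual LabelMe layout (`imageWidth`, `imageHeight`, and a `shapes` list of labeled polygons) and the YOLO segmentation label layout (one line per object: a class index followed by normalized polygon coordinates). The class-index order in `CLASS_IDS` and the function name are hypothetical; the conversion program actually used in this study is not described.

```python
import json

# Class order is an assumption; the text names the labels but not their indices.
CLASS_IDS = {"leaf1": 0, "leaf2": 1, "pot": 2, "leaf": 3, "stem": 4}


def labelme_to_yolo_lines(annotation):
    """Convert one LabelMe annotation dict into YOLO segmentation label lines."""
    width = annotation["imageWidth"]
    height = annotation["imageHeight"]
    lines = []
    for shape in annotation["shapes"]:
        class_id = CLASS_IDS[shape["label"]]
        coords = []
        for x, y in shape["points"]:
            # Polygon vertices are normalized to [0, 1] by the image size.
            coords.append(f"{x / width:.6f}")
            coords.append(f"{y / height:.6f}")
        lines.append(" ".join([str(class_id)] + coords))
    return lines


# Minimal hand-made annotation in the LabelMe JSON layout (not real data).
example = json.loads("""
{"imageWidth": 640, "imageHeight": 480,
 "shapes": [{"label": "pot", "shape_type": "polygon",
             "points": [[320, 240], [330, 240], [325, 250]]}]}
""")
for line in labelme_to_yolo_lines(example):
    print(line)
```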

3. Methods

3.1. Improved YOLOv8-SDC Network Model

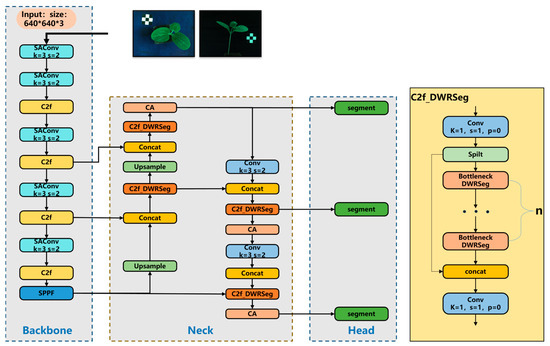

The YOLOv8 model retains the same fundamental principles as its predecessors, with the Ultralytics team introducing new improvement modules based on YOLOv5 to further enhance the model’s detection performance. Compared to two-stage detection algorithms such as Faster R-CNN and Mask R-CNN [22,23], YOLOv8 offers faster inference speed and end-to-end training capabilities, particularly excelling in real-time detection scenarios [24,25,26,27,28]. The YOLOv8 network model primarily consists of four parts: Input, Backbone, Neck, and Head. YOLOv8n-seg is a fast and high-precision instance segmentation algorithm that has shown significant effectiveness in plant segmentation tasks [29]. It is used as the base model in this study, and the improved model is named YOLOv8-SDC. Its network structure is illustrated in Figure 4.

Figure 4. YOLOv8-SDC model.

This paper proposes three critical improvements to the base YOLOv8n-seg model: (1) replacement of standard convolutional layers with SAConv modules in the backbone network to enhance receptive field adaptation, (2) substitution of the original c2f modules with c2f_DWRSeg blocks in the neck network for improved feature fusion, and (3) integration of CA mechanisms prior to each segmentation module output for optimized feature representation. The processing pipeline begins by resizing input seedling images (640 × 480 pixels) to a standardized 640 × 640 resolution through LetterBox transformation while preserving the original aspect ratio via gray padding. The enhanced architecture then performs multi-scale feature extraction through the modified backbone network to capture hierarchical features, while the neck layer enhances target discriminability. The detection heads output predicted bounding boxes and class information, while the segmentation heads generate prototype masks and coefficient matrices. This integrated system ultimately produces detection boxes, class labels, and corresponding binarized masks, achieving real-time, end-to-end instance segmentation while maintaining high precision.
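The LetterBox step can be made concrete with a small calculation. The sketch below only computes the resized dimensions and padding. The function name is hypothetical, and splitting the padding evenly between the two sides is an assumption about how the transformation is configured.

```python
def letterbox_params(width, height, target=640):
    """Return the resized size and per-side padding for a square letterbox."""
    # A single scale factor for both axes preserves the aspect ratio.
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h
    # Padding is split between the two sides of each axis (assumed centered).
    left, top = pad_w // 2, pad_h // 2
    return (new_w, new_h), (left, top, pad_w - left, pad_h - top)


size, padding = letterbox_params(640, 480)
print(size, padding)  # (640, 480) (0, 80, 0, 80)
```

Under these assumptions, a 640 × 480 image is not rescaled; it receives 80 rows of gray padding above and below to reach 640 × 640.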

3.2. SAConv Module and Its Components

3.2.1. Introduction to the SAConv Module

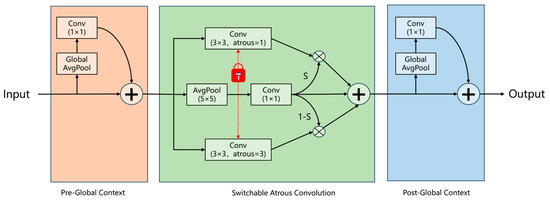

Wang et al. [30] proposed a novel convolutional module, SAConv, for semantic segmentation tasks. This module replaces the conventional Conv2d layers and consists of three key components: the SAC component and two global context modules positioned before and after it, as illustrated in Figure 5. During the feature extraction phase, the network progressively downsamples the input through multiple stages, each containing several SAConv modules. The core innovation lies in its switchable atrous rate mechanism, which dynamically adjusts the convolutional receptive field. This mechanism learns path weights to automatically select optimal feature extraction patterns. Each SAConv module is followed by batch normalization and a SiLU activation function, effectively preserving local detailed features while enhancing multi-scale contextual awareness. The feature maps undergo systematic downsampling from an initial resolution of 320 × 320 (stride = 2) to 20 × 20 (stride = 32), ultimately generating a three-level feature pyramid at resolutions of 80 × 80, 40 × 40, and 20 × 20.

Figure 5. SAConv structure.

The global context module processes the input feature map. First, the feature map undergoes global average pooling, followed by a 1 × 1 convolution. The input feature map and the output of the 1 × 1 convolution are then fused through addition to integrate features from different layers. After this processing, the fused features enter the SAC stage. One branch applies two 3 × 3 convolutional kernels with dilation rates of 1 and 3, enabling switching between different dilation rates to expand the receptive field of the convolutional kernels and capture larger regional information. The other branch performs 5 × 5 average pooling on the fused feature map to further extract the average features of local regions, followed by a convolution operation. The switch (S) in the figure determines the contribution ratio of features from each dilation rate through weights, and these features are then weighted and fused. The two dilated convolutions share the same weights, which reduces the number of parameters while still allowing the receptive field to be adjusted dynamically. The addition operation combines the convolution results from different dilation rates and the convolution results after average pooling. Finally, the features enter the second global context module, which processes them in the same way to produce the final output.

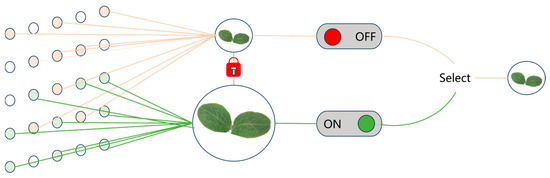

3.2.2. Principles and Mechanisms of SAC Convolution

SAC is an advanced convolution mechanism primarily designed to enhance feature extraction capabilities in object detection and segmentation tasks. The core idea of SAC is to apply convolutions with different “dilation rates” to the same feature map, as shown in Figure 6. Dilated convolution expands the receptive field by inserting “holes” into the convolutional kernel without increasing the number of parameters or computational cost, allowing SAC to capture features at different scales. SAC also incorporates a unique design: a “switch function” determines how to combine the results of convolutions with different dilation rates. This switch is “spatially dependent”, as each position in the feature map can have its own switch to determine the appropriate dilation rate. This enhances the network’s flexibility in handling objects of varying sizes and scales.

Figure 6. Principle of the SAConv mechanism.
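The switching behavior described above can be summarized in one expression. The form below follows the commonly cited formulation of switchable atrous convolution and is given as a sketch: x is the input feature map, w the shared 3 × 3 weights, S(x) the spatially dependent switch, and r the larger dilation rate (r = 3 in this model). The learnable weight offset Δw is an assumption carried over from that formulation and is not described in this section.

```latex
y = S(x) \cdot \mathrm{Conv}(x, w, 1) + \bigl(1 - S(x)\bigr) \cdot \mathrm{Conv}(x, w + \Delta w, r)
```

Where S(x) is close to 1, the output is dominated by the branch with dilation rate 1; where it is close to 0, the branch with dilation rate r dominates.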

3.3. Introduction to the c2f_DWRSeg Module

The c2f_DWRSeg module is designed to extract multi-scale features more efficiently. It first adjusts the number of input channels using a 1 × 1 convolution and then processes the feature map through multiple Bottleneck_DWRSeg modules. Finally, the output feature maps from the main and residual branches are concatenated and passed through a convolution operation to produce the final feature map. This design improves the model’s performance in visual tasks by combining local and global information, especially in scenarios that demand efficient computation and fine-grained feature extraction.

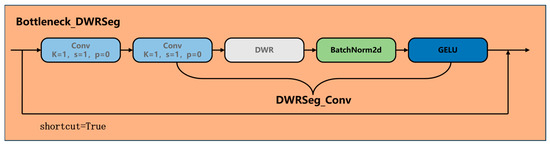

3.3.1. Introduction to the Bottleneck_DWRSeg Module

The Bottleneck_DWRSeg module architecture is illustrated in Figure 7. Its core design combines depthwise separable convolution with residual connections. The module initially employs a 1 × 1 convolution for channel dimension reduction, followed by a 3 × 3 depthwise separable convolution for spatial feature extraction, which significantly reduces computational complexity. Finally, a 1 × 1 convolution restores the channel dimension while incorporating residual connections.

Figure 7. Bottleneck_DWRSeg structure.
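The saving from depthwise separable convolution can be illustrated by counting weights. The sketch below compares a standard 3 × 3 convolution with a depthwise 3 × 3 convolution followed by a 1 × 1 pointwise convolution. The channel count of 64 is an arbitrary illustrative value, not a figure from the model, and biases are ignored.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


# 64 input and output channels: an arbitrary illustrative choice.
standard = conv_params(64, 64, 3)
separable = depthwise_separable_params(64, 64, 3)
print(standard, separable, round(standard / separable, 2))  # 36864 4672 7.89
```

For this example, the separable form uses roughly one-eighth of the weights of the standard convolution.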

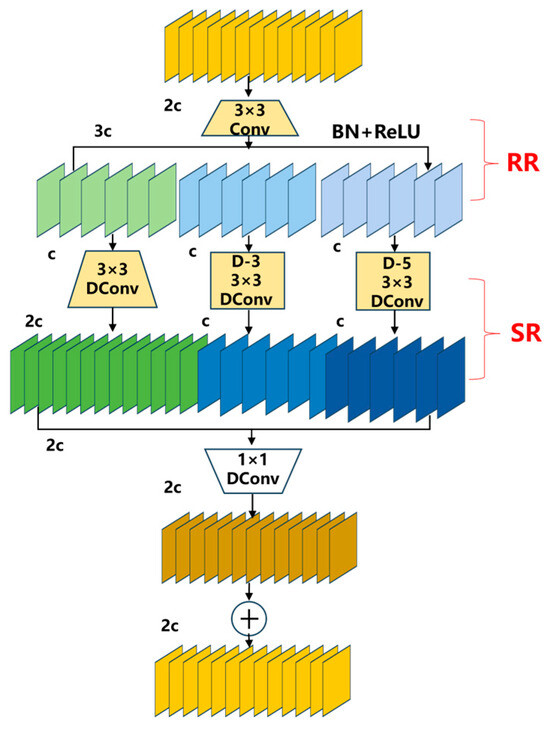

3.3.2. Introduction to the DWR Module

The DWR module [31] is shown in Figure 8. It is an efficient convolutional module that combines depthwise separable convolution and residual connections. First, the DWR module processes the input feature map using a standard 3 × 3 convolution. After the convolution operation, the feature map undergoes batch normalization and ReLU activation to further adjust its distribution and introduce nonlinear transformations. The convolution results are then split into multiple branches, each applying a dilated convolution with a different dilation rate (e.g., d = 1, d = 3, d = 5). Next, the branch outputs are concatenated, and the concatenated feature map is processed by a 1 × 1 convolution and further normalized using BN. Finally, through residual connections, the addition operation enables information sharing between the input and output feature maps, which helps mitigate the vanishing gradient problem and facilitates network training.

Figure 8. DWR structure.
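The effect of the dilation rate on the receptive field can be checked with the standard relation k_eff = k + (k − 1)(d − 1), where k is the kernel size and d the dilation rate. The short sketch below applies it to the example rates named above; the function name is illustrative.

```python
def effective_kernel_size(kernel, dilation):
    """Span covered by a dilated kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)


for d in (1, 3, 5):
    print(d, effective_kernel_size(3, d))
# 1 3
# 3 7
# 5 11
```

A 3 × 3 kernel therefore covers 3 × 3, 7 × 7, and 11 × 11 regions at d = 1, 3, and 5, with the same nine weights in each case.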

The DWR module proposes a two-step residual feature extraction method to enhance multi-scale information capture efficiency for real-time segmentation tasks. This approach consists of two distinct phases: Regional Residualization and Semantic Residualization. During the Regional Residualization phase, the input feature maps are first divided into groups, with each group processed by depthwise separable dilated convolutions employing different dilation rates. This architecture enables an adaptive perception of various seedling morphologies, ensuring accurate identification regardless of leaf shapes (including elliptical forms and other configurations). The Semantic Residualization phase subsequently generates concise representations of the regionally residualized features, precisely extracting seedling edges and contour information. This processing maintains clear segmentation boundaries even when confronted with irregular leaf margins. The phase employs depthwise separable dilated convolution with a single dilation rate for semantic filtering, effectively eliminating redundant information while preserving critical features. The final output is produced through feature concatenation.

3.4. Introduction to the CA Mechanism Module

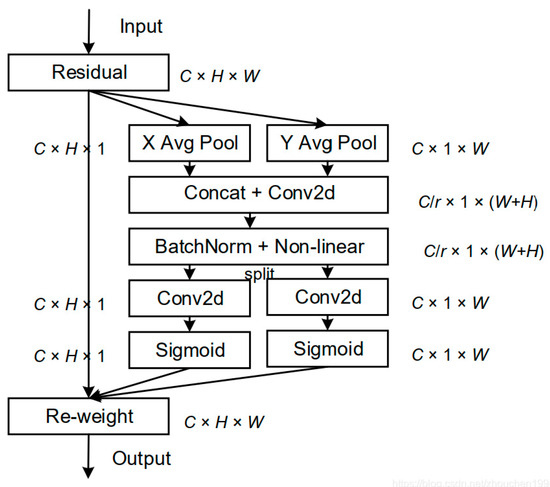

The CA mechanism is a feature enhancement mechanism that dynamically adjusts channel weights to improve feature representation [32]. The module initially performs coordinate-wise dimension reduction on the input features, conducting global pooling operations separately along the horizontal and vertical axes to generate orientation-aware feature descriptors. Subsequently, these descriptors undergo convolutional transformation followed by Sigmoid activation to produce attention weight maps. Ultimately, these weight maps are multiplied with the original features to achieve spatial-channel collaborative optimization.

The CA module encodes precise positional information through two key steps, coordinate information embedding and coordinate attention generation, as illustrated in Figure 9.

Figure 9. Structure of the CA mechanism.

First, regarding coordinate information embedding, traditional global pooling operations lose positional information. The CA module addresses this issue by decomposing global pooling into two one-dimensional feature encoding operations. Specifically, for the input feature map X, pooling kernels of size (H, 1) and (1, W) are applied to encode each channel along the horizontal and vertical directions, respectively. The output of the c-th channel at height h is expressed by the following Formula (1).
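In the coordinate attention formulation of [32], which this section follows, Formula (1) can be written as below. Here x_c(h, i) denotes the value of channel c of X at row h and column i; this indexing notation is assumed from that formulation rather than defined in the present text.

```latex
z_c^h(h) = \frac{1}{W} \sum_{0 \le i < W} x_c(h, i) \tag{1}
```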

The output of the c-th channel at width w is expressed by the following Formula (2).
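Under the same assumed notation, Formula (2) averages each column of channel c over the H rows:

```latex
z_c^w(w) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, w) \tag{2}
```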

This operation generates a pair of direction-aware attention maps, which capture spatial long-range dependencies while preserving precise positional information, thereby helping the network locate targets more accurately.

The one-dimensional pooling operations described above correspond to the X Avg Pool and Y Avg Pool sections in Figure 9. Next, a coordinate attention generation operation is designed to better utilize the generated feature maps. Specifically, the two generated feature maps are concatenated and then transformed via a shared 1 × 1 convolution to generate an intermediate feature map f, as shown in Formula (3).
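Written in the notation of the coordinate attention formulation [32], Formula (3) takes the form below. The square brackets denote concatenation of the two pooled maps, written here as z^h and z^w, along the spatial dimension; these symbols are assumed from that formulation.

```latex
f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right) \tag{3}
```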

Here, F1 represents the 1 × 1 convolution, and δ is the activation function. Next, f is split along the spatial dimension into fh and fw, which are then transformed into feature maps with the same number of channels as the input X through two separate 1 × 1 convolutions, Fh and Fw, as shown in Formulas (4) and (5).
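With fh, fw, gh, and gw written as f^h, f^w, g^h, and g^w, Formulas (4) and (5) can be sketched as follows, again assuming the notation of [32]:

```latex
\begin{align}
g^h &= \sigma\left(F_h\left(f^h\right)\right) \tag{4} \\
g^w &= \sigma\left(F_w\left(f^w\right)\right) \tag{5}
\end{align}
```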

Here, σ is the activation function. Finally, gh and gw are expanded into attention weights, and the final output of the CA module is expressed as shown in Formula (6).
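In the same assumed notation, with x_c(i, j) and y_c(i, j) the input and output values of channel c at row i and column j, Formula (6) reads:

```latex
y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j) \tag{6}
```

Each output value is thus the input value scaled by one weight for its row and one weight for its column.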

Through the above design, the CA module simultaneously implements attention mechanisms in both horizontal and vertical directions while retaining channel attention characteristics. This structure captures long-range dependencies and precise positional information and effectively enhances the model’s ability to locate and recognize targets. Moreover, its lightweight design ensures minimal computational overhead, making it highly suitable for integration into existing networks.

In seedling segmentation tasks, the CA mechanism enhances the model’s ability to extract seedling features by adaptively adjusting channel weights, enabling better differentiation of seedling targets from complex backgrounds. At the same time, the CA module utilizes precise positional information encoding to significantly enhance the localization accuracy of seedlings, particularly when seedling edges are blurry, or shapes are irregular, enabling the more accurate capture of their contours and spatial positions. This combination of background suppression and precise localization makes the CA mechanism excel in seedling segmentation tasks.

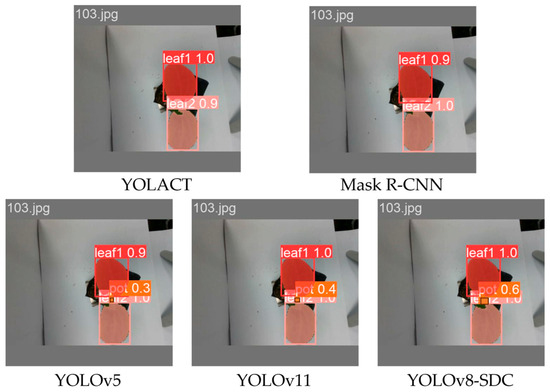

3.5. Model Testing

To compare the improved model with other models, YOLOv8-SDC, YOLOv5, YOLACT, Mask R-CNN, and YOLOv11 were evaluated, and the optimal weight file of each model was selected for testing. During testing, each image was automatically assigned to one of two categories: top view or side view. An image containing a growth point region is treated as a top view. In this case, the two cotyledons are identified as leaf1 and leaf2, and the cotyledon with the larger pixel area is defined as the reference cotyledon. The geometric centers of the growth point and the reference cotyledon are located automatically, and the angle between the line connecting these two points and the vertical direction is taken as the cotyledon deflection angle. An image containing a seedling stem region is treated as a side view. In this case, the two cotyledons are identified as leaf, and the overlap point between the seedling stem region and the two cotyledon regions is located automatically as the stem–cotyledon separation point. To further verify the recognition accuracy of the model, the growth point center and the stem–cotyledon separation point were also located manually in the original top view and the test image, and the model results were then checked against these manual measurements.
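The top-view angle computation can be sketched as follows. This is an illustrative reading of the procedure, not the implementation used in this study. Regions are assumed to be lists of (x, y) pixel coordinates, the function names are hypothetical, and the sign convention (0° pointing up in the image, positive toward the right) is an assumption, since the text specifies only the angle to the vertical direction.

```python
import math


def centroid(pixels):
    """Geometric center of a region given as (x, y) pixel coordinates."""
    xs = [p[0] for p in pixels]
    ys = [p[1] for p in pixels]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def deflection_angle(leaf1, leaf2, growing_point):
    """Angle in degrees between the growing-point-to-cotyledon line and the vertical."""
    # The cotyledon with the larger pixel area is the reference cotyledon.
    reference = leaf1 if len(leaf1) >= len(leaf2) else leaf2
    cx, cy = centroid(reference)
    gx, gy = centroid(growing_point)
    dx = cx - gx
    dy = gy - cy  # image y grows downward, so flip it to make "up" positive
    return math.degrees(math.atan2(dx, dy))


# Synthetic regions (not real data): leaf1 lies up and to the right of the growing point.
growing_point = [(x, y) for x in range(10, 12) for y in range(10, 12)]
leaf1 = [(x, y) for x in range(18, 22) for y in range(0, 4)]
leaf2 = [(x, y) for x in range(0, 2) for y in range(18, 20)]
angle = deflection_angle(leaf1, leaf2, growing_point)
print(round(angle, 1))
```

In this synthetic case the reference cotyledon is leaf1, whose center is offset equally to the right of and above the growing point, so the angle is about 45°.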

4. Experiments

4.1. Experimental Environment

The experiments were performed on a Windows 11 operating system, featuring an Intel Xeon Platinum 8383C CPU, an NVIDIA GeForce RTX 4090 GPU, and 256 GB of RAM. The programming language used was Python 3.9, with PyTorch 1.3.1 as the deep learning framework. The CUDA and GPU acceleration library cuDNN versions were 12.8 and 8.9, respectively. The training input size was set to 640 × 480 pixels, with a batch size of 64, 32 worker threads, and 1000 iterations.

4.2. Evaluation Metrics

The model’s performance was assessed using metrics chosen for their relevance to the practical application of the YOLOv8 network model: precision, recall, mean average precision, frames per second, and the size of the weight file. They are defined as follows:

Precision: The proportion of instances predicted as positive that are actually positive. It measures the accuracy of the model’s predictions; the fewer incorrect positive predictions, the higher the precision.
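In terms of prediction counts, precision is commonly written as below, where TP and FP are the numbers of true-positive and false-positive predictions. These symbols are standard notation assumed here; they are not defined elsewhere in the text.

```latex
P = \frac{TP}{TP + FP}
```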

Recall: The proportion of actual positive instances correctly predicted as positive. It measures the model’s coverage of positive instances; the fewer positive instances missed, the higher the recall.
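Recall is commonly written in the corresponding form, where TP is the number of true-positive predictions and FN is the number of positive instances the model missed (false negatives). As with precision, this notation is assumed rather than taken from the text.

```latex
R = \frac{TP}{TP + FN}
```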

Mean Average Precision: The mean of the average precision (AP) values across all categories, usually reported at a given IoU threshold; for example, mAP@0.5 is the mAP at an IoU threshold of 0.5. AP is the area under the precision–recall curve and reflects the balance between precision and recall for a single category across different confidence thresholds. As the average of AP over all categories, mAP measures the overall balance between detection precision and recall and is the core indicator for comprehensively evaluating the model’s performance in target detection.
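Following the description above, AP and mAP are commonly expressed as below, where P(R) is precision as a function of recall and AP_i is the AP of the i-th of N categories. This is the conventional form and is given as a sketch; the indexing is an assumption.

```latex
AP = \int_0^1 P(R)\, dR, \qquad
mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i
```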

Here, N represents the number of categories, and AP denotes the average precision of each category.

Frames Per Second: The inference speed of the model in real-time detection is measured by the number of image frames processed per second. It evaluates the model’s inference efficiency; a higher FPS indicates the model is more suitable for real-time applications.

4.3. Ablation Experiments on YOLOv8-SDC

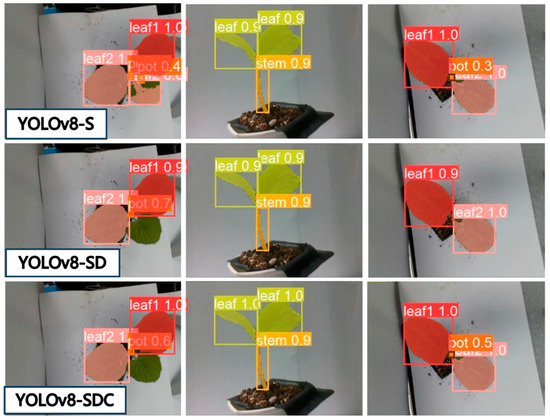

Using YOLOv8n-seg as the base network, modifications were applied to both the backbone and neck networks. To evaluate the effectiveness of these improvements in practical applications, we designed the following ablation experiments. First, the original YOLOv8n-seg model was designated as YOLOv8. Subsequently, the SAConv module was integrated into the YOLOv8n-seg network, replacing the original convolutional layers, enabling the network to dynamically adjust the receptive fields of convolutional kernels for enhanced seedling shape feature extraction, resulting in a network named YOLOv8-S. Following this, the c2f module in YOLOv8-S was replaced with the c2f_DWRseg module, allowing the network to better adapt to perception accuracy for different cotyledons, growth points, and stem edges/contours, yielding a network termed YOLOv8-SD. Lastly, the CA mechanism was incorporated into YOLOv8-SD, enabling the network to reduce background interference and improve the localization and identification of seedling grafting features, producing the final network, YOLOv8-SDC. The experimental results are presented in Table 1.

Table 1.

Ablation experiment results.

As shown in Table 1, YOLOv8-S replaces the original convolution with SAConv (switchable atrous convolution), which makes feature extraction more flexible and adaptive and thereby improves its accuracy and efficiency. At an IoU threshold of 0.5, the Box and Mask mAP for seedling detection improved by 0.3 and 0.7 percentage points, respectively, compared to the original model. In YOLOv8-SD, the DWR module added to the YOLOv8-S network combines local and global information, improving computational efficiency and fine-grained feature extraction. At an IoU threshold of 0.5, the Box and Mask mAP improved by 0.3 and 1.4 percentage points, respectively. By incorporating the CA (coordinate attention) mechanism into the YOLOv8-SD network, the model not only captures inter-channel information but also considers direction-aware positional information, aiding target localization and recognition. At an IoU threshold of 0.5, the Box and Mask mAP improved by 0.9 and 1.7 percentage points, respectively. Compared to the original model, YOLOv8-SDC reduces GFLOPs from 12.0 to 11.3, lowering model complexity and computational cost. The image processing speed increases from approximately 169 FPS to 200 FPS, an 18.34% improvement in segmentation speed. The model weight size increases from 6.8 MB to 8.3 MB, which still meets the requirements for deployment on edge devices. At the same time, the detection (Box) mAP for seedlings increases from 97.7% in the original model to 98.6%, and the segmentation (Mask) mAP increases from 97.4% to 99.1%.
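The relative changes quoted in this paragraph can be checked with simple arithmetic. The sketch below uses only the before/after values stated in the text; the helper names are illustrative. Relative change is reported in percent, while accuracy gains are reported as differences in percentage points.

```python
def relative_change_pct(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


def point_gain(before, after):
    """Absolute gain between two percentages, in percentage points."""
    return after - before


fps_gain = relative_change_pct(169, 200)         # speed: 169 FPS -> 200 FPS
gflops_change = relative_change_pct(12.0, 11.3)  # compute: 12.0 -> 11.3 GFLOPs
size_change = relative_change_pct(6.8, 8.3)      # weights: 6.8 MB -> 8.3 MB
box_gain = point_gain(97.7, 98.6)                # Box mAP@0.5
mask_gain = point_gain(97.4, 99.1)               # Mask mAP@0.5

print(f"FPS: {fps_gain:+.2f}%")          # FPS: +18.34%
print(f"GFLOPs: {gflops_change:+.2f}%")  # GFLOPs: -5.83%
print(f"Size: {size_change:+.2f}%")      # Size: +22.06%
print(f"Box: {box_gain:+.1f} points, Mask: {mask_gain:+.1f} points")
```

The 18.34% speed figure follows from (200 − 169) / 169, and the 0.9 and 1.7 point gains follow directly from the reported mAP values.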

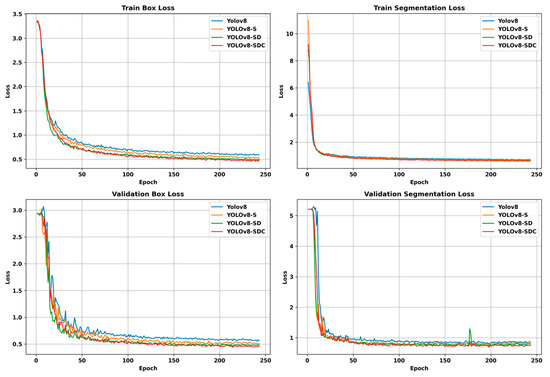

Figure 10 compares the loss curves of the YOLOv8, YOLOv8-S, YOLOv8-SD, and YOLOv8-SDC models. The training and validation losses gradually stabilize, indicating that the models converge during training. The loss curve of the YOLOv8-SDC model consistently remains the lowest, demonstrating its superior convergence performance. Moreover, the maximum epoch value on the x-axis is 250, and the YOLOv8-SDC loss stabilizes before reaching this maximum, indicating that the model reaches its best performance with fewer training epochs and further supporting its adaptability and robustness.

Figure 10.

Comparison of training and validation set model loss.

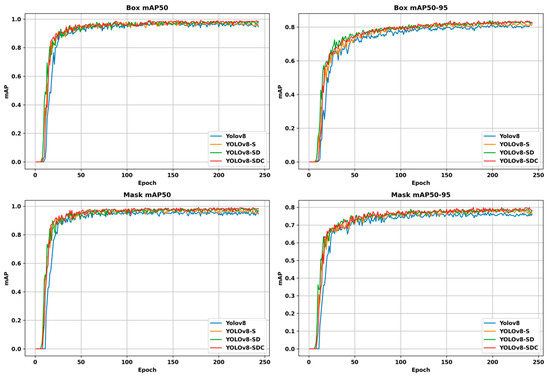

As illustrated in Figure 11, the YOLOv8-SDC model demonstrates the best performance in both Box and Mask metrics for mAP@50 and mAP@50-95. The corresponding curves consistently remain at the top, indicating that the model achieves the highest precision in both object detection and segmentation tasks.

Figure 11.

Comparison of model mAP results.

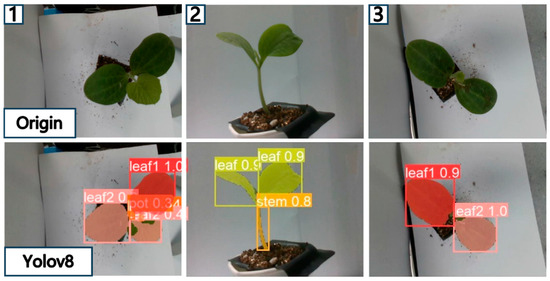

The YOLOv8, YOLOv8-S, YOLOv8-SD, and YOLOv8-SDC models were used to detect and segment seedlings from dual perspectives; the results are shown in Figure 12. The unmodified model incorrectly detects the unlabeled true cotyledon in Image 1 as “leaf2” and fails to detect and segment the “pot” region in Image 3, although it handles Image 2 correctly. With the YOLOv8-S model, the “pot” region in Image 3 is successfully identified and segmented, but the unlabeled true cotyledon in Image 1 is still misidentified as “leaf2”. The YOLOv8-SD model successfully distinguishes the true cotyledon in Image 1 but fails to detect the “pot” region in Image 3. Only the improved YOLOv8-SDC model achieves accurate detection and segmentation for all three images (Images 1–3), demonstrating its advantage in detecting and segmenting seedlings from dual perspectives.

Figure 12.

Actual inference results of the models. Image 1: top view with true cotyledons; Image 2: side view; Image 3: top view without true cotyledons.

4.4. Comparison Results and Analysis of the YOLOv8-SDC Model

YOLOv8-SDC was compared with YOLOv5, YOLACT, Mask R-CNN, and YOLOv11 to evaluate the improved model against other models. All models were trained in the same environment with identical parameter configurations on the same dataset to ensure a fair comparison. The comparison results are shown in Table 2.

Table 2.

Model comparison results.

In terms of segmentation speed, the improved YOLOv8-SDC model is the fastest, reaching 200 FPS, which meets the requirements for real-time seedling detection and segmentation. Its weight size is 8.3 MB, making it suitable for deployment on edge computing platforms. In contrast, Mask R-CNN and YOLACT have significantly larger model weights, far exceeding those of the YOLO series models. This reflects the greater model complexity and computational burden they require to handle instance segmentation, rendering them unsuitable for deployment on mobile devices. YOLOv11 offers high segmentation speed and accuracy, with Box and Mask accuracies of 97.8% and 97.5%, respectively, but it simplifies the segmentation head in pursuit of speed, which loses edge detail, so its accuracy remains below that of the improved model. Finally, in terms of average precision for both Box and Mask, YOLOv8-SDC outperforms all other models. Although each model has its own features and strengths, YOLOv8-SDC is the best choice when detection and segmentation accuracy, segmentation speed, and model weight size are considered together. Visual comparisons of the models are shown in Figure 13.

Figure 13.

Model comparison results.

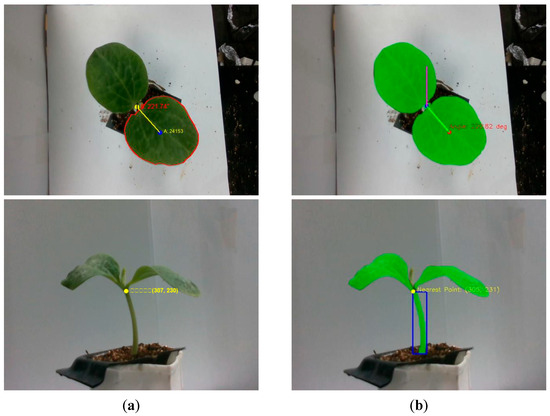

4.5. Characteristics of Rootstock Seedlings in Automatic Seedling-Feeding Operations

Automatic seedling-feeding operations require determining the cotyledon deflection angle and the coordinates of the stem–cotyledon separation point, which provide the foundational data for adjusting the cotyledon orientation and positioning the seedling height. The identification results of the cotyledon deflection angle in the top view and the stem–cotyledon separation point in the side view are shown in Figure 14.

Figure 14.

Test comparison results. (a) Manual testing; (b) model testing.
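As a purely illustrative sketch of how a deflection angle could be derived from segmentation output, the code below takes the centroids of two cotyledon masks and measures the angle of the line joining them relative to the image x-axis. This definition, the function names, and the list-of-rows mask format are assumptions made for illustration; they are not taken from the paper's post-processing.

```python
import math


def mask_centroid(mask):
    """Centroid (x, y) of a binary mask given as a list of rows of 0/1 values."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("mask contains no foreground pixels")
    return sum_x / count, sum_y / count


def deflection_angle_deg(c1, c2):
    """Angle of the line through c1 and c2 relative to the image x-axis.

    Image y grows downward, so dy is negated to give a counterclockwise angle.
    The result is folded into (-90, 90] because a line has no direction.
    """
    dx = c2[0] - c1[0]
    dy = c2[1] - c1[1]
    angle = math.degrees(math.atan2(-dy, dx))
    if angle > 90:
        angle -= 180
    elif angle <= -90:
        angle += 180
    return angle
```

With this convention, two centroids on the same image row give an angle of 0°, and a second centroid located up and to the right of the first gives a positive angle.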

Ten sets of top-view and side-view images of white-seed pumpkin rootstocks were randomly selected, and the results of manual measurements were compared with those of the model testing, as shown in Table 3.

Table 3.

Comparison of manual measurements and model testing results.

For top-view images of white-seed pumpkin seedlings, the proposed method identifies the cotyledon deflection angle with a maximum error of 3.23° and an average error of 1.52°. For side-view images, the maximum error in identifying the stem–cotyledon separation point is 4 pixels, with an average error of 1.8 pixels. These results meet the precision requirements for cotyledon deflection angle and stem–cotyledon separation point localization in automatic seedling-feeding operations.
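The maximum and average errors reported here are summary statistics of the absolute difference between manual and model measurements. A minimal sketch of that calculation follows; the function name is hypothetical, and the sample values in the usage note are placeholders rather than the entries of Table 3.

```python
def error_summary(manual, predicted):
    """Return (maximum, mean) absolute error between paired measurements."""
    if len(manual) != len(predicted) or not manual:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [abs(m - p) for m, p in zip(manual, predicted)]
    return max(errors), sum(errors) / len(errors)
```

For instance, placeholder angles of 10.0°, 20.0°, and 30.0° measured manually against model outputs of 11.0°, 18.0°, and 30.0° give a maximum error of 2.0° and an average error of 1.0°. The same function applies to pixel errors of the separation point.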

5. Discussion

The proposed model segments the various parts of the seedlings from dual perspectives. This paper proposes three key improvements to the base YOLOv8n-seg model. First, in the backbone, the original convolution is replaced with SAConv as the basic module. By applying convolutions with different dilation rates to the same input features and combining them using a switch function, the model can adaptively capture multi-scale feature information, enhancing the recognition accuracy and segmentation precision for targets in images. Second, in the neck network, c2f_DWRSeg replaces c2f, utilizing depthwise separable dilated convolutions for multi-scale feature extraction. Through an efficient two-step residual approach, the network can more flexibly adapt to segmentation tasks involving targets of varying shapes, improving the model’s precision in perceiving edges and contours. Finally, a CA mechanism module is added before the output of each segmentation module. By integrating channel attention and spatial positional information, the model can locate and identify target regions more accurately. Because the CA module is lightweight, it introduces almost no additional computational burden, making it well suited for integration into existing lightweight network architectures.
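To illustrate how coordinate attention combines channel and positional information, the following NumPy sketch pools the feature map along each spatial direction, passes the pooled encodings through a shared 1 × 1 convolution, and reweights the input with direction-aware attention weights. It is a simplified sketch under stated assumptions: batch normalization is omitted, ReLU stands in for the nonlinearity, and the weight matrices `w_reduce`, `w_h`, and `w_w` are hypothetical parameters rather than the trained weights of the proposed model.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def coordinate_attention(x, w_reduce, w_h, w_w):
    """Simplified coordinate attention on one feature map.

    x:        input feature map, shape (C, H, W)
    w_reduce: shared 1x1 convolution weights, shape (M, C)
    w_h, w_w: 1x1 convolution weights for each direction, shape (C, M)
    """
    _, h, _ = x.shape
    z_h = x.mean(axis=2)                     # (C, H): average over the width
    z_w = x.mean(axis=1)                     # (C, W): average over the height
    z = np.concatenate([z_h, z_w], axis=1)   # (C, H + W)
    f = np.maximum(w_reduce @ z, 0.0)        # shared 1x1 conv + ReLU, (M, H + W)
    f_h, f_w = f[:, :h], f[:, h:]            # split back into the two directions
    g_h = sigmoid(w_h @ f_h)                 # (C, H) attention along height
    g_w = sigmoid(w_w @ f_w)                 # (C, W) attention along width
    # Broadcast the two weight maps over the feature map and reweight it.
    return x * g_h[:, :, None] * g_w[:, None, :]
```

The output has the same shape as the input, which is why such a module can be inserted before a segmentation output without changing the surrounding layers.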

The improved model will be deployed on a vegetable-grafting robot in the future, which places high demands on its performance. First, the accuracy of recognizing the external features of the rootstock is the most important requirement, because it underpins successful grafting. Second, the mechanical grafting process must be completed in about 3 s, so detection speed is also critical. Finally, deployment on an edge computing platform must be considered. Based on these considerations, the improved YOLOv8-SDC network model was selected after comparing different network models. It performs best in both detection speed and average detection accuracy and can be deployed on an edge computing platform. The cotyledon deflection angle and the stem–cotyledon separation point obtained by post-processing were compared with manual measurements to verify the feasibility of the proposed method. In future studies, the obtained feature parameters will be further processed and combined with depth information to find the actual coordinates and obtain the true parameter values. In addition, we plan to test the improved model in the environment of automatic seedling removal from the plug tray so that it can work in the seedling growth environment. The proposed model provides a valuable reference for studying seedlings with irregular morphology in complex environments and promotes the development and application of fully automatic grafting machines.

6. Conclusions

This paper proposes an improved YOLOv8-SDC model for the feature detection and segmentation of melon rootstock seedlings to obtain the cotyledon deflection angle and the coordinates of the stem–cotyledon separation point. The following conclusions can be drawn from the experiments:

(1) Compared with the YOLOv5, YOLACT, Mask R-CNN, and YOLOv11 models, the improved YOLOv8-SDC model performs best. It has the highest segmentation speed among the compared models, about 18.34% higher than that of the original model. Most importantly, it has the highest average Box and Mask accuracies, at 98.6% and 99.1%, respectively. The weight file size of the improved model is 8.3 MB, which meets the requirements of edge computing platform deployment.

(2) The verification test of the cotyledon deflection angle and the stem–cotyledon separation point shows that the error is within the allowable range for grafting. The method therefore meets the accuracy requirements for both features during automatic seedling feeding and provides the prerequisite for precisely adjusting the cotyledon direction and the seedling height during the automatic seedling-feeding process of the grafting robot.

Author Contributions

Conceptualization, K.J. and K.G.; methodology, K.J. and K.G.; software, K.J.; validation, L.L., K.G. and K.J.; formal analysis, T.P.; investigation, T.P.; resources, Z.W. and K.G.; data curation, Z.W. and K.G.; writing – original draft preparation, L.L., K.G. and K.J.; writing – review and editing, L.L., K.G. and K.J.; visualization, T.P. and K.G.; supervision, K.J. and L.L.; project administration, K.J. and K.G.; funding acquisition, K.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 32171898), the National Key Research and Development Plan (2024YFD2000604), the Beijing Key Laboratory of Agricultural Intelligent Equipment Technology (PT2025-44), and the China National Agricultural Research System (CARS-25-07). We are also grateful to the reviewers for their valuable comments on improving the article.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Nomenclature

| Abbreviation/Symbol | Meaning |
| --- | --- |
| SAConv | Switchable Atrous Convolution |
| DWR | Dilation-wise Residual |
| CA | Coordinate Attention |
| mIoU | mean Intersection over Union |
| mAP | mean Average Precision |
| mR | mean Recall |
| CDA | Connected Domain Analysis |
| GAM | Global Attention Mechanism |
| ASPP | Atrous Spatial Pyramid Pooling |
| leaf1 | cotyledon 1 |
| leaf2 | cotyledon 2 |
| pot | growing point |
| leaf | cotyledons |
| stem | seedling stem |
| BN | Batch Normalization |
| RR | Region Residualization |
| SR | Semantic Residualization |
| CNN | Convolutional Neural Network |
| x_c | The c-th channel of the input feature map |
| z_c^h(h) | The horizontal feature encoding value of the c-th channel at the height coordinate h |
| z_c^w(w) | The vertical feature encoding value of the c-th channel at the width coordinate w |
| F_1 | 1 × 1 convolution |
| δ | activation function |
| f | feature map |
| g^h, g^w | attention weights along the height and width directions after expansion |
| FPS | Frames Per Second |
| AP | Average Precision |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).