EfficientDet-4 Deep Neural Network-Based Remote Monitoring of Codling Moth Population for Early Damage Detection in Apple Orchard

Abstract

1. Introduction

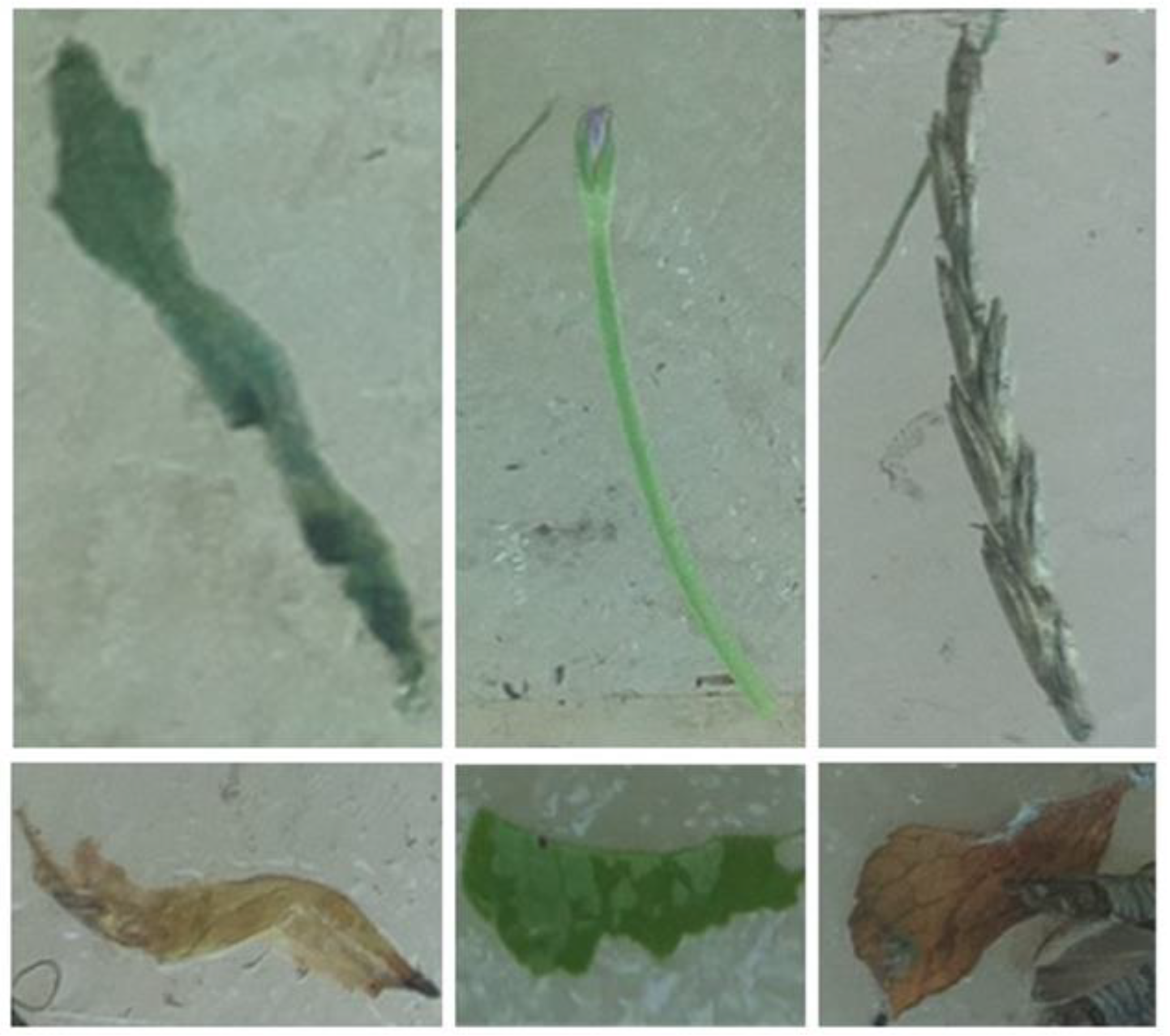

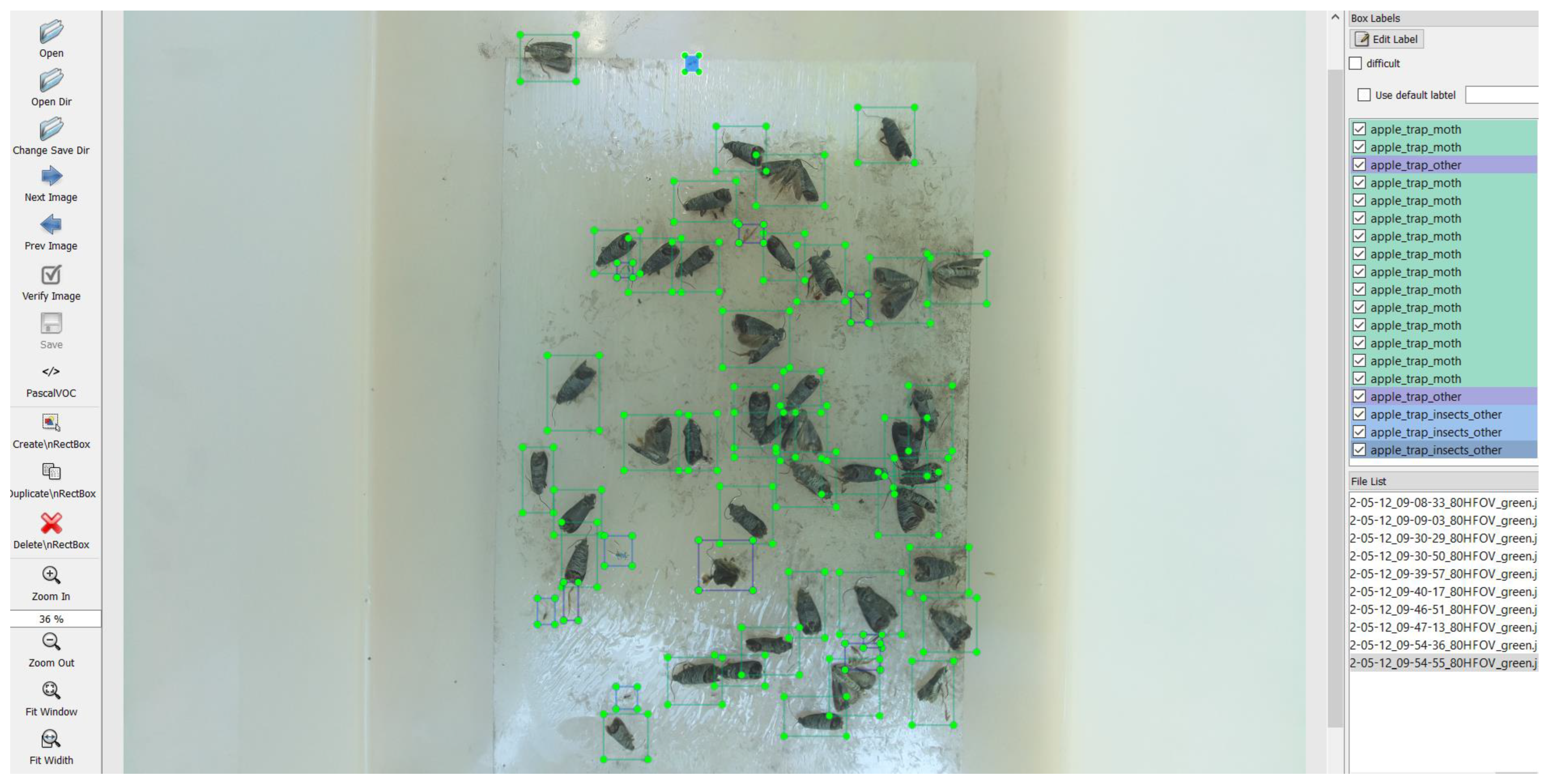

2. Materials and Methods

2.1. Smart Trap Prototype

2.2. Analytical Model

| Parameter | Explanation * |
|---|---|
| AP | AP @ IoU = 50% to 95% in steps of 5% |
| APIoU = 0.50 | AP @ IoU = 50% |
| APIoU = 0.75 | AP @ IoU = 75% |
| APs | AP for small objects: area < 32 × 32 |
| APm | AP for medium objects: 32 × 32 < area < 96 × 96 |
| APl | AP for large objects: area > 96 × 96 |
| ARmax1 | AR given 1 detection per image |
| ARmax10 | AR given 10 detections per image |
| ARmax100 | AR given 100 detections per image |
| ARs | AR for small objects: area < 32 × 32 |
| ARm | AR for medium objects: 32 × 32 < area < 96 × 96 |
| ARl | AR for large objects: area > 96 × 96 |
| AP_MOTH | AP for the class MOTH |
| Model validation accuracy ** | |
| Learning loss | The number of errors on the training dataset; indicates how well the deep learning model fits the training dataset. |
| Validation loss | The number of errors on the validation dataset; indicates how well the deep learning model performs on the validation dataset. |
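
The IoU thresholds and object-size ranges in the table above can be illustrated with a short Python sketch. It is not the evaluation code used in the study; the `Box` layout, the function names, and the handling of boxes whose area falls exactly on a boundary are assumptions made for illustration.

```python
from typing import List, Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def ap_iou_thresholds() -> List[float]:
    """The ten thresholds 0.50, 0.55, ..., 0.95 over which the headline AP is averaged."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


def size_bucket(box: Box) -> str:
    """Assign a box to the small / medium / large range used by APs, APm, APl.

    Assumption: an area exactly equal to a boundary goes to the larger bucket.
    """
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < 32 * 32:
        return "small"
    if area < 96 * 96:
        return "medium"
    return "large"
```

A detection counts as correct at a given threshold when its IoU with a ground-truth box of the same class reaches that threshold, so APIoU = 0.75 is a stricter criterion than APIoU = 0.50.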

| Metric and Formula * | Explanation ** |
|---|---|
| Accuracy $= \frac{TP + TN}{TP + TN + FP + FN}$ | General model performance across all classes. The proportion of accurate predictions to the total number of predictions. |
| Precision $= \frac{TP}{TP + FP}$ | Determines the model’s ability to correctly categorise a sample as Positive. The ratio of TP detections to all samples classified as Positive (whether correctly or incorrectly). |
| Recall $= \frac{TP}{TP + FN}$ | Determines the model’s capacity to identify Positive samples. The proportion of TP samples relative to the total number of Positive samples. As recall increases, more positive samples are identified. |
| F1 score $= \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | The harmonic mean of precision and recall, combining the two measures into one metric. |
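
As a minimal sketch of how the four metrics described above follow from confusion-matrix counts, the Python below uses the standard definitions. The `Counts` container, the function names, and the example numbers are hypothetical and do not come from the study.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """One-vs-rest confusion-matrix counts for a single class (hypothetical container)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives


def accuracy(c: Counts) -> float:
    total = c.tp + c.fp + c.fn + c.tn
    return (c.tp + c.tn) / total if total else 0.0


def precision(c: Counts) -> float:
    predicted_positive = c.tp + c.fp
    return c.tp / predicted_positive if predicted_positive else 0.0


def recall(c: Counts) -> float:
    actual_positive = c.tp + c.fn
    return c.tp / actual_positive if actual_positive else 0.0


def f1_score(c: Counts) -> float:
    p, r = precision(c), recall(c)
    # Harmonic mean of precision and recall.
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Made-up counts, for illustration only.
example = Counts(tp=8, fp=2, fn=2, tn=88)
```

Because F1 is a harmonic mean, it stays low whenever either precision or recall is low, which an arithmetic mean would not guarantee.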

3. Results and Discussion

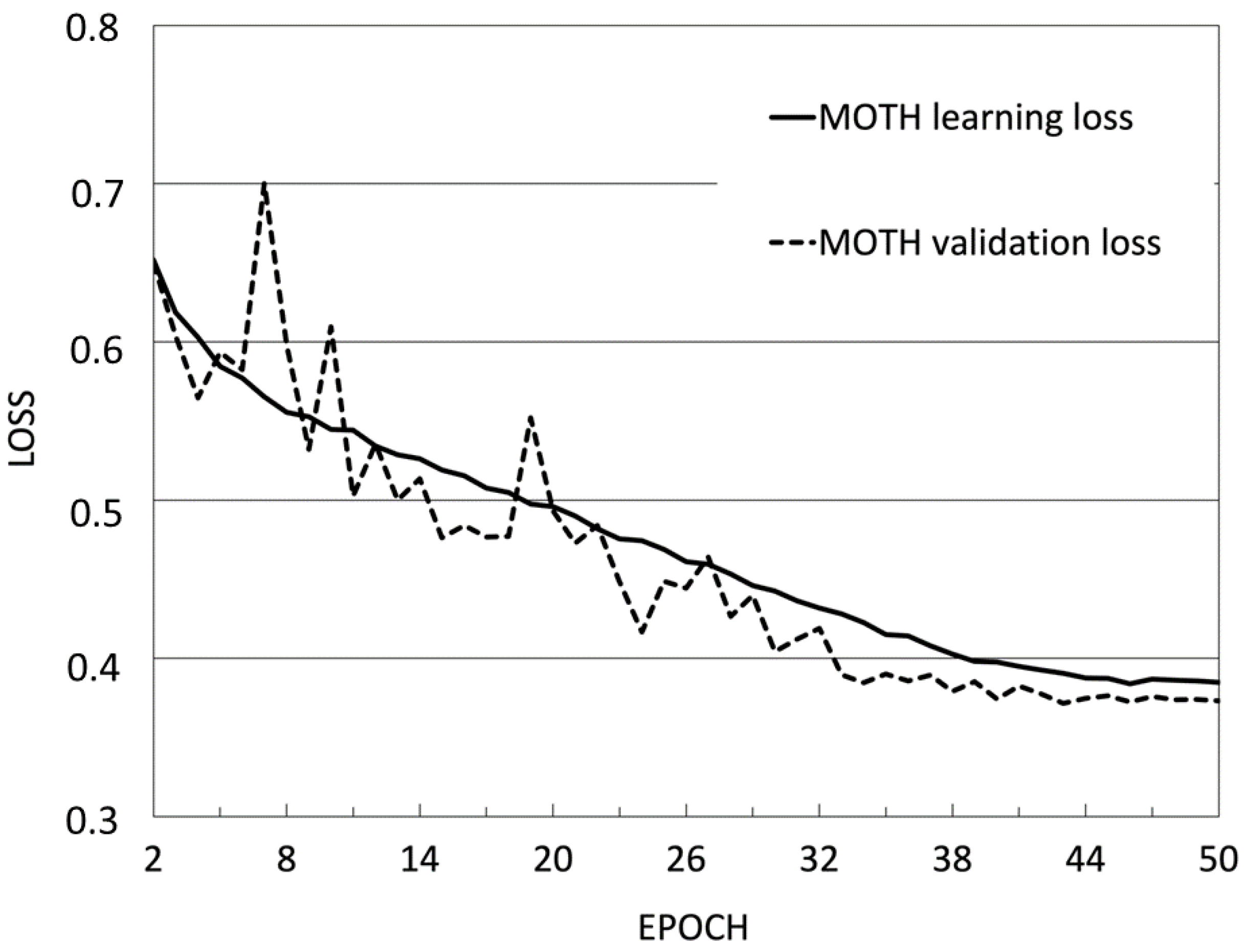

3.1. Analytical Model Performance

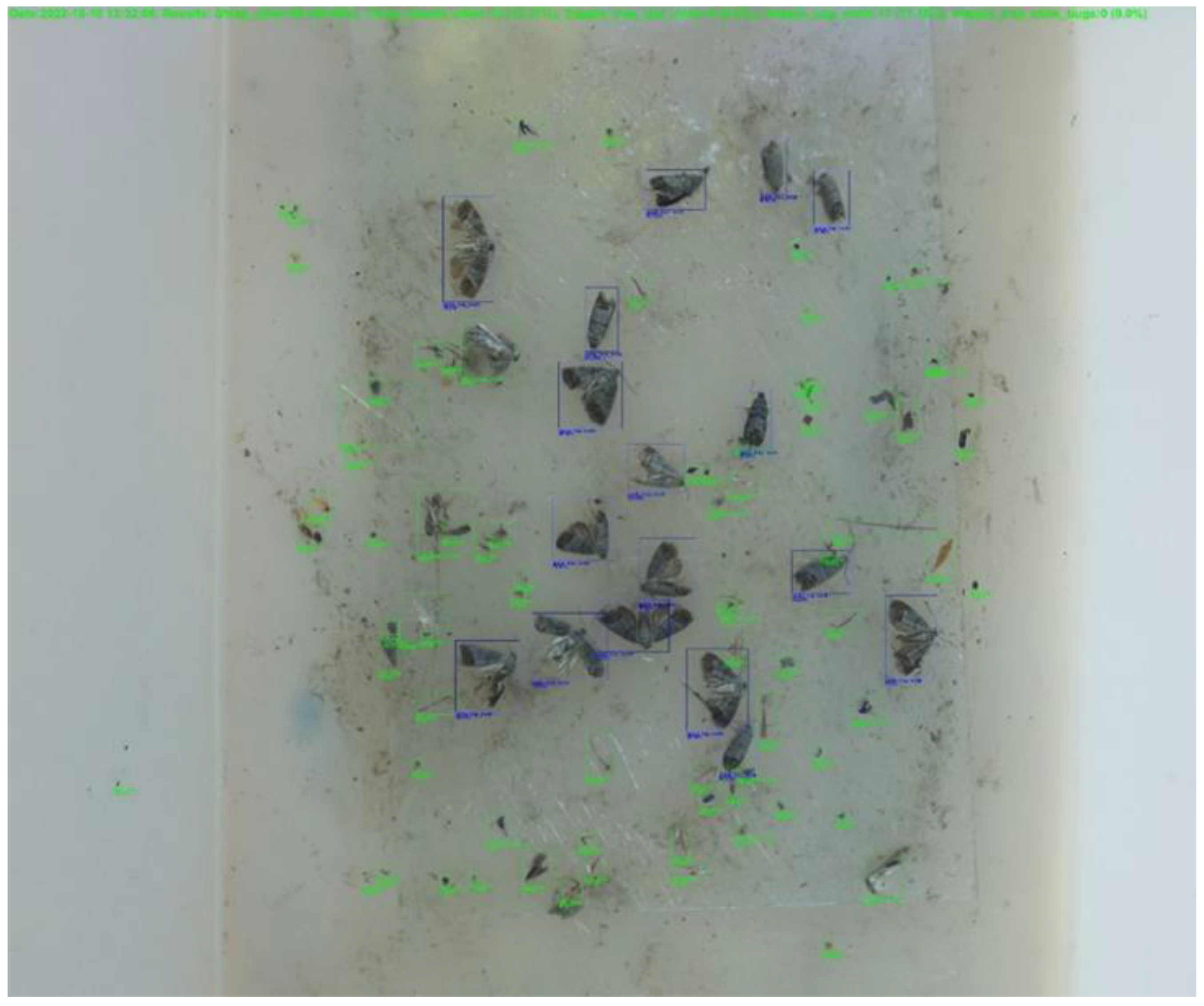

3.2. Smart Trap Operating

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Phases of Creating Analytical Model | Number of Images |
|---|---|
| Training | 139,320 (90%) |
| Validation | 15,480 (10%) |
| Test | 30 (additional new images) |
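
The percentages in the table above can be checked with a few lines of arithmetic. The sketch below only restates the image counts from the table; the function name is hypothetical.

```python
from typing import Tuple


def split_shares(train: int, validation: int) -> Tuple[float, float]:
    """Return the training and validation shares of the combined image pool."""
    total = train + validation
    return train / total, validation / total


# Image counts taken from the table; the 30 test images are additional
# new images and are not part of this pool.
TRAIN_IMAGES = 139_320
VALIDATION_IMAGES = 15_480

train_share, validation_share = split_shares(TRAIN_IMAGES, VALIDATION_IMAGES)
```

The pool therefore holds 154,800 images, and the counts correspond to a 90/10 split.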

| Parameter | Value |
|---|---|
| AP | 0.66 |
| APIoU = 0.50 | 0.93 |
| APIoU = 0.75 | 0.80 |
| APs | 0.42 |
| APm | 0.65 |
| APl | 0.57 |
| ARmax1 | 0.30 |
| ARmax10 | 0.71 |
| ARmax100 | 0.75 |
| ARs | 0.59 |
| ARm | 0.74 |
| ARl | 0.63 |
| AP_MOTH | 0.79 |
| AP_INSECT | 0.62 |
| AP_OTHER | 0.55 |

| Class | MOTH | INSECT | OTHER |
|---|---|---|---|
| (n) truth | 189 | 120 | 983 |
| (n) classified | 180 | 199 | 993 |
| Accuracy | 99.3% | 99.46% | 99.07% |
| Precision | 1.0 | 0.97 | 0.99 |
| Recall | 0.95 | 0.97 | 1.0 |
| F1 Score | 0.98 | 0.97 | 0.99 |
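
The MOTH column of the table above can be reproduced from its counts under two assumptions that the table does not state explicitly: that the reported precision of 1.0 means every one of the 180 objects classified as MOTH is a true MOTH, and that the total number of objects is the sum of the "(n) truth" row. The variable names are illustrative.

```python
# Counts from the table.
moth_truth = 189        # objects that really are MOTH
moth_classified = 180   # objects the model labelled MOTH
total_objects = 189 + 120 + 983  # assumed total: sum of the "(n) truth" row

# Assumption: precision 1.0 means no false positives for MOTH.
tp = moth_classified
fp = 0
fn = moth_truth - tp                # moths the model missed
tn = total_objects - tp - fp - fn   # everything else, correctly not MOTH

moth_precision = tp / (tp + fp)
moth_recall = tp / (tp + fn)
moth_f1 = 2 * moth_precision * moth_recall / (moth_precision + moth_recall)
moth_accuracy = (tp + tn) / total_objects

print(f"recall={moth_recall:.2f} f1={moth_f1:.2f} accuracy={moth_accuracy:.1%}")
```

Under these assumptions the nine missed moths account for the whole gap between the truth and classified counts, and the rounded results agree with the reported recall, F1 score, and accuracy for MOTH.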

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Čirjak, D.; Aleksi, I.; Lemic, D.; Pajač Živković, I. EfficientDet-4 Deep Neural Network-Based Remote Monitoring of Codling Moth Population for Early Damage Detection in Apple Orchard. Agriculture 2023, 13, 961. https://doi.org/10.3390/agriculture13050961
