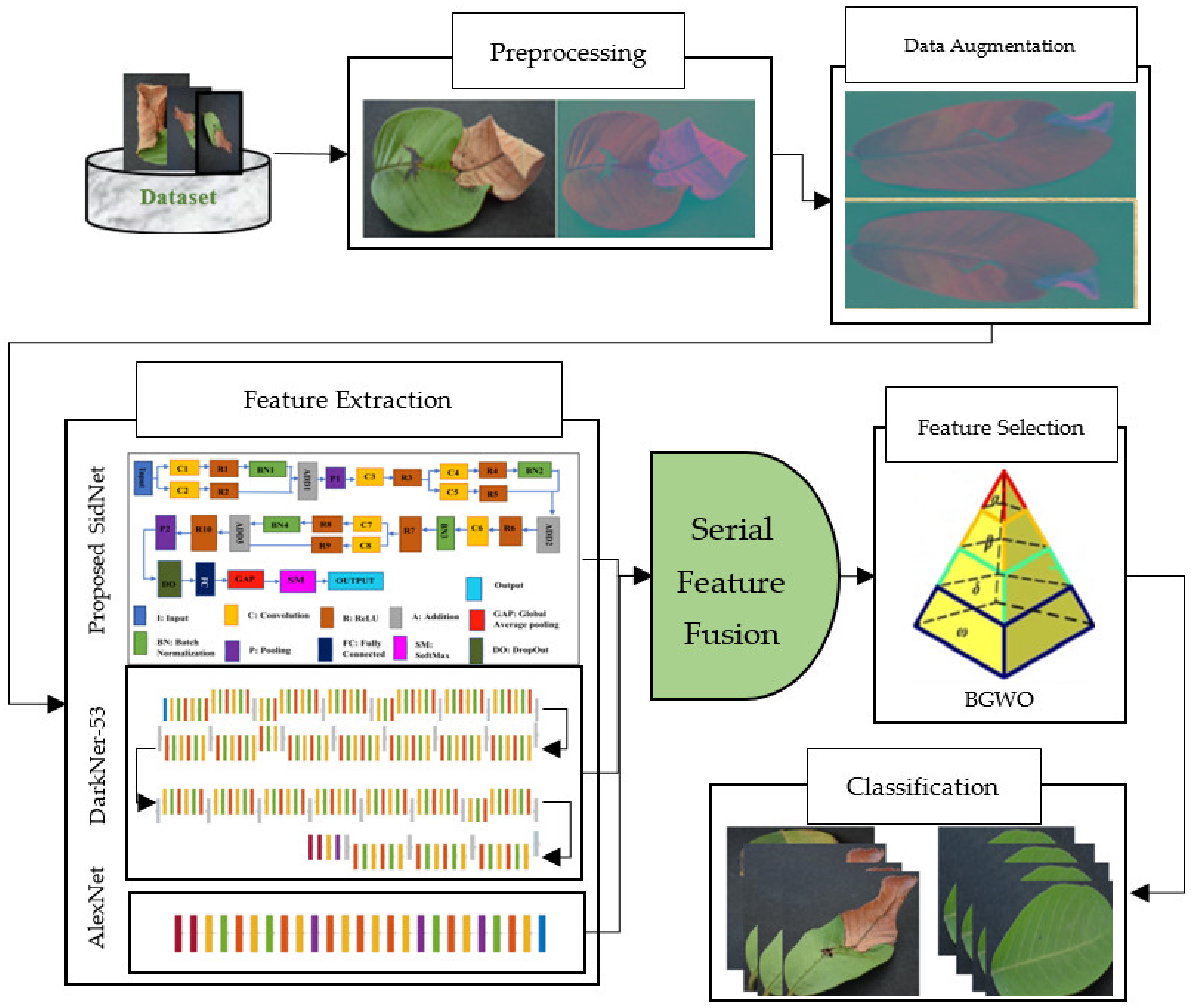

A Hybrid Framework for Detection and Analysis of Leaf Blight Using Guava Leaves Imaging

Abstract

1. Introduction

- A new deep CNN, named SidNet, is presented. It consists of 33 layers and 35 connections and is pretrained on a plant imaging dataset. Features are extracted from the proposed SidNet, DarkNet-53, and AlexNet and are then combined by serial fusion. These deep features are learned automatically from the images rather than hand-crafted, which makes them better able to cope with variations in contrast, shape, texture, and illumination.

- The features are ranked with an entropy-based algorithm, and Binary Grey Wolf Optimization is then used to refine the selection. The selected features form a single feature vector, which is classified with SVM and KNN classifiers.

- Data augmentation is performed because the selected dataset is small: the images are flipped horizontally and vertically to enlarge it.
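The contributions above name Binary Grey Wolf Optimization (BGWO) for feature selection without spelling out its update rules. The following is a minimal sketch of one common BGWO variant, assuming continuous wolf positions clipped to [0, 1], the standard alpha/beta/delta leader update, and an S-shaped sigmoid transfer function that turns each position into a 0/1 feature mask. The names `binary_gwo` and `sigmoid_transfer`, the population size, the iteration count, and the toy fitness are illustrative assumptions, not the authors' settings. In a real feature-selection run the fitness would usually combine a classifier's validation error with a penalty on the number of selected features.

```python
import math
import random


def sigmoid_transfer(x):
    # S-shaped transfer function: maps a continuous position to a bit probability.
    return 1.0 / (1.0 + math.exp(-10.0 * (x - 0.5)))


def binary_gwo(fitness, n_features, n_wolves=8, n_iter=30, seed=0):
    """Minimise fitness(mask) over 0/1 feature masks with a binary grey wolf optimizer."""
    rng = random.Random(seed)

    def binarise(position):
        # Each bit is switched on with the probability given by the transfer function.
        return [1 if rng.random() < sigmoid_transfer(v) else 0 for v in position]

    leaders = []  # (score, position, mask) of the alpha, beta and delta wolves found so far

    def update_leaders(score, position, mask):
        leaders.append((score, position[:], mask[:]))
        leaders.sort(key=lambda item: item[0])
        del leaders[3:]

    positions = [[rng.random() for _ in range(n_features)] for _ in range(n_wolves)]
    for p in positions:
        m = binarise(p)
        update_leaders(fitness(m), p, m)

    for t in range(n_iter):
        a = 2.0 - 2.0 * t / n_iter  # control parameter decreases linearly from 2 towards 0
        for i, p in enumerate(positions):
            new = []
            for d in range(n_features):
                total = 0.0
                for _, lead, _ in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * lead[d] - p[d])
                    total += lead[d] - A * D
                # Average the leader-guided moves and keep the position inside [0, 1].
                new.append(min(1.0, max(0.0, total / len(leaders))))
            positions[i] = new
            m = binarise(new)
            update_leaders(fitness(m), new, m)

    best_score, _, best_mask = leaders[0]
    return best_mask, best_score


if __name__ == "__main__":
    # Hypothetical toy fitness: Hamming distance to a known "useful" subset of 8 features.
    target = [1, 0, 1, 0, 0, 1, 0, 0]

    def toy_fitness(mask):
        return sum(bit != want for bit, want in zip(mask, target))

    print(binary_gwo(toy_fitness, n_features=8))
```

Because the three leaders are kept as the best solutions seen so far, the returned mask is never worse than anything in the initial population; clipping positions to [0, 1] also keeps the sigmoid input in a safe range.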

2. Related Work

3. Materials and Methods

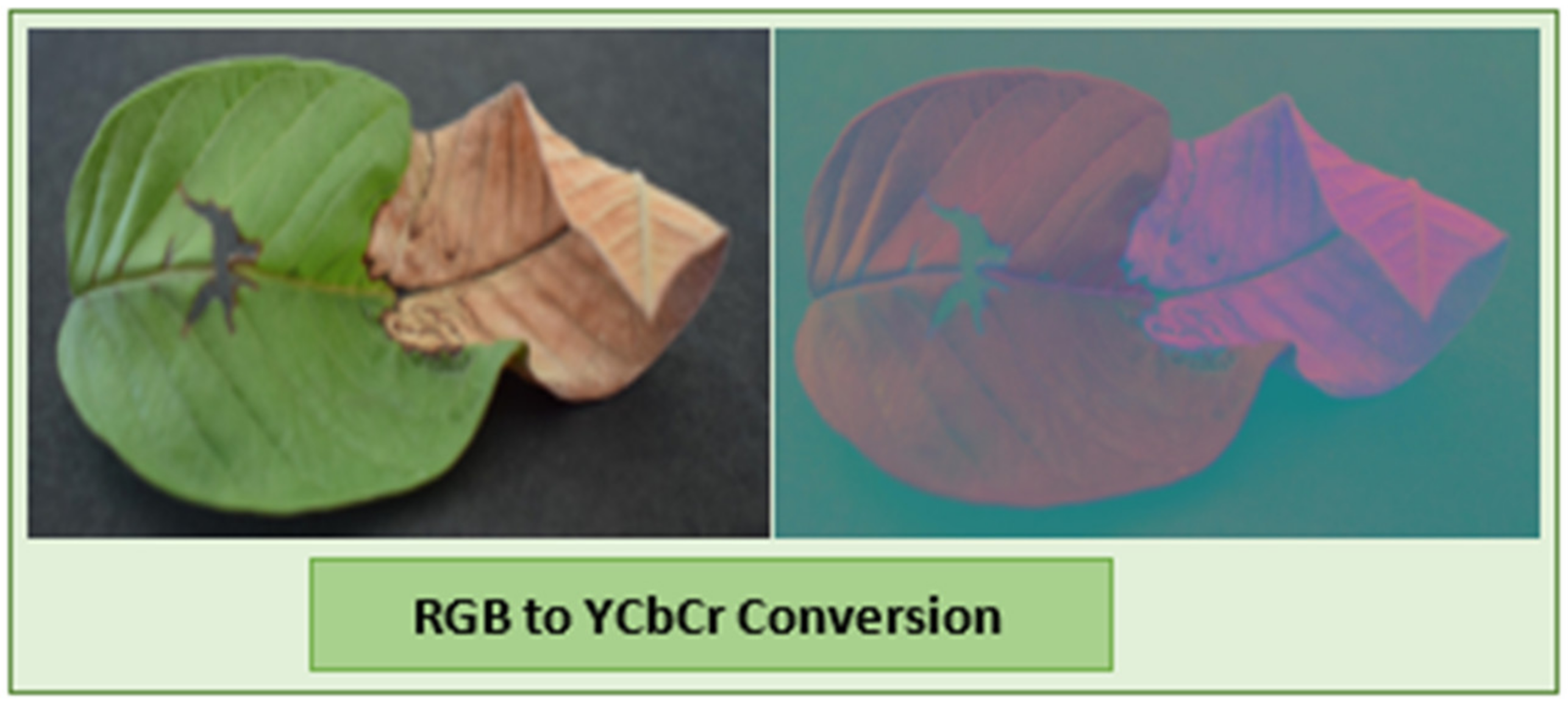

3.1. Image Preprocessing

3.2. Data Augmentation

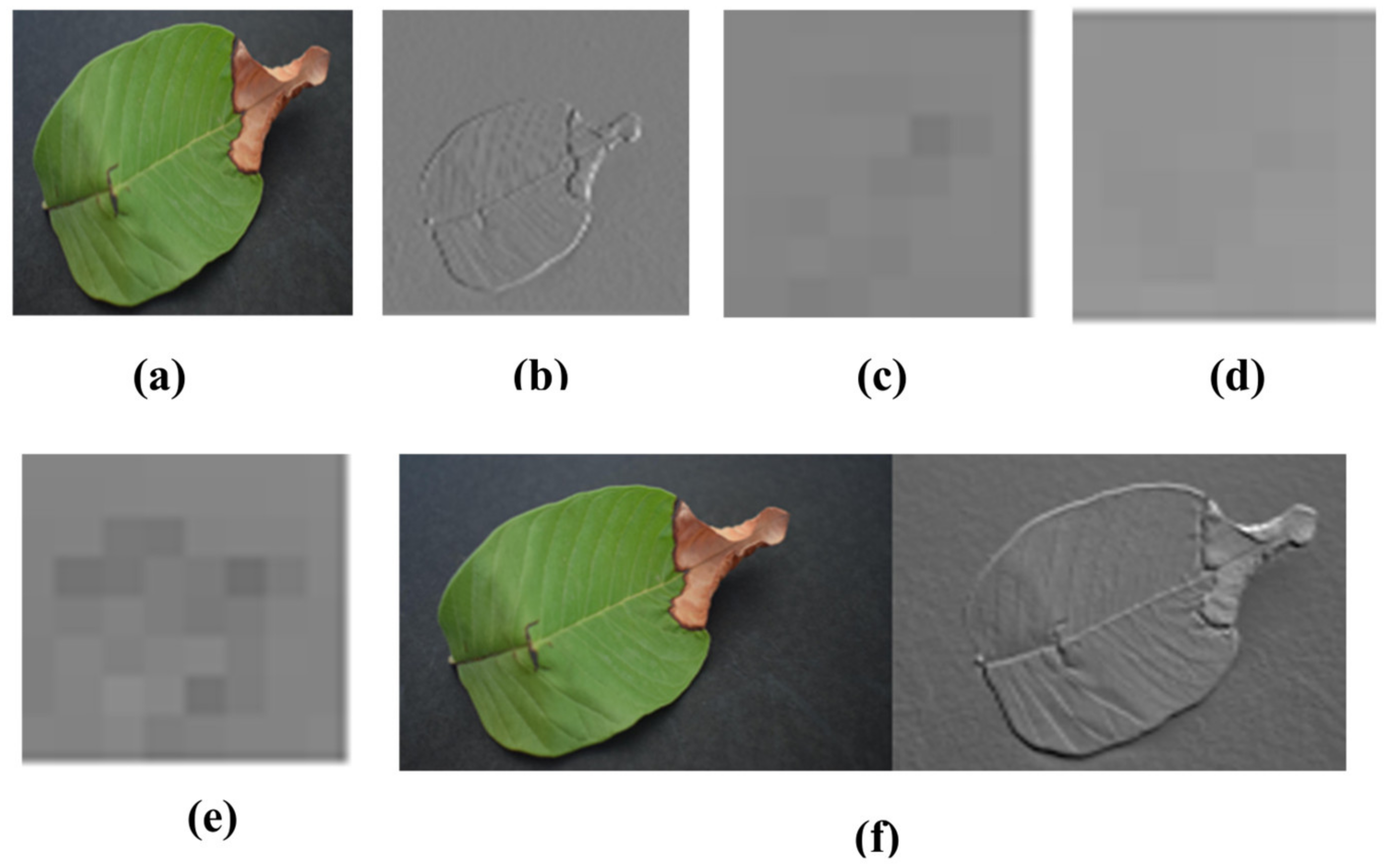

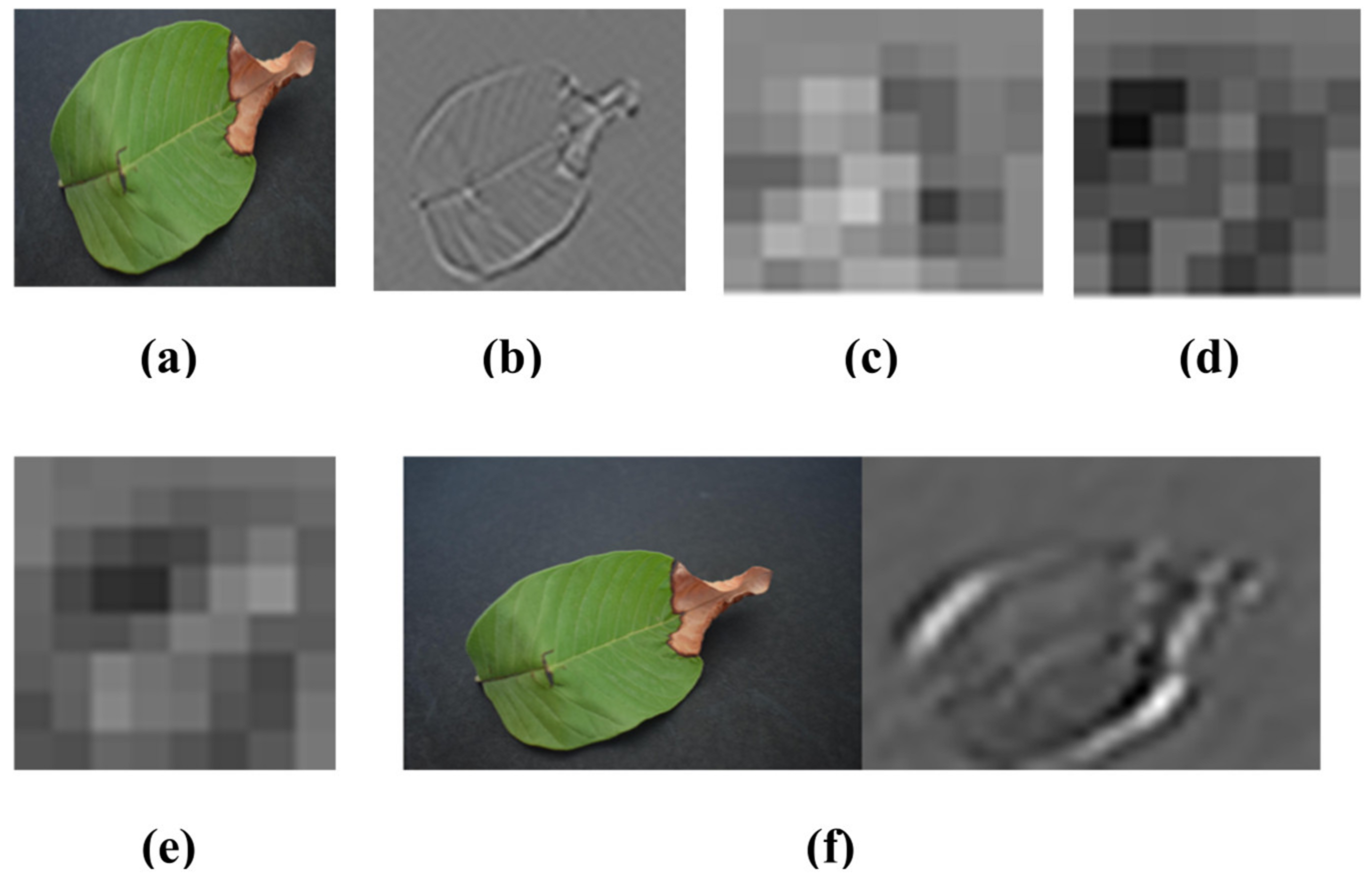
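The flip-based augmentation described in the contributions can be sketched as follows. This assumes each original image is kept and one horizontally and one vertically flipped copy is added, so the set triples in size; the source does not say whether a combined (horizontal plus vertical) flip is also used. Images are represented here as lists of pixel rows so the sketch needs only the standard library; with NumPy arrays, `np.fliplr` and `np.flipud` do the same job. The function names are hypothetical.

```python
def flip_horizontal(image):
    """Mirror an image left-right (image = list of pixel rows)."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror an image top-bottom by reversing the row order."""
    return [list(row) for row in image[::-1]]


def augment_with_flips(images, labels):
    """Return each original image plus its horizontal and vertical flips, labels repeated."""
    out_images, out_labels = [], []
    for image, label in zip(images, labels):
        for variant in (image, flip_horizontal(image), flip_vertical(image)):
            out_images.append(variant)
            out_labels.append(label)
    return out_images, out_labels
```

Flips preserve the leaf's texture and lesion appearance, so the class label can be copied unchanged. Pixels may be scalars or per-channel tuples, since only their positions are rearranged.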

3.3. Feature Extraction

3.4. Proposed SidNet CNN

3.5. DarkNet-53

3.6. AlexNet

3.7. Feature Selection
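The entropy-based ranking named in the contributions is not specified further in this text. One plausible reading, sketched below purely as an assumption, scores each feature column by the Shannon entropy of an equal-width histogram of its values and keeps the k highest-scoring columns. A ranking of this kind would yield reduced feature sets like the 200-, 500-, 750- and 1000-feature variants used in the experiments. The bin count, the highest-first order, and the function names are illustrative choices.

```python
import math
from collections import Counter


def shannon_entropy(values, n_bins=10):
    """Shannon entropy (bits) of one feature after equal-width binning."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant feature carries no information
    width = (hi - lo) / n_bins
    # Map each value to a bin index; the maximum value is placed in the last bin.
    counts = Counter(min(int((v - lo) / width), n_bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def rank_by_entropy(matrix, k):
    """Indices of the k columns of `matrix` (rows = samples) with the highest entropy."""
    n_cols = len(matrix[0])
    scores = [shannon_entropy([row[j] for row in matrix]) for j in range(n_cols)]
    order = sorted(range(n_cols), key=lambda j: scores[j], reverse=True)
    return order[:k]


def select_columns(matrix, cols):
    """Keep only the chosen columns, in the given order."""
    return [[row[j] for j in cols] for row in matrix]
```

Ranking is cheap (one pass per column), which is why it is often used as a filter before a costlier wrapper search such as a metaheuristic optimizer.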

3.8. Feature Fusion
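Serial fusion, as named in the contributions, places the feature vectors from the different networks end to end for each image. A minimal sketch, assuming each extractor's output is a list of per-image vectors in the same image order; `serial_fusion` is a hypothetical helper, and with NumPy arrays `np.concatenate(..., axis=1)` gives the same result.

```python
from itertools import chain


def serial_fusion(*feature_sets):
    """Serially fuse feature sets by concatenating each sample's vectors end to end.

    Each argument is a list of per-sample feature vectors (rows = samples) from one
    extractor; all arguments must list the same samples in the same order.
    """
    if not feature_sets:
        raise ValueError("at least one feature set is required")
    n_samples = len(feature_sets[0])
    if any(len(fs) != n_samples for fs in feature_sets):
        raise ValueError("all feature sets must contain the same number of samples")
    # The fused dimensionality is the sum of the individual dimensionalities.
    return [list(chain.from_iterable(fs[i] for fs in feature_sets)) for i in range(n_samples)]
```

For example, `serial_fusion(sidnet_feats, darknet_feats, alexnet_feats)` (hypothetical variable names) would produce one long vector per leaf image. Because dimensions add up, serial fusion is usually followed by feature selection to keep the classifier tractable.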

3.9. Classification
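The classifier names in the result tables (Fine, Medium, Coarse, Cosine, Cubic and Weighted KNN; Linear, Quadratic, Cubic and Gaussian SVMs) resemble the presets of MATLAB's Classification Learner, but the exact settings are not given here. As an illustration only, the sketch below implements a distance-weighted KNN, assuming k = 10 neighbours and squared-inverse-distance weights. The function name and defaults are hypothetical.

```python
import math
from collections import defaultdict


def weighted_knn_predict(train_X, train_y, x, k=10):
    """Classify x by squared-inverse-distance voting among its k nearest training samples."""
    # Sort training samples by Euclidean distance to the query and keep the k closest.
    neighbours = sorted(
        ((math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )[:k]
    votes = defaultdict(float)
    for d, label in neighbours:
        if d == 0.0:
            return label  # the query coincides with a training sample
        votes[label] += 1.0 / d ** 2  # closer neighbours get much larger votes
    return max(votes, key=votes.get)
```

Weighting by 1/d² lets a few very close neighbours outvote a larger number of distant ones, which is why weighted KNN is less sensitive to the choice of k than plain majority voting.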

4. Results and Discussion

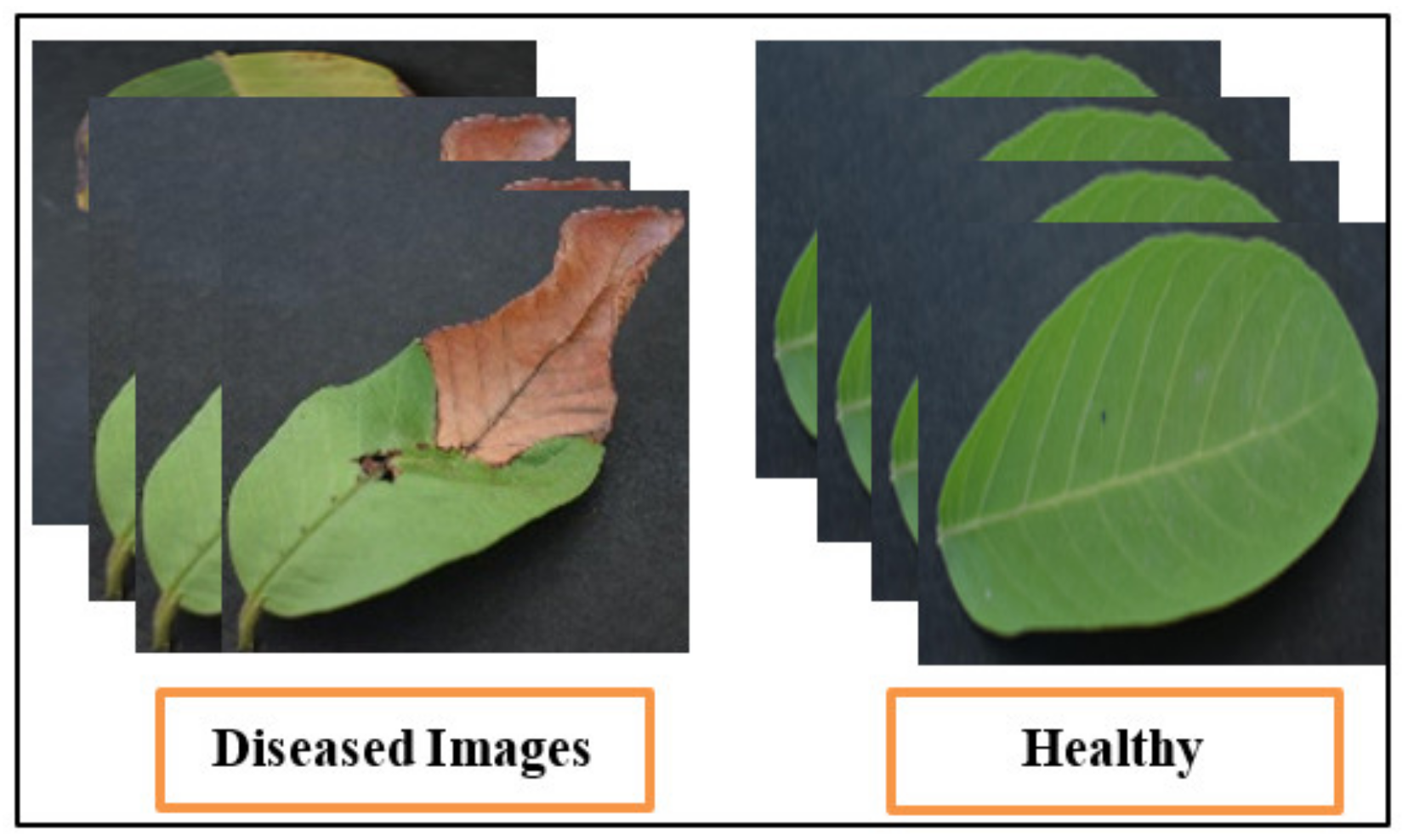

4.1. Dataset

4.2. Performance Evaluation Methods

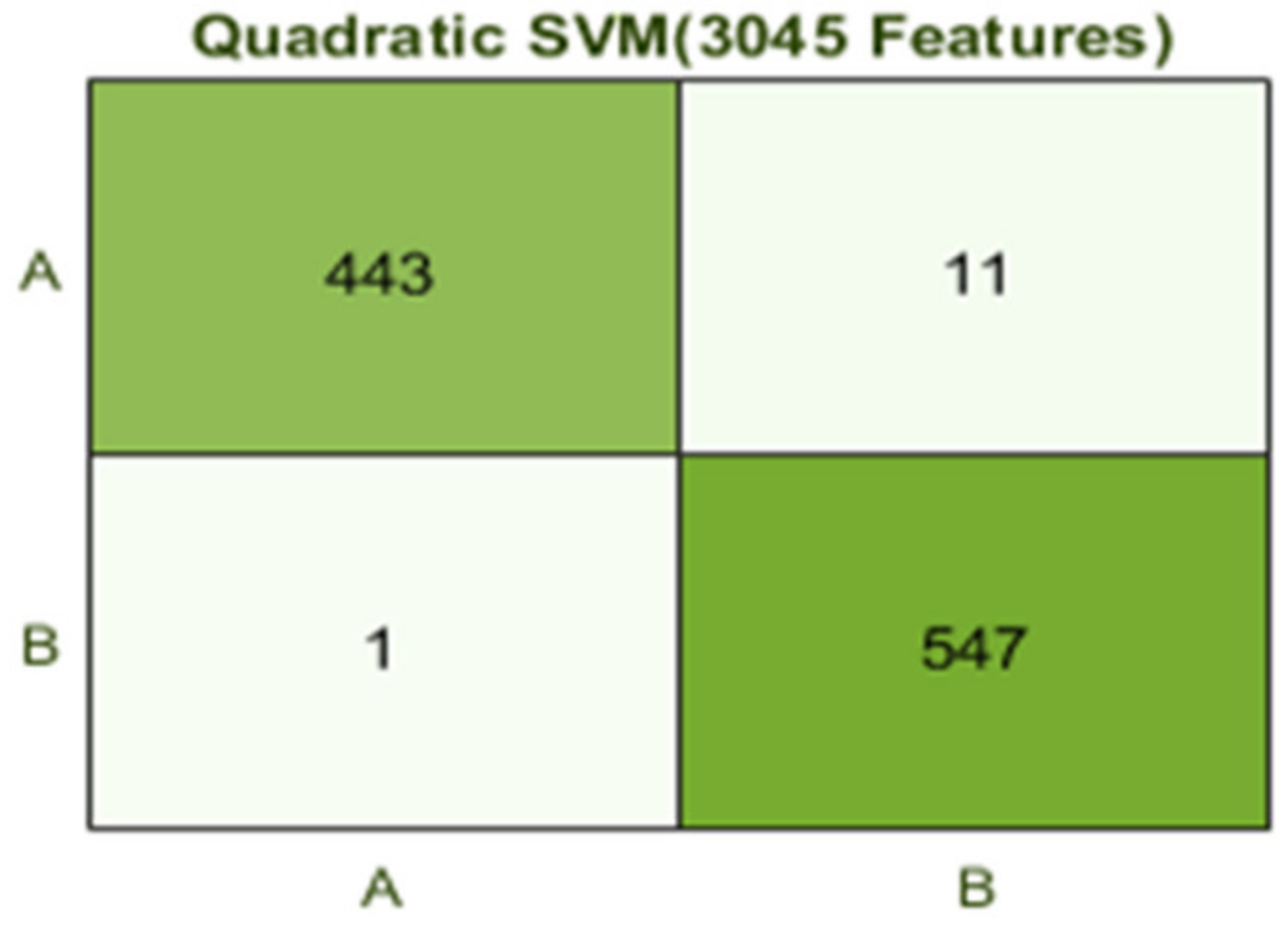

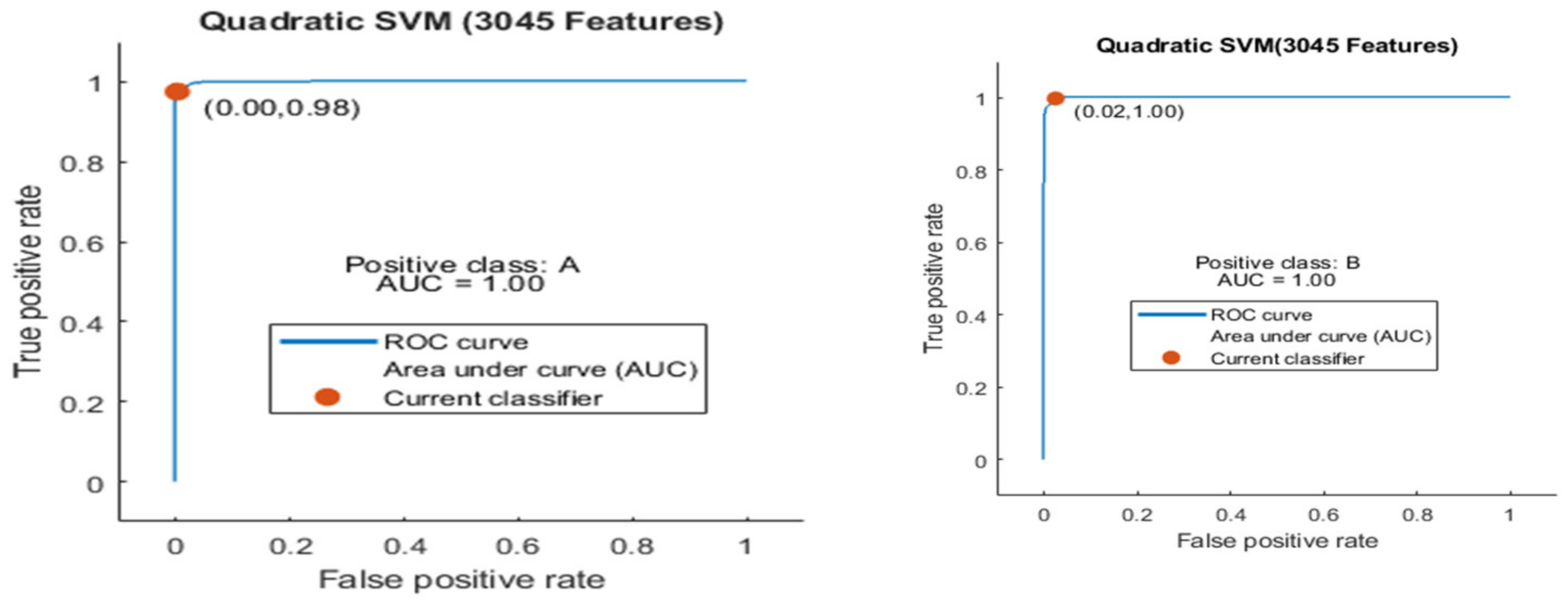

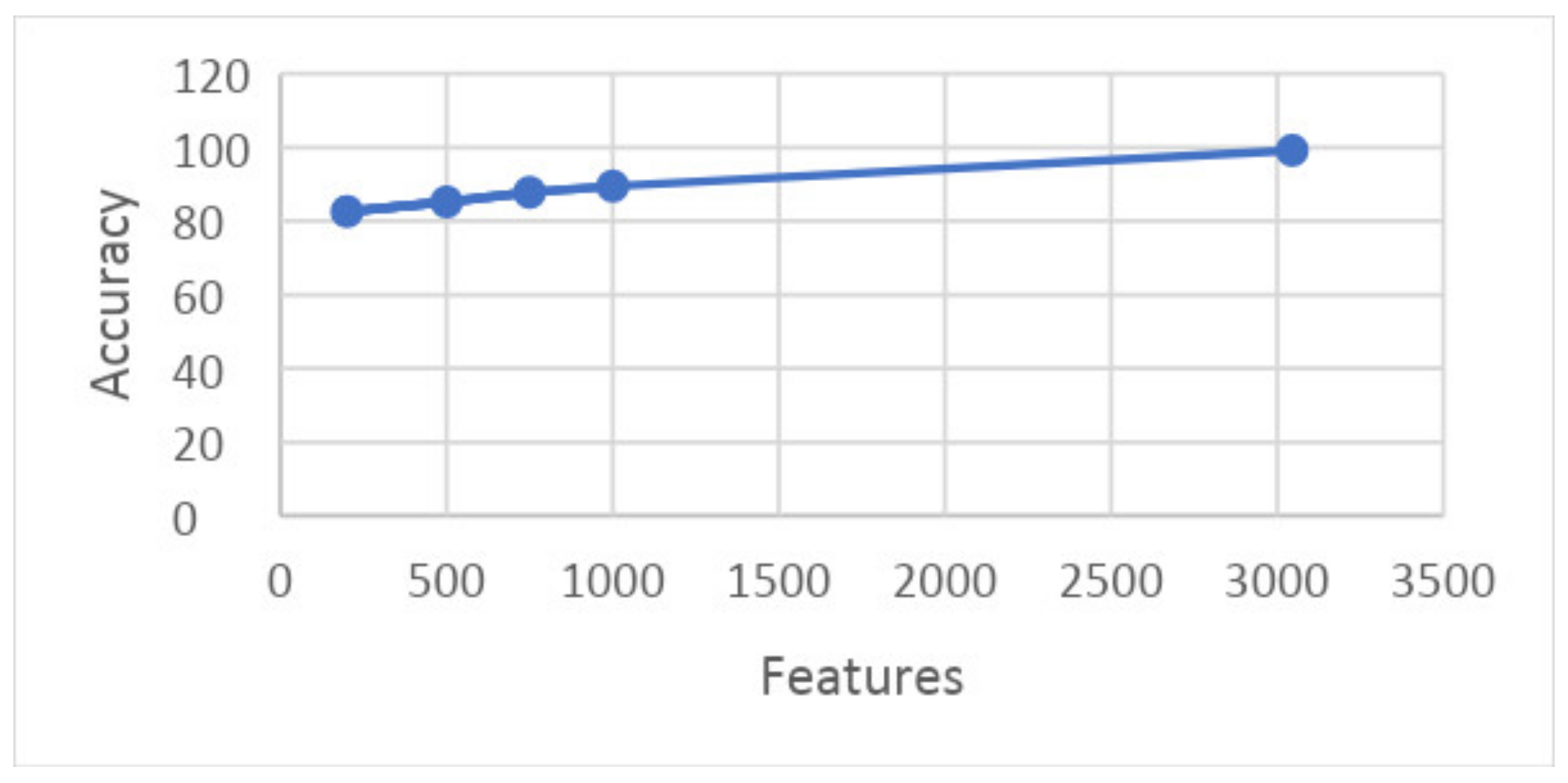

- Experiment 1: 5-fold and 10-fold cross-validation on 3045 features

  - Experiment 1(a): 5 folds, 3045 features (1002 observations × 3045 features)

  - Experiment 1(b): 10 folds, 3045 features (1002 observations × 3045 features)

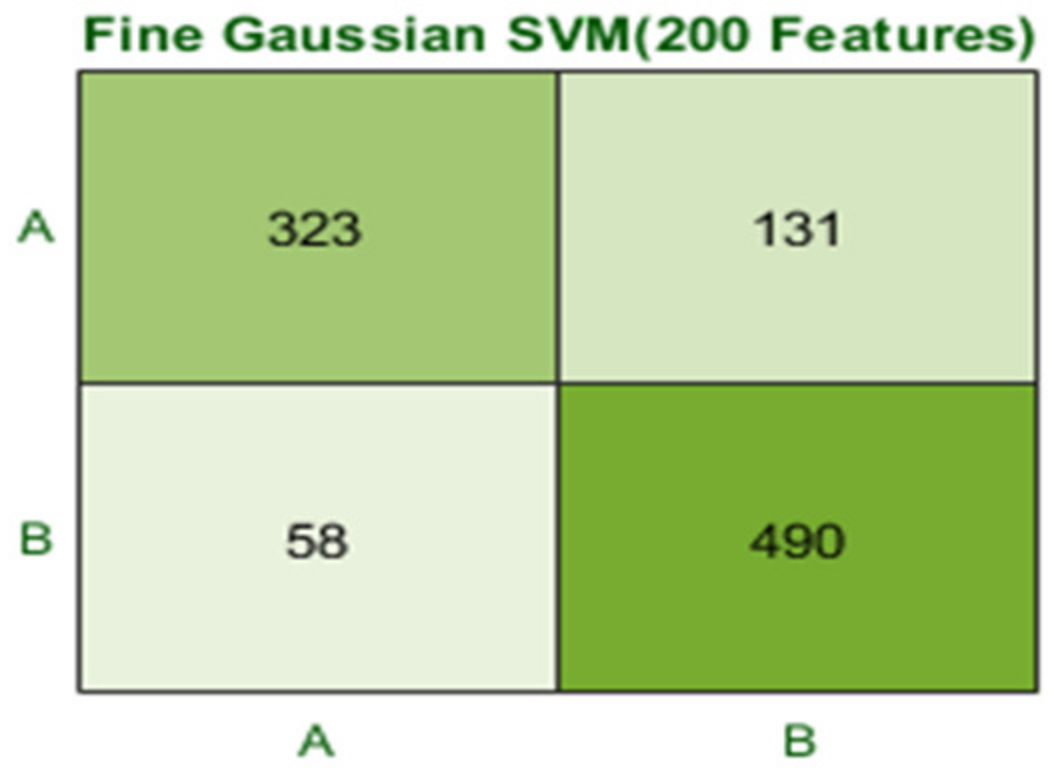

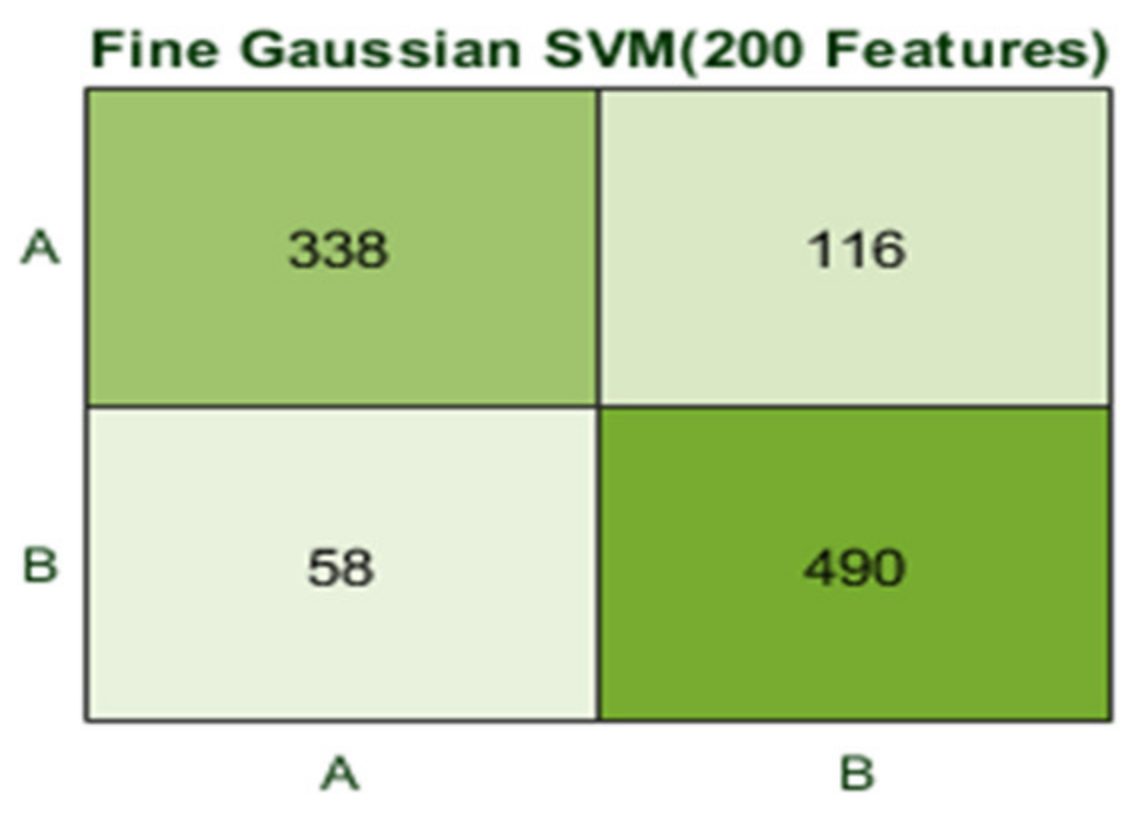

- Experiment 2: 5-fold and 10-fold cross-validation on 200 features

  - Experiment 2(a): 5 folds, 200 features (1002 observations × 200 features)

  - Experiment 2(b): 10 folds, 200 features (1002 observations × 200 features)

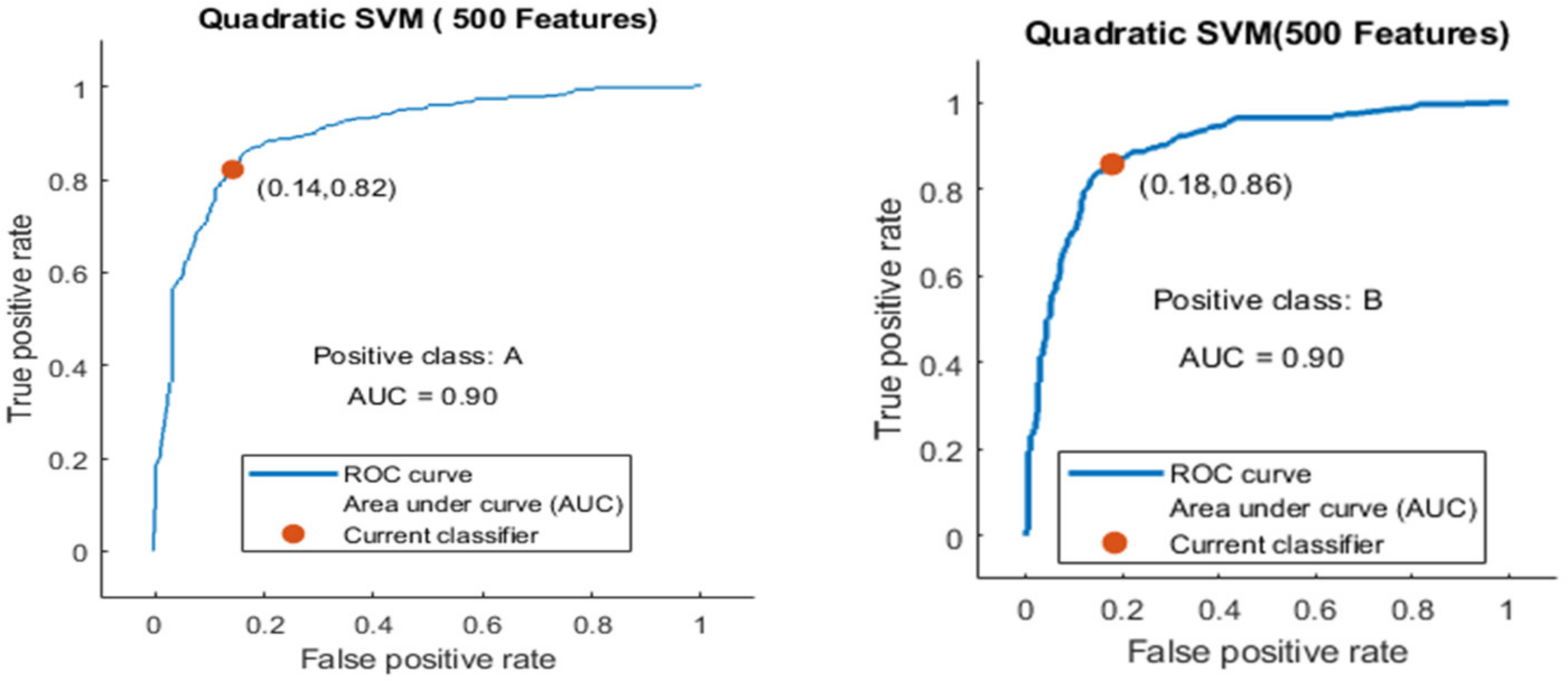

- Experiment 3: 5-fold and 10-fold cross-validation on 500 features

  - Experiment 3(a): 5 folds, 500 features (1002 observations × 500 features)

  - Experiment 3(b): 10 folds, 500 features (1002 observations × 500 features)
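Each experiment above evaluates the same 1002 observations with 5-fold and 10-fold cross-validation. The sketch below shows one way to build such folds, assuming stratified splitting, where each class is shuffled and dealt round-robin across the folds so every fold keeps roughly the overall class ratio. The function name, the seed, and the use of stratification are assumptions.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k, seed=0):
    """Yield (train_indices, test_indices) for k folds that preserve class proportions."""
    if k < 2:
        raise ValueError("k must be at least 2")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    offset = 0
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets a near-equal share of it.
        for pos, i in enumerate(indices):
            folds[(offset + pos) % k].append(i)
        offset += len(indices)
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test
```

With 1002 observations, this gives test folds of 200 or 201 samples for k = 5 and 100 or 101 samples for k = 10. Every observation is tested exactly once. If flipped copies of the same leaf are among the observations, keeping an original and its flips in the same fold avoids optimistic estimates.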

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

References

1. Rehman, A.; Jingdong, L.; Shahzad, B.; Chandio, A.A.; Hussain, I.; Nabi, G.; Iqbal, M.S. Economic perspectives of major field crops of Pakistan: An empirical study. Pac. Sci. Rev. B Humanit. Soc. Sci. 2015, 1, 145–158.

2. Rai, M.K.; Asthana, P.; Jaiswal, V.; Jaiswal, U. Biotechnological advances in guava (Psidium guajava L.): Recent developments and prospects for further research. Trees 2010, 24, 1–12.

3. Mitra, S.; Thingreingam Irenaeus, K. Guava cultivars of the world. In International Symposia on Tropical and Temperate Horticulture—ISTTH2016; CIRAD Publications: Cairns, Queensland, Australia, 2016; pp. 905–910.

4. Almadhor, A.; Rauf, H.T.; Lali, M.I.U.; Damaševičius, R.; Alouffi, B.; Alharbi, A. AI-Driven Framework for Recognition of Guava Plant Diseases through Machine Learning from DSLR Camera Sensor Based High Resolution Imagery. Sensors 2021, 21, 3830.

5. Misra, A. Guava diseases—Their symptoms, causes and management. In Diseases of Fruits and Vegetables; Springer: Berlin/Heidelberg, Germany, 2004; Volume II, pp. 81–119.

6. Dhiman, B.; Kumar, Y.; Hu, Y.-C. A general purpose multi-fruit system for assessing the quality of fruits with the application of recurrent neural network. Soft Comput. 2021, 25, 9255–9272.

7. Ashraf, R.; Habib, M.A.; Akram, M.; Latif, M.A.; Malik, M.S.A.; Awais, M.; Dar, S.H.; Mahmood, T.; Yasir, M.; Abbas, Z. Deep convolution neural network for big data medical image classification. IEEE Access 2020, 8, 105659–105670.

8. Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R.J.; Fredes, C.; Valenzuela, A. A review of convolutional neural network applied to fruit image processing. Appl. Sci. 2020, 10, 3443.

9. Lloret, E.; Plaza, L.; Aker, A. The challenging task of summary evaluation: An overview. Lang. Resour. Eval. 2018, 52, 101–148.

10. Bojer, C.S.; Meldgaard, J.P. Kaggle forecasting competitions: An overlooked learning opportunity. Int. J. Forecast. 2021, 37, 587–603.

11. Fu, X.; Cao, X. Underwater image enhancement with global–local networks and compressed-histogram equalization. Signal Process. Image Commun. 2020, 86, 115892.

12. Tang, Z.; Gao, Y.; Karlinsky, L.; Sattigeri, P.; Feris, R.; Metaxas, D. OnlineAugment: Online Data Augmentation with Less Domain Knowledge; Springer: Berlin/Heidelberg, Germany, 2020; pp. 313–329.

13. Liu, Z.; Lai, Z.; Ou, W.; Zhang, K.; Zheng, R. Structured optimal graph based sparse feature extraction for semi-supervised learning. Signal Process. 2020, 170, 107456.

14. Ghojogh, B.; Samad, M.N.; Mashhadi, S.A.; Kapoor, T.; Ali, W.; Karray, F.; Crowley, M. Feature selection and feature extraction in pattern analysis: A literature review. arXiv 2019, arXiv:1905.02845.

15. Zhang, J.; Liu, B. A review on the recent developments of sequence-based protein feature extraction methods. Curr. Bioinform. 2019, 14, 190–199.

16. Latif, A.; Rasheed, A.; Sajid, U.; Ahmed, J.; Ali, N.; Ratyal, N.I.; Zafar, B.; Dar, S.H.; Sajid, M.; Khalil, T. Content-based image retrieval and feature extraction: A comprehensive review. Math. Probl. Eng. 2019, 2019, 9658350.

17. Raj, R.J.S.; Shobana, S.J.; Pustokhina, I.V.; Pustokhin, D.A.; Gupta, D.; Shankar, K. Optimal feature selection-based medical image classification using deep learning model in internet of medical things. IEEE Access 2020, 8, 58006–58017.

18. Ali, L.; Wajahat, I.; Golilarz, N.A.; Keshtkar, F.; Bukhari, S.A.C. LDA–GA–SVM: Improved hepatocellular carcinoma prediction through dimensionality reduction and genetically optimized support vector machine. Neural Comput. Appl. 2021, 33, 2783–2792.

19. Ozyurt, B.; Akcayol, M.A. A new topic modeling based approach for aspect extraction in aspect based sentiment analysis: SS-LDA. Expert Syst. Appl. 2021, 168, 114231.

20. Zhao, J.; Liang, J.-M.; Dong, Z.-N.; Tang, D.-Y.; Liu, Z. Accelerating information entropy-based feature selection using rough set theory with classified nested equivalence classes. Pattern Recognit. 2020, 107, 107517.

21. Maleki, N.; Zeinali, Y.; Niaki, S.T.A. A k-NN method for lung cancer prognosis with the use of a genetic algorithm for feature selection. Expert Syst. Appl. 2021, 164, 113981.

22. Hu, P.; Pan, J.-S.; Chu, S.-C. Improved binary grey wolf optimizer and its application for feature selection. Knowl. Based Syst. 2020, 195, 105746.

23. Tubishat, M.; Idris, N.; Shuib, L.; Abushariah, M.A.; Mirjalili, S. Improved Salp Swarm Algorithm based on opposition based learning and novel local search algorithm for feature selection. Expert Syst. Appl. 2020, 145, 113122.

24. Too, J.; Rahim Abdullah, A. Binary atom search optimisation approaches for feature selection. Connect. Sci. 2020, 32, 406–430.

25. Özyurt, F. A fused CNN model for WBC detection with MRMR feature selection and extreme learning machine. Soft Comput. 2020, 24, 8163–8172.

26. De Silva, K.; Jönsson, D.; Demmer, R.T. A combined strategy of feature selection and machine learning to identify predictors of prediabetes. J. Am. Med. Inform. Assoc. 2020, 27, 396–406.

27. Germain, P.-L.; Sonrel, A.; Robinson, M.D. pipeComp, a general framework for the evaluation of computational pipelines, reveals performant single cell RNA-seq preprocessing tools. Genome Biol. 2020, 21, 1–28.

28. Kaur, P.; Singh, A.; Chana, I. Computational techniques and tools for omics data analysis: State-of-the-art, challenges, and future directions. Arch. Comput. Methods Eng. 2021, 28, 4595–4631.

29. Ghosh, P.; Azam, S.; Jonkman, M.; Karim, A.; Shamrat, F.J.M.; Ignatious, E.; Shultana, S.; Beeravolu, A.R.; De Boer, F. Efficient Prediction of Cardiovascular Disease Using Machine Learning Algorithms With Relief and LASSO Feature Selection Techniques. IEEE Access 2021, 9, 19304–19326.

30. Deepa, N.; Prabadevi, B.; Maddikunta, P.K.; Gadekallu, T.R.; Baker, T.; Khan, M.A.; Tariq, U. An AI-based intelligent system for healthcare analysis using Ridge-Adaline Stochastic Gradient Descent Classifier. J. Supercomput. 2021, 77, 1998–2017.

31. Amini, F.; Hu, G. A two-layer feature selection method using genetic algorithm and elastic net. Expert Syst. Appl. 2021, 166, 114072.

32. Zhang, H.; Xu, H.; Xiao, Y.; Guo, X.; Ma, J. Rethinking the Image Fusion: A Fast Unified Image Fusion Network Based on Proportional Maintenance of Gradient and Intensity. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12797–12804.

33. Jung, H.; Kim, Y.; Jang, H.; Ha, N.; Sohn, K. Unsupervised deep image fusion with structure tensor representations. IEEE Trans. Image Process. 2020, 29, 3845–3858.

34. Shakya, S. Analysis of artificial intelligence based image classification techniques. J. Innov. Image Process. (JIIP) 2020, 2, 44–54.

35. Hong, D.; Wu, X.; Ghamisi, P.; Chanussot, J.; Yokoya, N.; Zhu, X.X. Invariant attribute profiles: A spatial-frequency joint feature extractor for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3791–3808.

36. Ke, Z.; Qiu, D.; Li, K.; Yan, Q.; Lau, R.W. Guided Collaborative Training for Pixel-Wise Semi-Supervised Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 429–445.

37. Wang, F.; Liu, H.; Guo, D.; Sun, F. Unsupervised Representation Learning by Invariance Propagation. arXiv 2020, arXiv:2010.11694.

38. Karantanellis, E.; Marinos, V.; Vassilakis, E.; Christaras, B. Object-based analysis using unmanned aerial vehicles (UAVs) for site-specific landslide assessment. Remote Sens. 2020, 12, 1711.

39. Durmuş, H.; Güneş, E.O.; Kırcı, M. Disease Detection on the Leaves of the Tomato Plants by Using Deep Learning; IEEE: Piscataway, NJ, USA, 2017; pp. 1–5.

40. Atila, Ü.; Uçar, M.; Akyol, K.; Uçar, E. Plant leaf disease classification using EfficientNet deep learning model. Ecol. Inform. 2021, 61, 101182.

41. Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318.

42. Azarmdel, H.; Jahanbakhshi, A.; Mohtasebi, S.S.; Muñoz, A.R. Evaluation of image processing technique as an expert system in mulberry fruit grading based on ripeness level using artificial neural networks (ANNs) and support vector machine (SVM). Postharvest Biol. Technol. 2020, 166, 111201.

43. Kasinathan, T.; Singaraju, D.; Uyyala, S.R. Insect classification and detection in field crops using modern machine learning techniques. Inf. Process. Agric. 2021, 8, 446–457.

44. Yao, J.; Ye, Y. The effect of image recognition traffic prediction method under deep learning and naive Bayes algorithm on freeway traffic safety. Image Vis. Comput. 2020, 103, 103971.

45. Atouf, I.; Al Okaishi, W.Y.; Zaaran, A.; Slimani, I.; Benrabh, M. A real-time system for vehicle detection with shadow removal and vehicle classification based on vehicle features at urban roads. Int. J. Power Electron. Drive Syst. 2020, 11, 2091.

46. Khan, M.A.; Khan, M.A.; Ahmed, F.; Mittal, M.; Goyal, L.M.; Hemanth, D.J.; Satapathy, S.C. Gastrointestinal diseases segmentation and classification based on duo-deep architectures. Pattern Recognit. Lett. 2020, 131, 193–204.

47. Chen, J.; Lian, Y.; Li, Y. Real-time grain impurity sensing for rice combine harvesters using image processing and decision-tree algorithm. Comput. Electron. Agric. 2020, 175, 105591.

48. Moubayed, A.; Injadat, M.; Shami, A.; Lutfiyya, H. Student engagement level in an e-learning environment: Clustering using k-means. Am. J. Distance Educ. 2020, 34, 137–156.

49. Nanglia, P.; Kumar, S.; Mahajan, A.N.; Singh, P.; Rathee, D. A hybrid algorithm for lung cancer classification using SVM and Neural Networks. ICT Express 2021, 7, 335–341.

50. Wu, W.; Li, D.; Du, J.; Gao, X.; Gu, W.; Zhao, F.; Feng, X.; Yan, H. An intelligent diagnosis method of brain MRI tumor segmentation using deep convolutional neural network and SVM algorithm. Comput. Math. Methods Med. 2020, 2020, 6789306.

51. Basha, C.Z.; Rohini, G.; Jayasri, A.V.; Anuradha, S. Enhanced and Effective Computerized Classification of X-Ray Images; IEEE: Piscataway, NJ, USA, 2020; pp. 86–91.

52. Islam, N.; Rashid, M.M.; Wibowo, S.; Xu, C.-Y.; Morshed, A.; Wasimi, S.A.; Moore, S.; Rahman, S.M. Early Weed Detection Using Image Processing and Machine Learning Techniques in an Australian Chilli Farm. Agriculture 2021, 11, 387.

53. Sasankar, P.; Kosarkar, U. A Study for Face Recognition Using Techniques PCA and KNN. EasyChair Print. 2021. Available online: https://wwww.easychair.org/publications/preprint_download/gS7Q (accessed on 30 January 2023).

54. Bharate, A.A.; Shirdhonkar, M. Classification of Grape Leaves Using KNN and SVM Classifiers; IEEE: Piscataway, NJ, USA, 2020; pp. 745–749.

55. Goncharov, P.; Ososkov, G.; Nechaevskiy, A.; Uzhinskiy, A.; Nestsiarenia, I. Disease Detection on the Plant Leaves by Deep Learning; Springer: Berlin/Heidelberg, Germany, 2018; pp. 151–159.

56. De Luna, R.G.; Dadios, E.P.; Bandala, A.A. Automated Image Capturing System for Deep Learning-Based Tomato Plant Leaf Disease Detection and Recognition; IEEE: Piscataway, NJ, USA, 2018; pp. 1414–1419.

57. Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

58. Oppenheim, D.; Shani, G.; Erlich, O.; Tsror, L. Using deep learning for image-based potato tuber disease detection. Phytopathology 2019, 109, 1083–1087.

59. Chowdhury, M.E.; Rahman, T.; Khandakar, A.; Ayari, M.A.; Khan, A.U.; Khan, M.S.; Al-Emadi, N.; Reaz, M.B.I.; Islam, M.T.; Ali, S.H.M. Automatic and Reliable Leaf Disease Detection Using Deep Learning Techniques. AgriEngineering 2021, 3, 294–312.

60. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: http://www.cs.utoronto.ca/~kriz/learning-features-2009-TR.pdf (accessed on 30 January 2023).

61. Rauf, H.T.; Lali, M.I.U. A Guava Fruits and Leaves Dataset for Detection and Classification of Guava Diseases through Machine Learning. Mendeley Data 2021, 1 (Dataset).

| Ref. | Year | Techniques | Dataset | Diseases | Results (%) |
|---|---|---|---|---|---|
| [41] | 2018 | Downscaling and squaring method, AlexNet, VGG-16, AlexNetOWTBn | 87,848 (58 classes) | Apple Scab, Black Rot, Early Blight, Brown Leaf Spot | 99.53 |
| [55] | 2018 | ResNet-50, deep Siamese convolutional network, t-SNE, KNN | PVD | Black Rot, Esca, Chlorosis | 90 |
| [56] | 2018 | Transfer learning, F-RCNN, classification | 4923 | Phoma Rot, Leaf Miner, Target Spot | 95.75 |
| [57] | 2019 | F-CNN, S-CNN, segmentation, annotation and labeling of the region of interest (lesions), random transformations (stretch, rotation, brightness, contrast, blur) | Independent dataset | Spider Mite, Target Spot | 98.6 |
| [58] | 2019 | VGG classification, resizing and conversion of images to grayscale | 2465 | Black dot and scurf | 96 |
| [40] | 2021 | EfficientNet-B5, AlexNet, ResNet50, classification | 61,486 | Late Blight, Bacterial Spot | 99.97 |
| [59] | 2021 | Resizing, normalization and augmentation, EfficientNet-B7, EfficientNet-B4, U-Net and modified U-Net segmentation models, Score-CAM visualization | 18,161 | Target Spot | 99.9 |

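| Measure | Mathematical Expression |
|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Recall | $\frac{TP}{TP + FN}$ |
| Precision (positive predictive value) | $\frac{TP}{TP + FP}$ |
| F1 score | $2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$ |

Here TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives.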
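The four measures in the table can be computed directly from confusion-matrix counts. A minimal sketch follows; the function name is hypothetical, and returning 0.0 when a denominator is zero is a common convention assumed here rather than something the source specifies.

```python
def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Guard against empty denominators (no predicted or no actual positives).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For example, 40 true positives, 50 true negatives, 5 false positives, and 5 false negatives give an accuracy of 0.9 and a precision, recall, and F1 of 40/45 ≈ 0.889.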

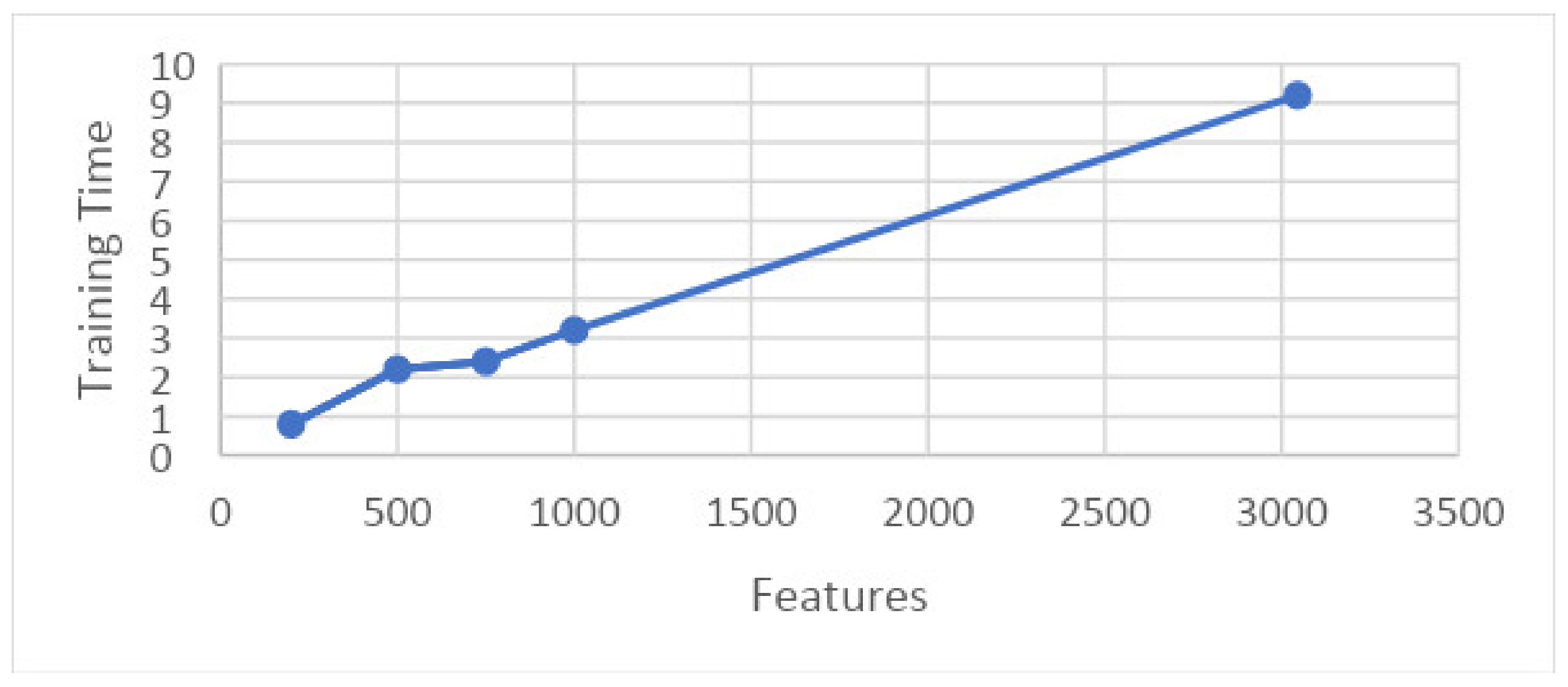

| Test Cases | Experiment # | Folds | Features | Classifier | Accuracy % | Training Time (s) |

|---|---|---|---|---|---|---|

| 1 | 1 (a) | 5 | 3045 | Quadratic SVM | 98.9 | 9.2 |

| 2 | 2 (a) | 5 | 200 | Fine Gaussian SVM | 81.1 | 0.8 |

| 3 | 3 (a) | 5 | 500 | Quadratic SVM | 84.1 | 2.2 |

| 4 | 4 (a) | 5 | 750 | Cubic SVM | 85.6 | 2.4 |

| 5 | 5 (a) | 5 | 1000 | Cubic SVM | 87.6 | 3.2 |

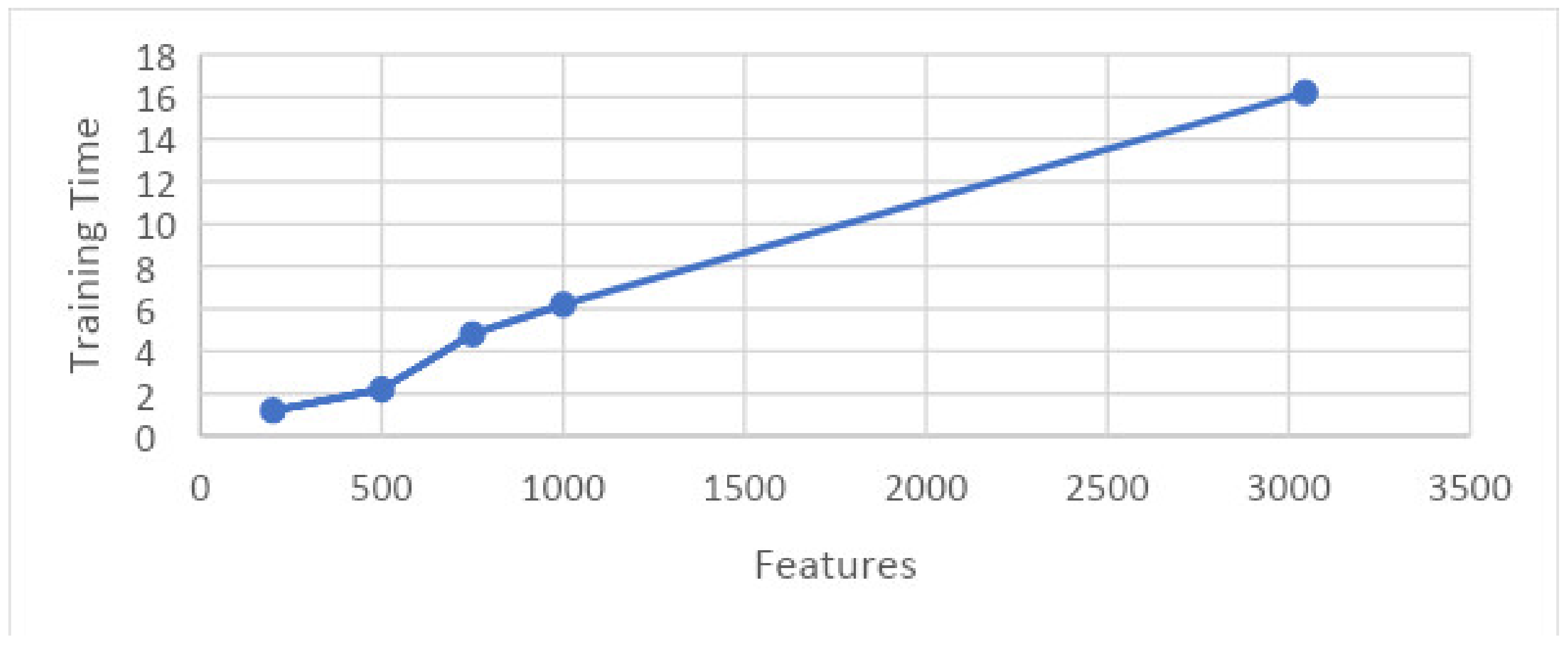

| 6 | 1 (b) | 10 | 3045 | Quadratic SVM | 99.2 | 16.2 |

| 7 | 2 (b) | 10 | 200 | Fine Gaussian SVM | 82.6 | 1.2 |

| 8 | 3 (b) | 10 | 500 | Fine Gaussian SVM | 85.2 | 2.20 |

| 9 | 4 (b) | 10 | 750 | Cubic SVM | 87.8 | 4.8 |

| 10 | 5 (b) | 10 | 1000 | Cubic SVM | 87.5 | 6.2 |

| Classifier | Accuracy (%) | Total Cost | Prediction Speed (obs/s) | Training Time (s) | Precision | F1-Score | Recall |
|---|---|---|---|---|---|---|---|
| Quadratic SVM | 98.9 | 12 | 760 | 9.21 | 0.99 | 0.99 | 0.99 |
| Linear SVM | 98.0 | 20 | 780 | 9.65 | 0.98 | 0.98 | 0.98 |
| Cubic SVM | 98.6 | 14 | 770 | 9.43 | 0.985 | 0.985 | 0.99 |
| Fine Gaussian SVM | 80.1 | 199 | 490 | 16.3 | 0.78 | 0.785 | 0.86 |
| Medium Gaussian SVM | 97.3 | 27 | 770 | 9.76 | 0.97 | 0.975 | 0.97 |
| Coarse Gaussian SVM | 92.5 | 75 | 740 | 10.35 | 0.915 | 0.925 | 0.92 |
| Weighted KNN | 95.0 | 50 | 360 | 12.89 | 0.945 | 0.95 | 0.95 |
| Fine KNN | 93.5 | 65 | 360 | 13.62 | 0.935 | 0.935 | 0.94 |
| Medium KNN | 87.8 | 122 | 360 | 12.94 | 0.865 | 0.875 | 0.90 |
| Coarse KNN | 81.3 | 187 | 360 | 12.81 | 0.795 | 0.795 | 0.87 |
| Cosine KNN | 89.8 | 101 | 330 | 13.52 | 0.89 | 0.895 | 0.91 |
| Cubic KNN | 87.5 | 125 | 73 | 58.97 | 0.865 | 0.87 | 0.90 |
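The "Total Cost" column is not defined in this text. If it is the number of misclassified validation observations (unit cost per error, as with a default cost matrix; this is an assumption), then accuracy follows as 1 − cost/N with N = 1002 observations. For example, the Linear SVM row gives 1 − 20/1002 ≈ 0.980, matching the reported 98.0%. The constant and function name below are hypothetical.

```python
N_OBSERVATIONS = 1002  # rows of the 1002 × 3045 feature matrix in Experiment 1


def accuracy_from_cost(total_cost, n=N_OBSERVATIONS):
    """Accuracy (%) implied by a total cost, assuming each misclassification costs 1."""
    return 100.0 * (1.0 - total_cost / n)
```

Under this reading, `accuracy_from_cost(20)` gives about 98.0 (Linear SVM, 5 folds) and `accuracy_from_cost(8)` about 99.2 (Quadratic SVM, 10 folds).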

| Classifier | Accuracy (%) | Total Cost | Prediction Speed (obs/s) | Training Time (s) | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Quadratic SVM | 99.2 | 8 | 460 | 16.221 | 0.995 | 0.99 | 0.99 |
| Linear SVM | 98.3 | 17 | 440 | 18.23 | 0.98 | 0.98 | 0.98 |
| Cubic SVM | 99.1 | 9 | 450 | 16.7 | 0.99 | 0.99 | 0.99 |
| Fine Gaussian SVM | 85.4 | 146 | 310 | 29.265 | 0.84 | 0.89 | 0.84 |
| Medium Gaussian SVM | 98.3 | 17 | 450 | 17.676 | 0.98 | 0.98 | 0.98 |
| Coarse Gaussian SVM | 93.6 | 64 | 450 | 18.689 | 0.93 | 0.94 | 0.93 |
| Weighted KNN | 94.3 | 57 | 190 | 23.037 | 0.935 | 0.95 | 0.94 |
| Fine KNN | 94.2 | 58 | 190 | 24.374 | 0.935 | 0.95 | 0.94 |
| Medium KNN | 88.2 | 118 | 190 | 23.12 | 0.87 | 0.91 | 0.87 |
| Coarse KNN | 82.7 | 173 | 190 | 23.278 | 0.81 | 0.88 | 0.81 |
| Cosine KNN | 91.0 | 90 | 180 | 24.438 | 0.9 | 0.92 | 0.90 |
| Cubic KNN | 88.3 | 117 | 57 | 73.713 | 0.87 | 0.91 | 0.87 |

| Classifier | Accuracy (%) | Total Cost | Prediction Speed (obs/s) | Training Time (s) | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Fine Gaussian SVM | 81.1 | 189 | 14,000 | 0.81571 | 0.8 | 0.82 | 0.805 |
| Linear SVM | 73.7 | 264 | 16,000 | 1.3486 | 0.735 | 0.735 | 0.73 |
| Cubic SVM | 80.4 | 210 | 15,000 | 1.8572 | 0.805 | 0.805 | 0.8 |
| Quadratic SVM | 78.4 | 216 | 15,000 | 1.7431 | 0.785 | 0.78 | 0.785 |
| Medium Gaussian SVM | 72.8 | 273 | 15,000 | 0.7667 | 0.71 | 0.74 | 0.715 |
| Coarse Gaussian SVM | 60.5 | 396 | 14,000 | 0.671 | 0.565 | 0.71 | 0.5 |
| Weighted KNN | 78.2 | 218 | 7700 | 0.76741 | 0.785 | 0.785 | 0.78 |
| Fine KNN | 74.8 | 253 | 7600 | 1.1917 | 0.75 | 0.75 | 0.75 |
| Medium KNN | 66.8 | 333 | 7800 | 0.8367 | 0.66 | 0.665 | 0.66 |
| Coarse KNN | 62.4 | 377 | 7200 | 0.79092 | 0.59 | 0.675 | 0.555 |
| Cosine KNN | 67.2 | 329 | 7500 | 0.766606 | 0.67 | 0.665 | 0.67 |
| Cubic KNN | 69.0 | 311 | 1200 | 3.8096 | 0.68 | 0.685 | 0.68 |

| Classifier | Accuracy (%) | Total Cost | Prediction Speed (obs/s) | Training Time (s) | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Fine Gaussian SVM | 82.6 | 174 | 8300 | 1.2664 | 0.815 | 0.83 | 0.825 |
| Linear SVM | 73.2 | 269 | 8000 | 1.8576 | 0.725 | 0.73 | 0.73 |
| Cubic SVM | 80.8 | 192 | 8400 | 5.6548 | 0.815 | 0.81 | 0.81 |
| Quadratic SVM | 78.2 | 218 | 7800 | 3.4237 | 0.78 | 0.78 | 0.78 |
| Medium Gaussian SVM | 73.9 | 262 | 8100 | 1.15 | 0.725 | 0.75 | 0.725 |
| Coarse Gaussian SVM | 60.2 | 399 | 8100 | 1.1668 | 0.565 | 0.715 | 0.49 |
| Weighted KNN | 78.6 | 214 | 4100 | 1.2662 | 0.79 | 0.785 | 0.78 |
| Fine KNN | 75.0 | 250 | 4200 | 1.8358 | 0.755 | 0.75 | 0.75 |
| Medium KNN | 65.9 | 342 | 4000 | 1.8129 | 0.655 | 0.655 | 0.65 |
| Coarse KNN | 62.0 | 381 | 4000 | 1.2544 | 0.59 | 0.67 | 0.55 |
| Cosine KNN | 65.9 | 342 | 3900 | 1.274 | 0.665 | 0.665 | 0.655 |
| Cubic KNN | 68.5 | 316 | 960 | 4.57 | 0.675 | 0.68 | 0.68 |

| Classifier | Accuracy (%) | Total Cost | Prediction Speed (obs/s) | Training Time (s) | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Quadratic SVM | 84.1 | 159 | 7000 | 2.2045 | 0.84 | 0.84 | 0.84 |
| Linear SVM | 79.0 | 210 | 6900 | 1.7001 | 0.785 | 0.79 | 0.79 |
| Cubic SVM | 82.4 | 176 | 7300 | 2.2117 | 1.64 | 1.65 | 1.65 |
| Fine Gaussian SVM | 83.6 | 164 | 6900 | 1.2768 | 1.66 | 1.68 | 1.67 |
| Medium Gaussian SVM | 75.8 | 242 | 6900 | 1.1461 | 0.745 | 0.765 | 0.75 |
| Coarse Gaussian SVM | 67.0 | 331 | 6800 | 1.1891 | 0.65 | 0.68 | 0.65 |
| Weighted KNN | 80.4 | 196 | 3100 | 1.5885 | 0.805 | 0.805 | 0.805 |
| Fine KNN | 77.6 | 224 | 3200 | 1.9972 | 0.78 | 0.775 | 0.775 |
| Medium KNN | 69.4 | 307 | 3200 | 1.6358 | 0.685 | 0.69 | 0.685 |
| Coarse KNN | 67.4 | 331 | 3100 | 1.584 | 0.65 | 0.695 | 0.64 |
| Cosine KNN | 68.5 | 316 | 3000 | 1.6165 | 0.685 | 0.685 | 0.685 |
| Cubic KNN | 73.3 | 268 | 470 | 9.3325 | 0.72 | 0.73 | 0.73 |

| Classifier | Accuracy (%) | Total Cost | Prediction Speed (obs/s) | Training Time (s) | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Fine Gaussian SVM | 85.2 | 148 | 3800 | 2.2088 | 0.845 | 0.86 | 0.85 |
| Linear SVM | 79.8 | 202 | 3600 | 4.5137 | 0.79 | 0.8 | 0.79 |
| Cubic SVM | 84.2 | 158 | 4100 | 4.4002 | 0.845 | 0.84 | 0.84 |
| Quadratic SVM | 84.7 | 153 | 4000 | 4.1644 | 0.845 | 0.845 | 0.845 |
| Medium Gaussian SVM | 75.9 | 241 | 4000 | 2.1057 | 0.75 | 0.765 | 0.755 |
| Coarse Gaussian SVM | 67.7 | 324 | 3900 | 2.0635 | 0.66 | 0.69 | 0.655 |
| Weighted KNN | 82.5 | 175 | 1800 | 2.6916 | 0.83 | 0.825 | 0.825 |
| Fine KNN | 79.4 | 206 | 1700 | 3.7172 | 0.795 | 0.795 | 0.79 |
| Medium KNN | 71.5 | 286 | 1800 | 2.7012 | 0.71 | 0.71 | 0.71 |
| Coarse KNN | 66.4 | 337 | 1800 | 2.667 | 0.645 | 0.685 | 0.63 |
| Cosine KNN | 67.9 | 322 | 1600 | 2.786 | 0.685 | 0.68 | 0.68 |
| Cubic KNN | 75.4 | 246 | 380 | 11.154 | 0.75 | 0.75 | 0.75 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mumtaz, S.; Raza, M.; Okon, O.D.; Rehman, S.U.; Ragab, A.E.; Rauf, H.T. A Hybrid Framework for Detection and Analysis of Leaf Blight Using Guava Leaves Imaging. Agriculture 2023, 13, 667. https://doi.org/10.3390/agriculture13030667

Mumtaz S, Raza M, Okon OD, Rehman SU, Ragab AE, Rauf HT. A Hybrid Framework for Detection and Analysis of Leaf Blight Using Guava Leaves Imaging. Agriculture. 2023; 13(3):667. https://doi.org/10.3390/agriculture13030667

Chicago/Turabian style: Mumtaz, Sidrah, Mudassar Raza, Ofonime Dominic Okon, Saeed Ur Rehman, Adham E. Ragab, and Hafiz Tayyab Rauf. 2023. "A Hybrid Framework for Detection and Analysis of Leaf Blight Using Guava Leaves Imaging" Agriculture 13, no. 3: 667. https://doi.org/10.3390/agriculture13030667

APA style: Mumtaz, S., Raza, M., Okon, O. D., Rehman, S. U., Ragab, A. E., & Rauf, H. T. (2023). A Hybrid Framework for Detection and Analysis of Leaf Blight Using Guava Leaves Imaging. Agriculture, 13(3), 667. https://doi.org/10.3390/agriculture13030667