Deep Learning-Based Segmentation of Intertwined Fruit Trees for Agricultural Tasks

Abstract

1. Introduction

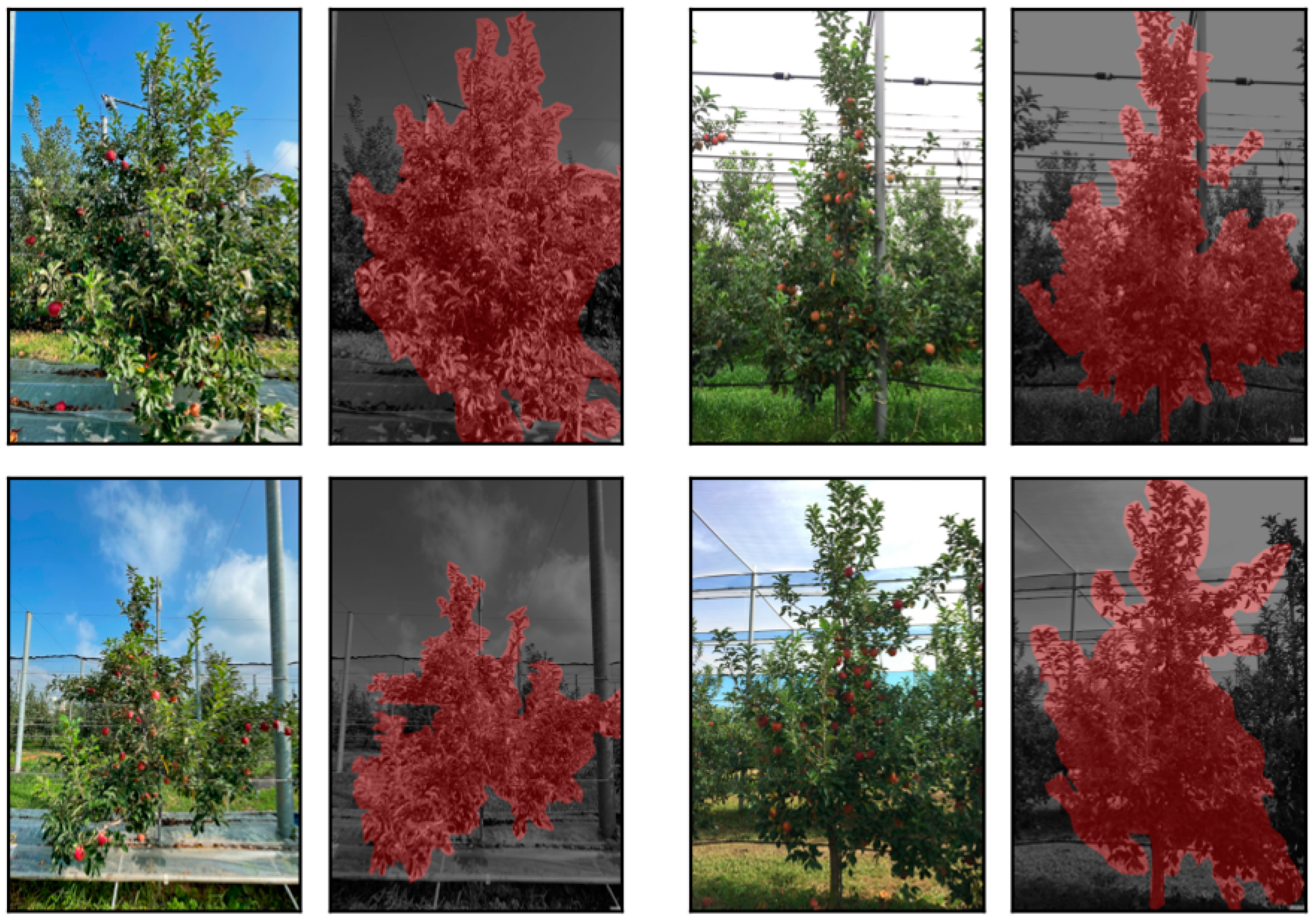

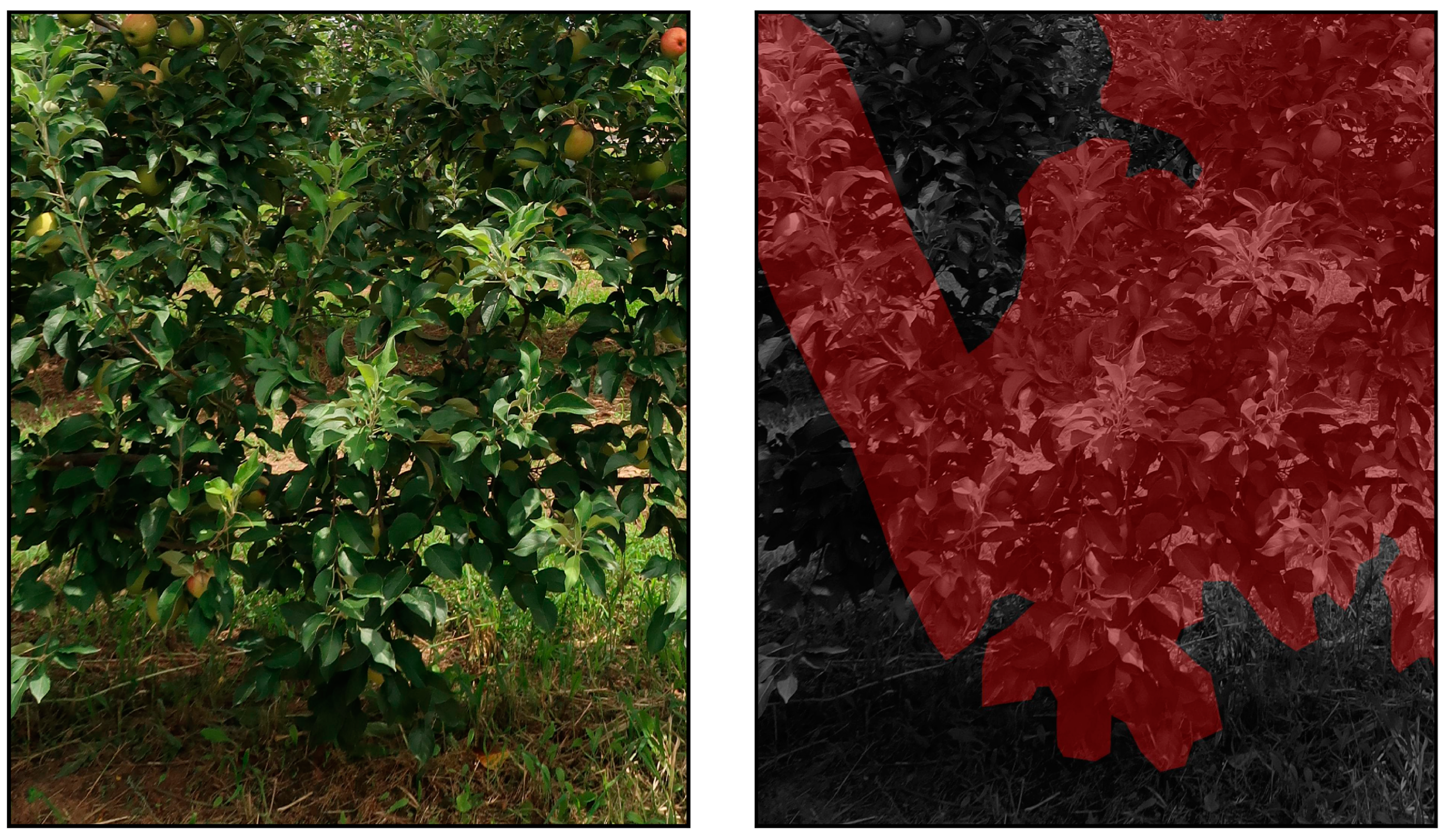

- We built a tree-segmentation dataset of apple trees in which the target tree is intertwined with neighboring trees. A human annotator accurately delineated the outlines of the target tree's branches and trunk using the LabelMe tool. To the best of our knowledge, this is the first dataset to address intertwined trees. The download URL for the dataset is given at the end of the main text.

- CNN- and transformer-based models were trained on this dataset to segment the target tree: Mask R-CNN with ResNet50, Swin-T, and Swin-S backbones, YOLACT, and YOLOv8. A comparative study showed that YOLOv8 performed best by a large margin in average precision (AP), most notably in mask AP@0.5:0.95 (84.2 versus 73.4 for the next-best model, Mask R-CNN with a Swin-S backbone). A qualitative analysis also confirmed the superiority of YOLOv8.
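The annotations described above are LabelMe polygons. The following is a minimal sketch, assuming LabelMe's standard JSON layout (`imageHeight`, `imageWidth`, and a `shapes` list whose entries carry `label`, `points`, and `shape_type`), of how the target tree's trunk and branch polygons could be merged into one binary mask for training. The label names `trunk` and `branch` are hypothetical; the labels actually used in the dataset are not stated here. Rasterization uses a pure-Python even-odd test at pixel centers, which keeps the example dependency-free but is slow on full-resolution images.

```python
import json


def polygon_to_mask(points, height, width):
    """Rasterize one polygon into a height x width mask of 0/1 values.

    A pixel counts as inside when its center (x + 0.5, y + 0.5) passes
    the even-odd (ray-casting) test against the polygon edges.
    """
    mask = [[0] * width for _ in range(height)]
    n = len(points)
    for y in range(height):
        cy = y + 0.5
        for x in range(width):
            cx = x + 0.5
            inside = False
            j = n - 1
            for i in range(n):
                xi, yi = points[i]
                xj, yj = points[j]
                # Does edge (j, i) cross the horizontal line y = cy?
                if (yi > cy) != (yj > cy):
                    x_cross = xj + (cy - yj) * (xi - xj) / (yi - yj)
                    if cx < x_cross:  # crossing lies to the right of the center
                        inside = not inside
                j = i
            mask[y][x] = int(inside)
    return mask


def labelme_to_mask(ann, keep_labels=("trunk", "branch")):
    """Merge all polygons whose label is in keep_labels into one binary mask.

    keep_labels is a hypothetical label set; adjust it to the dataset's labels.
    """
    h, w = ann["imageHeight"], ann["imageWidth"]
    merged = [[0] * w for _ in range(h)]
    for shape in ann["shapes"]:
        if shape.get("shape_type", "polygon") != "polygon":
            continue  # skip rectangles, points, lines, etc.
        if shape["label"] not in keep_labels:
            continue  # e.g. polygons drawn around neighboring trees
        poly = polygon_to_mask(shape["points"], h, w)
        for y in range(h):
            for x in range(w):
                merged[y][x] |= poly[y][x]
    return merged


def load_labelme(path):
    """Read one LabelMe annotation file (JSON) from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

In practice, `labelme_to_mask(load_labelme("image_0001.json"))` (a hypothetical file name) would yield the per-image target mask; polygons with any other label are ignored.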

2. Related Works on Tree Segmentation

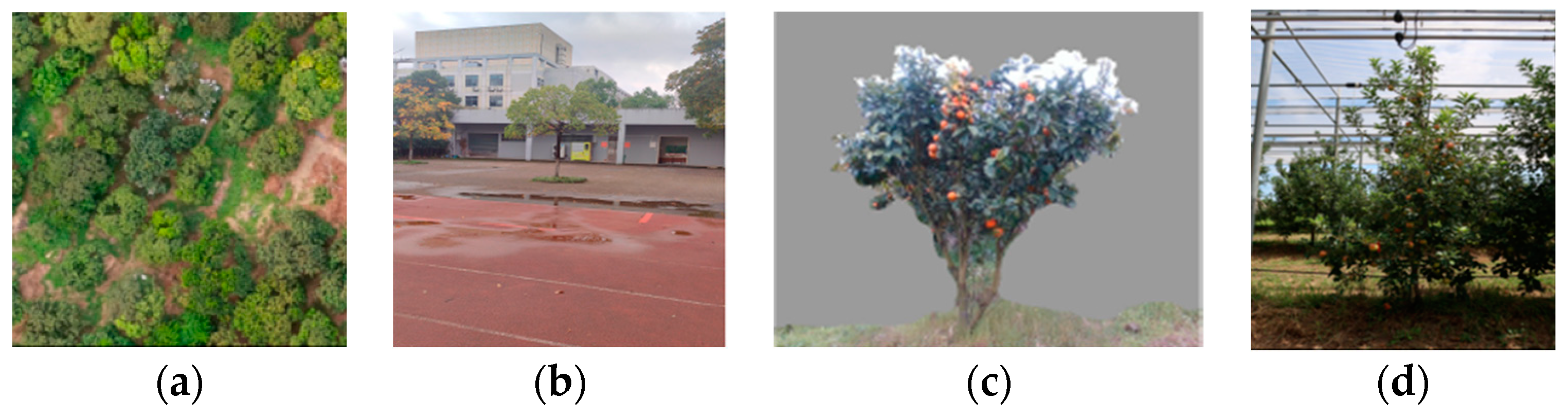

2.1. Summary of Related Studies on Agricultural and Digital Forestry Domains

2.2. Detailed Description of Agricultural Domain

2.3. Discussion

- Research on digital forestry is more plentiful than research in the agricultural domain. Within the agricultural domain, front-view studies using RGB-D images outnumber those using RGB images. Given the growing importance of agricultural tasks, the availability of high-quality smartphone cameras, and high-performance deep learning models pre-trained on RGB images, more active research using RGB cameras is needed in the agricultural domain.

- Many studies have used traditional rule-based segmentation algorithms rather than modern deep learning models. Tree segmentation research needs to keep pace with rapidly evolving deep-learning-based segmentation models.

- Only a few public datasets are available. More datasets should be constructed and publicly released to stimulate research and enable objective performance comparisons.

- No research has addressed the segmentation of intertwined trees. Because many orchards contain intertwined fruit trees, this situation warrants active research.
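The RGB versus RGB-D comparison in the first point above can be checked by tallying the agricultural rows of the related-studies table. This is a minimal sketch with the rows transcribed by hand from that table (the authors' own row excluded); the result depends on whether the top-view UAV studies are counted.

```python
from collections import Counter

# (reference, image type, view) for the agricultural rows of the
# related-studies table, excluding the authors' own entry ("Ours").
AGRI_ROWS = [
    ("[10]", "RGB-D", "front"), ("[11]", "RGB-D", "front"),
    ("[12]", "RGB-D", "front"), ("[13]", "RGB-D", "front"),
    ("[5]", "RGB-D", "front"), ("[4]", "RGB-D", "front"),
    ("[14]", "RGB-D", "front"), ("[8]", "RGB-D", "front"),
    ("[15]", "RGB-D", "front"),
    ("[16]", "RGB", "front"), ("[17]", "RGB", "front"),
    ("[18]", "RGB", "front"), ("[19]", "RGB", "front"),
    ("[20]", "RGB", "front"), ("[7]", "RGB", "front"),
    ("[21]", "RGB", "top"), ("[22]", "RGB", "top"),
    ("[23]", "RGB", "top"), ("[24]", "RGB", "top"),
]


def count_image_types(rows, view=None):
    """Count studies per image type, optionally restricted to one view."""
    return Counter(img for _, img, v in rows if view is None or v == view)


if __name__ == "__main__":
    print("front view:", dict(count_image_types(AGRI_ROWS, "front")))
    print("all views: ", dict(count_image_types(AGRI_ROWS)))
```

Restricted to front-view studies, RGB-D (9) outnumbers RGB (6); adding the four top-view RGB studies brings the RGB count to 10.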

3. Materials and Methods

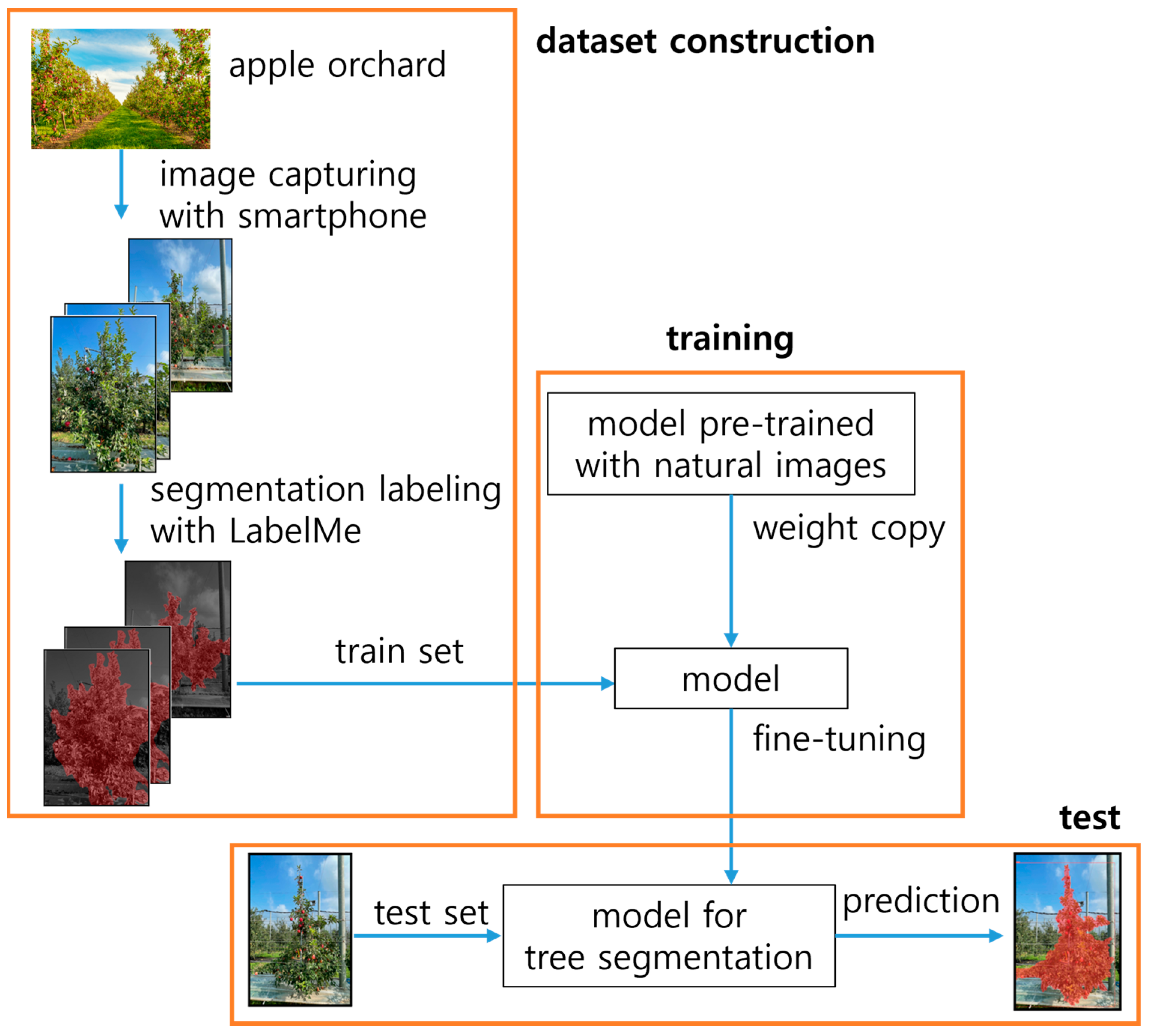

3.1. Dataset Construction

3.1.1. Collection of Apple Tree Images

3.1.2. Labeling of Tree Regions

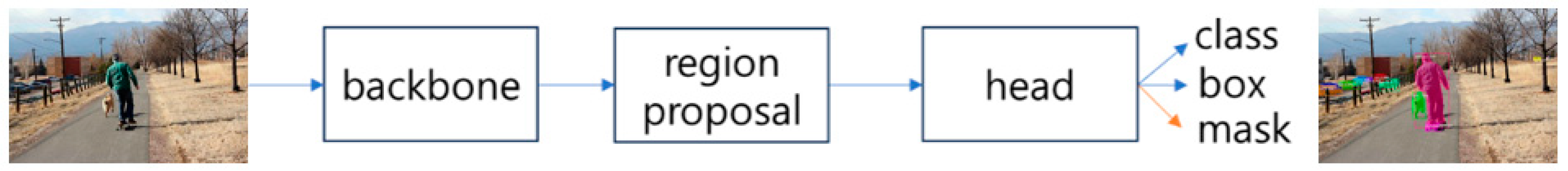

3.2. Deep Learning Models for Tree Segmentation

3.2.1. Selection of Pre-Trained Models

3.2.2. Fine-Tuning Using Our Dataset

4. Results

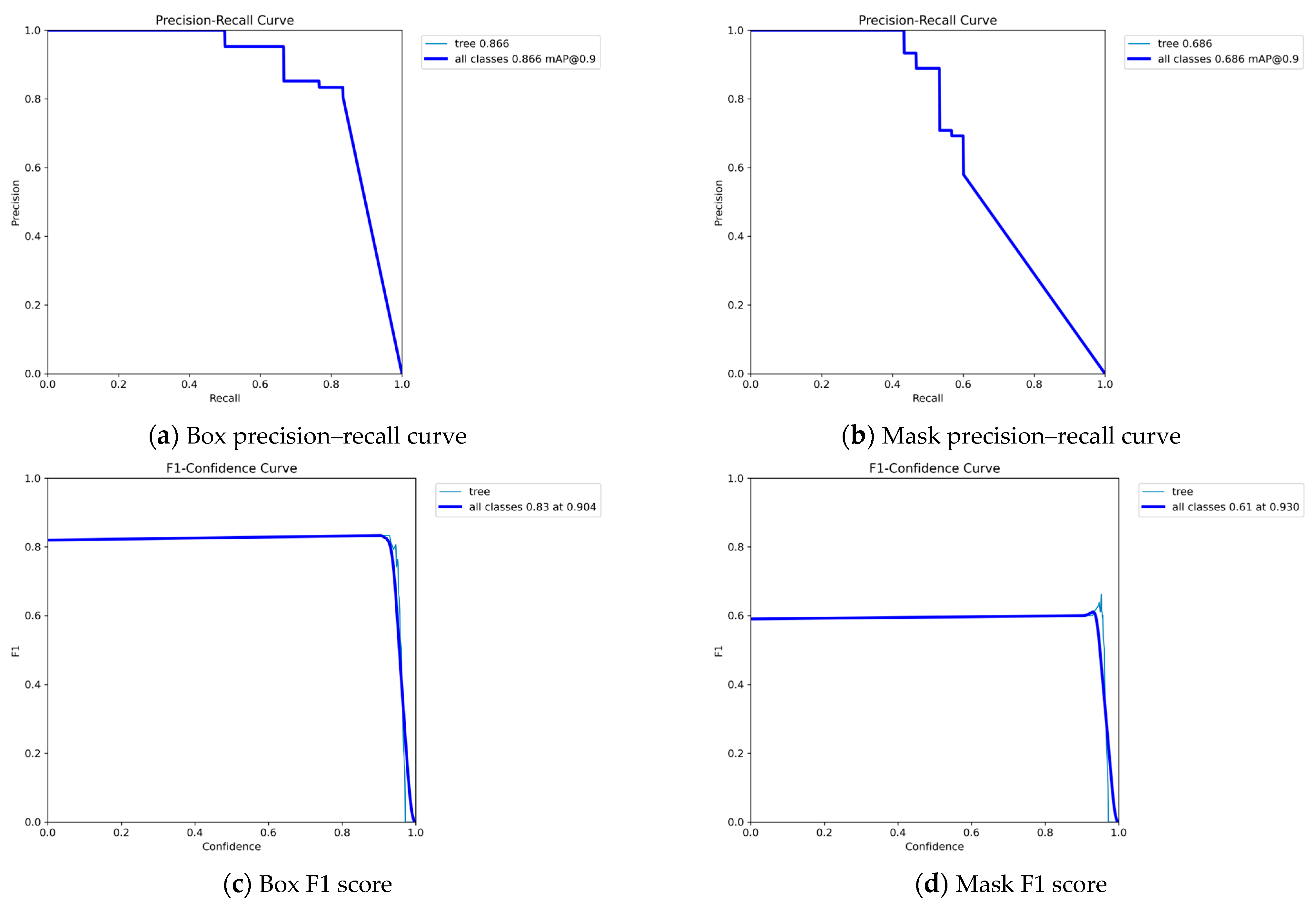

4.1. AP Analysis

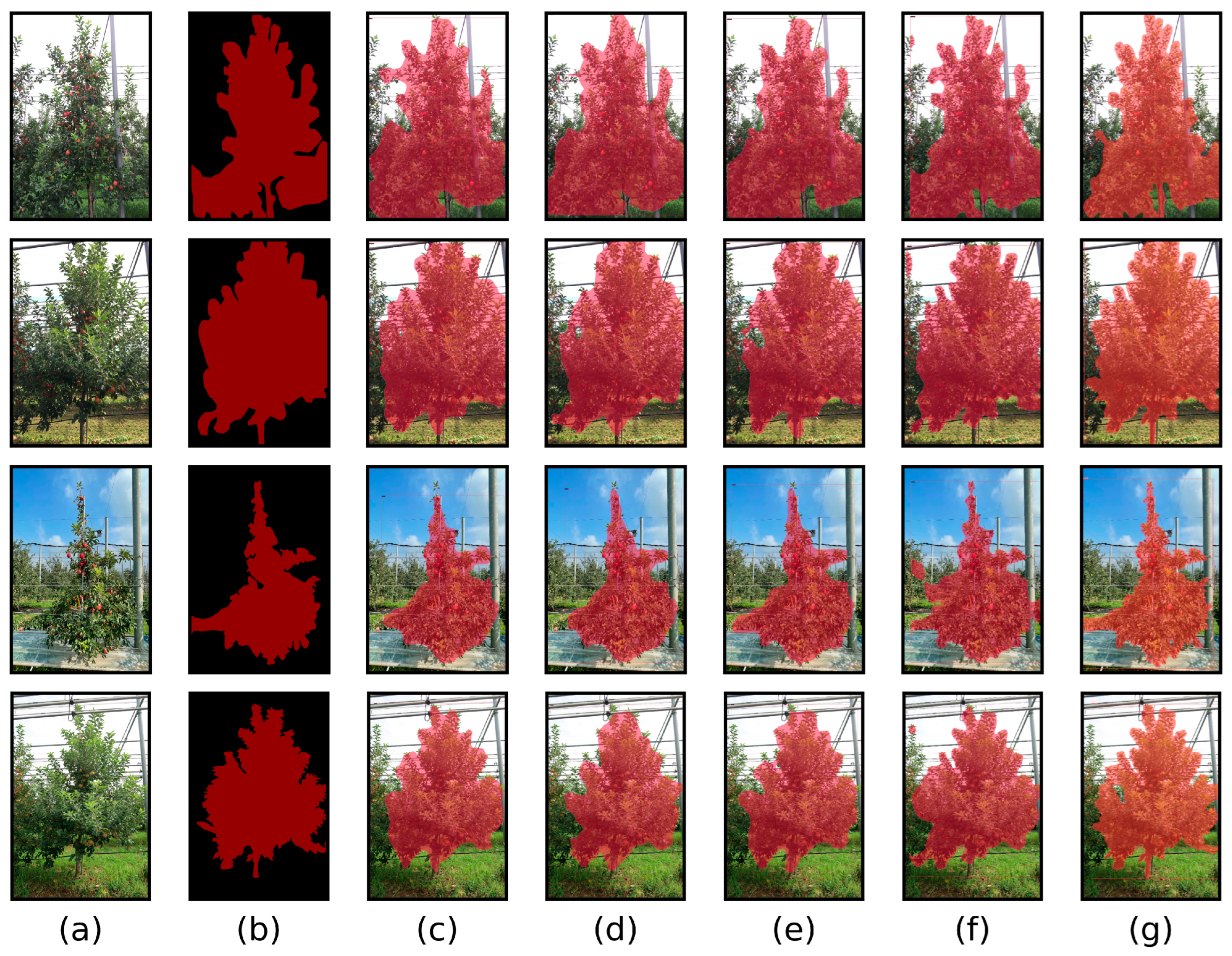

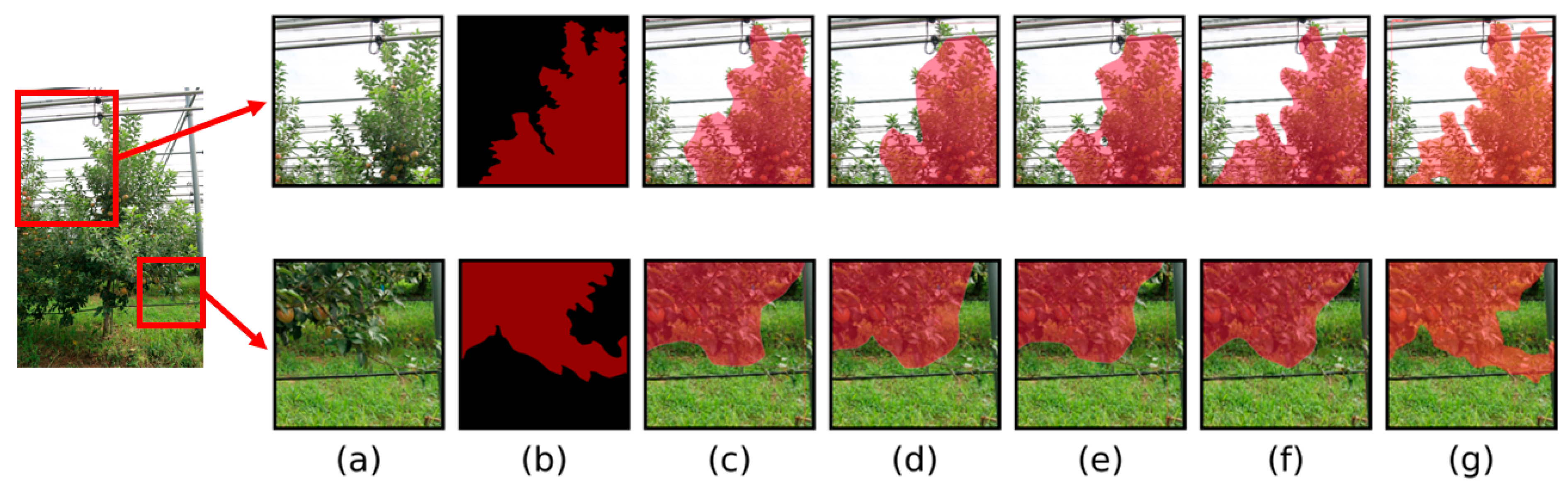

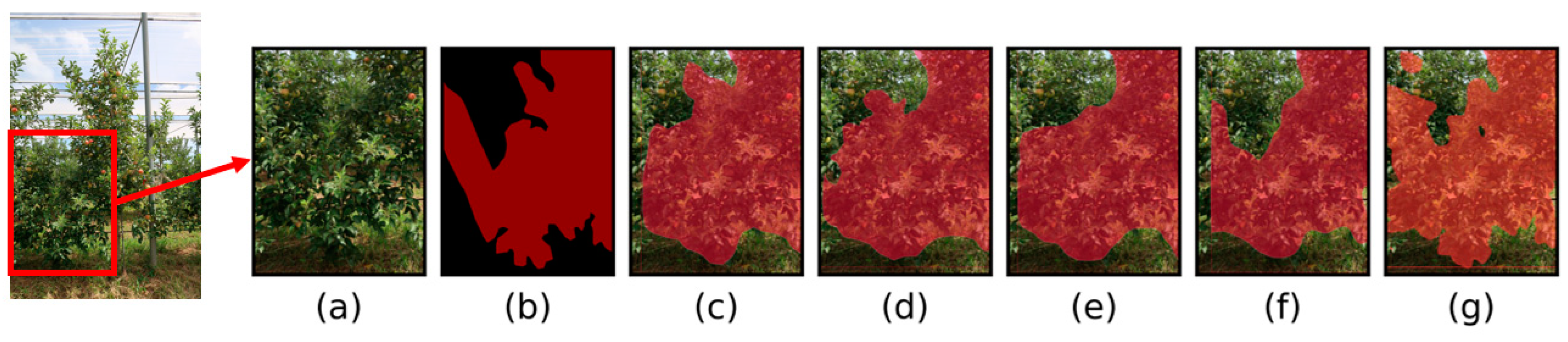

4.2. Qualitative Analysis

4.3. Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Turner-Skoff, J.B.; Cavender, N. The Benefits of Trees for Livable and Sustainable Communities. Plants People Planet 2019, 1, 323–335.

2. Chehreh, B.; Moutinho, A.; Viegas, C. Latest Trends on Tree Classification and Segmentation Using UAV Data—A Review of Agroforestry Applications. Remote Sens. 2023, 15, 2263.

3. Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3523–3542.

4. Chen, Z.; Ting, D.; Newbury, R.; Chen, C. Semantic Segmentation for Partially Occluded Apple Trees Based on Deep Learning. Comput. Electron. Agric. 2021, 181, 105952.

5. Häni, N.; Roy, P.; Isler, V. A Comparative Study of Fruit Detection and Counting Methods for Yield Mapping in Apple Orchards. J. Field Robot. 2019, 37, 263–282.

6. Mo, J.; Lan, Y.; Yang, D.; Wen, F.; Qiu, H.; Chen, X.; Deng, X. Deep Learning-Based Instance Segmentation Method of Litchi Canopy from UAV-Acquired Images. Remote Sens. 2021, 13, 3919.

7. Cao, L.; Zheng, X.; Fang, L. The Semantic Segmentation of Standing Tree Images Based on the Yolov7 Deep Learning Algorithm. Electronics 2023, 12, 929.

8. Cong, P.; Zhou, J.; Li, S.; Lv, K.; Feng, H. Citrus Tree Crown Segmentation of Orchard Spraying Robot Based on RGB-D Image and Improved Mask R-CNN. Appl. Sci. 2022, 13, 164.

9. Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.

10. Xiao, K.; Ma, Y.; Gao, G. An Intelligent Precision Orchard Pesticide Spray Technique Based on the Depth-of-Field Extraction Algorithm. Comput. Electron. Agric. 2017, 133, 30–36.

11. Milella, A.; Marani, R.; Petitti, A.; Reina, G. In-Field High Throughput Grapevine Phenotyping with a Consumer-Grade Depth Camera. Comput. Electron. Agric. 2019, 156, 293–306.

12. Dong, W.; Roy, P.; Isler, V. Semantic Mapping for Orchard Environments by Merging Two-Sides Reconstructions of Tree Rows. J. Field Robot. 2019, 37, 97–121.

13. Gao, G.; Xiao, K.; Jun, Y. A Spraying Path Planning Algorithm Based on Colour-Depth Fusion Segmentation in Peach Orchards. Comput. Electron. Agric. 2020, 173, 105412.

14. Lin, G.; Tang, Y.; Zou, X.; Wang, C. Three-Dimensional Reconstruction of Guava Fruits and Branches Using Instance Segmentation and Geometry Analysis. Comput. Electron. Agric. 2021, 184, 106107.

15. Seol, J.; Kim, J.; Son, H.I. Field Evaluations of a Deep Learning-Based Intelligent Spraying Robot with Flow Control for Pear Orchards. Precis. Agric. 2022, 23, 712–732.

16. Zhang, J.; He, L.; Karkee, M.; Zhang, Q.; Zhang, X.; Gao, Z. Branch Detection for Apple Trees Trained in Fruiting Wall Architecture Using Depth Features and Regions-Convolutional Neural Network (R-CNN). Comput. Electron. Agric. 2018, 155, 386–393.

17. Asaei, H.; Jafari, A.; Loghavi, M. Site-Specific Orchard Sprayer Equipped with Machine Vision for Chemical Usage Management. Comput. Electron. Agric. 2019, 162, 431–439.

18. Majeed, Y.; Zhang, J.; Zhang, X.; Fu, L.; Karkee, M.; Zhang, Q.; Whiting, M.D. Deep Learning Based Segmentation for Automated Training of Apple Trees on Trellis Wires. Comput. Electron. Agric. 2020, 170, 105277.

19. Song, Z.; Zhou, Z.; Wang, W.; Gao, F.; Fu, L.; Li, R.; Cui, Y. Canopy Segmentation and Wire Reconstruction for Kiwifruit Robotic Harvesting. Comput. Electron. Agric. 2021, 181, 105933.

20. Lin, G.; Chen, Z.; Xu, Y.; Wang, M.; Zhang, Z.; Zhu, L. Real-Time Guava Tree-Part Segmentation Using Fully Convolutional Network with Channel and Spatial Attention. Front. Plant Sci. 2022, 13, 991487.

21. Chen, Y.; Hou, C.; Tang, Y.; Zhuang, J.; Lin, J.; He, Y.; Guo, Q.; Zhong, Z.; Lei, H.; Luo, S. Citrus Tree Segmentation from UAV Images Based on Monocular Machine Vision in a Natural Orchard Environment. Sensors 2019, 19, 5558.

22. Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive Tree Biovolume from UAV Multi-Resolution Image Segmentation with Mask R-CNN. Sensors 2021, 21, 1617.

23. Lu, Z.; Qi, L.; Zhang, H.; Wan, J.; Zhou, J. Image Segmentation of UAV Fruit Tree Canopy in a Natural Illumination Environment. Agriculture 2022, 12, 1039.

24. Gibril, M.B.A.; Shafri, H.Z.M.; Al-Ruzouq, R.; Shanableh, A.; Nahas, F.; Al Mansoori, S. Large-Scale Date Palm Tree Segmentation from Multiscale UAV-Based and Aerial Images Using Deep Vision Transformers. Drones 2023, 7, 93.

25. Wallace, L.; Lucieer, A.; Watson, C.S. Evaluating Tree Detection and Segmentation Routines on Very High Resolution UAV LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7619–7628.

26. Hu, X.; Li, D. Research on a Single-Tree Point Cloud Segmentation Method Based on UAV Tilt Photography and Deep Learning Algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4111–4120.

27. Hu, T.; Sun, X.; Su, Y.; Guan, H.; Sun, Q.; Kelly, M.; Guo, Q. Development and Performance Evaluation of a Very Low-Cost UAV-Lidar System for Forestry Applications. Remote Sens. 2020, 13, 77.

28. Krůček, M.; Král, K.; Cushman, K.; Missarov, A.; Kellner, J.R. Supervised Segmentation of Ultra-High-Density Drone Lidar for Large-Area Mapping of Individual Trees. Remote Sens. 2020, 12, 3260.

29. Kuželka, K.; Slavík, M.; Surový, P. Very High Density Point Clouds from UAV Laser Scanning for Automatic Tree Stem Detection and Direct Diameter Measurement. Remote Sens. 2020, 12, 1236.

30. Torresan, C.; Carotenuto, F.; Chiavetta, U.; Miglietta, F.; Zaldei, A.; Gioli, B. Individual Tree Crown Segmentation in Two-Layered Dense Mixed Forests from UAV LiDAR Data. Drones 2020, 4, 10.

31. Yan, W.; Guan, H.; Cao, L.; Yu, Y.; Li, C.; Lu, J.G. A Self-Adaptive Mean Shift Tree-Segmentation Method Using UAV LiDAR Data. Remote Sens. 2020, 12, 515.

32. Chen, X.; Jiang, K.; Zhu, Y.; Wang, X.; Yun, T. Individual Tree Crown Segmentation Directly from UAV-Borne LiDAR Data Using the PointNet of Deep Learning. Forests 2021, 12, 131.

33. Chen, Q.; Wang, X.; Hang, M.; Li, J. Research on the Improvement of Single Tree Segmentation Algorithm Based on Airborne LiDAR Point Cloud. Open Geosci. 2021, 13, 705–716.

34. Neuville, R.; Bates, J.S.; Jonard, F. Estimating Forest Structure from UAV-Mounted LiDAR Point Cloud Using Machine Learning. Remote Sens. 2021, 13, 352.

35. Chen, Q.; Gao, T.; Zhu, J.; Wu, F.; Li, X.; Lu, D.; Yu, F. Individual Tree Segmentation and Tree Height Estimation Using Leaf-off and Leaf-on UAV-LiDAR Data in Dense Deciduous Forests. Remote Sens. 2022, 14, 2787.

36. Li, Y.; Chai, G.; Wang, Y.; Lei, L.; Zhang, X. ACE R-CNN: An Attention Complementary and Edge Detection-Based Instance Segmentation Algorithm for Individual Tree Species Identification Using UAV RGB Images and LiDAR Data. Remote Sens. 2022, 14, 3035.

37. Ma, K.; Chen, Z.; Fu, L.; Tian, W.; Jiang, F.; Yi, J.; Du, Z.; Sun, H. Performance and Sensitivity of Individual Tree Segmentation Methods for UAV-LiDAR in Multiple Forest Types. Remote Sens. 2022, 14, 298.

38. Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual Tree Segmentation and Tree Species Classification in Subtropical Broadleaf Forests Using UAV-Based LiDAR, Hyperspectral, and Ultrahigh-Resolution RGB Data. Remote Sens. Environ. 2022, 280, 113143.

39. Terryn, L.; Calders, K.; Bartholomeus, H.; Bartolo, R.E.; Brede, B.; D’hont, B.; Disney, M.; Herold, M.; Lau, A.; Shenkin, A.; et al. Quantifying Tropical Forest Structure through Terrestrial and UAV Laser Scanning Fusion in Australian Rainforests. Remote Sens. Environ. 2022, 271, 112912.

40. Deng, S.; Katoh, M.; Yu, X.; Hyyppä, J.; Gao, T. Comparison of Tree Species Classifications at the Individual Tree Level by Combining ALS Data and RGB Images Using Different Algorithms. Remote Sens. 2016, 8, 1034.

41. Puliti, S.; Talbot, B.; Astrup, R. Tree-Stump Detection, Segmentation, Classification, and Measurement Using Unmanned Aerial Vehicle (UAV) Imagery. Forests 2018, 9, 102.

42. Kattenborn, T.; Eichel, J.; Fassnacht, F.E. Convolutional Neural Networks Enable Efficient, Accurate and Fine-Grained Segmentation of Plant Species and Communities from High-Resolution UAV Imagery. Sci. Rep. 2019, 9, 17656.

43. Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; Elena Cué La Rosa, L.; Marcato Junior, J.; Martins, J.; Olã Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying Fully Convolutional Architectures for Semantic Segmentation of a Single Tree Species in Urban Environment on High Resolution UAV Optical Imagery. Sensors 2020, 20, 563.

44. Zhang, C.; Zhou, J.; Wang, H.; Tan, T.; Cui, M.; Huang, Z.; Wang, P.; Zhang, L. Multi-Species Individual Tree Segmentation and Identification Based on Improved Mask R-CNN and UAV Imagery in Mixed Forests. Remote Sens. 2022, 14, 874.

45. Firoze, A.; Wingren, C.; Yeh, R.; Benes, B.; Aliaga, D. Tree Instance Segmentation with Temporal Contour Graph. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 2193–2202.

46. Yang, R.; Fang, W.; Sun, X.; Jing, X.; Fu, L.; Wei, X.; Li, R. An Aerial Point Cloud Dataset of Apple Tree Detection and Segmentation with Integrating RGB Information and Coordinate Information. IEEE Dataport 2023.

47. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 2961–2969.

48. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; Available online: https://openaccess.thecvf.com/content/ICCV2021/html/Liu_Swin_Transformer_Hierarchical_Vision_Transformer_Using_Shifted_Windows_ICCV_2021_paper (accessed on 23 September 2023).

49. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; pp. 91–99. Available online: https://proceedings.neurips.cc/paper_files/paper/2015/hash/14bfa6bb14875e45bba028a21ed38046-Abstract.html (accessed on 23 September 2023).

50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

51. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010. Available online: https://proceedings.neurips.cc/paper_files/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html (accessed on 23 September 2023).

52. Terven, J.; Cordova-Esparza, D. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. arXiv 2023, arXiv:2304.00501.

53. Jocher, G. YOLO by Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 23 September 2023).

54. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT++: Better Real-Time Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1108–1121.

55. Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020.

56. Lu, Y.; Young, S. A Survey of Public Datasets for Computer Vision Tasks in Precision Agriculture. Comput. Electron. Agric. 2020, 178, 105760.

| Domain | Papers | Sensor Type | Image Type | View * | Segmentation Target | Segmentation Algorithm | Potential Application |
|---|---|---|---|---|---|---|---|
| Agriculture | [10] | RGB-D camera | RGB-D | front | single tree | thresholding point cloud | spraying |
| | [11] | RGB-D camera | RGB-D | front | individual trees | thresholding point cloud | phenotyping |
| | [12] | RGB-D camera | RGB-D | front | single tree | deep learning (mask R-CNN) | phenotyping |
| | [13] | RGB-D camera | RGB-D | front | individual trees | color–depth fusion | spraying |
| | [5] | RGB-D camera | RGB-D | front | single tree | thresholding depth map | yield estimation |
| | [4] | RGB-D camera | RGB-D | front | single tree | deep learning (U-Net) | pruning |
| | [14] | RGB-D camera | RGB-D | front | single tree | deep learning (mask R-CNN) | harvesting |
| | [8] | RGB-D camera | RGB-D | front | single tree | deep learning (mask R-CNN) | spraying |
| | [15] | RGB-D camera | RGB-D | front | single tree | deep learning (SegNet) | spraying |
| | [16] | RGB camera | RGB | front | single tree | deep learning (R-CNN) | harvesting |
| | [17] | RGB camera | RGB | front | single tree | thresholding green channel | spraying |
| | [18] | RGB camera | RGB | front | single tree | deep learning (SegNet) | tree training |
| | [19] | RGB camera | RGB | front | single tree | deep learning (DeepLabV3++) | harvesting |
| | [20] | RGB camera | RGB | front | single tree | deep learning (MobileNet) | harvesting |
| | [7] | RGB camera | RGB | front | single tree | deep learning (YOLOv7) | phenotyping |
| | Ours | RGB camera | RGB | front | single tree | deep learning (YOLOv8) | multipurpose |
| | [21] | RGB camera | RGB | top | individual trees | SVM | tree population |
| | [22] | RGB camera | RGB | top | individual trees | deep learning (mask R-CNN) | phenotyping |
| | [23] | RGB camera | RGB | top | individual trees | naïve Bayes | spraying |
| | [24] | RGB camera | RGB | top | individual trees | deep learning (SegFormer) | phenotyping |
| Digital forestry | [25] | LiDAR | point cloud | front | individual trees | region growing | quantifying forest structure |
| | [26] | LiDAR | point cloud | top | individual trees | deep learning (T-Net) | quantifying forest structure |
| | [27] | LiDAR | point cloud | top | single tree | tree growing process | quantifying forest structure |
| | [28] | LiDAR | point cloud | top | individual trees | random forest | quantifying forest structure |
| | [29] | LiDAR | point cloud | top | individual trees | Hough transform | quantifying forest structure |
| | [30] | LiDAR | point cloud | top | individual trees | region growing | quantifying forest structure |
| | [31] | LiDAR | point cloud | top | individual trees | mean shift | quantifying forest structure |
| | [32] | LiDAR | point cloud | top | individual trees | deep learning (PointNet) | quantifying forest structure |
| | [33] | LiDAR | point cloud | top | single tree | DBSCAN and k-means | quantifying forest structure |
| | [34] | LiDAR | point cloud | front | individual trees | HDBSCAN clustering | quantifying forest structure |
| | [35] | LiDAR | point cloud | top | individual trees | watershed | quantifying forest structure |
| | [36] | LiDAR | RGB | top | individual trees | deep learning (mask R-CNN) | tree species classification |
| | [37] | LiDAR | point cloud | top | individual trees | watershed | quantifying forest structure |
| | [38] | LiDAR | RGB | top | individual trees | watershed and random forest | tree species classification |
| | [39] | LiDAR | point cloud | front and top | individual trees | region growing | quantifying forest structure |
| | [40] | RGB camera | RGB | top | individual trees | watershed and SVM | tree species classification |
| | [41] | RGB camera | RGB | top | tree stumps | region growing | tree population |
| | [42] | RGB camera | RGB | top | individual trees | deep learning (U-Net) | tree species classification |
| | [43] | RGB camera | RGB | top | single tree | deep learning (FC-DenseNet) | quantifying forest structure |
| | [6] | RGB camera | RGB | top | individual trees | deep learning (YOLACT) | quantifying forest structure |
| | [44] | RGB camera | RGB | top | individual trees | deep learning (mask R-CNN) | quantifying forest structure |
| | [45] | RGB camera | RGB | top | individual trees | temporal contour graph | tree population |

Pre-trained models (segmentation model and backbone) and hyper-parameters for fine-tuning:

| Segmentation Model | Backbone | Loss Function | Optimizer | Batch Size | Learning Rate |
|---|---|---|---|---|---|
| Mask R-CNN | ResNet50 | CrossEntropyLoss | SGD | 16 | 0.02 |
| Mask R-CNN | Swin-T | CrossEntropyLoss | AdamW | 16 | 0.0001 |
| Mask R-CNN | Swin-S | CrossEntropyLoss | AdamW | 8 | 0.0001 |
| YOLACT | ResNet101 | CrossEntropyLoss | SGD | 8 | 0.001 |
| YOLOv8 | CSPDarknet53 | Focal Loss | SGD | 4 | 0.01 |
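The YOLOv8 row of the table could be expressed with the Ultralytics package (cited as "YOLO by Ultralytics") roughly as follows. This is a sketch under stated assumptions, not the authors' training script: only the optimizer (SGD), batch size (4), and learning rate (0.01) come from the table; the checkpoint size (`yolov8n-seg.pt`), the dataset YAML and its paths, the single class name `tree`, the epoch count, and the image size are hypothetical placeholders. The loss column is not reproduced here.

```python
from pathlib import Path

# Hypothetical dataset description in the Ultralytics YAML format; the paths
# and the single class name "tree" are placeholders, not taken from the paper.
DATA_YAML = """\
path: datasets/apple_tree_seg
train: images/train
val: images/val
names:
  0: tree
"""


def yolov8_train_kwargs(data="apple_tree_seg.yaml", epochs=100, imgsz=640):
    """Keyword arguments for YOLO(...).train().

    batch, optimizer and lr0 follow the YOLOv8 row of the table;
    epochs and imgsz are assumptions.
    """
    return {
        "data": data,
        "epochs": epochs,
        "imgsz": imgsz,
        "batch": 4,
        # Set explicitly: with optimizer="auto", Ultralytics picks lr0 itself.
        "optimizer": "SGD",
        "lr0": 0.01,
    }


def train_yolov8_seg(weights="yolov8n-seg.pt", data_dir="."):
    """Fine-tune a COCO-pretrained YOLOv8 segmentation checkpoint.

    Requires `pip install ultralytics`; the checkpoint size is an assumption.
    """
    from ultralytics import YOLO  # imported lazily so the helpers work without it

    yaml_path = Path(data_dir) / "apple_tree_seg.yaml"
    yaml_path.write_text(DATA_YAML, encoding="utf-8")
    model = YOLO(weights)
    return model.train(**yolov8_train_kwargs(data=str(yaml_path)))
```

Keeping the hyper-parameters in a plain dictionary makes it easy to compare them against the table without running a training job.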

| Segmentation Model | Backbone | Box AP@0.5 | Box AP@0.75 | Box AP@0.5:0.95 | Mask AP@0.5 | Mask AP@0.75 | Mask AP@0.5:0.95 |
|---|---|---|---|---|---|---|---|
| Mask R-CNN | ResNet50 | 100.0 | 95.7 | 77.1 | 100.0 | 93.3 | 69.0 |
| Mask R-CNN | Swin-T | 100.0 | 91.5 | 78.4 | 100.0 | 95.3 | 71.0 |
| Mask R-CNN | Swin-S | 100.0 | 100.0 | 81.8 | 100.0 | 95.4 | 73.4 |
| YOLACT | ResNet101 | 100.0 | 100.0 | 80.2 | 100.0 | 93.2 | 72.1 |
| YOLOv8 | CSPDarknet53 | 99.5 | 99.5 | 93.7 | 99.5 | 99.5 | 84.2 |
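The AP columns follow standard detection and segmentation metrics of the kind surveyed in [55]: a prediction counts as a true positive when its IoU with the ground truth reaches a threshold, AP summarizes the precision–recall curve at that threshold, and AP@0.5:0.95 averages AP over the ten thresholds 0.50, 0.55, ..., 0.95. The sketch below is a minimal illustration, assuming one ground-truth target tree per image and all-point interpolation of the precision–recall curve; COCO-style evaluators use 101-point interpolation, so their values can differ slightly. It returns fractions, whereas the table reports percentages.

```python
def mask_iou(a, b):
    """Intersection over union of two equally sized binary masks (nested lists of 0/1)."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += pa & pb
            union += pa | pb
    return inter / union if union else 0.0


def average_precision(preds, num_gt, thr):
    """AP at one IoU threshold.

    preds: (image_id, score, iou) triples, where iou is the overlap of the
    prediction with that image's single ground-truth target tree.
    num_gt: number of ground-truth target trees (one per image).
    """
    matched = set()
    tp = 0
    recalls, precisions = [], []
    ranked = sorted(preds, key=lambda p: -p[1])  # highest score first
    for k, (img, _score, iou) in enumerate(ranked, start=1):
        # True positive only if the overlap is high enough and this image's
        # ground truth was not already claimed by a higher-scoring prediction.
        if iou >= thr and img not in matched:
            matched.add(img)
            tp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / k)
    # All-point interpolation: at each recall step use the best precision
    # reached at that recall or any higher one.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


def ap_50_95(preds, num_gt):
    """Mean AP over the IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.50 + 0.05 * k, 2) for k in range(10)]
    return sum(average_precision(preds, num_gt, t) for t in thresholds) / len(thresholds)
```

For box AP the same functions apply, with the IoU of bounding boxes in place of `mask_iou`.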

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: La, Y.-J.; Seo, D.; Kang, J.; Kim, M.; Yoo, T.-W.; Oh, I.-S. Deep Learning-Based Segmentation of Intertwined Fruit Trees for Agricultural Tasks. Agriculture 2023, 13, 2097. https://doi.org/10.3390/agriculture13112097

AMA Style: La Y-J, Seo D, Kang J, Kim M, Yoo T-W, Oh I-S. Deep Learning-Based Segmentation of Intertwined Fruit Trees for Agricultural Tasks. Agriculture. 2023; 13(11):2097. https://doi.org/10.3390/agriculture13112097

Chicago/Turabian Style: La, Young-Jae, Dasom Seo, Junhyeok Kang, Minwoo Kim, Tae-Woong Yoo, and Il-Seok Oh. 2023. "Deep Learning-Based Segmentation of Intertwined Fruit Trees for Agricultural Tasks" Agriculture 13, no. 11: 2097. https://doi.org/10.3390/agriculture13112097

APA Style: La, Y.-J., Seo, D., Kang, J., Kim, M., Yoo, T.-W., & Oh, I.-S. (2023). Deep Learning-Based Segmentation of Intertwined Fruit Trees for Agricultural Tasks. Agriculture, 13(11), 2097. https://doi.org/10.3390/agriculture13112097