A High-Precision Crop Classification Method Based on Time-Series UAV Images

Abstract

1. Introduction

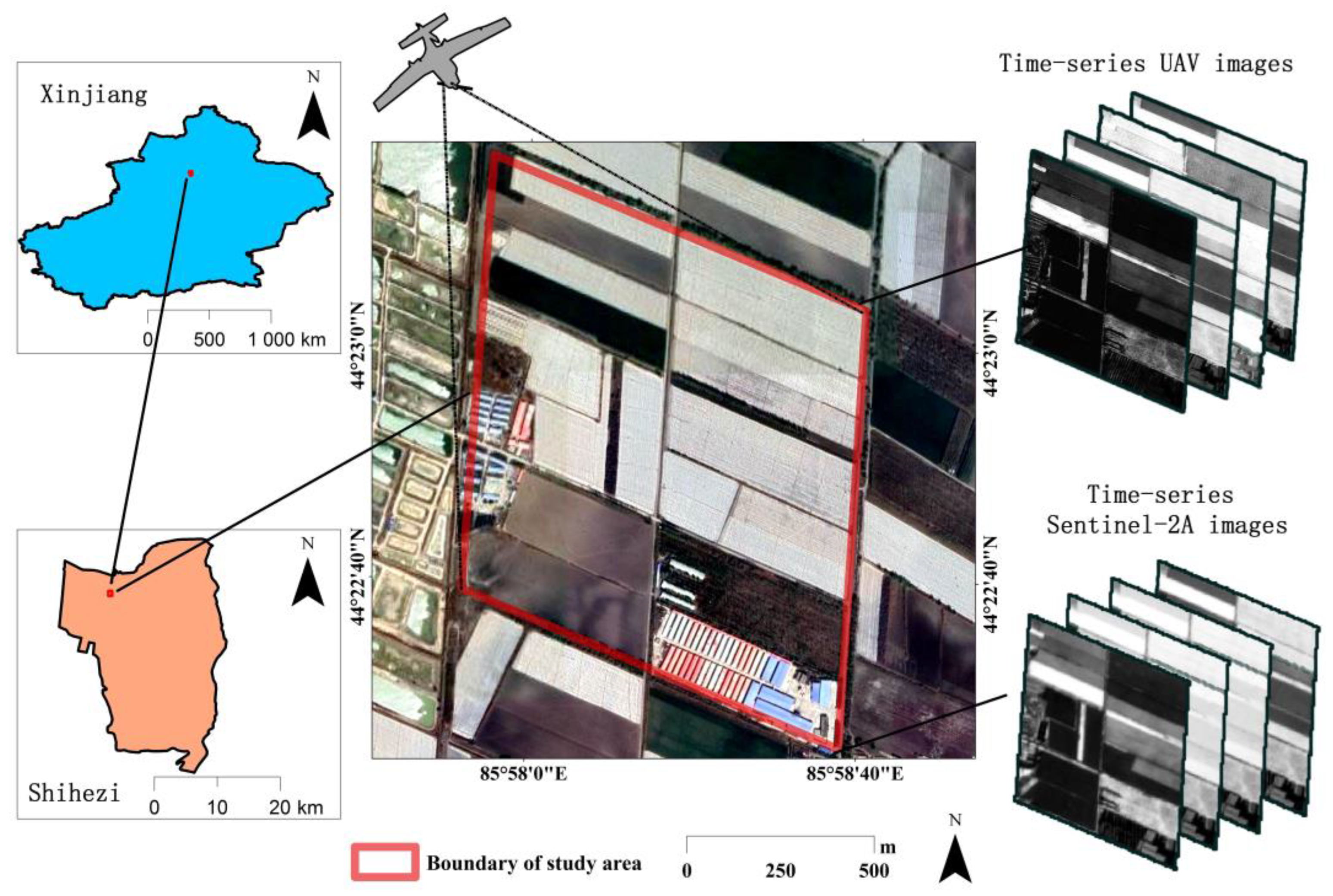

2. Study Area and Data

2.1. Study Area

2.1.1. Location of Study Area

2.1.2. Crop Types in the Study Area

2.2. Data

2.2.1. UAV Data Acquisition and Preprocessing

2.2.2. Satellite Data Acquisition and Preprocessing

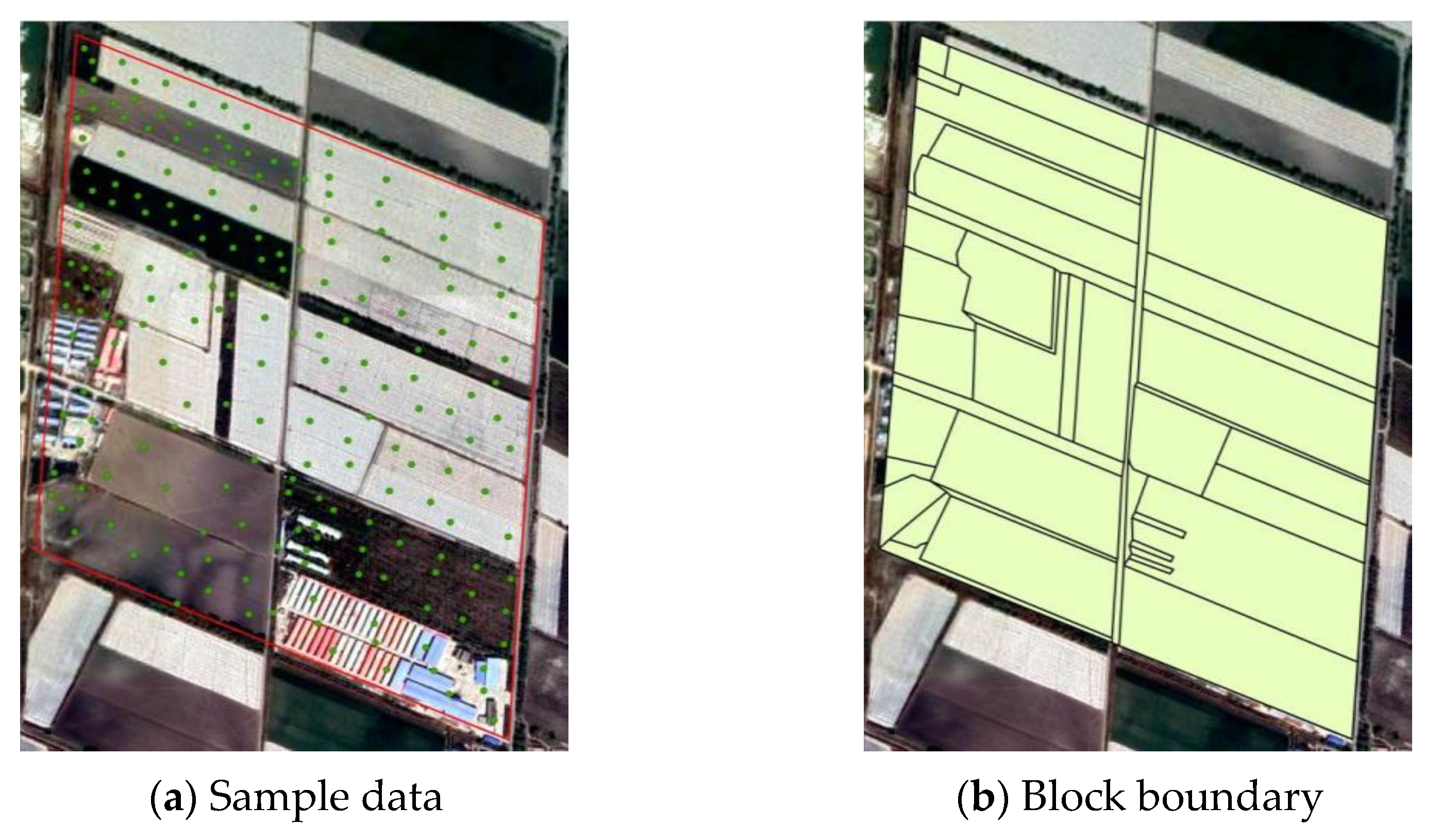

2.2.3. Ground Survey Data

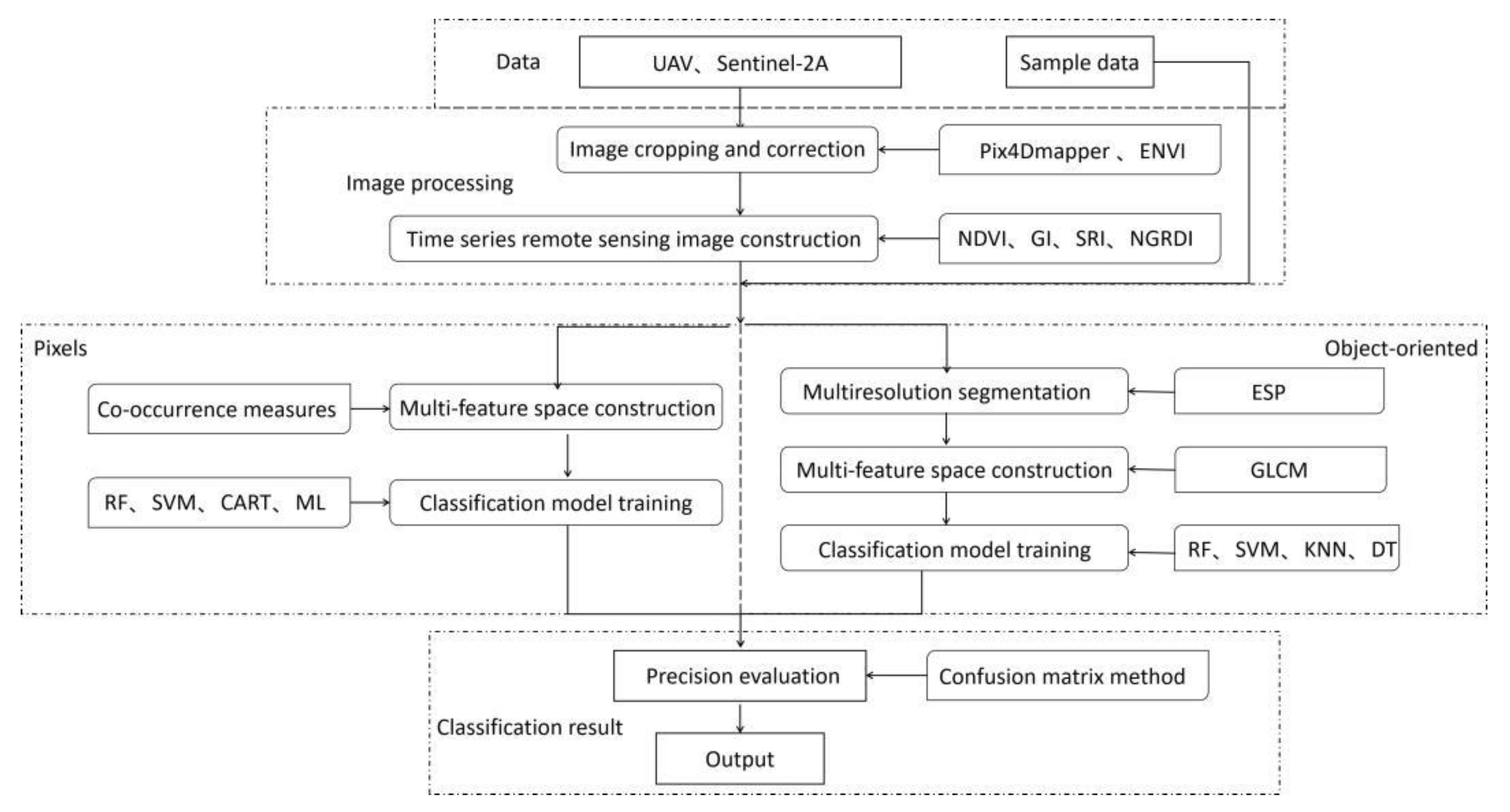

3. Method

3.1. Construction of Time Series UAV Remote Sensing Images

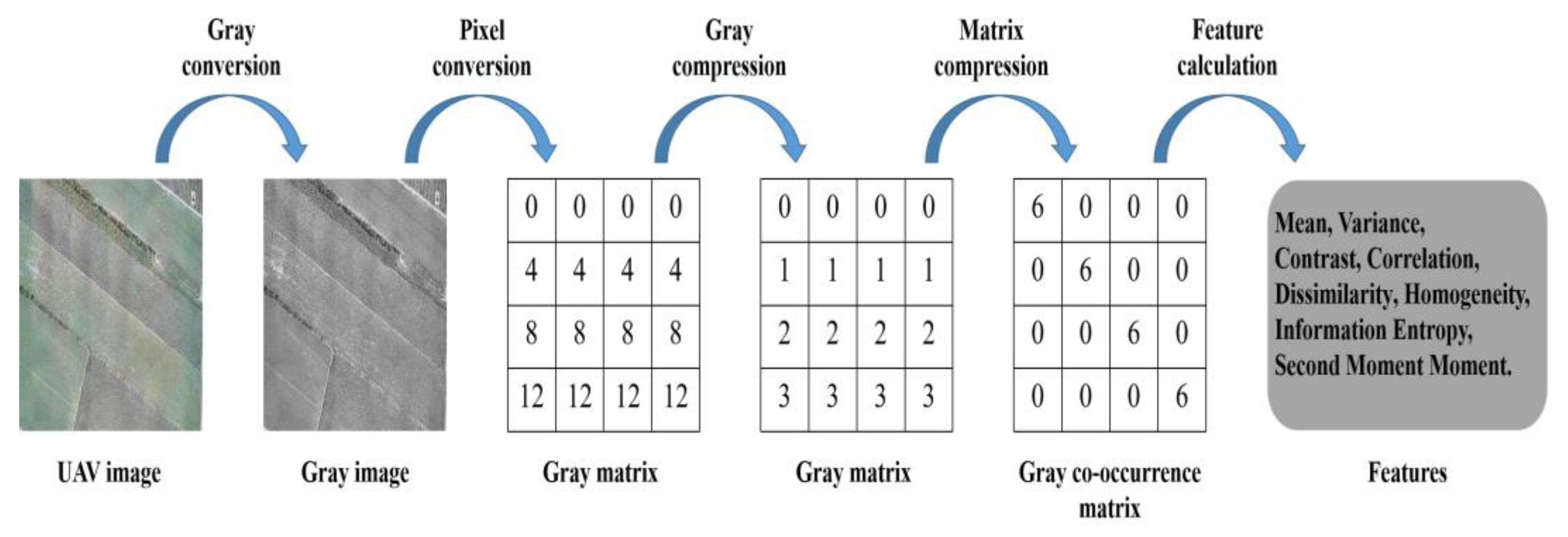

3.2. Multi-Feature Space Fusion

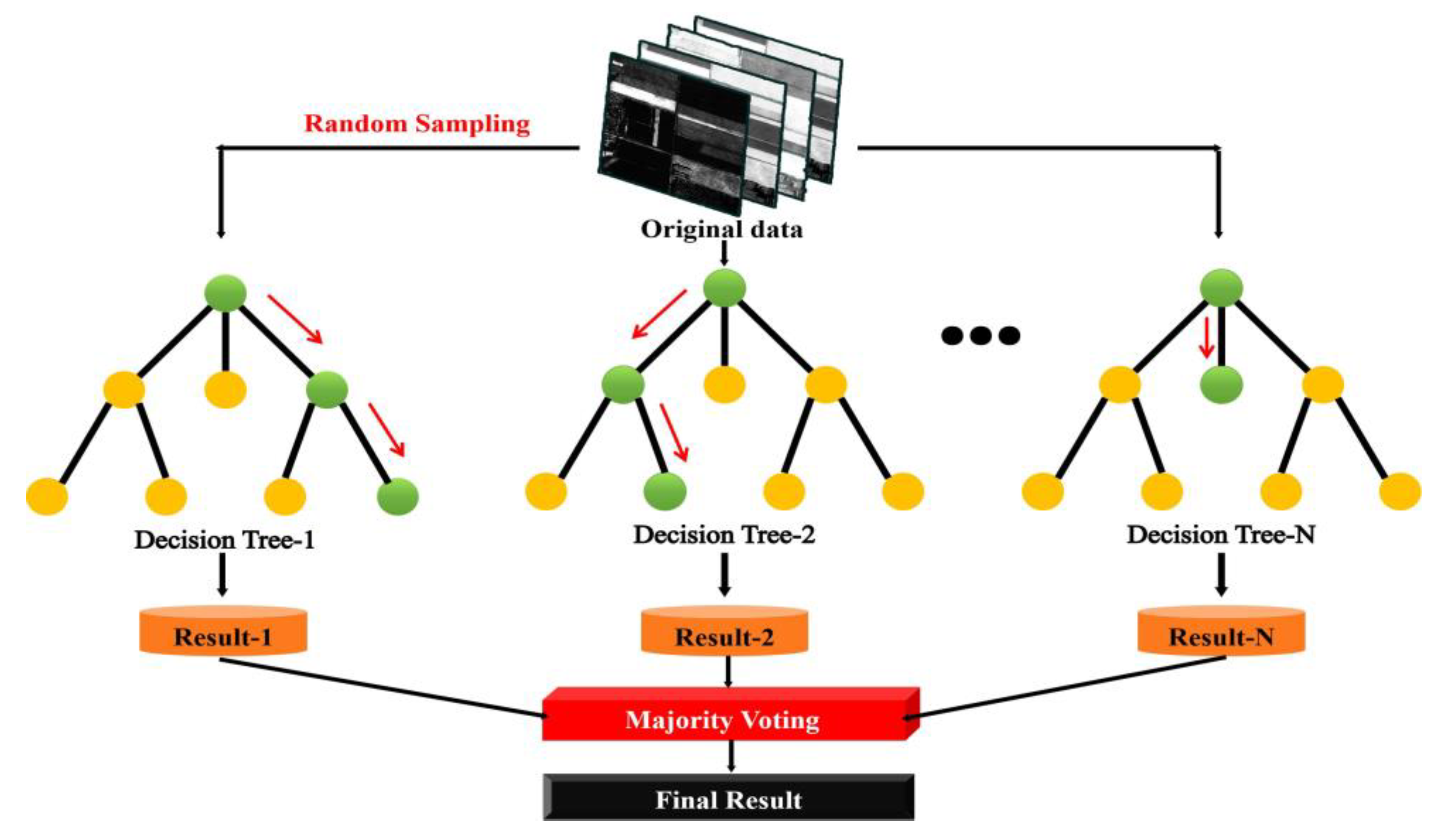

3.3. Remote Sensing Classification Method

3.4. Evaluation Index of Classification Results

4. Results

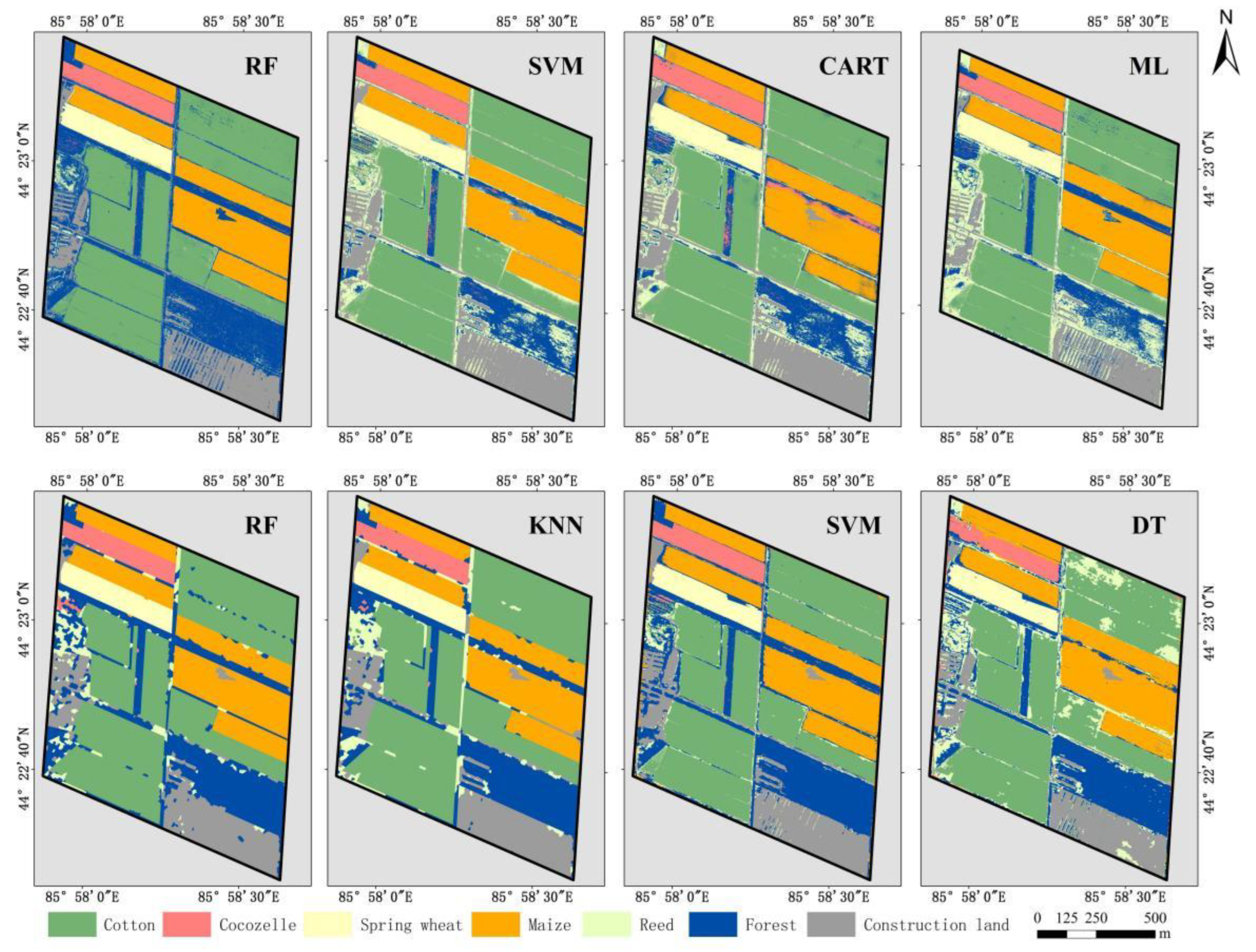

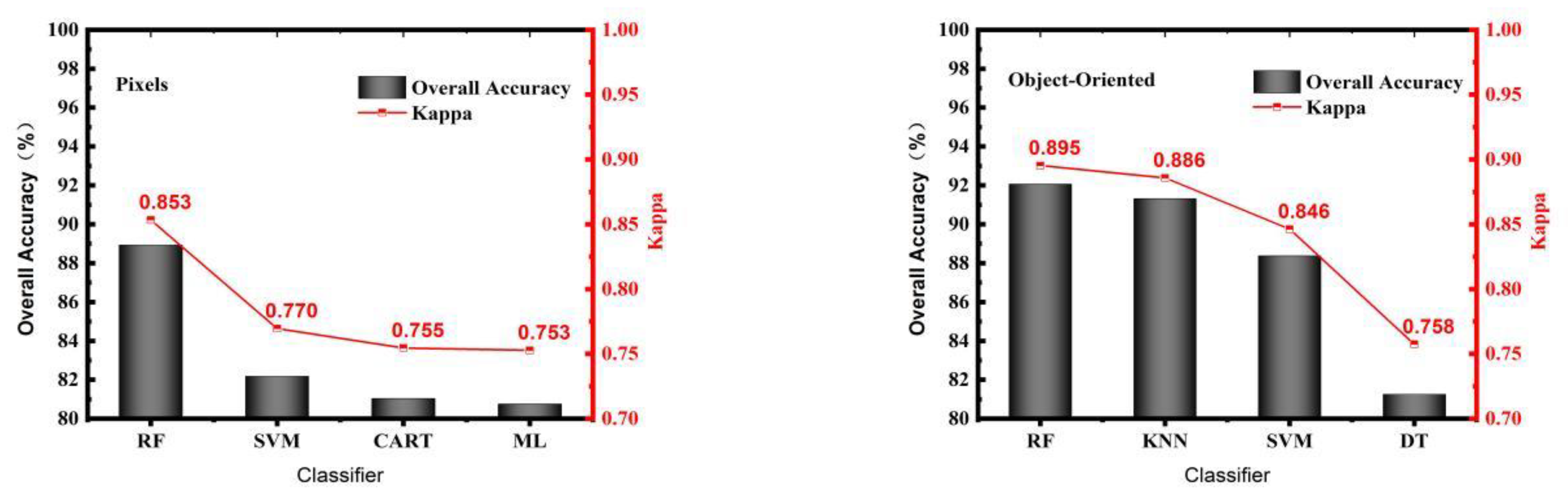

4.1. Comparison and Accuracy Evaluation of Different Classification Methods

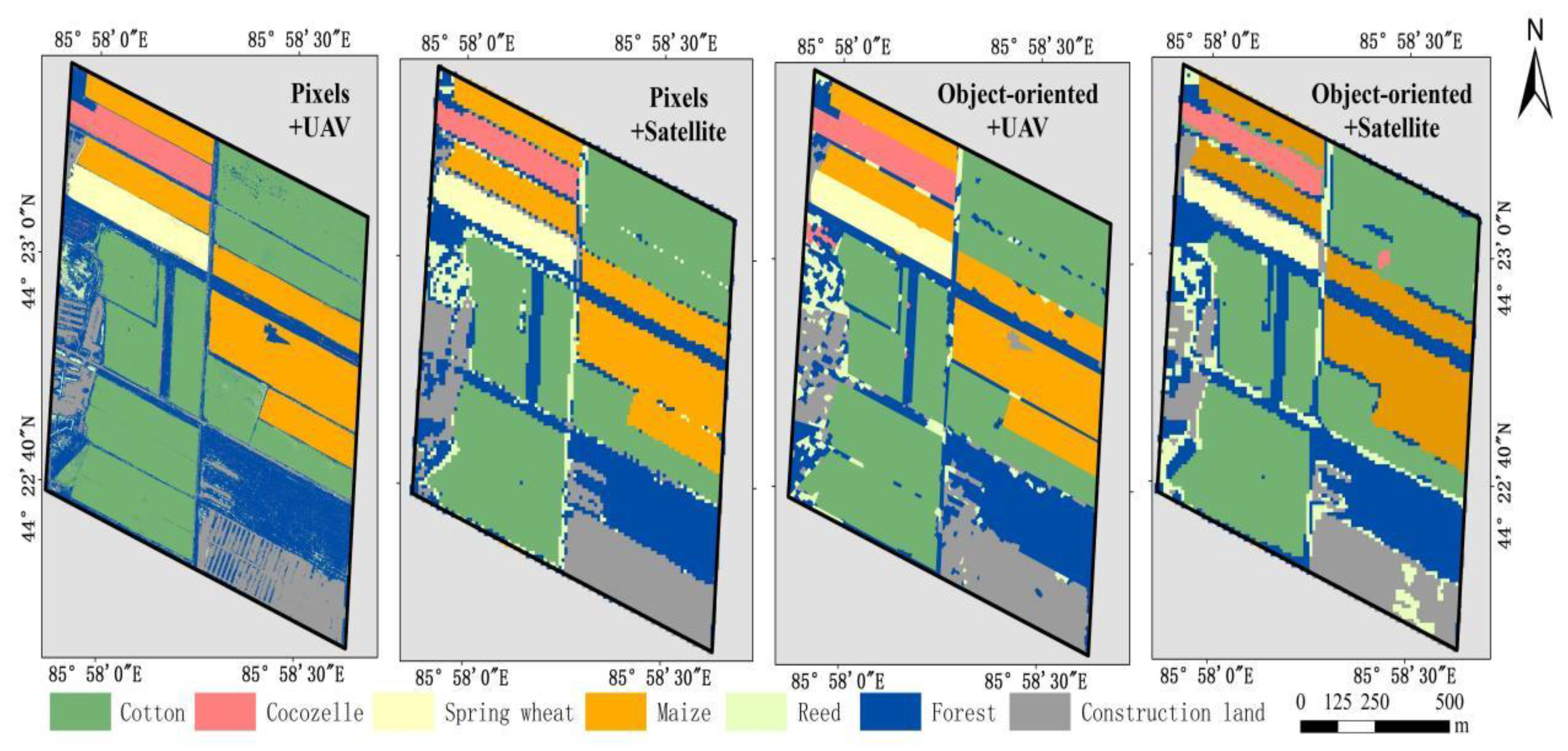

4.2. Comparison and Accuracy Evaluation of Different Image Types

4.3. Comparison and Accuracy Evaluation with Different Time Resolution

5. Discussion

- (1) Based on time-series UAV images, an object-oriented random forest algorithm was used for crop classification, achieving good classification accuracy and stability. With the development of information technology, deep learning methods have also achieved good results in image processing. Liu et al. [39] proposed a deep-learning-based method for segmenting UAV land cover images, with an accuracy of 95.06% and an average intersection rate of 81.22%. Their experiment used the open-source PyTorch framework for model training, which requires repeated debugging and parameter iteration and therefore takes a certain amount of time. In this study, the object-oriented random forest classification took 309 s. It thus improved classification efficiency while maintaining high precision, which is conducive to practical application. Future studies can therefore compare deep learning algorithms with the algorithm used in this study in order to identify more accurate and efficient crop classification methods.

- (2) In this study, cotton, maize, cocozelle and spring wheat were classified using data from a single year. Future studies will cover more crop types, regions and years to verify and improve the model algorithm. To improve the applicability of the model, we will further expand the classification area and explore the spatio-temporal factors that affect classification accuracy.
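The object-oriented random forest classification discussed in item (1) can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes scikit-learn is available, treats each row as one image object described by its mean NDVI on six acquisition dates, and uses synthetic NDVI profiles (the `profiles` values are hypothetical, chosen only to make the classes separable).

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, cohen_kappa_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Hypothetical mean NDVI profiles over six acquisition dates (illustrative only,
# not values from the study).
profiles = {
    "cotton":       [0.20, 0.30, 0.50, 0.75, 0.80, 0.60],
    "maize":        [0.20, 0.45, 0.70, 0.85, 0.70, 0.40],
    "cocozelle":    [0.30, 0.55, 0.70, 0.60, 0.40, 0.25],
    "spring wheat": [0.50, 0.75, 0.80, 0.50, 0.25, 0.20],
}

X_parts, y_parts = [], []
for label, profile in profiles.items():
    # 200 synthetic image objects per class: class profile plus Gaussian noise.
    X_parts.append(np.asarray(profile) + rng.normal(0.0, 0.05, size=(200, 6)))
    y_parts.extend([label] * 200)
X = np.vstack(X_parts)   # shape (800, 6): objects x time-series features
y = np.array(y_parts)

# Hold out 30% of the objects for accuracy assessment.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

clf = RandomForestClassifier(n_estimators=200, random_state=0)
clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)

overall_accuracy = accuracy_score(y_test, y_pred)
kappa = cohen_kappa_score(y_test, y_pred)
print(f"OA = {overall_accuracy:.4f}, kappa = {kappa:.4f}")
```

In a real workflow the feature matrix would come from segmenting the stacked time-series images and summarising each segment, and could include spectral, index, and texture features rather than NDVI alone.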

6. Conclusions

- (1)

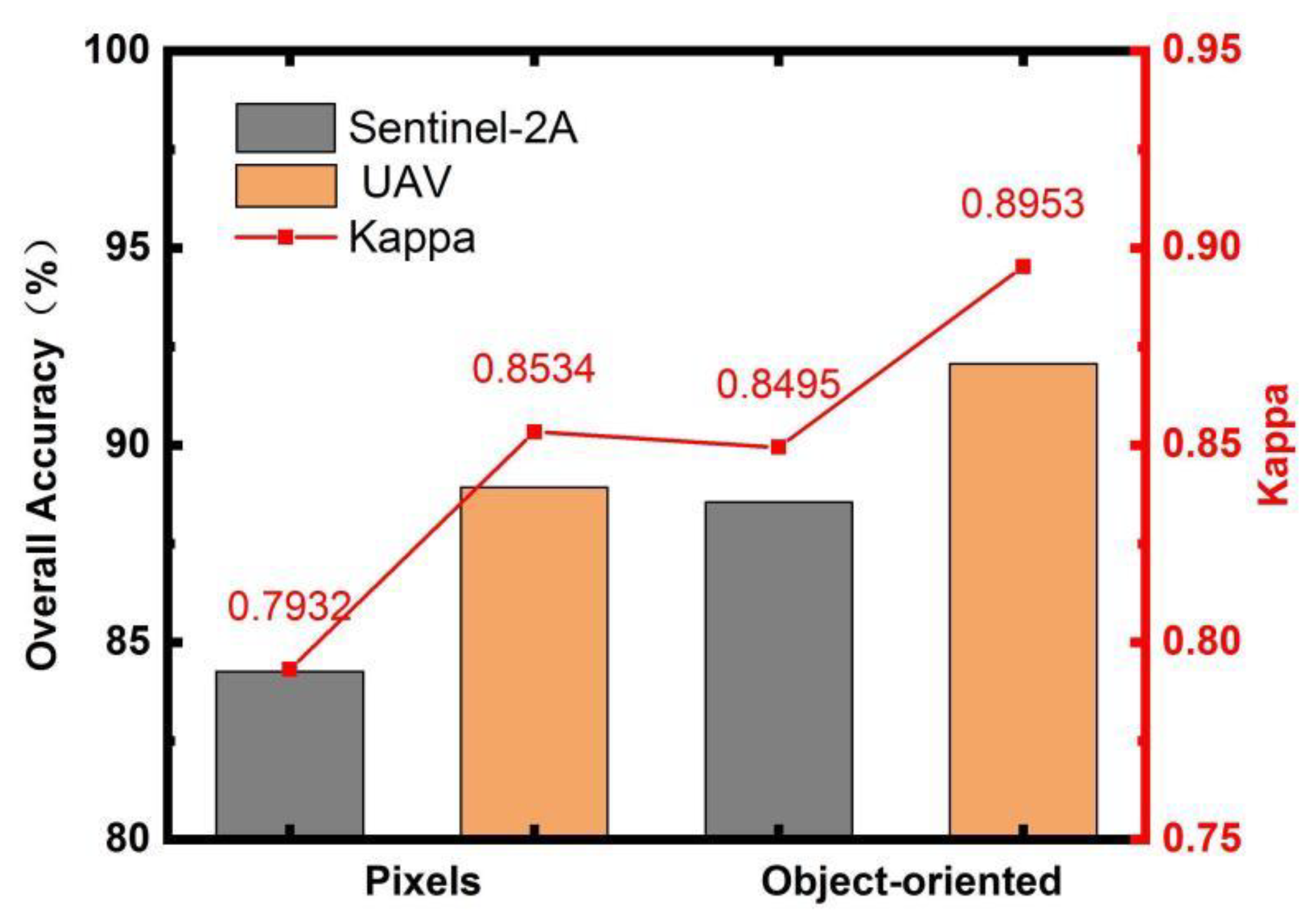

- Time-series UAV images can considerably increase the accuracy of crop classification. The overall accuracy and kappa coefficient of crop classification using time-series UAV images increased by 26.65% and 0.3496, respectively, when compared to a single period. This effectively addressed the drawbacks of single period images and further improved the classification accuracy and efficiency of crops. The result of crop classification using a UAV image was superior to that of a sentinel satellite remote sensing image, and the overall accuracy was improved by 4.67% and 3.51%, respectively, for both pixel-based and object-oriented crop classification. This demonstrates the tremendous benefits of using UAV remote sensing images for crop classification.

- (2)

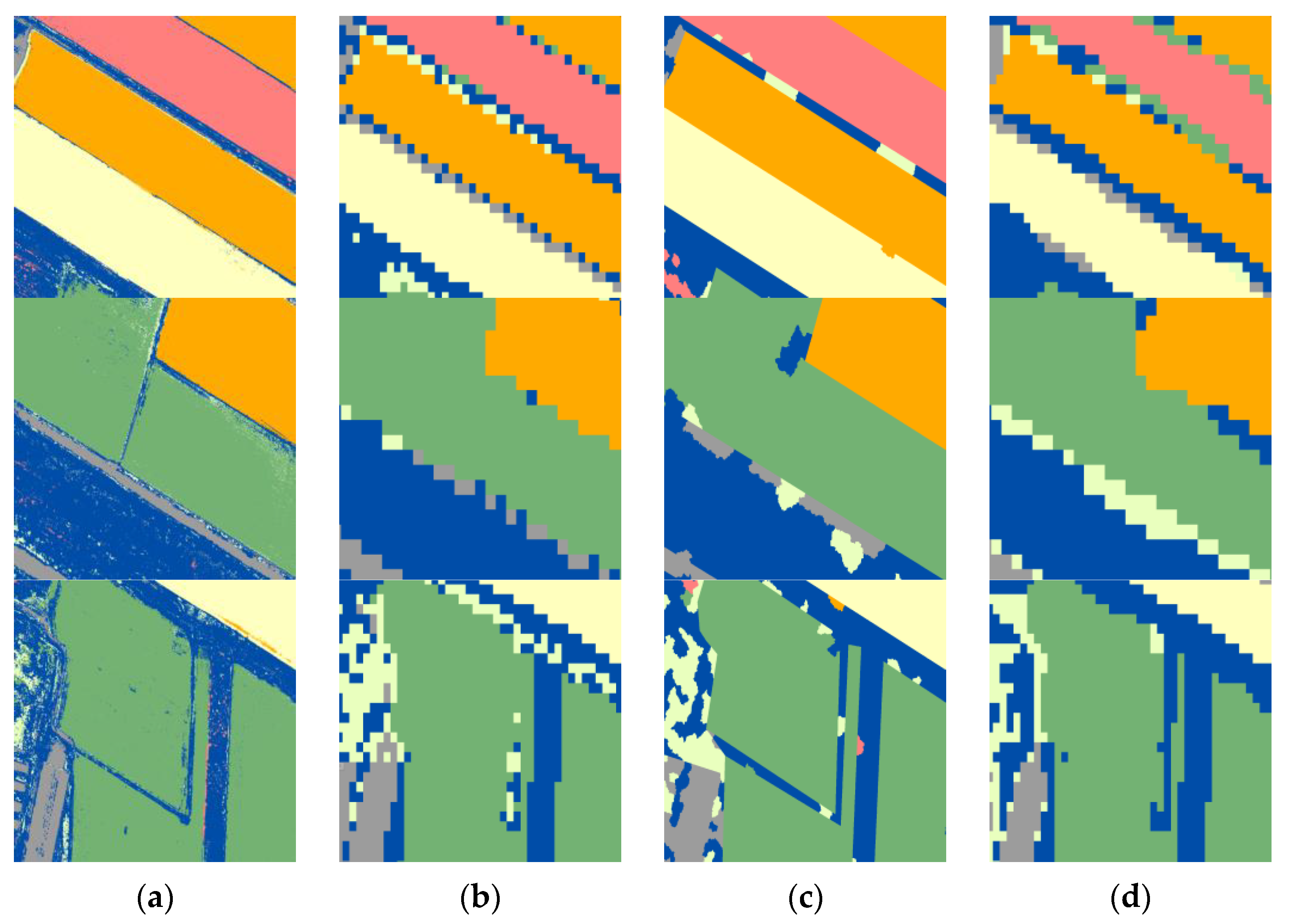

- Crop classification based on object-oriented remote sensing images is a viable and feasible approach to classification. The crop classification results based on pixels frequently exhibited the phenomenon of “mixed pixels”, whereas the plot boundary based on object-oriented classification results was more pronounced, which is more consistent with the actual planting structure space of crops. Compared to pixel-based classification results, the overall accuracy and kappa coefficient of object-oriented classification for time-series UAV remote sensing images were increased by 3.13% and 0.0419, respectively. Consequently, the object-oriented classification method is better suited for the fine classification of crops.

- (3) The random forest algorithm provided the most accurate and stable classifications. RF achieved the highest overall accuracy and kappa coefficient in both pixel-based (88.94% and 0.8534) and object-oriented (92.07% and 0.8953) crop classification. For cotton, spring wheat, cocozelle, and maize, both the producer’s and user’s accuracy of RF exceeded 92%. This demonstrates that RF performs very well in the classification of crop remote sensing images, whether pixel-based or object-oriented, with a high degree of classification precision and consistency across crop types.

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Full Name |
|---|---|
| CART | Classification And Regression Tree |
| CE | Commission Error |
| DT | Decision Tree |
| ED | Euclidian Distance |
| GI | Greenness Index |
| GLCM | Gray-Level Co-occurrence Matrix |
| KNN | K-Nearest Neighbor |
| ML | Maximum Likelihood |
| MSI | Multispectral Imager |
| NDVI | Normalized Difference Vegetation Index |
| NGRDI | Normalized Green-Red Difference Index |
| NIR | Near Infrared |
| OE | Omission Error |
| OA | Overall Accuracy |
| PA | Producer’s Accuracy |
| RF | Random Forest |
| ROC-LV | Rates of Change of LV |
| SRI | Simple Ratio Index |
| SVM | Support Vector Machine |
| UA | User’s Accuracy |
| UAV | Unmanned Aerial Vehicle |

References

- Feng, Q.; Yang, L.; Wang, W.; Chen, T.; Huang, S. CNN remote sensing crop classification based on time series spectral reconstruction. J. Univ. Chin. Acad. Sci. 2020, 37, 619–628.

- Sarı, F.; Koyuncu, F. Multi criteria decision analysis to determine the suitability of agricultural crops for land consolidation areas. Int. J. Eng. Geosci. 2021, 6, 64–73.

- Yi, Z.; Jia, L.; Chen, Q. Crop classification using multi-temporal Sentinel-2 data in the Shiyang River Basin of China. Remote Sens. 2020, 12, 4052.

- Liang, J.; Zheng, Z.; Xia, S.; Zhang, X.; Tang, Y. Crop recognition and evaluation using red edge features of GF-6 satellite. J. Remote Sens. 2020, 24, 1168–1179.

- Zhao, H.; Chen, Z.; Liu, J. Deep learning for crop classification of remote sensing data: Application and challenges. Chin. J. Agric. Resour. Reg. Plan. 2020, 41, 35–49.

- Zheng, J.; Song, X.; Yang, G.; Du, X.; Mei, X.; Yang, X. Remote sensing monitoring of rice and wheat canopy nitrogen: A review. Remote Sens. 2022, 14, 5712.

- Zhou, Z.; Li, S.; Zhang, K.; Shao, Y. Crop mapping using remotely sensed spectral and context features based on CNN. Remote Sens. Technol. Appl. 2019, 34, 694–703.

- Han, B.; Chen, S.; Zeng, Q.; Sun, S. Time-series classification of Sentinel-1 data based on J-M distance. Sci. Technol. Eng. 2020, 20, 6977–6982.

- Jia, B.; Bai, Y.; Wei, Z.; Yan, D.; Zhang, Z. Using MODIS-EVI to identify cropping structure in plains along the Yellow River in Inner Mongolia. J. Irrig. Drain. 2021, 40, 114–120.

- Yi, Y.; Jia, L. Sentinel-2 study on crop mapping of Shandian River Basin based on images. Remote Sens. Technol. Appl. 2021, 36, 400–410.

- Kang, Y.; Meng, Q.; Liu, M.; Zou, Y.; Wang, X. Crop classification based on red edge features analysis of GF-6 WFV data. Sensors 2021, 21, 4328.

- Liu, G.; Jiang, X.; Tang, B. Application of feature optimization and convolutional neural network in crop classification. J. Geo-Inf. Sci. 2021, 23, 1071–1081.

- Xu, J.; Yang, J.; Xiong, X.; Li, H.; Huang, J.; Ting, K.; Ying, Y.; Lin, T. Towards interpreting multi-temporal deep learning models in crop mapping. Remote Sens. Environ. 2021, 264, 112599.

- He, T.; Fu, Y.; Ding, H.; Zheng, W.; Huang, X.; Li, R.; Wu, S. Evaluation of mangrove wetlands protection patterns in the Guangdong–Hong Kong–Macao Greater Bay area using time-series Landsat imageries. Remote Sens. 2022, 14, 6026.

- Li, X.; Wang, H.; Li, X.; Chi, D.; Tang, Z.; Han, C. Study on crops remote sensing classification based on multi-temporal Landsat 8 OLI images. Remote Sens. Technol. Appl. 2019, 34, 389–397.

- Zhang, H.; Cao, X.; Li, Q.; Zhang, M.; Zheng, X. Research on crop identification using multi-temporal NDVI HJ images. Remote Sens. Technol. Appl. 2015, 30, 304–311.

- Gu, X.; Zhang, Y.; Sang, H.; Zhai, L.; Li, S. Research on crop classification method based on Sentinel-2 time series combined vegetation index. Remote Sens. Technol. Appl. 2020, 35, 702–711.

- Xie, Q.; Lai, K.; Wang, J.; Lopez-Sanchez, J.; Shang, J.; Liao, C.; Zhu, J.; Fu, H.; Peng, X. Crop monitoring and classification using polarimetric RADARSAT-2 time-series data across growing season: A case study in southwestern Ontario, Canada. Remote Sens. 2021, 13, 1394.

- Wang, X.; Qiu, P.; Li, Y.; Cha, M.X. Crops identification in Kaikong River Basin of Xinjiang based on time series Landsat remote sensing images. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2019, 35, 180–188.

- Guo, Y.; Liu, Q.; Liu, G.; Huang, C. Extraction of main crops in Yellow River Delta based on MODIS NDVI time series. J. Nat. Resour. 2017, 32, 1808–1818.

- Wang, M.; Wang, J.; Chen, L. Mapping paddy rice using weakly supervised long short-term memory network with time series Sentinel optical and SAR images. Agriculture 2020, 10, 483.

- Lu, M.; Gu, X.; Sun, Q.; Li, X.; Chen, T.; Pan, Y. Production capacity evaluation of farmland using long time series of remote sensing images. Agriculture 2022, 12, 1619.

- Zhang, L.; Wang, S.; Liu, H.; Lin, Y.; Wang, J.; Zhu, M.; Gao, L.; Tong, Q. From spectrum to spectrotemporal: Research on time series change detection of remote sensing. Geomat. Inf. Sci. Wuhan Univ. 2021, 46, 451–468.

- Sefercik, U.G.; Kavzoğlu, T.; Çölkesen, İ.; Nazar, M.; Öztürk, M.Y.; Adali, S.; Dinç, S. 3D positioning accuracy and land cover classification performance of multispectral RTK UAVs. Int. J. Eng. Geosci. 2023, 8, 119–128.

- Van, L.; Straatsma, M.; Addink, E.; Middelkoop, H. Monitoring height and greenness of non-woody floodplain vegetation with UAV time series. ISPRS J. Photogramm. Remote Sens. 2018, 141, 112–123.

- Tian, Z.; Fu, Y.; Liu, S. Rapid crops classification based on UAV low-altitude remote sensing. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2013, 29, 109–116.

- Zheng, X. Fine classification of typical crops based on UAV hyperspectral images. Sci. Technol. Innov. 2021, 23, 1–3.

- Tian, T.; Wang, D.; Zeng, Y.; Zhang, Y.; Huang, Q. Progress on fine classification of crops based on unmanned aerial vehicle remote sensing. China Agric. Inform. 2020, 32, 1–12.

- Xu, Q.; Guo, P.; Qi, J.; Wang, C.; Zhang, G. Construction of SEGT cotton yield estimation model based on UAV image. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2020, 36, 44–51.

- Kaya, Y.; Polat, N. A linear approach for wheat yield prediction by using different spectral vegetation indices. Int. J. Eng. Geosci. 2023, 8, 52–62.

- Ma, L.; Hu, N.; Li, W.; Qin, W.; Huang, S.; Wang, Z.; Li, W.; Yu, K. Using multispectral drone data to monitor maize’s response to various irrigation modes. J. Plant Nutr. Fertil. 2022, 28, 743–753.

- Wang, S.; Zhang, L.; Lin, W.; Huang, Q.; Song, Y.; Ye, M. Study on vegetation coverage and land-use change of Guangdong Province based on MODIS-NDVI. Acta Ecol. Sin. 2022, 42, 2149–2163.

- Liang, C.; Huang, Q.; Wang, S.; Wang, C.; Yu, Q.; Wu, W. Identification of citrus orchard under vegetation indexes using multi-temporal remote sensing. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2021, 37, 168–176.

- Bo, S.; Han, X.; Ding, L. Automatic selection of segmentation parameters for object oriented image classification. Geomat. Inf. Sci. Wuhan Univ. 2009, 34, 514–517.

- Liu, S.; Zhu, H. Object-oriented land use classification based on ultra-high resolution images taken by unmanned aerial vehicle. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2020, 36, 87–94.

- Avcı, C.; Budak, M.; Yağmur, N.; Balçik, F. Comparison between random forest and support vector machine algorithms for LULC classification. Int. J. Eng. Geosci. 2023, 8, 1–10.

- Ofrizal, A.Y.; Sonobe, R.; Hıroto, Y.; Morita, A.; Ikka, T. Estimating chlorophyll content of Zizania latifolia with hyperspectral data and random forest. Int. J. Eng. Geosci. 2022, 7, 221–228.

- Ma, Y.; Ming, D.; Yang, H. Scale estimation of object-oriented image analysis based on spectral-spatial statistics. J. Remote Sens. 2017, 21, 566–578.

- Liu, W.; Zhao, L.; Zhou, Y.; Zong, S.; Luo, Y. Deep learning based unmanned aerial vehicle landcover image segmentation method. Trans. Chin. Soc. Agric. Mach. 2020, 51, 221–229.

| Vegetation Indices | Equations |
|---|---|
| Greenness Index (GI) | G/R |
| Simple Ratio Index (SRI) | NIR/R |
| Normalized Difference Vegetation Index (NDVI) | (NIR − R)/(NIR + R) |
| Normalized Green-Red Difference Index (NGRDI) | (G − R)/(G + R) |
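The four index equations in the table can be evaluated band by band. The following is a minimal sketch assuming NumPy and reflectance arrays for the green (G), red (R), and near-infrared (NIR) bands; the function name `vegetation_indices`, the small `eps` guard against division by zero, and the sample reflectance values are assumptions for illustration, not part of the original method.

```python
import numpy as np


def vegetation_indices(green, red, nir, eps=1e-10):
    """Compute GI, SRI, NDVI and NGRDI from green, red and NIR reflectance.

    `eps` is an assumed safeguard that avoids division by zero on dark pixels.
    """
    g = np.asarray(green, dtype=float)
    r = np.asarray(red, dtype=float)
    n = np.asarray(nir, dtype=float)
    return {
        "GI": g / (r + eps),                 # G / R
        "SRI": n / (r + eps),                # NIR / R
        "NDVI": (n - r) / (n + r + eps),     # (NIR - R) / (NIR + R)
        "NGRDI": (g - r) / (g + r + eps),    # (G - R) / (G + R)
    }


# Hypothetical reflectance values for a single vegetated pixel.
indices = vegetation_indices(green=[0.10], red=[0.05], nir=[0.45])
for name, value in indices.items():
    print(f"{name}: {value[0]:.3f}")
```

Because the function works element-wise, the same call applies unchanged to full two-dimensional band rasters of equal shape.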

| Vegetation indices (cotton vs.) | cocozelle | spring wheat | maize | reed | forest | construction land |
|---|---|---|---|---|---|---|
| NDVI | 0.581 | 2.111 | 1.517 | 0.738 | 0.713 | 1.682 |
| GI | 2.204 | 2.307 | 2.744 | 0.939 | 1.334 | 4.993 |
| SRI | 36.275 | 34.428 | 38.891 | 9.661 | 24.512 | 19.695 |
| NGRDI | 0.684 | 0.758 | 0.923 | 0.277 | 0.501 | 0.411 |

| Vegetation indices (cocozelle vs.) | cotton | spring wheat | maize | reed | forest | construction land |
|---|---|---|---|---|---|---|
| NDVI | 0.581 | 1.856 | 1.062 | 0.811 | 0.466 | 2.263 |
| GI | 2.204 | 3.761 | 1.752 | 2.599 | 1.764 | 7.197 |
| SRI | 36.275 | 58.453 | 32.778 | 42.366 | 25.331 | 55.968 |
| NGRDI | 0.684 | 1.102 | 0.623 | 0.745 | 0.499 | 1.091 |

| Vegetation indices (spring wheat vs.) | cotton | cocozelle | maize | reed | forest | construction land |
|---|---|---|---|---|---|---|
| NDVI | 2.111 | 1.856 | 0.826 | 1.575 | 1.398 | 1.303 |
| GI | 2.307 | 3.761 | 2.815 | 1.658 | 1.997 | 5.434 |
| SRI | 34.428 | 58.453 | 42.471 | 26.065 | 33.122 | 27.635 |
| NGRDI | 0.758 | 1.102 | 0.743 | 0.637 | 0.603 | 0.681 |

| Vegetation indices (maize vs.) | cotton | cocozelle | spring wheat | reed | forest | construction land |
|---|---|---|---|---|---|---|
| NDVI | 1.517 | 1.062 | 0.826 | 0.981 | 0.932 | 1.465 |
| GI | 2.744 | 1.752 | 2.815 | 2.443 | 1.867 | 6.241 |
| SRI | 38.891 | 32.778 | 42.471 | 32.63 | 36.753 | 42.644 |
| NGRDI | 0.923 | 0.623 | 0.743 | 0.804 | 0.528 | 0.944 |

| Methods | Name | Producer’s Accuracy (%) | User’s Accuracy (%) | Commission Error (%) | Omission Error (%) |
|---|---|---|---|---|---|
| RF | maize | 97.89 | 99.66 | 0.34 | 2.11 |
| RF | spring wheat | 98.64 | 99.96 | 0.04 | 1.36 |
| RF | cotton | 97.99 | 99.32 | 0.68 | 2.01 |
| RF | cocozelle | 99.58 | 92.53 | 7.47 | 0.42 |
| RF | OA | 92.07% | | | |
| RF | kappa | 0.8953 | | | |
| KNN | maize | 96.56 | 99.59 | 0.41 | 3.44 |
| KNN | spring wheat | 98.89 | 97.14 | 2.86 | 1.11 |
| KNN | cotton | 99.42 | 98.34 | 1.66 | 0.58 |
| KNN | cocozelle | 97.47 | 97.37 | 2.63 | 2.53 |
| KNN | OA | 91.32% | | | |
| KNN | kappa | 0.8858 | | | |
| SVM | maize | 94.21 | 91.27 | 8.73 | 5.79 |
| SVM | spring wheat | 91.56 | 98.69 | 1.31 | 8.44 |
| SVM | cotton | 95.13 | 96.96 | 3.04 | 4.87 |
| SVM | cocozelle | 94.98 | 93.11 | 6.89 | 5.02 |
| SVM | OA | 88.38% | | | |
| SVM | kappa | 0.8463 | | | |
| DT | maize | 91.47 | 90.15 | 9.85 | 8.53 |
| DT | spring wheat | 90.10 | 93.69 | 6.31 | 9.90 |
| DT | cotton | 90.28 | 95.87 | 4.13 | 9.72 |
| DT | cocozelle | 73.00 | 62.60 | 37.40 | 27.00 |
| DT | OA | 81.26% | | | |
| DT | kappa | 0.7575 | | | |
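The indices reported in this table follow the standard confusion-matrix definitions, in which commission error is the complement of user's accuracy and omission error is the complement of producer's accuracy (for example, 100 − 99.66 = 0.34 and 100 − 97.89 = 2.11 for maize under RF). The sketch below shows these calculations in plain Python; the function name `accuracy_report` and the two-class confusion matrix are hypothetical and are not data from the study.

```python
def accuracy_report(cm, labels):
    """Derive PA, UA, CE, OE (in %), OA (in %) and kappa from a confusion matrix.

    cm[i][j] is the number of samples whose reference class is labels[i]
    and whose predicted class is labels[j]. Every class is assumed to occur
    at least once in both the reference and the predicted data.
    """
    n = len(labels)
    total = sum(sum(row) for row in cm)
    row_tot = [sum(cm[i]) for i in range(n)]                       # reference totals
    col_tot = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted totals
    correct = sum(cm[i][i] for i in range(n))

    oa = correct / total
    # Expected chance agreement from the marginal totals.
    pe = sum(row_tot[i] * col_tot[i] for i in range(n)) / total ** 2
    kappa = (oa - pe) / (1 - pe)

    per_class = {}
    for i, name in enumerate(labels):
        pa = 100 * cm[i][i] / row_tot[i]   # producer's accuracy
        ua = 100 * cm[i][i] / col_tot[i]   # user's accuracy
        per_class[name] = {"PA": pa, "UA": ua, "OE": 100 - pa, "CE": 100 - ua}
    return per_class, 100 * oa, kappa


# Hypothetical two-class example (rows: reference, columns: predicted).
per_class, oa, kappa = accuracy_report([[45, 5], [10, 40]], ["maize", "cotton"])
print(f"OA = {oa:.2f}%, kappa = {kappa:.4f}")
for name, m in per_class.items():
    print(name, {k: round(v, 2) for k, v in m.items()})
```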

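| Schemes Name | Classification Algorithms | Way of Classification | Overall Accuracy (%) | Kappa Coefficient |
|---|---|---|---|---|
| Crop classification based on single-period UAV remote sensing images | Random Forest | Pixels | 56.73 | 0.4436 |
| Crop classification based on single-period UAV remote sensing images | Random Forest | Object-oriented | 65.42 | 0.5457 |
| Crop classification based on time-series UAV remote sensing images | Random Forest | Pixels | 88.94 | 0.8534 |
| Crop classification based on time-series UAV remote sensing images | Random Forest | Object-oriented | 92.07 | 0.8953 |
| Crop classification based on single-period satellite remote sensing images | Random Forest | Pixels | 54.70 | 0.4339 |
| Crop classification based on single-period satellite remote sensing images | Random Forest | Object-oriented | 62.81 | 0.5196 |
| Crop classification based on time-series satellite remote sensing images | Random Forest | Pixels | 84.27 | 0.7932 |
| Crop classification based on time-series satellite remote sensing images | Random Forest | Object-oriented | 88.56 | 0.8495 |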
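The accuracy gains quoted in the Conclusions can be reproduced directly from this table. The short sketch below copies the table values into a dictionary and computes differences between schemes; the key layout and the helper name `gain` are illustrative assumptions.

```python
# (overall accuracy in %, kappa) for each scheme, copied from the table above.
# Keys are (platform, temporal type, classification unit).
results = {
    ("UAV", "single", "pixel"): (56.73, 0.4436),
    ("UAV", "single", "object"): (65.42, 0.5457),
    ("UAV", "series", "pixel"): (88.94, 0.8534),
    ("UAV", "series", "object"): (92.07, 0.8953),
    ("satellite", "single", "pixel"): (54.70, 0.4339),
    ("satellite", "single", "object"): (62.81, 0.5196),
    ("satellite", "series", "pixel"): (84.27, 0.7932),
    ("satellite", "series", "object"): (88.56, 0.8495),
}


def gain(a, b):
    """Return (OA gain in percentage points, kappa gain) of scheme a over scheme b."""
    oa_a, kappa_a = results[a]
    oa_b, kappa_b = results[b]
    return round(oa_a - oa_b, 2), round(kappa_a - kappa_b, 4)


# Time-series versus single-period UAV images (object-oriented).
print(gain(("UAV", "series", "object"), ("UAV", "single", "object")))
# UAV versus satellite time series, pixel-based and object-oriented.
print(gain(("UAV", "series", "pixel"), ("satellite", "series", "pixel")))
print(gain(("UAV", "series", "object"), ("satellite", "series", "object")))
# Object-oriented versus pixel-based classification of time-series UAV images.
print(gain(("UAV", "series", "object"), ("UAV", "series", "pixel")))
```

These differences are absolute changes in percentage points of overall accuracy, not relative percentage increases.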

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, Q.; Jin, M.; Guo, P. A High-Precision Crop Classification Method Based on Time-Series UAV Images. Agriculture 2023, 13, 97. https://doi.org/10.3390/agriculture13010097
