Extracting Tea Plantations from Multitemporal Sentinel-2 Images Based on Deep Learning Networks

Abstract

1. Introduction

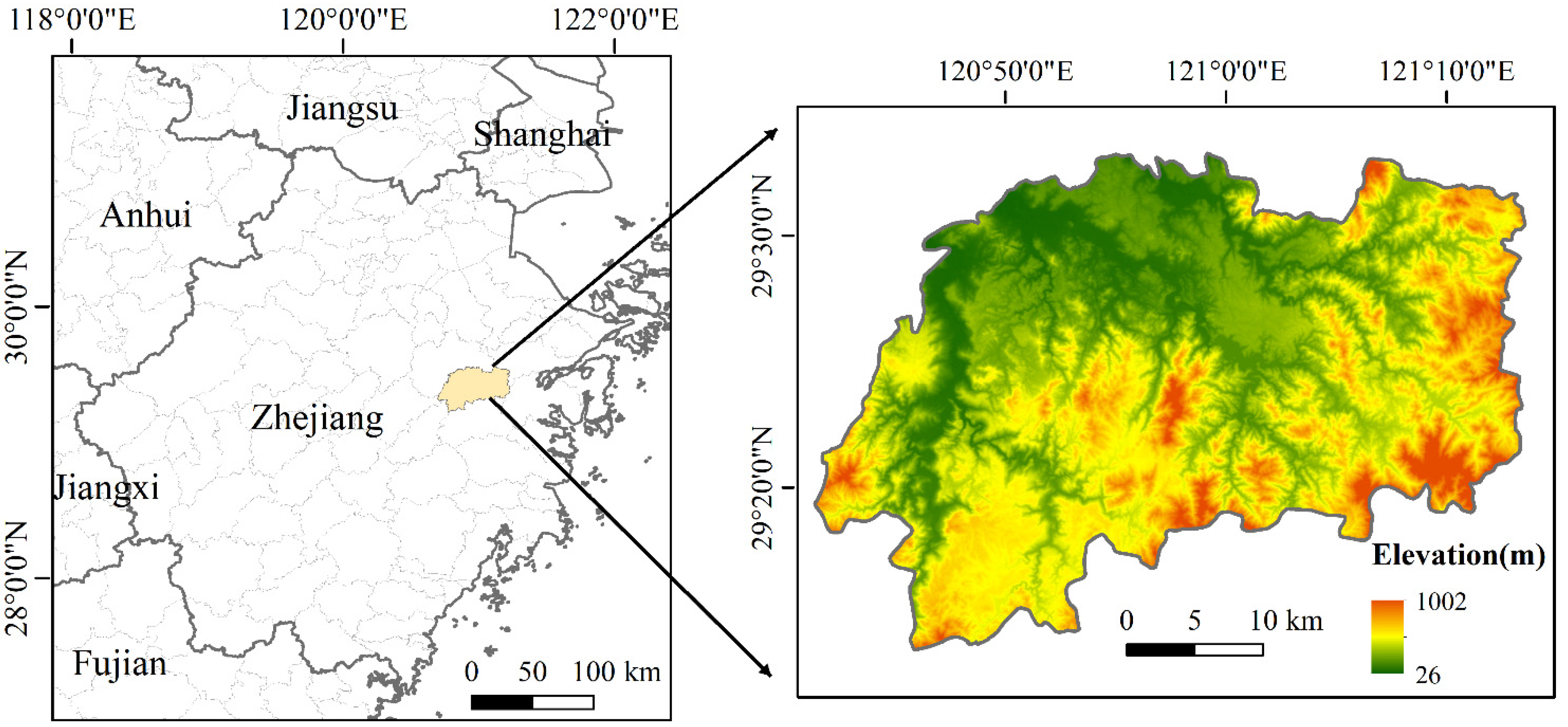

2. Study Area and Data Acquisitions

2.1. Study Area

2.2. Research Data

3. Materials and Methods

3.1. Data Preprocessing

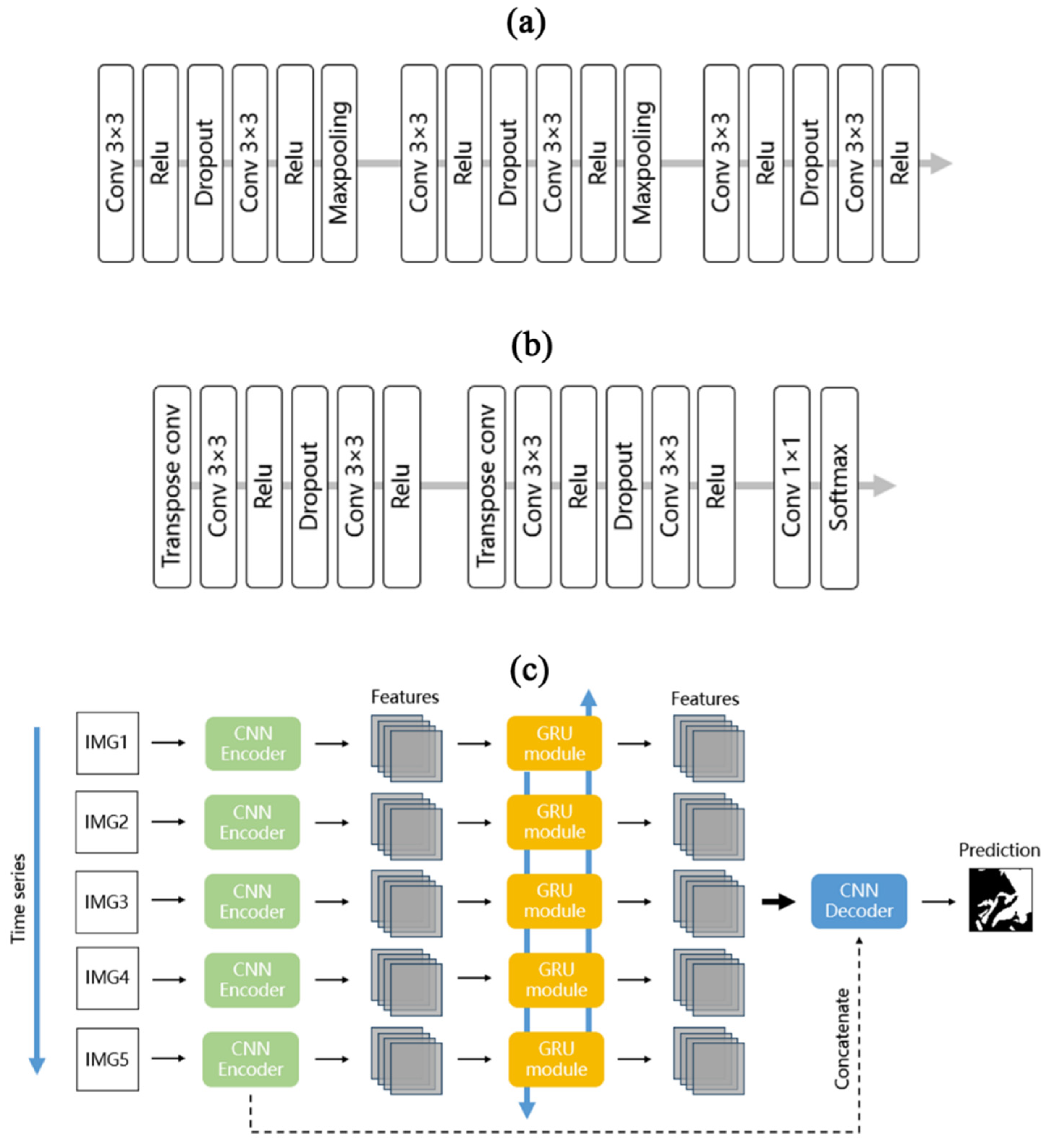

3.2. R-CNN Method for Tea Plantation Extraction

3.3. Other Methods for Comparison

- (1) RF [56] classification method: The RF classification algorithm is a traditional machine learning method built on the decision tree (DT) algorithm. It offers fast training and a low risk of overfitting. It draws random subsets of the initial samples (bootstrap sampling), trains a separate decision tree on each subset, and determines the final prediction of the classification model by combining the votes of all the trees.

- (2) SVM [57] classification method: The SVM classification algorithm is a traditional machine learning method with the advantages of a simple structure and relative insensitivity to outliers. It maps the sample data into a high-dimensional feature space and solves for the optimal separating hyperplane, i.e., the hyperplane that maximizes the margin, which is the distance between the hyperplane and the nearest samples on each side. New samples are then classified according to the side of the hyperplane on which they fall.

- (3) CNN classification method: The CNN classification model in the comparison experiments is built on the original UNet structure; that is, the GRU module is removed from the R-CNN model, leaving only the CNN encoder and CNN decoder.

- (4) RNN classification method: The bidirectional GRU module is used to construct the RNN classification model in the comparison experiments.
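As a rough illustration of the bagging-and-voting procedure described for RF in item (1), the sketch below trains each "tree" on a bootstrap sample and combines the trees by majority vote. It is a plain-Python toy under stated assumptions, not the RF implementation used in the experiments: each tree is reduced to a one-split decision stump, and the function names `fit_stump`, `fit_forest` and `predict`, as well as the two-feature toy pixels, are hypothetical.

```python
import random
from collections import Counter

# Simplification (assumption): each "tree" is a one-split decision stump,
# so RF's per-split random feature subset reduces to one draw per tree.


def fit_stump(samples, feature_indices):
    """Return the single-threshold classifier with the fewest training errors."""
    best = None
    for f in feature_indices:
        for x, _ in samples:
            thr = x[f]
            # pol=1: predict 1 if x[f] >= thr; pol=-1: predict 1 if x[f] <= thr
            for pol in (1, -1):
                errors = sum(
                    (1 if pol * (xi[f] - thr) >= 0 else 0) != yi
                    for xi, yi in samples
                )
                if best is None or errors < best[0]:
                    best = (errors, f, thr, pol)
    _, f, thr, pol = best
    return lambda x: 1 if pol * (x[f] - thr) >= 0 else 0


def fit_forest(samples, n_trees=25, max_features=1, seed=0):
    """Bagging: train each tree on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n_features = len(samples[0][0])
    forest = []
    for _ in range(n_trees):
        boot = [rng.choice(samples) for _ in samples]
        feats = rng.sample(range(n_features), max_features)
        forest.append(fit_stump(boot, feats))
    return forest


def predict(forest, x):
    """Majority vote over the individual tree predictions."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]


# Hypothetical toy pixels: (feature_1, feature_2) -> 1 = tea, 0 = other.
samples = [((0.1, 0.5), 0), ((0.2, 0.4), 0), ((0.3, 0.6), 0),
           ((0.7, 0.2), 1), ((0.8, 0.3), 1), ((0.9, 0.1), 1)]
forest = fit_forest(samples)
print(predict(forest, (0.95, 0.05)), predict(forest, (0.05, 0.7)))
```

A full RF grows deep trees and redraws the feature subset at every split; with a single split per tree, one draw per tree is equivalent. An odd number of trees avoids ties in the binary vote.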

3.4. Experimental Settings

3.5. Evaluation Indicators

4. Results

4.1. Evaluation of Different Input Features

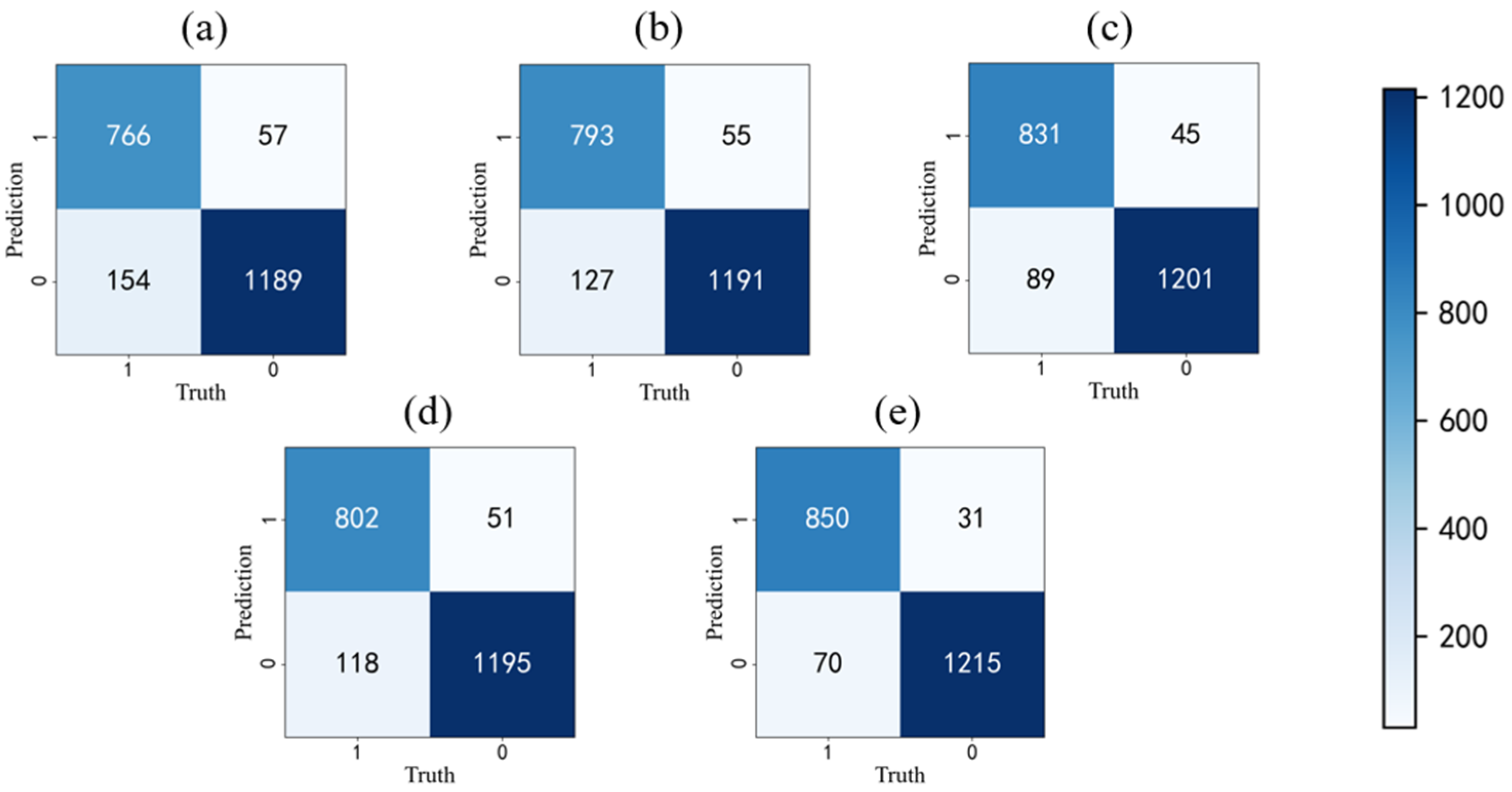

4.2. Evaluation of Different Models

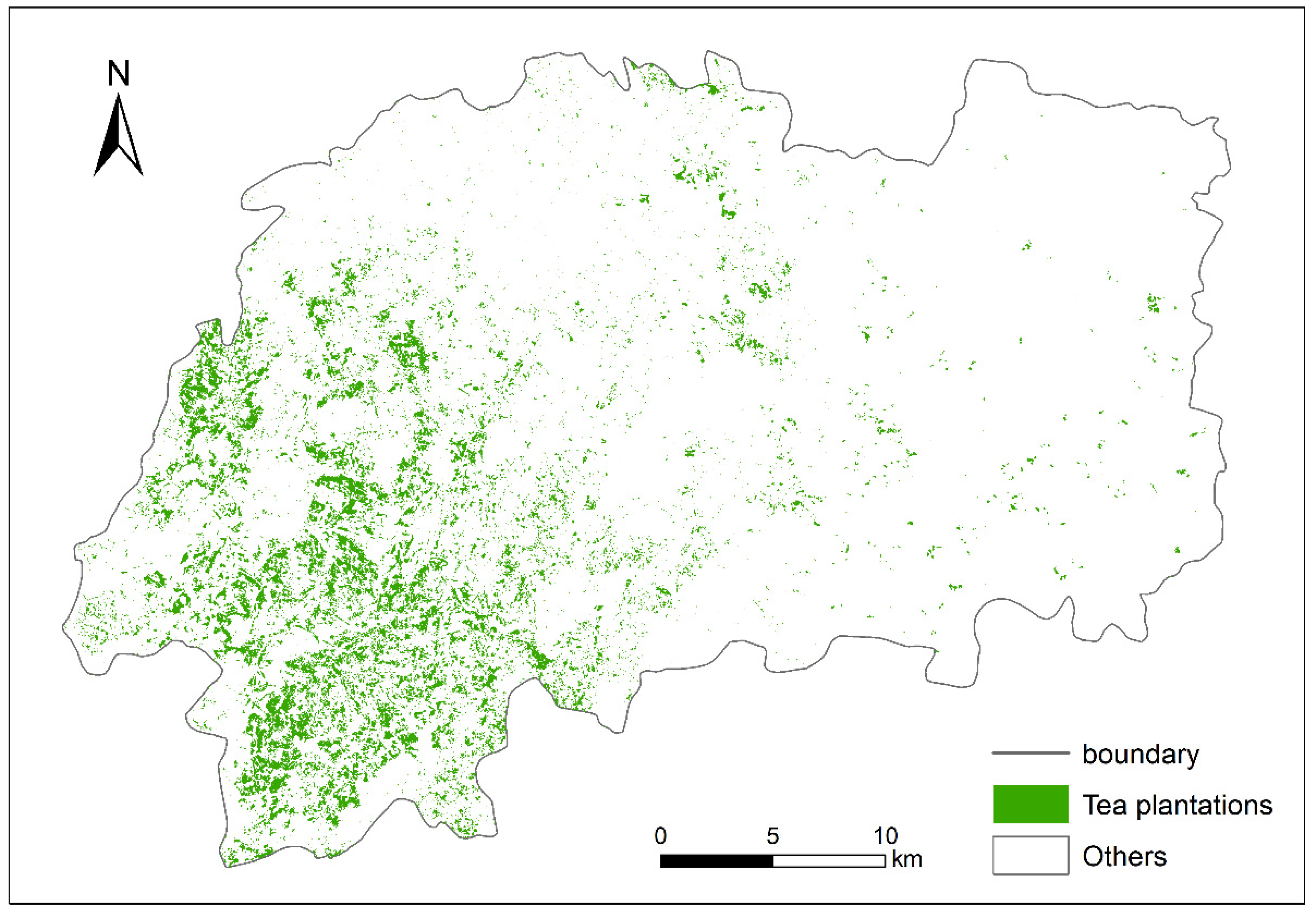

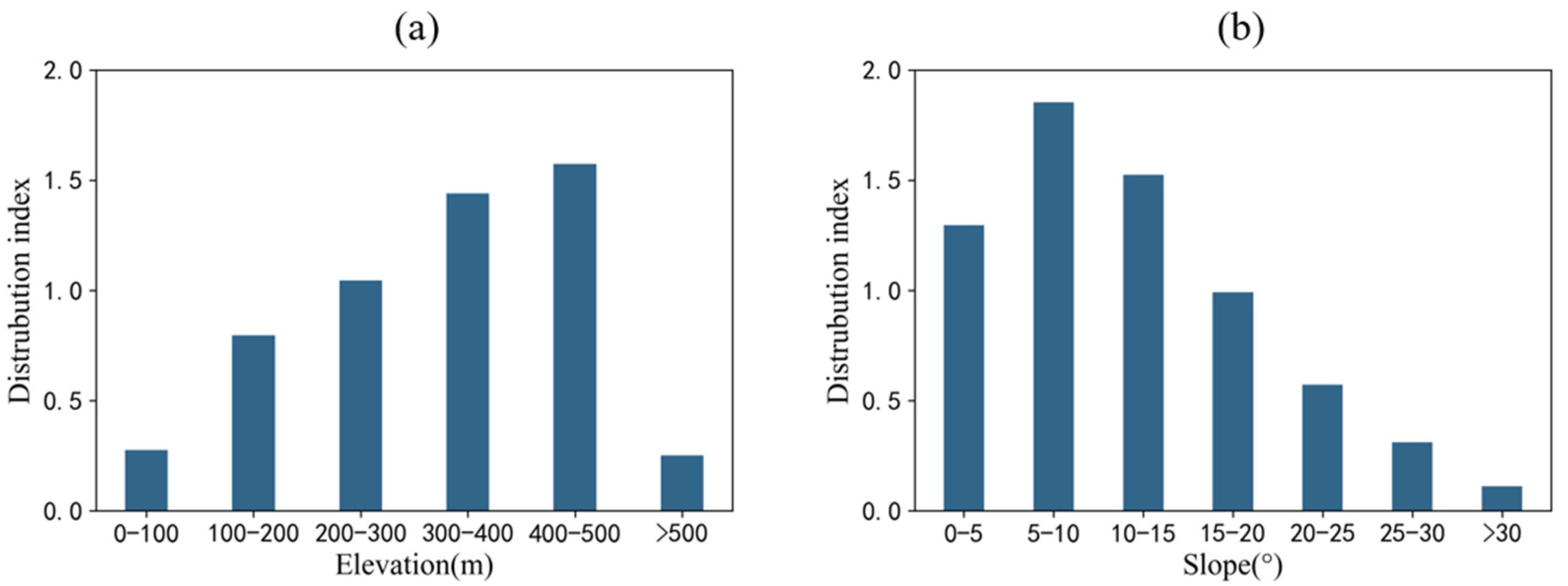

4.3. Spatial Distribution of Tea Plantations in Xinchang County

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Xiao, Z.; Huang, X.; Meng, H.; Zhao, Y. Spatial structure and evolution of tea production in China from 2009 to 2014. Geogr. Res. 2017, 36, 109–120.

- Chen, L.; Zhou, Z. Variations of main quality components of tea genetic resources [Camellia sinensis (L.) O. Kuntze] preserved in the China National Germplasm Tea Repository. Plant Foods Hum. Nutr. 2005, 60, 31–35.

- Su, S.; Wan, C.; Li, J.; Jin, X.; Pi, J.; Zhang, Q.; Weng, M. Economic benefit and ecological cost of enlarging tea cultivation in subtropical China: Characterizing the trade-off for policy implications. Land Use Policy 2017, 66, 183–195.

- Zhu, Y.; Zhang, X. Zhejiang Statistical Yearbook, 3rd ed.; China Statistics Publishing House: Beijing, China, 2021.

- Brezonik, P.L.; Olmanson, L.G.; Bauer, M.E.; Kloiber, S.M. Measuring water clarity and quality in Minnesota lakes and rivers: A census-based approach using remote-sensing techniques. Cura Rep. 2007, 37, 3–313.

- Enoguanbhor, E.C.; Gollnow, F.; Nielsen, J.O.; Lakes, T.; Walker, B.B. Land cover change in the Abuja City-Region, Nigeria: Integrating GIS and remotely sensed data to support land use planning. Sustainability 2019, 11, 1313.

- Vibhute, A.D.; Gawali, B.W. Analysis and modeling of agricultural land use using remote sensing and geographic information system: A review. Int. J. Eng. Res. Appl. 2013, 3, 81–91.

- Li, J.; Pei, Y.; Zhao, S.; Xiao, R.; Sang, X.; Zhang, C. A review of remote sensing for environmental monitoring in China. Remote Sens. 2020, 12, 1130.

- Li, Z.; Chen, G.; Zhang, T. A CNN-transformer hybrid approach for crop classification using multitemporal multisensor images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 847–858.

- Zhao, H.; Chen, Z.; Jiang, H.; Jing, W.; Sun, L.; Feng, M. Evaluation of three deep learning models for early crop classification using Sentinel-1A imagery time series: A case study in Zhanjiang, China. Remote Sens. 2019, 11, 2673.

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443.

- Xu, J.; Yang, J.; Xiong, X.; Li, H.; Huang, J.; Ting, K.; Ying, Y.; Lin, T. Towards interpreting multi-temporal deep learning models in crop mapping. Remote Sens. Environ. 2021, 264, 112599.

- Xie, Y.; Feng, D.; Shen, X.; Liu, Y.; Zhu, J.; Hussain, T.; Baik, S.W. Clustering Feature Constraint Multiscale Attention Network for Shadow Extraction From Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4705414.

- Zhao, X.; Wang, P.; Jin, L.; Tan, B.; Zhao, X.; Liu, D. The application of spectral characteristics of time series Sentinel-2A images in tea land extraction. Sci. Surv. Mapp. 2020, 45, 80–88.

- Xiong, H.; Zhou, X.; Wang, X.; Cui, Y. Mapping the spatial distribution of tea plantations with 10 m resolution in Fujian province using Google Earth Engine. J. Geoinf. Sci. 2021, 23, 1325–1337.

- Chen, P.; Zhao, C.; Duan, D.; Wang, F. Extracting tea plantations in complex landscapes using Sentinel-2 imagery and machine learning algorithms. Community Ecol. 2022, 23, 163–172.

- Ma, C.; Yang, F.; Wang, X. Extracting tea plantations in southern hilly and mountainous region based on mesoscale spectrum and temporal phenological features. Remote Sens. Land Resour. 2019, 31, 141–148.

- Xu, W.; Huang, S.; Wu, C.; Xiong, Y.; Wang, L.; Lu, N.; Kou, W. The pruning phenological phase-based method for extracting tea plantations by field hyperspectral data and Landsat time series imagery. Geocarto Int. 2022, 37, 2116–2136.

- Wang, B.; He, B.; Lin, N.; Wang, W.; Li, T. Tea plantation remote sensing extraction based on random forest feature selection. J. Jilin Univ. 2022, 52, 1719–1732.

- Huang, S.; Xu, W.; Xiong, Y.; Wu, C.; Dai, F.; Xu, H.; Wang, L.; Kou, W. Combining Textures and Spatial Features to Extract Tea Plantations Based on Object-Oriented Method by Using Multispectral Image. Spectrosc. Spectr. Anal. 2021, 41, 2565–2571.

- Dihkan, M.; Guneroglu, N.; Karsli, F.; Guneroglu, A. Remote sensing of tea plantations using an SVM classifier and pattern-based accuracy assessment technique. Int. J. Remote Sens. 2013, 34, 8549–8565.

- Chen, Y.; Lin, J.; Yang, Y.; Wang, X. Extraction of tea plantation with high resolution Gaofen-2 image. In Proceedings of the 2019 8th International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Istanbul, Turkey, 16–19 July 2019; pp. 1–6.

- Akar, Ö.; Güngör, O. Integrating multiple texture methods and NDVI to the Random Forest classification algorithm to detect tea and hazelnut plantation areas in northeast Turkey. Int. J. Remote Sens. 2015, 36, 442–464.

- Xu, G. Research on Tea Garden Remote Sensing Extraction Based on Object-Oriented and Multi-Metadata Fusion. Master’s Thesis, Shaanxi Normal University, Xi’an, China, 2016.

- Yao, J.; Wu, J.; Yang, Y.; Shi, Z. Segmentation in multi-spectral remote sensing images using the fully convolutional neural network. J. Image Graph. 2020, 25, 180–192.

- Jamil, A.; Bayram, B. Automatic discriminative feature extraction using Convolutional Neural Network for remote sensing image classification. In Proceedings of the 40th Asian Conference on Remote Sensing, Daejeon, Republic of Korea, 14–18 October 2019.

- Ma, A.; Wan, Y.; Zhong, Y.; Wang, J.; Zhang, L. SceneNet: Remote sensing scene classification deep learning network using multi-objective neural evolution architecture search. ISPRS J. Photogramm. Remote Sens. 2021, 172, 171–188.

- Huang, B.; Zhao, B.; Song, Y. Urban land-use mapping using a deep convolutional neural network with high spatial resolution multispectral remote sensing imagery. Remote Sens. Environ. 2018, 214, 73–86.

- Lei, S.; Shi, Z.; Zou, Z. Super-resolution for remote sensing images via local–global combined network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-detect: Vehicle detection and classification through semantic segmentation of aerial images. Remote Sens. 2017, 9, 368.

- Zhang, Q.; Yuan, Q.; Zeng, C.; Li, X.; Wei, Y. Missing data reconstruction in remote sensing image with a unified spatial–temporal–spectral deep convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4274–4288.

- Huang, X.; Zhu, Z.; Li, Y.; Wu, B.; Yang, M. Tea garden detection from high-resolution imagery using a scene-based framework. Photogramm. Eng. Remote Sens. 2018, 84, 723–731.

- Liao, K.; Nie, L.; Yang, Z.; Zhang, H.; Wang, Y.; Peng, J.; Dang, H.; Leng, W. Classification of tea garden based on multi-source high-resolution satellite images using multi-dimensional convolutional neural network. Remote Sens. Nat. Resour. 2022, 34, 152–161.

- Tang, Z.; Li, M.; Wang, X. Mapping tea plantations from VHR images using OBIA and convolutional neural networks. Remote Sens. 2020, 12, 2935.

- Özen, B. Identification of Tea Plantation Areas Using Google Cloud Based Random Forest and Deep Learning. Master’s Thesis, Istanbul Technical University, Istanbul, Turkey, 2020.

- Rußwurm, M.; Körner, M. Temporal vegetation modelling using long short-term memory networks for crop identification from medium-resolution multi-spectral satellite images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 11–19.

- Sun, Z.; Di, L.; Fang, H. Using long short-term memory recurrent neural network in land cover classification on Landsat and Cropland data layer time series. Int. J. Remote Sens. 2019, 40, 593–614.

- Jin, Z.; Huang, J.; Li, B.; Luo, L.; Yao, Y.; Li, R. Suitability evaluation of tea trees cultivation based on GIS in Zhejiang Province. Trans. Chin. Soc. Agric. Eng. 2011, 27, 231–236.

- Sefrin, O.; Riese, F.M.; Keller, S. Deep learning for land cover change detection. Remote Sens. 2020, 13, 78.

- Ghosh, P.; Mandal, D.; Bhattacharya, A.; Nanda, M.K.; Bera, S. Assessing crop monitoring potential of Sentinel-2 in a spatio-temporal scale. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 227–231.

- Goffi, A.; Stroppiana, D.; Brivio, P.A.; Bordogna, G.; Boschetti, M. Towards an automated approach to map flooded areas from Sentinel-2 MSI data and soft integration of water spectral features. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101951.

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

- Li, L.; Li, N.; Lu, D. Mapping tea gardens spatial distribution in northwestern Zhejiang Province using multi-temporal Sentinel-2 imagery. J. Zhejiang A&F Univ. 2019, 36, 841–848.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Zhou, X.-X.; Li, Y.-Y.; Luo, Y.-K.; Sun, Y.-W.; Su, Y.-J.; Tan, C.-W.; Liu, Y.-J. Research on remote sensing classification of fruit trees based on Sentinel-2 multi-temporal imageries. Sci. Rep. 2022, 12, 11549.

- Li, Q.; Liu, J.; Mi, X.; Yang, J.; Yu, T. Object-oriented crop classification for GF-6 WFV remote sensing images based on Convolutional Neural Network. Natl. Remote Sens. Bull. 2021, 25, 549–558.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. arXiv 2015, arXiv:1511.00561.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Hou, Y.; Liu, Z.; Zhang, T.; Li, Y. C-Unet: Complement UNet for remote sensing road extraction. Sensors 2021, 21, 2153.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. Comput. Sci. 2014, 26, 103–111.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Sun, L.; Chen, H.; Pan, J. Analysis of the land use spatiotemporal variation based on DEM: Beijing Yanqing County as an example. J. Mt. Res. 2004, 22, 762–766.

- Liu, M.; Fu, B.; Xie, S.; He, H.; Lan, F.; Li, Y.; Lou, P.; Fan, D. Comparison of multi-source satellite images for classifying marsh vegetation using DeepLabV3 Plus deep learning algorithm. Ecol. Indic. 2021, 125, 107562.

- Sothe, C.; Almeida, C.M.d.; Liesenberg, V.; Schimalski, M.B. Evaluating Sentinel-2 and Landsat-8 data to map successional forest stages in a subtropical forest in Southern Brazil. Remote Sens. 2017, 9, 838.

- Ji, S.; Zhang, C.; Xu, A.; Shi, Y.; Duan, Y. 3D convolutional neural networks for crop classification with multi-temporal remote sensing images. Remote Sens. 2018, 10, 75.

- Pelletier, C.; Webb, G.I.; Petitjean, F. Temporal convolutional neural network for the classification of satellite image time series. Remote Sens. 2019, 11, 523.

- Bhatnagar, S.; Gill, L.; Ghosh, B. Drone image segmentation using machine and deep learning for mapping raised bog vegetation communities. Remote Sens. 2020, 12, 2602.

- Dang, K.B.; Nguyen, M.H.; Nguyen, D.A.; Phan, T.T.H.; Giang, T.L.; Pham, H.H.; Nguyen, T.N.; Tran, T.T.V.; Bui, D.T. Coastal wetland classification with deep U-Net convolutional networks and Sentinel-2 imagery: A case study at the Tien Yen Estuary of Vietnam. Remote Sens. 2020, 12, 3270.

- Garnot, V.S.F.; Landrieu, L.; Giordano, S.; Chehata, N. Time-space tradeoff in deep learning models for crop classification on satellite multi-spectral image time series. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 6247–6250.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning spectral-spatial-temporal features via a recurrent convolutional neural network for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 57, 924–935.

| Band | Name | Central Wavelength (μm) | Spatial Resolution (m) |
|---|---|---|---|
| B1 | Coastal aerosol | 0.443 | 60 |
| B2 | Blue | 0.490 | 10 |
| B3 | Green | 0.560 | 10 |
| B4 | Red | 0.665 | 10 |
| B5 | Vegetation Red Edge 1 | 0.705 | 20 |
| B6 | Vegetation Red Edge 2 | 0.740 | 20 |
| B7 | Vegetation Red Edge 3 | 0.783 | 20 |
| B8 | NIR | 0.842 | 10 |
| B8A | Narrow NIR | 0.865 | 20 |
| B9 | Water vapor | 0.945 | 60 |
| B10 | SWIR Cirrus | 1.375 | 60 |
| B11 | SWIR 1 | 1.610 | 20 |
| B12 | SWIR 2 | 2.190 | 20 |

| Name | Calculation Formula |
|---|---|
| NDVI | (NIR − Red) / (NIR + Red) |
| MNDVI | |
| EVI | 2.5 × (NIR − Red) / (NIR + 6 × Red − 7.5 × Blue + 1) |
| NDVIre1 | |
| NDVIre2 | |
| NDVIre3 | |
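The indices in this table are per-pixel band arithmetic. The sketch below assumes the standard published definitions of NDVI [44] and EVI [45]; the authors' exact formulas for MNDVI and the red-edge NDVIs are not recoverable here, so the red-edge example pairing B8A with B5 is purely an assumption. The pixel reflectances are hypothetical.

```python
def normalized_difference(a: float, b: float) -> float:
    """Generic normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)


def ndvi(nir: float, red: float) -> float:
    """NDVI: normalized difference of NIR and red reflectance."""
    return normalized_difference(nir, red)


def evi(nir: float, red: float, blue: float) -> float:
    """EVI with the standard MODIS coefficients G=2.5, C1=6, C2=7.5, L=1.

    Assumes reflectance scaled to 0-1; the +1 term is not scale-invariant.
    """
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


# Hypothetical surface reflectances for one pixel, keyed by Sentinel-2 band.
pixel = {"B2": 0.04, "B4": 0.05, "B5": 0.10, "B8": 0.35, "B8A": 0.38}

print(round(ndvi(pixel["B8"], pixel["B4"]), 3))
print(round(evi(pixel["B8"], pixel["B4"], pixel["B2"]), 3))
# Assumed red-edge variant: normalized difference of B8A and B5.
print(round(normalized_difference(pixel["B8A"], pixel["B5"]), 3))
```

Unlike the normalized differences, EVI depends on the reflectance scale, so integer-scaled products would need to be rescaled to 0 to 1 before applying it.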

| Initial Learning Rate | Batch Size | Epochs | Loss Function |
|---|---|---|---|
| 0.001 | 8 | 200 | Cross-entropy loss |
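A minimal sketch of the training configuration in this table, assuming a two-class softmax output per pixel with a hypothetical encoding (0 = other, 1 = tea). The optimizer, the learning-rate schedule and the number of training patches are not given, so `total_steps` is called with a hypothetical count of 1000 patches; `TrainConfig` and the helper names are likewise illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters from the table; optimizer and LR schedule are not specified."""
    initial_learning_rate: float = 0.001
    batch_size: int = 8
    epochs: int = 200


def pixel_cross_entropy(probs, target):
    """Cross-entropy for one pixel: -log(probability assigned to the true class)."""
    return -math.log(probs[target])


def batch_cross_entropy(batch_probs, batch_targets):
    """Mean cross-entropy over all pixels in a batch."""
    losses = [pixel_cross_entropy(p, t) for p, t in zip(batch_probs, batch_targets)]
    return sum(losses) / len(losses)


def total_steps(n_train_patches, cfg):
    """Optimizer steps over training, keeping a final partial batch each epoch."""
    return math.ceil(n_train_patches / cfg.batch_size) * cfg.epochs


cfg = TrainConfig()
# Hypothetical softmax outputs for two pixels (class 0 = other, 1 = tea).
loss = batch_cross_entropy([[0.9, 0.1], [0.2, 0.8]], [0, 1])
print(round(loss, 4), total_steps(1000, cfg))
```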

| Features | F1 | IoU |
|---|---|---|
| Common bands | 0.774 | 0.631 |
| Common bands and red-edge vegetation bands | 0.839 | 0.722 |
| Common bands and SWIR bands | 0.821 | 0.697 |
| All spectral bands | 0.885 | 0.793 |
| All spectral bands and vegetation indices | 0.883 | 0.792 |
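F1 and IoU both summarize how well the tea class is delineated. Under the usual definitions, they follow from pixel counts of true positives (TP), false positives (FP) and false negatives (FN) for the tea class; whether the reported values were pooled over all test pixels or averaged per image is not stated, so this is a sketch with hypothetical counts.

```python
def f1_and_iou(tp, fp, fn):
    """F1 and IoU for the tea class from pixel counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return f1, iou


def iou_from_f1(f1):
    """F1 = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN), so IoU = F1 / (2 - F1)."""
    return f1 / (2 - f1)


# Hypothetical counts: 80 true positives, 10 false positives, 10 false negatives.
f1, iou = f1_and_iou(80, 10, 10)
print(round(f1, 4), round(iou, 4), round(iou_from_f1(f1), 4))
```

Applying `iou_from_f1` to the reported F1 values gives IoU within about 0.0015 of the tabulated figures (for example, 0.774 gives 0.631). The identity holds exactly only for a single pooled set of counts.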

| Method | F1 | IoU |
|---|---|---|
| SVM | 0.774 | 0.632 |
| RF | 0.812 | 0.684 |
| CNN | 0.855 | 0.747 |
| RNN | 0.844 | 0.730 |
| R-CNN | 0.885 | 0.793 |

| Method | Class | Commission Error, CE (%) | Omission Error, OE (%) | Overall Accuracy, OA (%) | Kappa |
|---|---|---|---|---|---|
| SVM | Tea | 10.2 | 13.3 | 90.2 | 0.798 |
| | Others | 9.6 | 7.3 | | |
| RF | Tea | 9.3 | 10.5 | 91.6 | 0.829 |
| | Others | 7.7 | 6.7 | | |
| CNN | Tea | 7.5 | 7.4 | 93.7 | 0.871 |
| | Others | 5.5 | 5.5 | | |
| RNN | Tea | 7.9 | 9.6 | 92.6 | 0.849 |
| | Others | 7.0 | 5.7 | | |
| R-CNN | Tea | 4.9 | 6.3 | 95.3 | 0.904 |
| | Others | 4.6 | 3.5 | | |
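Under their standard definitions, the four accuracy measures in this table (CE, OE, OA and Cohen's kappa) all follow from one confusion matrix. The sketch below assumes that rows index the reference class and columns the predicted class; the counts are hypothetical and are not the validation data behind the table.

```python
def accuracy_assessment(cm, labels):
    """Per-class CE/OE (%), overall accuracy (%) and Cohen's kappa.

    cm[i][j] counts validation samples whose reference class is i and whose
    predicted class is j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    ref_totals = [sum(cm[i]) for i in range(k)]                       # row sums
    pred_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums
    per_class = {}
    for c, name in enumerate(labels):
        # Commission: share of samples mapped as c that belong to another class.
        ce = 100 * (pred_totals[c] - cm[c][c]) / pred_totals[c]
        # Omission: share of reference-c samples that were missed.
        oe = 100 * (ref_totals[c] - cm[c][c]) / ref_totals[c]
        per_class[name] = (ce, oe)
    p_o = sum(cm[i][i] for i in range(k)) / n                             # observed agreement
    p_e = sum(ref_totals[i] * pred_totals[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e)
    return per_class, 100 * p_o, kappa


# Hypothetical validation counts (rows: reference, columns: predicted).
cm = [[90, 10],   # reference tea
      [5, 95]]    # reference others
per_class, oa, kappa = accuracy_assessment(cm, ["Tea", "Others"])
print(per_class, oa, kappa)
```

Because kappa discounts the agreement expected by chance from the class totals, it is lower than OA whenever the classifier is better than random but not perfect.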

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yao, Z.; Zhu, X.; Zeng, Y.; Qiu, X. Extracting Tea Plantations from Multitemporal Sentinel-2 Images Based on Deep Learning Networks. Agriculture 2023, 13, 10. https://doi.org/10.3390/agriculture13010010
