Position Accuracy Assessment of a UAV-Mounted Sequoia+ Multispectral Camera Using a Robotic Total Station

Abstract

1. Introduction

2. Materials and Methods

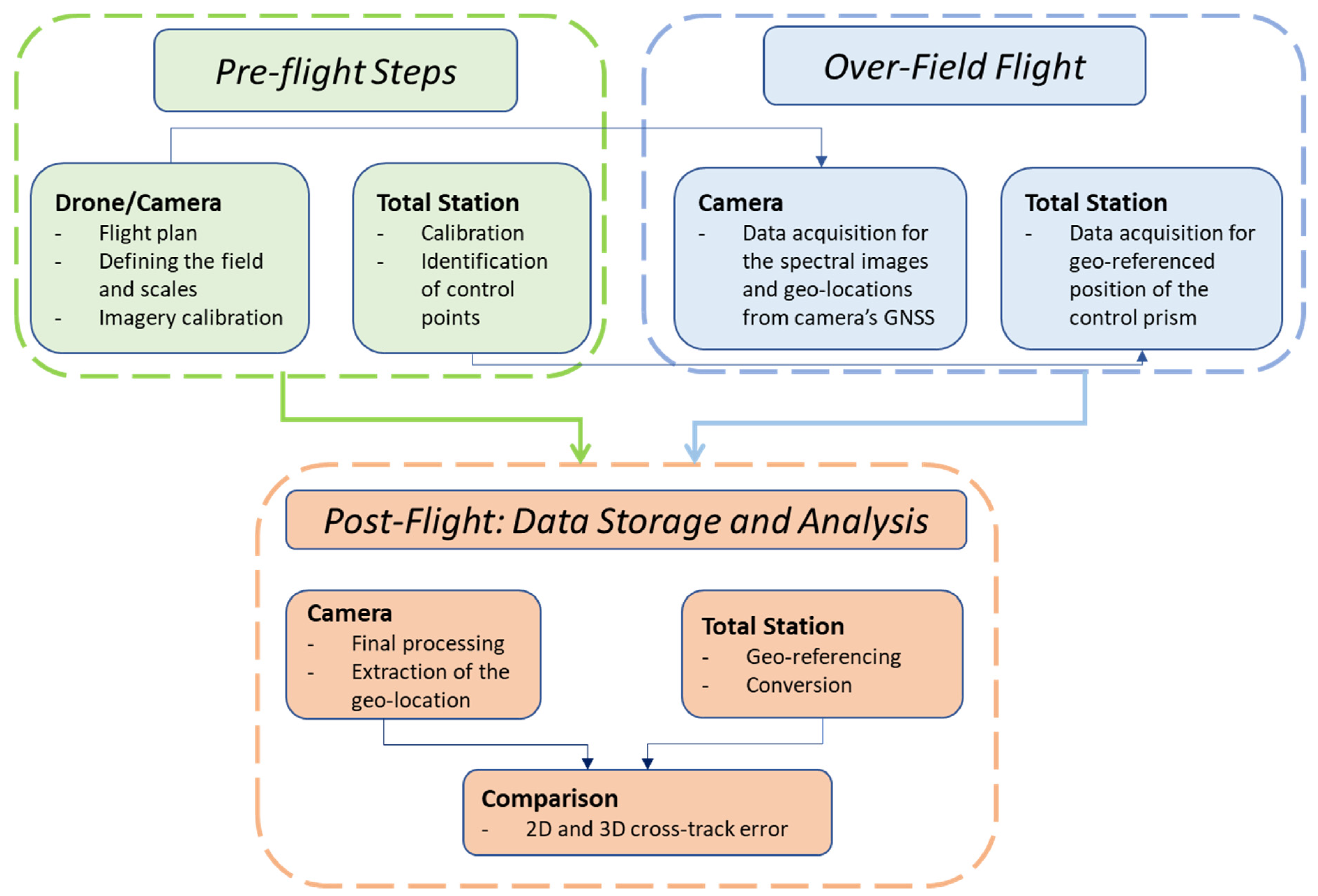

2.1. Workflow

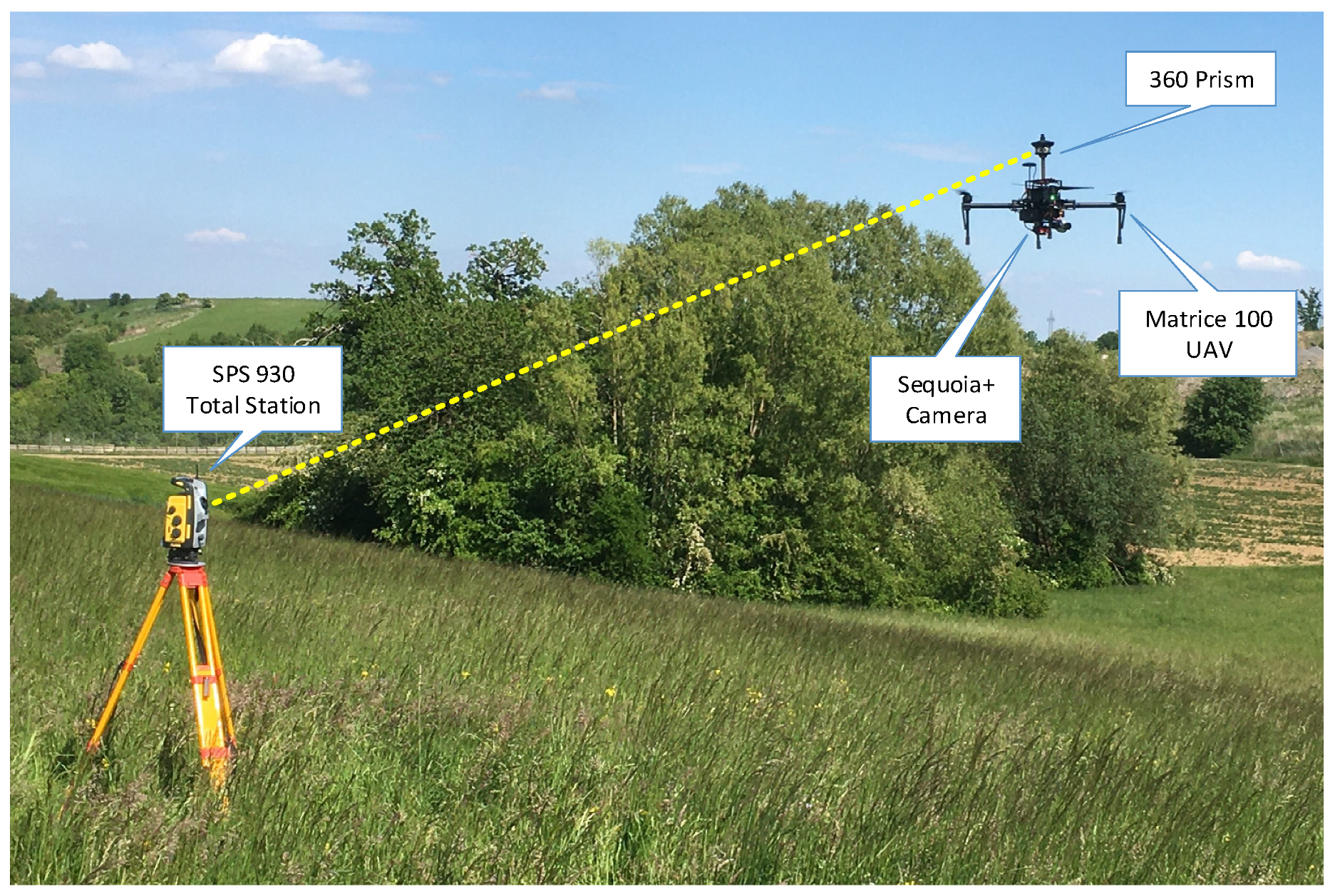

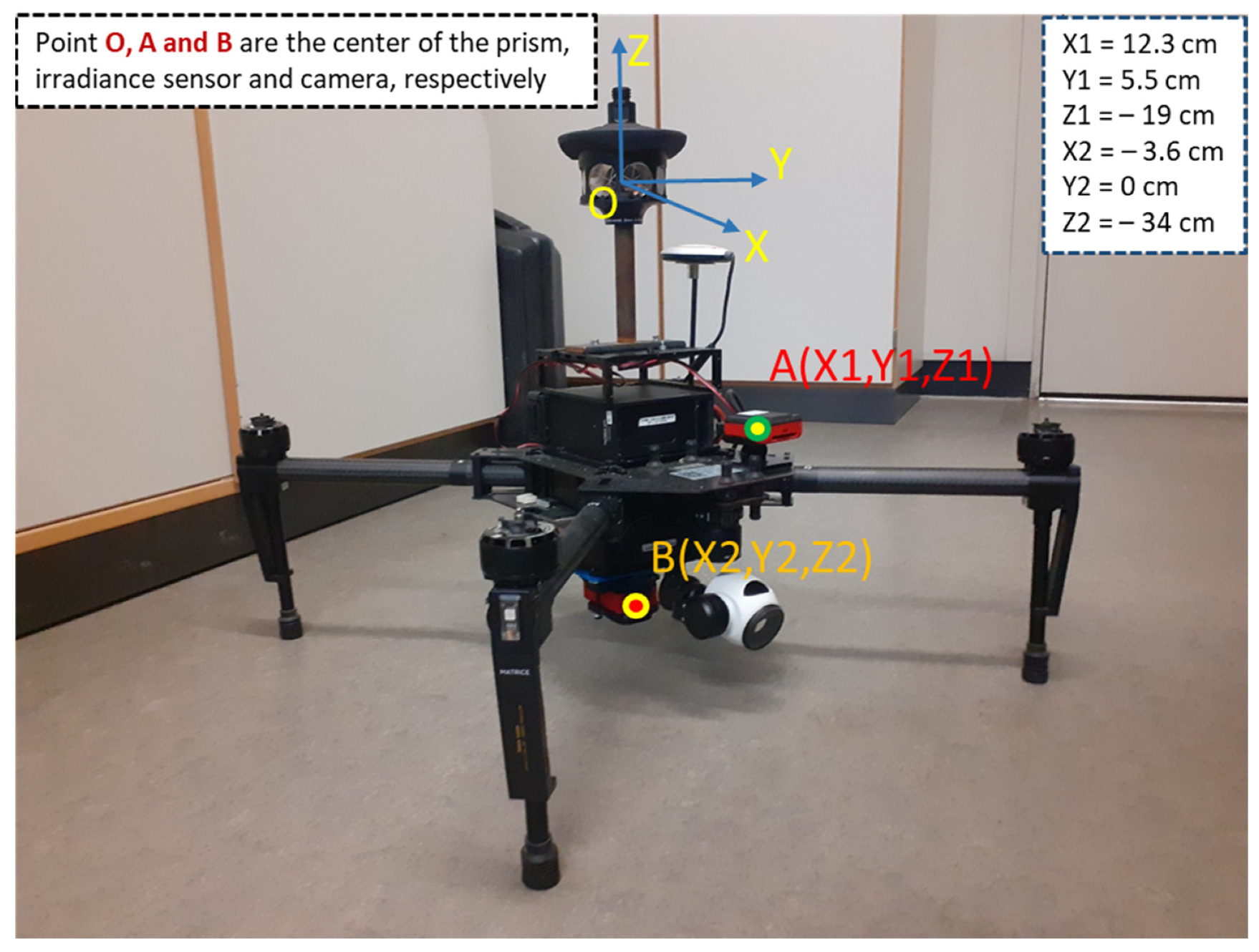

2.2. Instrumentation

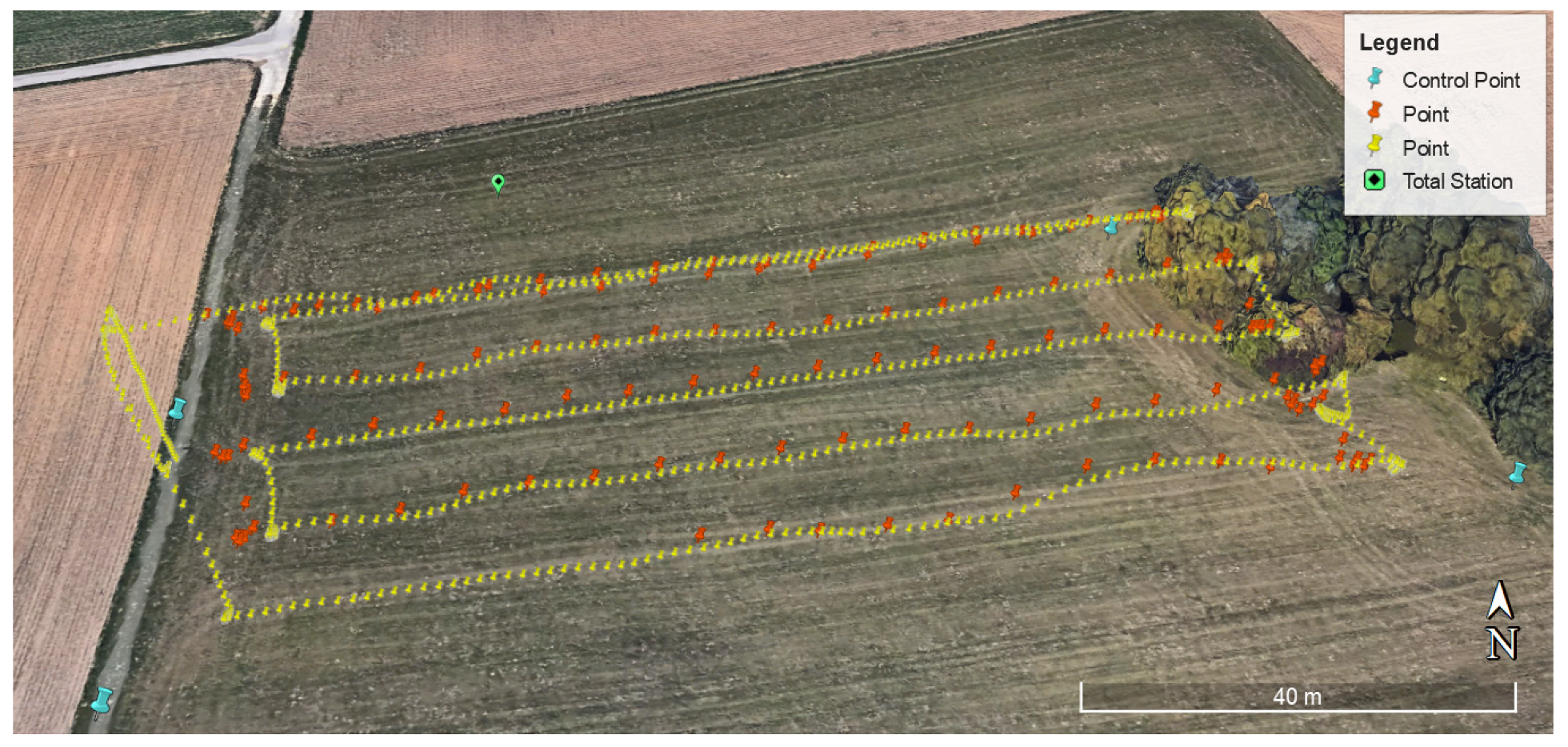

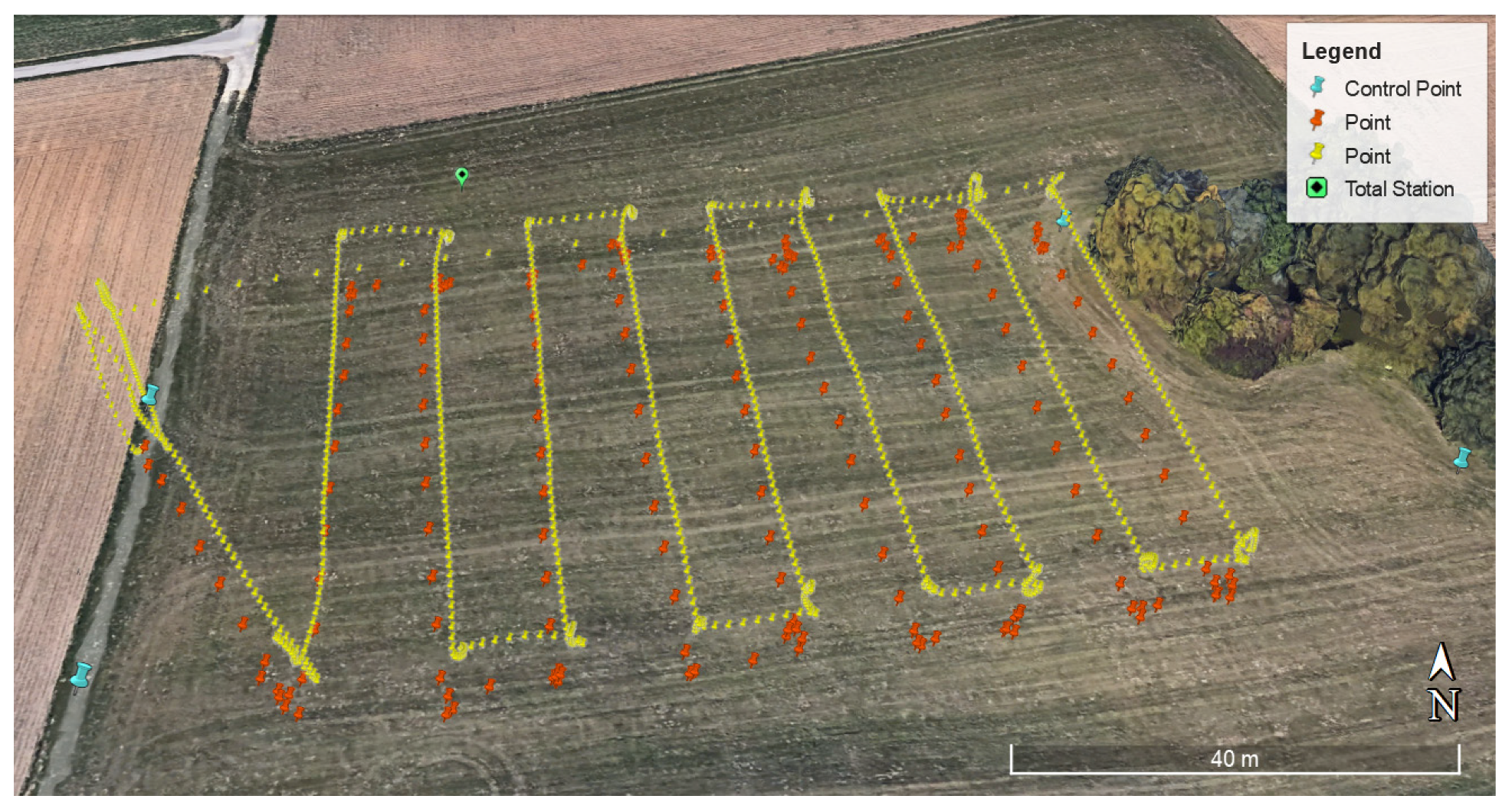

2.3. Experimental Design and Data Acquisition

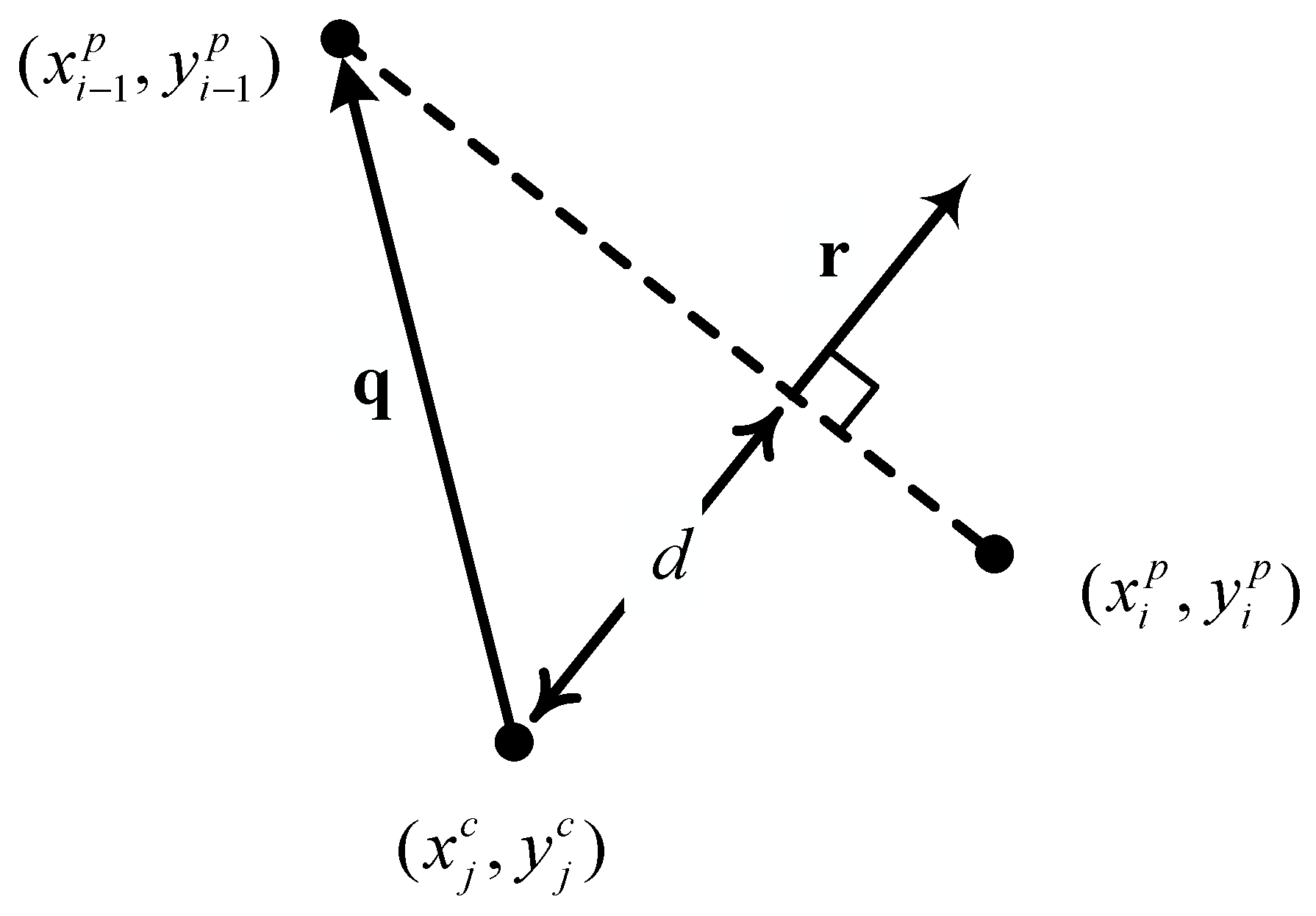

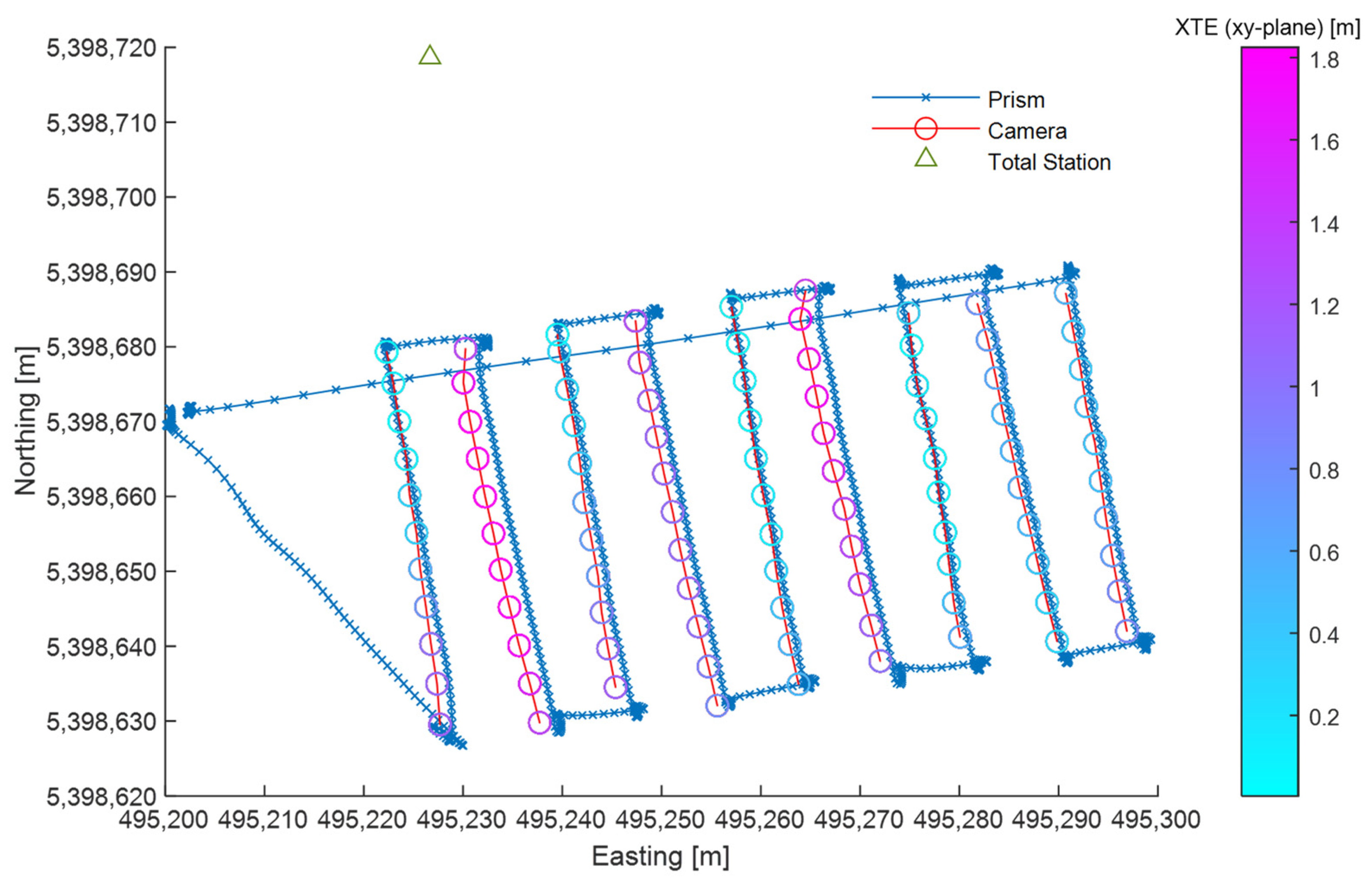

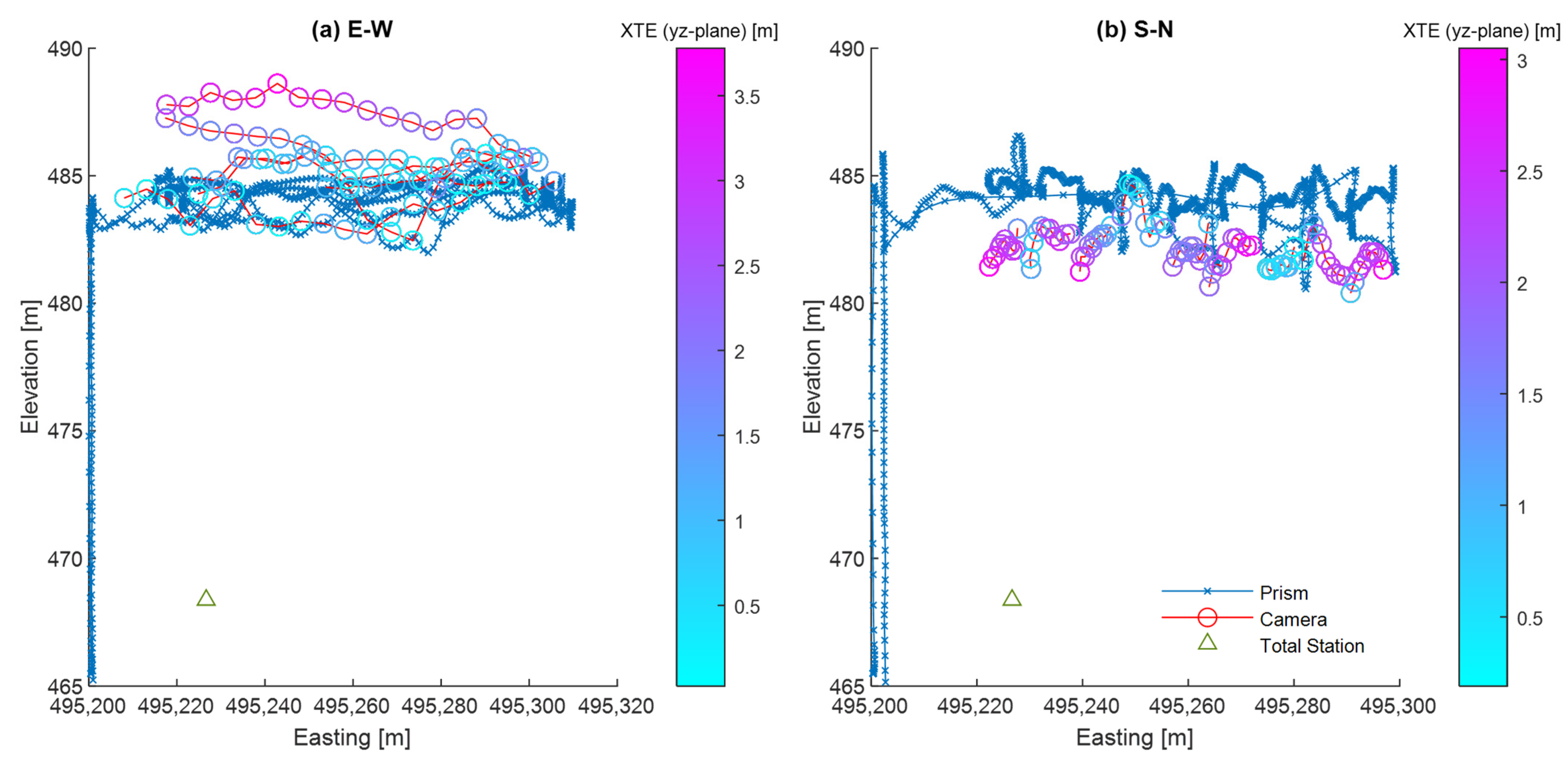

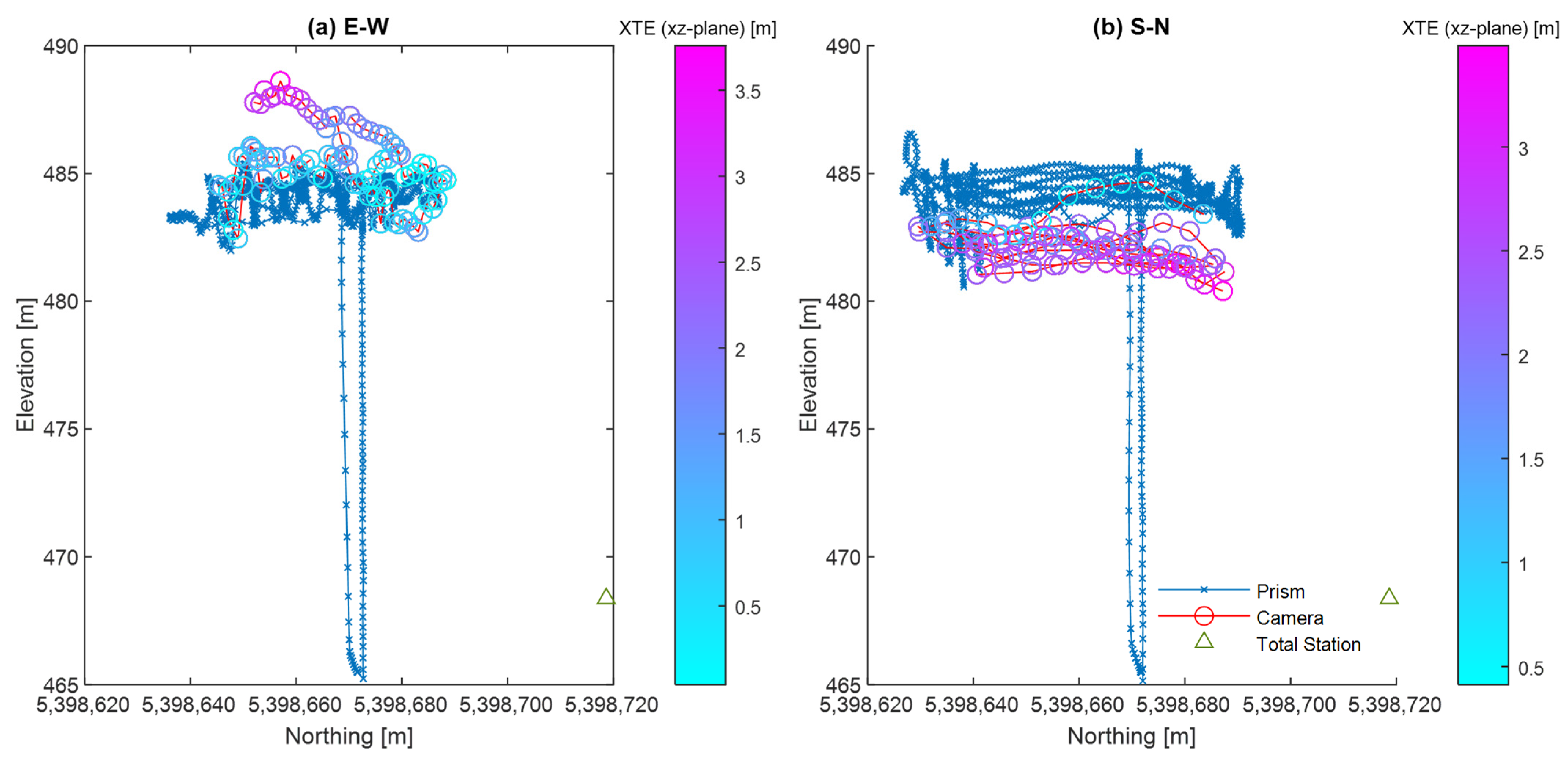

2.4. 2D Cross-Track Error

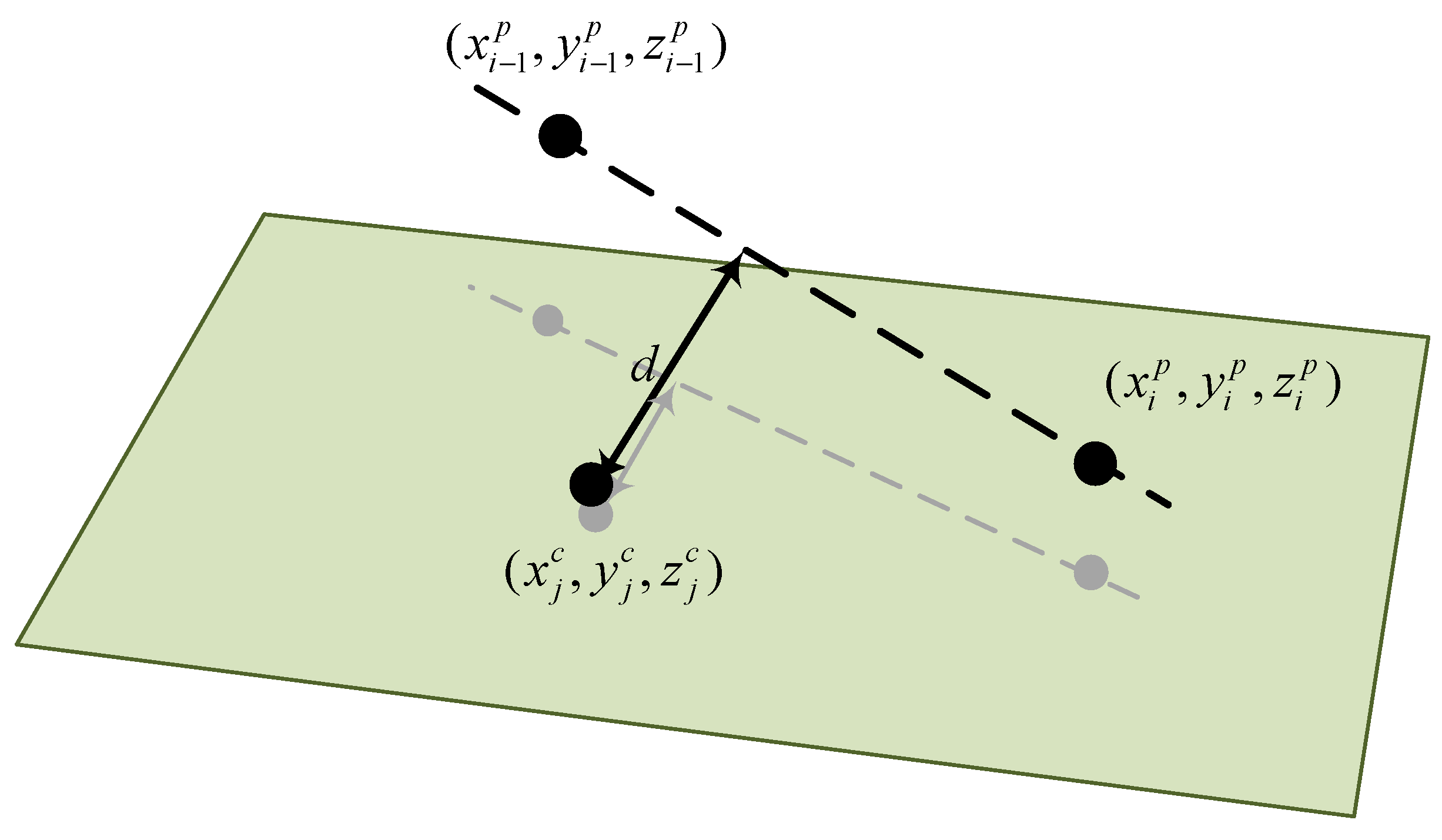

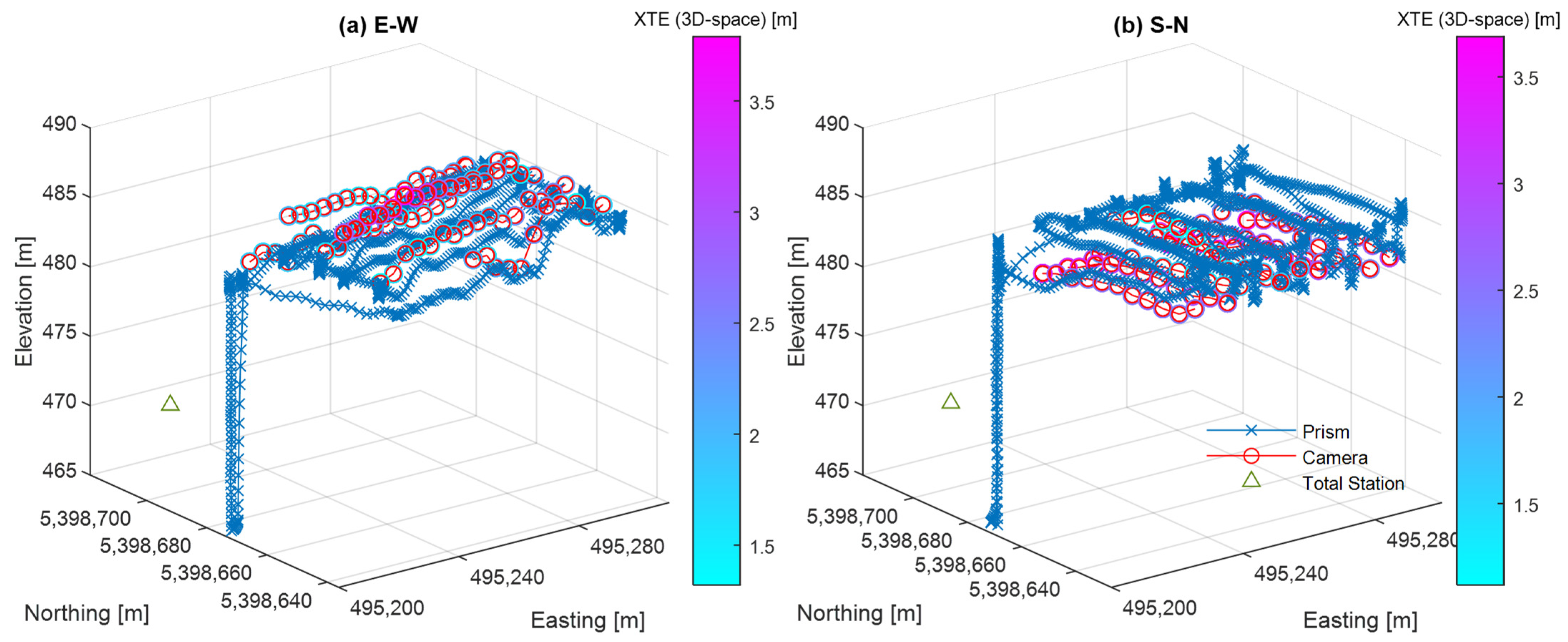

2.5. 3D Cross-Track Error
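As an illustrative sketch only, and assuming the 3D cross-track error (XTE) is taken as the perpendicular distance from a measured position to the straight line through two consecutive reference positions, the standard point-line distance in three dimensions can be computed as below. The function name and the sample coordinates are hypothetical and are not taken from the study.

```python
import math

def cross_track_error_3d(p, a, b):
    """Perpendicular distance from point p to the infinite line through a and b.

    Uses d = |(p - a) x (b - a)| / |b - a| with 3-element coordinate sequences.
    """
    ap = [p[i] - a[i] for i in range(3)]
    ab = [b[i] - a[i] for i in range(3)]
    # Cross product (p - a) x (b - a)
    cross = (
        ap[1] * ab[2] - ap[2] * ab[1],
        ap[2] * ab[0] - ap[0] * ab[2],
        ap[0] * ab[1] - ap[1] * ab[0],
    )
    line_length = math.sqrt(sum(c * c for c in ab))
    if line_length == 0.0:
        raise ValueError("a and b must be distinct points")
    return math.sqrt(sum(c * c for c in cross)) / line_length

# Hypothetical values: reference line along the x-axis, measured point
# offset by 3 m in y and 4 m in z, so the expected distance is 5 m.
xte = cross_track_error_3d((5.0, 3.0, 4.0), (0.0, 0.0, 0.0), (10.0, 0.0, 0.0))
print(f"3D XTE: {xte:.2f} m")
```

Setting one coordinate of all three points to zero reduces the same expression to the point-line distance within a single coordinate plane.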

3. Results and Discussion

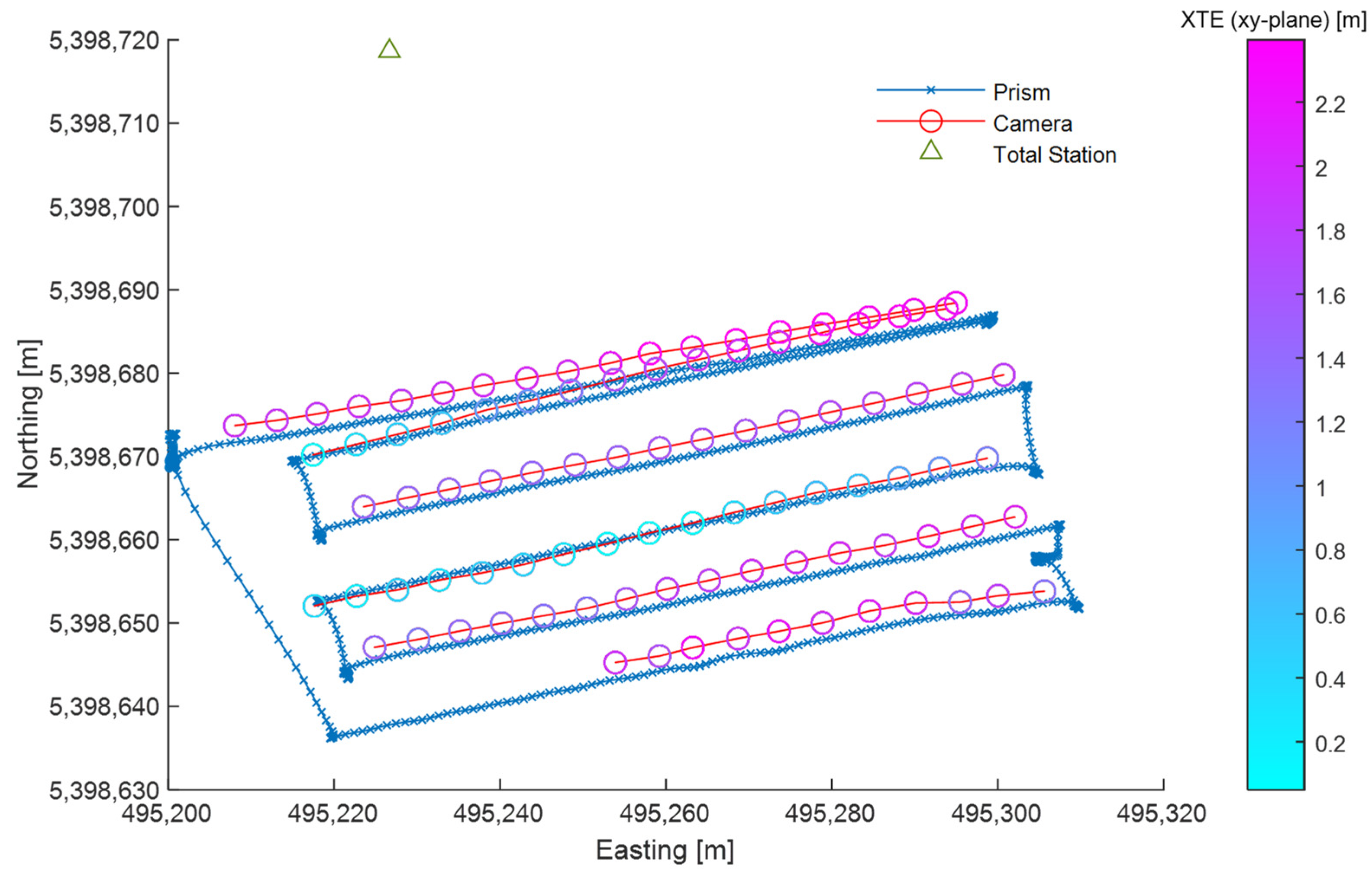

3.1. 2D Cross-Track Error

3.2. Accuracy Assessment

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Weight | 135 g (4.8 oz), including sunshine sensor and cable |
| Sensor spectral characteristics | Green (Band 1): 550 nm, 40 nm bandwidth; Red (Band 2): 660 nm, 40 nm bandwidth; Red-edge (Band 3): 735 nm, 10 nm bandwidth; Near-infrared (Band 4): 790 nm, 40 nm bandwidth |
| Focal length | 3.98 mm |
| 35 mm equivalent focal length | 30 mm |
| Imager resolution | 1280 horizontal × 960 vertical pixels (1.22 megapixels) |
| Image size | 4.8 mm × 3.6 mm |
| Physical pixel size | 0.00375 mm × 0.00375 mm |
| Radiometric resolution | 10-bit sensor (1024 BVs) stored as 16-bit (65,536 BVs) |
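
The focal length, pixel size, and imager resolution listed above determine the ground sampling distance (GSD) and image footprint for a given flight height. The following sketch works through that arithmetic under a simple pinhole model, assuming a nadir-pointing camera over flat terrain. The 50 m flight height and the function names are assumptions chosen for illustration; they are not values reported for the experiments.

```python
# Values taken from the camera specification table
FOCAL_LENGTH_MM = 3.98
PIXEL_SIZE_MM = 0.00375
IMAGE_WIDTH_PX = 1280
IMAGE_HEIGHT_PX = 960

def ground_sampling_distance_m(flight_height_m):
    """Ground size of one pixel (m) for a nadir image over flat terrain."""
    return PIXEL_SIZE_MM / FOCAL_LENGTH_MM * flight_height_m

def footprint_m(flight_height_m):
    """Ground extent (m) covered by the full image: (across width, across height)."""
    gsd = ground_sampling_distance_m(flight_height_m)
    return IMAGE_WIDTH_PX * gsd, IMAGE_HEIGHT_PX * gsd

# Assumption: 50 m flight height, used only as an example
height_m = 50.0
gsd = ground_sampling_distance_m(height_m)
width_m, length_m = footprint_m(height_m)
print(f"GSD: {gsd * 100:.2f} cm per pixel")
print(f"Footprint: {width_m:.1f} m x {length_m:.1f} m")
```

Under these assumptions one pixel covers roughly 4.7 cm on the ground, which gives a sense of scale for position errors of one metre or more.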

| Parameter | Value |
|---|---|
| Distance measurement accuracy | ±(4 mm + 2 ppm) |
| Angle measurement accuracy (horizontal and vertical) | 0.3 mgon |
| Measurement range | 2500 m |
| Data output rate | max. 20 Hz |
| Maximum radial speed of target | 114° s−1 |
| Maximum axial speed of target | 6 m s−1 |
| Synchronized measurement data | <1 ms |
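
To give a sense of what the distance and angle specifications above imply at a given range, the sketch below evaluates the distance term ±(4 mm + 2 ppm) and converts the 0.3 mgon angular accuracy into a lateral offset using the small-angle (arc length) approximation. The 100 m range and the function names are assumptions for illustration only, and treating the two terms separately is a simplification rather than a full error budget.

```python
import math

# Values taken from the total station specification table
DISTANCE_CONSTANT_MM = 4.0
DISTANCE_PPM = 2.0
ANGLE_ACCURACY_MGON = 0.3

def distance_error_mm(range_m):
    """Distance accuracy (mm): constant part plus range-proportional ppm part."""
    return DISTANCE_CONSTANT_MM + DISTANCE_PPM * 1e-6 * range_m * 1000.0

def lateral_error_mm(range_m):
    """Lateral offset (mm) caused by the angular accuracy at the given range."""
    # 400 gon correspond to a full circle, so 1 gon = pi / 200 rad
    angle_rad = ANGLE_ACCURACY_MGON * 1e-3 * math.pi / 200.0
    return range_m * 1000.0 * angle_rad

# Assumption: target 100 m from the instrument, used only as an example
range_m = 100.0
print(f"Distance error: {distance_error_mm(range_m):.2f} mm")
print(f"Lateral error:  {lateral_error_mm(range_m):.2f} mm")
```

At this example range both contributions stay at the millimetre level, several orders of magnitude below metre-scale cross-track errors.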

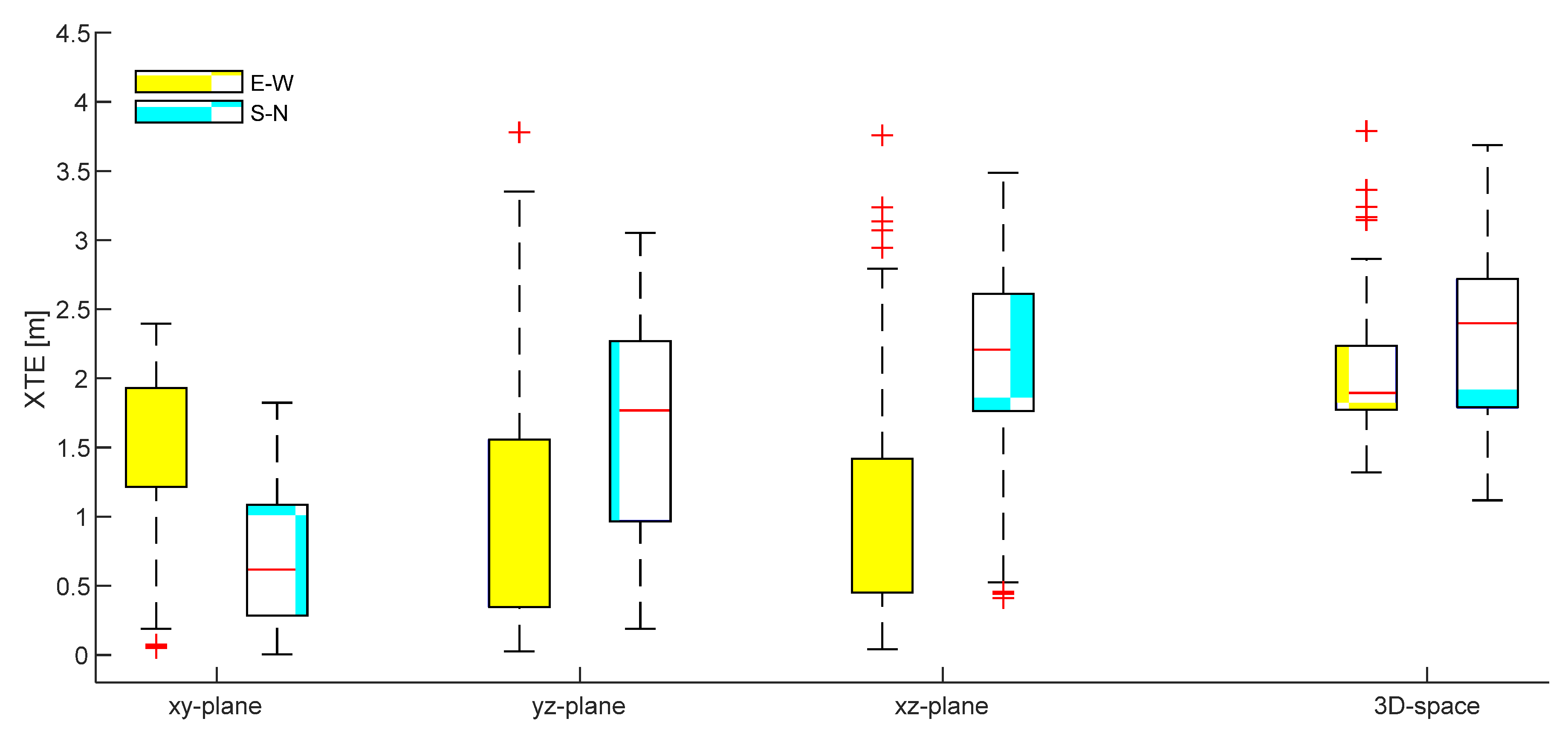

| Plane / space | Direction | XTE mean [m] | XTE st. dev. [m] | XTE 95th percentile [m] | XTE max [m] |
|---|---|---|---|---|---|
| -plane | E–W | 1.47 | 0.65 | 2.33 | 2.39 |
| | S–N | 0.74 | 0.55 | 1.78 | 1.82 |
| -plane | E–W | 1.08 | 0.89 | 3.17 | 3.78 |
| | S–N | 1.67 | 0.74 | 2.80 | 3.05 |
| -plane | E–W | 1.05 | 0.85 | 2.94 | 3.76 |
| | S–N | 2.12 | 0.68 | 3.01 | 3.49 |
| 3D-space | E–W | 2.05 | 0.45 | 3.14 | 3.79 |
| | S–N | 2.35 | 0.63 | 3.34 | 3.69 |
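
The four statistics reported per row (mean, standard deviation, 95th percentile, and maximum) can be obtained from a series of cross-track errors as sketched below. This is a minimal illustration, not the processing used in the study: the sample values are made up, and both the sample (n − 1) standard deviation and the linear-interpolation percentile are assumptions, since other definitions give slightly different numbers.

```python
import math
import statistics

def percentile(values, q):
    """Linear-interpolation percentile (q in [0, 100]) of a non-empty sequence."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * q / 100.0
    lower = math.floor(rank)
    upper = math.ceil(rank)
    weight = rank - lower
    return ordered[lower] * (1.0 - weight) + ordered[upper] * weight

def summarize_xte(xte_values):
    """Summary statistics of absolute cross-track errors given in metres."""
    return {
        "mean": statistics.fmean(xte_values),
        "st_dev": statistics.stdev(xte_values),  # sample standard deviation
        "p95": percentile(xte_values, 95),
        "max": max(xte_values),
    }

# Hypothetical XTE series in metres, not measured data
sample_xte = [0.8, 1.2, 1.5, 1.1, 2.3, 1.9, 0.6, 1.4]
for name, value in summarize_xte(sample_xte).items():
    print(f"{name}: {value:.2f} m")
```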

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Paraforos, D.S.; Sharipov, G.M.; Heiß, A.; Griepentrog, H.W. Position Accuracy Assessment of a UAV-Mounted Sequoia+ Multispectral Camera Using a Robotic Total Station. Agriculture 2022, 12, 885. https://doi.org/10.3390/agriculture12060885
