Evaluation of Surgical Skills during Robotic Surgery by Deep Learning-Based Multiple Surgical Instrument Tracking in Training and Actual Operations

Abstract

1. Introduction

2. Materials and Methods

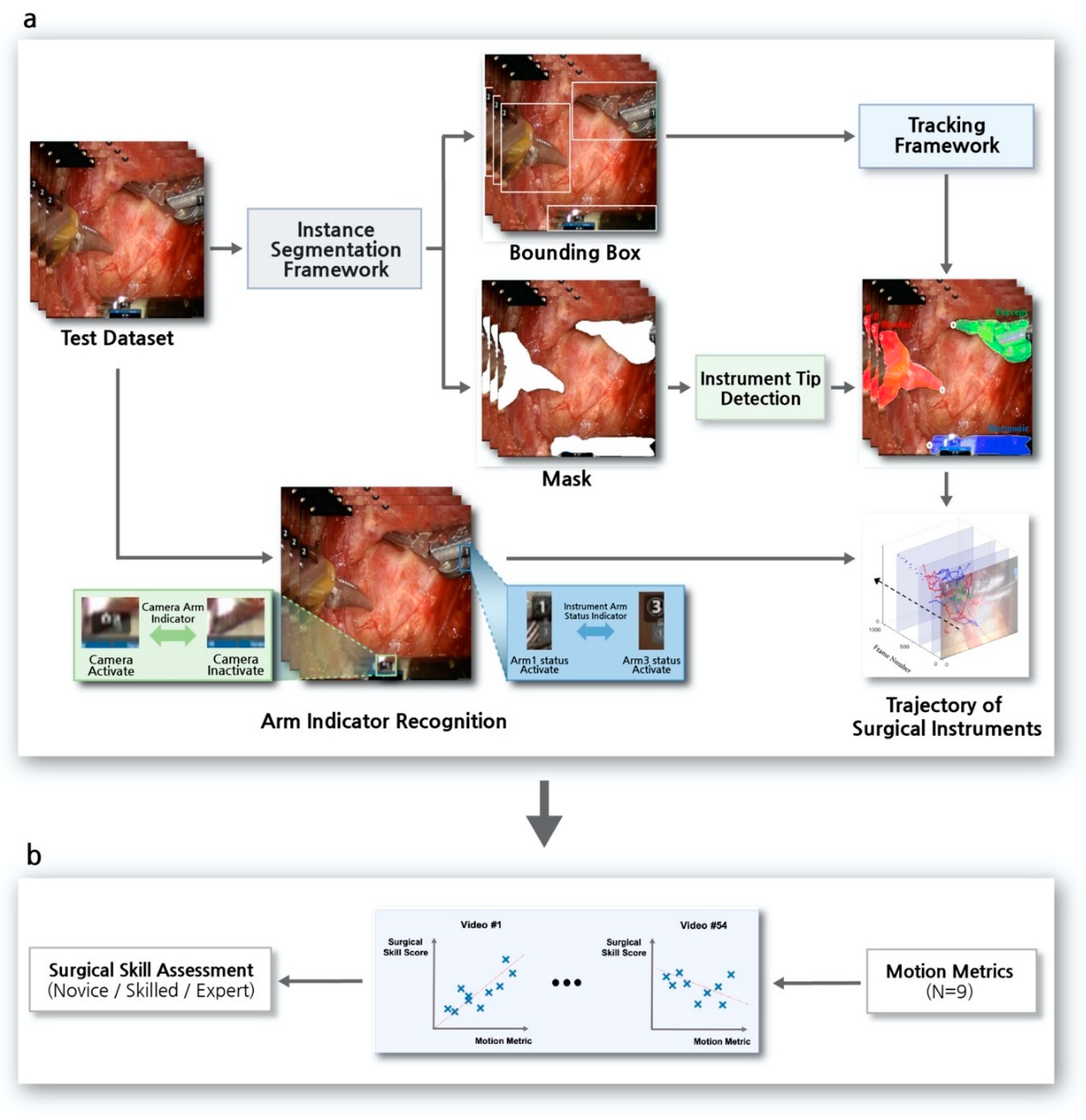

2.1. Study Design

2.2. Surgical Procedure

2.3. Dataset

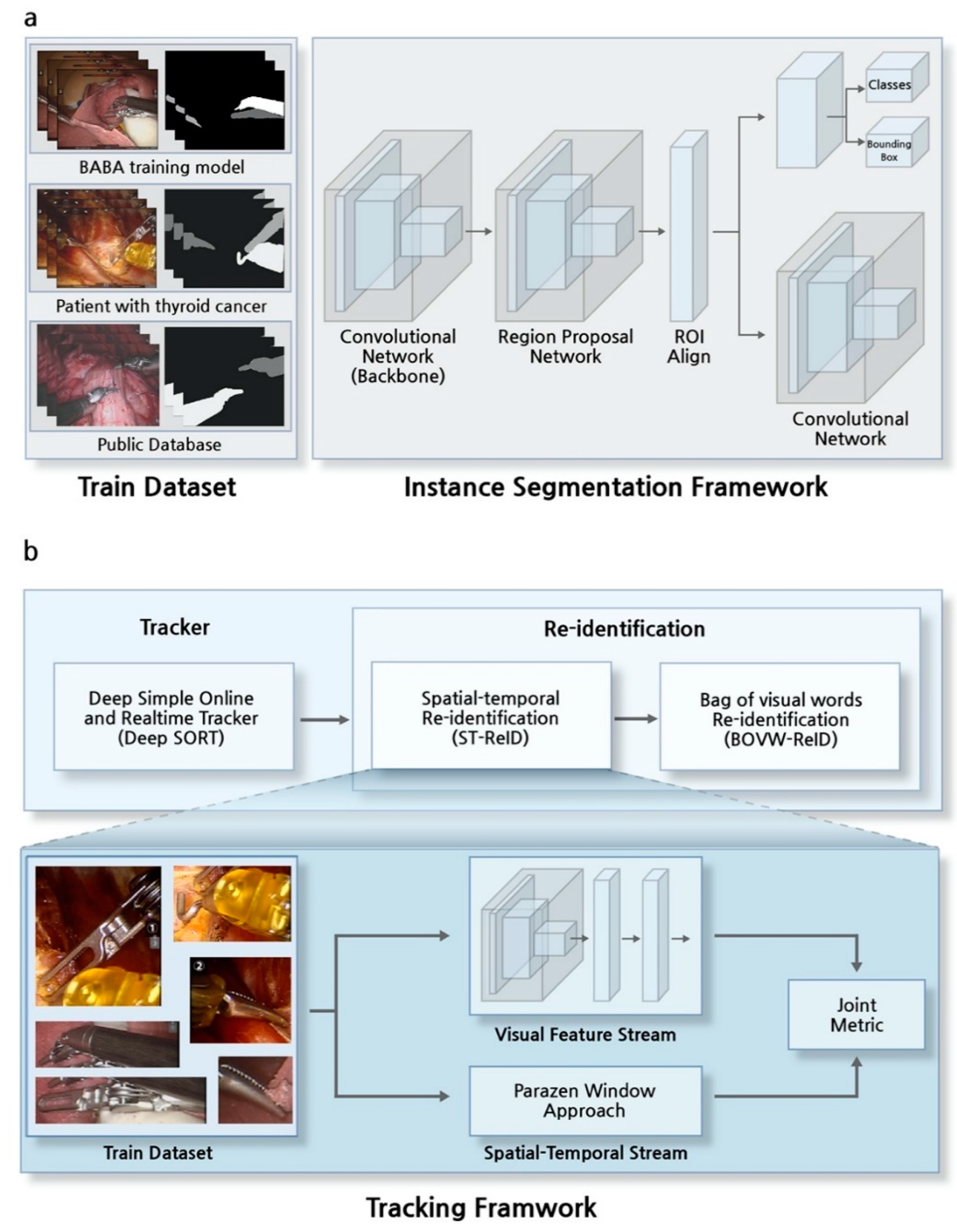

2.4. Instance Segmentation Framework

2.5. Tracker in Tracking Framework

2.6. Re-Identification in Tracking Framework

2.7. Arm-Indicator Recognition on the Robotic Surgery View

2.8. Surgical Skill Prediction Model using Motion Metrics

3. Results

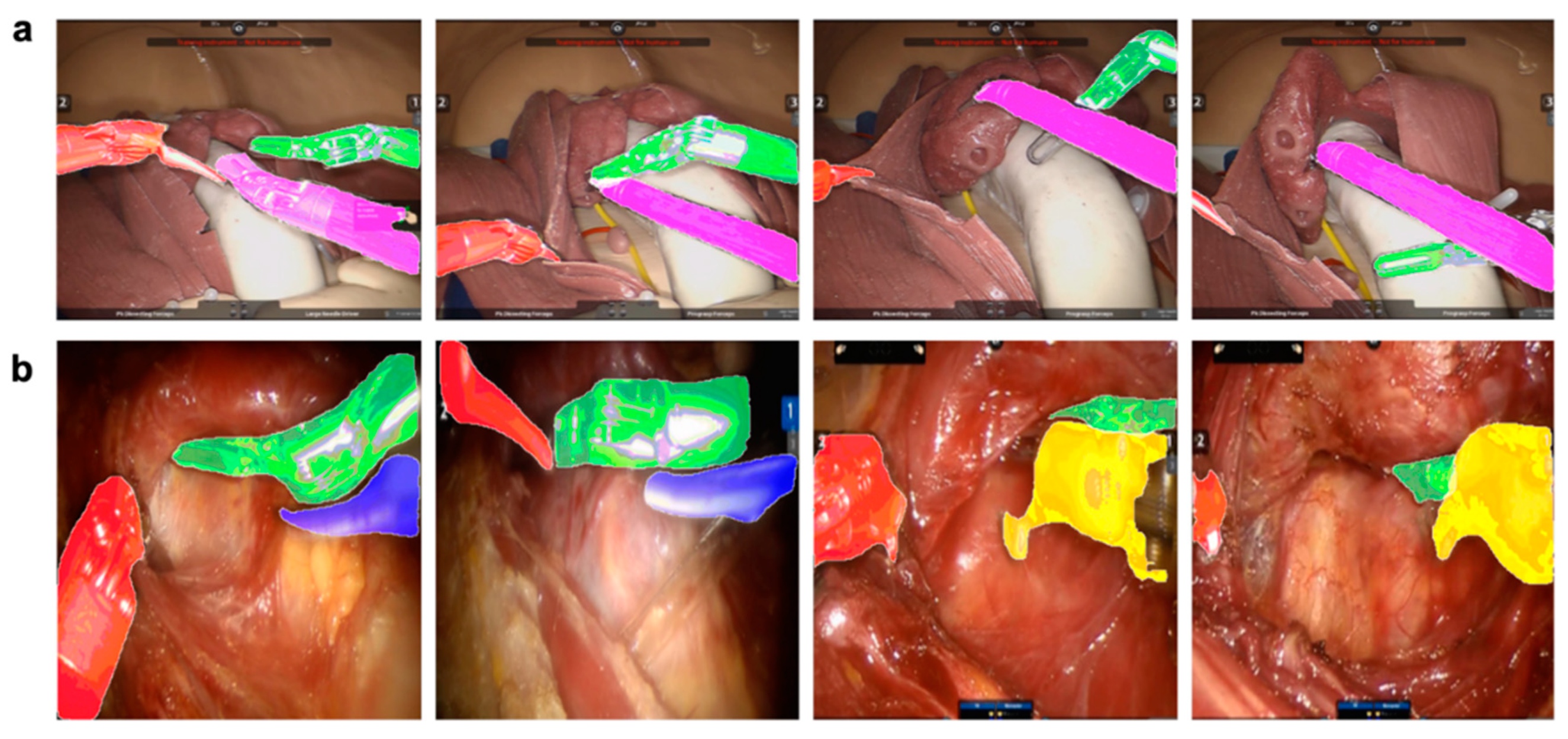

3.1. Results of the Instance Segmentation Framework

3.2. Evaluation of the Tracking Framework

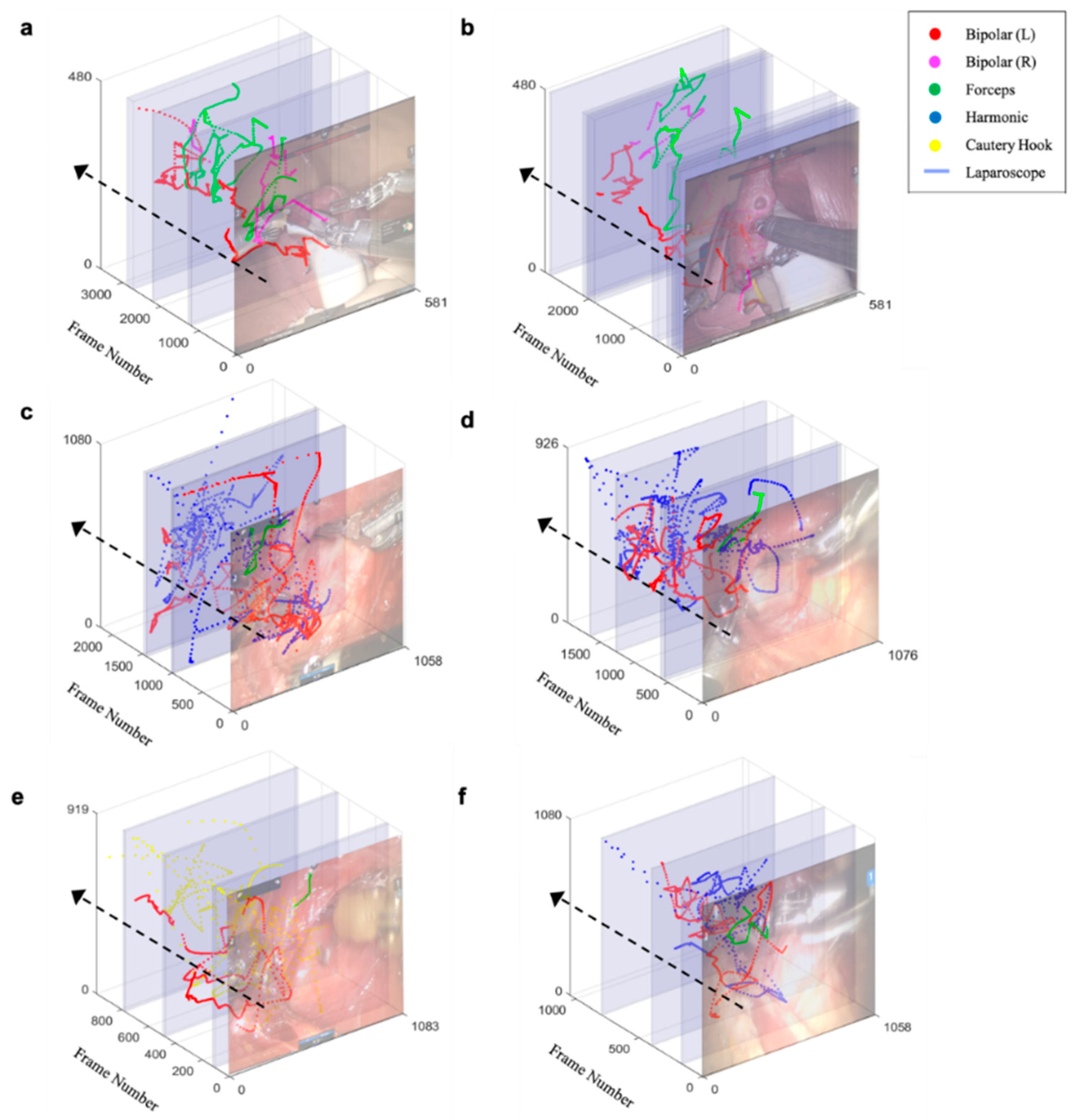

3.3. Trajectory of Multiple Surgical Instruments and Evaluation

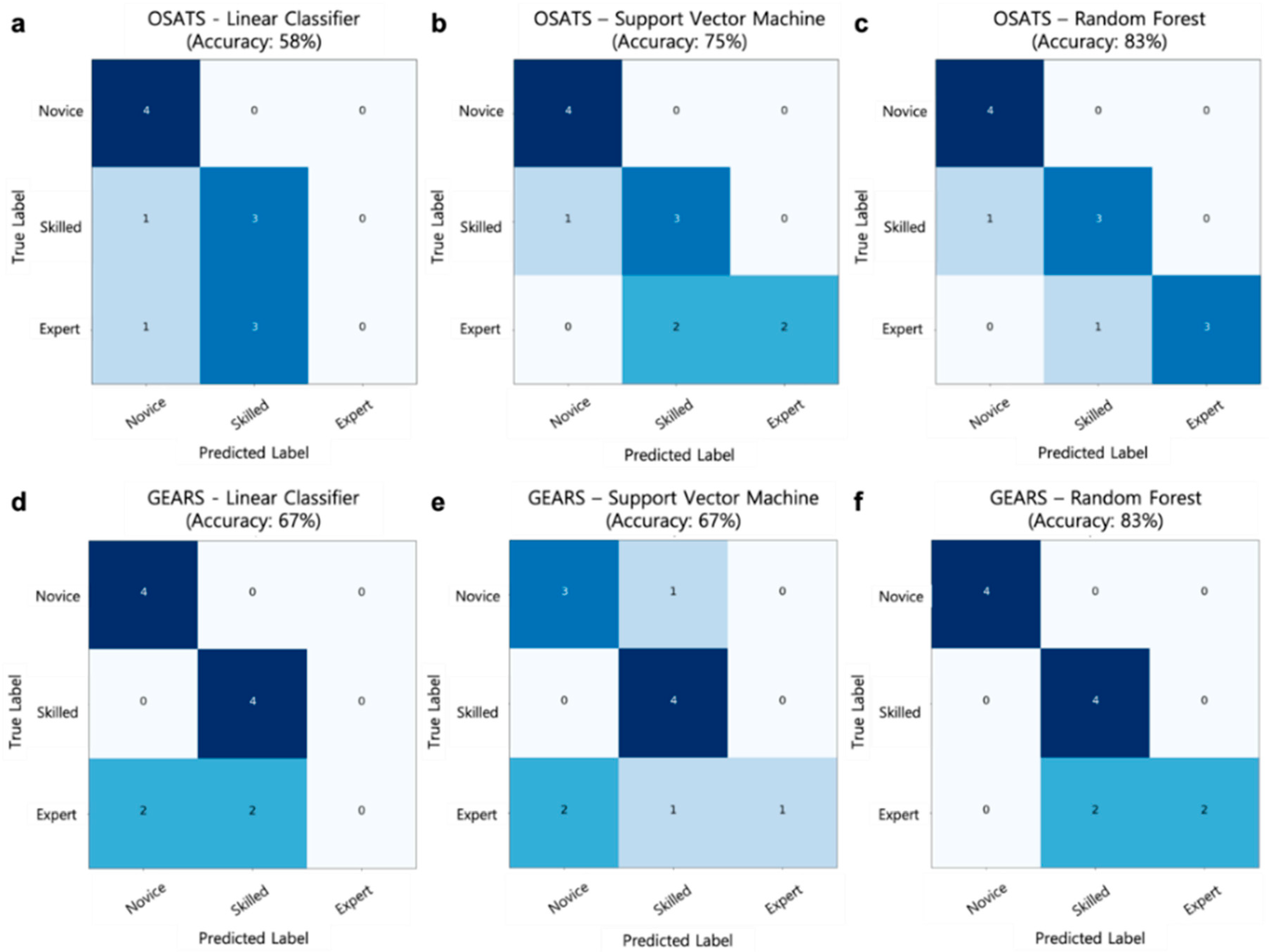

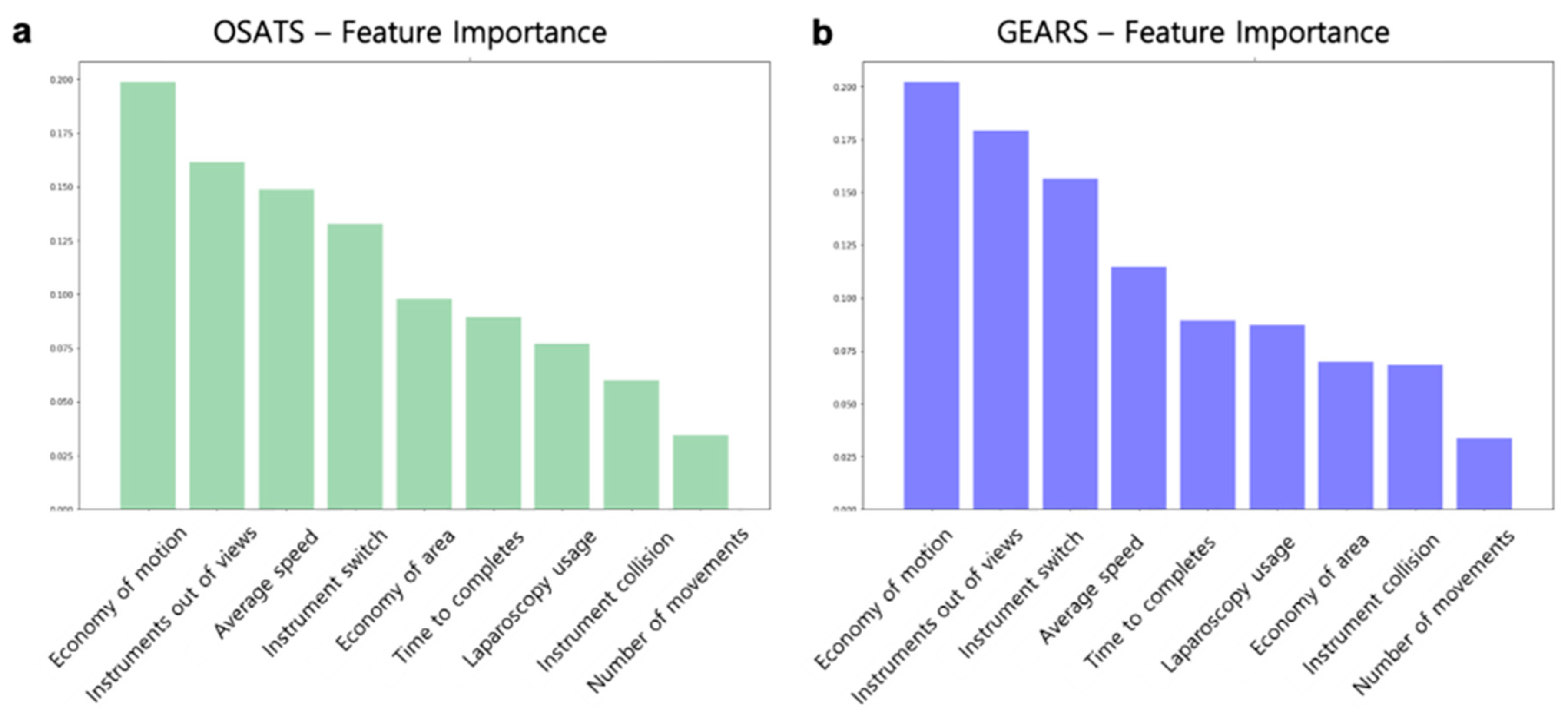

3.4. Performance of the Surgical Skill Prediction Model using Motion Metrics

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

1. Pernar, L.I.; Robertson, F.C.; Tavakkoli, A.; Sheu, E.G.; Brooks, D.C.; Smink, D.S. An appraisal of the learning curve in robotic general surgery. Surg. Endosc. 2017, 31, 4583–4596.

2. Martin, J.; Regehr, G.; Reznick, R.; Macrae, H.; Murnaghan, J.; Hutchison, C.; Brown, M. Objective structured assessment of technical skill (OSATS) for surgical residents. Br. J. Surg. 1997, 84, 273–278.

3. Goh, A.C.; Goldfarb, D.W.; Sander, J.C.; Miles, B.J.; Dunkin, B.J. Global evaluative assessment of robotic skills: Validation of a clinical assessment tool to measure robotic surgical skills. J. Urol. 2012, 187, 247–252.

4. Takeshita, N.; Phee, S.J.; Chiu, P.W.; Ho, K.Y. Global Evaluative Assessment of Robotic Skills in Endoscopy (GEARS-E): Objective assessment tool for master and slave transluminal endoscopic robot. Endosc. Int. Open 2018, 6, 1065–1069.

5. Hilal, Z.; Kumpernatz, A.K.; Rezniczek, G.A.; Cetin, C.; Tempfer-Bentz, E.-K.; Tempfer, C.B. A randomized comparison of video demonstration versus hands-on training of medical students for vacuum delivery using Objective Structured Assessment of Technical Skills (OSATS). Medicine 2017, 96, 11.

6. Ponto, J. Understanding and evaluating survey research. J. Adv. Pract. Oncol. 2015, 6, 168.

7. Reiter, A.; Allen, P.K.; Zhao, T. Articulated surgical tool detection using virtually-rendered templates. In Proceedings of the Computer Assisted Radiology and Surgery (CARS), Pisa, Italy, 27–30 June 2012; pp. 1–8.

8. Mark, J.R.; Kelly, D.C.; Trabulsi, E.J.; Shenot, P.J.; Lallas, C.D. The effects of fatigue on robotic surgical skill training in Urology residents. J. Robot. Surg. 2014, 8, 269–275.

9. Brinkman, W.M.; Luursema, J.-M.; Kengen, B.; Schout, B.M.; Witjes, J.A.; Bekkers, R.L. da Vinci skills simulator for assessing learning curve and criterion-based training of robotic basic skills. Urology 2013, 81, 562–566.

10. Lin, H.C.; Shafran, I.; Yuh, D.; Hager, G.D. Towards automatic skill evaluation: Detection and segmentation of robot-assisted surgical motions. Comput. Aided Surg. 2006, 11, 220–230.

11. Kumar, R.; Jog, A.; Vagvolgyi, B.; Nguyen, H.; Hager, G.; Chen, C.C.G.; Yuh, D. Objective measures for longitudinal assessment of robotic surgery training. J. Thorac. Cardiovasc. Surg. 2012, 143, 528–534.

12. Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.-A. Evaluating surgical skills from kinematic data using convolutional neural networks. arXiv 2018, arXiv:1806.02750.

13. Hung, A.J.; Oh, P.J.; Chen, J.; Ghodoussipour, S.; Lane, C.; Jarc, A.; Gill, I.S. Experts vs super-experts: Differences in automated performance metrics and clinical outcomes for robot-assisted radical prostatectomy. BJU Int. 2019, 123, 861–868.

14. Jun, S.-K.; Narayanan, M.S.; Agarwal, P.; Eddib, A.; Singhal, P.; Garimella, S.; Krovi, V. Robotic minimally invasive surgical skill assessment based on automated video-analysis motion studies. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 25–31.

15. Speidel, S.; Delles, M.; Gutt, C.; Dillmann, R. Tracking of instruments in minimally invasive surgery for surgical skill analysis. In Proceedings of the International Workshop on Medical Imaging and Virtual Reality, Shanghai, China, 17–18 August 2006; pp. 148–155.

16. Ryu, J.; Choi, J.; Kim, H.C. Endoscopic vision-based tracking of multiple surgical instruments during robot-assisted surgery. Artif. Organs 2013, 37, 107–112.

17. Mishra, K.; Sathish, R.; Sheet, D. Learning latent temporal connectionism of deep residual visual abstractions for identifying surgical tools in laparoscopy procedures. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 58–65.

18. Sahu, M.; Mukhopadhyay, A.; Szengel, A.; Zachow, S. Addressing multi-label imbalance problem of surgical tool detection using CNN. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1013–1020.

19. Sarikaya, D.; Corso, J.J.; Guru, K.A. Detection and localization of robotic tools in robot-assisted surgery videos using deep neural networks for region proposal and detection. IEEE Trans. Med. Imaging 2017, 36, 1542–1549.

20. Choi, B.; Jo, K.; Choi, S.; Choi, J. Surgical-tools detection based on Convolutional Neural Network in laparoscopic robot-assisted surgery. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017; pp. 1756–1759.

21. García-Peraza-Herrera, L.C.; Li, W.; Gruijthuijsen, C.; Devreker, A.; Attilakos, G.; Deprest, J.; Vander Poorten, E.; Stoyanov, D.; Vercauteren, T.; Ourselin, S. Real-time segmentation of non-rigid surgical tools based on deep learning and tracking. In Proceedings of the International Workshop on Computer-Assisted and Robotic Endoscopy, Athens, Greece, 17 October 2016; pp. 84–95.

22. Law, H.; Ghani, K.; Deng, J. Surgeon technical skill assessment using computer vision based analysis. In Proceedings of the Machine Learning for Healthcare Conference, Northeastern University, MA, USA, 18–19 August 2017; pp. 88–99.

23. Kurmann, T.; Neila, P.M.; Du, X.; Fua, P.; Stoyanov, D.; Wolf, S.; Sznitman, R. Simultaneous recognition and pose estimation of instruments in minimally invasive surgery. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 10–14 September 2017; pp. 505–513.

24. Twinanda, A.P.; Shehata, S.; Mutter, D.; Marescaux, J.; De Mathelin, M.; Padoy, N. EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 2017, 36, 86–97.

25. Yu, F.; Croso, G.S.; Kim, T.S.; Song, Z.; Parker, F.; Hager, G.D.; Reiter, A.; Vedula, S.S.; Ali, H.; Sikder, S. Assessment of automated identification of phases in videos of cataract surgery using machine learning and deep learning techniques. JAMA Netw. Open 2019, 2, 191860.

26. Khalid, S.; Goldenberg, M.; Grantcharov, T.; Taati, B.; Rudzicz, F. Evaluation of deep learning models for identifying surgical actions and measuring performance. JAMA Netw. Open 2020, 3, 201664.

27. García-Peraza-Herrera, L.C.; Li, W.; Fidon, L.; Gruijthuijsen, C.; Devreker, A.; Attilakos, G.; Deprest, J.; Vander Poorten, E.; Stoyanov, D.; Vercauteren, T. ToolNet: Holistically-nested real-time segmentation of robotic surgical tools. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, Canada, 24–28 September 2017; pp. 5717–5722.

28. Pakhomov, D.; Premachandran, V.; Allan, M.; Azizian, M.; Navab, N. Deep residual learning for instrument segmentation in robotic surgery. arXiv 2017, arXiv:1703.08580.

29. Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

30. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

31. Yu, H.W.; Yi, J.W.; Seong, C.Y.; Kim, J.-k.; Bae, I.E.; Kwon, H.; Chai, Y.J.; Kim, S.-j.; Choi, J.Y.; Lee, K.E. Development of a surgical training model for bilateral axillo-breast approach robotic thyroidectomy. Surg. Endosc. 2018, 32, 1360–1367.

32. Lee, K.E.; Kim, E.; Koo, D.H.; Choi, J.Y.; Kim, K.H.; Youn, Y.-K. Robotic thyroidectomy by bilateral axillo-breast approach: Review of 1026 cases and surgical completeness. Surg. Endosc. 2013, 27, 2955–2962.

33. Oropesa, I.; Sánchez-González, P.; Chmarra, M.K.; Lamata, P.; Fernández, A.; Sánchez-Margallo, J.A.; Jansen, F.W.; Dankelman, J.; Sánchez-Margallo, F.M.; Gómez, E.J. EVA: Laparoscopic instrument tracking based on endoscopic video analysis for psychomotor skills assessment. Surg. Endosc. 2013, 27, 1029–1039.

34. Jin, A.; Yeung, S.; Jopling, J.; Krause, J.; Azagury, D.; Milstein, A.; Fei-Fei, L. Tool detection and operative skill assessment in surgical videos using region-based convolutional neural networks. arXiv 2018, arXiv:1802.08774.

35. Liu, S.Y.-W.; Kim, J.S. Bilateral axillo-breast approach robotic thyroidectomy: Review of evidences. Gland Surg. 2017, 6, 250.

36. He, Q.; Zhu, J.; Zhuang, D.; Fan, Z.; Zheng, L.; Zhou, P.; Yu, F.; Wang, G.; Ni, G.; Dong, X. Robotic lateral cervical lymph node dissection via bilateral axillo-breast approach for papillary thyroid carcinoma: A single-center experience of 260 cases. J. Robot. Surg. 2019, 1–7.

37. Christou, N.; Mathonnet, M. Complications after total thyroidectomy. J. Visc. Surg. 2013, 150, 249–256.

38. Allan, M.; Shvets, A.; Kurmann, T.; Zhang, Z.; Duggal, R.; Su, Y.-H.; Rieke, N.; Laina, I.; Kalavakonda, N.; Bodenstedt, S. 2017 robotic instrument segmentation challenge. arXiv 2019, arXiv:1902.06426.

39. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

40. Wang, G.; Lai, J.; Huang, P.; Xie, X. Spatial-temporal person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8933–8940.

41. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

42. Laina, I.; Rieke, N.; Rupprecht, C.; Vizcaíno, J.P.; Eslami, A.; Tombari, F.; Navab, N. Concurrent segmentation and localization for tracking of surgical instruments. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 11–13 September 2017; pp. 664–672.

43. Shvets, A.; Rakhlin, A.; Kalinin, A.A.; Iglovikov, V. Automatic instrument segmentation in robot-assisted surgery using deep learning. arXiv 2018, arXiv:1803.01207.

44. Bishop, G.; Welch, G. An introduction to the Kalman filter. Proc. SIGGRAPH Course 2001, 8, 41.

45. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

46. Peng, X.; Wang, L.; Wang, X.; Qiao, Y. Bag of visual words and fusion methods for action recognition: Comprehensive study and good practice. Comput. Vis. Image Underst. 2016, 150, 109–125.

47. Yoo, J.-C.; Han, T.H. Fast normalized cross-correlation. Circuits Syst. Signal Process. 2009, 28, 819.

48. Yu, C.; Yang, S.; Kim, W.; Jung, J.; Chung, K.-Y.; Lee, S.W.; Oh, B. Acral melanoma detection using a convolutional neural network for dermoscopy images. PLoS ONE 2018, 13, e0193321.

49. Yamazaki, Y.; Kanaji, S.; Matsuda, T.; Oshikiri, T.; Nakamura, T.; Suzuki, S.; Hiasa, Y.; Otake, Y.; Sato, Y.; Kakeji, Y. Automated surgical instrument detection from laparoscopic gastrectomy video images using an open source convolutional neural network platform. J. Am. Coll. Surg. 2020.

50. Vernez, S.L.; Huynh, V.; Osann, K.; Okhunov, Z.; Landman, J.; Clayman, R.V. C-SATS: Assessing surgical skills among urology residency applicants. J. Endourol. 2017, 31, 95–100.

51. Pagador, J.B.; Sánchez-Margallo, F.M.; Sánchez-Peralta, L.F.; Sánchez-Margallo, J.A.; Moyano-Cuevas, J.L.; Enciso-Sanz, S.; Usón-Gargallo, J.; Moreno, J. Decomposition and analysis of laparoscopic suturing task using tool-motion analysis (TMA): Improving the objective assessment. Int. J. Comput. Assist. Radiol. Surg. 2012, 7, 305–313.

52. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

53. Paisitkriangkrai, S.; Shen, C.; Van Den Hengel, A. Learning to rank in person re-identification with metric ensembles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–12 June 2015; pp. 1846–1855.

54. Lee, D.; Yu, H.W.; Kim, S.; Yoon, J.; Lee, K.; Chai, Y.J.; Choi, J.Y.; Kong, H.-J.; Lee, K.E.; Cho, H.S. Vision-based tracking system for augmented reality to localize recurrent laryngeal nerve during robotic thyroid surgery. Sci. Rep. 2020, 10, 1–7.

55. Reiter, A.; Allen, P.K.; Zhao, T. Appearance learning for 3D tracking of robotic surgical tools. Int. J. Robot. Res. 2014, 33, 342–356.

56. Reiter, A.; Allen, P.K.; Zhao, T. Learning features on robotic surgical tools. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Providence, RI, USA, 16–21 June 2012; pp. 38–43.

57. Nisky, I.; Hsieh, M.H.; Okamura, A.M. The effect of a robot-assisted surgical system on the kinematics of user movements. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 6257–6260.

58. Allan, M.; Ourselin, S.; Thompson, S.; Hawkes, D.J.; Kelly, J.; Stoyanov, D. Toward detection and localization of instruments in minimally invasive surgery. IEEE Trans. Biomed. Eng. 2013, 60, 1050–1058.

59. Allan, M.; Chang, P.-L.; Ourselin, S.; Hawkes, D.J.; Sridhar, A.; Kelly, J.; Stoyanov, D. Image based surgical instrument pose estimation with multi-class labelling and optical flow. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Nice, France, 1–5 October 2012; pp. 331–338.

| Training Dataset | No. of Videos | Total No. of Frames | Bipolar | Forceps | Harmonic | Cautery Hook |
|---|---|---|---|---|---|---|
| BABA training model (Instance Segmentation Framework [39]) | 10 | 84 | 158 | 82 | - | - |
| Patients with thyroid cancer (Instance Segmentation Framework [39]) | 2 | 454 | 311 | 194 | 141 | 311 |
| Public database [38] (Instance Segmentation Framework [39]) | 8 | 1766 | 1451 | 1351 | - | - |
| Patients with thyroid cancer (ST-ReID [40]) | 3 | 253 | 99 | 77 | 81 | 58 |

| ReID Method | BABA Training Model (Rank-1) | Patients with Thyroid Cancer (Rank-1) |
|---|---|---|
| BOVW-ReID [46] | 68.3% | 57.9% |
| ST-ReID [40] | 91.7% | 85.2% |
| BOVW-ReID [46] + ST-ReID [40] | 93.3% | 88.1% |
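
Rank-1 accuracy, as reported for the re-identification methods above, is the fraction of query instrument crops whose single closest gallery crop carries the same identity. The sketch below is a minimal illustration, not the authors' evaluation code: it assumes precomputed feature vectors compared with Euclidean distance, and the function name `rank1_accuracy` and the toy features are hypothetical.

```python
import numpy as np


def rank1_accuracy(query_feats, query_ids, gallery_feats, gallery_ids):
    """Return the fraction of queries whose nearest gallery feature shares their identity.

    Assumption (hypothetical): Euclidean distance between precomputed feature vectors.
    """
    q = np.asarray(query_feats, dtype=float)
    g = np.asarray(gallery_feats, dtype=float)
    # Pairwise Euclidean distances, shape (n_query, n_gallery)
    dists = np.linalg.norm(q[:, None, :] - g[None, :, :], axis=-1)
    # Index of the closest gallery entry for each query (the rank-1 match)
    nearest = np.argmin(dists, axis=1)
    hits = np.asarray(gallery_ids)[nearest] == np.asarray(query_ids)
    return float(np.mean(hits))


# Hypothetical toy features: one gallery crop per instrument identity
gallery = [[0.0, 0.0], [1.0, 1.0]]
gallery_ids = ["bipolar", "forceps"]
queries = [[0.1, 0.0], [0.9, 1.1], [0.6, 0.6]]
query_ids = ["bipolar", "forceps", "bipolar"]
print(rank1_accuracy(queries, query_ids, gallery, gallery_ids))
```

In this toy case the third query lies closer to the forceps gallery crop than to the bipolar one, so only two of three queries are matched correctly and Rank-1 accuracy is 2/3.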

| Test Dataset (No. of Videos) | No. of Frames | RMSE (mm) | AUC (1 mm) | AUC (2 mm) | AUC (5 mm) | Pearson r (x-axis) | Pearson r (y-axis) |
|---|---|---|---|---|---|---|---|
| BABA training model (n = 14) | 125,984 | 2.83 ± 1.34 | 0.73 ± 0.05 | 0.83 ± 0.02 | 0.92 ± 0.02 | 0.93 ± 0.02 | 0.91 ± 0.04 |
| Patients with thyroid cancer (n = 40) | 387,884 | 3.70 ± 2.29 | 0.69 ± 0.04 | 0.76 ± 0.06 | 0.84 ± 0.03 | 0.89 ± 0.03 | 0.86 ± 0.03 |
| Average (n = 54) | 513,868 | 3.52 ± 2.12 | 0.70 ± 0.05 | 0.78 ± 0.06 | 0.86 ± 0.05 | 0.90 ± 0.03 | 0.87 ± 0.04 |
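
The tracking evaluation above reports RMSE, AUC at 1, 2 and 5 mm, and per-axis Pearson r between tracked and ground-truth instrument positions. The section as extracted does not define these metrics, so the sketch below assumes common definitions: per-frame Euclidean error in mm, RMSE over frames, AUC as the normalised area under the success curve (fraction of frames with error at most τ, for τ swept from 0 to the threshold), and Pearson r computed separately on the x and y coordinates. The function name `trajectory_metrics` and the example trajectory are hypothetical.

```python
import numpy as np


def trajectory_metrics(pred, gt, thresholds=(1.0, 2.0, 5.0), steps=1000):
    """Compare a tracked 2-D instrument trajectory with ground truth.

    pred, gt: arrays of shape (n_frames, 2) holding positions in mm.
    Assumed (hypothetical) definitions:
      - RMSE of the per-frame Euclidean error
      - AUC(t): normalised area under the success curve, i.e. the fraction of
        frames with error <= tau, for tau swept from 0 to t
      - Pearson r computed separately on the x and y coordinates
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    err = np.linalg.norm(pred - gt, axis=1)  # per-frame error in mm
    rmse = float(np.sqrt(np.mean(err ** 2)))

    aucs = {}
    for t in thresholds:
        taus = np.linspace(0.0, t, steps)
        # success[i] = fraction of frames whose error is within taus[i]
        success = (err[None, :] <= taus[:, None]).mean(axis=1)
        # Averaging over a uniform grid approximates (area under curve) / t
        aucs[t] = float(success.mean())

    # Pearson correlation per axis (undefined if a coordinate never changes)
    r_x = float(np.corrcoef(pred[:, 0], gt[:, 0])[0, 1])
    r_y = float(np.corrcoef(pred[:, 1], gt[:, 1])[0, 1])
    return rmse, aucs, r_x, r_y


# Hypothetical example: the tracker is off by a constant (0.3, 0.4) mm, i.e. 0.5 mm
gt = np.array([[0.0, 0.0], [1.0, 2.0], [2.0, 1.0], [3.0, 3.0]])
pred = gt + np.array([0.3, 0.4])
rmse, aucs, r_x, r_y = trajectory_metrics(pred, gt)
print(rmse, aucs, r_x, r_y)
```

With a constant 0.5 mm offset, RMSE is 0.5 mm and the AUC values are roughly 0.5, 0.75 and 0.9 for the 1, 2 and 5 mm thresholds, while both per-axis correlations equal 1. Pearson r captures whether the trajectory shape is followed but is blind to a constant positional bias, which RMSE and AUC do capture.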

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, D.; Yu, H.W.; Kwon, H.; Kong, H.-J.; Lee, K.E.; Kim, H.C. Evaluation of Surgical Skills during Robotic Surgery by Deep Learning-Based Multiple Surgical Instrument Tracking in Training and Actual Operations. J. Clin. Med. 2020, 9, 1964. https://doi.org/10.3390/jcm9061964
