Examining the Visual Search Behaviour of Experts When Screening for the Presence of Diabetic Retinopathy in Fundus Images

Abstract

1. Introduction

2. Materials and Methods

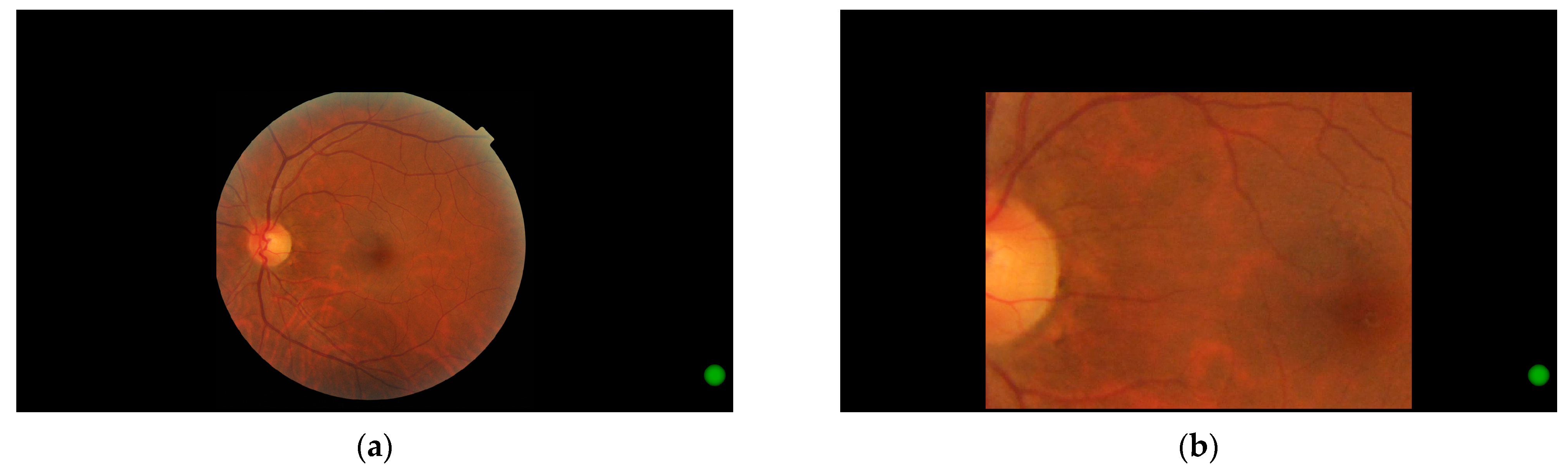

2.1. Software

2.2. Participants

2.3. Grading Analysis

- A score of 0 was considered correct, with all others considered incorrect;

- Scores of 0 or 1 were considered correct, with all other responses regarded as incorrect;

- Images and responses were converted to referable and not referable bins, with referable defined as more than mild non-proliferative diabetic retinopathy, in keeping with other studies [34]. Scores were then calculated using this modified criterion.
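The three scoring criteria above can be expressed compactly in code. The sketch below is illustrative only. It assumes that grades are coded as integers from 0 (no DR) to 4 (proliferative DR), following the five severity levels used in the results tables, and that a response's score is the absolute difference between the participant's grade and the reference grade. The encoding and all names (`DRGrade`, `grade_score`, `is_referable`, `proportion_correct`, etc.) are hypothetical and are not taken from the study's analysis software.

```python
from enum import IntEnum
from typing import Callable, Iterable, Tuple


class DRGrade(IntEnum):
    """Assumed integer coding of the five severity levels."""
    NO_DR = 0
    MILD_NPDR = 1
    MODERATE_NPDR = 2
    SEVERE_NPDR = 3
    PDR = 4


def grade_score(response: DRGrade, reference: DRGrade) -> int:
    """Assumed score: absolute distance between response and reference grade."""
    return abs(int(response) - int(reference))


def is_referable(grade: DRGrade) -> bool:
    """Referable = worse than mild NPDR, i.e. moderate NPDR or above."""
    return grade > DRGrade.MILD_NPDR


def correct_exact(response: DRGrade, reference: DRGrade) -> bool:
    # Criterion 1: only a score of 0 counts as correct.
    return grade_score(response, reference) == 0


def correct_within_one(response: DRGrade, reference: DRGrade) -> bool:
    # Criterion 2: scores of 0 or 1 count as correct.
    return grade_score(response, reference) <= 1


def correct_referable(response: DRGrade, reference: DRGrade) -> bool:
    # Criterion 3: collapse both grades into referable / not referable bins,
    # then count the response as correct if the bins agree.
    return is_referable(response) == is_referable(reference)


def proportion_correct(
    pairs: Iterable[Tuple[DRGrade, DRGrade]],
    criterion: Callable[[DRGrade, DRGrade], bool],
) -> float:
    """Fraction of (response, reference) pairs judged correct under `criterion`."""
    pairs = list(pairs)
    return sum(criterion(resp, ref) for resp, ref in pairs) / len(pairs)
```

For example, grading a severe NPDR image as moderate NPDR is incorrect under the first criterion but correct under the second and third, whereas grading a moderate NPDR image as mild NPDR is correct only under the second.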

2.4. Zoom Behaviour Analysis

- Measured gaze position was converted to image pixel coordinates within the visible region.

- Corresponding coordinates on the original image were calculated from this position.

- Gaze position in the raw data was overwritten with these new coordinates.
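The three-step remapping above amounts to a linear transform, applied per zoom state, from screen coordinates to original-image coordinates. A minimal sketch follows. It assumes that the viewer shows a rectangular region of the original image scaled to fill a fixed on-screen panel, and that gaze is recorded in screen pixels. The `Viewport` fields and the `screen_to_image` and `remap_samples` helpers are hypothetical illustrations, not part of the study's software.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Viewport:
    """Hypothetical description of one zoom state.

    display_*: where the image panel sits on screen (screen pixels).
    region_*: which part of the original image is visible (original pixels).
    """
    display_x: float
    display_y: float
    display_w: float
    display_h: float
    region_x: float
    region_y: float
    region_w: float
    region_h: float


def screen_to_image(gx: float, gy: float, vp: Viewport) -> Optional[Tuple[float, float]]:
    # Step 1: express gaze relative to the image panel (visible-region pixels).
    px = gx - vp.display_x
    py = gy - vp.display_y
    if not (0 <= px < vp.display_w and 0 <= py < vp.display_h):
        return None  # gaze fell outside the visible image
    # Step 2: rescale into the visible region and offset to original-image coordinates.
    ix = vp.region_x + px * vp.region_w / vp.display_w
    iy = vp.region_y + py * vp.region_h / vp.display_h
    return ix, iy


def remap_samples(samples: List[dict], viewports: List[Viewport]) -> List[dict]:
    # Step 3: replace each sample's gaze position with its original-image position.
    # Samples and viewports are paired one-to-one; input dicts are copied, not mutated.
    remapped = []
    for sample, vp in zip(samples, viewports):
        pos = screen_to_image(sample["x"], sample["y"], vp)
        new = dict(sample)
        new["x"], new["y"] = pos if pos is not None else (None, None)
        remapped.append(new)
    return remapped
```

Separate horizontal and vertical scale factors keep the mapping valid if the visible region and the display panel differ in aspect ratio. Samples landing outside the visible image are set to `None` here purely for illustration.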

2.5. Eye Movement Analysis

3. Results

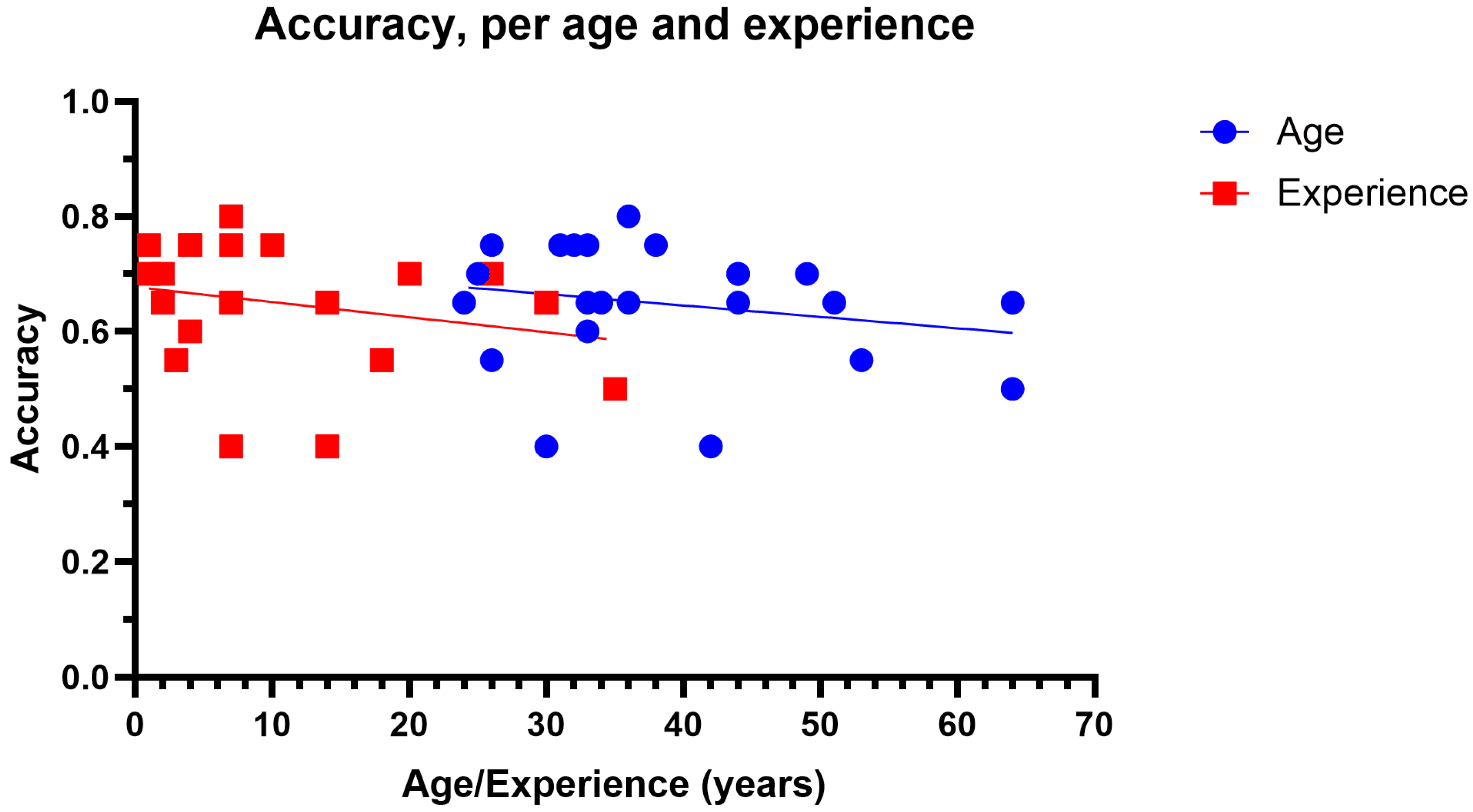

3.1. Grading Accuracy

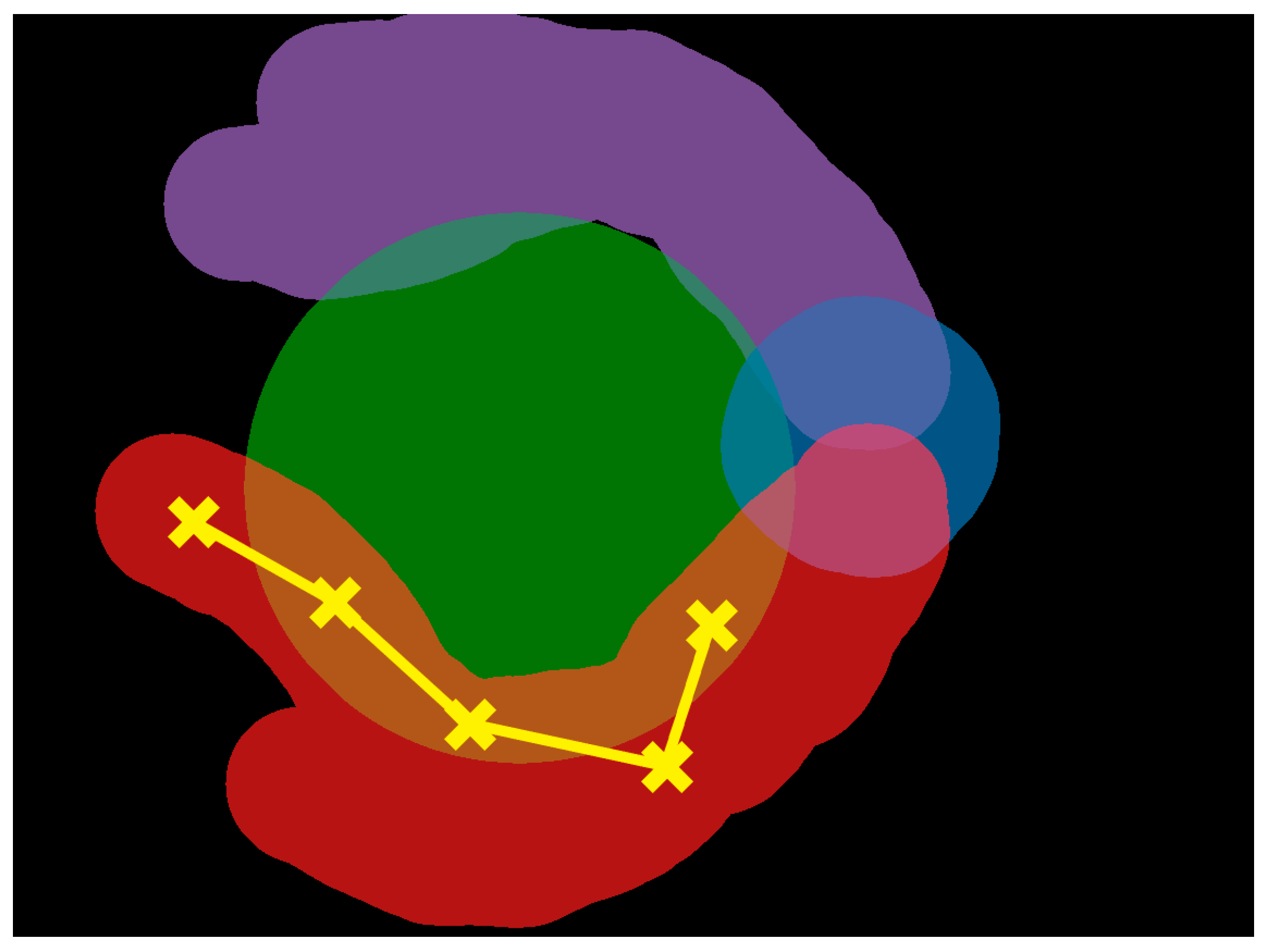

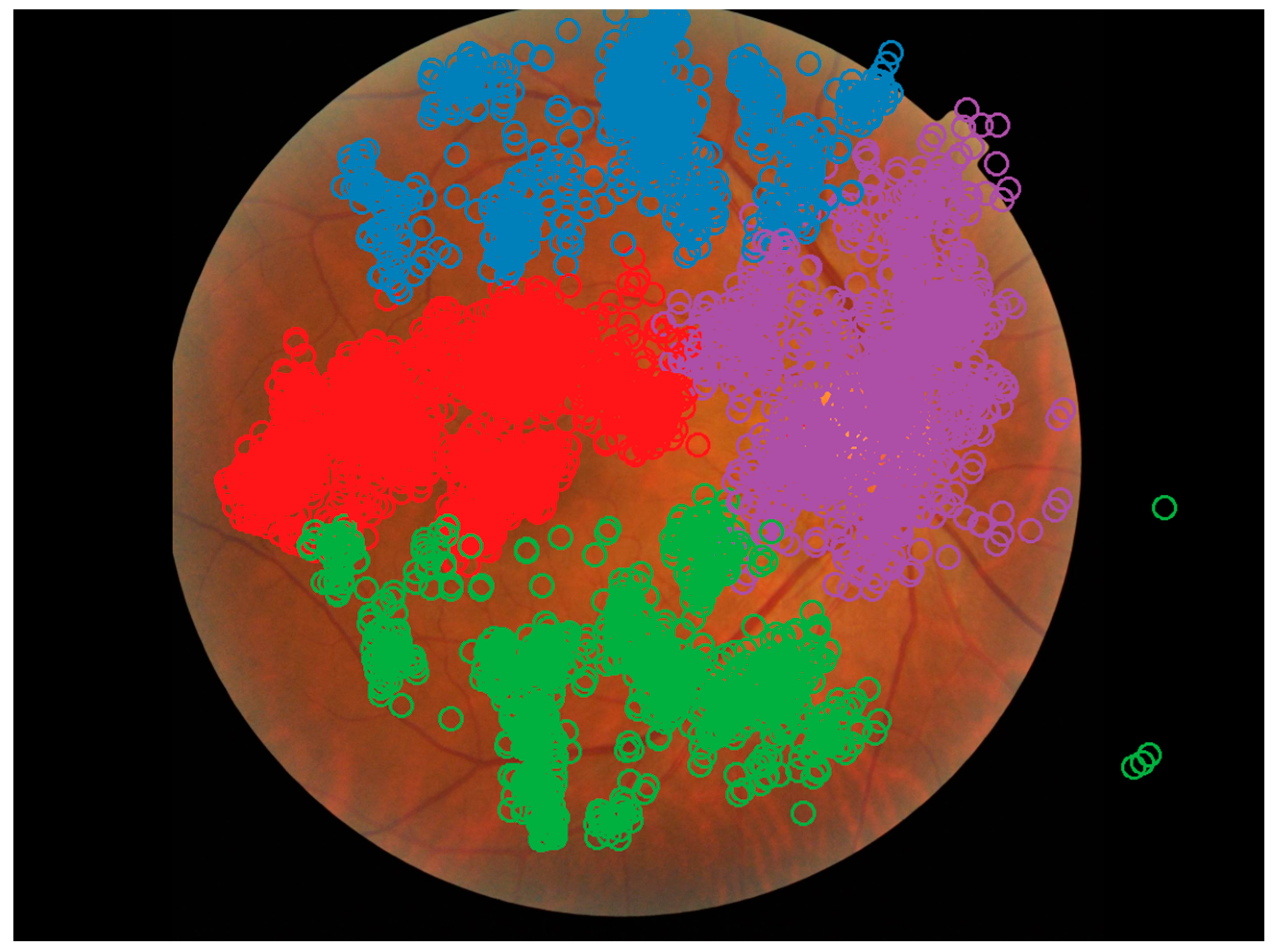

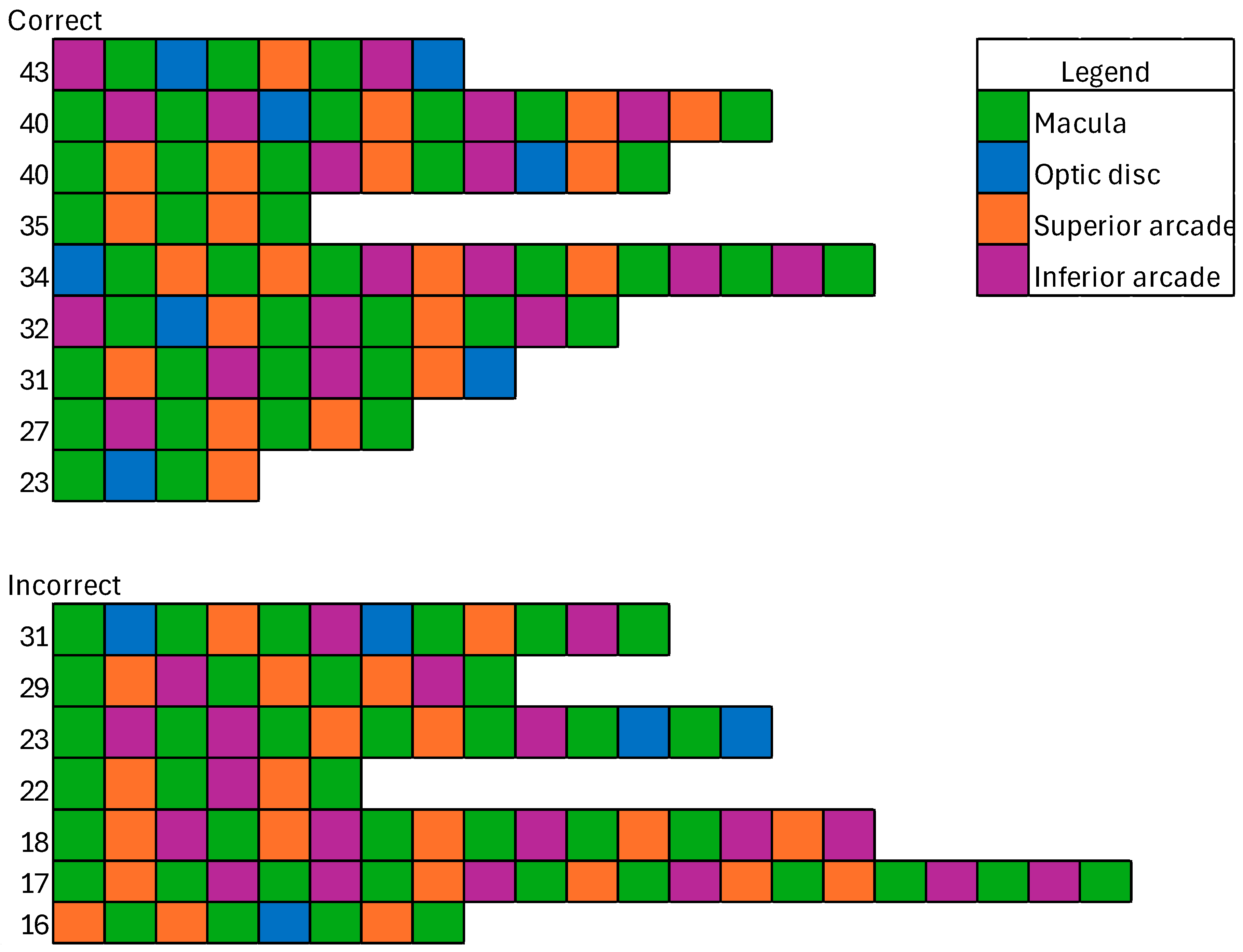

3.2. Areas of Interest

3.3. Zoom Behaviour

3.4. Eye Movements

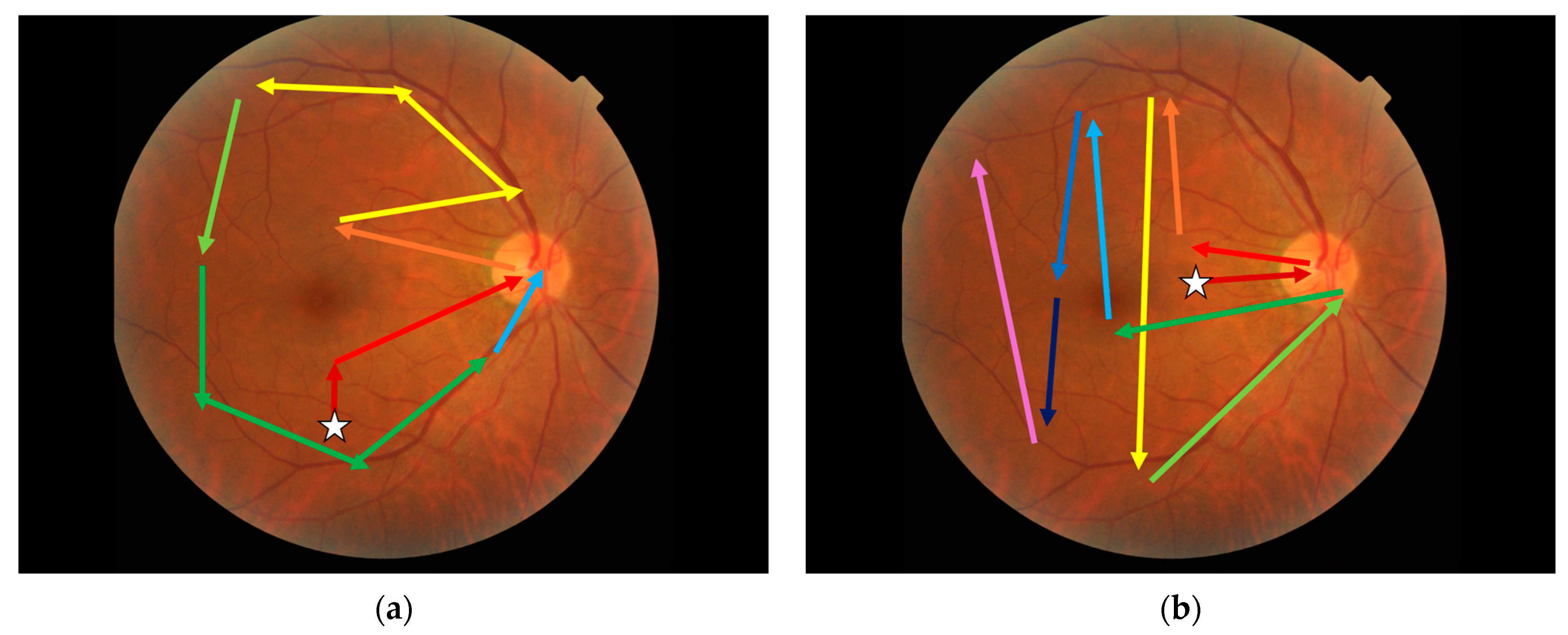

3.5. Visual Search Patterns

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. International Diabetes Federation. IDF Diabetes Atlas, 10th ed.; International Diabetes Federation: Brussels, Belgium, 2021; 141p. Available online: https://www.diabetesatlas.org/ (accessed on 9 June 2023).

2. Flaxman, S.R.; Bourne, R.R.; Resnikoff, S.; Ackland, P.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; et al. Global causes of blindness and distance vision impairment 1990–2020: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e1221–e1234.

3. McKay, R.; McCarty, C.A.; Taylor, H.R. Diabetic retinopathy in Victoria, Australia: The Visual Impairment Project. Br. J. Ophthalmol. 2000, 84, 865–870.

4. Wilkinson, C.; Ferris, F.L.; Klein, R.E.; Lee, P.P.; Agardh, C.D.; Davis, M.; Dills, D.; Kampik, A.; Pararajasegaram, R.; Verdaguer, J.T. Proposed international clinical diabetic retinopathy and diabetic macular edema disease severity scales. Ophthalmology 2003, 110, 1677–1682.

5. Early Treatment Diabetic Retinopathy Study Research Group. Grading Diabetic Retinopathy from Stereoscopic Color Fundus Photographs—An Extension of the Modified Airlie House Classification. Ophthalmology 1991, 98, 786–806.

6. Mitchell, P.; Foran, S.; Wong, T.Y.; Chua, B.; Patel, I.; Ojaimi, E.; Foran, J. Guidelines for the Management of Diabetic Retinopathy; National Health and Medical Research Council; Commonwealth of Australia: Canberra, Australia, 2008.

7. Wong, T.Y.; Sun, J.; Kawasaki, R.; Ruamviboonsuk, P.; Gupta, N.; Lansingh, V.C.; Maia, M.; Mathenge, W.; Moreker, S.; Muqit, M.M.; et al. Guidelines on Diabetic Eye Care. Ophthalmology 2018, 125, 1608–1622.

8. Public Health England. Diabetic Eye Screening Standards Valid for Data Collected from 1 April 2019 [Internet]. 2021. Available online: https://www.gov.uk/government/publications/diabetic-eye-screening-programme-standards/diabetic-eye-screening-standards-valid-for-data-collected-from-1-april-2019 (accessed on 5 December 2023).

9. Optometry Australia. Clinical Guideline: Examination and Management of Patients with Diabetes [Internet]. 2018. Available online: https://www.optometry.org.au/wp-content/uploads/Professional_support/Guidelines/clinical_guideline_diabetes_revised_sept_2018_final_designed.pdf (accessed on 28 September 2021).

10. Schmid, K.L.; Swann, P.G.; Pedersen, C.; Schmid, L.M. The detection of diabetic retinopathy by Australian optometrists. Clin. Exp. Optom. 2002, 85, 221–228.

11. Krause, J.; Gulshan, V.; Rahimy, E.; Karth, P.; Widner, K.; Corrado, G.S.; Peng, L.; Webster, D.R. Grader Variability and the Importance of Reference Standards for Evaluating Machine Learning Models for Diabetic Retinopathy. Ophthalmology 2018, 125, 1264–1272.

12. Idris, I.; Sellahewa, L.; Simpson, C.; Maharajan, P.; Duffy, J. Grader agreement, and sensitivity and specificity of digital photography in a community optometry-based diabetic eye screening program. Clin. Ophthalmol. 2014, 8, 1345–1349.

13. Teoh, C.S.; Wong, K.H.; Xiao, D.; Wong, H.C.; Zhao, P.; Chan, H.W.; Yuen, Y.S.; Naing, T.; Yogesan, K.; Koh, V.T.C. Variability in Grading Diabetic Retinopathy Using Retinal Photography and Its Comparison with an Automated Deep Learning Diabetic Retinopathy Screening Software. Healthcare 2023, 11, 1697.

14. Fahadullah, M.; Memon, N.A.; Salim, S.; Ahsan, S.; Fahim, M.F.; Mumtaz, S.N.; Shaikh, S.A.; Memon, M.S. Diagnostic accuracy of non-mydriatic fundus camera for screening of diabetic retinopathy: A hospital based observational study in Pakistan. J. Pak. Med. Assoc. 2019, 69, 378–382.

15. Sallam, A.; Scanlon, P.H.; Stratton, I.M.; Jones, V.; Martin, C.N.; Brelen, M.; Johnston, R.L. Agreement and reasons for disagreement between photographic and hospital biomicroscopy grading of diabetic retinopathy. Diabet. Med. 2011, 28, 741–746.

16. Fingeret, M.; Medeiros, F.A.; Susanna, R.; Weinreb, R.N. Five rules to evaluate the optic disc and retinal nerve fiber layer for glaucoma. Optom.-J. Am. Optom. Assoc. 2005, 76, 661–668.

17. Yoshioka, N.; Wong, E.; Kalloniatis, M.; Yapp, M.; Hennessy, M.P.; Agar, A.; Healey, P.R.; Hayen, A.; Zangerl, B. Influence of education and diagnostic modes on glaucoma assessment by optometrists. Ophthalmic Physiol. Opt. 2015, 35, 682–698.

18. Leong, J.; Nicolaou, M.; Emery, R.; Darzi, A.; Yang, G.-Z. Visual search behaviour in skeletal radiographs: A cross-speciality study. Clin. Radiol. 2007, 62, 1069–1077.

19. Uchida, S.; Hiraoka, S.-I.; Kawamura, K.; Sakamoto, K.; Akiyama, R.; Tanaka, S. Machine Learning Analysis of Gaze Data for Enhanced Precision in Diagnosing Oral Mucosal Diseases. J. Clin. Med. 2023, 13, 136.

20. Krupinski, E.A.; Chao, J.; Hofmann-Wellenhof, R.; Morrison, L.; Curiel-Lewandrowski, C. Understanding Visual Search Patterns of Dermatologists Assessing Pigmented Skin Lesions Before and After Online Training. J. Digit. Imaging 2014, 27, 779–785.

21. Drew, T.; Lavelle, M.; Kerr, K.F.; Shucard, H.; Brunyé, T.T.; Weaver, D.L.; Elmore, J.G. More scanning, but not zooming, is associated with diagnostic accuracy in evaluating digital breast pathology slides. J. Vis. 2021, 21, 7.

22. Indian Retina Research Associates (IRRA); Verma, A.; Alagorie, A.R.; Ramasamy, K.; van Hemert, J.; Yadav, N.; Pappuru, R.R.; Tufail, A.; Nittala, M.G.; Sadda, S.R.; et al. Distribution of peripheral lesions identified by mydriatic ultra-wide field fundus imaging in diabetic retinopathy. Graefe’s Arch. Clin. Exp. Ophthalmol. 2020, 258, 725–733.

23. Silva, P.S.; Cavallerano, J.D.; Sun, J.K.; Soliman, A.Z.; Aiello, L.M.; Aiello, L.P. Peripheral Lesions Identified by Mydriatic Ultrawide Field Imaging: Distribution and Potential Impact on Diabetic Retinopathy Severity. Ophthalmology 2013, 120, 2587–2595.

24. Murphy, T.I.; Douglass, A.G.; van Wijngaarden, P.; Armitage, J.A. Programmatically Localizing Diabetic Retinopathy Features in 45-Degree Retinal Photographs Using Anatomical Colocation. J. Clin. Med. 2024, 13, 807.

25. Coutrot, A.; Hsiao, J.H.; Chan, A.B. Scanpath modeling and classification with hidden Markov models. Behav. Res. Methods 2018, 50, 362–379.

26. Al-Moteri, M.O.; Symmons, M.; Plummer, V.; Cooper, S. Eye tracking to investigate cue processing in medical decision-making: A scoping review. Comput. Hum. Behav. 2017, 66, 52–66.

27. Brockmole, J.R.; Henderson, J.M. Short Article: Recognition and Attention Guidance during Contextual Cueing in Real-World Scenes: Evidence from Eye Movements. Q. J. Exp. Psychol. 2006, 59, 1177–1187.

28. O’Neill, E.C.; Kong, Y.X.G.; Connell, P.P.; Ni Ong, D.; Haymes, S.A.; Coote, M.A.; Crowston, J.G. Gaze Behavior among Experts and Trainees during Optic Disc Examination: Does How We Look Affect What We See? Investig. Ophthalmol. Vis. Sci. 2011, 52, 3976–3983.

29. Rangrej, S.B.; Sivaswamy, J.; Srivastava, P. Scan, dwell, decide: Strategies for detecting abnormalities in diabetic retinopathy. PLoS ONE 2018, 13, e0207086.

30. Kok, E.M.; Jarodzka, H. Before your very eyes: The value and limitations of eye tracking in medical education. Med. Educ. 2017, 51, 114–122.

31. Rajalakshmi, R.; Prathiba, V.; Arulmalar, S.; Usha, M. Review of retinal cameras for global coverage of diabetic retinopathy screening. Eye 2021, 35, 162–172.

32. Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 2019, 501, 511–522.

33. Murphy, T.I.; Abel, L.A.; Armitage, J.A.; Douglass, A.G. Effects of tracker location on the accuracy and precision of the Gazepoint GP3 HD for spectacle wearers. Behav. Res. Methods 2024, 56, 43–52.

34. Bellemo, V.; Lim, Z.W.; Lim, G.; Nguyen, Q.D.; Xie, Y.; Yip, M.Y.T.; Hamzah, H.; Ho, J.; Lee, X.Q.; Hsu, W.; et al. Artificial intelligence using deep learning to screen for referable and vision-threatening diabetic retinopathy in Africa: A clinical validation study. Lancet Digit. Health 2019, 1, e35–e44.

35. Krippendorff, K. Estimating the Reliability, Systematic Error and Random Error of Interval Data. Educ. Psychol. Meas. 1970, 30, 61–70.

36. Heeman, J.; Theeuwes, J.; Van der Stigchel, S. The time course of top-down control on saccade averaging. Vis. Res. 2014, 100, 29–37.

37. Frey, B.J.; Dueck, D. Clustering by Passing Messages Between Data Points. Science 2007, 315, 972–976.

38. Levenshtein, V.I. Binary codes capable of correcting deletions, insertions, and reversals. Sov. Phys. Dokl. 1966, 10, 707–710.

39. Wedel, M.; Yan, J.; Smith, P.; Siegel, E.; Li, H.A. A Hidden Markov Model to Identify Regions of Interest from Eye Movements, with an Application to Nodule Detection in Chest X-Rays. SSRN Electron. J. 2014. Available online: https://cir.nii.ac.jp/crid/1363107371198844160 (accessed on 7 July 2023).

40. Wang, Y.T.; Tadarati, M.; Wolfson, Y.; Bressler, S.B.; Bressler, N.M. Comparison of Prevalence of Diabetic Macular Edema Based on Monocular Fundus Photography vs. Optical Coherence Tomography. JAMA Ophthalmol. 2016, 134, 222–227.

41. Marzi, G.; Balzano, M.; Marchiori, D. K-Alpha Calculator–Krippendorff’s Alpha Calculator: A user-friendly tool for computing Krippendorff’s Alpha inter-rater reliability coefficient. MethodsX 2024, 12, 102545.

42. Jonas, R.A.; Wang, Y.X.; Yang, H.; Li, J.J.; Xu, L.; Panda-Jonas, S.; Jonas, J.B. Optic Disc—Fovea Distance, Axial Length and Parapapillary Zones. The Beijing Eye Study 2011. PLoS ONE 2015, 10, e0138701.

43. Nowroozzadeh, M.H.; Moshksar, S.; Azimi, A.; Rasti, A.; Sedaghat, A. Comparison of retinal vascular arcade trajectory between eyes with an idiopathic macular hole and the healthy fellow eye. Int. Ophthalmol. 2022, 42, 2219–2225.

44. Murphy, T.; Armitage, J.; van Wijngaarden, P.; Abel, L.; Douglass, A. Unmasking visual search: An objective framework for grouping eye tracking data. Investig. Ophthalmol. Vis. Sci. 2024, 65, 5179.

45. Munuera-Gifre, E.; Saez, M.; Juvinyà-Canals, D.; Rodríguez-Poncelas, A.; Barrot-De-La-Puente, J.; Franch-Nadal, J.; Romero-Aroca, P.; Barceló, M.A.; Coll-De-Tuero, G. Analysis of the location of retinal lesions in central retinographies of patients with Type 2 diabetes. Acta Ophthalmol. 2020, 98, E13–E21.

46. Li, X.; Xie, J.; Zhang, L.; Cui, Y.; Zhang, G.; Wang, J.; Zhang, A.; Chen, X.; Huang, T.; Meng, Q. Differential distribution of manifest lesions in diabetic retinopathy by fundus fluorescein angiography and fundus photography. BMC Ophthalmol. 2020, 20, 471.

| Profession | Age (years): Mean | Age (years): Min | Age (years): Max | Age (years): SD | Clinical experience (years): Mean | Clinical experience (years): Min | Clinical experience (years): Max | Clinical experience (years): SD |
|---|---|---|---|---|---|---|---|---|
| Ophthalmologist | 48 | 33 | 64 | 11.40 | 16.5 | 1 | 35 | 11.86 |
| Optometrist | 33.9 | 24 | 51 | 8.13 | 8.5 | 1 | 30 | 8.55 |

| Criterion | Severity | Optometrist | Ophthalmologist | Mann–Whitney U |
|---|---|---|---|---|
| Referable | All images | 0.857 (±0.332) | 0.875 (±0.332) | U = 23,560.0, p = 0.59 |
| | Not referable | 0.820 (±0.385) | 0.861 (±0.348) | U = 5683.5, p = 0.43 |
| | Referable | 0.893 (±0.310) | 0.923 (±0.268) | U = 5676.0, p = 0.47 |
| Grade score = 0 or 1 | All images | 0.807 (±0.396) | 0.813 (±0.392) | U = 23,860.0, p = 0.88 |
| | No DR | 0.983 (±0.129) | 1.000 (±0.000) | U = 914.5, p = 0.49 |
| | Mild NPDR | 0.922 (±0.269) | 0.896 (±0.309) | U = 2217.0, p = 0.60 |
| | Moderate NPDR | 0.578 (±0.499) | 0.625 (±0.495) | U = 514.5, p = 0.71 |
| | Severe NPDR | 0.756 (±0.435) | 0.625 (±0.495) | U = 610.5, p = 0.26 |
| | Proliferative DR | 0.667 (±0.475) | 0.867 (±0.346) | U = 720.0, p = 0.04 |
| Grade score = 0 | All images | 0.653 (±0.477) | 0.638 (±0.482) | U = 24,380.0, p = 0.74 |
| | No DR | 0.800 (±0.403) | 0.806 (±0.402) | U = 924, p = 0.95 |
| | Mild NPDR | 0.667 (±0.474) | 0.688 (±0.468) | U = 2115.0, p = 0.47 |
| | Moderate NPDR | 0.444 (±0.503) | 0.292 (±0.464) | U = 622.5, p = 0.22 |
| | Severe NPDR | 0.622 (±0.490) | 0.458 (±0.509) | U = 628.5, p = 0.20 |
| | Proliferative DR | 0.667 (±0.475) | 0.867 (±0.346) | U = 720.0, p = 0.04 |

| AOI (fixation count) | Correct: Optometrist | Correct: Ophthalmologist | Correct: Mann–Whitney U | Incorrect: Optometrist | Incorrect: Ophthalmologist | Incorrect: Mann–Whitney U |
|---|---|---|---|---|---|---|
| All | 18.93 (±18.32) | 14.60 (±16.09) | U = 275,265.5, p < 0.01 | 20.35 (±19.46) | 16.80 (±17.56) | U = 79,749.5, p < 0.01 |
| Arcade superior | 17.04 (±12.43) | 13.97 (±12.87) | U = 11,512.5, p < 0.01 | 19.52 (±17.62) | 16.69 (±17.62) | U = 3435.5, p = 0.02 |
| Arcade inferior | 16.44 (±12.85) | 11.34 (±9.80) | U = 11,743.5, p < 0.01 | 17.88 (±14.62) | 14.47 (±12.55) | U = 3348.5, p = 0.08 |
| Optic disc | 10.46 (±8.23) | 9.12 (±7.39) | U = 10,002.5, p = 0.08 | 10.14 (±9.44) | 11.89 (±14.62) | U = 3046.5, p = 0.38 |
| Macula | 36.94 (±24.99) | 28.54 (±23.29) | U = 12,411.0, p < 0.01 | 42.97 (±22.07) | 30.84 (±22.93) | U = 3922.0, p < 0.01 |

| AOI (visits) | Correct: Optometrist | Correct: Ophthalmologist | Correct: Mann–Whitney U | Incorrect: Optometrist | Incorrect: Ophthalmologist | Incorrect: Mann–Whitney U |
|---|---|---|---|---|---|---|
| All | 6.36 (±4.21) | 4.40 (±2.88) | U = 297,322.5, p < 0.01 | 6.30 (±4.03) | 5.07 (±3.33) | U = 83,294.0, p < 0.01 |
| Arcade superior | 6.35 (±3.81) | 4.56 (±3.09) | U = 12,252.0, p < 0.01 | 6.56 (±3.93) | 5.35 (±3.30) | U = 3337.5, p = 0.05 |
| Arcade inferior | 6.16 (±4.10) | 4.51 (±3.13) | U = 11,695.0, p < 0.01 | 6.04 (±3.94) | 5.00 (±3.43) | U = 3342.5, p = 0.08 |
| Optic disc | 5.06 (±3.15) | 3.70 (±2.15) | U = 11,065.5, p < 0.01 | 4.72 (±3.21) | 3.94 (±2.86) | U = 3320.5, p = 0.06 |
| Macula | 8.52 (±4.72) | 5.47 (±3.01) | U = 13,931.0, p < 0.01 | 8.80 (±4.41) | 6.47 (±3.56) | U = 38,469.0, p < 0.01 |

| AOI (total time, seconds) | Correct: Optometrist | Correct: Ophthalmologist | Correct: Mann–Whitney U | Incorrect: Optometrist | Incorrect: Ophthalmologist | Incorrect: Mann–Whitney U |
|---|---|---|---|---|---|---|
| All | 6.00 (±5.88) | 4.99 (±5.60) | U = 260,404.0, p < 0.01 | 6.71 (±7.05) | 5.71 (±5.52) | U = 74,272.0, p = 0.14 |
| Arcade superior | 5.08 (±3.78) | 4.24 (±3.65) | U = 11,092.0, p = 0.02 | 6.03 (±5.24) | 4.94 (±4.33) | U = 3221.0, p = 0.13 |
| Arcade inferior | 5.13 (±3.83) | 4.06 (±3.62) | U = 10,991.0, p = 0.01 | 6.00 (±5.15) | 5.22 (±4.45) | U = 3122.0, p = 0.34 |
| Optic disc | 3.20 (±2.51) | 2.99 (±2.31) | U = 9296.0, p = 0.53 | 3.17 (±3.18) | 3.88 (±4.78) | U = 2833.0, p = 0.93 |
| Macula | 12.30 (±7.93) | 10.15 (±8.33) | U = 11,788.0, p < 0.01 | 14.84 (±9.14) | 10.87 (±6.91) | U = 3667.0, p < 0.01 |

| AOI (dwell time, seconds) | Correct: Optometrist | Correct: Ophthalmologist | Correct: Mann–Whitney U | Incorrect: Optometrist | Incorrect: Ophthalmologist | Incorrect: Mann–Whitney U |
|---|---|---|---|---|---|---|
| All | 1.00 (±0.98) | 1.04 (±1.01) | U = 17,374,140.0, p = 0.02 | 1.06 (±1.13) | 1.19 (±1.19) | U = 4,852,876.5, p < 0.01 |
| Arcade superior | 0.87 (±0.89) | 0.90 (±0.95) | U = 1,110,123.0, p = 0.31 | 0.97 (±0.96) | 0.81 (±0.73) | U = 364,432.5, p = 0.24 |
| Arcade inferior | 1.03 (±0.87) | 1.09 (±0.88) | U = 856,611.0, p = 0.01 | 1.04 (±1.00) | 1.23 (±1.02) | U = 211,855.5, p < 0.01 |
| Optic disc | 1.54 (±1.33) | 1.56 (±1.46) | U = 205,146.0, p = 0.55 | 1.63 (±1.53) | 1.99 (±1.81) | U = 49,374.0, p = 0.01 |
| Macula | 0.94 (±0.94) | 0.95 (±0.90) | U = 3,043,863.0, p = 0.59 | 1.03 (±1.16) | 1.16 (±1.16) | U = 852,885.0, p < 0.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Murphy, T.I.; Armitage, J.A.; Abel, L.A.; van Wijngaarden, P.; Douglass, A.G. Examining the Visual Search Behaviour of Experts When Screening for the Presence of Diabetic Retinopathy in Fundus Images. J. Clin. Med. 2025, 14, 3046. https://doi.org/10.3390/jcm14093046
