AI-Based Prediction of Bone Conduction Thresholds Using Air Conduction Audiometry Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection and Participants

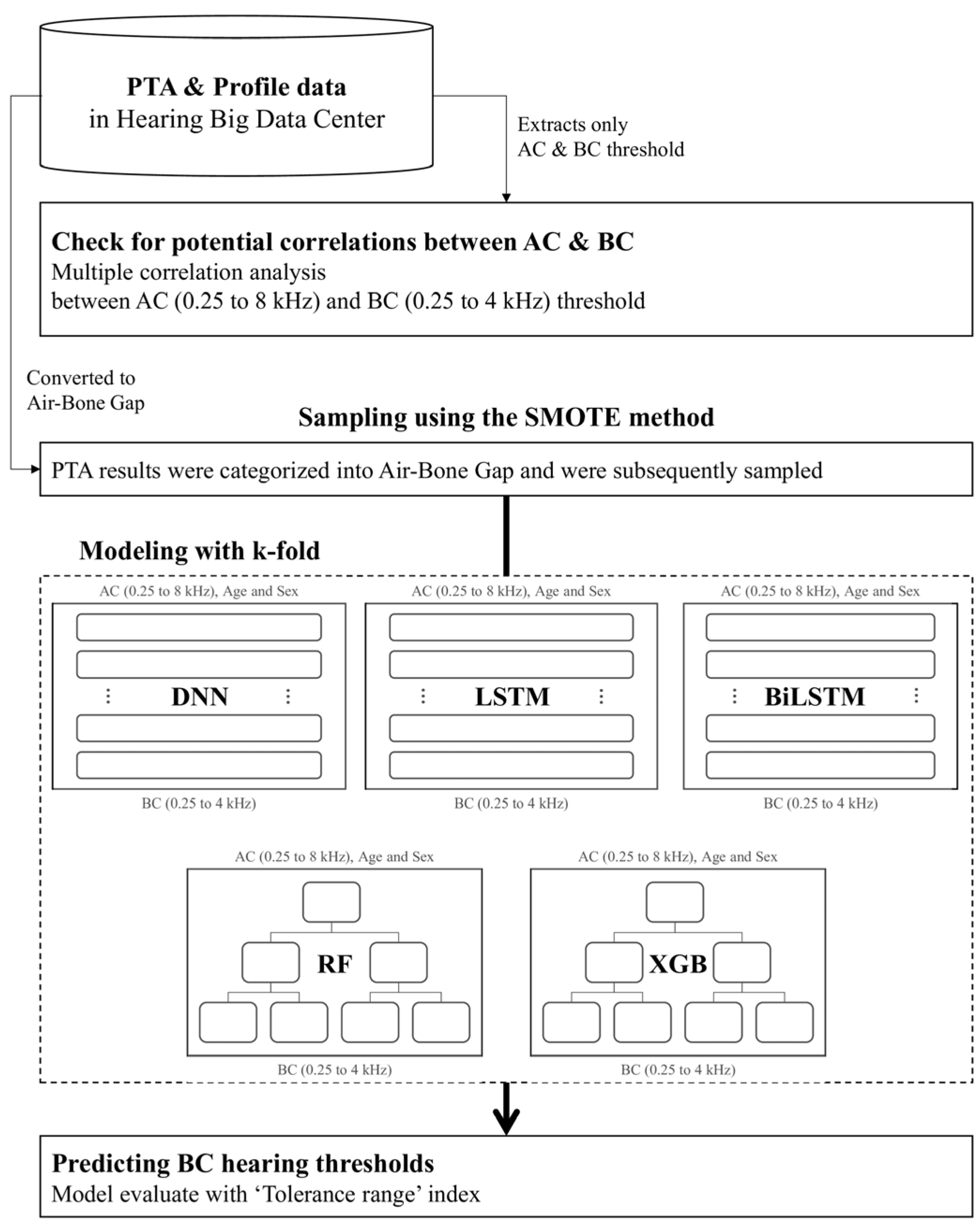

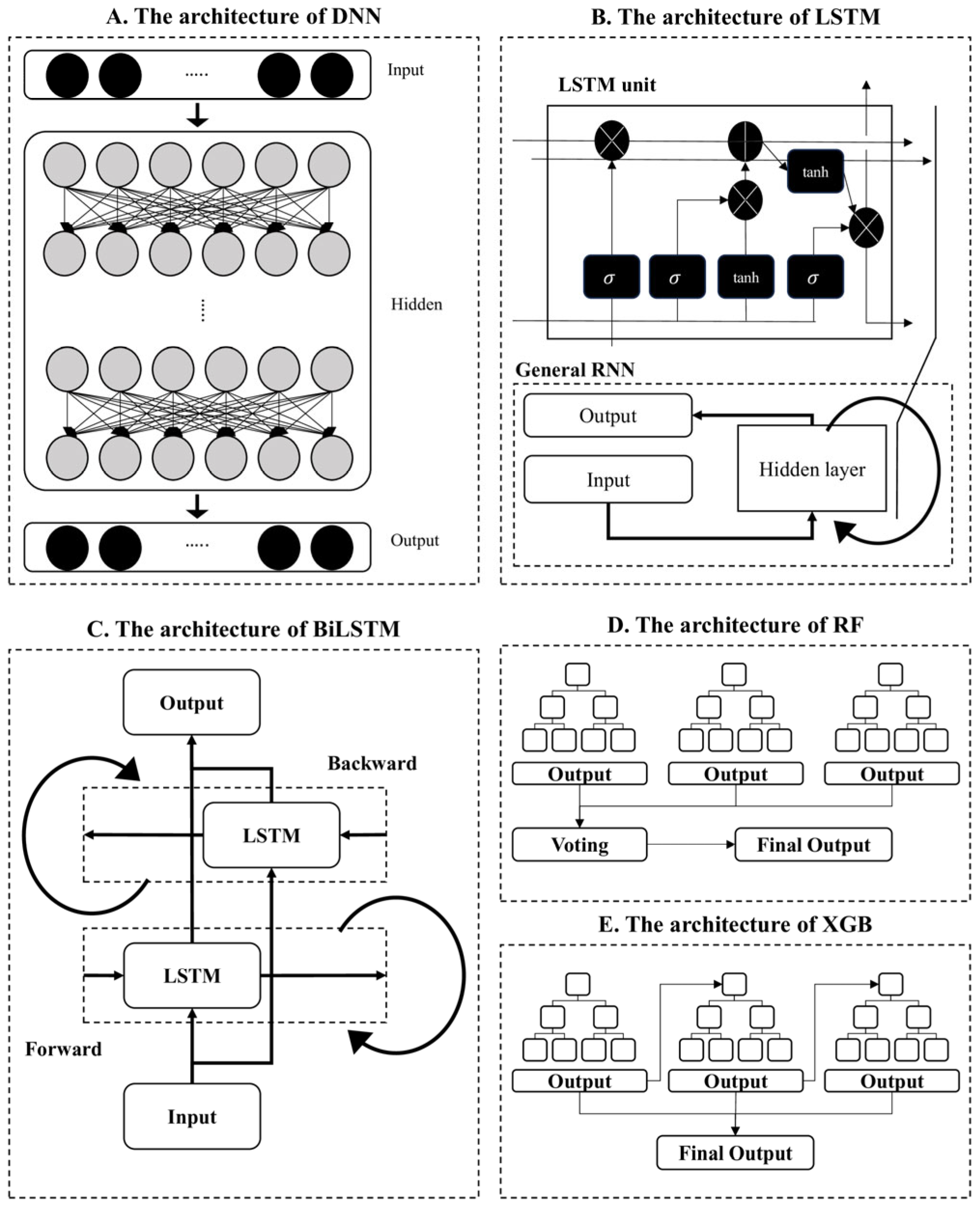

2.2. Experimental Design

2.3. Data Processing and Model Training

2.4. Evaluation

3. Results

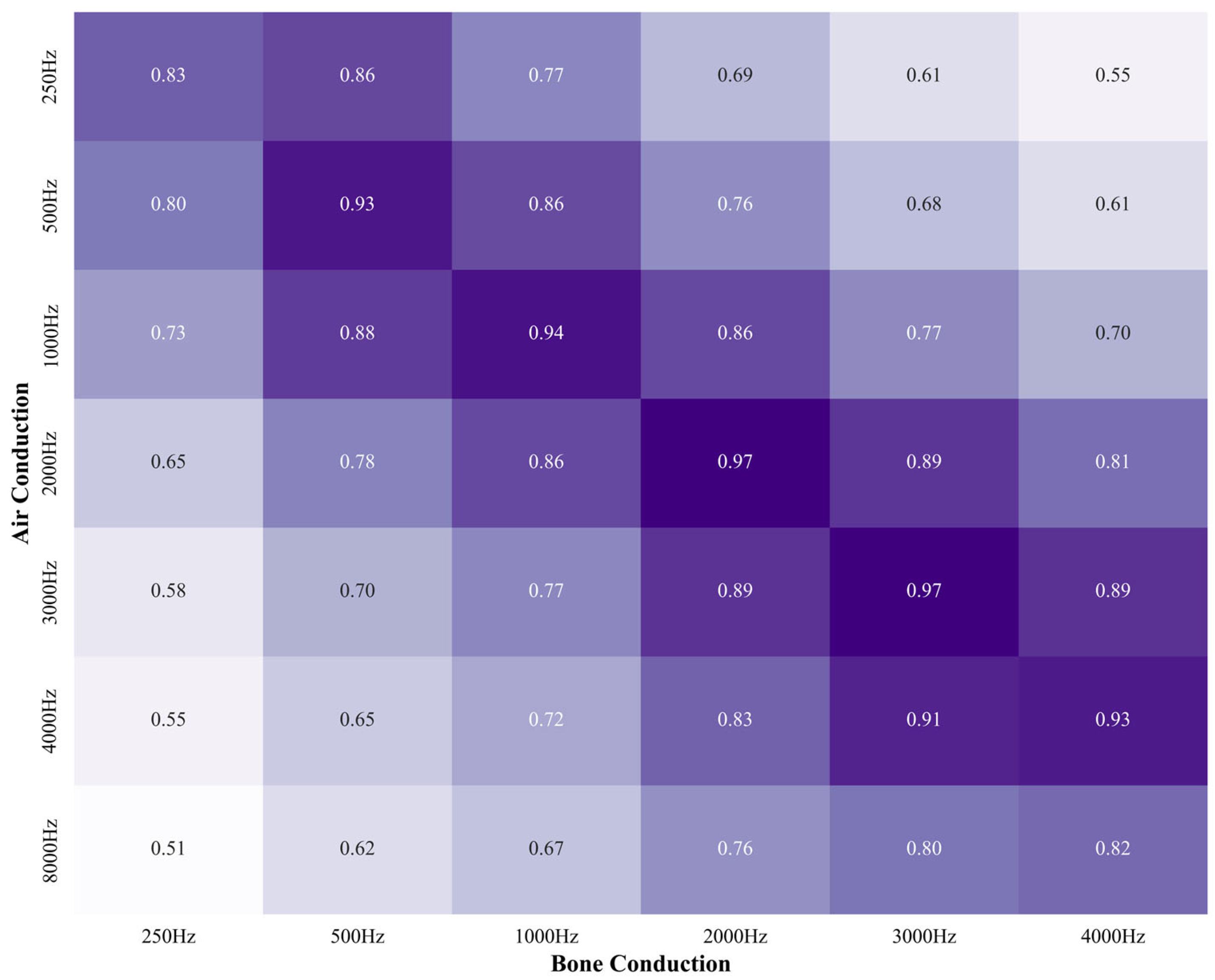

3.1. Correlation Between AC and BC

3.2. BC Threshold Prediction from AC

3.3. ABG Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HL | Hearing loss |
| YLDs | Years lived with disability |
| PTA | Pure tone audiometry |
| AC | Air conduction |
| BC | Bone conduction |
| ABG | Air–bone gap |
| ML | Machine learning |
| DL | Deep learning |
| AI | Artificial intelligence |
| HBDC | Hearing Big Data Center |
| DNN | Deep neural network |
| LSTM | Long short-term memory |
| BiLSTM | Bidirectional long short-term memory |
| RF | Random forest |
| XGB | Extreme gradient boosting |
| SMOTE | Synthetic minority over-sampling technique |
| MSE | Mean squared error |
| CIs | Confidence intervals |
| ABR | Auditory brainstem response |
| OAE | Otoacoustic emissions |

References

- Newsted, D.; Rosen, E.; Cooke, B.; Beyea, M.M.; Simpson, M.T.W.; Beyea, J.A. Approach to hearing loss. Can. Fam. Physician 2020, 66, 803–809.

- Wilson, B.S.; Tucci, D.L.; Merson, M.H.; O’Donoghue, G.M. Global hearing health care: New findings and perspectives. Lancet 2017, 390, 2503–2515.

- Wilson, B.S.; Tucci, D.L.; O’Donoghue, G.M.; Merson, M.H.; Frankish, H. A Lancet Commission to address the global burden of hearing loss. Lancet 2019, 393, 2106–2108.

- Lee, J.; Yoon, C.Y.; Lee, J.; Kong, T.H.; Seo, Y.J. Clinical characteristics of the “Gap” between the prevalence and incidence of hearing loss using National Health Insurance Service data. PLoS ONE 2024, 19, e0299478.

- Salmon, M.K.; Brant, J.; Hohman, M.H.; Leibowitz, D. Audiogram interpretation. In Basic Otorhinolaryngology, 2nd ed.; Brant, J.A., Hohman, M.H., Eds.; Thieme: New York, NY, USA, 2020; pp. 91–104.

- Yoon, C.Y.; Lee, J.; Kong, T.H.; Seo, Y.J. Importance of small vessel disease as a possible cause of sudden sensorineural hearing loss. PLoS ONE 2024, 19, e0302447.

- Diehl, P.U.; Singer, Y.; Zilly, H.; Schönfeld, U.; Meyer-Rachner, P.; Berry, M.; Sprekeler, H.; Sprengel, E.; Pudszuhn, A.; Hofmann, V.M. Restoring speech intelligibility for hearing aid users with deep learning. Sci. Rep. 2023, 13, 2719.

- Gong, Q.; Liu, Y.; Peng, Z. Estimating Hearing Thresholds from Stimulus-Frequency Otoacoustic Emissions. Trends Hear. 2020, 24, 2331216520960053.

- Huang, E.I.; Wu, Y.C.; Chuang, H.M.; Huang, T.C. Bone-conduction threshold and air-bone gap may predict frequency-specific air-conduction threshold after tympanoplasty. PLoS ONE 2021, 16, e0248421.

- Kawamura, Y.; Suzuki, H.; Nguyen, T.N.; Ohkubo, J.I.; Wakasugi, T.; Kitamura, T. Frequency-specific prediction model of hearing outcomes in patients with idiopathic sudden sensorineural hearing loss. Eur. Arch. Otorhinolaryngol. 2022, 279, 4727–4733.

- Uhm, T.; Lee, J.E.; Yi, S.; Choi, S.W.; Oh, S.J.; Kong, S.K.; Lee, I.W.; Lee, H.M. Predicting hearing recovery following treatment of idiopathic sudden sensorineural hearing loss with machine learning models. Am. J. Otolaryngol. 2021, 42, 102858.

- Elsayed, A.M.; Hunter, L.L.; Keefe, D.H.; Feeney, M.P.; Brown, D.K.; Meinzen-Derr, J.K.; Baroch, K.; Sullivan-Mahoney, M.; Francis, K.; Schaid, L.G. Air and Bone Conduction Click and Tone-Burst Auditory Brainstem Thresholds Using Kalman Adaptive Processing in Nonsedated Normal-Hearing Infants. Ear Hear. 2015, 36, 471–481.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Mohamed, N.A.; Arshad, H. State-of-the-art in artificial neural network applications: A survey. Heliyon 2018, 4, e00938.

- Bengio, Y. Learning Deep Architectures for AI. Found. Trends Mach. Learn. 2009, 2, 1–127.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Lee, J.; Cho, W.H.; Kong, T.H.; Jung, S.S.; Han, W.; Park, S.; Seo, Y.J. Contralateral bone conducted sound wave propagation on the skull bones in fresh frozen cadaver. Sci. Rep. 2023, 13, 7479.

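In the Train columns (before and after SMOTE), the No and Yes rows give training-set counts and the Test row gives the held-out test set, which is unchanged by SMOTE.

| Air–Bone Gap | Raw (n) | Raw (%) | Train, Before SMOTE (n) | Before SMOTE (%) | Train, After SMOTE (n) | After SMOTE (%) |
|---|---|---|---|---|---|---|
| No | 39,910 | 56.26 | 32,000 | 45.11 | 32,000 | 40.93 |
| Yes | 31,027 | 43.74 | 24,749 | 34.89 | 32,000 | 40.93 |
| Test | | | 14,188 | 20.00 | 14,188 | 18.15 |
| Total | 70,937 | 100 | 70,937 | 100 | 78,188 | 100 |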
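The SMOTE columns above reflect oversampling of the minority "Yes" class in the training set only, from 24,749 to 32,000 samples; these 7,251 synthetic records also account for the growth of the total from 70,937 to 78,188. The sketch below illustrates the interpolation idea of Chawla et al. (2002) in plain NumPy. It is an illustration under assumptions, not the authors' pipeline: the feature representation, the default neighbour count `k = 5`, and the function name `smote` are hypothetical, and the brute-force neighbour search is only suitable for small inputs.

```python
import numpy as np


def smote(minority: np.ndarray, n_synthetic: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Create n_synthetic minority-class samples by SMOTE-style interpolation.

    Hypothetical helper for illustration; the brute-force neighbour search
    is fine for a demonstration but not for tens of thousands of rows.
    """
    x = np.asarray(minority, dtype=float)
    n = x.shape[0]
    if n < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, n - 1)
    rng = np.random.default_rng(seed)

    # Squared Euclidean distances between every pair of minority samples.
    diff = x[:, None, :] - x[None, :, :]
    dist = np.einsum("ijk,ijk->ij", diff, diff)
    np.fill_diagonal(dist, np.inf)  # a sample is never its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]  # indices of the k nearest neighbours

    # For each synthetic point: pick a random base sample and one of its k neighbours,
    # then place the new point at a random position on the segment between them.
    base = rng.integers(0, n, size=n_synthetic)
    nn = neighbours[base, rng.integers(0, k, size=n_synthetic)]
    lam = rng.random((n_synthetic, 1))
    return x[base] + lam * (x[nn] - x[base])
```

For the counts in the table, one would call `smote(X_yes_train, 7251)` on the training-set "Yes" rows (`X_yes_train` is a hypothetical feature matrix) and leave the 14,188 test records untouched. In practice, the imbalanced-learn package provides `imblearn.over_sampling.SMOTE` with a `fit_resample` method for the same purpose.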

All values: 72,864; all patients: 12,144. Confidence intervals (CI) are given in parentheses.

| Model | Level | TRUE (±5 dB) | Accuracy (±5 dB) | TRUE (±10 dB) | Accuracy (±10 dB) |
|---|---|---|---|---|---|
| DNN | Values | 53,648.6 (±153.64) | 0.632 (±0.0) | 67,977.8 (±137.99) | 0.799 (±0.0) |
| DNN | Patients | 8065.6 (±23.39) | 0.568 (±0.0) | 11,556.0 (±29.55) | 0.814 (±0.0) |
| LSTM | Values | 54,486.8 (±774.23) | 0.641 (±0.01) | 69,052.2 (±498.84) | 0.811 (±0.01) |
| LSTM | Patients | 8114.0 (±215.32) | 0.572 (±0.01) | 11,882.8 (±97.03) | 0.838 (±0.01) |
| BiLSTM | Values | 54,738.4 (±261.44) | 0.643 (±0.0) | 69,212.8 (±169.33) | 0.813 (±0.0) |
| BiLSTM | Patients | 8201.8 (±72.69) | 0.578 (±0.01) | 11,884.4 (±34.96) | 0.838 (±0.0) |
| RF | Values | 53,949.7 (±27.76) | 0.741 (±0.0) | 64,698.4 (±16.9) | 0.895 (±0.0) |
| RF | Patients | 8769.8 (±10.26) | 0.724 (±0.0) | 11,332.2 (±3.09) | 0.931 (±0.0) |
| XGB | Values | 54,168.8 (±38.55) | 0.742 (±0.0) | 64,537.8 (±22.25) | 0.892 (±0.0) |
| XGB | Patients | 8649.0 (±14.41) | 0.714 (±0.0) | 11,337.5 (±4.43) | 0.933 (±0.0) |
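The TRUE and Accuracy columns above count predicted BC thresholds that fall within ±5 dB or ±10 dB of the measured value. The value-level version of this metric can be sketched as follows, assuming that "within tolerance" means an absolute error no greater than the tolerance (boundary inclusive). The function name and toy thresholds are hypothetical, and the patient-level criterion behind the Patients rows is not described in this text, so it is not reproduced here.

```python
import numpy as np


def tolerance_accuracy(measured_db, predicted_db, tolerance_db: float) -> tuple[int, float]:
    """Count and fraction of predicted BC thresholds within ±tolerance_db of the measured ones.

    Assumption: a prediction exactly at the boundary (|error| == tolerance) counts as correct.
    """
    measured = np.asarray(measured_db, dtype=float)
    predicted = np.asarray(predicted_db, dtype=float)
    within = np.abs(predicted - measured) <= tolerance_db
    return int(within.sum()), float(within.mean())


# Toy thresholds in dB HL (made up for illustration, not study data).
measured = [10, 20, 35, 50]
predicted = [12, 28, 35, 39]
for tol in (5, 10):
    print(tol, tolerance_accuracy(measured, predicted, tol))
```

With these toy values the absolute errors are 2, 8, 0 and 11 dB, so two of four predictions fall within ±5 dB and three within ±10 dB.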

Confidence intervals are given in parentheses.

| Model | Gap | Accuracy | Sensitivity | Precision | F1 |
|---|---|---|---|---|---|
| DNN | 15 dB | 0.41 (±0.0) | 0.338 (±0.0) | 0.785 (±0.01) | 0.473 (±0.0) |
| DNN | 10 dB | 0.586 (±0.0) | 0.542 (±0.01) | 0.837 (±0.0) | 0.658 (±0.0) |
| LSTM | 15 dB | 0.41 (±0.0) | 0.355 (±0.01) | 0.778 (±0.01) | 0.487 (±0.01) |
| LSTM | 10 dB | 0.586 (±0.0) | 0.566 (±0.01) | 0.827 (±0.0) | 0.672 (±0.01) |
| BiLSTM | 15 dB | 0.41 (±0.0) | 0.353 (±0.0) | 0.78 (±0.0) | 0.486 (±0.0) |
| BiLSTM | 10 dB | 0.586 (±0.0) | 0.562 (±0.01) | 0.83 (±0.0) | 0.67 (±0.01) |
| RF | 15 dB | 0.512 (±0.0) | 0.408 (±0.0) | 0.826 (±0.0) | 0.546 (±0.0) |
| RF | 10 dB | 0.31 (±0.0) | 0.192 (±0.0) | 0.739 (±0.0) | 0.305 (±0.0) |
| XGB | 15 dB | 0.512 (±0.0) | 0.405 (±0.0) | 0.827 (±0.0) | 0.544 (±0.0) |
| XGB | 10 dB | 0.31 (±0.0) | 0.188 (±0.0) | 0.739 (±0.0) | 0.3 (±0.0) |
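The F1 values in the ABG classification table are consistent with the harmonic mean of precision and sensitivity; for DNN at 15 dB, for example, 2 × 0.785 × 0.338 / (0.785 + 0.338) ≈ 0.473. The sketch below computes all four reported metrics for ABG detection. It assumes, hypothetically, that a gap is called when AC minus BC reaches the cut-off (inclusive) and that predicted labels come from comparing measured AC with predicted BC; the function names and toy thresholds are illustrative only and are not taken from the study.

```python
import numpy as np


def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall)."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


def abg_present(ac_db, bc_db, gap_db: float) -> np.ndarray:
    """True where AC - BC >= gap_db (the inclusive cut-off is an assumption)."""
    return np.asarray(ac_db, dtype=float) - np.asarray(bc_db, dtype=float) >= gap_db


def abg_metrics(ac_db, bc_true_db, bc_pred_db, gap_db: float) -> dict:
    """Accuracy, sensitivity, precision and F1 for ABG detection from predicted BC (hypothetical setup)."""
    y_true = abg_present(ac_db, bc_true_db, gap_db)  # gap from measured BC
    y_pred = abg_present(ac_db, bc_pred_db, gap_db)  # gap from predicted BC
    tp = int(np.sum(y_true & y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return {
        "accuracy": (tp + tn) / y_true.size,
        "sensitivity": sensitivity,
        "precision": precision,
        "f1": f1_score(precision, sensitivity),
    }


# Toy AC/BC thresholds in dB HL (made up for illustration), 15 dB cut-off.
print(abg_metrics([30, 40, 25, 50], [10, 35, 20, 20], [15, 20, 20, 45], 15))
```

Computing F1 from the counts rather than averaging it across folds is a design choice of this sketch; the text does not state how the reported values were aggregated.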

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoon, C.Y.; Lee, J.; Kim, J.; You, S.; Kwak, C.; Seo, Y.J. AI-Based Prediction of Bone Conduction Thresholds Using Air Conduction Audiometry Data. J. Clin. Med. 2025, 14, 6549. https://doi.org/10.3390/jcm14186549

Yoon CY, Lee J, Kim J, You S, Kwak C, Seo YJ. AI-Based Prediction of Bone Conduction Thresholds Using Air Conduction Audiometry Data. Journal of Clinical Medicine. 2025; 14(18):6549. https://doi.org/10.3390/jcm14186549

Chicago/Turabian Style: Yoon, Chul Young, Junhun Lee, Jiwon Kim, Sunghwa You, Chanbeom Kwak, and Young Joon Seo. 2025. "AI-Based Prediction of Bone Conduction Thresholds Using Air Conduction Audiometry Data." Journal of Clinical Medicine 14, no. 18: 6549. https://doi.org/10.3390/jcm14186549

APA Style: Yoon, C. Y., Lee, J., Kim, J., You, S., Kwak, C., & Seo, Y. J. (2025). AI-Based Prediction of Bone Conduction Thresholds Using Air Conduction Audiometry Data. Journal of Clinical Medicine, 14(18), 6549. https://doi.org/10.3390/jcm14186549