AI Based Clinical Decision-Making Tool for Neurologists in the Emergency Department

Abstract

1. Introduction

2. Materials and Methods

2.1. Standard Protocol Approvals, Registrations, and Patient Consents

2.2. Cohort Identification

2.3. Framework Development

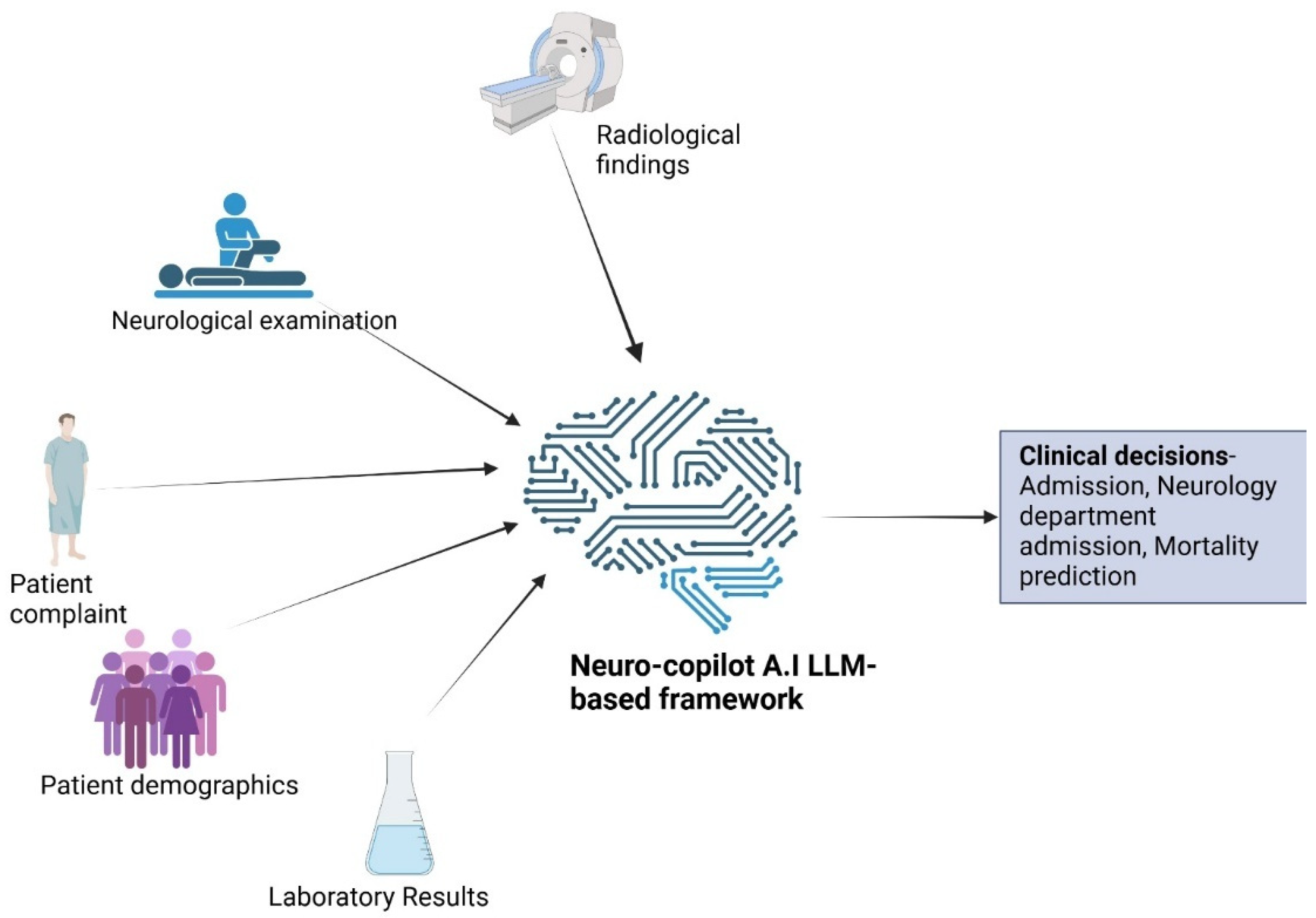

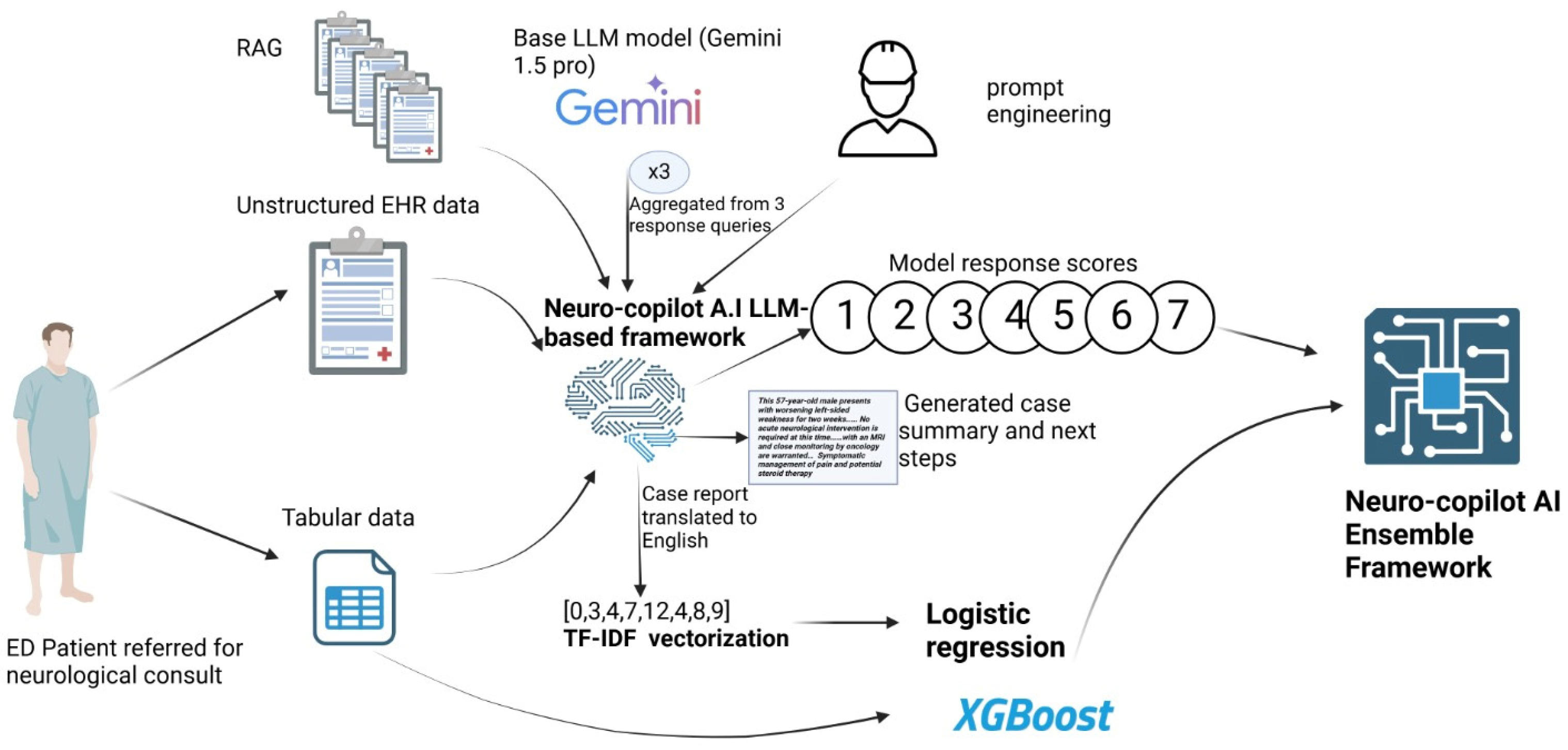

LLM-Based Framework Development

2.4. Machine Learning Framework Development

2.5. Ensemble Framework Development

3. Overview of Analyses

Statistical Analysis

4. Results

4.1. Cohort Characteristics

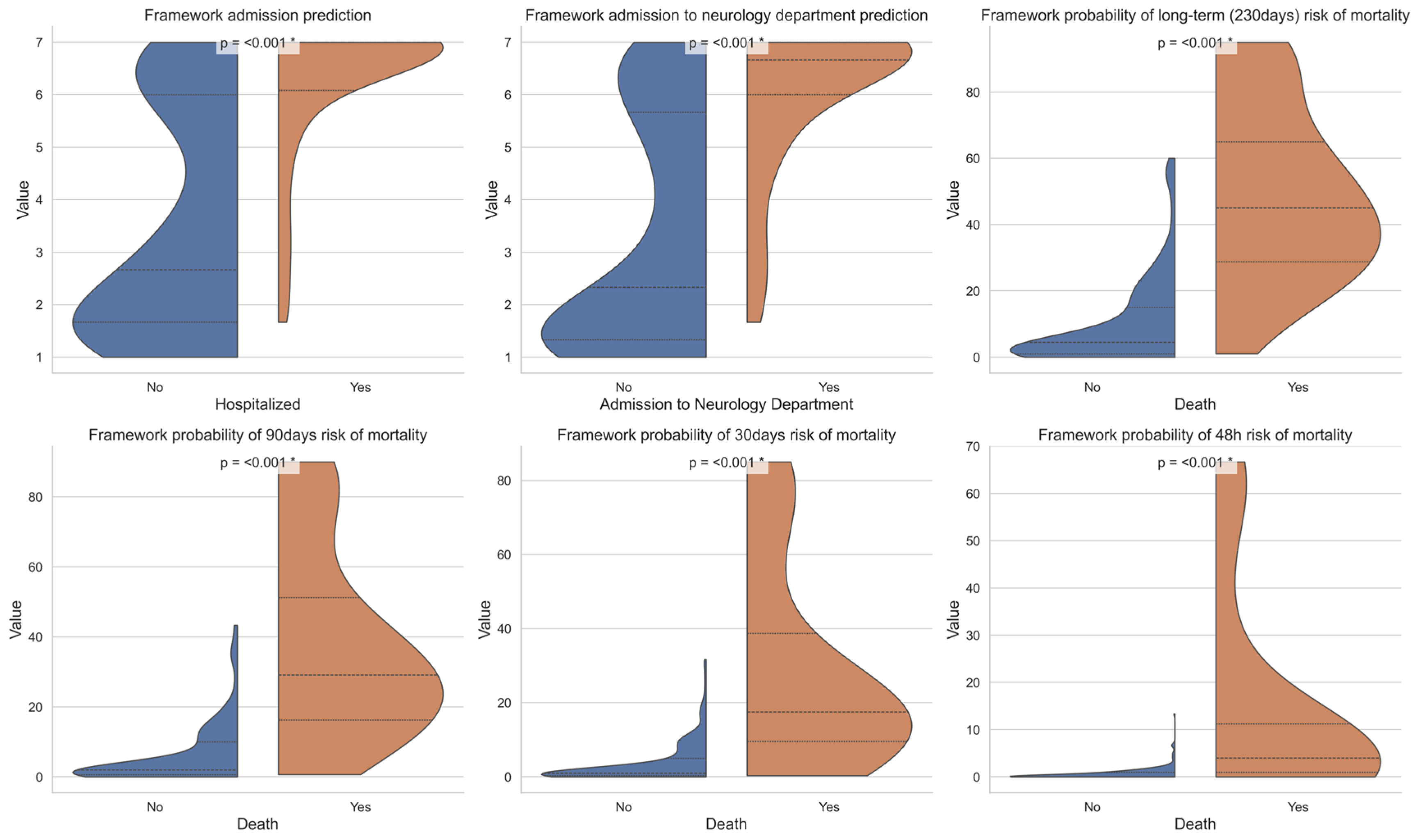

4.2. Admission Prediction

4.3. Mortality Prediction

4.4. Comparison to Expert Assessments

5. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Features | Total (n = 1368) | Hospitalized (n = 625) | Discharged (n = 743) | p-Value |
|---|---|---|---|---|
| Age at arrival to emergency department (ED) (median, interquartile range (IQR)) | 58.6 [38.37–74.5] | 68.6 [51.9–77.7] | 48 [31.5–67.5] | <0.001 |
| Male (n, %) | 663 (48.46%) | 335 (53.6%) | 328 (44.14%) | <0.001 |
| Mortality | 106 (7.74%) | 86 (13.7%) | 20 (2.69%) | <0.001 |
| Time from arrival to ED to mortality (Days) (M ± standard deviation (SD)) | 56.91 ± 57.35 | 52.90 ± 58.03 | 74.15 ± 52.20 | 0.028 |
| Time from arrival to ED to neurological consult (Hours) [M ± SD] | 2.1 ± 2.4 | 1.91 ± 2.59 | 2.27 ± 2.21 | <0.001 |
| Number of neurological consults after 19:00 until 8:00 (Night shift) | 364 (26.6%) | 225 (36%) | 139 (18.7%) | <0.001 |
| Priority level 1 (P1) | 142 (10.38%) | 121 (19.36%) | 21 (2.82%) | <0.001 |
| ICD-9 code with diseases of the nervous system at release from ED | 728 (53.21%) | 416 (66.56%) | 312 (41.99%) | <0.001 |
| Neurological category of consult based on ICD-9 code on release | | | | |
| Stroke and cerebrovascular disorders | 301 (22%) | 275 (44%) | 26 (3.49%) | <0.001 |
| Seizure disorders | 141 (10.3%) | 62 (9.92%) | 80 (10.76%) | 0.67 |
| Headache disorders | 179 (13%) | 18 (0.16%) | 161 (21.66%) | <0.001 |
| Neuromuscular disorders | 45 (3.28%) | 11 (1.76%) | 34 (4.57%) | <0.001 |
| Central demyelinating disorders | 16 (1.16%) | 15 (2.4%) | 1 (0.13%) | <0.001 |
| Infections of the nervous system disorders | 13 (0.95%) | 12 (1.92%) | 1 (0.13%) | 0.001 |
| Neurodegenerative disorders | 5 (0.36%) | 3 (0.48%) | 2 (0.26%) | 0.84 |
| Other disorder of central nervous system (CNS) | 28 (2.04%) | 21 (3.36%) | 7 (0.94%) | <0.001 |
| International Classification of Diseases-9 (ICD-9) code without diseases of the nervous system and sense organs at release from ED | 640 (46.78%) | 209 (33.44%) | 431 (58%) | <0.001 |
| Imaging conducted at ED | | | | |
| Computed tomography (CT) | 1075 (78.58%) | 558 (89.28%) | 517 (69.31%) | <0.001 |
| Magnetic resonance imaging (MRI) | 43 (3.14%) | 43 (6.88%) | 0 | <0.001 |
| Electrocardiography (ECG) | 836 (61.1%) | 515 (82.4%) | 321 (43.2%) | <0.001 |
| Laboratory data | | | | |
| Blood test | 1261 (92.1%) | 617 (98.7%) | 644 (86.6%) | <0.001 |
| Lumbar puncture | 69 (5.04%) | 45 (7.2%) | 24 (3.23%) | 0.001 |
| Urine test | 215 (15.71%) | 126 (20.16%) | 89 (11.97%) | <0.001 |
| Treatment data | | | | |
| Tissue-type plasminogen activator | 29 (2.11%) | 29 (4.64%) | 0 | <0.001 |
| Opiate drugs | 39 (2.85%) | 8 (1.28%) | 31 (4.17%) | 0.002 |
| Triptan drugs | 1 (0.07%) | 1 (0.16%) | 0 | 1 |
| Corticosteroid therapy | 71 (5.19%) | 47 (3.43%) | 24 (3.23%) | <0.001 |
| Anticonvulsant drugs | 151 (11.03%) | 92 (14.72%) | 59 (7.94%) | <0.001 |
| Antibiotic drugs | 68 (4.97%) | 58 (9.28%) | 10 (1.34%) | <0.001 |
| Antiplatelet drugs | 144 (10.52%) | 135 (21.6%) | 9 (1.21%) | <0.001 |
| Antiemetic drugs | 166 (12.13%) | 65 (10.4%) | 101 (13.59%) | 0.085 |
| IV fluid therapy | 351 (25.65%) | 168 (26.88%) | 184 (24.76%) | 0.37 |
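
The p-values in the table compare the hospitalized and discharged groups. As an illustration of how such a comparison can be checked for a categorical row, the sketch below recomputes the "Male" row from its counts. It assumes a Pearson chi-square test without continuity correction; the test the authors actually used is not stated in this excerpt, and the function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Assumption: this is an illustrative choice of test, not necessarily the
    one used to produce the p-values reported in the table.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability of the
    # chi-square distribution equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# "Male" row of the table: 335 of 625 hospitalized, 328 of 743 discharged.
male_hosp, n_hosp = 335, 625
male_disc, n_disc = 328, 743

chi2, p_value = chi_square_2x2(
    male_hosp, n_hosp - male_hosp,
    male_disc, n_disc - male_disc,
)
print(f"chi2 = {chi2:.2f}, p = {p_value:.4f}")
```

Under these assumptions the resulting p-value falls below 0.001, which agrees with the value reported for that row. Continuous rows (age, time to consult) would need a different test and the patient-level data, so they cannot be rechecked from the table alone.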


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gorenshtein, A.; Fistel, S.; Sorka, M.; Telman, G.; Winer, R.; Peretz, S.; Aran, D.; Shelly, S. AI Based Clinical Decision-Making Tool for Neurologists in the Emergency Department. J. Clin. Med. 2025, 14, 6333. https://doi.org/10.3390/jcm14176333
