1. Introduction

In recent years, large language models (LLMs) such as ChatGPT, Google Gemini, and Microsoft Copilot have emerged as novel sources of health information, gradually supplementing—and in some cases replacing—traditional online search engines like Google. These tools promise conversational, easily digestible outputs and are being increasingly adopted by the general public for medical questions. Recent studies indicate that 14% of laypeople now use LLMs as their first source for health information [

1]. However, adoption patterns vary: healthcare professionals, older adults, and women tend to be more hesitant in adopting LLMs for medical use [

2].

LLMs have shown promising results across a broad range of healthcare applications, including generating patient-friendly discharge summaries [

3], providing general health advice [

4,

5], and supporting patient education [

6]. Their development builds on broader advances in artificial intelligence that have also impacted biomedical research, such as molecular modeling [

7]. Beyond these applications, LLMs have demonstrated potential in analyzing mental health dialogs [

8], simplifying complex content such as electronic health records [

9,

10], and streamlining clinical communication and operational efficiency [

11]. Their rapid advancement—driven by transformer-based architectures and large-scale training—has made them widely accessible and highly persuasive, further increasing their influence in patient-facing settings.

Despite this potential, concerns persist regarding the reliability and safety of LLMs in medical contexts. Evaluations consistently highlight risks related to hallucinated facts, oversimplification, and factual inaccuracies—issues that could lead to harm when these tools are used without supervision [

12,

13]. Most studies to date have focused on technical benchmarks such as factual accuracy or performance on structured question-answering tasks, while real-world deployment challenges—including equity, emotional nuance, and guideline adherence—remain underexplored [

14]. Ensuring transparent, ethical, and guideline-conforming information is an ongoing challenge for the safe integration of LLMs into clinical practice [

15,

16].

One clinically relevant scenario where these capabilities and limitations intersect is the management of incidentally discovered kidney tumors. Such findings have become more common with the widespread use of cross-sectional imaging for unrelated complaints, such as back pain or abdominal discomfort [

17]. Although many of these lesions are asymptomatic and indolent, they often provoke significant patient anxiety, especially when disclosed without sufficient context. Up to 33% of incidentally detected renal tumors may be benign, with rates reaching 20% for tumors ≤ 4 cm in size [

18,

19,

20,

21]. Despite advances in imaging, CT-based accuracy remains limited in differentiating benign from malignant tumors; it is sometimes as low as 17% [

19]. This diagnostic uncertainty often leads patients to seek additional information online [

22,

23]. When treatment is required, options include minimally invasive interventions such as robot-assisted partial nephrectomy, often supported by 3D surgical planning [

24], alongside alternative approaches such as surveillance or percutaneous ablation.

Patients increasingly turn to LLMs to interpret such findings and explore treatment options. Yet it remains unknown how well current models handle emotionally sensitive, high-stakes medical scenarios, particularly those involving possible malignancies. To date, no systematic evaluation has examined how LLMs respond to patient queries in the context of an incidental kidney tumor diagnosis.

This study addresses that gap by evaluating the quality, completeness, clarity, and safety of responses generated by three major LLMs—ChatGPT-4o, Google Gemini, and Microsoft Copilot—to typical patient questions following the incidental discovery of a kidney tumor. The focus is on whether these tools provide guideline-conform, comprehensible, and safe information or whether they risk introducing misinformation, omissions, or misleading reassurance in a high-stakes clinical context.

2. Materials and Methods

This study was designed to evaluate the quality of responses provided by three publicly accessible LLMs—ChatGPT-4o, Microsoft Copilot, and Google Gemini 2.5 Pro—in the context of a clinically realistic patient scenario. The use case centered on a common situation: a patient undergoing a CT scan for lower back pain is unexpectedly informed of a suspicious renal lesion. This scenario was selected for its high relevance in daily urological practice and its frequent role in prompting patients to seek additional health information online.

To simulate typical patient behavior, ten standardized questions were developed based on clinical experience, the published literature, and themes commonly raised by patients after the incidental discovery of a kidney tumor. These questions ranged from basic concerns about diagnosis (e.g., “Do I have cancer?”) to treatment-related decisions (e.g., “Can I wait and see?”). The phrasing was intentionally plain and reflective of how a layperson might formulate queries to an AI chatbot. The same ten prompts were used across all tested LLMs without additional clarification, contextualization, or prompt engineering. The full list of questions is included in

Appendix A.1.

Each question was entered into each LLM on the same date in July 2025 using the default settings and interface provided by each respective platform. Only the initial response was analyzed; no follow-up prompts or clarifying dialog were used. The same user account was employed for each session to reduce variation due to personalization or system-specific session effects.

The quality of LLM responses was evaluated by five independent reviewers, all board-certified urologists with 3 to 15 years of clinical experience and from multiple institutions. Reviewers assessed each response according to a structured rubric consisting of six evaluation domains: (a) accuracy, defined as factual correctness and adherence to current medical guidelines; (b) completeness, referring to the degree to which the response addressed all aspects of the patient’s query; (c) clarity, referring to the comprehensibility of the response for a general audience; (d) currency, referring to the use of up-to-date terminology and knowledge; (e) harm potential, indicating the risk that a patient might be misled or harmed by the advice given (scored inversely); and (f) hallucinations, defined as the presence of fabricated or unverifiable content. The evaluation rubric used in our study aligns with methods employed in prior LLM assessments [

4].

Each domain was rated on a five-point Likert scale, with higher scores representing better performance. Reviewers also flagged responses with factual errors, omissions, or a potentially misleading content. Although reviewers were not blinded to the identity of the LLMs due to the characteristic phrasing of some platforms, they were unaware of the study hypothesis and instructed to rate responses independently. To ensure consistency and reproducibility, all evaluations were completed within a ten-day period under identical review conditions. The evaluation instrument used for expert review is provided in

Appendix A.2. To complement the main evaluations, an independent scoring framework was also applied by two authors, who independently assessed all LLM responses using the same six-domain rubric. While the rating criteria remained identical, the evaluators were instructed to weigh domains differently based on their clinical judgment—placing more emphasis on factual accuracy and potential for harm. This secondary analysis served as an internal validation check to assess the robustness of the primary findings across different interpretative priorities.

Statistical analysis was conducted using Python (version 3.11) with the pandas, scipy, pingouin, and scikit-posthocs libraries and IBM SPSS Statistics 29 (Armonk, NY, USA). Given the ordinal nature of Likert data and the repeated-measures design (i.e., all raters evaluated all LLMs), non-parametric tests were used.

Descriptive statistics (median, interquartile range) were calculated for each domain and model. Overall differences were assessed using the Friedman test, a non-parametric method suitable for repeated ordinal measures. When significant differences were detected, Wilcoxon signed-rank tests with Bonferroni correction were applied for pairwise comparisons. Correlations between evaluation domains were examined using Spearman’s rank correlation coefficient. A two-sided p-value < 0.05 was considered significant, with adjusted thresholds of α = 0.0083 for six domains and α = 0.0167 for post-hoc comparisons.

To complement the qualitative evaluation, the readability of each LLM-generated response was assessed using two established linguistic metrics: the Flesch Reading Ease (FRE) score and the Flesch–Kincaid Grade Level (FKGL). These measures were computed for each individual answer using the textstat Python package (version 0.7.3), which implements standard readability formulas based on sentence length and syllable count. The FRE score ranges from 0 to 100, with higher values indicating greater readability, while the FKGL estimates the U.S. school grade level required to comprehend the text. Readability scores were calculated across all responses for each LLM and summarized using descriptive statistics.

3. Results

A total of 150 individual evaluations were performed across the three models (10 questions per model, rated by five urologists). The complete dataset of all model responses and reviewer ratings is provided in the

Supplementary Materials for reference. All LLMs demonstrated high overall performance, with median scores exceeding 4.0 across most domains, as shown in

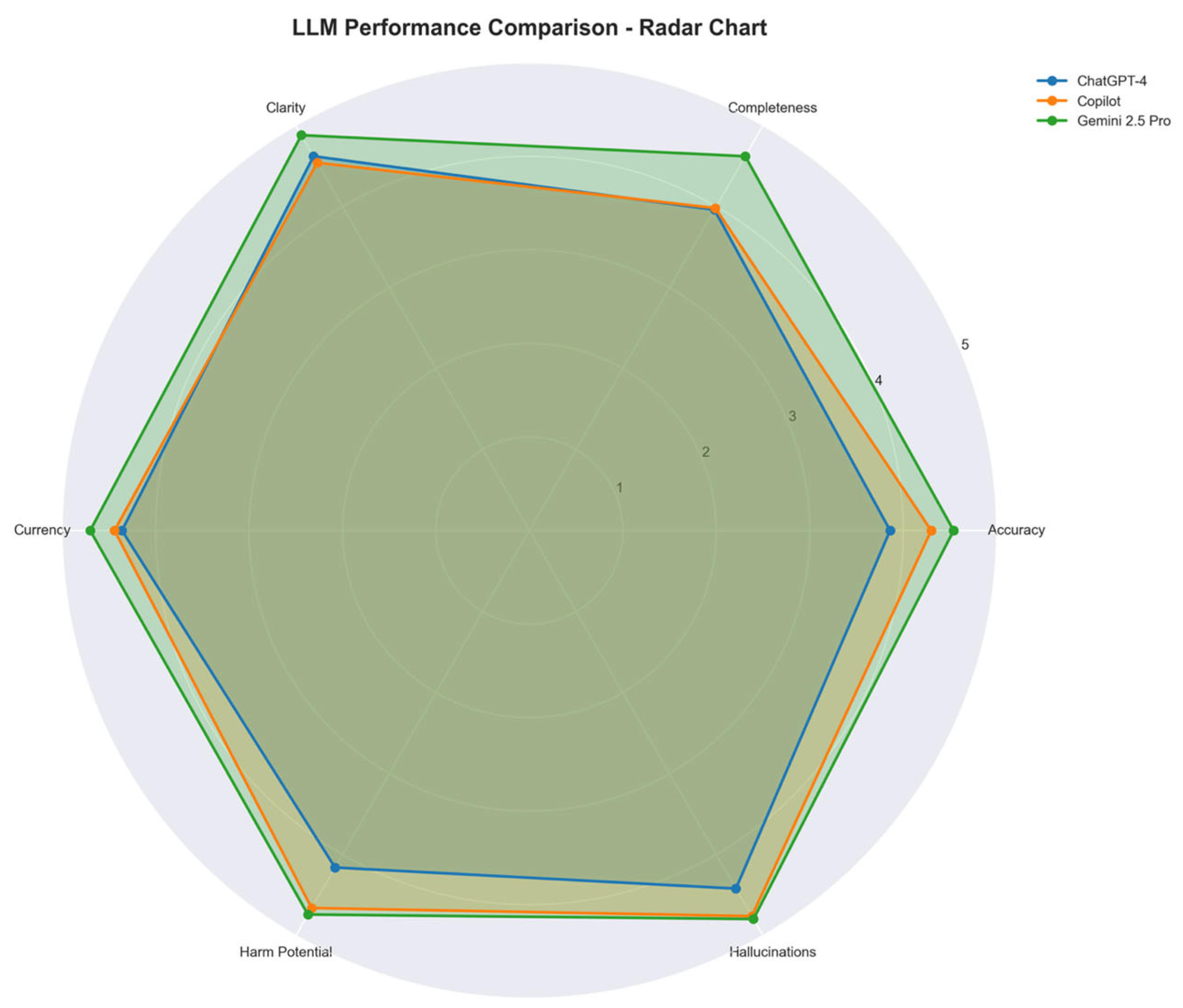

Table 1. Google Gemini 2.5 Pro achieved the highest mean performance (mean score: 4.71), followed by Microsoft Copilot (4.43) and ChatGPT-4o (4.06). Median scores were highest for Gemini in all six domains except hallucinations, where all three models received median scores of 5.0. These differences are visually illustrated in

Figure 1, which compares the mean domain scores across all models using a radar chart.

The Friedman test revealed statistically significant differences between the three LLMs in five of the six evaluation domains, including accuracy (χ2 = 24.64, p < 0.0001), completeness (χ2 = 25.82, p < 0.0001), clarity (χ2 = 16.72, p = 0.0002), currency (χ2 = 20.83, p < 0.0001), and risk of harm (χ2 = 24.40, p < 0.0001). No significant difference was observed in the hallucination domain (χ2 = 1.54, p = 0.46). Pairwise post-hoc comparisons using the Wilcoxon signed-rank test confirmed that Gemini significantly outperformed ChatGPT-4o across all significantly differing domains (p < 0.01 for all comparisons). Copilot outperformed ChatGPT-4o in the domains of harm potential and completeness but was not significantly different from Gemini in most areas.

Evaluation of the individual questions revealed that Questions 4, 8, and 9 received the lowest mean ratings across all models, suggesting these prompts were more complex or ambiguous for LLM interpretation. Nevertheless, Gemini’s scores remained consistently high even for these questions.

For example, in response to the question “Do I have cancer?”, Gemini 2.5 Pro provided a nuanced explanation of diagnostic uncertainty, noting that additional imaging or biopsy is typically required to distinguish benign from malignant lesions. ChatGPT-4o offered a more conversational summary, reassuring the user without emphasizing the importance of follow-up diagnostics. Microsoft Copilot was notable for including a clear disclaimer, explicitly stating that it could not make medical diagnoses, but it failed to convey next steps or key decision criteria. These qualitative differences illustrate how variations in tone, completeness, and risk framing can emerge even for straightforward patient questions.

A positive correlation was observed between scores for accuracy and reduced risk of harm (Spearman’s r = 0.722), indicating that more accurate responses were generally considered safer for patients. Additional correlations were seen between completeness and clarity (r = 0.68), suggesting that models providing more comprehensive answers also tended to be easier to understand. Inter-rater reliability was assessed using Fleiss’ Kappa for each quality domain across all of the evaluated LLM responses. Agreement was substantial for most domains, with the highest consensus in the dimension of harm potential (κ = 0.64), indicating consistent risk assessment across raters.

The secondary analysis using an independent scoring framework, conducted by two authors applying different domain weightings, corroborated the main findings, with Gemini consistently ranking highest and ChatGPT-4o lowest across the key domains of accuracy, completeness, and harm potential.

The readability analysis supported the qualitative findings. Google Gemini 2.5 Pro demonstrated the highest readability, with a FRE score of 50.58 and an FKGL of 10.59, indicating text suitable for individuals with a 10th–11th grade reading level. ChatGPT-4o followed with an FRE score of 46.48 and FKGL of 11.94. Microsoft Copilot produced the most linguistically complex responses, with a FRE score of 39.05 and FKGL of 13.24, reflecting content that typically requires college-level comprehension. These differences in readability may partly explain variation in clarity ratings among the three models and have implications for patient accessibility, as detailed in

Table 2.

In summary, while all three models demonstrated acceptable performance for answering patient-facing questions related to incidental kidney tumors, Google Gemini 2.5 Pro consistently provided the most accurate, complete, safe, and accessible responses. Microsoft Copilot performed well in safety and empathy-related aspects but presented a higher reading burden. ChatGPT-4o, although generally accurate and readable, lagged behind in completeness and safety domains.

4. Discussion

In this study, we systematically evaluated how three general-purpose LLMs—ChatGPT-4o, Microsoft Copilot, and Google Gemini 2.5 Pro—respond to typical patient questions following the incidental diagnosis of a suspicious kidney tumor. Our findings reveal significant variation across models in both content quality and communication style, highlighting key strengths and persistent limitations in emotionally sensitive, high-stakes clinical scenarios.

Among the models, Google Gemini 2.5 Pro achieved the highest mean ratings across most domains, particularly in completeness, clarity, and low hallucination frequency. Microsoft Copilot was notable for its consistent inclusion of disclaimers about its limitations (e.g., “I’m not a doctor…”), reinforcing its appropriate use as an informational support tool. It also frequently demonstrated empathetic phrasing, offering emotional reassurance and practical advice such as reaching out to friends or counselors. ChatGPT-4o, while often effective in clarity and conversational structure, lagged slightly in completeness and factual accuracy and was less consistent in acknowledging its limitations.

These results align with prior studies that highlight both the promise and limitations of LLMs across medical domains. In prostate and urologic oncology, LLMs were generally accurate but often overly complex or lacking nuance [

25,

26,

27,

28,

29]. For example, studies in prostate cancer consistently noted that while LLMs offer generally accurate responses, they can be overly complex and difficult to interpret for patients [

25,

26,

27]. In the urological domain, other research highlighted both strengths and limitations in LLM-generated communication, including concerns about omission and tone [

27,

28,

29]. Recent studies with real-world patient or clinician comparators offer further perspective. Carl et al. [

30] evaluated GPT-4-based chatbot interactions among urology outpatients and found that most patients perceived the information as useful, understandable, and complete. However, urologists were still rated as more understandable and reliable than the chatbot. In a complementary study, Eckrich et al. [

31] compared LLM-generated responses to case-based urology questions with those from human consultants. While LLMs performed relatively well in linguistic domains such as coherence and comprehensibility, their medical adequacy was significantly inferior, and misinformation hazards were identified in up to 19% of their responses. Notably, most prior evaluations focused on structured or educational scenarios. By contrast, our study centers on the ambiguous and emotionally charged context of incidental imaging findings—an area not previously assessed.

Qualitative communication features also shaped user experience. ChatGPT’s use of follow-up prompts (e.g., “Would it help to walk through your CT findings together?”) and Copilot’s suggestions regarding clinical trials or emotional support reflect an anticipatory, conversational design. These may enhance perceived empathy and engagement.

However, such features raise ethical concerns around role boundaries and trust. While they may encourage patient empowerment, they can also imply clinical authority that LLMs are not equipped to assume. Importantly, our evaluation was limited to single-turn prompts; models were not given the opportunity to clarify or revise their responses. In iterative settings—where patients provide additional context or follow-up—LLMs may demonstrate improved empathy and coherence. Yet, this flexibility may also amplify risks, including the entrenchment of hallucinations or inappropriate reassurance if earlier inaccuracies are reinforced.

Despite overall strong readability scores (as confirmed by Flesch Reading Ease and Grade Level indices), models occasionally produced content with medical jargon or overly technical phrasing, particularly Gemini in some responses. These challenges mirror findings by Demir [

32] and Trapp et al. [

27], who noted that content tailored for lay audiences sometimes failed to achieve true accessibility.

Beyond linguistic accessibility, broader systemic concerns arise regarding equity and fairness in LLM deployment. Recent work has shown that these technologies may widen global resource disparities, particularly where access to computing infrastructure and linguistically diverse training data is limited [

33]. This imbalance is not only technical (e.g., unequal global access to GPU clusters) but also structural—models trained predominantly in English may provide lower-quality responses to non-English-speaking users, further reinforcing linguistic inequities. These trends raise concerns about emerging forms of “AI colonialism,” where technological advances disproportionately benefit wealthy regions. Moreover, the outsourcing of AI development to high-resource countries risks sidelining local medical knowledge systems, cultural communication norms, and health priorities. Without inclusive governance structures and equitable participation in model design and evaluation, these tools may entrench existing healthcare disparities. As LLMs are increasingly deployed in multilingual and resource-constrained environments, addressing these systemic imbalances becomes both an ethical and technical imperative. To mitigate these risks, future development should prioritize decentralization strategies such as localized training of lightweight models, open-access multilingual corpora, and equitable international collaborations to ensure responsible, inclusive deployment across diverse healthcare settings.

Inter-rater agreement in our study was substantial across domains, indicating that the evaluation rubric was reliable and clinically interpretable. The integration of an independent scoring framework provided further validation, confirming general trends while introducing slightly different weightings that highlight the complexity of assessing LLM-generated medical content.

Importantly, 14% of all responses were flagged as potentially problematic by at least one reviewer, most often due to incomplete risk framing, omission of diagnostic guidance, or overly reassuring tone. These issues underscore the need for robust oversight, especially when LLMs are used in high-stakes patient-facing scenarios.

To further contextualize the inherent subjectivity in evaluating hallucination and harm potential, we provide examples flagged by reviewers. Hallucinations were typically defined as factually incorrect medical statements, such as attributing kidney cancer risk to smoking cessation or misrepresenting guideline-recommended diagnostic steps (e.g., “Your doctor may order a PET-CT scan to confirm whether your kidney mass is benign or malignant”—a test not routinely used for initial renal mass evaluation). Responses flagged for harm potential often included overly reassuring language (e.g., “most kidney masses are harmless”) without appropriate emphasis on the need for clinical follow-up or suggested management strategies that could delay care. While guided by clinical expertise and rubric definitions, these qualitative judgments inevitably involved interpretation, underscoring the need for standardized definitions in future work.

Our study intentionally used unmodified prompts to simulate real-world usage, where patients often lack knowledge of prompt optimization. However, LLM performance can vary substantially based on how questions are phrased. Prompt engineering strategies, such as adding context, requesting citations, or adjusting tone, may improve outputs but also introduce variability that depends on the user’s digital literacy. Future work should explore how these factors influence LLM behavior, especially across diverse user populations. This raises an important challenge: not all users are equally equipped to formulate effective queries or evaluate AI-generated responses critically. As access to LLMs becomes more widespread, disparities in prompt literacy may exacerbate existing health information gaps. Incorporating user education, interface design improvements, and guardrails that ensure robust outputs regardless of input quality will be critical to supporting equitable use.

Taken together, our results expand the growing body in the literature dedicated to LLMs in healthcare. While these tools show strong potential in enhancing patient education and communication, they are not yet suitable for unsupervised deployment in high-stakes scenarios such as incidental cancer diagnoses. Further development of domain-specific, guideline-informed models, combined with transparent disclaimers and safety protocols, will be critical for ensuring trustworthy and equitable patient-facing AI applications.

5. Limitations

This study has several limitations that should be considered when interpreting the results.

First, we evaluated LLM responses using a fixed set of 10 standardized patient questions derived from clinical experience and prior studies. While this ensures comparability across models, it does not capture the full variability of real-world patient inputs, including follow-up questions, contextual elaborations, or diverse phrasing styles. Additionally, all prompts were submitted as single-turn inputs, without iterative follow-up questions, which limits our ability to assess the models’ behavior in more dynamic, conversational settings.

Second, all prompts were submitted in English using default settings, without fine-tuning, temperature adjustment, or user-specific customization. As a result, these findings may not generalize to other languages, cultural contexts, or user profiles, particularly those with lower health literacy or different healthcare expectations.

Third, although five board-certified urologists rated all responses using a structured rubric, domains such as “hallucination” and “risk of harm” remain inherently subjective. To mitigate this, we calculated inter-rater reliability and also applied an independent scoring framework, in which two additional reviewers re-scored all responses using the same domains but with a distinct emphasis on accuracy and harm potential. While this validation approach confirmed the main performance trends, it provided additional insight into how subjective domain weighting may influence outcome interpretation.

Fourth, while we incorporated Flesch Reading Ease and Grade Level indices to quantify readability, these metrics do not capture nuances such as tone, emotional resonance, or content relevance—factors which are especially important in emotionally sensitive diagnoses like cancer.

Fifth, the study evaluated only general-purpose, publicly available LLMs at a single time point in July 2025. Given the rapid evolution of these models, including changes to training data, safety filters, and system instructions, the findings represent a temporal snapshot. As such, model performance and output quality may shift with future updates, potentially affecting reproducibility. This underscores the need for continuous, transparent re-evaluation as LLMs evolve.

Additionally, our analysis did not include responses generated in other commonly used formats, such as voice-based interactions or app-integrated chatbots, which may shape user experience differently. Future research should explore how LLM output quality varies across platforms and modalities, especially given the growing use of mobile health applications and AI-powered virtual assistants in clinical and home settings.

Finally, this study did not assess real-world patient outcomes or behavior after exposure to LLM-generated content. As such, the practical impact on patient understanding, decision-making, or trust remains speculative, especially given the growing use of mobile health applications and AI-powered virtual assistants in clinical and home settings, where accessibility, personalization, and trust may differ considerably from desktop-based usage.

6. Conclusions

This study highlights both the potential and limitations of large language models (LLMs) in patient-facing healthcare communication. When presented with standardized patient questions following the incidental diagnosis of a kidney tumor, ChatGPT-4o, Microsoft Copilot, and Google Gemini 2.5 Pro each demonstrated distinct strengths and trade-offs.

Google Gemini 2.5 Pro produced the most clinically complete and accurate responses, though sometimes at the expense of readability. Microsoft Copilot stood out for its clear disclaimers and empathetic tone, which may foster trust and emotional reassurance. ChatGPT-4o excelled in clarity and conversational engagement but was less consistent in providing complete and precise medical information.

Despite these promising features, none of the models achieved consistently high performance across all evaluated domains. Occasional hallucinations, omissions of critical clinical detail, and limited tailoring to patient comprehension highlight the current risks of unsupervised use in emotionally charged, high-stakes clinical scenarios.

As LLMs continue to be integrated into healthcare environments, our findings support their cautious use as adjuncts—rather than replacements—for professional counseling. To ensure safer and more effective deployment in patient-facing contexts, we propose two actionable safeguards: (1) mandatory citation of clinical guideline sources within LLM-generated responses to enhance transparency and trust and (2) the implementation of real-time risk alert systems to flag hallucinations or potentially harmful advice before content is delivered.

These measures, combined with domain-specific training, adherence to medical guidelines, and regulatory oversight, will be essential to ensure that LLMs enhance—rather than compromise—patient understanding, trust, and safety.