Large Language Models for Adverse Drug Events: A Clinical Perspective

Abstract

1. Introduction

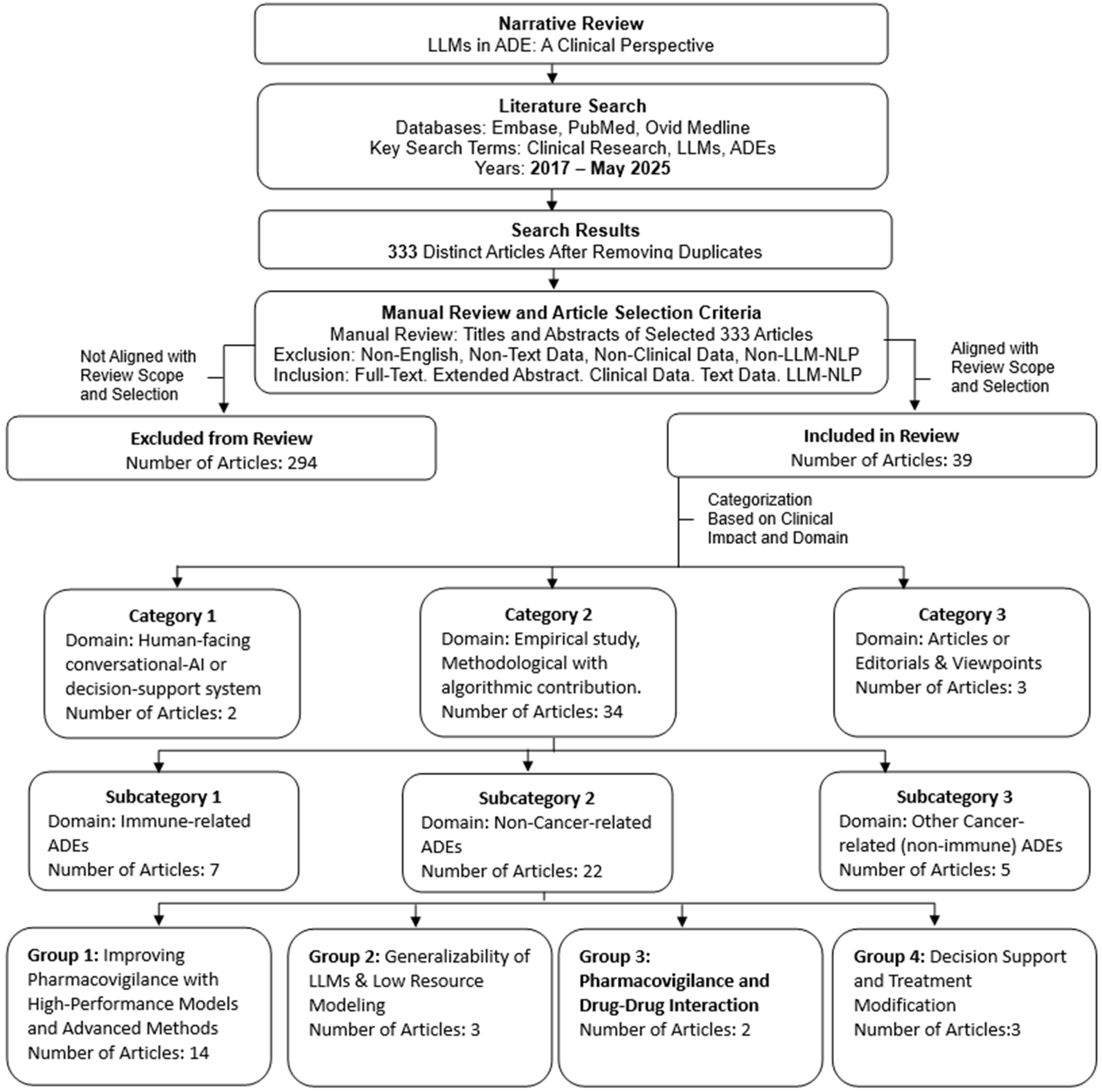

2. Materials and Methods

2.1. Purpose and Scope of the Review

2.2. Inclusion and Exclusion Criteria

2.3. Literature Search

2.4. Article Selection for Review

Rationale for Article Categorization

3. Results

4. Discussion

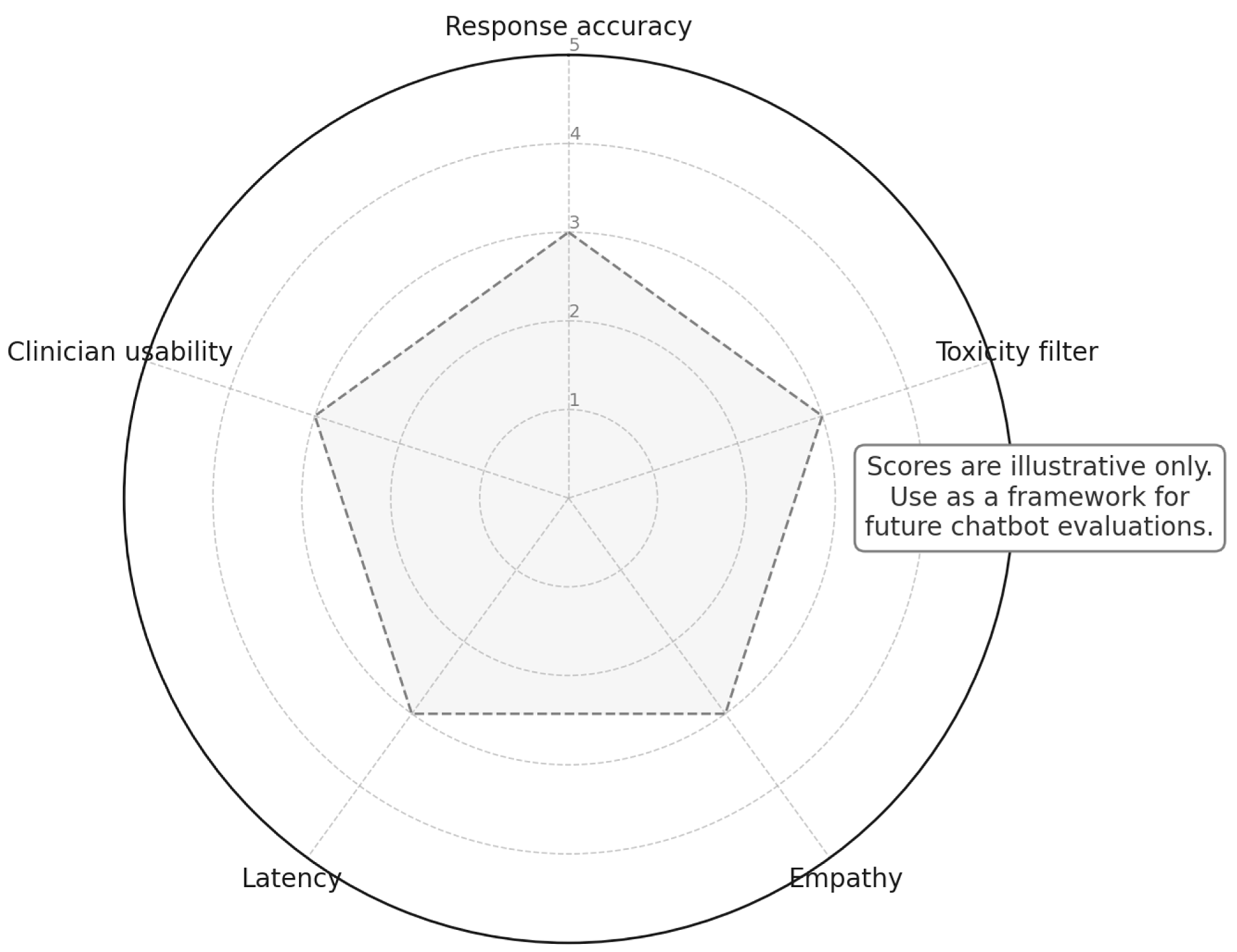

4.1. Human-Facing Conversational AI or Decision Support System

Communicating Outputs to End-Users

4.2. Empirical Studies with Clinical Relevance

4.2.1. Immune-Related ADEs

4.2.2. Other Cancer-Related (Non-Immune) ADEs

4.2.3. Non-Cancer-Related ADEs

Generalizability of LLMs and Low-Resource Modeling

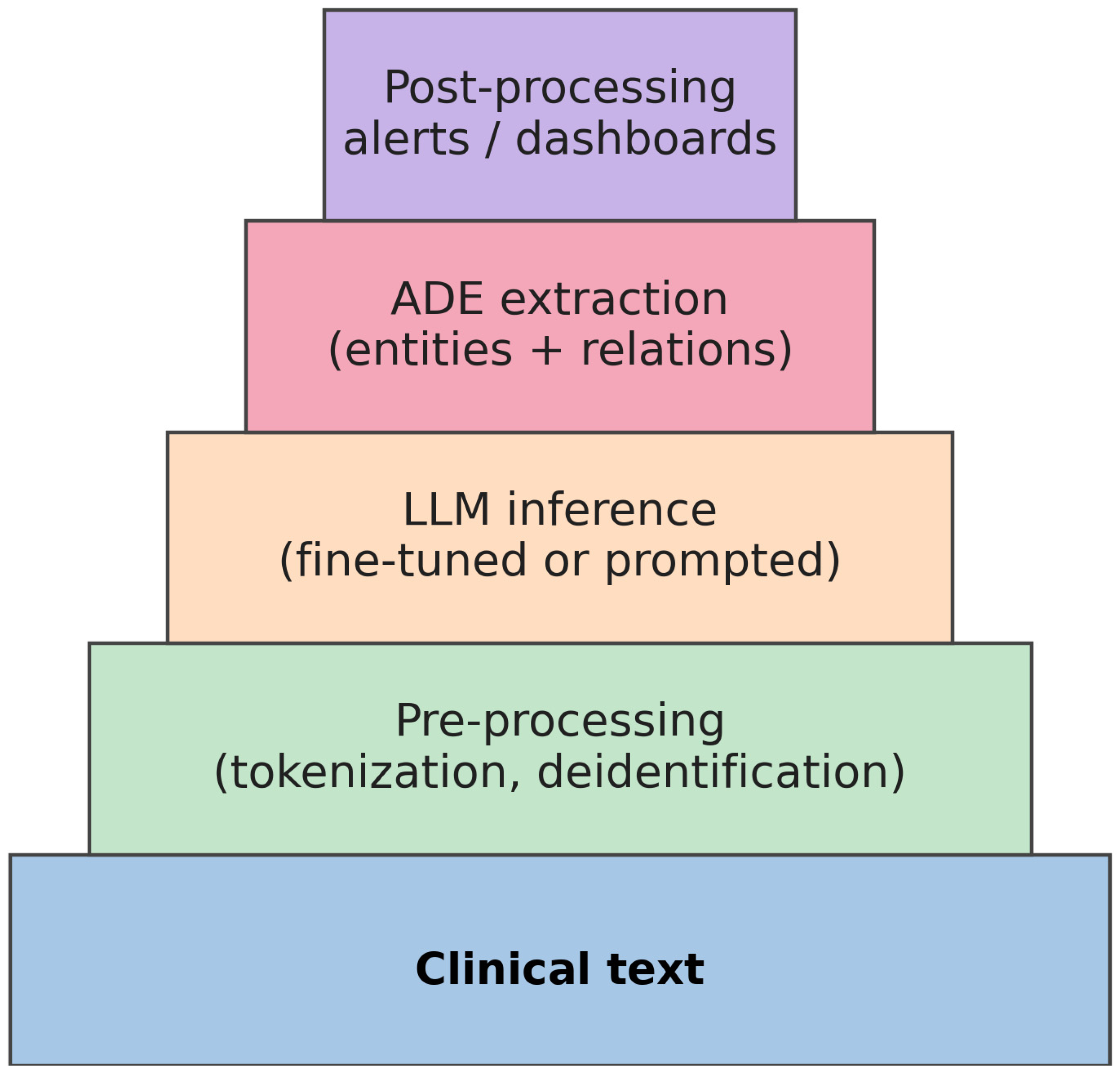

Improving Pharmacovigilance with High-Performance Models and Advanced Methods

Pharmacovigilance and Drug–Drug Interaction

Clinical Decision Support and Treatment Modification

4.2.4. Articles or Editorials and Viewpoints

5. Limitations

5.1. Generalizability

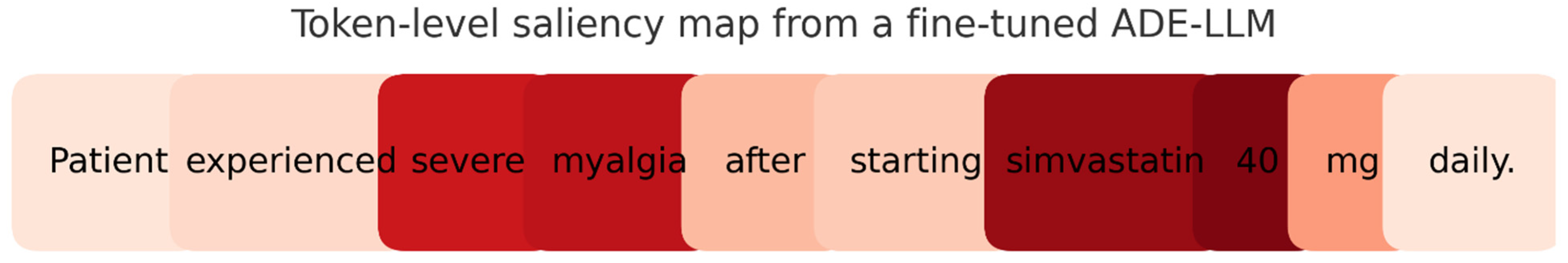

5.2. Interpretability

5.3. Data Quality

5.4. Infrastructure

5.5. Regulatory

6. Future Directions

6.1. LLMs in Prospective Clinical Care

6.2. LLMs in Research: Clinical Trials and Retrospective Analyses

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- AHRQ Patient Safety Network. Medication Errors and Adverse Drug Events. Available online: https://psnet.ahrq.gov/primer/medication-errors-and-adverse-drug-events (accessed on 30 June 2025).

- Zitu, M.M.; Gatti-Mays, M.E.; Johnson, K.C.; Zhang, S.; Shendre, A.; Elsaid, M.I.; Li, L. Detection of Patient-Level Immunotherapy-Related Adverse Events (irAEs) from Clinical Narratives of Electronic Health Records: A High-Sensitivity Artificial Intelligence Model. Pragmat. Obs. Res. 2024, 15, 243–252.

- Golder, S.; O’Connor, K.; Lopez-Garcia, G.; Tatonetti, N.; Gonzalez-Hernandez, G. Leveraging Unstructured Data in Electronic Health Records to Detect Adverse Events from Pediatric Drug Use: A Scoping Review. medRxiv 2025.

- Deng, Y.; Xing, Y.; Quach, J.; Chen, X.; Wu, X.; Zhang, Y.; Moureaud, C.; Yu, M.; Zhao, Y.; Wang, L.; et al. Developing Large Language Models to Detect Adverse Drug Events in Posts on X. J. Biopharm. Stat. 2024; in press.

- Murphy, R.M.; Klopotowska, J.E.; de Keizer, N.F.; Jager, K.J.; Leopold, J.H.; Dongelmans, D.A.; Abu-Hanna, A.; Schut, M.C. Adverse Drug Event Detection Using Natural Language Processing: A Scoping Review of Supervised Learning Methods. PLoS ONE 2023, 18, e0279842.

- Field, T.S.; Gurwitz, J.H.; Harrold, L.R.; Rothschild, J.M.; Debellis, K.; Seger, A.C.; Fish, L.S.; Garber, L.; Kelleher, M.; Bates, D.W. Strategies for Detecting Adverse Drug Events among Older Persons in the Ambulatory Setting. J. Am. Med. Inform. Assoc. 2004, 11, 492–498.

- Meng, X.; Yan, X.; Zhang, K.; Liu, D.; Cui, X.; Yang, Y.; Zhang, M.; Cao, C.; Wang, J.; Tang, Y.D. The Application of Large Language Models in Medicine: A Scoping Review. iScience 2024, 27, 109713.

- Sezgin, E.; Sirrianni, J.; Linwood, S.L. Operationalizing and Implementing Pretrained, Large Artificial Intelligence Linguistic Models in the US Health Care System: Outlook of Generative Pretrained Transformer 3 (GPT-3) as a Service Model. JMIR Med. Inform. 2022, 10, e32875.

- Kopacheva, E.; Lincke, A.; Björneld, O.; Hammar, T. Detecting Adverse Drug Events in Clinical Notes Using Large Language Models. Stud. Health Technol. Inform. 2025, 327, 892–893.

- Ong, J.C.L.; Chen, M.H.; Ng, N.; Elangovan, K.; Tan, N.Y.T.; Jin, L.; Xie, Q.; Ting, D.S.W.; Rodriguez-Monguio, R.; Bates, D.W.; et al. A Scoping Review on Generative AI and Large Language Models in Mitigating Medication-Related Harm. npj Digit. Med. 2025, 8, 182.

- Baumeister, R.F.; Leary, M.R. Writing Narrative Literature Reviews. Rev. Gen. Psychol. 1997, 1, 311–320.

- Green, B.N.; Johnson, C.D.; Adams, A. Writing Narrative Literature Reviews for Peer-Reviewed Journals: Secrets of the Trade. J. Chiropr. Med. 2006, 5, 101–117.

- Ferrari, R. Writing Narrative Style Literature Reviews. Med. Writ. 2015, 24, 230–235.

- Zitu, M.M.; Le, T.D.; Duong, T.; Haddadan, S.; Garcia, M.; Amorrortu, R.; Thieu, T. Large Language Models in Cancer: Potentials, Risks, and Safeguards. BJR|Artif. Intell. 2025, 2, ubae019.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Sridharan, K.; Sivaramakrishnan, G. Unlocking the Potential of Advanced Large Language Models in Medication Review and Reconciliation: A Proof-of-Concept Investigation. Explor. Res. Clin. Soc. Pharm. 2024, 15, 100492.

- Lenehan, P.; Venkatakrishnan, A.J.; Murugadoss, K.; Agarwal, V.; Singhal, A.; Karnati, C.S.R.; Soundararajan, V. Clinical nSights: A Software Platform to Accelerate Real-World Oncology Analyses. J. Clin. Oncol. 2024, 42, e23316.

- Barman, H.; Venkateswaran, S.; Del Santo, A.D.; Yoo, U.; Silvert, E.; Rao, K.; Raghunathan, B.; Kottschade, L.A.; Block, M.S.; Chandler, G.S.; et al. Identification and Characterization of Immune Checkpoint Inhibitor-Induced Toxicities from Electronic Health Records Using Natural Language Processing. JCO Clin. Cancer Inform. 2024, 8, e2300151.

- Bejan, C.A.; Wang, M.; Venkateswaran, S.; Bergmann, E.A.; Hiles, L.; Xu, Y.; Chandler, G.S.; Brondfield, S.; Silverstein, J.; Wright, F.; et al. irAE-GPT: Leveraging Large Language Models to Identify Immune-Related Adverse Events in Electronic Health Records and Clinical Trial Datasets. medRxiv 2025.

- Guillot, J.; Miao, B.; Suresh, A.; Williams, C.; Zack, T.; Wolf, J.L.; Butte, A. Constructing Adverse Event Timelines for Patients Receiving CAR-T Therapy Using Large Language Models. J. Clin. Oncol. 2024, 42 (Suppl. 16), 2555.

- Block, S.; Barman, H.; Venkateswaran, S.; Del Santo, A.G.; Yoo, U.; Silvert, E.; Chandler, G.S.; Wagner, T.; Mohindra, R. The Role of Natural Language Processing Techniques versus Conventional Methods to Gain ICI Safety Insights from Unstructured EHR Data. JCO Glob. Oncol. 2023, 9, 136.

- Petersen, S.K.; Madley, S.; Kjeldsen, J.; Bastholt, L.; Ruhlmann, C.H. 99P Real-World Management of ir-Colitis: A Danish 10-Year Cohort Study. Ann. Oncol. 2024, 24, 100842.

- Sun, V.H.; Heemelaar, J.C.; Hadzic, I.; Raghu, V.K.; Wu, C.Y.; Zubiri, L.; Ghamari, A.; LeBoeuf, N.R.; Abu-Shawer, O.; Kehl, K.L.; et al. Enhancing Precision in Detecting Severe Immune-Related Adverse Events: Comparative Analysis of Large Language Models and International Classification of Disease Codes in Patient Records. J. Clin. Oncol. 2024, 42, 4134–4144.

- Sun, V.; Heemelaar, J.; Hadzic, I.; Raghu, V.; Wu, C.Y.; Zubiri, L.; Ghamari, A.; Suero-Abreu, G.; Wu, J.; Hathaway, N.; et al. Enhancing Early Detection of ICI Myocarditis Cases during Hospitalization: A Role for Large Language Models. Circulation 2024, 150 (Suppl. 1), 4119426.

- Chen, S.; Guevara, M.; Ramirez, N.; Murray, A.; Warner, J.L.; Aerts, H.; Miller, T.A.; Savova, G.K.; Mak, R.H.; Bitterman, D.S. Natural Language Processing to Automatically Extract the Presence and Severity of Esophagitis in Notes of Patients Undergoing Radiotherapy. JCO Clin. Cancer Inform. 2023, 7, e2300048.

- Andrade, G.H.B.; Nishiyama, T.; Fujimaki, T.; Yada, S.; Wakamiya, S.; Takagi, M.; Kato, M.; Miyashiro, I.; Aramaki, E. Assessing Domain Adaptation in Adverse Drug Event Extraction on Real-World Breast Cancer Records. Int. J. Med. Inform. 2024, 191, 105539.

- Kawazoe, Y.; Shimamoto, K.; Seki, T.; Tsuchiya, M.; Shinohara, E.; Yada, S.; Wakamiya, S.; Imai, S.; Hori, S.; Aramaki, E. Post-Marketing Surveillance of Anticancer Drugs Using Natural Language Processing of Electronic Medical Records. npj Digit. Med. 2024, 7, 315.

- Nishiyama, T.; Yamaguchi, A.; Han, P.; Pereira, L.W.K.; Otsuki, Y.; Andrade, G.H.B.; Kudo, N.; Yada, S.; Wakamiya, S.; Aramaki, E.; et al. Automated System to Capture Patient Symptoms from Multitype Japanese Clinical Texts: Retrospective Study. JMIR Med. Inform. 2024, 12, e58977.

- Yanagisawa, Y.; Watabe, S.; Yokoyama, S.; Sayama, K.; Kizaki, H.; Tsuchiya, M.; Imai, S.; Someya, M.; Taniguchi, R.; Yada, S.; et al. Identifying Adverse Events in Outpatients with Prostate Cancer Using Pharmaceutical Care Records in Community Pharmacies: Application of Named Entity Recognition. JMIR Cancer 2025, 11, e69663.

- Dai, X.; Karimi, S.; Sarker, A.; Hachey, B.; Paris, C. MultiADE: A Multi-Domain Benchmark for Adverse Drug Event Extraction. J. Biomed. Inform. 2024, 160, 104744.

- Tavabi, N.; Pruneski, J.; Golchin, S.; Singh, M.; Sanborn, R.; Heyworth, B.; Landschaft, A.; Kimia, A.; Kiapour, A. Building Large-Scale Registries from Unstructured Clinical Notes Using a Low-Resource Natural Language Processing Pipeline. Artif. Intell. Med. 2024, 151, 102847.

- Zitu, M.M.; Zhang, S.; Owen, D.H.; Chiang, C.; Li, L. Generalizability of Machine Learning Methods in Detecting Adverse Drug Events from Clinical Narratives in Electronic Medical Records. Front. Pharmacol. 2023, 14, 1218679.

- Chaichulee, S.; Promchai, C.; Kaewkomon, T.; Kongkamol, C.; Ingviya, T.; Sangsupawanich, P. Multi-Label Classification of Symptom Terms from Free-Text Bilingual Adverse Drug Reaction Reports Using Natural Language Processing. PLoS ONE 2022, 17, e0270595.

- Fan, Y.; Zhou, S.; Li, Y.; Zhang, R. Deep Learning Approaches for Extracting Adverse Events and Indications of Dietary Supplements from Clinical Text. J. Am. Med. Inform. Assoc. 2021, 28, 569–577.

- Hu, Y.; Chen, Q.; Du, J.; Peng, X.; Keloth, V.K.; Zuo, X.; Zhou, Y.; Li, Z.; Jiang, X.; Lu, Z.; et al. Improving Large Language Models for Clinical Named Entity Recognition via Prompt Engineering. J. Am. Med. Inform. Assoc. 2024, 31, 1812–1820.

- Koon, Y.L.; Lam, Y.T.; Tan, H.X.; Teo, D.H.C.; Neo, J.W.; Yap, A.J.Y.; Ang, P.S.; Loke, C.P.W.; Tham, M.Y.; Tan, S.H.; et al. Effectiveness of Transformer-Based Large Language Models in Identifying Adverse Drug Reaction Relations from Unstructured Discharge Summaries in Singapore. Drug Saf. 2025, 48, 667–677.

- Li, F.; Jin, Y.; Liu, W.; Rawat, B.P.S.; Cai, P.; Yu, H. Fine-Tuning Bidirectional Encoder Representations from Transformers (BERT)-Based Models on Large-Scale Electronic Health Record Notes: An Empirical Study. JMIR Med. Inform. 2019, 7, e14830.

- Mahendran, D.; McInnes, B.T. Extracting Adverse Drug Events from Clinical Notes. AMIA Jt. Summits Transl. Sci. Proc. 2021, 2021, 420–429.

- McMaster, C.; Chan, J.; Liew, D.F.L.; Su, E.; Frauman, A.G.; Chapman, W.W.; Pires, D.E.V. Developing a Deep Learning Natural Language Processing Algorithm for Automated Reporting of Adverse Drug Reactions. J. Biomed. Inform. 2023, 137, 104265.

- Silverman, A.L.; Sushil, M.; Bhasuran, B.; Ludwig, D.; Buchanan, J.; Racz, R.; Parakala, M.; El-Kamary, S.; Ahima, O.; Belov, A.; et al. Algorithmic Identification of Treatment-Emergent Adverse Events from Clinical Notes Using Large Language Models: A Pilot Study in Inflammatory Bowel Disease. Clin. Pharmacol. Ther. 2024, 115, 1391–1399.

- Guan, H.; Devarakonda, M. Leveraging Contextual Information in Extracting Long-Distance Relations from Clinical Notes. AMIA Annu. Symp. Proc. 2020, 2019, 1051–1060.

- Mitra, A.; Rawat, B.P.S.; McManus, D.; Kapoor, A.; Yu, H. Bleeding Entity Recognition in Electronic Health Records: A Comprehensive Analysis of End-to-End Systems. AMIA Annu. Symp. Proc. 2021, 2020, 860–869.

- Rawat, B.P.S.; Jagannatha, A.; Liu, F.; Yu, H. Inferring ADR Causality by Predicting the Naranjo Score from Clinical Notes. AMIA Annu. Symp. Proc. 2021, 2020, 1041–1049.

- Stanekova, V.; Inglis, J.M.; Lam, L.; Lam, A.; Smith, W.; Shakib, S.; Bacchi, S. Improving the Performance of Machine Learning Penicillin Adverse Drug Reaction Classification with Synthetic Data and Transfer Learning. Intern. Med. J. 2024, 54, 1183–1189.

- Wu, H.; Ji, J.; Tian, H.; Chen, Y.; Ge, W.; Zhang, H.; Yu, F.; Zou, J.; Nakamura, M.; Liao, J. Chinese-Named Entity Recognition from Adverse Drug Event Records: Radical Embedding-Combined Dynamic Embedding-Based BERT in a Bi-Directional Long Short-Term Conditional Random Field Model. JMIR Med. Inform. 2021, 9, e26407.

- Wu, J.; Ruan, X.; McNeer, E.; Rossow, K.M.; Choi, L. Developing a Natural Language Processing System Using Transformer-Based Models for Adverse Drug Event Detection in Electronic Health Records. medRxiv 2024.

- Jeong, E.; Su, Y.; Li, L.; Chen, Y. Discovering Severe Adverse Reactions from Pharmacokinetic Drug–Drug Interactions through Literature Analysis and Electronic Health Record Verification. Clin. Pharmacol. Ther. 2025, 117, 1078–1087.

- Ma, J.; Chen, H.; Sun, J.; Huang, J.; He, G.; Yang, G. Efficient Analysis of Drug Interactions in Liver Injury: A Retrospective Study Leveraging Natural Language Processing and Machine Learning. BMC Med. Res. Methodol. 2024, 24, 312.

- Kumar, V.; Rasouliyan, L.; Wang, Y.; Aggarwal, P.; Long, S. Reasons for Discontinuation of Ozempic Using a Natural Language Processing Pipeline on Unstructured Clinical Notes. Value Health 2024, 27, S441.

- Wang, Y.; Rasouliyan, L.; Kumar, V. Reasons for Biologic Treatment Alteration in Inflammatory Bowel Disease: Insights from Unstructured Clinical Notes Derived from Large Language Models. Value Health 2024, 27, S592.

- Washington, N.; Telis, N.; Judge, D.; Pawloski, P.; Grzymski, J.; Chahal, A.; Hajek, C.; Kruisselbrink, T.; Cirulli, E. Retrospective Study Shows Pharmacogenomic Testing Could Reduce Adverse Events Associated with Clopidogrel Use by 38%. Circulation 2024, 150 (Suppl. 1), 4147064.

- Gallifant, J.; Celi, L.A.; Sharon, E.; Bitterman, D.S. Navigating the Complexities of Artificial Intelligence-Enabled Real-World Data Collection for Oncology Pharmacovigilance. JCO Clin. Cancer Inform. 2024, 8, e2400051.

- Lisi, D.M. AI for Detecting and Preventing Adverse Drug Events. Hosp. Pharm. 2025, 50, 32–37.

- Matheny, M.E.; Yang, J.; Smith, J.C.; Walsh, C.G.; Al-Garadi, M.A.; Davis, S.E.; Marsolo, K.A.; Fabbri, D.; Reeves, R.R.; Johnson, K.B.; et al. Enhancing Postmarketing Surveillance of Medical Products with Large Language Models. JAMA Netw. Open 2024, 7, e2428276.

- Zitu, M.M.; Li, L.; Elsaid, M.I.; Gatti-Mays, M.E.; Manne, A.; Shendre, A. Comparative Assessment of Manual Chart Review and Patient-Level Adverse Drug Event Identification Using Artificial Intelligence in Evaluating Immunotherapy-Related Adverse Events (irAEs). J. Clin. Oncol. 2023, 41, e13583.

- Nashed, A.; Zhang, S.; Chiang, C.W.; Zitu, M.; Otterson, G.A.; Presley, C.J.; Li, L. Comparative Assessment of Manual Chart Review and ICD Claims Data in Evaluating Immunotherapy-Related Adverse Events. Cancer Immunol. Immunother. 2021, 70, 2761–2769.

| Term | Definition |
|---|---|
| Accuracy | The proportion of correctly predicted instances among all instances evaluated (overall correctness). |
| ACH | Adrenal insufficiency, colitis, hypophysitis; shorthand for three common immune-related adverse events involving the adrenal glands, the colon, and the pituitary gland (seen in immunotherapy). |
| ADE | Adverse Drug Event: any harmful or unintended event caused by the use of a medication. |
| AI | Artificial Intelligence: the simulation of human cognitive processes by machines or computer systems. |
| AllergyRoBERTa | A domain-adapted RoBERTa-based model designed for classifying allergy-related information in EHRs (electronic health records). |
| AUROC | Area Under the Receiver Operating Characteristic (ROC) Curve: a metric for evaluating how well a classifier separates classes (also known as AUC). |
| BERT | Bidirectional Encoder Representations from Transformers: a transformer-based language model pretrained on large text corpora, used as a foundation for many NLP tasks. |
| BioBERT | A biomedical domain-adapted version of BERT, pretrained on biomedical literature (PubMed and PMC texts) for biomedical NLP tasks. |
| ChatGPT | OpenAI’s conversational LLM (part of the GPT series) used in zero-shot and few-shot clinical NLP tasks. |
| ClinicalBERT | A BERT model fine-tuned on MIMIC clinical notes for healthcare-specific language understanding. |
| ClinicalLongformer | An adaptation of the Longformer model for handling long clinical documents in ADE extraction tasks (utilizes sparse attention for long contexts). |
| DDI | Drug–drug interaction: an interaction in which one drug affects the activity or effects of another drug, potentially leading to ADEs. |
| DeBERTa | Decoding-enhanced BERT with Disentangled Attention: an advanced transformer model (BERT variant) with improved attention mechanisms for NLP tasks. |
| EHR | Electronic Health Record: a digital version of a patient’s medical chart or history, including diagnoses, medications, and clinical notes. |
| Entity extraction | The process of identifying and extracting named entities (e.g., medications, symptoms) from unstructured clinical text. |
| F1 Macro (Macro-F1) | The F1-score averaged across all classes, giving equal weight to each class regardless of its frequency (macro-averaged F1). |
| F1 Micro (Micro-F1) | The F1-score calculated globally across all instances by pooling predictions from all classes (micro-averaged F1). |
| F1 score | The harmonic mean of precision and recall: a single metric that balances both for classification performance. |
| Few-shot prompting | A technique in which a few worked examples are included in the prompt to demonstrate a task, enabling the LLM to perform the task without additional training. |
| Fine-tuning | The process of training a pretrained model on a specific new dataset to specialize it for a particular task. |
| Gemini Pro | Google’s transformer-based large language model, tested for performance on clinical NLP tasks. |
| GPT-4 | The fourth-generation model in OpenAI’s Generative Pretrained Transformer series: a state-of-the-art LLM used for complex language tasks, including medical NLP. |
| HIPAA | Health Insurance Portability and Accountability Act: a U.S. law that mandates the protection and confidential handling of patient health information. |
| ICD | International Classification of Diseases: a standardized system of codes for diagnoses and health conditions, used worldwide in healthcare documentation. |
| ICI | Immune Checkpoint Inhibitor: a type of cancer immunotherapy drug (e.g., anti-PD-1 or anti-CTLA-4 antibodies) that unleashes the immune system by blocking inhibitory receptors; often associated with immune-related adverse events. |
| irAE | Immune-related Adverse Event: an adverse effect of immunotherapy (such as checkpoint inhibitors) where the immune system attacks normal organs (e.g., colitis or pneumonitis). |
| LLM | Large Language Model: an AI model trained on massive text datasets capable of understanding, generating, and summarizing human-like language. |
| LLaMA | Large Language Model Meta AI: an open-source family of LLMs developed by Meta (Facebook). |
| Longformer | A transformer model variant optimized for long documents via sparse attention, allowing it to efficiently process long clinical texts. |
| MADE | Medication and Adverse Drug Events: a benchmark dataset for evaluating models on ADE extraction tasks. |
| MEPS | Myocarditis, encephalitis, pneumonitis, and severe cutaneous adverse reactions: an acronym grouping several serious immune-related adverse events often observed during immunotherapy. |
| MeDeBERTa | A medical-domain variant of DeBERTa fine-tuned for adverse drug event (ADE) extraction tasks. |
| MedNERCRJA | A BERT-based Japanese-language medical named entity recognition model (tailored for Japanese clinical text). |
| MedNERN | Medical Named Entity Recognition: the task of identifying and classifying medical terms (like drugs, diseases, symptoms) in text. |
| NER (Named Entity Recognition) | An NLP task of detecting and labeling spans of text that constitute named entities (such as medication names or disease names). |
| NLP | Natural Language Processing: a field of AI focused on enabling computers to understand and manipulate human language text and speech. |
| PGx | Pharmacogenomics: the study of how an individual’s genetic makeup affects their response to drugs. |
| Pharmacovigilance | The monitoring, detection, assessment, and prevention of adverse effects or any drug-related problems, especially after a drug has been released to the market. |
| Precision | The proportion of retrieved instances that are relevant; in classification, the fraction of positive predictions that are actually correct (true positives divided by total predicted positives). |
| RAG | Retrieval-Augmented Generation: an approach where an LLM incorporates information retrieved from external sources into its generated responses. |
| Recall | The proportion of actual positive instances that are correctly identified; also known as sensitivity or true positive rate (true positives divided by total actual positives). |
| Relation extraction | The task of identifying and classifying relationships between entities in text (for example, determining if a drug is associated with a specific adverse event). |
| RoBERTa | Robustly Optimized BERT Pretraining Approach: a transformer model that improves on BERT’s pretraining methodology, leading to better performance on many NLP tasks. |
| SADR | Severe adverse drug reaction: a particularly harmful or serious adverse drug reaction; often used in the context of signals or reports indicating serious drug-related harms. |
| SciBERT | A BERT-based model trained on a large corpus of scientific publications (Semantic Scholar) to better handle scientific terminology. |
| SciFive | A biomedical domain adaptation of the T5 transformer model, pretrained and fine-tuned for various scientific and biomedical NLP tasks. |
| SE-K | A lightweight model pipeline for surgical note data extraction, which achieves high efficiency and performance (AUROC ~0.94) on registry information extraction tasks. |
| Sensitivity | True positive rate: the proportion of actual positives correctly identified by a model (recall). |
| Specificity | True negative rate: the proportion of actual negatives correctly identified by a model. |
| Transformer | A deep learning model architecture based on self-attention mechanisms, foundational to many modern language models (e.g., BERT, GPT). |
| Zero-shot learning | A scenario where a model is applied to perform a task without any task-specific examples or training for that task. |
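
The metric definitions in the glossary above (precision, recall, F1, micro- and macro-averaged F1, specificity) can be made concrete with a short calculation. The following is a minimal sketch in plain Python; the labels and predictions are invented for illustration and do not come from any reviewed study, and the helper names (`confusion_counts`, `precision_recall_f1`, `macro_f1`, `micro_f1`) are hypothetical.

```python
def confusion_counts(y_true, y_pred, label):
    """Return (tp, fp, fn, tn), treating `label` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    return tp, fp, fn, tn


def safe_div(num, den):
    """Divide, returning 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F1)."""
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return precision, recall, f1


def macro_f1(y_true, y_pred, labels):
    """Average the per-class F1 scores, weighting every class equally."""
    scores = []
    for label in labels:
        tp, fp, fn, _ = confusion_counts(y_true, y_pred, label)
        scores.append(precision_recall_f1(tp, fp, fn)[2])
    return sum(scores) / len(scores)


def micro_f1(y_true, y_pred, labels):
    """Pool tp/fp/fn over all classes, then compute a single F1."""
    tp = fp = fn = 0
    for label in labels:
        c_tp, c_fp, c_fn, _ = confusion_counts(y_true, y_pred, label)
        tp, fp, fn = tp + c_tp, fp + c_fp, fn + c_fn
    return precision_recall_f1(tp, fp, fn)[2]


# Invented note-level labels: one rare class (pneumonitis) is missed entirely.
labels = ["colitis", "pneumonitis", "none"]
y_true = ["colitis"] * 4 + ["pneumonitis"] + ["none"] * 5
y_pred = ["colitis"] * 3 + ["none"] * 6 + ["colitis"]

tp, fp, fn, tn = confusion_counts(y_true, y_pred, "colitis")
specificity = safe_div(tn, tn + fp)  # true negative rate for colitis

print(f"micro-F1 = {micro_f1(y_true, y_pred, labels):.3f}")
print(f"macro-F1 = {macro_f1(y_true, y_pred, labels):.3f}")
print(f"colitis specificity = {specificity:.3f}")
```

In this toy example the missed rare class pulls macro-F1 well below micro-F1, which is why the averaging scheme matters when comparing the Micro-F1 and Macro-F1 values reported for different studies. For single-label multiclass data such as this, micro-F1 coincides with accuracy.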

| Ref | Study Objective | LLM Approach | Model Performance | Clinical Impact/Advancement |
|---|---|---|---|---|
| [16] | Evaluate the capabilities of four advanced LLMs in medication review and reconciliation. | • ChatGPT-3.5 • Gemini Pro • Claude-Instant • LLaMA-2-13B | • Comparative accuracy; Claude/Gemini generally higher • Frequent DDI/genomics errors across models | • Potential to assist clinical pharmacists • Patient safety may be at risk without expert oversight |
| [17] | Present the Clinical nSights platform for real-world oncology analysis using deidentified and harmonized EHR data. | • BERT augmented curation • nCognito de-identification • Graph/distribution harmonization | • Effective phenotype extraction (e.g., tumor markers, irAEs) • Supports longitudinal data analysis | • Rapid cohort building and longitudinal dashboards • Enables real-world evidence generation |

| Ref | Study Objective | Clinical Data Source and Size | LLM Approach | Metric and Performance | Clinical Impact/Advancements |
|---|---|---|---|---|---|
| [18] | Detect ICI-induced irAEs (MEPS) | Mayo Clinic EHR notes, 9290 patients | • SciBERT fine-tuned (drug-to-phenotype) | Micro-F1 = 0.84 for patient-level MEPS identification | • Detected toxicities missed by ICD • Supports real-world safety |
| [19] | Detect ICI-induced irAEs | VUMC (100 pts/26,432 notes), UCSF (70 pts/487 notes), Roche (272 pts/272 notes) | • GPT-4o zero-shot (irAE-GPT) | Micro-F1: 0.66 (UCSF), 0.62 (Roche), 0.56 (VUMC) at patient level | • Reduces manual review • Strengthens cross-site safety monitoring |
| [20] | Detect CAR-T-related AEs | UCSF deidentified clinical data warehouse: 4183 notes/253 patients | • GPT-4 zero-shot (Azure API) | Accuracy = 64.0% (10% sample) at note level | • Automates extraction • Reduces chart review burden for complex CAR-T AEs |
| [21] | Detect ICI-induced irAEs (adrenal insufficiency, colitis, hypophysitis; ACH) | Mayo Clinic EHR unstructured notes, ~9000 patients | • SciBERT fine-tuned (Augmented Curation) | Micro-F1 = 0.85 at the patient level | • Manual review time reduced from ~9 weeks to ~10 min • Accelerates safety assessments |
| [22] | Manage ICI-induced IR colitis | Southern Denmark melanoma CPI cohort, 85 patients | • AI language model | BIOx required in 45% of cases | • Reduces prolonged GC exposure • Expedites BIOx initiation |
| [23] | Detect ICI-induced myocarditis | Progress notes for 1874 ICI admissions (22 myocarditis cases) | • Mistral-7B OpenOrca with RAG (zero-shot) | Sensitivity = 95.5%; Specificity = 95.4% at patient level | • Accurate one-day screening that enables early intervention |
| [24] | Detect ICI-induced irAEs | MGH EHR, progress/discharge notes, 7555 admissions (3521 patients) | • Mistral OpenOrca with RAG | Sensitivity = 94.7%; Specificity = 93.7% at patient level | • Chart-review time cut from 15 min to 9.5 s |
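
Sensitivity and specificity alone do not say how many flagged patients are true cases when the event is rare. The sketch below converts the two into precision (positive predictive value) and negative predictive value for a given prevalence. It plugs in the figures listed for [23] and assumes, without confirmation from the table, that the 22 myocarditis cases among 1874 admissions represent the prevalence in the evaluated set; the function name `predictive_values` is hypothetical.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (ppv, npv) from sensitivity, specificity, and prevalence.

    All quantities are expressed as fractions of the screened population.
    """
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    tn = specificity * (1 - prevalence)
    fp = (1 - specificity) * (1 - prevalence)
    ppv = tp / (tp + fp)  # precision: flagged patients who are true cases
    npv = tn / (tn + fn)  # unflagged patients who are truly negative
    return ppv, npv


# Assumption: prevalence taken as 22 cases / 1874 admissions from row [23].
prevalence = 22 / 1874
ppv, npv = predictive_values(0.955, 0.954, prevalence)

print(f"prevalence = {prevalence:.4f}")
print(f"PPV = {ppv:.3f}, NPV = {npv:.4f}")
```

Under these assumptions roughly one in five flagged admissions would be a true case, while nearly all unflagged admissions would be true negatives. That pattern is typical of a screening step whose positives go on to clinician review rather than a standalone diagnostic.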

| Ref | Study Objective | Clinical Data Source and Size | LLM Approach | Metric and Performance | Clinical Impact/Advancements |
|---|---|---|---|---|---|
| [25] | Detect esophagitis presence and severity | Brigham/Dana-Farber EHR. Gold: 1524 notes/124 pts; Silver: 2420 notes/1832 pts; Validation: 345 notes/75 pts | • PubMedBERT fine-tuned (gold + silver) | Macro-F1: 0.92 (any vs. none), 0.82 (grade 2+ vs. ≤1), 0.74 (none vs. grade 1 vs. grade 2–3) | • Detected esophagitis in 100% of test cases vs. 33% by ICD-10 • Enabled enhanced AE detection |
| [26] | Detect chemotherapy-induced ADEs in breast cancer | OICI breast cancer notes: 1928 notes/434 pts | • Japanese BERT fine-tuned; domain adaptation (800 docs) | Micro-F1 = 0.84 on OICI test set | • Uncovered previously unreported ADEs • Improved safety surveillance |
| [27] | Signal detection of chemotherapy-associated AEs | Univ. of Tokyo EMRs: 44,502 pts; 175,624 DPC records; 4.86 M progress, 3.61 M nursing, 122 K discharge notes; median follow-up 1874 days | • MedNERN (BERT fine-tuned on Japanese clinical text) | HRs: 1.63 (phlebitis), 3.85 (oral mucositis), 4.71 (thrombocytopenia), 3.34 (alopecia), all p < 0.001 | • Reflects known AE frequencies • Enables automated postmarketing surveillance |
| [28] | Detect taxane-induced peripheral neuropathy | Kyoto Univ. Hospital EHR: 215 pts; 159,736 physician, 40,385 nursing, 23,073 discharge, 5663 radiology, 1147 radioisotope, 29,148 pharmacist notes | • MedNERCRJA BERT NER + Levenshtein normalization | Detection rate = 60.7% at 30 days (manual = 74.0%); Pearson r = 0.87 | • Near-real-time symptom tracking • Reduces monitoring costs |
| [29] | Detect chemotherapy-induced AEs in outpatient settings | 1008 annotated assessment notes; applied to 2193 ARAT-related clinical notes | • MedNERCRJA (BERT-based NER) | Macro-F1 = 0.72 (exact match) on assessment notes | • Supports pharmacist-led outpatient AE monitoring |
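
The entry for [28] lists "Levenshtein normalization" without further detail. As a rough illustration of what such a step could look like, the sketch below maps an extracted symptom string to the closest entry in a small vocabulary by edit distance. It is not the method of the cited study: the English terms, the vocabulary, the `max_distance` threshold, and the function names are all assumptions chosen for readability (the study itself works on Japanese clinical text).

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance between a and b (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def normalize(term, vocabulary, max_distance=2):
    """Return the closest vocabulary entry, or None if none is close enough."""
    key = term.lower()
    best = min(vocabulary, key=lambda v: levenshtein(key, v.lower()))
    return best if levenshtein(key, best.lower()) <= max_distance else None


# Hypothetical vocabulary of normalized symptom terms.
VOCAB = ["numbness", "tingling", "pain"]

print(normalize("numbnes", VOCAB))   # misspelled mention of a known term
print(normalize("headache", VOCAB))  # no vocabulary entry within threshold
```

The threshold trades recall for precision: a larger `max_distance` catches more spelling variants but risks mapping unrelated mentions onto vocabulary terms.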

| Ref | Study Objective | Clinical Data Source and Size | LLM Approach | Metric and Performance | Clinical Impact/Advancements |
|---|---|---|---|---|---|
| [30] | Benchmark multi-domain ADE extraction | n2c2: 505/66,810; MADE: 1089/61,563; PHEE: 4827/4844; PsyTAR: 3147/3169; CADEC: 1250/7593; CADECv2: 3548/22,389 | • GPT-4 in-context (5-shot) | Micro-F1 on n2c2: 92.2% (drug), 51.8% (ADE); on MADE: 91.8% (drug), 68.7% (ADE) | • Enables unified active ADE surveillance across diverse text types |
| [31] | Extract surgical registry variables from EHR | Stanford Health EHR: 2137 patients/5432 notes | • Majority-vote ensemble: SE-K, SE-E, fine-tuned BioClinicalBERT | AUROC = 0.94 ± 0.04 on 2021 preoperative notes | • Accelerates registry creation • Enables faster surgical outcomes research |
| [32] | Detect ICI-related ADEs across institutions | ICIOSU: 47 pts/1394 notes; n2c2: 505 notes | • ClinicalBERT fine-tuned (cross-dataset) | Micro-F1 = 0.78 (ICI → n2c2); F1 = 0.74 (n2c2 → ICI) | • Enables generalizable ADE detection across health systems |

| Ref | Study Objective | Clinical Data Source and Size | LLM Approach | Metric and Performance | Clinical Impact/Advancements |
|---|---|---|---|---|---|
| [33] | Classify allergy-related symptoms | Songklanagarind Hospital EHR: 79,912 pharmacist-reviewed records | • Ensemble (NBSVM, ULMFiT, XLM-RoBERTa, AllergyRoBERTa) | Exact Match Ratio = 95.33%; Micro-F1 = 98.88% | • Accelerates allergy coding • Reduces pharmacist workload |
| [34] | Extract dietary supplement AEs and indications | UMN CDR: 7000 sentences (NER); 3000 sentences (RE) | • BERT large cased + CRF (NER) • attention-based BiLSTM (RE) | NER Micro-F1 = 0.86; RE Micro-F1 = 0.89 | • Enables dietary supplement safety surveillance using clinical notes |
| [35] | Extract neuro-AEs, problems, treatments, tests | MTSamples: 163 summaries; VAERS: 91 reports | • GPT-4 with task-specific prompt framework + 5-shot | Relaxed Micro-F1 = 0.861 (MTSamples), 0.736 (VAERS) | • Reduces annotation effort • Enables rapid ADE extraction |
| [36] | Identify causally related drug–AE pairs | Annotated 5088 segments; Singapore discharge: 1647; MIMIC-III: 4418 | • BioMELECTRA-Large fine-tuned + BiLSTM | Micro-F1 = 0.74 (vs. 0.64 traditional) | • Automates ADE causality identification • Reduces manual review burden |
| [37] | Normalize medications and ADEs to vocabularies | 1.5M EHR notes; MADE: 1089 notes; NCBI: 793 abstracts; CDR: 1500 abstracts | • EhrBERT1M (BioBERT fine-tuned on 1M EHRs) | Micro-F1 = 68.82% (MADE); Macro-F1 = 90.35% (NCBI), 93.82% (CDR) | • Enables large-scale pharmacovigilance and concept normalization |
| [38] | Extract ADE relations | n2c2-2018: 505 discharge summaries (MIMIC-III) | • BERT fine-tuned | Precision = 0.93; Recall = 0.96; Micro-F1 = 0.94 | • Enables real-time ADE surveillance • Reduces clinical risk |
| [39] | Detect ADR mentions | Austin Health EMR: 861 discharge summaries (311 ADRs) | • MeDeBERTa (DeBERTa pretrained on 1.1M docs, fine-tuned NER) | ROC-AUC = 0.955 (95% CI: 0.933–0.978) | • Bypasses ICD-10 coding • Increases ADR reporting efficiency |
| [40] | Detect serious AEs from IBD immunosuppressants | UCSF EHR: 928 pts/928 notes (703 SAEs) | • HUCSF-BERT fine-tuned hierarchical model | Macro-F1 = 0.68 | • Enables automated drug safety surveillance |
| [41] | Extract ADE and reason relations | MADE: 21 pts/1092 notes; n2c2 (MIMIC-III): 505 summaries | • BERT fine-tuned + edge sampling | Micro-F1 improvement: +6.4% (MADE), +6.7% (n2c2) | • Improves ADE surveillance accuracy • Enhances postmarketing safety |
| [42] | Extract bleeding-related entities | Northwestern U discharge summaries: 1079 notes | • BERT variants, LM-LSTM-CRF joint LM | Token-level Macro-F1 = 0.75; Sentence-level = 0.84; Note-level = 0.96 | • Supports anticoagulant prescribing by automating bleeding event extraction |
| [43] | Predict bleeding ADR causality (Naranjo) | UMass EHR: 991 pts/1385 discharge summaries | • ClinicalBERT + logistic regression | Macro-F1 = 0.50; Weighted F1 = 0.63 | • Reduces manual chart review in causality assessment |
| [44] | Classify penicillin ADR risk labels | EHR: 12,232 pts; Synthetic: 69 labels; Test: 1000 cases | • BERT with transfer learning | AUC = 0.995 (high vs. low risk); 0.984 (allergy vs. intolerance) | • Enables automation in penicillin allergy de-labeling workflows |
| [45] | Identify ADR-related entities | 24,890 ADR reports (147,451 annotated entities) | • BBCRadical (BERT + BiLSTM-CRF with radical embeddings) | Micro-F1 = 96.2%; Precision = 96.4%; Recall = 96.0% | • Enhances ADR report quality and postmarketing evaluation |
| [46] | Detect ADEs using scalable model | n2c2: 505 notes; VUMC: 112 pts/1541 notes | • ClinicalLongformer (split-based 10-chunk) | Macro-F1 = 0.868 (n2c2); F1 = 0.786 (VUMC) | • Enables scalable ADE surveillance from real-world notes |
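
The studies tabulated above report a mix of micro-averaged and macro-averaged F1 scores, which are not directly comparable. Micro-F1 pools every decision and is dominated by frequent classes, whereas macro-F1 averages per-class scores and therefore weights rare ADE classes as heavily as common ones. The following is a minimal Python sketch of both averages for single-label classification; the function names and the toy labels are illustrative assumptions and are not taken from any of the reviewed studies.

```python
from collections import Counter


def f1_from_counts(tp, fp, fn):
    """F1 from true-positive, false-positive, and false-negative counts."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(gold, pred):
    """Return (micro_f1, macro_f1) for two equal-length label sequences."""
    labels = sorted(set(gold) | set(pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[g] += 1  # true class gains a false negative
    # Macro: unweighted mean of per-class F1 scores.
    macro = sum(f1_from_counts(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    # Micro: F1 computed once over the pooled counts.
    micro = f1_from_counts(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return micro, macro


# Hypothetical toy data: 8 notes without an ADE, 2 with an ADE, one ADE missed.
gold = ["none"] * 8 + ["ade", "ade"]
pred = ["none"] * 8 + ["none", "ade"]
micro, macro = micro_macro_f1(gold, pred)
print(f"micro-F1 = {micro:.3f}, macro-F1 = {macro:.3f}")
```

On this toy example the micro-F1 is 0.900 while the macro-F1 is about 0.804, because the single missed case falls in the rare ADE class. The same effect may explain part of the gap between high micro-F1 and lower macro-F1 values across studies, although differences in task and dataset also contribute.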

| Ref | Study Objective | Clinical Data Source and Size | LLM Approach | Metric and Performance | Clinical Impact/Advancements |
|---|---|---|---|---|---|
| [47] | Identify DDI–severe adverse drug reactions (SADRs) signals | PubMed: 160,321 abstracts (111 DDI–SADR signals); Validation: VUMC (3.18M pts), All of Us (239K pts) | • SciFive PubMed Large fine-tuned | Validated 17 true interactions; 9 novel signals not in DrugBank | • Helps clinicians avoid prescribing PK DDI combinations with unrecognized SADRs |
| [48] | Identify DDIs increasing isoniazid hepatotoxicity risk | Changsha EMR: 3209 isoniazid pts/128 injury cases; 25,130 ADR records | • Chinese BERT + logistic regression | 20 signal drugs identified; strongest OR = 2.69 for cefepime | • Enhances pharmacovigilance by identifying DDI signals for isoniazid safety monitoring |
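
The signal strength for [48] is reported as an odds ratio (OR). As a reminder of what that statistic measures, the sketch below computes an unadjusted OR with a Wald 95% confidence interval from a 2×2 exposure-by-outcome table. The counts are hypothetical and are not the study's data; the study itself used logistic regression, so its reported OR may be adjusted for covariates and would not necessarily match a crude calculation like this one.

```python
import math


def odds_ratio(a, b, c, d, z=1.96):
    """Unadjusted odds ratio with a Wald confidence interval.

    a: exposed with event      b: exposed without event
    c: unexposed with event    d: unexposed without event
    All four cells must be non-zero for this simple form.
    """
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of log(OR)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# Hypothetical counts for illustration only.
or_, lower, upper = odds_ratio(a=10, b=90, c=20, d=480)
print(f"OR = {or_:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

An interval that excludes 1 suggests an association between co-medication and the adverse outcome, but a crude OR alone does not establish a causal drug–drug interaction.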

| Ref | Study Objective | Clinical Data Source and Size | LLM Approach | Metric and Performance | Clinical Impact/Advancements |
|---|---|---|---|---|---|
| [49] | Extract semaglutide discontinuation reasons | OMNY Health EHR: 90,955 patients/1.22 M sentences | • Transformer QA model fine-tuned + classification model | Accuracy = 91.4% for reason classification | • Automates discontinuation reason extraction • Enhances pharmacovigilance |
| [50] | Extract biologic discontinuation/switching reasons (IBD) | OMNY Health EHRs (4 systems, 2017–2023), 10.6 M notes | • RoBERTa QA → Gemini Pro 1.5 fine-tuned | Accuracy = 94.5% for 7 discontinuation reasons | • Unlocks unstructured EHR insights to inform IBD biologic therapy optimization |
| [51] | Extract PGx-guided clopidogrel dosing insights | 100K patients with paired EHR and exome data | • GPT-4 prompt-based dosage extraction | Pharmacogenomic testing could cut ADEs by 38% | • Enables population-scale PGx decision support • Could prevent 1 thrombosis per ~30 patients |
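
The per-patient figure reported for [51] can be related to an absolute risk reduction (ARR) if it is read as a number needed to treat (NNT). That reading is an assumption made here for illustration; the table does not state it. The symbols $p_{\text{control}}$ and $p_{\text{intervention}}$ denote the event rates without and with the intervention:

$$
\mathrm{NNT} = \frac{1}{\mathrm{ARR}}, \qquad \mathrm{ARR} = p_{\text{control}} - p_{\text{intervention}}
$$

Under that assumption, an NNT of about 30 corresponds to $\mathrm{ARR} \approx 1/30 \approx 0.033$, or roughly 3.3 percentage points. The 38% figure in the same row is a relative reduction and cannot be converted to an NNT without the baseline event rate.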

| Ref | Main Theme | Opportunities Highlighted | Challenges Discussed | Policy/Practice Implication |
|---|---|---|---|---|
| [52] | AI-enabled real-world data for oncology pharmacovigilance | Use of NLP for adverse event detection from EHRs; Three Ps framework (Processing, Pipelines, Patient outcomes) | Data labeling issues, causality attribution challenges, data silos, fairness in AI | • Encourages better data sharing, robust labeling, standardization, and focus on health equity in pharmacovigilance design |
| [53] | AI in detecting and preventing adverse drug events | Applications of AI/ML and LLMs in ADE detection from EHRs, social media, and predictive drug modeling | Inaccuracy of LLMs, limited real-time validation, privacy issues, biases, and system integration challenges | • Pharmacists should lead AI evaluation, advocate for responsible AI use, and integrate AI knowledge into clinical practice |
| [54] | LLMs in FDA postmarketing surveillance via the Sentinel System | LLMs for signal detection, probabilistic phenotyping, enhancing narrative data use | Infrastructure needs, hallucinations, inference limitations, equity concerns | • Highlights the need for cautious and equitable LLM deployment in regulatory surveillance systems |

| Area | Visible Gains | Underlying Challenges |
|---|---|---|
| Speed and efficiency | • Large reductions in manual review time • Rapid screening enables earlier intervention | • Accuracy and safety still require clinician oversight |
| Scalability and text mining | • Cohort building and surveillance over millions of notes • Near-real-time dashboards for real-world data | • Cross-site/domain shift may reduce external performance • Data harmonization and provenance tracking are required |
| Decision support | • PGx-guided and treatment-modification insights • Structured hand-offs can streamline clinician workflow | • Hallucination/mis-advice risk without guard-rails • High-risk interactions must be escalated, not auto-actioned |
| Generalizability | • Domain adaptation across hospitals and synthetic augmentation can boost performance | • Bias and under-representation across hospital sites may affect performance |
| Interpretability | • Explaining why a case was flagged builds trust, helps clinicians spot mistakes quickly, and speeds action | • Explanations must stay faithful and brief: only true drivers, backed by rule checks and calibrated confidence |
| Privacy and data sharing | • Federated training can enable multi-site learning | • Privacy constraints limit centralizing raw notes • Robust auditing is still required under federation |
| Infrastructure and equity | • Lightweight models enable CPU-friendly deployment | • GPU/compute demands and resource gaps may widen disparities |
| Regulatory and governance | • Sentinel-style surveillance and audit trails emerging | • Standards for hallucinations/false positives still unsettled • Prospective validation and change-control needed |

| Priority | Concrete Action | Rationale/Example Refs |
|---|---|---|
| Immediate | Release open benchmark datasets with site-level (held-out hospital) splits: train on one hospital and test on a different, unseen hospital to measure true cross-site generalizability | Improves cross-site generalizability; MADE, n2c2; Guan et al., 2020 [41] |
| | Capture clinician feedback via “accept/override” dashboards | Turns false alerts into training data; Sridharan et al. [16]; Koon et al. [36] |
| | Deploy lightweight CPU-friendly models (e.g., SE-K) | Enables adoption without GPUs; Tavabi et al. [31] |
| | Embed real-time bias audits (age, sex, race dashboards) | Reveals equity gaps early; Gallifant et al. [52] |
| Near-term | Federated or differential-privacy fine-tuning across health systems | Robustness without raw data sharing; early EHR pilots |
| | Add causal explanation layers (counterfactual reasoning) | From heat-maps to actionable justifications; Rawat et al. [43] |
| | Run prospective, multi-center impact studies with FDA-style audit trails | Regulatory-grade evidence; Matheny et al. [54] |
| | Extend to multimodal ADE detection (text + labs ± imaging) | Captures false negatives of text-only models; Staneková et al. [44] |
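
The first action in the table above, site-level held-out splits, can be sketched as a leave-one-site-out loop: each hospital in turn is withheld entirely from training and used only for testing. The following is a minimal sketch under stated assumptions; the record layout, the `site` key, and the hospital names are hypothetical and do not describe any specific benchmark.

```python
def leave_one_site_out(records, site_key="site"):
    """Yield (held_out_site, train, test) with no site shared between splits."""
    sites = sorted({r[site_key] for r in records})
    for held_out in sites:
        train = [r for r in records if r[site_key] != held_out]
        test = [r for r in records if r[site_key] == held_out]
        yield held_out, train, test


# Hypothetical de-identified notes tagged with their source hospital.
records = [
    {"site": "hospital_a", "text": "rash after amoxicillin", "ade": 1},
    {"site": "hospital_a", "text": "no adverse events noted", "ade": 0},
    {"site": "hospital_b", "text": "nausea on metformin", "ade": 1},
    {"site": "hospital_c", "text": "tolerating therapy well", "ade": 0},
]

for site, train, test in leave_one_site_out(records):
    print(f"held out {site}: {len(train)} train notes, {len(test)} test notes")
```

Splitting by site rather than by note prevents documentation style, templates, and patient mix from one hospital leaking into both training and test sets, which is what a random note-level split would allow.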

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zitu, M.M.; Owen, D.; Manne, A.; Wei, P.; Li, L. Large Language Models for Adverse Drug Events: A Clinical Perspective. J. Clin. Med. 2025, 14, 5490. https://doi.org/10.3390/jcm14155490
