Artificial intelligence methods have evolved sufficiently to be widely applied in automatic data analysis, providing standardized and reproducible results comparable to those of highly skilled specialists, while assisting less experienced personnel. This reduces repetitive work and lets clinicians focus on the choice of medical treatment and on the accurate assessment of patient cases. Convolutional neural networks, attention-based transformers, and traditional machine learning approaches using radiomics features are at the forefront of medical applications that require the automatic interpretation of images acquired with various techniques, such as computed tomography (CT), magnetic resonance imaging (MRI), X-ray radiography, and positron emission tomography (PET), to examine internal organs and tissues. Moreover, graph neural networks are also making strides in this field. Finally, rapid progress in large language models has led to the integration of speech recognition and text analysis technologies, further streamlining radiologists’ workflows [1].

This collection of research highlights the influence of artificial intelligence (AI) and machine learning (ML) on healthcare, demonstrating their capacity to improve diagnostics, treatment strategies, and patient care. The presented studies address critical healthcare challenges, offering solutions that enhance precision, productivity, and healthcare accessibility. The compilation includes works on diagnostic improvement, featuring models such as convolutional neural networks (CNNs) and transformers for the early and accurate identification of various conditions, including different types of cancer, stroke, intracranial hemorrhage, and acute aortic syndrome (AAS). AI’s integration into radiology is showcased, facilitating tasks like the automatic segmentation of anatomical structures, cancer prediction using radiomics, and image quality optimization, thereby enhancing workflow efficiency and consistency. The collection also presents AI applications in surgical planning and intraoperative guidance, as evidenced by studies evaluating preoperative imaging predictors and AI tools for detecting surgical wound infections. These advancements enable personalized medicine by customizing interventions based on individual patient characteristics. Innovative technologies such as microwave imaging for breast cancer detection and steady-state thermal imaging are featured, illustrating AI’s role in developing non-invasive diagnostic methods that offer safer and more accessible alternatives to conventional techniques. The compilation also includes insights into AI-driven tools designed to track disease progression in chronic conditions and monitor postoperative recovery. Novel AI methodologies addressing rare and complex diagnoses, such as early-stage osteosarcoma detection, bone mineral density screening in cystic fibrosis, and biomarker identification in leukemia, are presented. 
The collection incorporates articles on AI-powered tools for emergency settings, capable of detecting rib fractures, coronary occlusion, or chest abnormalities, ensuring effective patient management in critical scenarios. Studies focusing on tuberculosis and COVID-19 detection from radiographs emphasize AI’s potential in global health applications, reflecting the development of AI-powered tools aimed at democratizing healthcare. The compilation also addresses practical aspects of AI integration, such as interrater variability, reproducibility, and the need for standardized benchmarks. This comprehensive collection serves as a valuable resource for healthcare professionals, researchers, and technologists seeking to understand and leverage AI’s potential in medicine. By combining in-depth technical insights with practical clinical applications, these studies contribute to improving patient outcomes, streamlining workflows, and promoting a more equitable healthcare system.

Deep learning models can be valuable when a quick assessment of chest X-rays is needed to differentiate between acute aortic syndrome and thoracic aortic aneurysm and no expert personnel are available, as indicated in [2]. Employing a four-view network based on EfficientNet and incorporating an attention module could enhance breast cancer diagnosis from mammograms [3]. Implementing data augmentation techniques and developing novel network architectures may substantially improve the detection of tuberculosis and COVID-19 in chest radiographs [4]. Selecting the appropriate hyperparameters can optimize deep model performance, as shown in the case of brain tumor classification using MRI data [5].

Object localization within medical data can be accomplished through various methods. The most prevalent approach involves semantic segmentation using the U-Net network architecture, which assigns each pixel to a specific class. For example, when examining H&E (haematoxylin and eosin)-stained images, an accurate cell count across different classes is crucial for proper diagnosis, making precise segmentation, often achieved through the U-Net architecture, essential [6]. The U-Net model facilitates the identification of heart and lung anatomy in chest X-rays, necessary for cardiothoracic ratio calculation, as shown in [7]. It can also be employed for the precise segmentation of the appendix with distinct boundaries, critical for diagnosing acute appendicitis from CT scans [8]. The determination of temporomandibular joint placement in ultrasonography images is explored in [9]. U-Net networks enhanced with pyramid attention modules, applied to images preprocessed with stick filter derivatives, demonstrate 91–93% accuracy in lung fissure segmentation in CT images [10]. Despite its power, the U-Net architecture has limitations. In [11], researchers propose augmenting features derived from this model with a graph neural network to better capture the spatial relationships of features significant in COVID-19 diagnosis from CT scans. Moreover, repurposing existing datasets for different applications is challenging. In [12], researchers propose an algorithm to convert the original three-label annotation in the BraTS dataset to two-label annotations suitable for post-operative settings, which were then used to train the nnU-Net for brain segmentation in treatment response monitoring. The same network architecture also yielded very good outcomes, with an F1-score of 94%, in the automatic segmentation of the nasolacrimal canal in CBCT (cone beam computed tomography) imaging [13]. Ref. [14] introduces a novel semantic segmentation network for brain tumor annotation within the BraTS dataset, achieving 88–93% segmentation accuracy depending on the category. Choosing the training methodology can be complex, because the number of classes used may influence the outcomes, as demonstrated in research on osteosarcoma detection in plain radiographs, where using more classes for model training yielded better results [15]. An alternative approach to object detection employs variants of Mask R-CNN to determine approximate rectangular region boundaries. Enhancing mask detection with Mask R-CNN enables high-quality appendix segmentation in CT scans, as evidenced in [16]. Utilizing Faster R-CNN with the Feature Pyramid Network allows for rib fracture detection on chest radiographs with 89% accuracy [17].
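To illustrate how segmentation masks feed a downstream measurement such as the cardiothoracic ratio, the following minimal sketch divides the horizontal extent of a heart mask by the widest row of a lung mask. It is only an illustration under simplifying assumptions, not the pipeline used in [7]: the function names, the toy masks, and the choice of the lung field as a stand-in for the thoracic diameter are all hypothetical.

```python
def overall_width(mask):
    """Horizontal extent (in pixels) between the leftmost and rightmost
    foreground pixels of a binary mask, taken over all rows."""
    cols = [x for row in mask for x, value in enumerate(row) if value]
    return max(cols) - min(cols) + 1 if cols else 0


def widest_row_span(mask):
    """Largest left-to-right foreground span found in any single row."""
    best = 0
    for row in mask:
        cols = [x for x, value in enumerate(row) if value]
        if cols:
            best = max(best, cols[-1] - cols[0] + 1)
    return best


def cardiothoracic_ratio(heart_mask, lung_mask):
    """Cardiac width divided by thoracic width.

    Assumption: the thoracic diameter is approximated by the widest row
    of the combined lung mask."""
    thoracic = widest_row_span(lung_mask)
    if thoracic == 0:
        raise ValueError("lung mask is empty")
    return overall_width(heart_mask) / thoracic


# Toy masks (1 = structure present); real masks would come from a
# segmentation network applied to a chest radiograph.
heart = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
]
lungs = [
    [0, 1, 1, 0, 0, 1, 1, 0],
    [1, 1, 1, 0, 0, 1, 1, 1],
    [1, 1, 0, 0, 0, 0, 1, 1],
]
ctr = cardiothoracic_ratio(heart, lungs)
print(ctr)  # 0.5
```

In practice, pixel widths would also have to account for image orientation and pixel spacing, which this sketch ignores.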

Convolutional neural networks have numerous potential applications beyond those already mentioned. The detection of anomalies in optical coherence tomography (OCT) images using local region analysis has demonstrated 99% accuracy [18]. Denoising techniques are valuable for enhancing the quality of the medical data obtained from non-contrast chest and low-dose abdominal CT scans, as the image quality can vary depending on the equipment used [19]. Research presented in [20] indicates that employing denoising methods can particularly improve the output of older imaging devices. In the context of diffusion tensor imaging, deep learning denoising techniques reduce femorotibial fractional anisotropy regardless of voxel size, yet these approaches may help to address the challenge of lower signal-to-noise ratios [21]. A broad review of techniques for coronary artery calcium scoring on non-dedicated CT is given in [22]. The potential of CNNs in pediatric neuroimaging is presented in [23].

Recent advancements have shown that attention mechanisms and transformer networks are not only effective in automated text analysis, but also yield impressive results in image processing. For example, [24] introduced the Vertebrae-aware Vision Transformer to ensure precise and efficient spine segmentation from CT scans. A transformer-based model was employed for bone age estimation from radiographs, achieving a mean absolute error of 4.1 months [25], which surpasses the traditional machine learning methods outlined in [26]. The tedious task of manually analyzing H&E-stained WSI (whole slide images) can now be replaced by an attention-based network, enhancing accuracy and subsequently reducing the number of required surgeries [27]. The Efficient Spatial Channel Attention Network for breast cancer detection in histopathological images has demonstrated superior performance compared to conventional convolutional neural networks, attaining 94% accuracy [28]. Transformers used in combination with the inverse Fourier transform enhance the performance of the convolution layer in rosette trajectory magnetic resonance imaging [29].

Numerous organizations opt to enhance their medical data acquisition equipment with artificial intelligence-based analysis software. The research outlined in [30] indicates that an AI-powered ECG (electrocardiogram) biomarker could identify coronary occlusion in resuscitated patients, performing comparably to an expert consensus. The application of AI systems for detecting dental caries enables the establishment of a gold standard and minimizes discrepancies among dentists’ assessments [31]. The findings presented in [32] demonstrate that implementing an AI-based system for identifying limb fractures in radiographs decreased the overall disagreement between physicians. An AI-driven system for screening low bone mineral density in low-dose chest CT scans proves valuable [33], as does AI support in OCTA (optical coherence tomography angiography) analysis for diagnosing various medical conditions [34]. These AI models can also streamline effective dose calculations in PET/CT, eliminating variations between operators [35]. Simple mobile applications can also support physicians’ work. In [36], the RedScar mobile application for assessing the healing of post-operative scars is introduced. A newly proposed, highly sensitive imaging technique detected breast cancer better than MRI and infrared imaging [37]. The conclusions in [38] indicate that collaborative analysis and discussion lead to accurate results more quickly when humans are learning to use new equipment.

The effectiveness of radiomic features in describing image content has been demonstrated, enabling further data classification. Research in [39] indicates that when extracted from CT or MRI scans of lung or prostate tumors, these features are influenced by individual variations in contrast. This underscores the importance of normalizing data before applying such methods [40]. Conversely, radiomic information derived from the MRI T2W modality yields more standardized results, potentially enhancing PI-RADS (Prostate Imaging Reporting and Data System) scores in a broader context [41]. Texture analysis, specifically gray-level co-occurrence matrix parameters, has shown a strong correlation with the histopathological grades of head and neck squamous cell carcinoma, emerging as an age-independent marker according to the study presented in [42]. A comparison of several traditional classification methods based on radiomic features with convolutional neural networks, trained both with and without transfer learning, was conducted to reduce the false-positive rates in prostate cancer detection using MRI images. The findings reveal that the tumor location affects the performance of all approaches, though deep learning models appear to be less susceptible to this issue [43].
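As a brief illustration of the texture features mentioned above, the sketch below builds a symmetric, normalized gray-level co-occurrence matrix (GLCM) for one pixel offset and derives the contrast parameter from it. It is a minimal, standard-library example on a toy image whose gray levels are already quantized; the function names are hypothetical, and the cited studies may use different offsets, quantization, and feature sets.

```python
def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix.

    image  : 2-D list of integer gray levels in range(levels)
    dx, dy : pixel offset defining which neighbor pairs are counted
    """
    counts = [[0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                a, b = image[y][x], image[ny][nx]
                counts[a][b] += 1
                counts[b][a] += 1  # count the pair in both directions
    total = sum(sum(row) for row in counts)
    return [[c / total for c in row] for row in counts]


def glcm_contrast(p):
    """Contrast: co-occurrence probabilities weighted by squared level difference."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


# Toy 4x4 image with four gray levels (0..3).
toy_image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(toy_image, levels=4)
contrast = glcm_contrast(p)
print(round(contrast, 4))  # 0.5833
```

Because the matrix depends on the gray-level quantization, intensities are usually normalized before feature extraction, which is consistent with the normalization point raised in the paragraph above.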

A system for categorizing patients with idiopathic macular holes into those with favorable or unfavorable vision six months post vitrectomy can be developed using logistic regression, based on carefully chosen features from OCT data and medical records [44]. In another study, logistic regression and XGBoost were employed on features extracted via causal forest from functional MRI time series data, achieving 97% accuracy in distinguishing between healthy individuals and Parkinson’s disease patients [45]. A comparable approach demonstrated the effectiveness of the SAFE (scan and find early) microwave imaging device in detecting breast cancer in young women (83%) and those with dense breasts (91%) [46]. Various machine learning models were assessed to identify an accurate model for dose estimation in CT [47].

Statistical analyses reveal that the left atrial volume and function derived from four-chamber measurements are equivalent to biplane methods when appropriate bias correction factors are applied [48]. Preoperative CT findings correlate with the duration of laparoscopic appendectomy in pediatric cases, as demonstrated in [49]. Research has also shown that categorizing osteophytes into four distinct types based on CT scans impacts the planning of surgical interventions [50]. Statistical analysis of multi-color flow cytometry data using t-SNE (t-distributed stochastic neighbor embedding) enabled the identification of multiple clusters in leukemic cells, based on their antigen expression composition and intensity [51]. K-means analysis was applied to total kidney volume data from patients treated with tolvaptan and showed, among other findings, that high annual growth was associated with a responder phenotype [52].

Utilizing fully labeled data to train models enables the resolution of numerous challenges in automated medical data analysis. However, the process of creating annotations is time-consuming and costly, necessitating the exploration of alternative methods when labels are unavailable. In such cases, unsupervised algorithms prove valuable. For instance, research in [53] demonstrates that employing non-linear dimensionality reduction techniques, such as locally linear embedding and isometric feature maps, facilitates the identification of active lesions in MRI scans during the planning of multiple sclerosis diagnosis and treatment. A study in [54] introduces a k-means approach for detecting and quantifying immunohistochemical staining. This method, when applied to the tissue of interest with normalized color and a removed extraneous background, yields good outcomes.
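The idea of label-free clustering can be sketched with a small one-dimensional k-means that separates darker (stained) from lighter (unstained) pixel intensities and reports the stained fraction. This is only a toy illustration under stated assumptions: the intensity values, the deterministic initialization, and the function name are hypothetical, and it does not reproduce the color normalization or background removal described in [54].

```python
def kmeans_1d(values, k, iterations=20):
    """Plain k-means on scalar values (assumes k >= 2).

    Centers start evenly spaced between the minimum and maximum value,
    so the result is deterministic."""
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            clusters[nearest].append(v)
        # Recompute each center as the mean of its members;
        # keep the previous center if a cluster is empty.
        centers = [
            sum(members) / len(members) if members else centers[i]
            for i, members in enumerate(clusters)
        ]
    labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
    return centers, labels


# Toy gray-level intensities: low values stand for stained pixels.
intensities = [10, 12, 11, 200, 205, 198]
centers, labels = kmeans_1d(intensities, k=2)
stained_fraction = labels.count(0) / len(labels)
print(centers, labels, stained_fraction)  # [11.0, 201.0] [0, 0, 0, 1, 1, 1] 0.5
```

Real staining quantification would typically cluster color vectors rather than single intensities, but the assignment and update steps are the same.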

Transformer networks emerged as a solution to various challenges in automated text processing and comprehension. When trained on extensive datasets, these models demonstrate good capabilities in swift knowledge acquisition and, through generative approaches, can operate across different modalities. Consequently, their application as a supportive tool in medicine is a logical choice. For example, in medical imaging analysis for diagnosis and treatment planning, findings must be documented textually, forming the basis of communication. To streamline this process, an automatic speech recognition system can be employed, as shown in [55]. Advanced language models such as GPT-4 can automatically describe the imaging data content, serving as an initial step towards assisting radiologists in image description. However, using these models without proper preparation leads to high error rates, while employing suitable prompts can enhance results, as outlined in [56], as can appropriate tuning [57]. In [58], researchers compare the GPT-4o and Claude 3.5 Sonnet models for the automatic detection of acute ischemic stroke in diffusion-weighted imaging, revealing superior diagnostic abilities in the latter model, while still noting a high error rate that necessitates further investigation.

Table 1 summarizes the application of various AI approaches to medical data. Within the topic, three main areas related to artificial intelligence in medicine can be distinguished. The largest group of articles (24) concerns the implementation of systems for specific medical problems, mainly using deep learning, U-Net, or CNNs. Most of them study a proposed architecture for a single problem, but there are also four publications testing and comparing different architectures. Three of the publications concern histopathological images. A very frequently discussed issue in this group of publications is image segmentation applied to a wide class of organs and tissues. A summary of such papers is presented in Table 2.

Table 1. Summary of medical research using various AI techniques.

Table 2. Summary of topic papers focused on cell or organ segmentation (MAE: mean absolute error; IoU: intersection over union; F1: F1-score; DSC: Dice similarity coefficient; HD: Hausdorff distance; JAFROC: jackknife alternative free-response receiver operating characteristic).
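For readers less familiar with the overlap metrics abbreviated in the Table 2 caption, the following minimal sketch computes the Dice similarity coefficient and the intersection over union for two binary masks. The masks and the function name are hypothetical toy inputs; the individual papers may compute these metrics per class, per volume, or with different conventions for empty masks.

```python
def overlap_metrics(pred, truth):
    """Return (DSC, IoU) for two binary masks of identical shape.

    DSC = 2*TP / (2*TP + FP + FN), IoU = TP / (TP + FP + FN).
    Assumption: two empty masks count as perfect agreement (1.0)."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    union = tp + fp + fn
    if union == 0:
        return 1.0, 1.0
    return 2 * tp / (2 * tp + fp + fn), tp / union


predicted = [
    [1, 1, 0],
    [0, 1, 0],
]
reference = [
    [1, 0, 0],
    [0, 1, 1],
]
dsc, iou = overlap_metrics(predicted, reference)
print(round(dsc, 4), iou)  # 0.6667 0.5
```

For binary masks the DSC coincides with the pixel-wise F1-score, and the two overlap metrics are related by DSC = 2·IoU / (1 + IoU), so rankings based on either one agree.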

The second largest group of publications (12) concerns the use of artificial intelligence systems for the classification of medical data. The most commonly used method was logistic regression, but other models were also used, e.g., random forest, and the stochastic t-SNE method was used once. A summary of topic papers focused on pathology detection and classification is shown in Table 3.

Table 3. Summary of topic papers focused on pathology detection and classification (AUC: area under the curve).

The third group of publications comprises analyses of the use of existing software based on artificial intelligence (e.g., the Quantitative ECG (QCG™) system, PixelShine, or A.I. DeNoise™). The topic also contains three review papers.

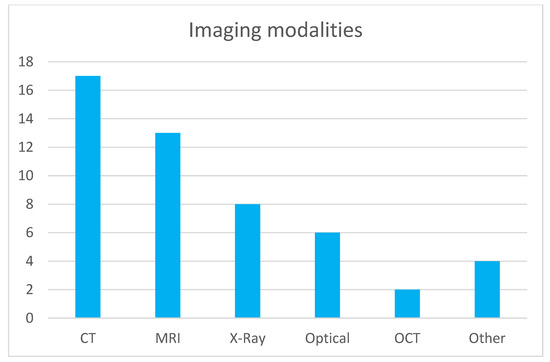

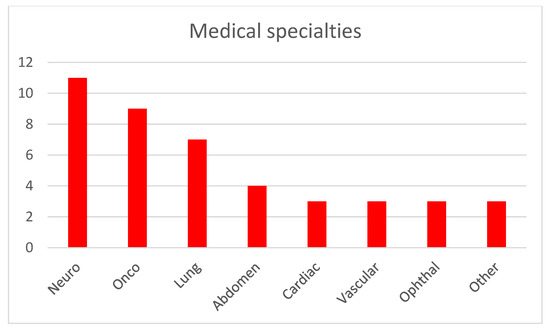

Figure 1 illustrates the distribution of imaging modalities used in publications within this topic. CT and MRI are predominant, which is expected given their widespread use across medical specialties. This correlates with the number of commercially available AI solutions for CT and MRI [1], representing the modalities with the most developed image-based diagnostic algorithms. Radiography is the next most common modality, and similarly, numerous FDA-approved (Food and Drug Administration) machine learning models exist for the analysis of X-ray images. Optical imaging, primarily microscopy of histological specimens, follows in prevalence. Other modalities such as OCT, ultrasound (US), and thermography are utilized to a much lesser extent. Regarding medical specialties (shown in Figure 2), publications on image analysis methods for neurology, pulmonology, and oncology (excluding brain and lung cancers) are the most prevalent. This is due to the advanced development of these medical fields and the very large number of conditions where the use of diagnostic imaging is essential. The number of described methods for these specialties also correlates with the number of commercially available AI algorithms, which are the most abundant for neurology and pulmonology applications [1]. The number of AI algorithms presented for other specialties is relatively similar and small.

Figure 1. Modality distribution in topic publications.

Figure 2. Medical specialties addressed by the described AI methods.

In image analysis tasks, where the primary research problem involves detecting and classifying pathologies or predicting their occurrence, algorithms based on deep learning dominate. It is noteworthy that deep learning methods increasingly incorporate attention mechanisms, which often lead to improved performance. The topic also includes studies employing radiomics, particularly texture analysis [59]. These methods, combined with classical machine learning algorithms or statistical analysis, remain effective tools for biomedical image analysis. However, their popularity is declining in favor of deep learning-based approaches.

For the segmentation tasks described in the topic papers, dedicated deep learning architectures dominate. In particular, the U-Net architecture and its variants are prevalent. Alternative approaches, such as oscillator neural networks, which have proven effective in biomedical image segmentation [60], are virtually absent. Transformer-based models, previously employed in large language models such as ChatGPT, are emerging. However, due to the limited number of topic publications utilizing these methods, comparing their performance with classical convolutional neural networks is challenging. The growing popularity of transformer-based algorithms in biomedical image analysis is noteworthy, due to their often-superior performance compared to conventional convolutional networks [24].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Obuchowicz, R.; Lasek, J.; Wodziński, M.; Piórkowski, A.; Strzelecki, M.; Nurzynska, K. Artificial Intelligence-Empowered Radiology—Current Status and Critical Review. Diagnostics 2025, 15, 282.

- Lin, Y.-T.; Wang, B.-C.; Chung, J.-Y. Identifying Acute Aortic Syndrome and Thoracic Aortic Aneurysm from Chest Radiography in the Emergency Department Using Convolutional Neural Network Models. Diagnostics 2024, 14, 1646.

- Wen, X.; Li, J.; Yang, L. Breast Cancer Diagnosis Method Based on Cross-Mammogram Four-View Interactive Learning. Tomography 2024, 10, 848–868.

- Kolhar, M.; Al Rajeh, A.M.; Kazi, R.N.A. Augmenting Radiological Diagnostics with AI for Tuberculosis and COVID-19 Disease Detection: Deep Learning Detection of Chest Radiographs. Diagnostics 2024, 14, 1334.

- Aamir, M.; Namoun, A.; Munir, S.; Aljohani, N.; Alanazi, M.H.; Alsahafi, Y.; Alotibi, F. Brain Tumor Detection and Classification Using an Optimized Convolutional Neural Network. Diagnostics 2024, 14, 1714.

- Kiyuna, T.; Cosatto, E.; Hatanaka, K.C.; Yokose, T.; Tsuta, K.; Motoi, N.; Makita, K.; Shimizu, A.; Shinohara, T.; Suzuki, A.; et al. Evaluating Cellularity Estimation Methods: Comparing AI Counting with Pathologists’ Visual Estimates. Diagnostics 2024, 14, 1115.

- Kufel, J.; Paszkiewicz, I.; Kocot, S.; Lis, A.; Dudek, P.; Czogalik, Ł.; Janik, M.; Bargieł-Łączek, K.; Bartnikowska, W.; Koźlik, M.; et al. Deep Learning in Cardiothoracic Ratio Calculation and Cardiomegaly Detection. J. Clin. Med. 2024, 13, 4180.

- Baştuğ, B.T.; Güneri, G.; Yıldırım, M.S.; Çorbacı, K.; Dandıl, E. Fully Automated Detection of the Appendix Using U-Net Deep Learning Architecture in CT Scans. J. Clin. Med. 2024, 13, 5893.

- Lasek, J.; Nurzynska, K.; Piórkowski, A.; Strzelecki, M.; Obuchowicz, R. Deep Learning for Ultrasonographic Assessment of Temporomandibular Joint Morphology. Tomography 2025, 11, 27.

- Fufin, M.; Makarov, V.; Alfimov, V.I.; Ananev, V.V.; Ananeva, A. Pulmonary Fissure Segmentation in CT Images Using Image Filtering and Machine Learning. Tomography 2024, 10, 1645–1664.

- Rashid, P.Q.; Türker, I. Lung Disease Detection Using U-Net Feature Extractor Cascaded by Graph Convolutional Network. Diagnostics 2024, 14, 1313.

- Sørensen, P.J.; Ladefoged, C.N.; Larsen, V.A.; Andersen, F.L.; Nielsen, M.B.; Poulsen, H.S.; Carlsen, J.F.; Hansen, A.E. Repurposing the Public BraTS Dataset for Postoperative Brain Tumour Treatment Response Monitoring. Tomography 2024, 10, 1397–1410.

- Haylaz, E.; Gumussoy, I.; Duman, S.B.; Kalabalik, F.; Eren, M.C.; Demirsoy, M.S.; Celik, O.; Bayrakdar, I.S. Automatic Segmentation of the Nasolacrimal Canal: Application of the nnU-Net v2 Model in CBCT Imaging. J. Clin. Med. 2025, 14, 778.

- Zhang, L.; Zhang, R.; Zhu, Z.; Li, P.; Bai, Y.; Wang, M. Lightweight MRI Brain Tumor Segmentation Enhanced by Hierarchical Feature Fusion. Tomography 2024, 10, 1577–1590.

- Hasei, J.; Nakahara, R.; Otsuka, Y.; Nakamura, Y.; Ikuta, K.; Osaki, S.; Hironari, T.; Miwa, S.; Ohshika, S.; Nishimura, S.; et al. The Three-Class Annotation Method Improves the AI Detection of Early-Stage Osteosarcoma on Plain Radiographs: A Novel Approach for Rare Cancer Diagnosis. Cancers 2025, 17, 29.

- Dandıl, E.; Baştuğ, B.T.; Yıldırım, M.S.; Çorbacı, K.; Güneri, G. MaskAppendix: Backbone-Enriched Mask R-CNN Based on Grad-CAM for Automatic Appendix Segmentation. Diagnostics 2024, 14, 2346.

- Lee, K.; Lee, S.; Kwak, J.S.; Park, H.; Oh, H.; Koh, J.C. Development and Validation of an Artificial Intelligence Model for Detecting Rib Fractures on Chest Radiographs. J. Clin. Med. 2024, 13, 3850.

- Tiosano, L.; Abutbul, R.; Lender, R.; Shwartz, Y.; Chowers, I.; Hoshen, Y.; Levy, J. Anomaly Detection and Biomarkers Localization in Retinal Images. J. Clin. Med. 2024, 13, 3093.

- Gohmann, R.F.; Schug, A.; Krieghoff, C.; Seitz, P.; Majunke, N.; Buske, M.; Kaiser, F.; Schaudt, S.; Renatus, K.; Desch, S.; et al. Interrater Variability of ML-Based CT-FFR in Patients without Obstructive CAD before TAVR: Influence of Image Quality, Coronary Artery Calcifications, and Location of Measurement. J. Clin. Med. 2024, 13, 5247.

- Drews, M.A.; Demircioğlu, A.; Neuhoff, J.; Haubold, J.; Zensen, S.; Opitz, M.K.; Forsting, M.; Nassenstein, K.; Bos, D. Impact of AI-Based Post-Processing on Image Quality of Non-Contrast Computed Tomography of the Chest and Abdomen. Diagnostics 2024, 14, 612.

- Santos, L.; Hsu, H.-Y.; Nelson, R.R.; Sullivan, B.; Shin, J.; Fung, M.; Lebel, M.R.; Jambawalikar, S.; Jaramillo, D. Impact of Deep Learning Denoising Algorithm on Diffusion Tensor Imaging of the Growth Plate on Different Spatial Resolutions. Tomography 2024, 10, 504–519. [Google Scholar] [CrossRef] [PubMed]

- Aromiwura, A.A.; Kalra, D.K. Artificial Intelligence in Coronary Artery Calcium Scoring. J. Clin. Med. 2024, 13, 3453. [Google Scholar] [CrossRef]

- da Rocha, J.L.D.; Lai, J.; Pandey, P.; Myat, P.S.M.; Loschinskey, Z.; Bag, A.K.; Sitaram, R. Artificial Intelligence for Neuroimaging in Pediatric Cancer. Cancers 2025, 17, 622. [Google Scholar] [CrossRef]

- Li, X.; Hong, Y.; Xu, Y.; Hu, M. VerFormer: Vertebrae-Aware Transformer for Automatic Spine Segmentation from CT Images. Diagnostics 2024, 14, 1859. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Chen, W.; Joshi, T.; Zhang, X.; Loh, P.-L.; Jog, V.; Bruce, R.J.; Garrett, J.W.; McMillan, A.B. BAE-ViT: An Efficient Multimodal Vision Transformer for Bone Age Estimation. Tomography 2024, 10, 2058–2072. [Google Scholar] [CrossRef] [PubMed]

- Nurzyńska, K.; Piórkowski, A.; Strzelecki, M.; Kociołek, M.; Banyś, R.P.; Obuchowicz, R. Differentiating age and sex in vertebral body CT scans—Texture analysis versus deep learning approach. Biocybern. Biomed. Eng. 2024, 44, 20–30. [Google Scholar] [CrossRef]

- Song, J.H.; Kim, E.R.; Hong, Y.; Sohn, I.; Ahn, S.; Kim, S.-H.; Jang, K.-T. Prediction of Lymph Node Metastasis in T1 Colorectal Cancer Using Artificial Intelligence with Hematoxylin and Eosin-Stained Whole-Slide-Images of Endoscopic and Surgical Resection Specimens. Cancers 2024, 16, 1900. [Google Scholar] [CrossRef]

- Aldakhil, L.A.; Alhasson, H.F.; Alharbi, S.S. Attention-Based Deep Learning Approach for Breast Cancer Histopathological Image Multi-Classification. Diagnostics 2024, 14, 1402. [Google Scholar] [CrossRef]

- Yalcinbas, M.F.; Ozturk, C.; Ozyurt, O.; Emir, U.E.; Bagci, U. Rosette Trajectory MRI Reconstruction with Vision Transformers. Tomography 2025, 11, 41. [Google Scholar] [CrossRef]

- Park, M.J.; Choi, Y.J.; Shim, M.; Cho, Y.; Park, J.; Choi, J.; Kim, J.; Lee, E.; Kim, S.-Y. Performance of ECG-Derived Digital Biomarker for Screening Coronary Occlusion in Resuscitated Out-of-Hospital Cardiac Arrest Patients: A Comparative Study between Artificial Intelligence and a Group of Experts. J. Clin. Med. 2024, 13, 1354. [Google Scholar] [CrossRef]

- Boldt, J.; Schuster, M.; Krastl, G.; Schmitter, M.; Pfundt, J.; Stellzig-Eisenhauer, A.; Kunz, F. Developing the Benchmark: Establishing a Gold Standard for the Evaluation of AI Caries Diagnostics. J. Clin. Med. 2024, 13, 3846. [Google Scholar] [CrossRef] [PubMed]

- Herpe, G.; Nelken, H.; Vendeuvre, T.; Guenezan, J.; Giraud, C.; Mimoz, O.; Feydy, A.; Tasu, J.-P.; Guillevin, R. Effectiveness of an Artificial Intelligence Software for Limb Radiographic Fracture Recognition in an Emergency Department. J. Clin. Med. 2024, 13, 5575. [Google Scholar] [CrossRef] [PubMed]

- Welsner, M.; Navel, H.; Hosch, R.; Rathsmann, P.; Stehling, F.; Mathew, A.; Sutharsan, S.; Strassburg, S.; Westhölter, D.; Taube, C.; et al. Opportunistic Screening for Low Bone Mineral Density in Adults with Cystic Fibrosis Using Low-Dose Computed Tomography of the Chest with Artificial Intelligence. J. Clin. Med. 2024, 13, 5961. [Google Scholar] [CrossRef]

- Barca, I.C.; Potop, V.; Arama, S.S. Monitoring Progression in Hypertensive Patients with Dyslipidemia Using Optical Coherence Tomography Angiography: Can A.I. Be Improved? J. Clin. Med. 2024, 13, 7584. [Google Scholar] [CrossRef] [PubMed]

- Eom, Y.; Park, Y.-J.; Lee, S.; Lee, S.-J.; An, Y.-S.; Park, B.-N.; Yoon, J.-K. Automated Measurement of Effective Radiation Dose by 18F-Fluorodeoxyglucose Positron Emission Tomography/Computed Tomography. Tomography 2024, 10, 2144–2157. [Google Scholar] [CrossRef]

- Craus-Miguel, A.; Munar, M.; Moyà-Alcover, G.; Contreras-Nogales, A.M.; González-Hidalgo, M.; Segura-Sampedro, J.J. Enhancing Surgical Wound Monitoring: A Paired Cohort Study Evaluating a New AI-Based Application for Automatic Detection of Potential Infections. J. Clin. Med. 2024, 13, 7863. [Google Scholar] [CrossRef]

- Sritharan, N.; Gutierrez, C.; Perez-Raya, I.; Gonzalez-Hernandez, J.-L.; Owens, A.; Dabydeen, D.; Medeiros, L.; Kandlikar, S.; Phatak, P. Breast Cancer Screening Using Inverse Modeling of Surface Temperatures and Steady-State Thermal Imaging. Cancers 2024, 16, 2264. [Google Scholar] [CrossRef]

- Nielsen, M.Ø.; Ljoki, A.; Zerahn, B.; Jensen, L.T.; Kristensen, B. Reproducibility and Repeatability in Focus: Evaluating LVEF Measurements with 3D Echocardiography by Medical Technologists. Diagnostics 2024, 14, 1729. [Google Scholar] [CrossRef]

- Watzenboeck, M.L.; Beer, L.; Kifjak, D.; Röhrich, S.; Heidinger, B.H.; Prayer, F.; Milos, R.-I.; Apfaltrer, P.; Langs, G.; Baltzer, P.A.T.; et al. Contrast Agent Dynamics Determine Radiomics Profiles in Oncologic Imaging. Cancers 2024, 16, 1519. [Google Scholar] [CrossRef]

- Gibała, S.; Obuchowicz, R.; Lasek, J.; Piórkowski, A.; Nurzynska, K. Textural Analysis Supports Prostate MR Diagnosis in PIRADS Protocol. Appl. Sci. 2023, 13, 9871. [Google Scholar] [CrossRef]

- Liu, J.-C.; Ruan, X.-H.; Chun, T.-T.; Yao, C.; Huang, D.; Wong, H.-L.; Lai, C.-T.; Tsang, C.-F.; Ho, S.-H.; Ng, T.-L.; et al. MRI T2w Radiomics-Based Machine Learning Models in Imaging Simulated Biopsy Add Diagnostic Value to PI-RADS in Predicting Prostate Cancer: A Retrospective Diagnostic Study. Cancers 2024, 16, 2944. [Google Scholar] [CrossRef] [PubMed]

- de Oliveira, L.A.P.; Lopes, D.L.G.; Gomes, J.P.P.; da Silveira, R.V.; Nozaki, D.V.A.; Santos, L.F.; Castellano, G.; Lopes, S.L.P.d.C.; Costa, A.L.F. Enhanced Diagnostic Precision: Assessing Tumor Differentiation in Head and Neck Squamous Cell Carcinoma Using Multi-Slice Spiral CT Texture Analysis. J. Clin. Med. 2024, 13, 4038. [Google Scholar] [CrossRef] [PubMed]

- Rippa, M.; Schulze, R.; Kenyon, G.; Himstedt, M.; Kwiatkowski, M.; Grobholz, R.; Wyler, S.; Cornelius, A.; Schindera, S.; Burn, F. Evaluation of Machine Learning Classification Models for False-Positive Reduction in Prostate Cancer Detection Using MRI Data. Diagnostics 2024, 14, 1677.

- Mase, Y.; Matsui, Y.; Imai, K.; Imamura, K.; Irie-Ota, A.; Chujo, S.; Matsubara, H.; Kawanaka, H.; Kondo, M. Preoperative OCT Characteristics Contributing to Prediction of Postoperative Visual Acuity in Eyes with Macular Hole. J. Clin. Med. 2024, 13, 4826.

- Solana-Lavalle, G.; Cusimano, M.D.; Steeves, T.; Rosas-Romero, R.; Tyrrell, P.N. Causal Forest Machine Learning Analysis of Parkinson’s Disease in Resting-State Functional Magnetic Resonance Imaging. Tomography 2024, 10, 894–911.

- Yurtseven, A.; Janjic, A.; Cayoren, M.; Bugdayci, O.; Aribal, M.E.; Akduman, I. XGBoost Enhances the Performance of SAFE: A Novel Microwave Imaging System for Early Detection of Malignant Breast Cancer. Cancers 2025, 17, 214.

- Ferrante, M.; De Marco, P.; Rampado, O.; Gianusso, L.; Origgi, D. Effective Dose Estimation in Computed Tomography by Machine Learning. Tomography 2025, 11, 2.

- Assadi, H.; Sawh, N.; Bailey, C.; Matthews, G.; Li, R.; Grafton-Clarke, C.; Mehmood, Z.; Kasmai, B.; Swoboda, P.P.; Swift, A.J.; et al. Validation of Left Atrial Volume Correction for Single Plane Method on Four-Chamber Cine Cardiac MRI. Tomography 2024, 10, 459–470.

- Taskent, I.; Ece, B.; Narsat, M.A. Are Preoperative CT Findings Useful in Predicting the Duration of Laparoscopic Appendectomy in Pediatric Patients? A Single Center Study. J. Clin. Med. 2024, 13, 5504.

- Taçyıldız, A.E.; İnceoğlu, F. Classification of Osteophytes Occurring in the Lumbar Intervertebral Foramen. Tomography 2024, 10, 618–631.

- Nollmann, C.; Moskorz, W.; Wimmenauer, C.; Jäger, P.S.; Cadeddu, R.P.; Timm, J.; Heinzel, T.; Haas, R. Characterization of CD34+ Cells from Patients with Acute Myeloid Leukemia (AML) and Myelodysplastic Syndromes (MDS) Using a t-Distributed Stochastic Neighbor Embedding (t-SNE) Protocol. Cancers 2024, 16, 1320.

- Dev, H.; Hu, Z.; Blumenfeld, J.D.; Sharbatdaran, A.; Kim, Y.; Zhu, C.; Shimonov, D.; Chevalier, J.M.; Donahue, S.; Wu, A.; et al. The Role of Baseline Total Kidney Volume Growth Rate in Predicting Tolvaptan Efficacy for ADPKD Patients: A Feasibility Study. J. Clin. Med. 2025, 14, 1449.

- Uwaeze, J.; Narayana, P.A.; Kamali, A.; Braverman, V.; Jacobs, M.A.; Akhbardeh, A. Automatic Active Lesion Tracking in Multiple Sclerosis Using Unsupervised Machine Learning. Diagnostics 2024, 14, 632.

- Zamojski, D.; Gogler, A.; Scieglinska, D.; Marczyk, M. EpidermaQuant: Unsupervised Detection and Quantification of Epidermal Differentiation Markers on H-DAB-Stained Images of Reconstructed Human Epidermis. Diagnostics 2024, 14, 1904.

- Jelassi, M.; Jemai, O.; Demongeot, J. Revolutionizing Radiological Analysis: The Future of French Language Automatic Speech Recognition in Healthcare. Diagnostics 2024, 14, 895.

- Wada, A.; Akashi, T.; Shih, G.; Hagiwara, A.; Nishizawa, M.; Hayakawa, Y.; Kikuta, J.; Shimoji, K.; Sano, K.; Kamagata, K.; et al. Optimizing GPT-4 Turbo Diagnostic Accuracy in Neuroradiology through Prompt Engineering and Confidence Thresholds. Diagnostics 2024, 14, 1541.

- Koyun, M.; Cevval, Z.K.; Reis, B.; Ece, B. Detection of Intracranial Hemorrhage from Computed Tomography Images: Diagnostic Role and Efficacy of ChatGPT-4o. Diagnostics 2025, 15, 143.

- Koyun, M.; Taskent, I. Evaluation of Advanced Artificial Intelligence Algorithms’ Diagnostic Efficacy in Acute Ischemic Stroke: A Comparative Analysis of ChatGPT-4o and Claude 3.5 Sonnet Models. J. Clin. Med. 2025, 14, 571.

- Szczypiński, P.M.; Strzelecki, M.; Materka, A.; Klepaczko, A. Mazda—The Software Package for Textural Analysis of Biomedical Images. In Computers in Medical Activity; Kącki, E., Rudnicki, M., Stempczyńska, J., Eds.; Advances in Soft Computing; Springer: Berlin/Heidelberg, Germany, 2009; Volume 65, pp. 73–84.

- Strzelecki, M. Texture boundary detection using network of synchronised oscillators. Electron. Lett. 2004, 40, 466–467.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).