The Potential of Automated Assessment of Cognitive Function Using Non-Neuroimaging Data: A Systematic Review

Abstract

1. Introduction

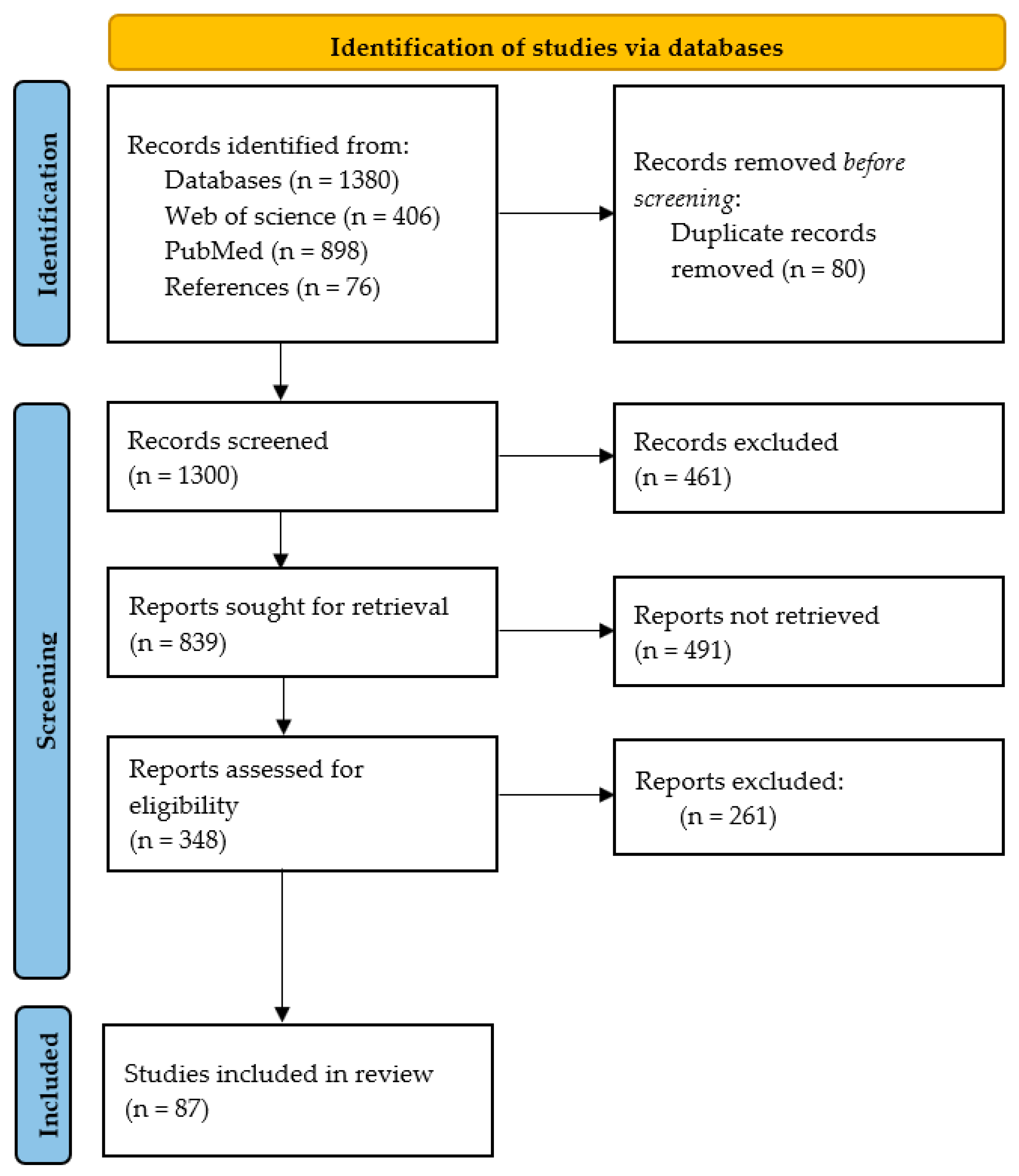

2. Methods

2.1. Search Strategy

2.2. Exclusion and Inclusion Criteria

Exclusion criteria:

- Studies published in a language other than English.

- Studies that have focused on automated cognitive assessment using medical imaging data, such as magnetic resonance imaging (MRI), positron emission tomography (PET), and computed tomography (CT) scans.

- Studies assessing cognitive impairment associated with conditions such as HIV, cancer, and stroke, or with peri- and post-operative procedures.

- Studies of cognitive assessment in children, adolescents, or nonhuman participants (for example, monkeys and chimpanzees).

- Articles whose full text was not freely available online.

- Studies that discuss detection or diagnosis within the scope of conversion from MCI to AD.

- Studies that provide a limited description of data modalities, subjects, AI techniques, devices, or performance metrics.

Inclusion criteria:

- Studies assessing the diagnosis of cognitive impairment or cognitive function associated with neurodegenerative diseases.

- Studies distinguishing between control and cognitively impaired participants.

- Studies predicting cognitive scores with artificial intelligence algorithms or statistical analysis using non-neuroimaging data.

- Studies comparing the conventional approach of assessment with automated assessment.

- Studies that discuss digital, computerized, or automated assessment of cognitive decline.

2.3. Definitions of What Is Known

2.3.1. Background and Concepts

2.3.2. Conventional Assessment Tools

| Tool | Purpose | Domains | Maximum Score | Administration Time |
|---|---|---|---|---|
| LABIS (Graf, 2008) [43] | IADL screening (functional evaluation) | Eight domains: ability to use the telephone, shopping, food preparation, housekeeping, laundry, transportation, responsibility for own medications, and ability to handle finances | 8 points | 10 to 15 min |
| Katz ADL Index (Katz et al., 1970) [44] | ADL screening (functional evaluation) | Six domains: bathing, dressing, toileting, transferring, continence, and feeding | 6 points | Less than 5 min |
| MMSE (Folstein et al., 1975) [39] | Cognitive screening (cognitive evaluation) | Five domains: orientation (to time and place), registration (immediate recall), attention and calculation, delayed recall of three words, and language (including visual construction) | 30 points | 5 to 10 min |
| Mini-Cog (Borson et al., 2000) [40] | Dementia screening (cognitive evaluation) | Two components: a three-item recall task and a clock drawing test | 5 points | Less than 3 min |
| MoCA (Nasreddine et al., 2005) [38] | MCI and dementia screening (cognitive evaluation) | Eight domains: visuospatial/executive, naming, memory, attention, language, abstraction, delayed recall, and orientation (to time and place) | 30 points | Approximately 10 min |
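The maximum scores in the table follow from additive item scoring. The Python sketch below illustrates this under stated assumptions. Katz and Lawton items are treated as 1 point when the person is independent and 0 otherwise. Mini-Cog is scored as 1 point per recalled word plus 0 or 2 points for the clock. The MMSE is split into the commonly used five-domain maxima given in the code comment. All function names are hypothetical, and the sketch is not a validated scoring tool.

```python
from typing import Mapping, Sequence

# Assumed MMSE domain maxima (common five-domain grouping):
# orientation 10, registration 3, attention/calculation 5, recall 3,
# language including the copying (visual construction) item 9.
MMSE_DOMAIN_MAX = {
    "orientation": 10,
    "registration": 3,
    "attention_calculation": 5,
    "recall": 3,
    "language_visual_construction": 9,
}


def score_binary_items(items: Sequence[bool], n_expected: int) -> int:
    """Sum 0/1 items, e.g. Katz ADL (6 items) or Lawton IADL (8 items)."""
    if len(items) != n_expected:
        raise ValueError(f"expected {n_expected} items, got {len(items)}")
    return sum(1 for independent in items if independent)


def score_mini_cog(words_recalled: int, clock_normal: bool) -> int:
    """Mini-Cog total: 0-3 recall points plus 0 or 2 clock points (max 5)."""
    if not 0 <= words_recalled <= 3:
        raise ValueError("words_recalled must be between 0 and 3")
    return words_recalled + (2 if clock_normal else 0)


def score_mmse(domain_scores: Mapping[str, int]) -> int:
    """Sum MMSE domain scores after checking each against its maximum."""
    total = 0
    for domain, maximum in MMSE_DOMAIN_MAX.items():
        value = domain_scores.get(domain, 0)
        if not 0 <= value <= maximum:
            raise ValueError(f"{domain} score {value} outside 0..{maximum}")
        total += value
    return total


# Usage with made-up responses (not patient data).
katz = score_binary_items([True, True, False, True, True, True], n_expected=6)
lawton = score_binary_items([True] * 8, n_expected=8)
mini_cog = score_mini_cog(words_recalled=2, clock_normal=True)
print(katz, lawton, mini_cog, sum(MMSE_DOMAIN_MAX.values()))  # 5 8 4 30
```

Raising an error on out-of-range input, rather than silently clipping it, keeps data-entry mistakes from producing plausible-looking totals.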

3. Results

3.1. Automated Assessment Tools

3.1.1. Game-Based

3.1.2. Digital Versions of Conventional Tools

3.1.3. Original Computerized Tests and Batteries

3.1.4. Virtual Reality/Wearable Sensors/Smart Home Technologies

3.1.5. Artificial Intelligence-Based (AI-Based) Tools

3.2. Comparative Analysis of Automated and Conventional Cognitive Assessment Tools

Automated tools compared with conventional tools such as the MoCA. Across the three examples below, the AA and CA appear to produce close, competitive outcomes.

| Tools Compared | Participants | Domains Assessed by the AA | Metrics Reported for the CA | Metrics Reported for the AA | Time Taken to Administer | Reference |
|---|---|---|---|---|---|---|
| MoCA (CA), ACE-R (CA), CANS-MCI (AA) | 35 (20 CN and 15 MCI) | Memory, executive function, and language/spatial fluency | AUC (MoCA) = 0.890; AUC (ACE-R) = 0.822; Sens = 0.90; Spec = 0.67 (Sens and Spec apply to both MoCA and ACE-R) | AUC = 0.867; Sens = 0.89; Spec = 0.73 | MoCA ~10 min; ACE-R ~15 min; CANS-MCI ~30 min | [91] |
| CDT (CA), CDT (AA) | 70 (20 AD, 30 MCI, and 20 CN) | Executive and visuospatial function | Sens = 0.63; Spec = 0.83 | Sens = 0.81; Spec = 0.72 | NA | [63] |
| MoCA-K (CA), mSTS-MCI (AA) | 177 (103 CN and 74 MCI) | Memory, attention, and executive function | AUC = 0.819; Sens = 0.94; Spec = 0.60 | AUC = 0.985; Sens = 0.99; Spec = 0.93 | mSTS-MCI ~10–15 min | [68] |

Automated tools showing a high correlation with the conventional approach. The correlations below indicate a strong positive association between the automated and conventional approaches.

| Tool | Participants | Domains Assessed by the AA | Correlation and Diagnostic Metrics | Time Taken to Administer | Reference |
|---|---|---|---|---|---|
| mSTS-MCI | 177 (103 CN and 74 MCI) | Memory, attention, and executive function; attention is assessed by reaction time, the other two domains by task performance | r = 0.773 with MoCA-K (Korean version of the MoCA); Sens = 0.99; Spec = 0.93 (at the optimal cutoff) | 10–15 min | [68] |
| CoCoSc | 160 (59 CI and 101 CN) | Six subtests covering five domains (learning and memory; executive functions; orientation; attention and working memory; time- and event-based prospective memory), each scored on task completion | r = 0.71 with MoCA; AUC = 0.78; Sens = 0.78; Spec = 0.69 | 15 min | [92] |
| CCS | 60 (20 CN and 40 with mild-to-moderate dementia, of whom only 34 completed the CCS task) | Three domains (concentration, memory, and visuospatial function), each assessed with a related task and scored by the number of correct responses given in 1 min | r = 0.78 with MoCA; Sens = 0.94; Spec = 0.60; AUC = 0.94 | 1 min per task | [67] |
| C-ABC (Computerized Assessment Battery for Cognition) | 701 (422 dementia, 145 MCI, and 574 CN) | Sensorimotor skills, attention, orientation, and immediate memory, among others | r = 0.753 with MMSE score; Sens = 0.77; Spec = 0.71 (average values for distinguishing MCI from CN) | ~5 min | [33] |
| MoCA-CC | 176 (83 CN and 93 MCI) | Eight cognitive domains, including executive function, memory, language, and visuoconstructional skills | r = 0.93 with MoCA-BJ; AUC = 0.97; Sens = 0.958; Spec = 0.871 | ~10 min | [64] |
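The comparisons above rely on sensitivity, specificity, AUC, and Pearson's r. The Python sketch below shows how each can be computed from per-participant data. Several assumptions are made for illustration. The scores and labels are invented. A higher automated score is taken to indicate impairment, so MoCA-style scores, where lower means worse, would need to be negated first. AUC uses the Mann–Whitney pairwise formulation. The "optimal cutoff" is taken to be the one that maximizes Youden's J (sensitivity + specificity − 1), which may differ from the criterion used in the cited studies. All function names are hypothetical.

```python
from itertools import product
from math import sqrt
from typing import Optional, Sequence, Tuple


def sens_spec(scores: Sequence[float], impaired: Sequence[bool],
              cutoff: float) -> Tuple[float, float]:
    """Treat score >= cutoff as 'impaired'; return (sensitivity, specificity)."""
    pairs = list(zip(scores, impaired))
    tp = sum(1 for s, y in pairs if y and s >= cutoff)
    fn = sum(1 for s, y in pairs if y and s < cutoff)
    tn = sum(1 for s, y in pairs if not y and s < cutoff)
    fp = sum(1 for s, y in pairs if not y and s >= cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def auc(scores: Sequence[float], impaired: Sequence[bool]) -> float:
    """Probability that a random impaired case outscores a random normal case
    (ties count 0.5): the Mann-Whitney estimate of the ROC AUC."""
    pos = [s for s, y in zip(scores, impaired) if y]
    neg = [s for s, y in zip(scores, impaired) if not y]
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two paired score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def youden_cutoff(scores: Sequence[float],
                  impaired: Sequence[bool]) -> Tuple[float, float]:
    """Return (cutoff, J) maximizing J = sens + spec - 1; lowest cutoff wins ties."""
    best: Optional[Tuple[float, float]] = None
    for c in sorted(set(scores)):
        se, sp = sens_spec(scores, impaired, c)
        j = se + sp - 1
        if best is None or j > best[1]:
            best = (c, j)
    if best is None:
        raise ValueError("scores must be non-empty")
    return best


# Invented data: 4 impaired and 4 cognitively normal participants.
impaired = [True, True, True, True, False, False, False, False]
aa_scores = [9, 8, 7, 4, 5, 3, 2, 1]

print(sens_spec(aa_scores, impaired, cutoff=4))  # (1.0, 0.75)
print(auc(aa_scores, impaired))                  # 0.9375
print(youden_cutoff(aa_scores, impaired))        # (4, 0.75)

# Invented paired totals from an automated and a conventional test.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
print(round(pearson_r(x, y), 3))                 # 0.775
```

In this toy data, cutoffs 4 and 7 tie on Youden's J (0.75). The strict comparison keeps the lower cutoff, which favors sensitivity over specificity.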

3.3. Advantages of Automated Assessment

4. Discussion

4.1. Limitations

4.2. Authors’ Opinion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AA | Automated Assessment |
| Accu | Accuracy |
| ACE-R | Addenbrooke’s Cognitive Examination-Revised |
| AD | Alzheimer’s Disease |
| ADL | Activities of Daily Living |
| AI | Artificial Intelligence |
| ANAM | Automated Neuropsychological Assessment Metrics |
| AUC | Area Under the ROC (Receiver Operating Characteristic) Curve |
| BHA | Brain Health Assessment |
| CA | Conventional Assessment |
| CAAB | Clinical Assessment using Activity Behavior |
| C-ABC | Computerized Assessment Battery for Cognition |
| CAMCI | Computer Assessment of Mild Cognitive Impairment |
| CANS-MCI | Computer-Administered Neuropsychological Screen for Mild Cognitive Impairment |
| CANTAB | Cambridge Neuropsychological Test Automated Battery |
| CAVIRE | Cognitive Assessment by Virtual Reality |
| CCC | Concordance Correlation Coefficient |
| CCS | Computerized Cognitive Screening |
| CDT | Clock Drawing Test |
| CI | Cognitively Impaired |
| CoCoSc | Computerized Cognitive Screen |
| CN | Cognitively Normal/Healthy Adult |
| CST | Computerized Self Test |
| CT scan | Computed Tomography scan |
| dTMT | Digital Trail Making Test |
| eCDT | Electronic Clock Drawing Test |
| eMoCA | Electronic Montreal Cognitive Assessment |
| ePDT | Electronic Pentagon Drawing Test |
| eTMT | Electronic Trail Making Test |
| FCD | Functional Cognitive Disorder |
| GPCOG | General Practitioner Assessment of Cognition |
| HIV | Human Immunodeficiency Virus |
| HK-VMT | Hong Kong–Vigilance and Memory Test |
| IADL | Instrumental Activities of Daily Living |
| LABIS | Lawton and Brody IADL Scale |
| MCI | Mild Cognitive Impairment |
| MRI | Magnetic Resonance Imaging |
| MIS | Memory Impairment Screen |
| MMSE | Mini-Mental State Examination |
| Mini-Cog | Mini-Cognitive |
| MoCA | Montreal Cognitive Assessment |
| MoCA-K | Korean version of the Montreal Cognitive Assessment |
| MoCA-BJ | Beijing version of the Montreal Cognitive Assessment |
| mSTS-MCI | Mobile Screening Test System for Screening Mild Cognitive Impairment |
| NNCT | NAIHA Neuro Cognitive Test |
| NHATS | National Health and Aging Trends Study |
| PC-based | Personal Computer-based |
| PD | Parkinson Disease |
| PDT | Pentagon Drawing Test |
| PET scan | Positron Emission Tomography scan |
| r | Pearson Correlation Coefficient |
| r1 | Bivariate Correlation Coefficient |
| Saturn | Self-Administered Tasks Uncovering Risk of Neurodegeneration |
| Sens | Sensitivity |
| SLE | Systemic Lupus Erythematosus |
| Spec | Specificity |
| TIA | Transient Ischemic Attack |
| TMT | Trail Making Test |
| UPSA-B | University of California, San Diego Performance-Based Skills Assessment–Brief |
| WAIS-IV | Wechsler Adult Intelligence Scale, Fourth Edition |
| WTMT | Walking Trail Making Test |
| VPC | Web-based Visual-Paired Comparison |
| VRFCAT | Virtual Reality Functional Capacity Assessment Tool |

References

- Mohamed, A.A.; Marques, O. Diagnostic Efficacy and Clinical Relevance of Artificial Intelligence in Detecting Cognitive Decline. Cureus 2023, 15, e47004. [Google Scholar] [CrossRef] [PubMed]

- Roebuck-Spencer, T.M.; Glen, T.; Puente, A.E.; Denney, R.L.; Ruff, R.M.; Hostetter, G.; Bianchini, K.J. Cognitive Screening Tests Versus Comprehensive Neuropsychological Test Batteries: A National Academy of Neuropsychology Education Paper. Arch. Clin. Neuropsychol. 2017, 32, 491–498. [Google Scholar] [CrossRef] [PubMed]

- Cullen, B.; O’Neill, B.; Evans, J.J.; Coen, R.F.; Lawlor, B.A. A Review of Screening Tests for Cognitive Impairment. J. Neurol. Neurosurg. Psychiatry 2007, 78, 790–799. [Google Scholar] [CrossRef] [PubMed]

- Ismail, Z.; Rajji, T.K.; Shulman, K.I. Brief Cognitive Screening Instruments: An Update. Int. J. Geriatr. Psychiatry 2010, 25, 111–120. [Google Scholar] [CrossRef]

- Dhakal, A.; Bobrin, B.D. Cognitive Deficits. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2023. [Google Scholar]

- Porsteinsson, A.P.; Isaacson, R.S.; Knox, S.; Sabbagh, M.N.; Rubino, I. Diagnosis of Early Alzheimer’s Disease: Clinical Practice in 2021. J. Prev. Alzheimers Dis. 2021, 8, 371–386. [Google Scholar] [CrossRef]

- Chen, L.; Zhen, W.; Peng, D. Research on Digital Tool in Cognitive Assessment: A Bibliometric Analysis. Front. Psychiatry 2023, 14, 1227261. [Google Scholar] [CrossRef]

- Bohr, A.; Memarzadeh, K. The Rise of Artificial Intelligence in Healthcare Applications. In Artificial Intelligence in Healthcare; Elsevier: Amsterdam, The Netherlands, 2020; pp. 25–60. ISBN 978-0-12-818438-7. [Google Scholar]

- Lindenmayer, J.-P.; Goldring, A.; Borne, S.; Khan, A.; Keefe, R.S.E.; Insel, B.J.; Thanju, A.; Ljuri, I.; Foreman, B. Assessing Instrumental Activities of Daily Living (iADL) with a Game-Based Assessment for Individuals with Schizophrenia. Schizophr. Res. 2020, 223, 166–172. [Google Scholar] [CrossRef]

- Vallejo, V.; Wyss, P.; Rampa, L.; Mitache, A.V.; Müri, R.M.; Mosimann, U.P.; Nef, T. Evaluation of a Novel Serious Game Based Assessment Tool for Patients with Alzheimer’s Disease. PLoS ONE 2017, 12, e0175999. [Google Scholar] [CrossRef]

- Juncos-Rabadán, O.; Pereiro, A.X.; Facal, D.; Reboredo, A.; Lojo-Seoane, C. Do the Cambridge Neuropsychological Test Automated Battery Episodic Memory Measures Discriminate Amnestic Mild Cognitive Impairment? Int. J. Geriatr. Psychiatry 2014, 29, 602–609. [Google Scholar] [CrossRef]

- Junkkila, J.; Oja, S.; Laine, M.; Karrasch, M. Applicability of the CANTAB-PAL Computerized Memory Test in Identifying Amnestic Mild Cognitive Impairment and Alzheimers Disease. Dement. Geriatr. Cogn. Disord. 2012, 34, 83–89. [Google Scholar] [CrossRef]

- Saxton, J.; Morrow, L.; Eschman, A.; Archer, G.; Luther, J.; Zuccolotto, A. Computer Assessment of Mild Cognitive Impairment. Postgrad. Med. 2009, 121, 177–185. [Google Scholar] [CrossRef] [PubMed]

- Wong, W.T.; Tan, N.C.; Lim, J.E.; Allen, J.C.; Lee, W.S.; Quah, J.H.M.; Paulpandi, M.; Teh, T.A.; Lim, S.H.; Malhotra, R. Comparison of Time Taken to Assess Cognitive Function Using a Fully Immersive and Automated Virtual Reality System vs. the Montreal Cognitive Assessment. Front. Aging Neurosci. 2021, 13, 756891. [Google Scholar] [CrossRef] [PubMed]

- Beltrami, D.; Gagliardi, G.; Rossini Favretti, R.; Ghidoni, E.; Tamburini, F.; Calzà, L. Speech Analysis by Natural Language Processing Techniques: A Possible Tool for Very Early Detection of Cognitive Decline? Front. Aging Neurosci. 2018, 10, 369. [Google Scholar] [CrossRef] [PubMed]

- Javed, A.R.; Fahad, L.G.; Farhan, A.A.; Abbas, S.; Srivastava, G.; Parizi, R.M.; Khan, M.S. Automated Cognitive Health Assessment in Smart Homes Using Machine Learning. Sustain. Cities Soc. 2021, 65, 102572. [Google Scholar] [CrossRef]

- Veneziani, I.; Marra, A.; Formica, C.; Grimaldi, A.; Marino, S.; Quartarone, A.; Maresca, G. Applications of Artificial Intelligence in the Neuropsychological Assessment of Dementia: A Systematic Review. J. Pers. Med. 2024, 14, 113. [Google Scholar] [CrossRef]

- Wesnes, K.A. Cognitive Function Testing: The Case for Standardization and Automation. Br. Menopause Soc. J. 2006, 12, 158–163. [Google Scholar] [CrossRef]

- Wang, X.; Zhou, S.; Ye, N.; Li, Y.; Zhou, P.; Chen, G.; Hu, H. Predictive Models of Alzheimer’s Disease Dementia Risk in Older Adults with Mild Cognitive Impairment: A Systematic Review and Critical Appraisal. BMC Geriatr. 2024, 24, 531. [Google Scholar] [CrossRef]

- Wild, K.; Howieson, D.; Webbe, F.; Seelye, A.; Kaye, J. Status of Computerized Cognitive Testing in Aging: A Systematic Review. Alzheimers Dement. 2008, 4, 428–437. [Google Scholar] [CrossRef]

- Zygouris, S.; Tsolaki, M. Computerized Cognitive Testing for Older Adults: A Review. Am. J. Alzheimers Dis. Dement. 2015, 30, 13–28. [Google Scholar] [CrossRef]

- Cubillos, C.; Rienzo, A. Digital Cognitive Assessment Tests for Older Adults: Systematic Literature Review. JMIR Ment. Health 2023, 10, e47487. [Google Scholar] [CrossRef]

- Öhman, F.; Hassenstab, J.; Berron, D.; Schöll, M.; Papp, K.V. Current Advances in Digital Cognitive Assessment for Preclinical Alzheimer’s Disease. Alzheimers Dement. Diagn. Assess. Dis. Monit. 2021, 13, e12217. [Google Scholar] [CrossRef] [PubMed]

- Chan, J.Y.C.; Kwong, J.S.W.; Wong, A.; Kwok, T.C.Y.; Tsoi, K.K.F. Comparison of Computerized and Paper-and-Pencil Memory Tests in Detection of Mild Cognitive Impairment and Dementia: A Systematic Review and Meta-Analysis of Diagnostic Studies. J. Am. Med. Dir. Assoc. 2018, 19, 748–756.e5. [Google Scholar] [CrossRef] [PubMed]

- Millett, G.; Naglie, G.; Upshur, R.; Jaakkimainen, L.; Charles, J.; Tierney, M.C. Computerized Cognitive Testing in Primary Care: A Qualitative Study. Alzheimer Dis. Assoc. Disord. 2018, 32, 114–119. [Google Scholar] [CrossRef] [PubMed]

- Giebel, C.M.; Knopman, D.; Mioshi, E.; Khondoker, M. Distinguishing Frontotemporal Dementia From Alzheimer Disease Through Everyday Function Profiles: Trajectories of Change. J. Geriatr. Psychiatry Neurol. 2021, 34, 66–75. [Google Scholar] [CrossRef] [PubMed]

- Björngrim, S.; Van Den Hurk, W.; Betancort, M.; Machado, A.; Lindau, M. Comparing Traditional and Digitized Cognitive Tests Used in Standard Clinical Evaluation—A Study of the Digital Application Minnemera. Front. Psychol. 2019, 10, 2327. [Google Scholar] [CrossRef]

- Frazier, S.; Pitts, B.J.; McComb, S. Measuring Cognitive Workload in Automated Knowledge Work Environments: A Systematic Literature Review. Cogn. Technol. Work 2022, 24, 557–587. [Google Scholar] [CrossRef]

- Sirilertmekasakul, C.; Rattanawong, W.; Gongvatana, A.; Srikiatkhachorn, A. The Current State of Artificial Intelligence-Augmented Digitized Neurocognitive Screening Test. Front. Hum. Neurosci. 2023, 17, 1133632. [Google Scholar] [CrossRef]

- Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A Model for Types and Levels of Human Interaction with Automation. IEEE Trans. Syst. Man Cybern. Part Syst. Hum. 2000, 30, 286–297. [Google Scholar] [CrossRef]

- Kehl-Floberg, K.E.; Marks, T.S.; Edwards, D.F.; Giles, G.M. Conventional Clock Drawing Tests Have Low to Moderate Reliability and Validity for Detecting Subtle Cognitive Impairments in Community-Dwelling Older Adults. Front. Aging Neurosci. 2023, 15, 1210585. [Google Scholar] [CrossRef]

- Wallace, S.E.; Donoso Brown, E.V.; Simpson, R.C.; D’Acunto, K.; Kranjec, A.; Rodgers, M.; Agostino, C. A Comparison of Electronic and Paper Versions of the Montreal Cognitive Assessment. Alzheimer Dis. Assoc. Disord. 2019, 33, 272–278. [Google Scholar] [CrossRef]

- Noguchi-Shinohara, M.; Domoto, C.; Yoshida, T.; Niwa, K.; Yuki-Nozaki, S.; Samuraki-Yokohama, M.; Sakai, K.; Hamaguchi, T.; Ono, K.; Iwasa, K.; et al. A New Computerized Assessment Battery for Cognition (C-ABC) to Detect Mild Cognitive Impairment and Dementia around 5 Min. PLoS ONE 2020, 15, e0243469. [Google Scholar] [CrossRef] [PubMed]

- Yao, L.; Shono, Y.; Nowinski, C.; Dworak, E.M.; Kaat, A.; Chen, S.; Lovett, R.; Ho, E.; Curtis, L.; Wolf, M.; et al. Prediction of Cognitive Impairment Using Higher Order Item Response Theory and Machine Learning Models. Front. Psychiatry 2024, 14, 1297952. [Google Scholar] [CrossRef] [PubMed]

- Chan, J.Y.C.; Bat, B.K.K.; Wong, A.; Chan, T.K.; Huo, Z.; Yip, B.H.K.; Kowk, T.C.Y.; Tsoi, K.K.F. Evaluation of Digital Drawing Tests and Paper-and-Pencil Drawing Tests for the Screening of Mild Cognitive Impairment and Dementia: A Systematic Review and Meta-Analysis of Diagnostic Studies. Neuropsychol. Rev. 2022, 32, 566–576. [Google Scholar] [CrossRef] [PubMed]

- Devos, P.; Debeer, J.; Ophals, J.; Petrovic, M. Cognitive Impairment Screening Using M-Health: An Android Implementation of the Mini-Mental State Examination (MMSE) Using Speech Recognition. Eur. Geriatr. Med. 2019, 10, 501–509. [Google Scholar] [CrossRef]

- Chatzidimitriou, E.; Ioannidis, P.; Moraitou, D.; Konstantinopoulou, E.; Aretouli, E. The Cognitive and Behavioral Correlates of Functional Status in Patients with Frontotemporal Dementia: A Pilot Study. Front. Hum. Neurosci. 2023, 17, 1087765. [Google Scholar] [CrossRef]

- Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A Brief Screening Tool For Mild Cognitive Impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699. [Google Scholar] [CrossRef]

- Folstein, M.F.; Folstein, S.E.; McHugh, P.R. Mini-Mental State. J. Psychiatr. Res. 1975, 12, 189–198. [Google Scholar] [CrossRef]

- Borson, S.; Scanlan, J.; Brush, M.; Vitaliano, P.; Dokmak, A. The Mini-Cog: A Cognitive? Vital Signs? Measure for Dementia Screening in Multi-Lingual Elderly. Int. J. Geriatr. Psychiatry 2000, 15, 1021–1027. [Google Scholar] [CrossRef]

- Cipriani, G.; Danti, S.; Picchi, L.; Nuti, A.; Fiorino, M.D. Daily Functioning and Dementia. Dement. Neuropsychol. 2020, 14, 93–102. [Google Scholar] [CrossRef]

- Loewenstein, D.A.; Arguelles, S.; Bravo, M.; Freeman, R.Q.; Arguelles, T.; Acevedo, A.; Eisdorfer, C. Caregivers’ Judgments of the Functional Abilities of the Alzheimer’s Disease Patient: A Comparison of Proxy Reports and Objective Measures. J. Gerontol. B Psychol. Sci. Soc. Sci. 2001, 56, P78–P84. [Google Scholar] [CrossRef]

- Graf, C. The Lawton Instrumental Activities of Daily Living Scale. AJN Am. J. Nurs. 2008, 108, 52–62. [Google Scholar] [CrossRef] [PubMed]

- Katz, S.; Downs, T.D.; Cash, H.R.; Grotz, R.C. Progress in Development of the Index of ADL. Gerontologist 1970, 10, 20–30. [Google Scholar] [CrossRef] [PubMed]

- Nielsen, L.M.; Kirkegaard, H.; Østergaard, L.G.; Bovbjerg, K.; Breinholt, K.; Maribo, T. Comparison of Self-Reported and Performance-Based Measures of Functional Ability in Elderly Patients in an Emergency Department: Implications for Selection of Clinical Outcome Measures. BMC Geriatr. 2016, 16, 199. [Google Scholar] [CrossRef] [PubMed]

- Royall, D.R.; Lauterbach, E.C.; Kaufer, D.; Malloy, P.; Coburn, K.L.; Black, K.J. The Cognitive Correlates of Functional Status: A Review From the Committee on Research of the American Neuropsychiatric Association. J. Neuropsychiatry Clin. Neurosci. 2007, 19, 249–265. [Google Scholar] [CrossRef] [PubMed]

- Ye, S.; Ko, B.; Phi, H.; Eagleman, D.; Flores, B.; Katz, Y.; Huang, B.; Hosseini Ghomi, R. Validity of Computer Based Administration of Cognitive Assessments Compared to Traditional Paper-Based Administration: Psychiatry and Clinical Psychology. medRxiv 2020. [Google Scholar] [CrossRef]

- Valladares-Rodriguez, S.; Pérez-Rodriguez, R.; Fernandez-Iglesias, J.M.; Anido-Rifón, L.; Facal, D.; Rivas-Costa, C. Learning to Detect Cognitive Impairment through Digital Games and Machine Learning Techniques: A Preliminary Study. Methods Inf. Med. 2018, 57, 197–207. [Google Scholar] [CrossRef]

- Sternin, A.; Burns, A.; Owen, A.M. Thirty-Five Years of Computerized Cognitive Assessment of Aging—Where Are We Now? Diagnostics 2019, 9, 114. [Google Scholar] [CrossRef]

- Zeng, Z.; Fauvel, S.; Hsiang, B.T.T.; Wang, D.; Qiu, Y.; Khuan, P.C.O.; Leung, C.; Shen, Z.; Chin, J.J. Towards Long-Term Tracking and Detection of Early Dementia: A Computerized Cognitive Test Battery with Gamification. In Proceedings of the 3rd International Conference on Crowd Science and Engineering, Singapore, 28–31 July 2018; ACM: New York, NY, USA, 2018; pp. 1–10. [Google Scholar]

- Cheng, X.; Gilmore, G.C.; Lerner, A.J.; Lee, K. Computerized Block Games for Automated Cognitive Assessment: Development and Evaluation Study. JMIR Serious Games 2023, 11, e40931. [Google Scholar] [CrossRef]

- Lee, K.; Jeong, D.; Schindler, R.C.; Short, E.J. SIG-Blocks: Tangible Game Technology for Automated Cognitive Assessment. Comput. Hum. Behav. 2016, 65, 163–175. [Google Scholar] [CrossRef]

- Kawahara, Y.; Ikeda, Y.; Deguchi, K.; Kurata, T.; Hishikawa, N.; Sato, K.; Kono, S.; Yunoki, T.; Omote, Y.; Yamashita, T.; et al. Simultaneous Assessment of Cognitive and Affective Functions in Multiple System Atrophy and Cortical Cerebellar Atrophy in Relation to Computerized Touch-Panel Screening Tests. J. Neurol. Sci. 2015, 351, 24–30. [Google Scholar] [CrossRef]

- Yang, J.; Jiang, R.; Ding, H.; Au, R.; Chen, J.; Li, C.; An, N. Designing and Evaluating MahjongBrain: A Digital Cognitive Assessment Tool Through Gamification. In HCI International 2023—Late Breaking Papers; Gao, Q., Zhou, J., Duffy, V.G., Antona, M., Stephanidis, C., Eds.; Lecture Notes in Computer Science; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 14055, pp. 264–278. ISBN 978-3-031-48040-9. [Google Scholar]

- Hsu, W.-Y.; Rowles, W.; Anguera, J.A.; Zhao, C.; Anderson, A.; Alexander, A.; Sacco, S.; Henry, R.; Gazzaley, A.; Bove, R. Application of an Adaptive, Digital, Game-Based Approach for Cognitive Assessment in Multiple Sclerosis: Observational Study. J. Med. Internet Res. 2021, 23, e24356. [Google Scholar] [CrossRef] [PubMed]

- Oliva, I.; Losa, J. Validation of the Computerized Cognitive Assessment Test: NNCT. Int. J. Environ. Res. Public Health 2022, 19, 10495. [Google Scholar] [CrossRef] [PubMed]

- Berg, J.-L.; Durant, J.; Léger, G.C.; Cummings, J.L.; Nasreddine, Z.; Miller, J.B. Comparing the Electronic and Standard Versions of the Montreal Cognitive Assessment in an Outpatient Memory Disorders Clinic: A Validation Study. J. Alzheimers Dis. 2018, 62, 93–97. [Google Scholar] [CrossRef] [PubMed]

- Snowdon, A.; Hussein, A.; Kent, R.; Pino, L.; Hachinski, V. Comparison of an Electronic and Paper-Based Montreal Cognitive Assessment Tool. Alzheimer Dis. Assoc. Disord. 2015, 29, 325–329. [Google Scholar] [CrossRef]

- Park, I.; Kim, Y.J.; Kim, Y.J.; Lee, U. Automatic, Qualitative Scoring of the Interlocking Pentagon Drawing Test (PDT) Based on U-Net and Mobile Sensor Data. Sensors 2020, 20, 1283. [Google Scholar] [CrossRef]

- Park, S.-Y.; Schott, N. The Trail-Making-Test: Comparison between Paper-and-Pencil and Computerized Versions in Young and Healthy Older Adults. Appl. Neuropsychol. Adult 2022, 29, 1208–1220. [Google Scholar] [CrossRef]

- Dahmen, J.; Cook, D.; Fellows, R.; Schmitter-Edgecombe, M. An Analysis of a Digital Variant of the Trail Making Test Using Machine Learning Techniques. Technol. Health Care 2017, 25, 251–264. [Google Scholar] [CrossRef]

- Heimann-Steinert, A.; Latendorf, A.; Prange, A.; Sonntag, D.; Müller-Werdan, U. Digital Pen Technology for Conducting Cognitive Assessments: A Cross-over Study with Older Adults. Psychol. Res. 2021, 85, 3075–3083. [Google Scholar] [CrossRef]

- Müller, S.; Preische, O.; Heymann, P.; Elbing, U.; Laske, C. Increased Diagnostic Accuracy of Digital vs. Conventional Clock Drawing Test for Discrimination of Patients in the Early Course of Alzheimer’s Disease from Cognitively Healthy Individuals. Front. Aging Neurosci. 2017, 9, 101. [Google Scholar] [CrossRef]

- Yu, K.; Zhang, S.; Wang, Q.; Wang, X.; Qin, Y.; Wang, J.; Li, C.; Wu, Y.; Wang, W.; Lin, H. Development of a Computerized Tool for the Chinese Version of the Montreal Cognitive Assessment for Screening Mild Cognitive Impairment. Int. Psychogeriatr. 2015, 27, 213–219. [Google Scholar] [CrossRef]

- Xie, S.S.; Goldstein, C.M.; Gathright, E.C.; Gunstad, J.; Dolansky, M.A.; Redle, J.; Hughes, J.W. Performance of the Automated Neuropsychological Assessment Metrics (ANAM) in Detecting Cognitive Impairment in Heart Failure Patients. Heart Lung 2015, 44, 387–394. [Google Scholar] [CrossRef] [PubMed]

- Dougherty, J.H.; Cannon, R.L.; Nicholas, C.R.; Hall, L.; Hare, F.; Carr, E.; Dougherty, A.; Janowitz, J.; Arunthamakun, J. The Computerized Self Test (CST): An Interactive, Internet Accessible Cognitive Screening Test For Dementia. J. Alzheimers Dis. 2010, 20, 185–195. [Google Scholar] [CrossRef] [PubMed]

- Scanlon, L.; O’Shea, E.; O’Caoimh, R.; Timmons, S. Usability and Validity of a Battery of Computerised Cognitive Screening Tests for Detecting Cognitive Impairment. Gerontology 2016, 62, 247–252. [Google Scholar] [CrossRef] [PubMed]

- Park, J.-H.; Jung, M.; Kim, J.; Park, H.Y.; Kim, J.-R.; Park, J.-H. Validity of a Novel Computerized Screening Test System for Mild Cognitive Impairment. Int. Psychogeriatr. 2018, 30, 1455–1463. [Google Scholar] [CrossRef] [PubMed]

- Fung, A.W.-T.; Lam, L.C.W. Validation of a Computerized Hong Kong—Vigilance and Memory Test (HK-VMT) to Detect Early Cognitive Impairment in Healthy Older Adults. Aging Ment. Health 2020, 24, 186–192. [Google Scholar] [CrossRef]

- Dawadi, P.N.; Cook, D.J.; Schmitter-Edgecombe, M. Automated Cognitive Health Assessment From Smart Home-Based Behavior Data. IEEE J. Biomed. Health Inform. 2016, 20, 1188–1194. [Google Scholar] [CrossRef]

- Dawadi, P.N.; Cook, D.J.; Schmitter-Edgecombe, M. Automated Cognitive Health Assessment Using Smart Home Monitoring of Complex Tasks. IEEE Trans. Syst. Man Cybern. Syst. 2013, 43, 1302–1313. [Google Scholar] [CrossRef]

- Javed, A.R.; Saadia, A.; Mughal, H.; Gadekallu, T.R.; Rizwan, M.; Maddikunta, P.K.R.; Mahmud, M.; Liyanage, M.; Hussain, A. Artificial Intelligence for Cognitive Health Assessment: State-of-the-Art, Open Challenges and Future Directions. Cogn. Comput. 2023, 15, 1767–1812. [Google Scholar] [CrossRef]

- Vacante, M.; Wilcock, G.K.; De Jager, C.A. Computerized Adaptation of The Placing Test for Early Detection of Both Mild Cognitive Impairment and Alzheimer’s Disease. J. Clin. Exp. Neuropsychol. 2013, 35, 846–856. [Google Scholar] [CrossRef]

- Sato, K.; Niimi, Y.; Mano, T.; Iwata, A.; Iwatsubo, T. Automated Evaluation of Conventional Clock-Drawing Test Using Deep Neural Network: Potential as a Mass Screening Tool to Detect Individuals with Cognitive Decline. Front. Neurol. 2022, 13, 896403. [Google Scholar] [CrossRef]

- Youn, Y.C.; Pyun, J.-M.; Ryu, N.; Baek, M.J.; Jang, J.-W.; Park, Y.H.; Ahn, S.-W.; Shin, H.-W.; Park, K.-Y.; Kim, S. Use of the Clock Drawing Test and the Rey–Osterrieth Complex Figure Test-Copy with Convolutional Neural Networks to Predict Cognitive Impairment. Alzheimer’s Res. Ther. 2020, 13, 85. [Google Scholar] [CrossRef] [PubMed]

- Kaser, A.N.; Lacritz, L.H.; Winiarski, H.R.; Gabirondo, P.; Schaffert, J.; Coca, A.J.; Jiménez-Raboso, J.; Rojo, T.; Zaldua, C.; Honorato, I.; et al. A Novel Speech Analysis Algorithm to Detect Cognitive Impairment in a Spanish Population. Front. Neurol. 2024, 15, 1342907. [Google Scholar] [CrossRef] [PubMed]

- Hajjar, I.; Okafor, M.; Choi, J.D.; Moore, E.; Abrol, A.; Calhoun, V.D.; Goldstein, F.C. Development of Digital Voice Biomarkers and Associations with Cognition, Cerebrospinal Biomarkers, and Neural Representation in Early Alzheimer’s Disease. Alzheimers Dement. Diagn. Assess. Dis. Monit. 2023, 15, e12393. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.; Asgari, M.; Gale, R.; Wild, K.; Dodge, H.; Kaye, J. Improving the Assessment of Mild Cognitive Impairment in Advanced Age with a Novel Multi-Feature Automated Speech and Language Analysis of Verbal Fluency. Front. Psychol. 2020, 11, 535. [Google Scholar] [CrossRef] [PubMed]

- Robin, J.; Xu, M.; Kaufman, L.D.; Simpson, W. Using Digital Speech Assessments to Detect Early Signs of Cognitive Impairment. Front. Digit. Health 2021, 3, 749758. [Google Scholar] [CrossRef]

- Yeung, A.; Iaboni, A.; Rochon, E.; Lavoie, M.; Santiago, C.; Yancheva, M.; Novikova, J.; Xu, M.; Robin, J.; Kaufman, L.D.; et al. Correlating Natural Language Processing and Automated Speech Analysis with Clinician Assessment to Quantify Speech-Language Changes in Mild Cognitive Impairment and Alzheimer’s Dementia. Alzheimers Res. Ther. 2021, 13, 109. [Google Scholar] [CrossRef]

- Ruengchaijatuporn, N.; Chatnuntawech, I.; Teerapittayanon, S.; Sriswasdi, S.; Itthipuripat, S.; Hemrungrojn, S.; Bunyabukkana, P.; Petchlorlian, A.; Chunamchai, S.; Chotibut, T.; et al. An Explainable Self-Attention Deep Neural Network for Detecting Mild Cognitive Impairment Using Multi-Input Digital Drawing Tasks. Alzheimers Res. Ther. 2022, 14, 111. [Google Scholar] [CrossRef]

- Chen, S.; Stromer, D.; Alabdalrahim, H.A.; Schwab, S.; Weih, M.; Maier, A. Automatic Dementia Screening and Scoring by Applying Deep Learning on Clock-Drawing Tests. Sci. Rep. 2020, 10, 20854. [Google Scholar] [CrossRef]

- Park, J.-H. Non-Equivalence of Sub-Tasks of the Rey-Osterrieth Complex Figure Test with Convolutional Neural Networks to Discriminate Mild Cognitive Impairment. BMC Psychiatry 2024, 24, 166. [Google Scholar] [CrossRef]

- Bergeron, M.F.; Landset, S.; Zhou, X.; Ding, T.; Khoshgoftaar, T.M.; Zhao, F.; Du, B.; Chen, X.; Wang, X.; Zhong, L.; et al. Utility of MemTrax and Machine Learning Modeling in Classification of Mild Cognitive Impairment. J. Alzheimers Dis. 2020, 77, 1545–1558. [Google Scholar] [CrossRef]

- Nakaoku, Y.; Ogata, S.; Murata, S.; Nishimori, M.; Ihara, M.; Iihara, K.; Takegami, M.; Nishimura, K. AI-Assisted In-House Power Monitoring for the Detection of Cognitive Impairment in Older Adults. Sensors 2021, 21, 6249. [Google Scholar] [CrossRef]

- Rykov, Y.G.; Patterson, M.D.; Gangwar, B.A.; Jabar, S.B.; Leonardo, J.; Ng, K.P.; Kandiah, N. Predicting Cognitive Scores from Wearable-Based Digital Physiological Features Using Machine Learning: Data from a Clinical Trial in Mild Cognitive Impairment. BMC Med. 2024, 22, 36. [Google Scholar] [CrossRef]

- Jia, X.; Wang, Z.; Huang, F.; Su, C.; Du, W.; Jiang, H.; Wang, H.; Wang, J.; Wang, F.; Su, W.; et al. A Comparison of the Mini-Mental State Examination (MMSE) with the Montreal Cognitive Assessment (MoCA) for Mild Cognitive Impairment Screening in Chinese Middle-Aged and Older Population: A Cross-Sectional Study. BMC Psychiatry 2021, 21, 485. [Google Scholar] [CrossRef]

- Trevethan, R. Sensitivity, Specificity, and Predictive Values: Foundations, Pliabilities, and Pitfalls in Research and Practice. Front. Public Health 2017, 5, 307.

- Senthilnathan, S. Usefulness of Correlation Analysis. SSRN Electron. J. 2019.

- Janssens, A.C.J.W.; Martens, F.K. Reflection on Modern Methods: Revisiting the Area under the ROC Curve. Int. J. Epidemiol. 2020, 49, 1397–1403.

- Ahmed, S.; De Jager, C.; Wilcock, G. A Comparison of Screening Tools for the Assessment of Mild Cognitive Impairment: Preliminary Findings. Neurocase 2012, 18, 336–351.

- Wong, A.; Fong, C.; Mok, V.C.; Leung, K.; Tong, R.K. Computerized Cognitive Screen (CoCoSc): A Self-Administered Computerized Test for Screening for Cognitive Impairment in Community Social Centers. J. Alzheimers Dis. 2017, 59, 1299–1306.

- Larner, A.J. Screening Utility of the Montreal Cognitive Assessment (MoCA): In Place of—or as Well as—the MMSE? Int. Psychogeriatr. 2012, 24, 391–396.

- Tierney, M.C.; Naglie, G.; Upshur, R.; Moineddin, R.; Charles, J.; Liisa Jaakkimainen, R. Feasibility and Validity of the Self-Administered Computerized Assessment of Mild Cognitive Impairment with Older Primary Care Patients. Alzheimer Dis. Assoc. Disord. 2014, 28, 311–319.

- Phillips, M.; Rogers, P.; Haworth, J.; Bayer, A.; Tales, A. Intra-Individual Reaction Time Variability in Mild Cognitive Impairment and Alzheimer’s Disease: Gender, Processing Load and Speed Factors. PLoS ONE 2013, 8, e65712.

- Lehr, M.; Prud’hommeaux, E.; Shafran, I.; Roark, B. Fully Automated Neuropsychological Assessment for Detecting Mild Cognitive Impairment. In Proceedings of the Interspeech 2012, Portland, OR, USA, 9–13 September 2012; ISCA: Singapore, 2012; pp. 1039–1042.

- Calamia, M.; Weitzner, D.S.; De Vito, A.N.; Bernstein, J.P.K.; Allen, R.; Keller, J.N. Feasibility and Validation of a Web-Based Platform for the Self-Administered Patient Collection of Demographics, Health Status, Anxiety, Depression, and Cognition in Community Dwelling Elderly. PLoS ONE 2021, 16, e0244962.

- Bissig, D.; Kaye, J.; Erten-Lyons, D. Validation of SATURN, a Free, Electronic, Self-administered Cognitive Screening Test. Alzheimers Dement. Transl. Res. Clin. Interv. 2020, 6, e12116.

- Ip, E.H.; Barnard, R.; Marshall, S.A.; Lu, L.; Sink, K.; Wilson, V.; Chamberlain, D.; Rapp, S.R. Development of a Video-Simulation Instrument for Assessing Cognition in Older Adults. BMC Med. Inform. Decis. Mak. 2017, 17, 161.

- Satoh, T.; Sawada, Y.; Saba, H.; Kitamoto, H.; Kato, Y.; Shiozuka, Y.; Kuwada, T.; Shima, S.; Murakami, K.; Sasaki, M.; et al. Assessment of Mild Cognitive Impairment Using CogEvo: A Computerized Cognitive Function Assessment Tool. J. Prim. Care Community Health 2024, 15, 21501319241239228.

- Dwolatzky, T.; Dimant, L.; Simon, E.S.; Doniger, G.M. Validity of a Short Computerized Assessment Battery for Moderate Cognitive Impairment and Dementia. Int. Psychogeriatr. 2010, 22, 795–803.

- Ye, S.; Sun, K.; Huynh, D.; Phi, H.Q.; Ko, B.; Huang, B.; Hosseini Ghomi, R. A Computerized Cognitive Test Battery for Detection of Dementia and Mild Cognitive Impairment: Instrument Validation Study. JMIR Aging 2022, 5, e36825.

- Patrick, K.S.; Chakrabati, S.; Rhoads, T.; Busch, R.M.; Floden, D.P.; Galioto, R. Utility of the Brief Assessment of Cognitive Health (BACH) Computerized Screening Tool in Identifying MS-Related Cognitive Impairment. Mult. Scler. Relat. Disord. 2024, 82, 105398.

- Yuen, K.; Beaton, D.; Bingham, K.; Katz, P.; Su, J.; Diaz Martinez, J.P.; Tartaglia, M.C.; Ruttan, L.; Wither, J.E.; Kakvan, M.; et al. Validation of the Automated Neuropsychological Assessment Metrics for Assessing Cognitive Impairment in Systemic Lupus Erythematosus. Lupus 2022, 31, 45–54.

- Rodríguez-Salgado, A.M.; Llibre-Guerra, J.J.; Tsoy, E.; Peñalver-Guia, A.I.; Bringas, G.; Erlhoff, S.J.; Kramer, J.H.; Allen, I.E.; Valcour, V.; Miller, B.L.; et al. A Brief Digital Cognitive Assessment for Detection of Cognitive Impairment in Cuban Older Adults. J. Alzheimers Dis. 2021, 79, 85–94.

- Fukui, Y.; Yamashita, T.; Hishikawa, N.; Kurata, T.; Sato, K.; Omote, Y.; Kono, S.; Yunoki, T.; Kawahara, Y.; Hatanaka, N.; et al. Computerized Touch-Panel Screening Tests for Detecting Mild Cognitive Impairment and Alzheimer’s Disease. Intern. Med. 2015, 54, 895–902.

- Takechi, H.; Yoshino, H. Usefulness of CogEvo, a Computerized Cognitive Assessment and Training Tool, for Distinguishing Patients with Mild Alzheimer’s Disease and Mild Cognitive Impairment from Cognitively Normal Older People. Geriatr. Gerontol. Int. 2021, 21, 192–196.

- Kouzuki, M.; Miyamoto, M.; Tanaka, N.; Urakami, K. Validation of a Novel Computerized Cognitive Function Test for the Rapid Detection of Mild Cognitive Impairment. BMC Neurol. 2022, 22, 457.

- Ruano, L.; Sousa, A.; Severo, M.; Alves, I.; Colunas, M.; Barreto, R.; Mateus, C.; Moreira, S.; Conde, E.; Bento, V.; et al. Development of a Self-Administered Web-Based Test for Longitudinal Cognitive Assessment. Sci. Rep. 2016, 6, 19114.

- Curiel, R.E.; Crocco, E.; Rosado, M.; Duara, R.; Greig, M.T.; Raffo, A.; Loewenstein, D.A. A Brief Computerized Paired Associate Test for the Detection of Mild Cognitive Impairment in Community-Dwelling Older Adults. J. Alzheimers Dis. 2016, 54, 793–799.

- Dawadi, P.N.; Cook, D.J.; Schmitter-Edgecombe, M.; Parsey, C. Automated Assessment of Cognitive Health Using Smart Home Technologies. Technol. Health Care 2013, 21, 323–343.

- Maito, M.A.; Santamaría-García, H.; Moguilner, S.; Possin, K.L.; Godoy, M.E.; Avila-Funes, J.A.; Behrens, M.I.; Brusco, I.L.; Bruno, M.A.; Cardona, J.F.; et al. Classification of Alzheimer’s Disease and Frontotemporal Dementia Using Routine Clinical and Cognitive Measures across Multicentric Underrepresented Samples: A Cross Sectional Observational Study. Lancet Reg. Health Am. 2023, 17, 100387.

- Tsai, C.-F.; Chen, C.-C.; Wu, E.H.-K.; Chung, C.-R.; Huang, C.-Y.; Tsai, P.-Y.; Yeh, S.-C. A Machine-Learning-Based Assessment Method for Early-Stage Neurocognitive Impairment by an Immersive Virtual Supermarket. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2124–2132.

- Xiao, Y.; Jia, Z.; Dong, M.; Song, K.; Li, X.; Bian, D.; Li, Y.; Jiang, N.; Shi, C.; Li, G. Development and Validity of Computerized Neuropsychological Assessment Devices for Screening Mild Cognitive Impairment: Ensemble of Models with Feature Space Heterogeneity and Retrieval Practice Effect. J. Biomed. Inform. 2022, 131, 104108.

- Lagun, D.; Manzanares, C.; Zola, S.M.; Buffalo, E.A.; Agichtein, E. Detecting Cognitive Impairment by Eye Movement Analysis Using Automatic Classification Algorithms. J. Neurosci. Methods 2011, 201, 196–203.

- Kang, M.J.; Kim, S.Y.; Na, D.L.; Kim, B.C.; Yang, D.W.; Kim, E.-J.; Na, H.R.; Han, H.J.; Lee, J.-H.; Kim, J.H.; et al. Prediction of Cognitive Impairment via Deep Learning Trained with Multi-Center Neuropsychological Test Data. BMC Med. Inform. Decis. Mak. 2019, 19, 231.

- Xiao, H.; Fangfang, H.; Qiong, W.; Shuai, Z.; Jingya, Z.; Xu, L.; Guodong, S.; Yan, Z. The Value of Handgrip Strength and Self-Rated Squat Ability in Predicting Mild Cognitive Impairment: Development and Validation of a Prediction Model. Inq. J. Health Care Organ. Provis. Financ. 2023, 60, 004695802311552.

- Na, K.-S. Prediction of Future Cognitive Impairment among the Community Elderly: A Machine-Learning Based Approach. Sci. Rep. 2019, 9, 3335.

- Kalafatis, C.; Modarres, M.H.; Apostolou, P.; Marefat, H.; Khanbagi, M.; Karimi, H.; Vahabi, Z.; Aarsland, D.; Khaligh-Razavi, S.-M. Validity and Cultural Generalisability of a 5-Minute AI-Based, Computerised Cognitive Assessment in Mild Cognitive Impairment and Alzheimer’s Dementia. Front. Psychiatry 2021, 12, 706695.

- O’Malley, R.P.D.; Mirheidari, B.; Harkness, K.; Reuber, M.; Venneri, A.; Walker, T.; Christensen, H.; Blackburn, D. Fully Automated Cognitive Screening Tool Based on Assessment of Speech and Language. J. Neurol. Neurosurg. Psychiatry 2021, 92, 12–15.

- Zhou, H.; Park, C.; Shahbazi, M.; York, M.K.; Kunik, M.E.; Naik, A.D.; Najafi, B. Digital Biomarkers of Cognitive Frailty: The Value of Detailed Gait Assessment Beyond Gait Speed. Gerontology 2022, 68, 224–233.

- Alzheimer’s Association. 2024 Alzheimer’s Disease Facts and Figures. Alzheimers Dement. 2024, 20, 3708–3821.

- Handzlik, D.; Richmond, L.L.; Skiena, S.; Carr, M.A.; Clouston, S.A.P.; Luft, B.J. Explainable Automated Evaluation of the Clock Drawing Task for Memory Impairment Screening. Alzheimers Dement. Diagn. Assess. Dis. Monit. 2023, 15, e12441.

- Wei, W.; Zhào, H.; Liu, Y.; Huang, Y. Traditional Trail Making Test Modified into Brand-New Assessment Tools: Digital and Walking Trail Making Test. J. Vis. Exp. 2019, 153, e60456.

- Drapeau, C.E.; Bastien-Toniazzo, M.; Rous, C.; Carlier, M. Nonequivalence of Computerized and Paper-and-Pencil Versions of Trail Making Test. Percept. Mot. Skills 2007, 104, 785–791.

- Sacco, G.; Ben-Sadoun, G.; Bourgeois, J.; Fabre, R.; Manera, V.; Robert, P. Comparison between a Paper-Pencil Version and Computerized Version for the Realization of a Neuropsychological Test: The Example of the Trail Making Test. J. Alzheimers Dis. 2019, 68, 1657–1666.

- Jee, H.; Park, J. Feasibility of a Novice Electronic Psychometric Assessment System for Cognitively Impaired. J. Exerc. Rehabil. 2020, 16, 489–495.

- Cahn-Hidalgo, D.; Estes, P.W.; Benabou, R. Validity, Reliability, and Psychometric Properties of a Computerized, Cognitive Assessment Test (Cognivue®). World J. Psychiatry 2020, 10, 1–11.

- Tornatore, J.B.; Hill, E.; Laboff, J.A.; McGann, M.E. Self-Administered Screening for Mild Cognitive Impairment: Initial Validation of a Computerized Test Battery. J. Neuropsychiatry Clin. Neurosci. 2005, 17, 98–105.

- Shopin, L.; Shenhar-Tsarfaty, S.; Ben Assayag, E.; Hallevi, H.; Korczyn, A.D.; Bornstein, N.M.; Auriel, E. Cognitive Assessment in Proximity to Acute Ischemic Stroke/Transient Ischemic Attack: Comparison of the Montreal Cognitive Assessment Test and MindStreams Computerized Cognitive Assessment Battery. Dement. Geriatr. Cogn. Disord. 2013, 36, 36–42.

- Ritsner, M.S.; Blumenkrantz, H.; Dubinsky, T.; Dwolatzky, T. The Detection of Neurocognitive Decline in Schizophrenia Using the Mindstreams Computerized Cognitive Test Battery. Schizophr. Res. 2006, 82, 39–49.

- Hammers, D.; Spurgeon, E.; Ryan, K.; Persad, C.; Barbas, N.; Heidebrink, J.; Darby, D.; Giordani, B. Validity of a Brief Computerized Cognitive Screening Test in Dementia. J. Geriatr. Psychiatry Neurol. 2012, 25, 89–99.

- Segkouli, S.; Paliokas, I.; Tzovaras, D.; Lazarou, I.; Karagiannidis, C.; Vlachos, F.; Tsolaki, M. A Computerized Test for the Assessment of Mild Cognitive Impairment Subtypes in Sentence Processing. Aging Neuropsychol. Cogn. 2018, 25, 829–851.

- Gills, J.L.; Bott, N.T.; Madero, E.N.; Glenn, J.M.; Gray, M. A Short Digital Eye-Tracking Assessment Predicts Cognitive Status among Adults. GeroScience 2021, 43, 297–308.

- Larøi, F.; Canlaire, J.; Mourad, H.; Van Der Linden, M. Relations between a Computerized Shopping Task and Cognitive Tests in a Group of Persons Diagnosed with Schizophrenia Compared with Healthy Controls. J. Int. Neuropsychol. Soc. 2010, 16, 180–189.

- Da Motta, C.; Carvalho, C.B.; Castilho, P.; Pato, M.T. Assessment of Neurocognitive Function and Social Cognition with Computerized Batteries: Psychometric Properties of the Portuguese PennCNB in Healthy Controls. Curr. Psychol. 2021, 40, 4851–4862.

- Mackin, R.S.; Rhodes, E.; Insel, P.S.; Nosheny, R.; Finley, S.; Ashford, M.; Camacho, M.R.; Truran, D.; Mosca, K.; Seabrook, G.; et al. Reliability and Validity of a Home-Based Self-Administered Computerized Test of Learning and Memory Using Speech Recognition. Aging Neuropsychol. Cogn. 2022, 29, 867–881.

- Cavedoni, S.; Chirico, A.; Pedroli, E.; Cipresso, P.; Riva, G. Digital Biomarkers for the Early Detection of Mild Cognitive Impairment: Artificial Intelligence Meets Virtual Reality. Front. Hum. Neurosci. 2020, 14, 245.

- Lim, J.E.; Wong, W.T.; Teh, T.A.; Lim, S.H.; Allen, J.C.; Quah, J.H.M.; Malhotra, R.; Tan, N.C. A Fully-Immersive and Automated Virtual Reality System to Assess the Six Domains of Cognition: Protocol for a Feasibility Study. Front. Aging Neurosci. 2021, 12, 604670.

- Jamshed, M.; Shahzad, A.; Riaz, F.; Kim, K. Exploring Inertial Sensor-Based Balance Biomarkers for Early Detection of Mild Cognitive Impairment. Sci. Rep. 2024, 14, 9829.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Babatope, E.Y.; Ramírez-Acosta, A.Á.; Avila-Funes, J.A.; García-Vázquez, M. The Potential of Automated Assessment of Cognitive Function Using Non-Neuroimaging Data: A Systematic Review. J. Clin. Med. 2024, 13, 7068. https://doi.org/10.3390/jcm13237068
