Prediction of White Matter Hyperintensity in Brain MRI Using Fundus Photographs via Deep Learning

Abstract

1. Introduction

2. Materials and Methods

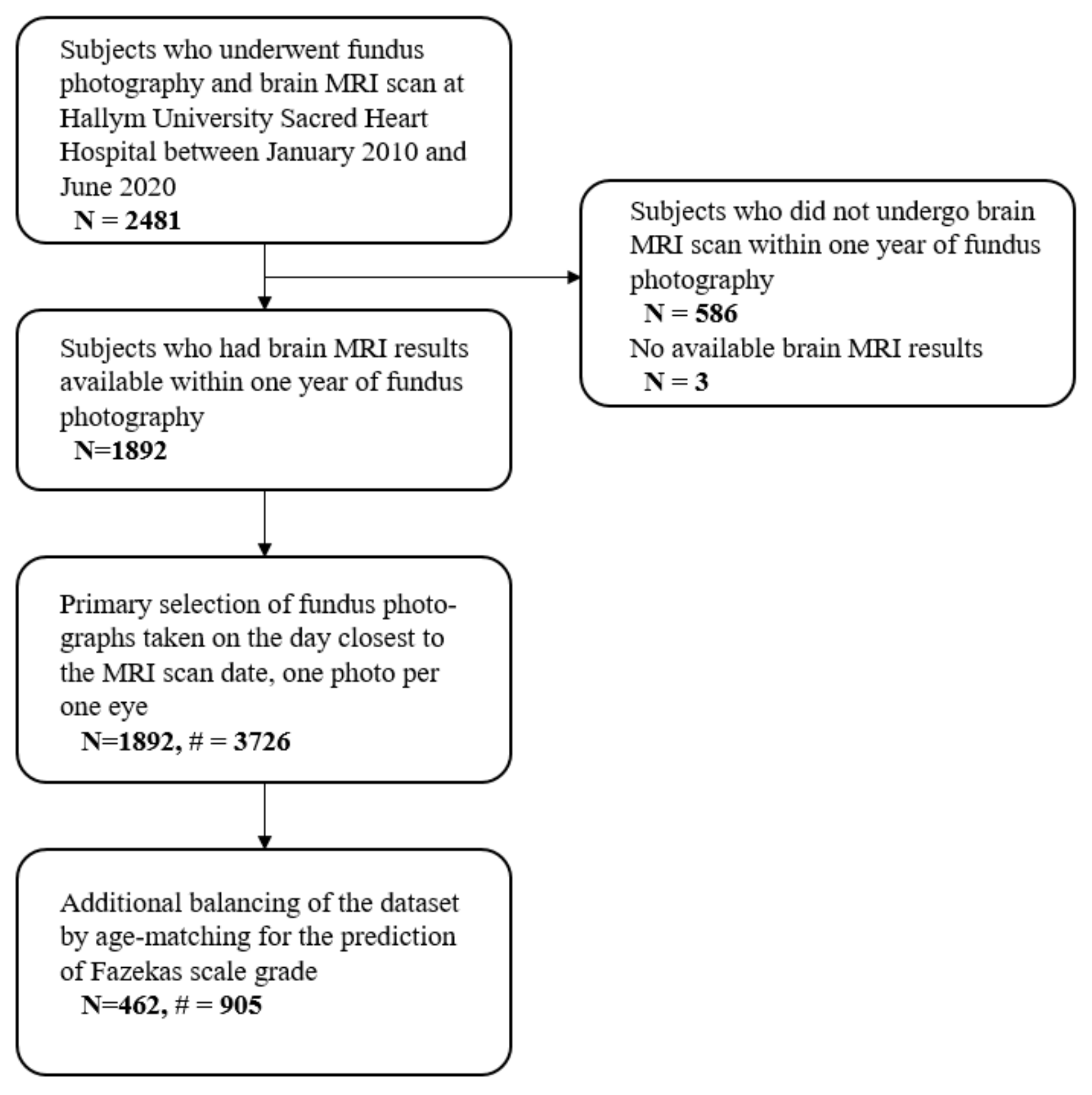

2.1. Study Participants

2.2. Grading Cerebral White Matter Hyperintensities

2.3. Collection of Fundus Photographs

2.4. Construction of Datasets

2.5. Data Pre-Processing & Augmentation

2.6. Training Convolutional Neural Networks

2.7. Implementation of Salience Maps

2.8. Statistical Analysis

3. Results

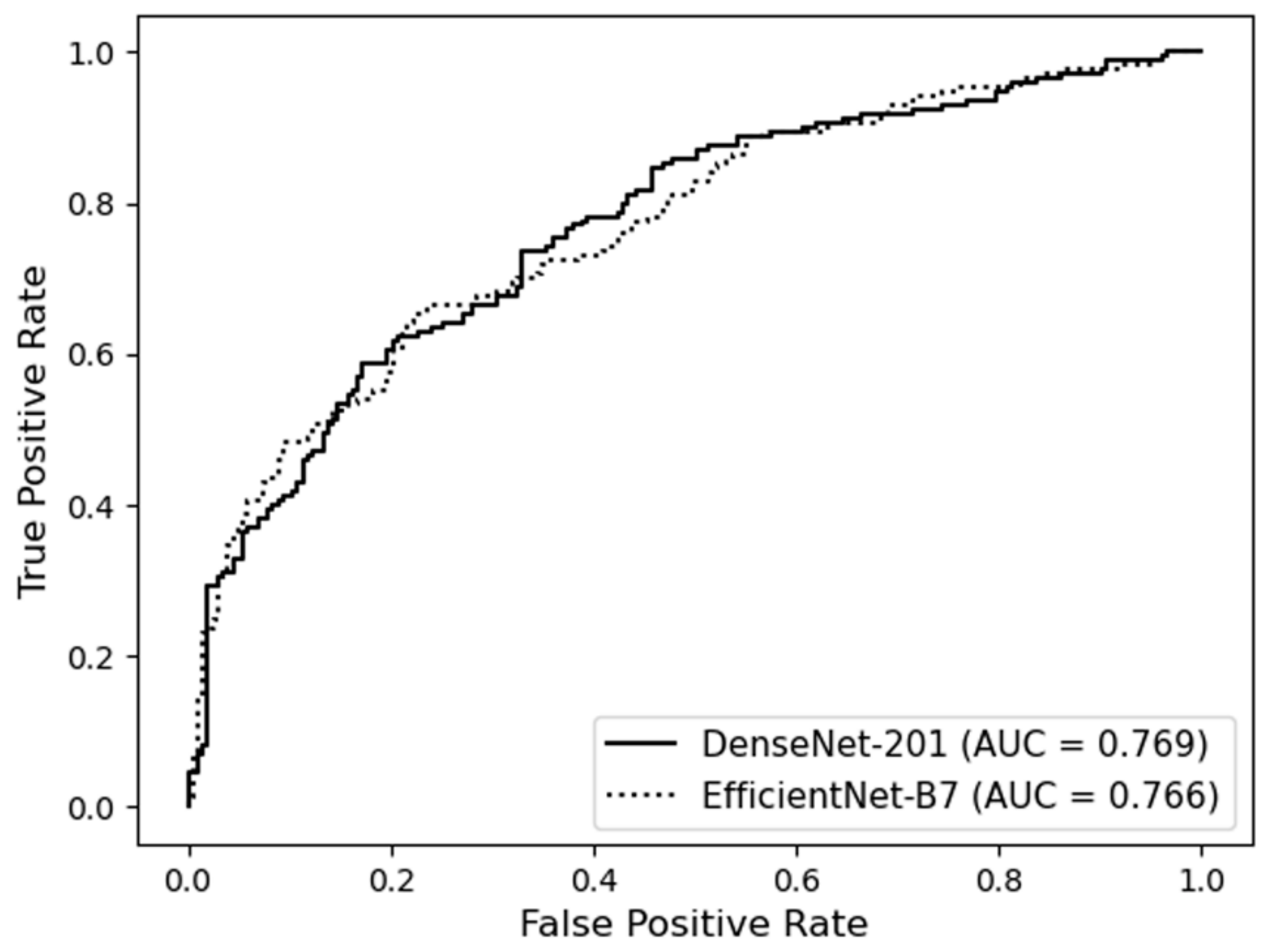

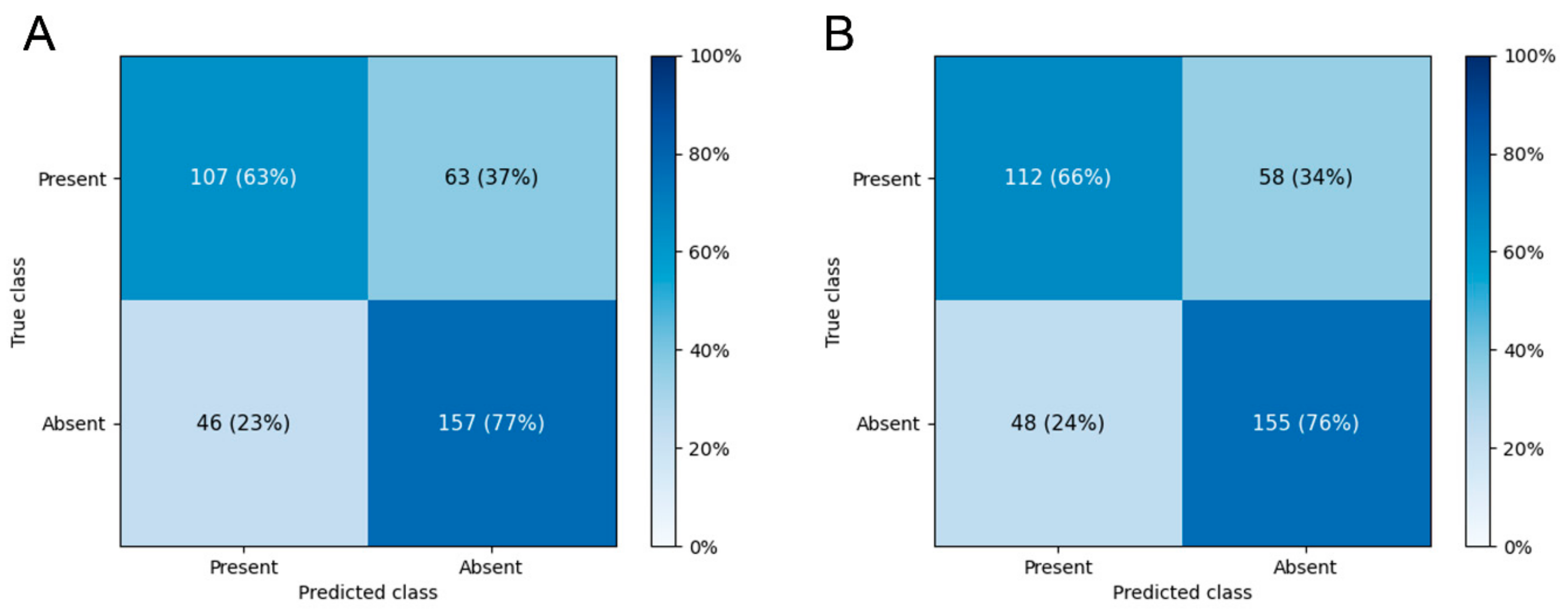

3.1. Prediction of the Presence of WMH

3.2. Falsely Classified Cases

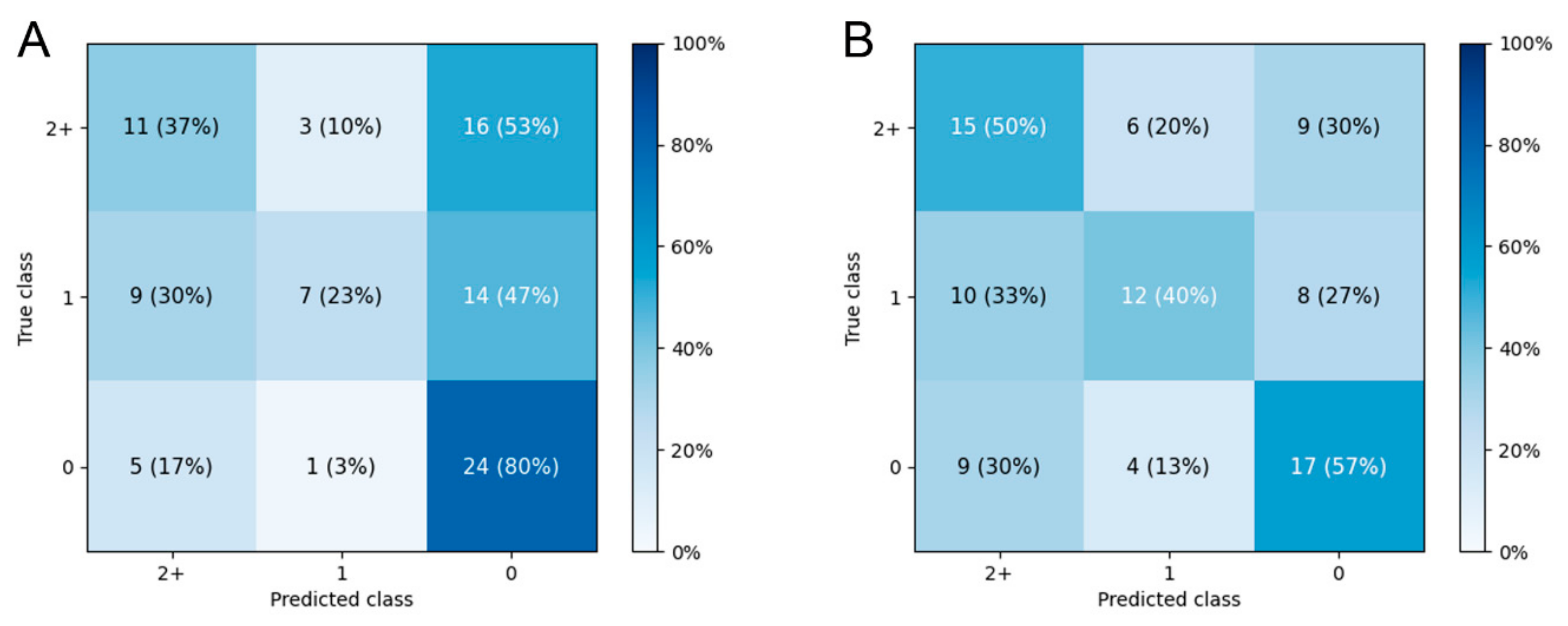

3.3. Prediction of Fazekas Scale Grade

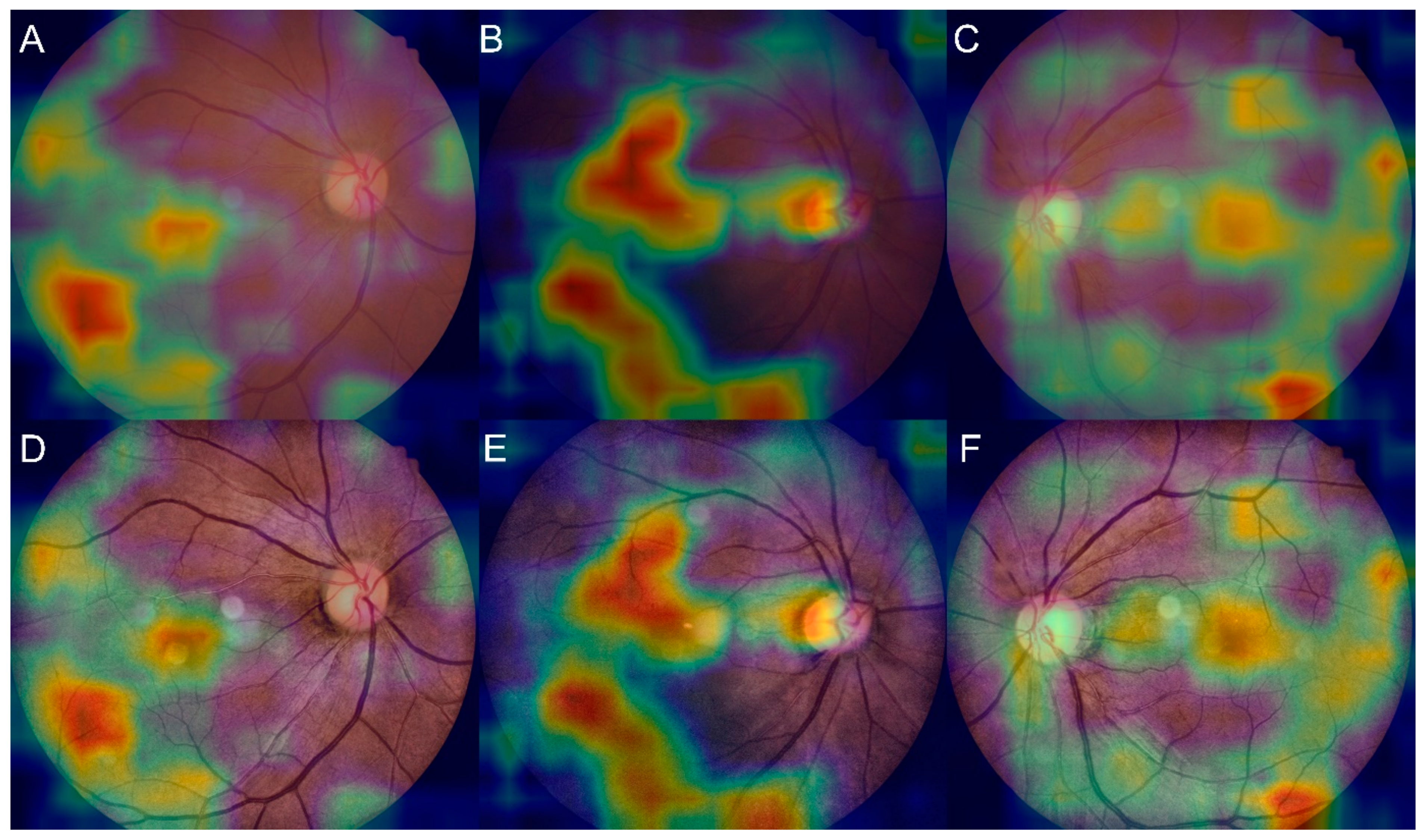

3.4. Salience Maps for High-Grade FS

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Whole dataset: fundus N | Whole dataset: patients N | Training dataset: fundus N | Training dataset: patients N | Test dataset: fundus N | Test dataset: patients N |
|---|---|---|---|---|---|---|
| Prediction of the presence of WMH | | | | | | |
| Overall | 3726 | 1892 | 3353 | 1703 | 373 | 189 |
| Absent WMH | 2024 | 1019 | 1821 | 917 | 203 | 102 |
| Present WMH | 1702 | 873 | 1532 | 786 | 170 | 87 |
| Prediction of Fazekas scale | | | | | | |
| Overall | 905 | 462 | 814 | 416 | 91 | 46 |
| Grade 0 | 303 | 154 | 272 | 138 | 31 | 16 |
| Grade 1 | 302 | 154 | 272 | 139 | 30 | 15 |
| Grade 2+ | 300 | 154 | 270 | 139 | 30 | 15 |

| Model | Sensitivity, % (95% CI) | Specificity, % (95% CI) | PPV, % (95% CI) | NPV, % (95% CI) | AUC (95% CI) |
|---|---|---|---|---|---|
| DenseNet-201 | 66.1 ± 8.8 | 71.3 ± 8.0 | 66.5 ± 4.1 | 71.8 ± 3.6 | 0.736 ± 0.030 |
| EfficientNet-B7 | 61.7 ± 9.8 | 73.9 ± 7.4 | 66.9 ± 3.5 | 70.1 ± 4.2 | 0.724 ± 0.026 |
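
For readers less familiar with the four diagnostic measures in the table above, the sketch below shows how each is derived from a binary confusion matrix. It uses the standard definitions; the `Confusion` class, the function name, and the counts are hypothetical and chosen only for illustration. They are not results from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Binary confusion matrix counts (positive class = WMH present)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def diagnostic_metrics(c):
    """Return sensitivity, specificity, PPV, and NPV as fractions in [0, 1]."""
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # detected among truly positive
        "specificity": c.tn / (c.tn + c.fp),  # rejected among truly negative
        "ppv": c.tp / (c.tp + c.fp),          # truly positive among predicted positive
        "npv": c.tn / (c.tn + c.fn),          # truly negative among predicted negative
    }


# Hypothetical counts, not taken from the study.
example = Confusion(tp=60, fp=20, tn=80, fn=40)
metrics = diagnostic_metrics(example)

if __name__ == "__main__":
    for name, value in metrics.items():
        print(f"{name}: {100 * value:.1f}%")
```

Sensitivity and specificity depend only on the classifier's behavior within each true class, whereas PPV and NPV also shift with the proportion of positive cases in the evaluated sample.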

| Model | Fold 0 | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Fold 6 | Fold 7 | Fold 8 | Fold 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| DenseNet-201 | 0.721 | 0.655 | 0.635 | 0.729 | 0.653 | 0.617 | 0.649 | 0.714 | 0.620 | 0.657 |
| EfficientNet-B7 | 0.674 | 0.628 | 0.692 | 0.714 | 0.689 | 0.626 | 0.692 | 0.710 | 0.626 | 0.643 |
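
Per-fold values such as those above are commonly condensed into a mean and a spread. The sketch below does this with Python's standard `statistics` module. The metric behind the fold values is not named in this excerpt, so the code treats them as plain numbers. The use of the sample standard deviation (n - 1 denominator) and the name `summarize` are assumptions made here and may differ from the summary statistics the authors reported.

```python
import statistics

# Per-fold values copied from the table above (folds 0 to 9).
FOLD_VALUES = {
    "DenseNet-201": [0.721, 0.655, 0.635, 0.729, 0.653,
                     0.617, 0.649, 0.714, 0.620, 0.657],
    "EfficientNet-B7": [0.674, 0.628, 0.692, 0.714, 0.689,
                        0.626, 0.692, 0.710, 0.626, 0.643],
}


def summarize(values):
    """Mean, sample standard deviation, and range of the per-fold values."""
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # sample SD, an assumed choice
        "min": min(values),
        "max": max(values),
    }


summary = {model: summarize(values) for model, values in FOLD_VALUES.items()}

if __name__ == "__main__":
    for model, s in summary.items():
        print(f"{model}: mean={s['mean']:.3f}, sd={s['sd']:.3f}, "
              f"range={s['min']:.3f} to {s['max']:.3f}")
```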

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cho, B.-J.; Lee, M.; Han, J.; Kwon, S.; Oh, M.S.; Yu, K.-H.; Lee, B.-C.; Kim, J.H.; Kim, C. Prediction of White Matter Hyperintensity in Brain MRI Using Fundus Photographs via Deep Learning. J. Clin. Med. 2022, 11, 3309. https://doi.org/10.3390/jcm11123309
