Assessing a Digital Scorecard on Global Immunization Progress: Stakeholder Views and Implications for Enhancing Performance and Accountability

Abstract

1. Introduction

- What are the informational needs of immunization program officers related to monitoring performance and communicating about immunization systems?

- How do stakeholders perceive the appropriateness of the IA2030 scorecard, and what are its use cases?

- What design changes would better enable stakeholders to enhance accountability, track program performance, and advocate for resources?

- How have other health initiatives used digital visual analytic platforms (i.e., dashboards and scorecards) to enhance accountability, track program performance, and advocate for resources?

2. Materials and Methods

2.1. Key Informant Interviews

2.1.1. Recruitment and Interview Process

2.1.2. Analysis

2.2. Document Review

2.3. Integrated Qualitative Findings

3. Results

3.1. Key Informant Interviews

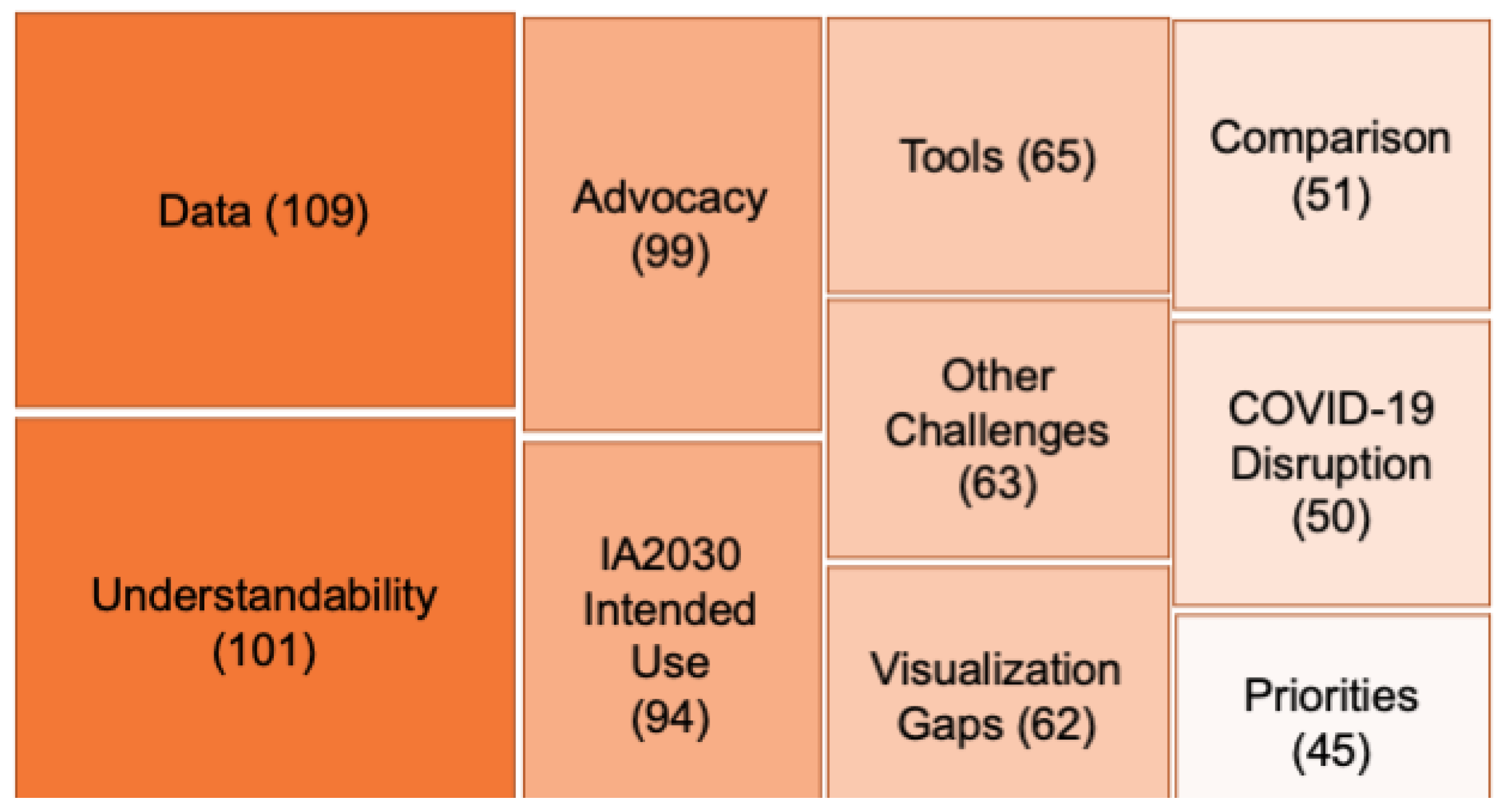

3.2. Interview Transcript Analysis

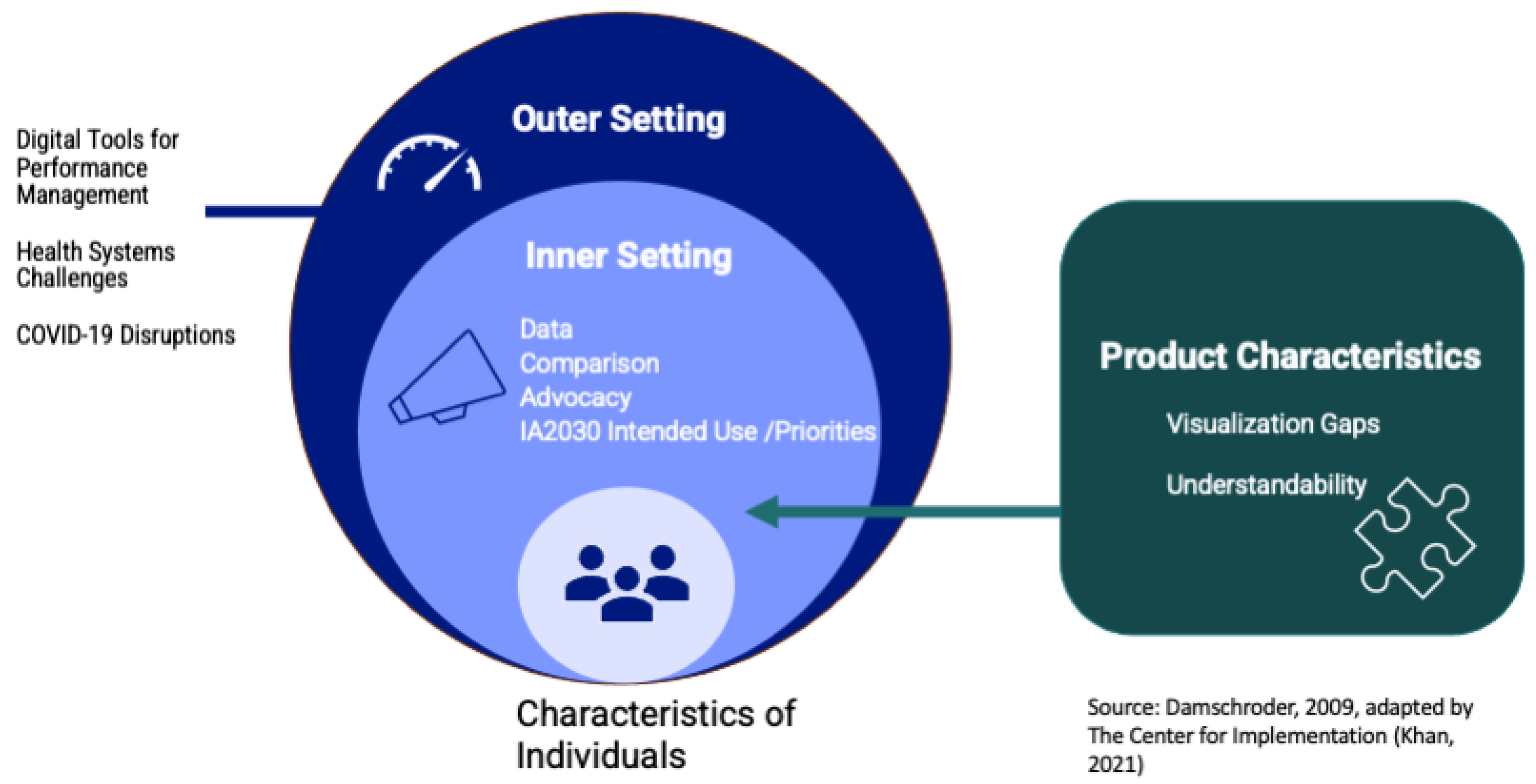

3.2.1. Outer Setting Context for Scorecard Use

“At the end, COVID-19 introduced a lot of new things in the program [like] data visualization dashboards… [and the] use of digital tools,” said a regional monitoring specialist [KII01].

“We were having weekly meetings [to] prepare for the implementation of COVID vaccine. And that was the tool we were looking at every time we had a meeting, so we would start a meeting with this tool, and we would say, okay, that’s where we are in terms of objectives.”

“[Our] region uses a surveillance system for VPD [vaccine-preventable diseases]—polio, measles, rubella and CRS. We do a weekly data bulletin to show the data. It should work hand in hand … fit in the scorecard because the indicators [are] used in all the regions of WHO.”

“It was difficult to get the vaccines to [people] on time during the pandemic. There’s also the problem of personnel, there’s not a lot of people you could mobilize and the priority went to COVID vaccination.”

3.2.2. Inner Setting Context for Scorecard Use

“If we had the data further broken down to regional or district level, it becomes useful also at the subnational level for managers of the EPI at the regional and district level for purposes of advocacy.”

“If it’s not timely, then it’s not going to be reliable, and then it becomes a library,” said a regional manager [KII10]. “By the time you are publishing the book, it’s already outdated.”

“They need to understand the data. It is good for us to have [data] here in scorecard so that we don’t have any doubt.”

“There should be a way of communication [in] negotiation with the ministry of health regarding their data here [on the scorecard].”

“When we will have a scorecard showing trends of countries over years, this will generate more power to support our requests for funding and support for countries,” said one regional immunization manager [KII07]. “Definitely, it’s going to help.”

“It’s important that this tool is helpful for [the regions] not just for the WHO, UNICEF, or the donors,” said one regional monitoring specialist [KII01].

“If we’re measuring progress,… it’s more useful if we compare with our peers, countries that have similar characteristics and context while also looking at the performance against global targets.”

3.2.3. Product Characteristics

“The graphical representation is quite attractive. It is very easy to look at what is missing, off track, on track, where we are standing.”

3.3. Document Review

“Officials saw the proportion was low… this indicator was red showing it was not on track…” Officials took action, with “coverage increasing from 42% to 83% in the province.”

“Members of Congress immediately started citing our figures in their own demands for a response.”

3.4. Merged Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Template for Framework Chart

- Overarching Perspective: Summary
- Illustrative Quotes (1–3):

| Category | Code | Definition | Quote | Summary |
|---|---|---|---|---|
| Instructions | | Copied from codebook | Include the best 2–3 quotes with this code in the transcript. Remove “uh” and other filler words. | Condense informants’ comments on this code across all coded quotes in the transcript. Note where codes overlap. |
| Outer Setting | Health System Challenges | Barriers to sustaining and improving routine immunization performance, such as challenges in financial and personnel management. | | |
| | Tools | Discussion of data visualization or management tools used to monitor immunization systems at the country or regional level. | | |
| | COVID-19 Disruptions | Any mention of COVID-19, particularly the financial and human resources related to the pandemic response, COVID-19 vaccine coverage, and disruptions to immunization. | | |
| Inner Setting | Advocacy | Potential use of the scorecard in advocacy presentations or other high-level communications to policy makers or other influencers. Includes use of the scorecard for decision making and implementation. Includes references to the level at which it will be used (regional, country, or subnational) and to stakeholders. | | |
| | Data | Discusses units used to display data, such as number of outbreaks, number of people, or number of deaths. Refers to the need for standardized units or a clear indication of which units a visualization uses. Could also reference where the data should come from (e.g., regional or country level) or a lack of data (e.g., missing data in general or on the scorecard website). | | |
| | Comparison | Discusses the need for the dashboard to provide suitable means of comparison across indicators and regions, or for country-level comparisons. | | |
| | IA2030 Intended Use | Refers to adaptation/tailoring of IA2030 objectives and indicators for a country or region. Not specific to the scorecard (can be double coded). | | |
| | Priorities | Refers to current or new changes in the prioritization of immunization goals, including decision making based on the use of data. Not necessarily specific to the IA2030 framework/scorecard (can be double coded). | | |
| Product Characteristics | Visualization Gaps | Features missing from the scorecard. | | |
| | Understandability | Ease of or difficulty in interpreting the dashboard. This could refer to the language, the visualization, the color scheme, or the meaning of on track/off track. Also includes ease of or difficulty in interpreting the overview dashboard. | | |

Appendix B. Platforms Reviewed

| Name of Platform | Website Address |
|---|---|
| State of Vermont Immunization & Infectious Disease Scorecard | https://embed.clearimpact.com/Scorecard/Embed/600 (accessed on 29 May 2023) |
| The ALMA Scorecard for Accountability and Action | https://scorecardhub.org/guides-and-toolkits/country-level-scorecard-tool-introduction/ (accessed on 29 May 2023) |
| Countdown to 2030 Dashboards | https://data.unicef.org/countdown-2030/ (accessed on 29 May 2023) |
| NCD Scorecard | Archive image: https://www.c3health.org/blog/global-ncd-scorecard/ (accessed on 29 May 2023) |
| NTD ConnectOR | https://www.cor-ntd.org/research-outcomes/dashboard (accessed on 29 May 2023) |
| Goalkeepers | https://www.gatesfoundation.org/goalkeepers/ (accessed on 29 May 2023) |
| VIEW-hub | https://view-hub.org/ (accessed on 29 May 2023) |
| UNICEF Immunization coverage estimates dashboard | https://data.unicef.org/resources/immunization-coverage-estimates-data-visualization/ (accessed on 29 May 2023) |
| Congressional District Health Dashboard | https://www.congressionaldistricthealthdashboard.org (accessed on 29 May 2023) |
| The Marshall Project: COVID Cases in Prisons | https://www.themarshallproject.org/coronavirus (accessed on 29 May 2023) |
| COVID-19 data of the Region of the Americas | https://www.paho.org/en/topics/coronavirus-infections/coronavirus-disease-covid-19-pandemic (accessed on 29 May 2023) |
| Washington State COVID-19 Data Dashboard | https://doh.wa.gov/emergencies/covid-19/data-dashboard#technical (accessed on 29 May 2023) |
| Johns Hopkins Coronavirus Resource Center | https://coronavirus.jhu.edu/ (accessed on 29 May 2023) |
| 2022 Scorecard on State Health System Performance | https://www.commonwealthfund.org/publications/scorecard/2022/jun/2022-scorecard-state-health-system-performance (accessed on 29 May 2023) |
| WHO Coronavirus (COVID-19) Dashboard | https://covid19.who.int/ (accessed on 29 May 2023) |


| Interview # | Level | Gender | Job Description | WHO Region |
|---|---|---|---|---|
| 1 | Regional | Female | Program Manager | AMR |
| 2 | Subnational | Male | M&E | AMR |
| 3 | National | Male | Technical Advisor | AMR |
| 4 | Regional | Female | Program Manager | EUR |
| 5 | National | Male | Program Manager | AFR |
| 6 | National | Male | Program Manager | EMR |
| 7 | Regional | Male | M&E | EMR |
| 8 | National | Male | Program Manager | EMR |
| 9 | Regional | Male | Program Manager | AFR |
| 10 | National | Male | M&E | AFR |
| 11 | Subnational | Male | M&E | EMR |
| 12 | Regional | Female | Program Manager | AFR |
| 13 | National | Male | Technical Advisor | AFR |
| 14 | Regional | Male | Program Manager | AMR |
| 15 | Regional | Male | Program Manager | SEAR |

| Theme | Outcome (+ Concurrence, - Mixed Findings) | What Did the Platforms Show? | What Did the Key Informant Participants Say? |
|---|---|---|---|
| Outer Setting Themes | | | |
| Tools | + | Large number of COVID-19 dashboards, with six reviewed. | Increased use of visual dashboards during the pandemic. |
| Health system challenges and COVID disruptions | - | Visualized routine child, quantitative health indicators. | Concerned about management capacity, financial challenges, governance limitations, and health worker exhaustion. |
| Inner Setting Themes | | | |
| Data | + | U.S.-focused platforms visualized data at the state and county level. | Sought to use the scorecard for district-, regional-, and community-level planning and advocacy. |
| | - | All platforms displayed large numbers of indicators. One-third of platforms were not updated in 2023. | Country-level participants found the “Dashboard,” with 18 indicators, overly complex. Platforms should be regularly updated to avoid irrelevance. |
| Advocacy and Priorities | + | Platforms are described as helping identify service gaps and drive actionable decision making. | Envisioned using the scorecard for rapid policy briefings, stakeholder engagement, and data-driven decisions. |
| | - | One-third of sampled platforms had related events to capture the attention of policy makers. | While participants said policy-focused events would help foster change, the IA2030 scorecard does not describe such events. |
| Comparison | + | Most platforms were explicitly set up for comparison between countries or other geographic entities. | Aimed to compare country performance with peers to create “positive pressure.” |
| Product Characteristics | | | |
| Understandability | +/- | Eight of the 15 tools signal their intention to be accessible and “easy to use.” Dashboards tended to be more complex. | Regional-level users felt the scorecard is easy to use. Country-level users felt the overview dashboard, with 18 indicators, should be simpler. |
| | - | Platforms used reddish colors to signal poor performance and green for good performance. | The scorecard uses reddish and yellow colors to signal poor performance and does not use green. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weeks, R.; Vishwanath, P.; Stewart, K.A.; Liang, C.; Efe-Aluta, O.; Olayinka, F.; Kim, C.I.; Macarayan, E.; Niehaus, L.; Bar-Zeev, N.; et al. Assessing a Digital Scorecard on Global Immunization Progress: Stakeholder Views and Implications for Enhancing Performance and Accountability. Vaccines 2024, 12, 193. https://doi.org/10.3390/vaccines12020193

Weeks R, Vishwanath P, Stewart KA, Liang C, Efe-Aluta O, Olayinka F, Kim CI, Macarayan E, Niehaus L, Bar-Zeev N, et al. Assessing a Digital Scorecard on Global Immunization Progress: Stakeholder Views and Implications for Enhancing Performance and Accountability. Vaccines. 2024; 12(2):193. https://doi.org/10.3390/vaccines12020193

Chicago/Turabian Style: Weeks, Rose, Padmini Vishwanath, Katy Atkins Stewart, Christine Liang, Oniovo Efe-Aluta, Folake Olayinka, Carolyn Inae Kim, Erlyn Macarayan, Lori Niehaus, Naor Bar-Zeev, et al. 2024. "Assessing a Digital Scorecard on Global Immunization Progress: Stakeholder Views and Implications for Enhancing Performance and Accountability" Vaccines 12, no. 2: 193. https://doi.org/10.3390/vaccines12020193

APA Style: Weeks, R., Vishwanath, P., Stewart, K. A., Liang, C., Efe-Aluta, O., Olayinka, F., Kim, C. I., Macarayan, E., Niehaus, L., Bar-Zeev, N., & Wonodi, C. (2024). Assessing a Digital Scorecard on Global Immunization Progress: Stakeholder Views and Implications for Enhancing Performance and Accountability. Vaccines, 12(2), 193. https://doi.org/10.3390/vaccines12020193