1. Introduction

Learning disabilities affect a significant portion of the student population, creating unique barriers to academic achievement that require specialized educational interventions. Students with conditions such as dyslexia, dyscalculia, and dysgraphia face distinct cognitive, academic, and behavioral challenges that traditional teaching approaches often struggle to address effectively. Despite the availability of established intervention methods, providing truly individualized support that adapts to each student’s specific learning patterns remains a persistent challenge in special education.

The emergence of artificial intelligence (AI) in education has opened unprecedented possibilities for addressing these challenges. Recent systematic reviews have documented AI’s transformative potential across educational settings, demonstrating significant positive impacts on academic performance, personalized learning, and educational management [

1,

2]. The democratization of sophisticated AI capabilities, particularly following the release of ChatGPT in November 2022, has accelerated the adoption of these technologies in educational contexts.

Current AI applications in education encompass machine learning tools, intelligent tutoring systems, chatbots, educational games, and virtual reality devices, showing promise in enhancing learning environments through adaptive feedback, automated evaluation, and real-time personalization [

2,

3]. However, implementation challenges persist, including digital divides, privacy concerns, insufficient teacher training, and questions about maintaining balanced human–technology integration [

1,

4].

Despite extensive documentation of AI’s general educational impact through multiple systematic reviews examining hundreds of studies [

1,

2], a critical gap exists in understanding AI applications specifically designed for students with learning disabilities. While previous reviews have examined AI’s broad educational influence, none have provided a comprehensive analysis of how these technologies address the specific needs of this vulnerable population.

AI-based interventions promise to revolutionize special education by offering scalable, customized solutions that adapt in real-time to individual learning patterns and challenges. From intelligent tutoring systems to game-based learning platforms, these technologies could provide the individualized support that students with learning disabilities need to reach their full potential. However, the specific effectiveness of these interventions for this population remains under-researched and lacks systematic evaluation.

This systematic review addresses this critical gap by focusing specifically on AI-based interventions for students with learning disabilities. The primary objectives are the following:

RQ1: To what extent are AI-based educational interventions effective in improving learning outcomes for students with learning disabilities when compared to traditional teaching methods or control groups?

RQ2: What specific types of AI technologies (e.g., personalized learning systems, generative AI, game-based learning, assistive apps) are most frequently studied, and which demonstrate the greatest effectiveness for students with learning disabilities?

By addressing these questions, this review seeks to provide educators, policymakers, and researchers with evidence-based insights to guide the implementation and further development of AI technologies in special education settings, ensuring that the transformative potential of AI extends to all learners, including those with learning disabilities.

2. Materials and Methods

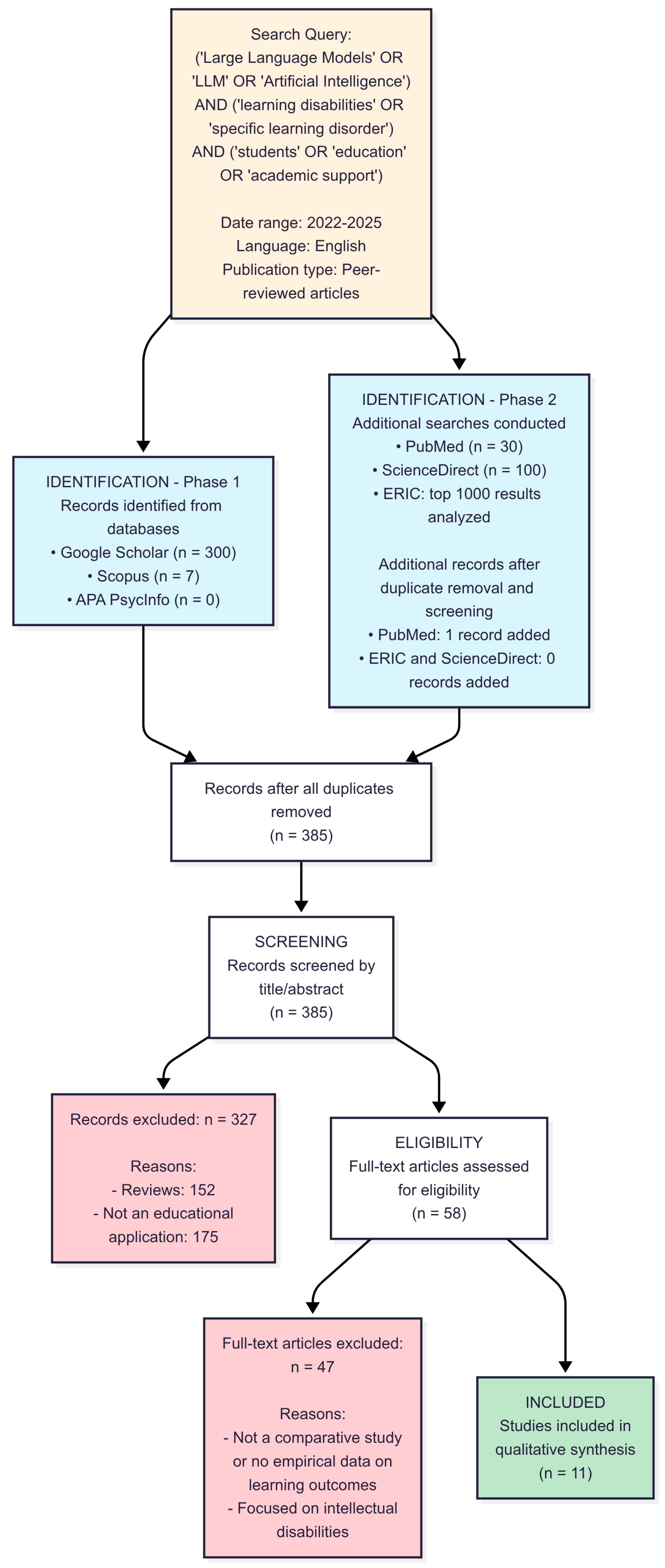

This systematic review was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 guidelines [

5]. To ensure comprehensive coverage and address the limitations of previous searches, a systematic search was performed across multiple electronic databases: Google Scholar, ScienceDirect, APA PsycInfo, ERIC, Scopus, and PubMed.

The search was conducted between April 2025 and July 2025 to identify experimental studies published from 2022 to the present. This timeframe was chosen to focus on the most recent advancements in AI, particularly following the widespread adoption of Large Language Models (LLMs).

The following search query was consistently applied across all databases, adapting to the different search formats:

The search was limited to peer-reviewed articles published in English.

2.1. Inclusion and Exclusion Criteria (PICOS Framework)

To ensure a focused and rigorous review, inclusion and exclusion criteria were defined using the PICOS framework (Population, Intervention, Comparison, Outcomes, Study Design):

Population (P): Studies involving students of any age (from primary school to university) with a formal diagnosis of a specific learning disability (SLD) as defined by established diagnostic criteria (e.g., DSM-5). This includes conditions such as dyslexia, dyscalculia, dysgraphia, and other specific learning disorders. Studies focusing primarily on intellectual disabilities or autism spectrum disorder (ASD) without a co-occurring SLD were excluded, though studies with mixed populations were considered if data for the SLD subgroup were reported;

Intervention (I): The intervention had to be educational in nature and utilize an identifiable artificial intelligence (AI) component. This included personalized/adaptive learning systems, intelligent tutoring systems (ITS), AI-based games, generative AI applications, and AI-powered assistive technologies. Interventions where the AI component was not clearly described or was limited to basic automation were excluded;

Comparison (C): Included studies were required to have a comparative design. This could involve a control group receiving traditional instruction or no intervention, a comparison with another form of technology, or a pre-test/post-test design where outcomes were compared against a baseline;

Outcomes (O): Studies had to measure and report quantitative data on educational or cognitive outcomes. Primary outcomes of interest included academic performance (e.g., reading fluency, mathematical skills), cognitive functions (e.g., memory, attention), and student engagement. Studies that did not provide empirical data on learning outcomes were excluded;

Study Design (S): Only peer-reviewed experimental or quasi-experimental studies were included. This encompasses randomized controlled trials (RCTs), non-randomized controlled trials, and single-subject designs. Literature reviews, meta-analyses, theoretical papers, conference abstracts, and dissertations were excluded.

2.2. Study Selection and PRISMA Flow Diagram

The study selection process followed a systematic multi-stage screening procedure, as detailed in the PRISMA flow diagram (

Figure 1). We independently conducted the full-text assessment to determine adherence to all inclusion criteria, with disagreements resolved through discussion and consensus. This rigorous evaluation process resulted in 11 studies meeting all criteria for inclusion in the final qualitative and quantitative syntheses.

2.3. Data Extraction

A standardized data extraction form was developed to systematically collect information from each of the 11 included studies. The extracted data were organized into three main categories to ensure comprehensive capture of relevant study characteristics and findings.

The first category encompassed general and methodological information, including the primary author and publication year, the specific learning disability under investigation, participant characteristics such as sample size and age or grade level, the study design employed, and details regarding the comparison group when applicable. This foundational information provided the necessary context for evaluating study quality and generalizability.

The second category focused on AI intervention details, capturing the type of AI technology utilized, such as personalized learning systems or generative AI applications, the specific name of the AI tool or platform when provided by the authors, and the context in which the intervention was implemented, whether in school, home, or clinical settings. This detailed characterization of interventions was essential for understanding the diversity of AI applications and their implementation contexts.

The third category addressed outcomes and effectiveness measures, documenting the specific learning outcomes assessed, such as reading comprehension or mathematical fluency, the measurement tools employed, including standardized tests and other assessment instruments, the reported effectiveness of interventions, and a comprehensive summary of key quantitative findings. This included effect sizes and p-values where available, providing the quantitative foundation necessary for evaluating intervention impact and facilitating potential future meta-analyses.

The authors independently extracted the data, and any discrepancies were resolved through discussion to ensure accuracy and consistency.

2.4. Risk of Bias Assessment

To address a key limitation of the original manuscript and to critically appraise the quality of the included studies, a formal risk of bias assessment was conducted. Given the heterogeneity of the study designs, two different validated tools were employed to ensure appropriate evaluation across the diverse methodological approaches represented in the literature.

For quasi-experimental studies, the Risk Of Bias In Non-randomised Studies of Interventions (ROBINS-I) tool was utilized, as this instrument provides a comprehensive framework for assessing bias across multiple domains in non-randomized intervention studies. For case series, case studies, and single-group pre–post studies, the appropriate JBI Critical Appraisal Checklist was applied, recognizing that these study designs require different methodological considerations and bias assessment criteria than comparative studies.

We independently assessed each study. The evaluation focused on domains such as confounding variables, selection of participants, classification of interventions, measurement of outcomes, and missing data. Based on the assessment, each study was assigned an overall risk of bias judgment (e.g., Low, Moderate, High, or Serious). A summary of this assessment is presented in the Results section, with more detailed evaluations provided in

Appendix B (

Table A12 and

Table A13).

2.5. AI-Assisted Content Generation and Verification (GAMER Statement)

In adherence with the Guidelines for Reporting AI-Assisted Materials in Scholarly Work (GAMER) [

6], this section provides a transparent account of how AI tools were utilized in the preparation of this manuscript.

Large Language Models (specifically, Claude 3 Opus and Google Gemini 2.5 pro) were used for the following tasks:

Summarization: Assisting in the initial summarization of key findings manually extracted from the included articles;

Data Organization: Helping to structure and organize the extracted data from the author notes into formatted tables for the

Section 3 and

Appendix A and

Appendix B;

Drafting Support: Aiding in the initial drafting of sections of the manuscript, particularly the abstract and parts of the discussion, by rephrasing and improving clarity.

The following step-wise human validation process was rigorously applied to all AI-generated content:

Source Verification: Every piece of information or data generated by the AI was manually cross-referenced with the original source articles and data files to ensure complete accuracy;

Fact-Checking: All claims, summaries, and quantitative data points were independently verified by the authors;

Critical Review and Editing: All AI-generated text was critically reviewed, rewritten, and edited by the authors to ensure it accurately reflected the source material, aligned with the study’s narrative, and met academic and scientific standards. The final manuscript represents the authors’ own work and intellectual contribution.

No AI tools were used for the core methodological tasks of this review, such as study selection, data extraction, or the risk of bias assessment, which were performed entirely by the human authors.

3. Results

The 11 included studies represent a diverse range of research conducted between 2022 and 2025. The studies varied significantly in terms of the specific learning disabilities addressed, participant demographics, AI technologies employed, and methodological design. For all subsequent percentage calculations and statistical analyses, we considered 10 independent experiments to avoid double-counting evidence from the overlapping studies.

It is important to note some methodological specificities regarding the selection and nature of the samples. For instance, while Samuelsson [

7] did not use formal diagnostic criteria, the performance-based identification of math learning disabilities (MLD) through a bottom 25% cut-off on arithmetic fact fluency pre-tests represents a methodologically sound approach that aligns with established research practices in the field. This cut-off criterion is consistent with recommendations from multiple studies cited by Samuelsson, including Cowan & Powell [

8], Geary et al. [

9], and de Smedt & Gilmore [

10], who have used similar percentile-based approaches to identify students with mathematical learning difficulties. Finally, in the case study by Rizos et al. [

11], which included one participant with dyslexia and one with autism spectrum disorder, only the results pertaining to the participant with dyslexia were considered for this analysis, in line with the inclusion criteria.

The AI technologies were varied, with personalized/adaptive learning systems and game-based learning being the most common. Other interventions included assistive technologies (e.g., text-to-speech), recommendation systems, intelligent tutoring systems (ITS), and generative AI (ChatGPT).

Methodologically, the studies were predominantly quasi-experimental. The risk of bias assessment, detailed further in

Section 3.1, revealed that no studies were rated as having a low risk of bias, with most presenting a moderate to high risk. A summary of the key characteristics of each included study is provided in

Table 1.

3.1. Risk of Bias in Included Studies

The methodological quality of the 11 included studies was assessed using the ROBINS-I and JBI Critical Appraisal tools. The overall risk of bias was found to be considerable across the board. No studies were rated as having a “Low” risk of bias. The majority of studies (70%, n = 7) were assessed as having a “Moderate” risk of bias. Three studies (30%) were rated as “High” risk, and one study (10%) was rated as having a “Serious” risk of bias.

The most common methodological limitations identified were the lack of appropriate randomization, inadequate blinding of participants or outcome assessors, and the absence of a control group in several studies. Many studies were primarily focused on technological development, with less rigorous clinical evaluation. The detailed breakdown of the risk of bias for each study is presented in

Table 1, with additional methodological details and study design information provided in

Table 2.

3.2. Effectiveness of AI Interventions

All 11 studies reported positive outcomes, though methodological limitations require cautious interpretation. Key quantitative findings from stronger studies include a wide range of outcomes, from academic performance in reading and mathematics to cognitive functions like attention and memory. The interventions led to statistically significant improvements, often with large effect sizes. For example, Gharaibeh et al. [

15] found that a ChatGPT-based intervention produced a very large effect on reading comprehension (Cohen’s d = −1.66) compared to traditional instruction. Similarly, Effect sizes across studies ranged from moderate to very large, with particularly strong outcomes observed for mathematics interventions and cognitive training programs (see

Table 3 for detailed quantitative results).

Interventions using assistive technology also showed strong results. Chukwuemeka & Agbarakwe [

19] found that the Speechify app led to significantly higher performance and retention in reading compared to two other teaching methods, with a mean gain of +15.20 points on their reading performance test. Cognitive training programs also yielded significant results; Fami et al. [

20] reported a 77.53% mean improvement in working memory and a 40.37% improvement in academic function (reading) following their mixed cognitive intervention.

4. Discussion

This systematic review provides preliminary but compelling evidence that AI-based interventions can be effective in supporting students with learning disabilities. The unanimous positive outcomes reported across all 11 included studies, despite their methodological diversity, suggest a promising potential for these technologies. The interventions demonstrated success across a wide range of learning disabilities, including dyslexia and math disabilities, and were implemented in various contexts from primary schools to universities.

Moving beyond a simple declaration of effectiveness, the quantitative data reveals the magnitude of these positive impacts. For instance, an AI system for arithmetic practice yielded a large effect size (d = 0.80) for the general student population and an even larger effect (d = 1.63) for students with math learning disabilities [

7]. These concrete figures underscore the potential of AI to deliver significant and measurable educational benefits.

4.1. Publication Bias and the Unanimity Problem

A critical concern that emerges from this systematic review is the potential for significant publication bias. The fact that all 11 included studies reported positive outcomes is statistically improbable and raises serious questions about the completeness of the available evidence base.

The unanimous positive results across diverse populations, interventions, and methodological approaches suggest systematic underreporting of negative or null findings. In any genuine research domain, some interventions would be expected to show no effect or even negative effects, especially given the methodological diversity of the included studies. This pattern is particularly concerning in AI and special education research, a nascent field where positive results may receive preferential treatment in publication decisions.

Unfortunately, the small number of included studies and their methodological heterogeneity preclude the construction of a funnel plot, which would have provided visual evidence of publication bias through asymmetrical distribution of effect sizes. This analytical limitation further compounds our inability to assess the true extent of missing negative or null results in the literature. Additionally, searches in grey literature sources yielded no relevant studies, suggesting that unpublished negative findings may be particularly difficult to access in this emerging research area.

4.2. Long-Term Effects and the Cognitive Offloading Paradox

A critical gap identified in this review is the near-complete absence of long-term follow-up. While studies report immediate gains, none have tracked students over extended periods to assess skill retention or potential negative consequences, such as “cognitive offloading”. This phenomenon, where learners become dependent on technology to perform tasks rather than developing the underlying cognitive skills, is a major concern, particularly for students with learning disabilities who need to strengthen, not bypass, their cognitive functions [

21]. Empirical studies using neurophysiological measurements have begun to demonstrate that intensive use of AI assistants, while supportive, can reduce the user’s neural engagement, leading to the accumulation of a “cognitive debt” [

22]. For instance, students using AI for math practice answered more problems correctly but scored lower on conceptual understanding tests, suggesting that AI may enhance procedural skills without fostering deeper learning [

23]. To address this significant gap, future research must move beyond short-term efficacy studies and embrace comprehensive longitudinal designs that can capture the full spectrum of AI’s impact on learning processes.

We strongly recommend the adoption of longitudinal frameworks that incorporate specific follow-up periods at regular intervals, such as at 6, 12, and 24 months post-intervention, to systematically assess the durability of observed learning gains. These extended timeframes are essential for distinguishing between temporary performance improvements and sustained educational benefits that persist beyond the immediate intervention period.

Equally important is the systematic measurement of skill retention to determine whether improvements are maintained over time in the absence of the AI tool. This assessment is fundamental for understanding whether students develop genuine competency or become dependent on technological scaffolding for performance. Without such evaluation, we cannot determine if AI interventions produce lasting educational value or merely create performance illusions that dissipate when support is removed.

Research designs must also incorporate rigorous assessment of skill transfer, evaluating whether students can successfully apply learned skills to novel tasks and contexts that do not involve the AI intervention. This transfer assessment is particularly important for students with learning disabilities, who often struggle with generalizing skills across different contexts and may be especially vulnerable to developing narrow, context-dependent competencies.

Finally, longitudinal studies should include systematic monitoring for cognitive atrophy by periodically assessing core cognitive skills to ensure they are not degrading due to over-reliance on technology. This monitoring is essential for detecting any unintended consequences of AI dependency, such as the deterioration of fundamental cognitive processes that students might otherwise develop through more effortful, unassisted practice. Such comprehensive longitudinal approaches will provide the nuanced understanding necessary to guide responsible implementation of AI technologies in educational settings for students with learning disabilities.

4.3. Implications for Practice and Policy

For educators and practitioners, these findings suggest that AI tools can be powerful supplements to, but not replacements for, high-quality instruction. The key is to select the right tool for the right need, for example, using text-to-speech applications to support reading access while employing adaptive practice systems to build specific skills. AI tools can also serve as personalized support that is more “discreet” and less “embarrassing” than traditional disability services, reducing the stigma associated with seeking help [

12]. A “complementary AI model,” where technology reduces extraneous cognitive load [

24] while maintaining the necessary challenge for skill development, appears most promising.

For policymakers, the evidence supports the funding of pilot programs to explore the integration of AI in special education. However, given the methodological limitations of the current evidence base, widespread, mandated adoption would be premature. Policy should focus on creating frameworks for the rigorous evaluation of AI tools, ensuring they are not only effective but also equitable and safe. Furthermore, providing professional development for teachers is crucial for the successful and responsible implementation of these technologies.

4.4. Future Research Directions

Based on the findings and limitations of this review, several critical priorities emerge for advancing research in this field. Most urgently, there is a compelling need for well-designed randomized controlled trials with adequate sample sizes and active control groups to establish a more definitive evidence base. The current literature’s reliance on small-scale studies and quasi-experimental designs significantly limits our ability to draw robust conclusions about the efficacy of AI interventions for students with learning disabilities.

Longitudinal investigations represent another essential research priority, as detailed in

Section 4.3. These studies are critical for understanding skill retention patterns and examining the potential for cognitive offloading effects that may emerge over extended periods of AI tool usage. Without such long-term perspectives, we cannot fully assess whether observed benefits represent genuine learning gains or temporary performance enhancements.

The research community must also confront the substantial risk of publication bias in this emerging field. Researchers should commit to pre-registering their studies and publishing all findings, including null or negative results, to create a more balanced and comprehensive evidence base. This transparency is particularly crucial given the commercial interests and technological enthusiasm that may influence publication patterns in AI research.

Moving forward, research should evolve beyond simply asking whether AI is effective to investigating more nuanced questions about differential effectiveness. Specifically, studies should examine which AI technologies demonstrate the greatest efficacy, for which specific learning disabilities, and under which particular instructional conditions. This granular approach will provide the detailed guidance necessary for evidence-based implementation decisions.

Student voice represents a notably underexplored dimension in current research. In-depth qualitative assessments of student perceptions are essential for understanding how AI influences the learning experience and for informing responsible implementation strategies. Students with learning disabilities possess unique insights into how these tools can support self-advocacy and learning autonomy, perspectives that are crucial for developing truly supportive interventions.

The field would benefit substantially from developing and adopting a core set of standardized outcome measures to facilitate meaningful comparison and synthesis across studies. The current heterogeneity in assessment approaches severely limits our ability to build cumulative knowledge and conduct robust meta-analyses.

Finally, given the extensive body of research examining AI applications in general education populations, future studies must prioritize disaggregated data reporting when samples include students with learning disabilities. Many existing studies that evaluate AI interventions in mixed populations fail to provide separate analyses for students with learning disabilities, thereby limiting our understanding of differential effects and potentially masking benefits or risks specific to this vulnerable population. We strongly recommend that researchers conducting AI studies in educational settings explicitly report outcomes separately for students with and without learning disabilities when both populations are included in their samples, as this disaggregated approach is essential for building an evidence base that truly serves the needs of students with learning disabilities.

4.5. Conclusions

This systematic review indicates that AI-based interventions hold significant, tangible promise for improving academic and cognitive outcomes for students with learning disabilities. The evidence, though preliminary and methodologically limited, is unanimously positive and shows that AI can provide powerful, personalized support. However, the path forward requires a balanced and critical approach. Educators, policymakers, and researchers must work together to foster innovation while demanding rigorous evidence of long-term effectiveness and safety. By focusing on high-quality research and responsible implementation, the field can harness the transformative potential of AI to create more equitable and effective learning environments for all students.