1. Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterized by social communication disorders, interest limitations, and repetitive behaviors. According to the latest estimates, one in every 150 children has ASD, and the global prevalence continues to rise [

1]. The heterogeneity of ASD, along with its early onset and persistent impact, poses significant challenges for diagnosis and intervention. Early identification and intervention are of vital importance because the nervous system shows a high degree of plasticity in early childhood. Timely behavioral therapy, language training, and social skills development have been proven to alleviate symptoms, promote cognitive development, and improve long-term outcomes.

Resting-state functional magnetic resonance imaging (rs-fMRI) offers a non-invasive approach to studying the functional organization of the brain, providing crucial insights into the altered connection patterns that play a key role in diagnosing ASD [

2]. By capturing spontaneous brain activity at rest, rs-fMRI enables researchers to identify subtle differences in neural networks that typically indicate autism-related abnormalities, thereby supporting more accurate and early diagnostic evaluations. In addition to its diagnostic value, it is equally important to consider the clinical perspectives associated with biomedical applications. From a safety monitoring standpoint, non-invasive imaging techniques such as rs-fMRI minimize potential risks compared with more invasive diagnostic approaches, thereby ensuring patient safety—a critical factor in clinical settings, especially for pediatric populations. Furthermore, understanding the interaction between imaging signals and biological tissues is essential for interpreting the resulting data accurately. The blood-oxygen-level-dependent (BOLD) signals captured in rs-fMRI, for instance, reflect complex neurovascular coupling mechanisms, which can vary across individuals and developmental stages. Accounting for these physiological interactions not only enhances diagnostic reliability but also helps bridge the gap between neuroimaging biomarkers and clinical decision-making in ASD detection.

Recently, there have been many applications that utilize resting-state functional magnetic resonance imaging data to support ASD classification tasks [

3,

4]. Almuqhim et al. proposed a model called the ASD-SAENet model and designed and implemented a sparse autoencoder to optimize the extraction of classification features [

5]. Kan et al. proposed a low-level functional module based on self-supervised soft clustering and orthogonal clustering readout operations to determine the similar behaviors between ROI groups [

6]. Wang et al. proposed a model called RGTNet. Specifically, a graph encoder was designed to extract time-dependent features with remote dependencies and a new graph sparse fitting weighted aggregation method was employed to alleviate the problem of dimensionality explosion [

7]. However, most existing methods rely on a single brain map to define regions, which may limit the generalization and robustness of learning representations. To address such issues, Wen et al. proposed a multi-view convolutional neural network based on prior brain structure learning, which combines graph structure learning with multi-task graph embedding learning to enhance classification performance and identify potential functional subnetworks [

8]. Zhu et al. developed a novel multi-view GNN for multimodal brain networks. They treated each modal as a view of the brain network and used contrastive learning for multi-modal fusion [

9]. Song et al. used a multi-view attention fusion module to enhance the spectral convolutional network to extract useful information [

10]. Although they all extract features from data based on different views, the performance of these models is poor, which is due to the inherent heterogeneity of the ABIDE dataset, while population graphs have a natural advantage in handling multi-site data. In the population graph, edge weights can be determined based on the phenotypic characteristics of the subjects (such as collection location, age, and gender), further weakening the impact of differences in different sites, age groups, and gender on the data.

In terms of the application of population graphs, Cao et al. constructed a deep ASD diagnosis framework based on 16-layer GCN. Integrating ResNet cells and DropEdge policies into this framework avoids problems such as vanishing gradients, overfitting, and oversmoothing [

11]. Tian et al. proposed an extensible hierarchical graph convolutional network. Firstly, they constructed GCN based on brain regions of interest to extract structural and functional connection features between different ROIs, and combined them with scale information to build a population-based GCN model [

12]. Although these methods can alleviate the impact of data heterogeneity, they lack feature representations of different views.

The research deficiencies of the specific existing methods are shown in

Table 1. To address these deficiencies and integrate the advantages of these methods, we propose a multi-atlas guided multi-view contrast learning (MAMVCL) for ASD classification. Our main contributions are as follows:

- (1)

Our method constructs functional connectivity matrices based on three distinct brain atlases, capturing diverse perspectives of brain functional organization.

- (2)

The interaction between the brain regions of the subjects was conducted through the Target-aware attention aggregator layer to obtain the local features of all subjects. By constructing population graphs among different subjects and performing graph convolution operations, the global features of all subjects are obtained for message passing among similar subjects to obtain global features.

- (3)

We adopt graph contrastive learning to align global and local feature representations, and use consistency loss to constrain different brain atlas feature representations of the same encoder.

- (4)

Extensive experiments on the Autism Brain Imaging Data Exchange (ABIDE-I) dataset demonstrate that our model achieves an accuracy of 85.71%, outperforming existing state-of-the-art approaches.

Table 1.

The flaws of the existing methods.

Table 1.

The flaws of the existing methods.

| Category | Defect |

|---|

| Single-view model | The model limits the generalization and robustness of learning representations |

| Multi-view model | The performance of the model is poor and the heterogeneity of the data has not been alleviated |

| Population graph model | The model lacks feature representations of different views |

The structure of this paper is organized as follows:

Section 2 details the proposed model, including its methodologies and the loss functions employed.

Section 3 presents the experimental setup, featuring comparative experiments, ablation studies, and more.

Section 4 discusses key aspects such as hyperparameters, interpretable analysis, and more.

Section 5 concludes the paper and outlines directions for future research.

2. Materials and Methods

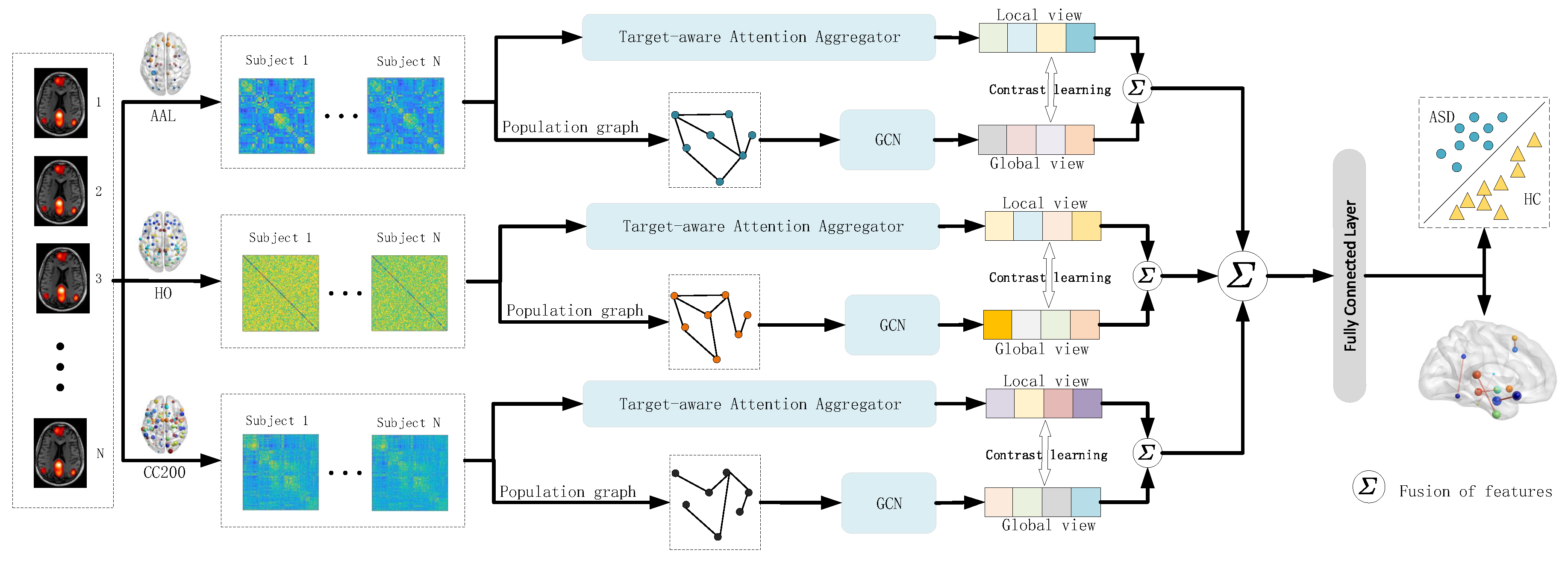

The overall framework of the model proposed in this paper is shown in

Figure 1. The model integrates the feature representations of three different brain atlas visuals. In each brain atlas framework, the imaging features extracted from the brain atlas and the phenotypic features of the subjects are used to construct their respective population graph. Then, on the constructed population graph, the graph convolution operation is applied to obtain the global features of the subjects. Meanwhile, the Target-aware attention aggregator layer (

Figure 2) was used to interact between the brain regions of the subjects and extract local features. Then, graph contrastive learning is conducted on the global and local features to ensure the consistency of the feature representation of the subjects [

13]. Finally, the improved features are applied to the classification task.

2.1. Target-Aware Attention Aggregator

The multi-head self-attention (MSA) mechanism is designed to capture semantic correlations between input tokens [

14]. We added the MSA mechanism at the beginning of the Target-aware attention aggregator module.

To effectively capture interaction patterns between brain regions, we have designed a Node-Centric Attention Aggregation (NCAA) module. Given the brain network representation , where B denotes the batch size, represents the number of brain regions, and D indicates the feature dimension, the NCAA module centers on each brain region to aggregate information from the remaining regions, thereby enhancing node representations.

Specifically, for the

i-th brain region, we begin by concatenating its feature vector

with the feature vectors of its neighboring nodes

. This concatenated input is then fed into an attention mapping function

, which generates the attention weight distribution for the neighboring nodes.

Subsequently, we apply these weights to perform a weighted aggregation of the neighbor features, which is then combined with the center node’s own features to derive the updated node representation.

Ultimately, the enhanced representations of all nodes are concatenated to form an updated brain network representation.

In this manner, the NCAA module dynamically models the dependencies of each brain region with the rest of the brain, centering on individual regions to highlight task-relevant interaction patterns while suppressing irrelevant or noisy connections.

2.2. Population Graph Construction

2.2.1. The Nodes of the Population Graph

We calculate the average time series of each brain region in the rs-fMRI image data and obtain the functional connectivity matrix using the Pearson correlation coefficient. The elements of the upper triangular matrix are chosen as the node initial features of the population graph. The total number of subjects across all sites is N.

2.2.2. The Edges of the Population Graph

In a population graph, edges signify the similarity between pairs of samples. To form these edges, we combine imaging data with phenotypic information, including site and age, within a scoring mechanism to refine the population graph. For the retained edges, the cosine similarity between the phenotypic features of the paired subjects is computed to establish the final edge weights [

15].

To construct a sparse population graph with enhanced connections among similar subjects, we implement a scoring mechanism to filter edges, yielding a binarized matrix

F, calculated as detailed in Equation (

4):

where

represents the score matrix of each edge in the fully connected population graph, and

is a hyperparameter that adjusts the sparsity of the population graph. The matrix A is calculated as shown in Equation (

5)

where

represents the similarity of the imaging data between the

i-th and

j-th subjects.

represents the function for calculating the similarity between the feature tables of two subjects.

represents the number of phenotypic data, and

indicates the

m-th phenotypic feature of the

i-th sample.

For categorical information such as site information, the calculation method of

is shown in Equation (

6):

For quantifiable data such as age, the calculation method of

is shown in Equation (

7):

where

is a hyperparameter, which is a manually adjusted threshold.

To uncover hidden relationships among subject characteristics, we utilize an attention mechanism to augment the dimensionality of their phenotypic features

P. The cosine similarity between these enhanced features is then computed to establish the edge weights. Leveraging the previously derived binarized matrix

F, we filter the edges of the population graph. The weighted adjacency matrix

W of the population graph is calculated as follows:

where

represents the cosine similarity calculation.

It is worth noting that similar principles of feature enhancement and signal integration are widely explored in other biomedical domains, particularly at the hardware and interface level. For example, the design of electronic interfaces for single-photon avalanche diodes (SPADs) has been shown to significantly improve the sensitivity and temporal resolution of biomedical imaging systems by optimizing signal acquisition and noise suppression [

16,

17]. Such interface-level innovations share conceptual similarities with our approach: both aim to maximize the extraction of meaningful information from complex, heterogeneous data sources — whether through physical electronic circuitry or through graph-based modeling of subject relationships. Integrating these perspectives provides a broader context for understanding how the proposed population graph serves as an “interface” between raw imaging data, phenotypic characteristics, and downstream analytical tasks.

2.3. Graph Convolutional Network

The model proposed in this paper utilizes a multi-layer GCN, with its hierarchical propagation rule defined as follows:

where

is the adjacency matrix of an undirected graph, augmented with the identity matrix

to include self-loops,

is the degree matrix of

,

is the trainable weight matrix for layer

l, and

is the activation matrix at layer

l, with

serving as the initial input feature matrix.

Prior to GCN convolution, a DropEdge strategy is implemented, which randomly removes a subset of edges from the input graph during each training iteration [

18]. However, no edges are removed during testing to preserve the graph’s integrity. This approach helps address overfitting. Additionally, residual connections are introduced in each convolutional layer to retain complete feature information, enhancing the model’s robustness [

19].

2.4. Perturbation-Driven Explainable Framework

The core idea of this model is to use the complementarity of different brain atlases in brain region division to improve the classification performance. However, due to the complexity of multi-atlas models and other factors, the use of a model based on a single brain atlas for analysis is more convincing in terms of medical interpretation. First, we trained the model based on a single brain atlas to obtain the corresponding best classification accuracy , which was used as a reference benchmark for subsequent interpretability experiments

To quantitatively assess the importance of various brain regions in the classification task, we developed perturbation-based experimental methods. Specifically, for a subject under a given brain atlas, we consider two inputs: the functional connectivity matrix

and its upper triangular feature

, where

M represents the number of brain regions defined by the atlas, and

denotes the dimension of the upper triangular feature. Then we set the index of the brain region with experiment as

i, and we removed the features related to

i on

S and

U, respectively:

Here, the mask functions and denote zeroing or removing the features associated with index i in S and U, respectively. Subsequently, the perturbed features and are input into the trained model to obtain the corresponding classification accuracy .

By comparing

with the best benchmark accuracy

, we defined the importance index of brain regions as:

Among them, a larger indicates that the masked brain region i is more important in the classification task. This analysis can be combined with different brain atlas perspectives to reveal the potential biological significance of brain regions in disease classification.

2.5. Loss Function

2.5.1. Muti-Altas Consistency Loss Function

As our model integrates multiple brain atlases, which represent distinct perspectives of the same brain network, they should exhibit consistency in the final analysis. To achieve this, we introduce consistency constraints to optimize the similarity across different views [

20], regularized as follows:

where

represents the membership vector of the

i-th view following graph convolution learning,

denotes the membership vector of the

i-th view after Target-aware attention aggregator layer learning, and

V constitutes the set of distinct views within our model. Incorporating this loss function encourages convergence between the model outputs for the

i-th and

j-th views.

2.5.2. Muti-View Contrastive Loss Function

To enhance the consistency of representations across different perspectives, we have designed a contrastive loss function [

13]. Let the representations output by two distinct models or branches be

and

. These are first mapped to a shared latent space using a common non-linear projection head:

The projection function consists of two linear transformations combined with a non-linear activation (ELU), employing Xavier initialization to ensure numerical stability.

Subsequently, we define the similarity between the two representations as follows:

where

serves as a temperature parameter, used to adjust the smoothness of the distribution. Based on this similarity, we construct normalized similarity matrices from

to

and from

to

. These are then utilized, along with a given positive sample mask

, to compute the contrastive loss:

The final total loss is a weighted combination of the two:

where

acts as a balancing coefficient.

This loss function explicitly aligns the representation spaces of the two distinct model outputs, enhancing the consistency and discriminability of representations across perspectives.

2.5.3. Cross-Entropy Loss Function

This paper focuses on a binary classification task, utilizing the cross-entropy loss as the primary predictive loss function [

21], defined as follows:

Here, denotes the true label of subject n, and represents the predicted probability of the positive class.

Combining the above components, the final loss function is:

Here, and are hyperparameters that balance the contributions of each loss term.

3. Results

3.1. Data Acquisition and Pre-Processing

The experimental data for this study were derived from rs-fMRI data within the initial phase of the ABIDE-I database, a publicly accessible multi-site repository containing data from 1112 individuals across 17 sites. We employed the Configurable Pipeline for the Analysis of Connectomes (CPAC) [

22] for image preprocessing, encompassing slice-timing correction, motion correction, skull stripping, and voxel intensity normalization. This study generated 875 high-quality MRI images, comprising 405 individuals with ASD and 470 typically developing controls (TC). Detailed demographic data are provided in the accompanying

Table 2.

Subsequently, using the Harvard–Oxford (HO) [

23], Automated Anatomical Labeling (AAL) [

24], and Craddock 200 (CC200) brain atlases [

25], respectively, the preprocessed data were mapped onto various regional levels. The Pearson correlation coefficient was then computed between the average time series of all regions of interest (ROIs) across these different brain atlases to construct the functional connectivity matrices of brain networks from multiple perspectives.

3.2. Experimental Setup

3.2.1. Experimental Parameter

The edge filtering threshold is set to

, the quantifiable data scoring mechanism parameter to

, and the loss function parameters to

and

. For a fair comparison, the source codes of all competing methods were run in the same environment, with hyperparameters configured according to the optimal settings recommended in their respective papers. Detailed equipment and model parameters are presented in the

Table 3.

3.2.2. Performance Evaluation

We implemented a 10-fold cross-validation approach to evaluate the efficacy of our algorithm. For performance assessment, we adopted accuracy (

ACC), precision (

PRE), recall (

RECALL), F1 score (

F1), and area under the curve (

AUC) as primary metrics. The calculation methods for these performance metrics are detailed as follows:

where

,

,

, and

represent true positives, true negatives, false positives, and false negatives, respectively.

3.3. Competing Methods

Among the comparative methods, we chose two prominent baseline techniques: Support Vector Machine (SVM) and Random Forest (RF). Additionally, we assessed several cutting-edge deep learning networks, including ASD-DiagNet, ASD-SADnet, BrainGNN, DeepGCN, BNT, FBNetGen, MVS-GCN, GCN-MDD, TP-MIDA, deepManReg, DG-DMSGCN, RGTNet, GBT, and CcSi-MHAHGEL. A concise overview of these 16 methods is provided below.

- (1)

Random Forest and SVM: The upper triangular vector of the functional connectivity matrix serves as the feature set, with classifiers implemented using Random Forest and Support Vector Machine techniques.

- (2)

ASD-DiagNet [

26]: The ASD-DiagNet model employs a joint learning approach, integrating autoencoders with single-layer perceptrons to improve the quality of extracted features and enhance classification performance.

- (3)

ASD-SAENet [

5]: The ASD-SAENet model is designed to classify patients with ASD from typical control subjects using fMRI data, integrating a sparse autoencoder (SAE) to optimize feature extraction, which is then fed into a deep neural network (DNN) to enable enhanced classification of ASD-prone fMRI brain scans.

- (4)

BrainGNN [

27]: The BrainGNN model based on graph neural networks facilitates ASD prediction by utilizing two types of Brain Functional Networks (BFNs) as adjacency matrices.

- (5)

Deep-GCN [

11]: The Deep-GCN model develops a comprehensive ASD diagnostic framework utilizing a 16-layer population GCN.

- (6)

Brain network transformer (BNT) [

6]: The BNT model integrates a self-attention mechanism and introduces an orthogonal clustering readout operator, utilizing self-supervised soft clustering and orthogonal projection methods.

- (7)

FBNetGen [

28]: The FBNetGen model focuses on the learnable generation of brain networks while exploring the interpretability of these generated networks for downstream applications.

- (8)

MVS-GCN [

8]: The MVS-GCN model employs threshold-based measures to partition an adjacency matrix into three matrices with varying sparsity levels, thereby improving the comprehensiveness of the derived features.

- (9)

GCN-MDD [

29]: Integrates the k-Nearest Neighbors (kNN) algorithm to construct graphs and employs a GCN model for disease prediction.

- (10)

TP-MIDA [

30]: The TP-MIDA model leverages the Tangent Pearson embedding method to extract features and applies domain adaptation techniques to reduce the site-specific dependence of functional connectivity features.

- (11)

deepManReg [

31]: The deepManReg model employs multiple deep neural networks tailored to different modalities, jointly training them to align multimodal features into a shared latent space, and subsequently utilizes cross-modal manifolds to regularize the classification network, enhancing phenotype prediction accuracy.

- (12)

DG-DMSGCN [

32]: The DG-DMSGCN model incorporates a sliding window dual-GCN to extract features while capturing the spatiotemporal characteristics of fMRI data across varying sequence lengths. Subsequently, a novel dynamic multi-site GCN is employed to aggregate features derived from multiple medical sites.

- (13)

RGTNet [

7]: The RGTNet model develops a graph encoder to extract time-dependent features with long-range dependencies and introduces a novel sparse graph fitting weighted clustering method to mitigate the dimensionality explosion challenge.

- (14)

GBT [

33]: The GBT model introduces a Transformer module that selectively eliminates small singular values from the attention weight matrix to capture the most relevant graph representations. Additionally, it incorporates a geometry-oriented representation learning module, which enforces low-order intra-class compactness and high-order inter-class diversity constraints on the learned representations to enhance their discriminability.

- (15)

CcSi-MHAHGEL [

34]: The CcSi-MHAHGEL model introduces a Class-Consistency and Site-Independence Multiview Hyperedge-Aware HyperGraph Embedding Learning framework, designed to integrate Functional Connectivity Networks (FCNs) constructed from multiple brain atlases within a multi-site fMRI study.

3.4. Results of Comparison Methods

Table 4 presents the quantitative results for each key performance metric:

ACC,

Pre,

Recall,

F1, and

AUC. All values are reported as mean ± standard deviation (SD), providing a robust measure of the model’s stability across 10 cross-validation folds.

The proposed MAMVCL model demonstrated the highest performance across all metrics, achieving an ACC of 85.71%. This represents a substantial improvement over baseline methods, such as Random Forest and SVM, underscoring the limitations of traditional machine learning approaches in effectively handling the inherent complexity and heterogeneity of neuroimaging data. Compared to state-of-the-art deep learning models, MAMVCL outperformed graph-based methods including BrainGNN, Deep-GCN, and CcSi-MHAHGEL, as well as attention- and transformer-oriented models such as BNT, GBT, and RGTNet. These results indicate enhanced discriminability and robustness of MAMVCL, particularly against class imbalance.

The superior performance of MAMVCL highlights its ability to effectively integrate multi-atlas features, extract the global features of the subjects by using the convolution of the population graph, extract the local features of the subjects by using the Target-aware attention aggregator layer, and constrain the consistency of the feature representation of the subjects by using graph contrastive learning. This combination enables the model to mitigate the impact of noise in the data, address the variability of specific sites, and capture complex brain connection patterns, which are crucial for accurate ASD classification.

3.5. Ablation Experiment

To assess the effectiveness of the Target-aware attention aggregator (TAA) and graph contrast learning (GCL) modules within our proposed model, we conducted experiments on four distinct variants. These variants are defined as follows:

- (1)

Variant I: Employs only the GCN module utilizing population graphs.

- (2)

Variant II: Relies solely on the Target-aware attention aggregator module.

- (3)

Variant III: Combines the Target-aware attention aggregator module with the GCN module for population graphs, but omits graph contrast learning to align the outputs of the same subjects.

The experimental results for these variants are presented in

Table 5. The data indicate that our full model surpasses the performance of all variants, demonstrating that the integration of the TAA and GCL modules significantly enhances the model’s effectiveness. Specifically, the TAA module, grounded in individual brain networks, provides a localized perspective, while the GCN module, applied to population graphs, captures implicit relationships between subjects, offering a global viewpoint. Graph contrast learning further refines the model by aligning the output features of these two modules, ensuring a comprehensive and cohesive final output.

To quantitatively evaluate the superiority of the proposed model and the contributions of each component, we conducted a paired t-test on the classification accuracy obtained from 30 repeated cross-validation runs. Variant III (integrating TAA and GCN) performed significantly better than Variant I (containing only TAA) (p = 4.1710−30) and Variant II (containing only GCN) (p = 3.8710−19). Furthermore, the complete MAMVCL model containing the GCL module has a much higher accuracy rate than Variant III (p = 5.4210−13). These results confirm that each component makes a significant contribution to the overall performance, and the proposed model has higher classification accuracy statistically compared with its dissolved variants.

4. Discussion

4.1. Phenotypic Information Analysis

Table 6 summarizes the impact of various phenotypic feature combinations on model performance. Among individual features, site (S) consistently delivered the highest results, achieving an

ACC of 84.28% and an

AUC of 91.76%, markedly outperforming age (A) and gender (G) [

35]. This indicates that site information plays a predominant role in model classification. When examining feature combinations, the S + A pairing yielded the best performance (

ACC = 85.71%, F1 = 86.84%,

AUC = 92.93%), suggesting a complementary effect between site and age that bolsters the model’s discriminative capability. In contrast, the S + G combination resulted in reduced performance, while the A + G pair exhibited the weakest outcome, highlighting the limited discriminative power of gender, either alone or in combination. Incorporating additional phenotypic traits, such as eye status (E), handedness (H), and IQ score (FVP) [

36], did not lead to further improvements, with performance stabilizing between 80% and 82%, falling short of the optimal S + A combination. This implies that adding more phenotypic data may introduce redundancy or noise, thereby diminishing overall effectiveness. Collectively, these findings emphasize that site is the most informative feature, with age providing complementary benefits when paired with site. The contributions of other features appear minimal or even detrimental.

Therefore, the S + A combination is recommended as the most effective set of phenotypic features for constructing a population graph model. This is also in line with the research results of slopen [

37].

4.2. Interpretability Analysis

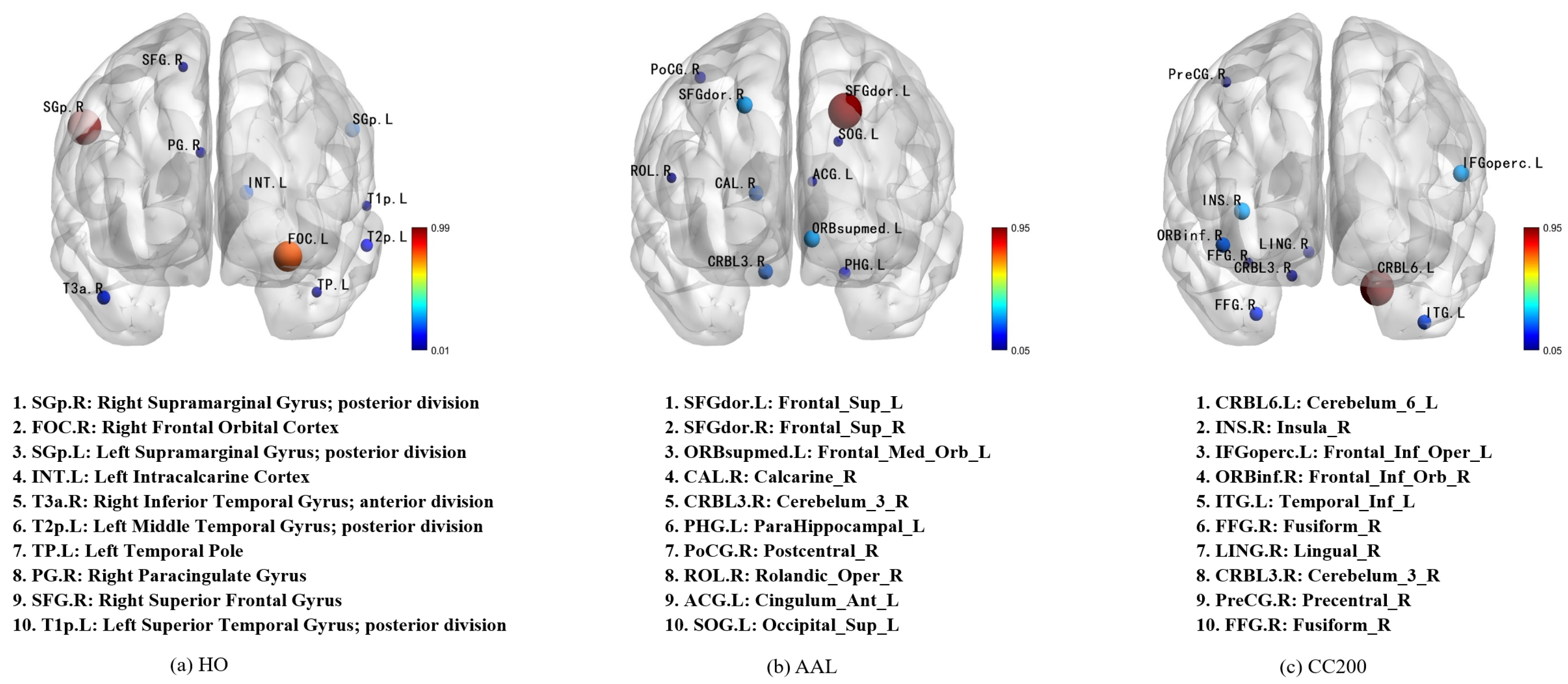

To clarify the proposed model, we employed perturbation experiments to identify the most distinctive brain regions. These experiments were conducted on three different brain atlases used in the research (

Figure 3). Since the CC200 map was generated in our analysis through monomeric BOLD time series clustering and lacked a predefined label for each ROI, we aligned its 200 ROis with the AAL brain map based on their spatial positions to enhance our understanding of the potential classification mechanism. The ten most important features in each mind map are identified and highlighted. The interpretable analyses of the HO, AAL, and CC200 brain atlases are respectively shown in

Figure 4.

By observing the first and second images in

Figure 4, it was found that the region most relevant to ASD diagnosis is located at Right Supramarginal Gyrus. This finding was supported by Wada et al., who demonstrated that this region is associated with the maintenance of emotion recognition ability. The ability to recognize emotions is closely related to ASD symptoms [

38]. In the second images, the two most important features, Frontal_Sup_L and Frontal_Sup_R, correspond to the dorsolateral frontal gyrus, a region medically considered to play a key role in language understanding [

39]. Combining the first and third figures, we also found that the area around Cerebelum_6_L was identified as the region most relevant to ASD classification. The researchers confirmed that this link was previously shown to have a significant relationship with ASD pathology [

40,

41,

42].

In addition, our population-level perturbation analysis shows conceptual parallels with finite element modeling (FEM)-based approaches for population-specific brain modeling, such as the study by [

43]. Both strategies aim to capture how localized structural or functional perturbations propagate through the broader system to affect global behavior. In FEM-based population models, the spatial distribution of stress or strain fields is used to infer regions most responsible for functional deviations. Similarly, our multi-atlas perturbation framework identifies brain regions whose alterations most significantly influence ASD classification outcomes. This analogy underscores that both methods—despite differing in implementation—share a common objective: revealing region-specific contributions to system-level properties in a non-invasive and interpretable manner. Integrating such perspectives enriches the interpretability of our model and highlights its potential relevance for broader biomedical modeling applications.

Figure 3.

Visualization of the most important brain regions across different atlases. The ten most important brain regions identified from the HO, AAL, and CC200 atlases are visualized, with node size indicating their contribution strength to the model. The full names and abbreviations of these regions are listed in descending order of importance, providing an interpretable view of feature relevance under different parcellation schemes.

Figure 3.

Visualization of the most important brain regions across different atlases. The ten most important brain regions identified from the HO, AAL, and CC200 atlases are visualized, with node size indicating their contribution strength to the model. The full names and abbreviations of these regions are listed in descending order of importance, providing an interpretable view of feature relevance under different parcellation schemes.

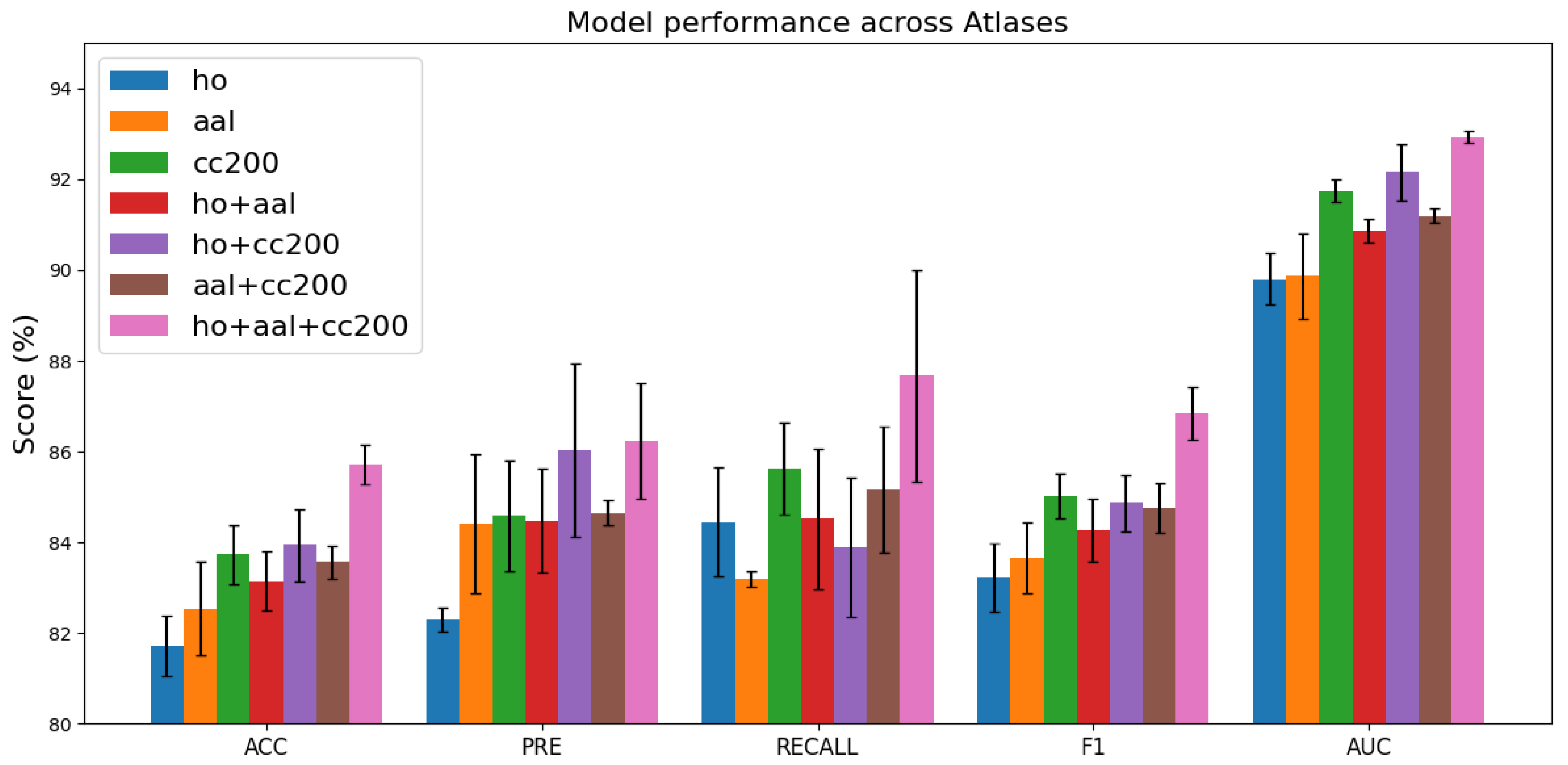

4.3. Different Brain Atlas Combinations on the Model

Figure 4 illustrates the classification performance across various brain atlas configurations. Models trained on a single atlas, such as HO, AAL, or CC200, exhibit

ACC values ranging from 81% to 83%. In contrast, integrating multiple atlases consistently enhances performance, with the combination of all three atlases (HO + AAL + CC200) yielding the best results, achieving an

ACC of approximately 85.7%. These findings indicate that the complementary information from diverse brain regions strengthens the robustness of feature representations and enhances the model’s generalization capability. Moreover, the low standard deviations observed in most cases reflect stable performance, while the three-atlas configuration delivers the highest average performance with relatively manageable variability.

Figure 4.

Comparison of model performance across different brain atlas combinations. Variations in performance metrics under different atlas configurations illustrate how parcellation choice influences classification accuracy and predictive effectiveness.

Figure 4.

Comparison of model performance across different brain atlas combinations. Variations in performance metrics under different atlas configurations illustrate how parcellation choice influences classification accuracy and predictive effectiveness.

4.4. Hyperparametric Analysis

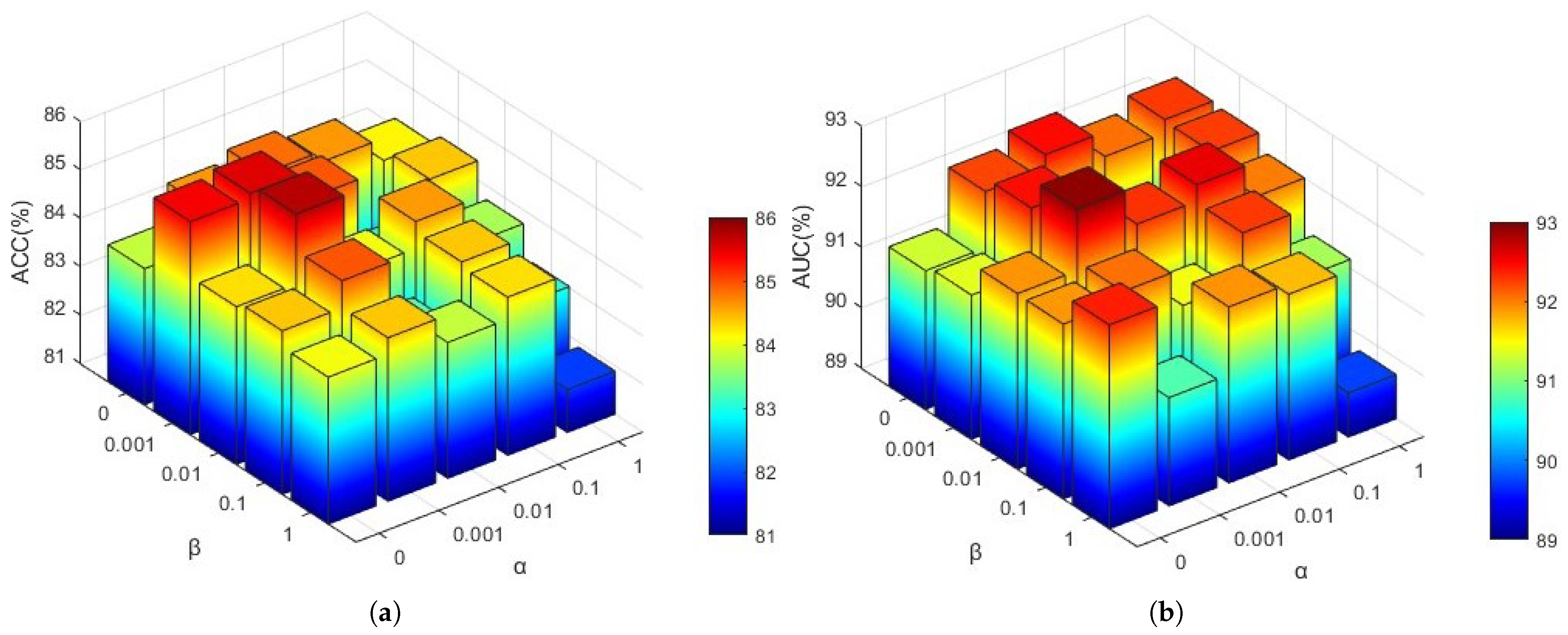

4.4.1. The Influence of the Loss Function

To investigate the influence of hyperparameters in Equation (

19), we analyzed the effects of view consistency loss (

) and contrast loss (

) on model performance. Employing a grid search approach, we evaluated various combinations of these coefficients with values [0, 0.001, 0.01, 0.1, 1]. The resulting

ACC and

AUC values are presented in

Figure 5.

As illustrated in

Figure 5, the optimal

ACC and

AUC values are achieved when

and

. This suggests that an excessively small

value may prevent the outputs of different brain atlases for the same subject from aligning closely, whereas an overly large

could alter the effective feature values used for classification across different views. Similarly, a

value that is too small may lead to significant disparities between the global and local features of the same subject, while an excessively large

might enforce excessive consistency between global and local feature regions.

In this study, hyperparameter optimisation was conducted using a grid search approach, which exhaustively explores the parameter space and is commonly used in machine learning tasks due to its simplicity and interpretability. Alternative approaches such as Taguchi design or ANOVA-based optimisation could potentially improve efficiency by reducing the search space and considering factor interactions. However, since our model involves a relatively small set of hyperparameters, grid search was sufficient and computationally manageable.

4.4.2. The Influence of Constructing the Edges of the Population Graph

The hyperparameters and play a critical role in shaping the structure of the population graph by determining how edges are formed between subjects. Specifically, controls the similarity threshold for connecting nodes based on their feature representations, while regulates the influence of age proximity during the construction of graph edges. To determine an appropriate range for , we first conducted a preliminary sensitivity analysis by varying its value within the range of 0.3 to 0.7. The results revealed that the model exhibited consistently better classification performance when was set between 0.60 and 0.65. Based on this observation, we subsequently adopted this narrower interval as the search space for grid-based hyperparameter tuning, allowing a more precise exploration of the optimal threshold.

As shown in

Figure 6, the model achieves optimal classification performance when

and

, indicating a delicate balance between connectivity and noise. If

is set too low, the resulting graph becomes overly dense, introducing numerous spurious edges that degrade the discriminative capacity of the learned representations. Conversely, an excessively high

leads to a sparse graph, which restricts information flow among similar subjects during message passing. A similar trade-off is observed for

: smaller values hinder effective communication among nodes with similar age characteristics, while larger values introduce irrelevant age-related connections that may obscure the underlying patterns. These observations underscore the importance of jointly tuning

and

to achieve a graph topology that both preserves meaningful relationships and suppresses noise, ultimately enhancing the quality of representation learning.

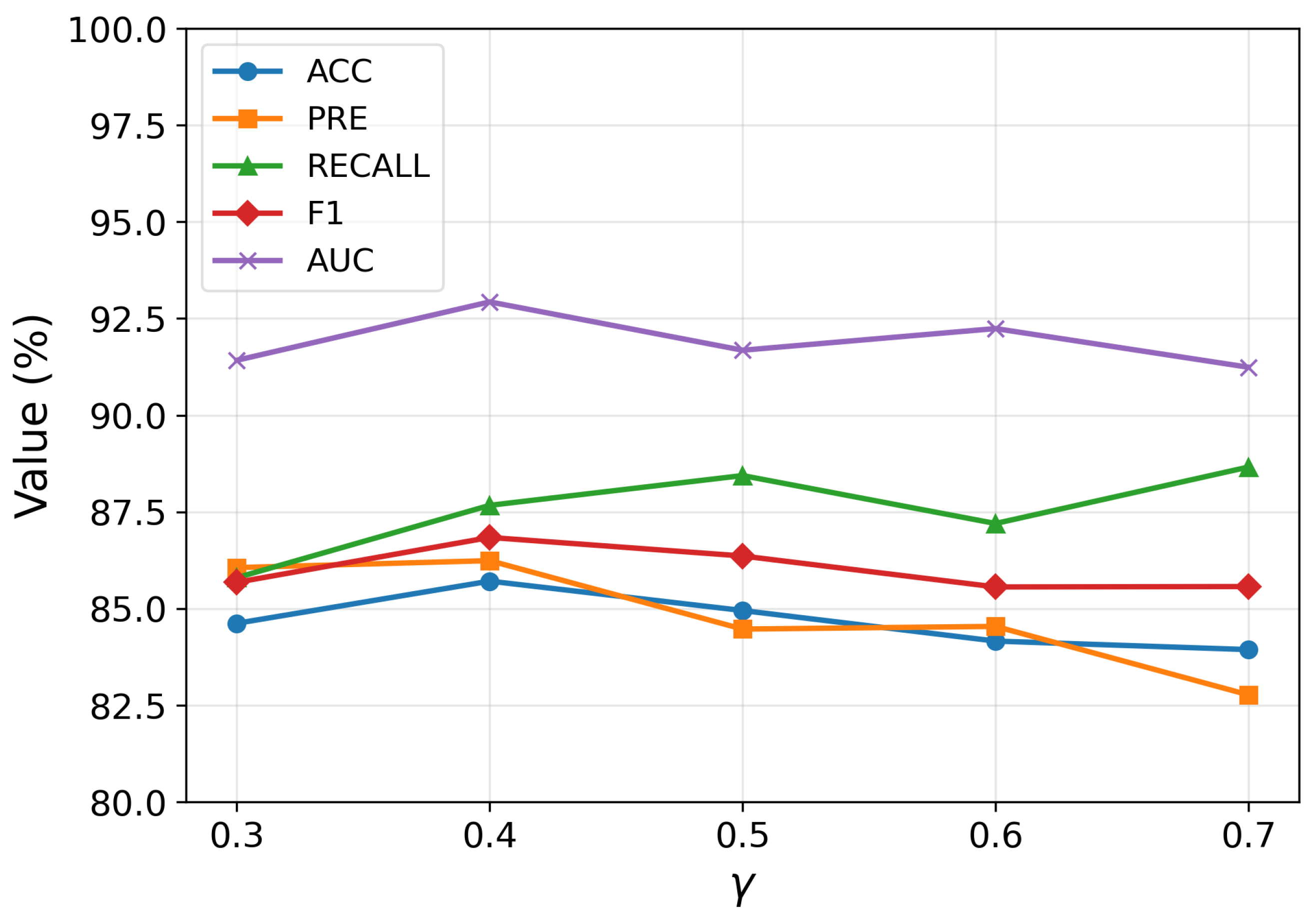

4.4.3. The Influence of Parameters in Graph Contrast Learning

The hyperparameter

directly affects the optimization objective in the contrastive learning stage by balancing the alignment between local and global representations. As depicted in

Figure 7, the model exhibits its best performance when

. This result suggests that an appropriate weighting of the two alignment objectives is essential for effective representation learning. When

is too small, the contribution of local information is underemphasized, potentially leading to insufficient capture of fine-grained structural patterns. Conversely, an excessively large

can overemphasize local alignment at the expense of global semantic consistency, resulting in suboptimal generalization. The optimal value reflects a scenario in which global context plays a more dominant role than local details, highlighting the significance of integrating broader structural information in the contrastive framework. This balance allows the model to learn robust, semantically meaningful representations that improve downstream classification performance.

4.4.4. The Attention Layers in Target-Aware Attention Aggregator Layer

The results presented in

Figure 8 illustrate the impact of varying the number of attention layers on the model’s performance. Notably, the configuration with three attention layers achieved the highest overall performance, including the best

ACC, suggesting that this structure provides sufficient representational capacity without leading to overfitting. When the number of layers falls below three, the model exhibits clear signs of underfitting, failing to adequately capture local features. Conversely, increasing the number of layers beyond three results in a slight decline or fluctuation in performance, indicating that deeper attention stacking does not consistently enhance generalization and may introduce redundancy or noise. Therefore, these findings suggest that three attention layers strike an optimal balance between model complexity and generalization capability.

4.5. Limitations and Future Work

Although our model demonstrates good performance, there are still several limitations. First, the construction of the population graph requires knowledge of the entire dataset, which means that incorporating new subjects necessitates retraining the mode—a limitation that affects scalability in real-world scenarios. Second, the use of multiple atlases, while improving feature diversity, increases the computational cost and training complexity. Finally, the current framework relies solely on resting-state functional connectivity, and does not yet leverage complementary information from other imaging modalities such as structural MRI or diffusion imaging. To further evaluate the reliability of our framework, it is essential to consider perspectives inspired by stress analysis, particularly in terms of uncertainty management and feature robustness. In real-world scenarios, data are often affected by noise, variability, and acquisition inconsistencies, which may influence model performance. Our approach demonstrates stable classification results even under moderate perturbations, indicating a certain degree of robustness. Nevertheless, incorporating uncertainty-aware strategies—such as Bayesian modeling, dropout-based inference, or sensitivity analysis—could further enhance the model’s reliability.

Future research will aim to address these limitations in several ways. One direction is to explore incremental or fine-tuning strategies to enable efficient model adaptation to new samples without the need for full retraining. Specifically, this could involve transfer learning techniques to reuse and adapt pre-trained model weights for new data distributions, incremental learning approaches that progressively update the model as new samples become available, or online learning frameworks capable of continuously integrating streaming data. Such strategies would significantly enhance the scalability and clinical applicability of the framework. Another promising direction involves integrating multimodal neuroimaging data (e.g., structural and diffusion MRI) to provide a more comprehensive representation of brain structure and function, which could further improve diagnostic performance. Additionally, evaluating the model on larger and more diverse cohorts will be crucial for validating its generalization ability and robustness across different populations and acquisition sites. Future work could investigate the contribution and stability of individual features through robustness tests or perturbation-based analyses, providing deeper insight into the interpretability and generalizability of the proposed method.