Spatial Analysis of Navigation in Virtual Geographic Environments

Abstract

Featured Application

1. Introduction

1.1. User Testing and Evaluation of VGEs

1.2. Spatial Analysis of User Navigation and Interaction

1.3. Cognitive Styles and Route Preferences in VR Navigation

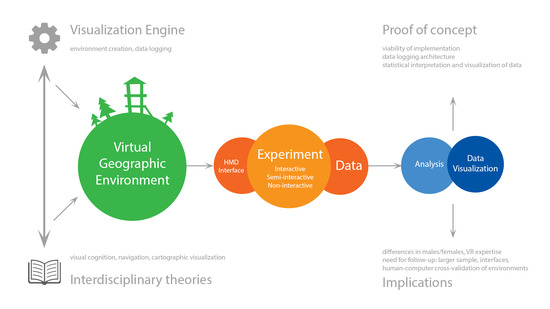

2. Methods and Technologies

2.1. Visualization Engine

- virtual movement (trajectory of movement in virtual space)

- mouse movement (looking around and/or changing direction of movement)

- head movement (looking around using a head-mounted display)

- keyboard interaction (eliciting movement in virtual space)

- participant–object collisions in the virtual world (interaction with features in virtual space)

- (optionally) eye-tracking data (participants’ eye fixations on objects)
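As an illustration only, the logged channels listed above could be stored as one timestamped sample per frame. The sketch below assumes a hypothetical CSV layout (columns `t,x,y,z,yaw,pitch,keys,collision,gaze`, with held movement keys separated by semicolons); the actual file format of the visualization engine is not described here, and all names are illustrative.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class LogSample:
    """One timestamped sample of participant activity (hypothetical schema)."""
    t: float                              # seconds since session start
    position: Tuple[float, float, float]  # virtual movement: x, y, z in metres
    yaw: float                            # mouse/head heading in degrees
    pitch: float                          # mouse/head pitch in degrees
    keys: Tuple[str, ...]                 # movement keys held down, e.g. ("W", "A")
    collision: Optional[str]              # object the participant collided with, if any
    gaze_target: Optional[str]            # optional eye-tracking fixation target


def parse_log(text: str) -> List[LogSample]:
    """Parse a hypothetical CSV log with columns t,x,y,z,yaw,pitch,keys,collision,gaze."""
    samples = []
    for row in csv.DictReader(io.StringIO(text)):
        samples.append(LogSample(
            t=float(row["t"]),
            position=(float(row["x"]), float(row["y"]), float(row["z"])),
            yaw=float(row["yaw"]),
            pitch=float(row["pitch"]),
            keys=tuple(k for k in row["keys"].split(";") if k),
            collision=row["collision"] or None,   # empty field -> no collision
            gaze_target=row["gaze"] or None,      # empty field -> no eye-tracking data
        ))
    return samples
```

Keeping every channel in one record per timestamp makes it straightforward to align keyboard, mouse/head, collision and gaze events during later analysis.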

2.2. OSIVQ

3. User Study

3.1. Procedure

- Inquiry into their demographic data and any health limitations regarding vision and motion sickness.

- Exploration of a small-scale interactive training environment with a task (Figure 1b, map B).

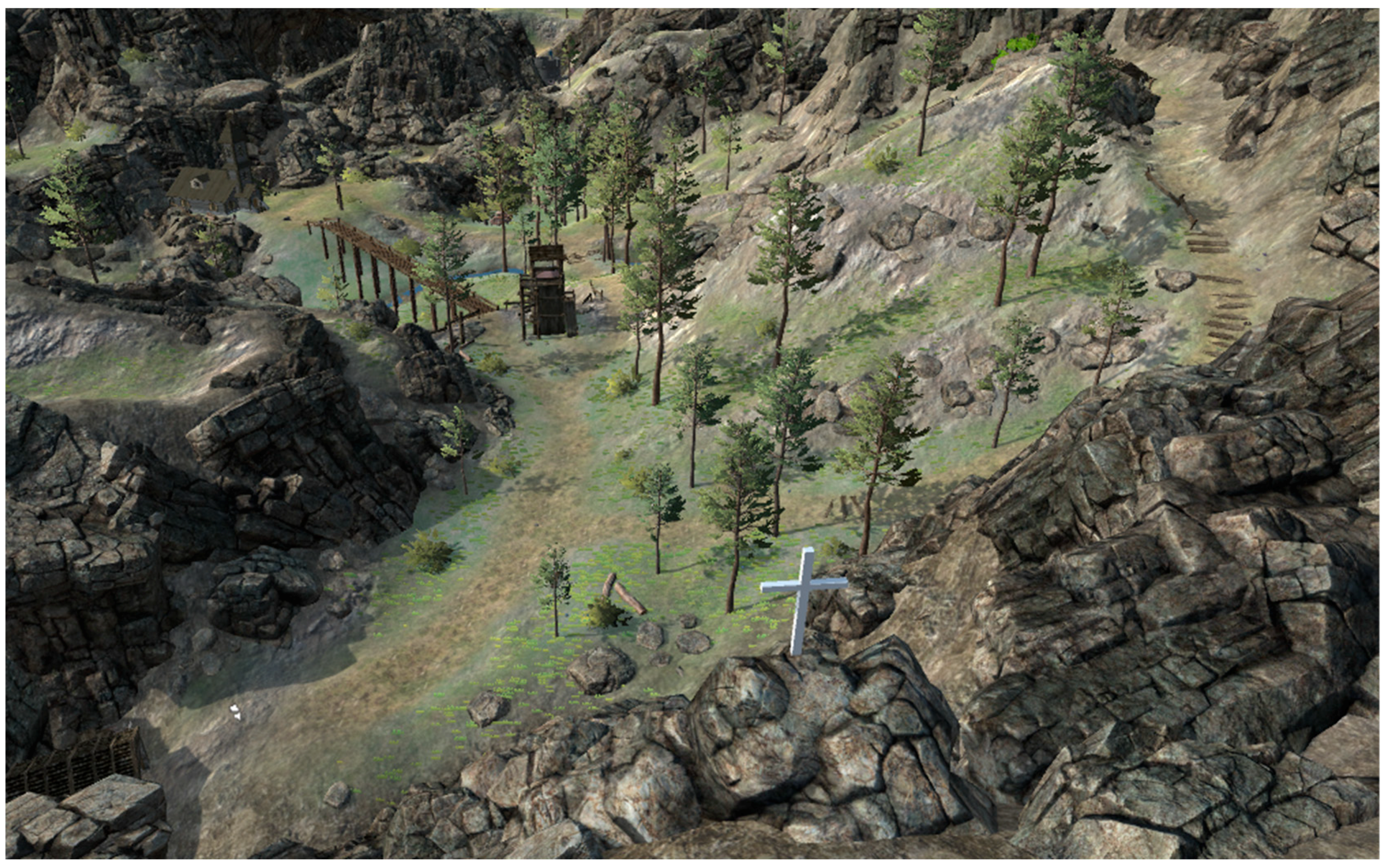

- Free exploration of an interactive experimental virtual environment (Figure 1a, map A) and memorization of its features.

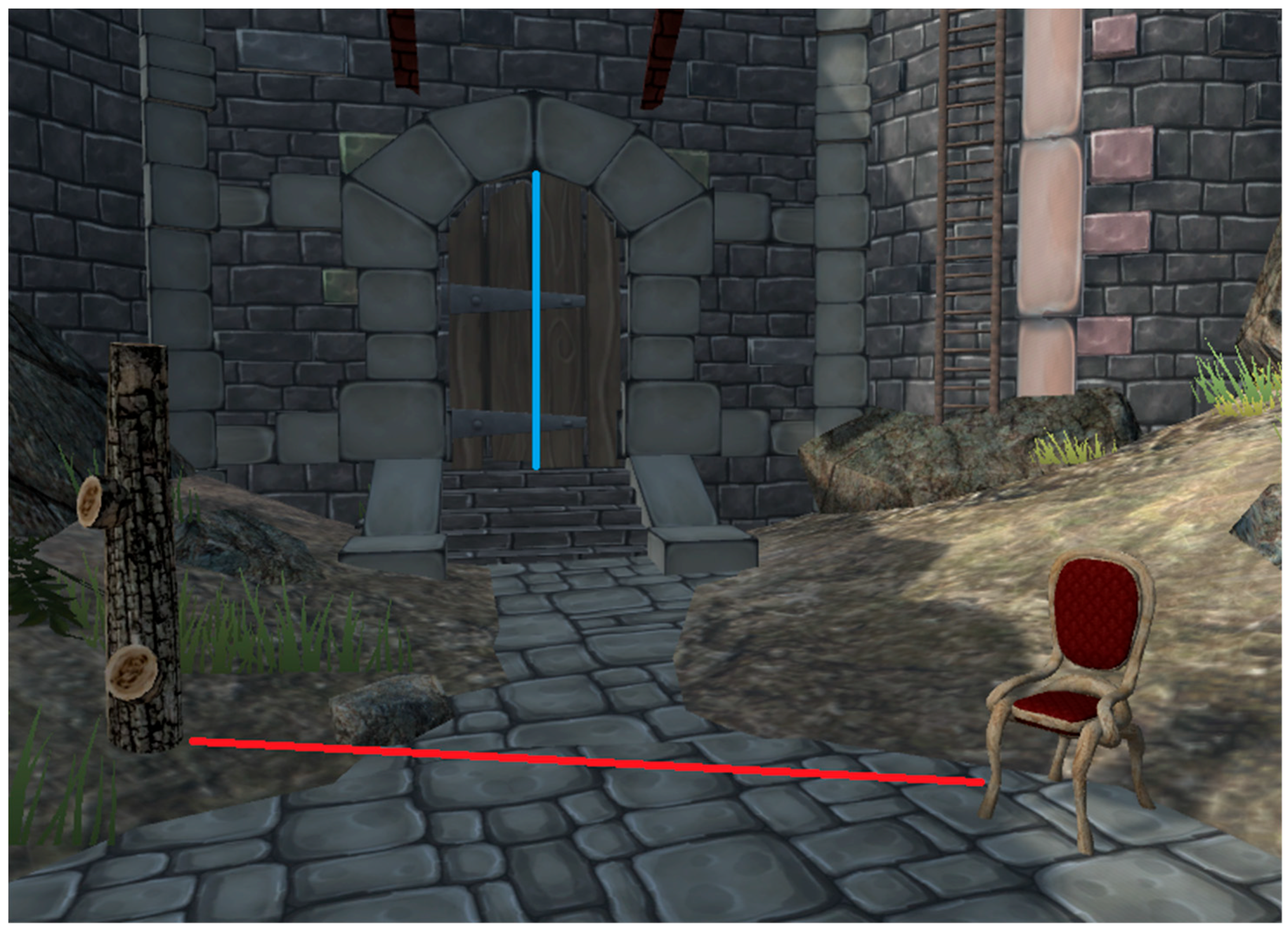

- Observation of another semi-interactive scene (Figure 1c, map C) and answering questions about the spatial layout of specific components in the scene.

- Identification of the correct appearance of various objects from the semi-interactive scene (Figure 1c, map C) in a multiple-choice questionnaire.

- Completion of the OSIVQ questionnaire.

3.2. Participants

3.3. Stimuli and Tasks

3.3.1. Spatial Relations Identification Task (SRIT)

3.3.2. Object Visual Recognition Test (OVRT)

4. Results

4.1. Exploration of the Virtual Environment–Descriptive Data

4.1.1. Overall Required Time

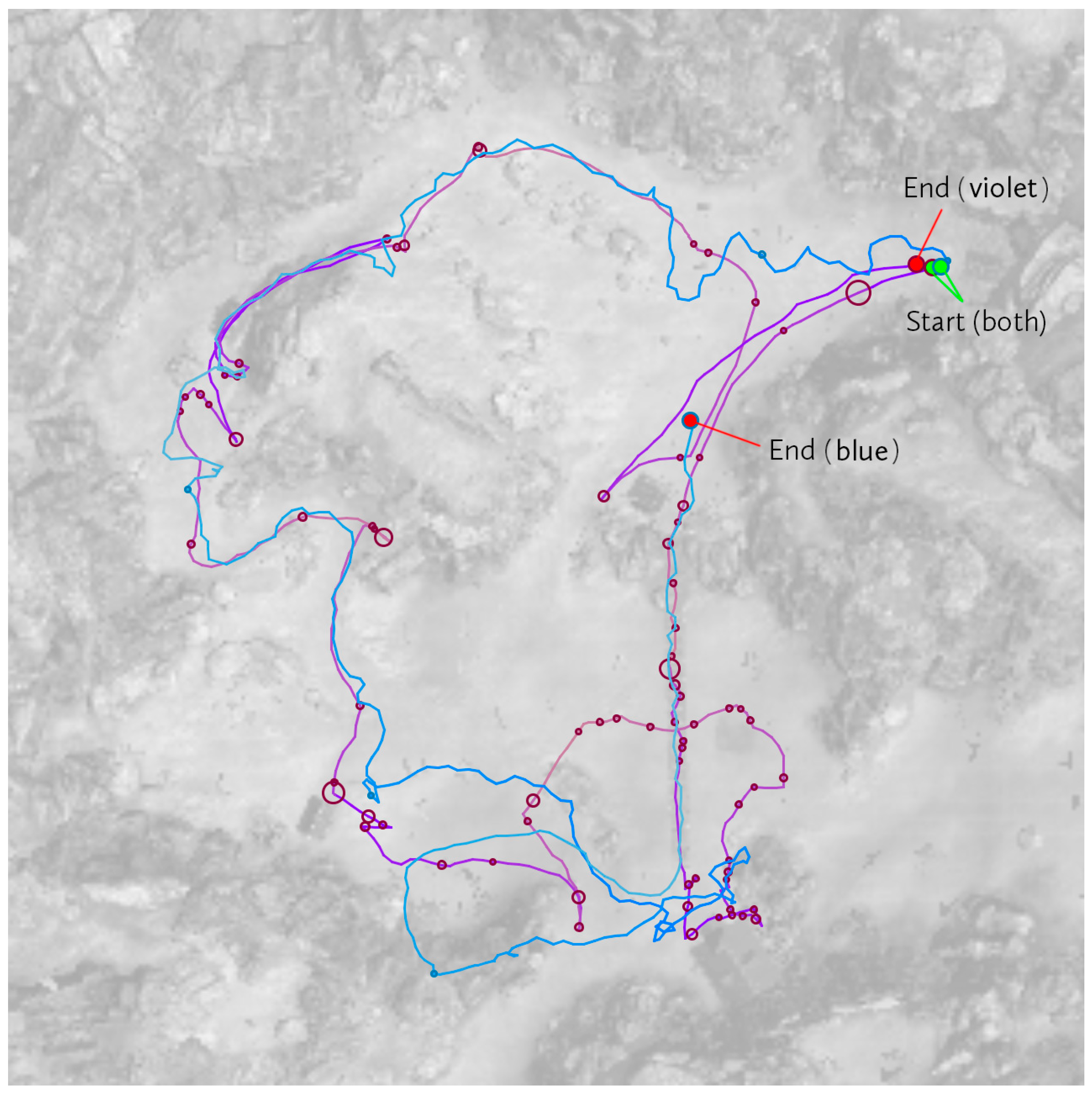

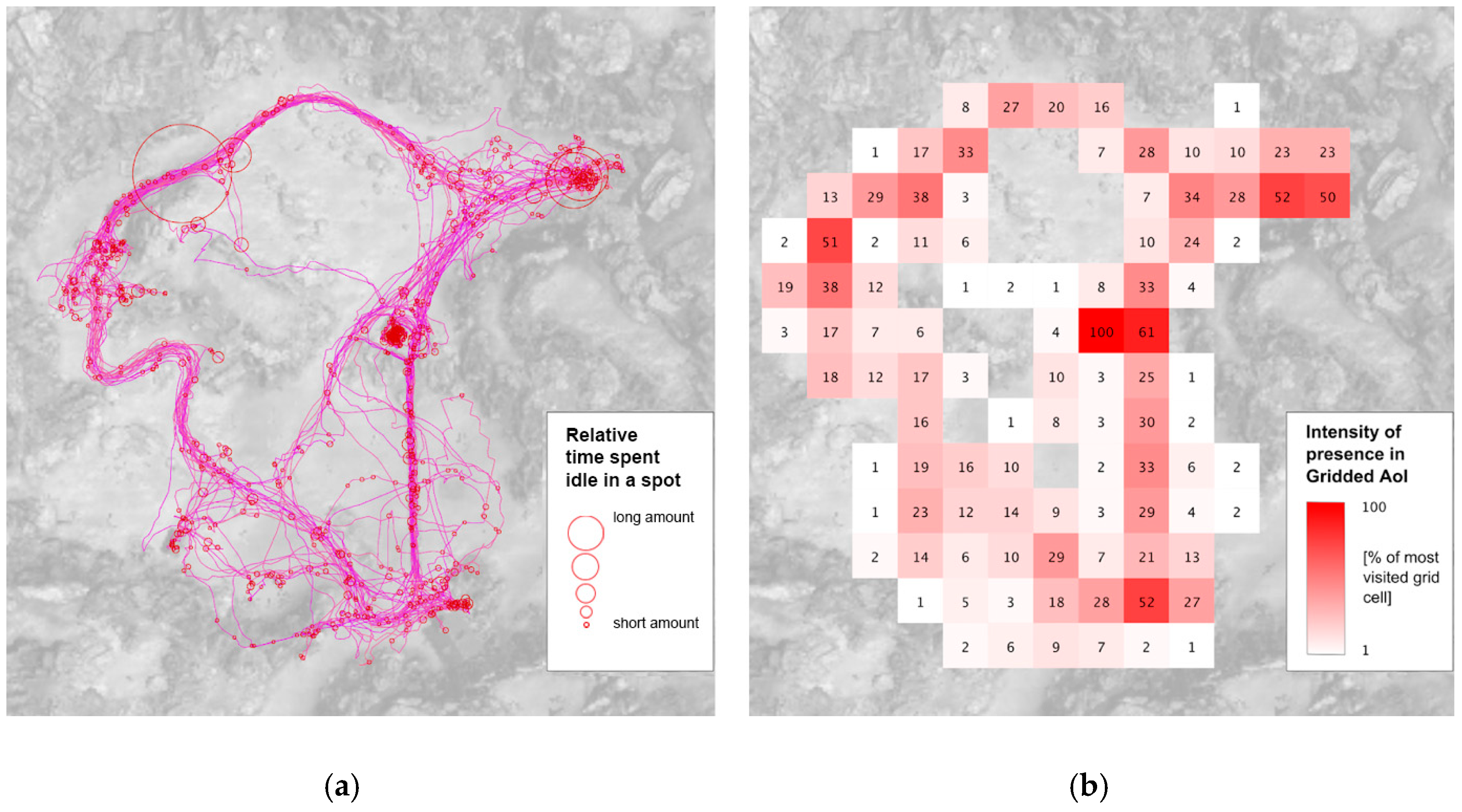

4.1.2. Visualization of Exploration Strategies Based on User Logs

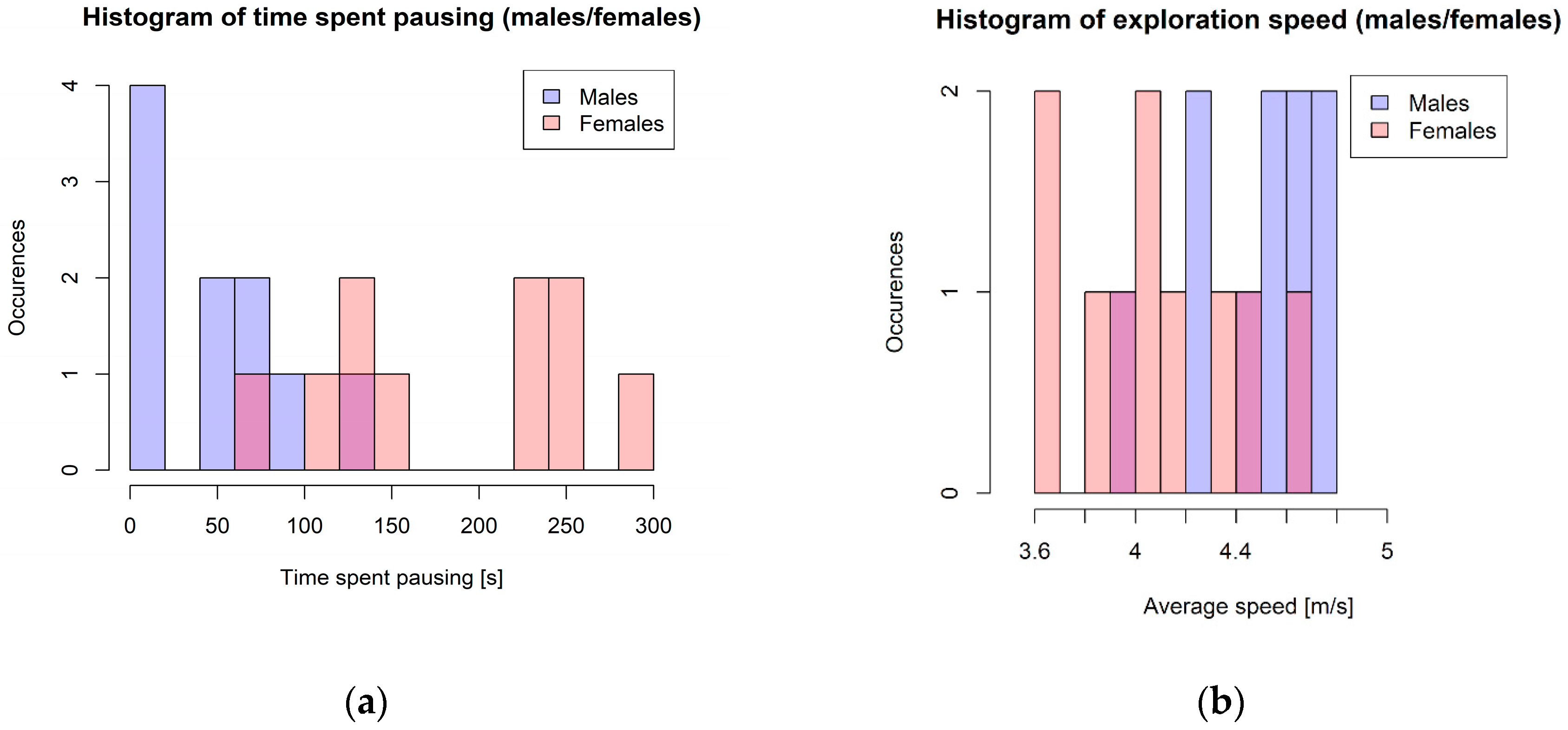

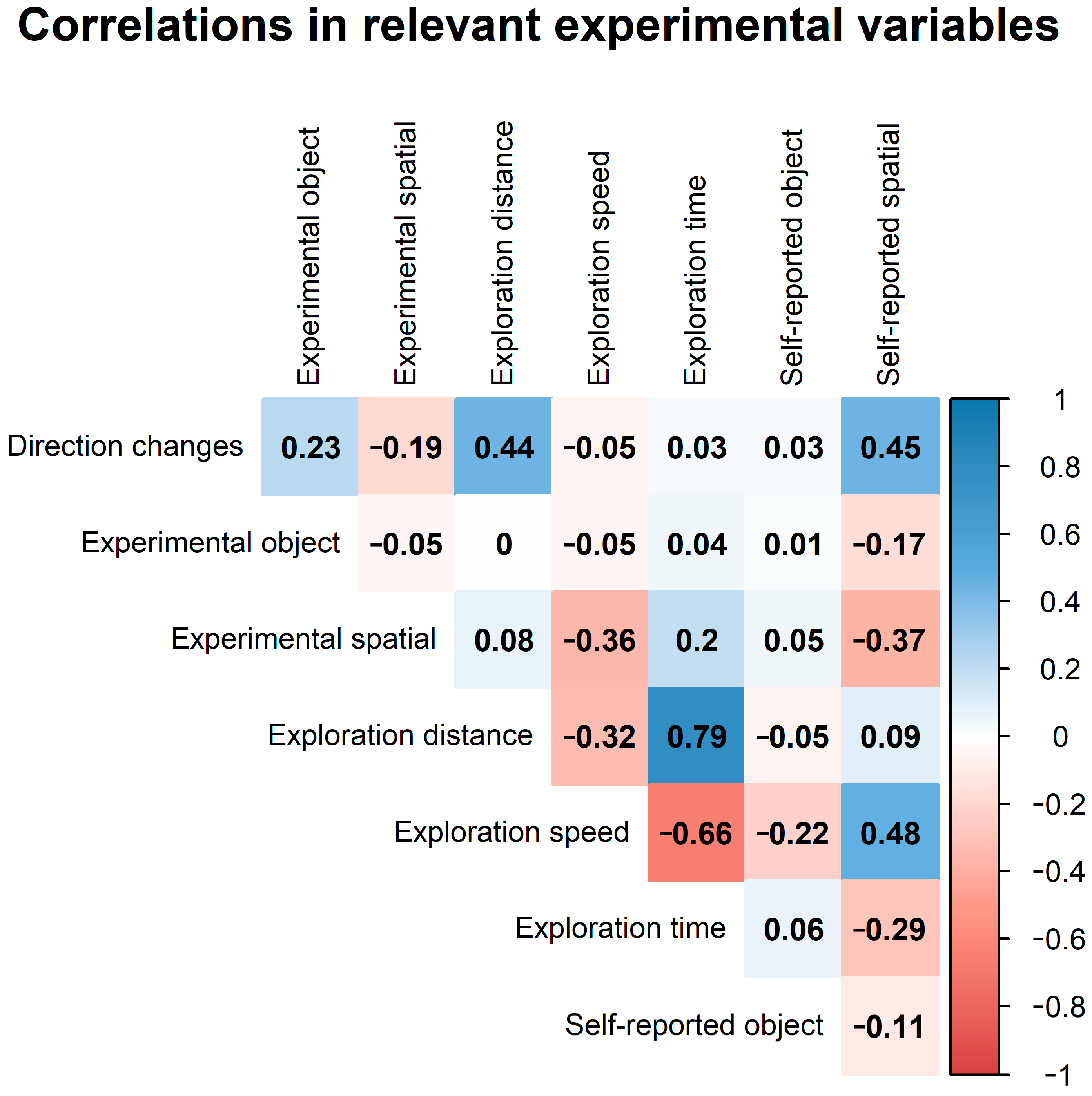

4.1.3. Statistical Analysis of User Logs and Mental Measurements

- exploration time (the total time a participant spent in the environment, in seconds)

- time spent pausing (the amount of time spent not walking, in seconds)

- average exploration speed (walking/pausing time considered, in metres per second)

- travel distance (the overall distance a participant travelled, in metres)

- number of stops (the number of times a participant paused exploration)

- number of keypresses (raw movement interface usage)

- number of direction changes (the number of times a participant changed their keyboard-driven movement direction)

- self-reported OSIVQ object score (on a 0–5 scale)

- self-reported OSIVQ spatial score (on a 0–5 scale)

- Object Visual Recognition Test (on a 0–10 scale)

- Spatial Relations Identification Task (on a 0–10 scale)
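The trajectory measures above can be derived from a movement log. The following is a minimal sketch under explicit assumptions that the text does not confirm: samples are hypothetical `(time, x, z, held_keys)` tuples on the ground plane; an interval counts as pausing when no movement key is held at its start; average speed is travel distance divided by walking (non-pausing) time; a stop is a transition from some held keys to none; a keypress is a key that newly appears in the held set; and a direction change is any change of the held key combination while moving.

```python
import math
from typing import FrozenSet, Sequence, Tuple

# Hypothetical sample format: (time in s, x in m, z in m, movement keys held)
Sample = Tuple[float, float, float, FrozenSet[str]]


def exploration_metrics(samples: Sequence[Sample]) -> dict:
    """Derive exploration measures from time-ordered samples (assumed definitions)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    exploration_time = samples[-1][0] - samples[0][0]
    pausing_time = 0.0
    distance = 0.0
    for (t0, x0, z0, keys0), (t1, x1, z1, _) in zip(samples, samples[1:]):
        distance += math.hypot(x1 - x0, z1 - z0)  # ground-plane path length
        if not keys0:                             # no movement key held: pausing
            pausing_time += t1 - t0
    walking_time = exploration_time - pausing_time

    stops = direction_changes = 0
    keypresses = len(samples[0][3])               # keys already held at the start
    for prev, cur in zip(samples, samples[1:]):
        k_prev, k_cur = prev[3], cur[3]
        keypresses += len(k_cur - k_prev)         # keys newly pressed
        if k_prev and not k_cur:
            stops += 1                            # walking -> pausing
        elif k_prev and k_cur and k_prev != k_cur:
            direction_changes += 1                # different key combination
    return {
        "exploration_time_s": exploration_time,
        "pausing_time_s": pausing_time,
        "travel_distance_m": distance,
        "average_speed_m_s": distance / walking_time if walking_time > 0 else 0.0,
        "stops": stops,
        "keypresses": keypresses,
        "direction_changes": direction_changes,
    }
```

Different operational definitions (for example, detecting pauses from displacement rather than from held keys) would change the values, so the definitions should be fixed before comparing participants.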

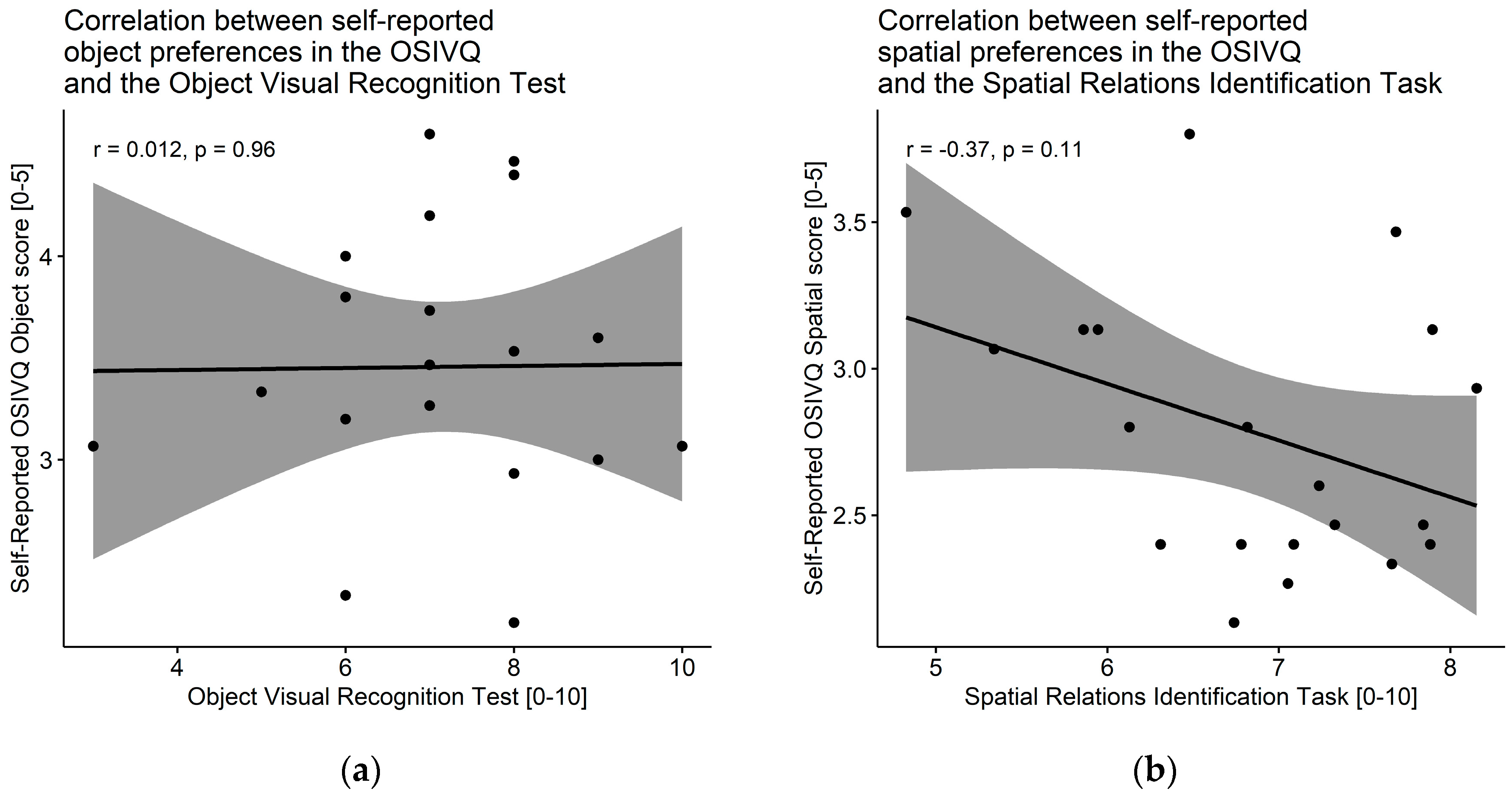

4.2. Correlation Analyses

4.2.1. Self-Reported OSIVQ Object Score and Object Visual Recognition Test

4.2.2. Self-Reported OSIVQ Spatial Score and Spatial Relations Identification Task

5. Discussion and Conclusions

5.1. Methods for Analyzing and Visualizing User Strategies

5.2. Exploration of User Activities and Strategies

5.3. Impact of Findings

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Spatial Relations Identification Task

- How tall is the ladder in the background?

- How tall is the tree stump?

- How tall is the door?

- How tall is the other ladder?

- How far away from you is the chair?

- How far away is the doorway?

- What is the distance between the chair and the flag with the skull?

- How far away is the chair from the tree stump?

- How high up from the ground are the two flags on the battlements?

- (This is the second value for the previous question, which asks for two values)

Appendix B. Object Visual Recognition Test


| Variable | Sex | Mean | Std. Dev. | Median | 1st Qu. | 3rd Qu. | Shapiro–Wilk Test | p-Value | Wilcoxon W | Cohen’s d |
|---|---|---|---|---|---|---|---|---|---|---|
| Exploration Time [s] | M | 316.2 | 141.27 | 314 | 216.8 | 429 | 0.78 | 0.03 * | 21.5 | 1.15 |
| | F | 475.2 | 136.03 | 450.5 | 371 | 600.8 | 0.11 | | | |
| Time Spent Pausing [s] | M | 48.3 | 41.65 | 47 | 11 | 70 | 0.4 | 0.0001 ** | 4 | 2.30 |
| | F | 184.4 | 72.76 | 187.5 | 124.2 | 244.5 | 0.17 | | | |
| Exploration Speed [m/s] | M | 4.47 | 0.26 | 4.55 | 4.31 | 4.66 | 0.36 | 0.012 * | 83 | 1.33 |
| | F | 4.09 | 0.31 | 4.05 | 3.91 | 4.32 | 0.85 | | | |
| Direction Changes [Count] | M | 377.4 | 309 | 316.5 | 246.2 | 426.2 | 0.89 | 0.019 * | 81.5 | 1.12 |
| | F | 111.8 | 133.29 | 54 | 19 | 173.8 | 0.8 | | | |
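The Cohen’s d values in the table comparing men and women are consistent with dividing the difference of the group means by the root mean square of the two standard deviations (which equals the pooled SD when both groups are the same size). This formula is inferred from the reported numbers, not stated in the text; the sketch below recomputes d for the four rows from their means and standard deviations.

```python
import math


def cohens_d(mean1: float, sd1: float, mean2: float, sd2: float) -> float:
    """Cohen's d with the pooled SD taken as the root mean square of both SDs
    (equivalent to the usual pooled SD for equal group sizes)."""
    pooled_sd = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return abs(mean1 - mean2) / pooled_sd


# (mean M, SD M, mean F, SD F) copied from the table
rows = {
    "Exploration Time [s]": (316.2, 141.27, 475.2, 136.03),
    "Time Spent Pausing [s]": (48.3, 41.65, 184.4, 72.76),
    "Exploration Speed [m/s]": (4.47, 0.26, 4.09, 0.31),
    "Direction Changes [Count]": (377.4, 309, 111.8, 133.29),
}

for name, stats in rows.items():
    print(f"{name}: d = {cohens_d(*stats):.2f}")
# Exploration Time [s]: d = 1.15
# Time Spent Pausing [s]: d = 2.30
# Exploration Speed [m/s]: d = 1.33
# Direction Changes [Count]: d = 1.12
```

With unequal group sizes, the pooled SD would instead weight each variance by its degrees of freedom, which can shift d slightly.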

| Variable | VR Users | Mean | Std. Dev. | Median | 1st Qu. | 3rd Qu. | Shapiro–Wilk Test | p-Value | Wilcoxon W | Cohen’s d |
|---|---|---|---|---|---|---|---|---|---|---|
| Spatial Relations Identification Task [0–10] | Non-proficient | 6.12 | 0.88 | 5.95 | 5.6 | 6.76 | 0.85 | 0.009 ** | 6 | 0.01 |
| | Proficient | 7.37 | 0.59 | 7.37 | 6.99 | 7.86 | 0.6 | | | |
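The Wilcoxon W column in the group-comparison tables refers to a rank-sum test for two independent groups. A minimal sketch of the statistic follows, using the convention of R's `wilcox.test`: W is the rank sum of the first group minus n1(n1 + 1)/2, with tied values receiving average ranks. That the study used this exact convention is an assumption, and the sketch computes only the statistic, not its p-value.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_w(x: Sequence[float], y: Sequence[float]) -> float:
    """Rank-sum statistic W = R_x - n_x (n_x + 1) / 2 for independent samples."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    return rank_sum_x - len(x) * (len(x) + 1) / 2
```

W equals the number of (x, y) pairs in which the x value is larger, counting ties as one half, so it ranges from 0 to n1 * n2; values near either end indicate a strong separation between the groups.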

| Method | Preservation of Spatial Component | Attitude to Temporal Component | Suitable for Groups of Users | Statistical or Numerical Comparison | Dimensionality of Data |
|---|---|---|---|---|---|
| Gridded AoI | yes | aggregation | yes | yes | 2D or 3D |
| Statistics | no | aggregation or selected moments | yes | yes | 2D or 3D |
| Video | yes | preservation | only individual users | only visual | 3D |
| Views (screenshots) | yes | preservation | only individual users | only visual | 3D |
| Virtual trajectory | yes | preservation | only individual users | only visual | 2D or 3D |
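A gridded AoI, as characterized in the comparison of methods, keeps the spatial component of the logs while aggregating time, which is what allows data from several participants to be pooled and compared numerically. A minimal 2D sketch, assuming square cells of a hypothetical `cell_size` and a constant sampling interval (so that sample counts are proportional to dwell time):

```python
import math
from collections import Counter
from typing import Iterable, Tuple


def gridded_aoi(positions: Iterable[Tuple[float, float]], cell_size: float,
                origin: Tuple[float, float] = (0.0, 0.0)) -> Counter:
    """Count trajectory samples falling into each square grid cell.

    Positions from several participants can be concatenated to obtain a
    group-level aggregate; the time order of the samples is discarded.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    counts: Counter = Counter()
    ox, oz = origin
    for x, z in positions:
        # math.floor keeps negative coordinates in the correct cell
        cell = (math.floor((x - ox) / cell_size), math.floor((z - oz) / cell_size))
        counts[cell] += 1
    return counts
```

Adding a third coordinate to the cell key gives the 3D variant; dividing each count by the total number of samples turns the grid into a relative dwell-time map that is comparable across groups of different sizes.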

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ugwitz, P.; Juřík, V.; Herman, L.; Stachoň, Z.; Kubíček, P.; Šašinka, Č. Spatial Analysis of Navigation in Virtual Geographic Environments. Appl. Sci. 2019, 9, 1873. https://doi.org/10.3390/app9091873
