A Deep Learning Method for Bearing Fault Diagnosis through Stacked Residual Dilated Convolutions

Abstract

1. Introduction

- To support real-time fault diagnosis of rotating machinery, the model must diagnose continuously and quickly from vibration signals collected in real time [5].

- To be effective, the model must extract essential features and avoid spurious local features caused by ambient noise and fluctuations in working conditions [6].

- To perform well across working conditions, the model must generalize across different load and noise conditions [7].

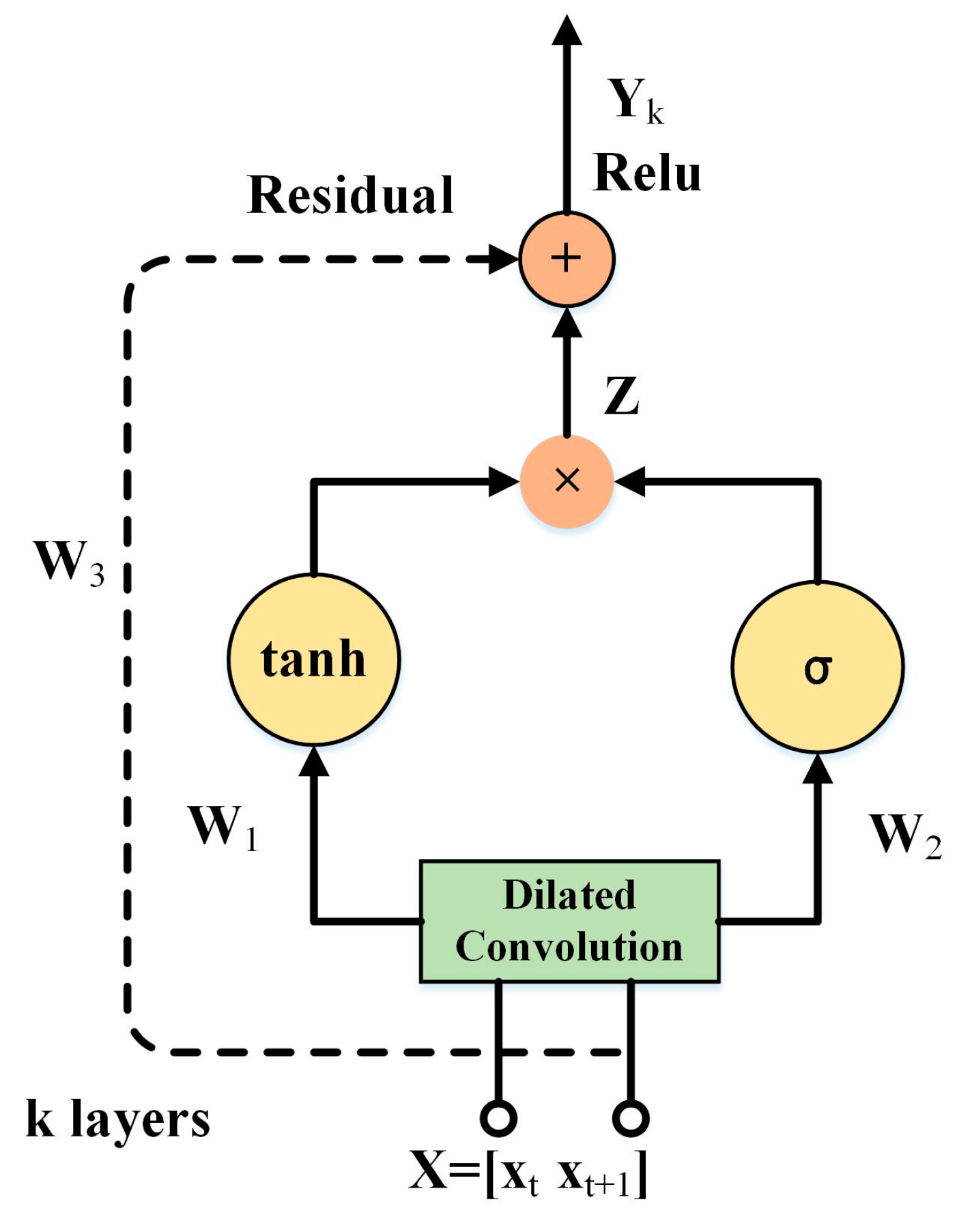

- A novel deep learning framework is proposed that integrates dilated convolution, the input gate structure of LSTM, and the residual network.

- The algorithm performs well in noisy environments by enlarging the receptive field and controlling the entry of information.

- The algorithm maintains high diagnostic accuracy across different workloads because it extracts essential features automatically and efficiently.
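The three ingredients named above can be combined in several ways. The following is a minimal NumPy sketch of one possible combination, not the layer definition used in this work: a dilated convolution produces candidate features, a sigmoid gate in the style of an LSTM input gate scales them, and an identity shortcut adds the input back. The function names, the single-channel setting, the zero padding, and the tanh/sigmoid pairing are all assumptions made for illustration.

```python
import numpy as np


def dilated_conv1d(x, w, dilation):
    """Zero-padded, length-preserving dilated 1-D convolution (single channel).

    Implemented as cross-correlation, as in most deep learning libraries.
    """
    n, k = len(x), len(w)
    span = (k - 1) * dilation          # extent covered by the dilated kernel
    left = span // 2
    padded = np.pad(np.asarray(x, dtype=float), (left, span - left))
    out = np.zeros(n)
    for i, w_i in enumerate(w):        # tap i reads the input i * dilation samples later
        out += w_i * padded[i * dilation : i * dilation + n]
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gated_residual_block(x, w_feat, w_gate, dilation):
    """Hypothetical block: identity shortcut around a gated dilated convolution."""
    feat = np.tanh(dilated_conv1d(x, w_feat, dilation))   # candidate features
    gate = sigmoid(dilated_conv1d(x, w_gate, dilation))   # input-gate-style mask in (0, 1)
    return np.asarray(x, dtype=float) + gate * feat       # residual connection


# A unit impulse shows which input positions a 3-tap kernel with dilation 2 touches.
impulse = np.zeros(11)
impulse[5] = 1.0
taps = np.nonzero(dilated_conv1d(impulse, np.ones(3), dilation=2))[0]
print(taps)
```

With dilation 2 the three taps land two samples apart, so the kernel spans five input samples while still using only three weights. The gate decides, per position, how much of the new feature is added to the shortcut path.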

2. SRDCNN-Based Intelligent Fault Diagnosis Method

2.1. Framework of SRDCNN Model

2.2. Residual Dilated Convolutional Layer

2.2.1. Dilated Convolution

2.2.2. Input Gate Structure of LSTM

2.2.3. Residual Network

3. Validation of Proposed SRDCNN Model

3.1. Data Description

3.2. Case Study I: Comparison with Traditional Deep Convolutional Neural Networks

3.3. Case Study II: Performance under Different Ambient Noise

3.4. Case Study III: Performance across Different Working Loads

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Randall, R.B.; Antoni, J. Rolling element bearing diagnostics—A tutorial. Mech. Syst. Signal Process. 2011, 25, 485–520.

- Duan, J.; Shi, T.; Zhou, H.; Xuan, J.; Zhang, Y. Multiband Envelope Spectra Extraction for Fault Diagnosis of Rolling Element Bearings. Sensors 2018, 18, 1466.

- He, M.; He, D. A Deep Learning Based Approach for Bearing Fault Diagnosis. IEEE Trans. Ind. Appl. 2017, 53, 3057–3065.

- Li, B.; Chow, M.-Y.; Tipsuwan, Y.; Hung, J. Neural-network-based motor rolling bearing fault diagnosis. IEEE Trans. Ind. Electron. 2000, 47, 1060–1069.

- Irfan, M.; Saad, N.; Ibrahim, R.; Asirvadam, V.S. An on-line condition monitoring system for induction motors via instantaneous power analysis. J. Mech. Sci. Technol. 2015, 29, 1483–1492.

- Dong, G.; Chen, J. Noise resistant time frequency analysis and application in fault diagnosis of rolling element bearings. Mech. Syst. Signal Process. 2012, 33, 212–236.

- Zhu, Z.; Peng, G.; Chen, Y.; Gao, H.; Chen, Y. A convolutional neural network based on a capsule network with strong generalization for bearing fault diagnosis. Neurocomputing 2019, 323, 62–75.

- Zhang, Y.; Randall, R. Rolling element bearing fault diagnosis based on the combination of genetic algorithms and fast kurtogram. Mech. Syst. Signal Process. 2009, 23, 1509–1517.

- Wang, L.; Liu, Z.; Miao, Q.; Zhang, X. Time–frequency analysis based on ensemble local mean decomposition and fast kurtogram for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2018, 103, 60–75.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

- Collobert, R.; Weston, J. A unified architecture for natural language processing: Deep neural networks with multitask learning. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 160–167.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.R.; Jaitly, N.; Sainath, T. Deep neural networks for acoustic modeling in speech recognition. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Lu, C.; Wang, Z.-Y.; Qin, W.-L.; Ma, J. Fault diagnosis of rotary machinery components using a stacked denoising autoencoder-based health state identification. Signal Process. 2017, 130, 377–388.

- Zhang, W.; Peng, G.; Li, C.; Chen, Y.; Zhang, Z.; Wang, X. A New Deep Learning Model for Fault Diagnosis with Good Anti-Noise and Domain Adaptation Ability on Raw Vibration Signals. Sensors 2017, 17, 425.

- Jing, L.; Zhao, M.; Li, P.; Xu, X. A convolutional neural network based feature learning and fault diagnosis method for the condition monitoring of gearbox. Measurement 2017, 111, 1–10.

- Zhang, W.; Li, C.; Peng, G.; Chen, Y.; Zhang, Z. A deep convolutional neural network with new training methods for bearing fault diagnosis under noisy environment and different working load. Mech. Syst. Signal Process. 2018, 100, 439–453.

- Zhuang, Z.; Qin, W. Intelligent fault diagnosis of rolling bearing using one-dimensional multi-scale deep convolutional neural network based health state classification. In Proceedings of the IEEE 15th International Conference on Networking, Sensing and Control, Zhuhai, China, 27–29 March 2018; pp. 1–6.

- Lu, C.; Wang, Z.; Zhou, B. Intelligent fault diagnosis of rolling bearing using hierarchical convolutional network based health state classification. Adv. Eng. Inform. 2017, 32, 139–151.

- Wen, L.; Li, X.; Gao, L.; Zhang, Y. A New Convolutional Neural Network-Based Data-Driven Fault Diagnosis Method. IEEE Trans. Ind. Electron. 2018, 65, 5990–5998.

- Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning requires rethinking generalization. arXiv 2016, arXiv:1611.03530.

- Krueger, D.; Ballas, N.; Jastrzebski, S.; Arpit, D.; Courville, A. Deep Nets Don’t Learn via Memorization. In Proceedings of the International Conference of Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

- Yang, Y.; Liao, Y.; Meng, G.; Lee, J. A hybrid feature selection scheme for unsupervised learning and its application in bearing fault diagnosis. Expert Syst. Appl. 2011, 38, 11311–11320.

- Rad, A.R.; Banazadeh, M. Probabilistic Risk-Based Performance Evaluation of Seismically Base-Isolated Steel Structures Subjected to Far-Field Earthquakes. Buildings 2018, 8, 128.

- Tajammolian, H.; Khoshnoudian, F.; Rad, A.R.; Loghman, V. Seismic Fragility Assessment of Asymmetric Structures Supported on TCFP Bearings Subjected to Near-field Earthquakes. Structures 2018, 13, 66–78.

- Han, Y.; Tang, B.; Deng, L. An enhanced convolutional neural network with enlarged receptive fields for fault diagnosis of planetary gearboxes. Comput. Ind. 2019, 107, 50–58.

- Pan, H.; He, X.; Tang, S.; Meng, F. An Improved Bearing Fault Diagnosis Method using One-Dimensional CNN and LSTM. J. Mech. Eng. 2018, 64, 443–452.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Oord, A.V.D.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kavukcuoglu, K. Wavenet: A generative model for raw audio. arXiv 2016, arXiv:1609.03499.

- Kalchbrenner, N.; Espeholt, L.; Simonyan, K.; Oord, A.V.D.; Graves, A.; Kavukcuoglu, K. Neural machine translation in linear time. arXiv 2016, arXiv:1610.10099.

- Borovykh, A.; Bohte, S.; Oosterlee, C.W. Conditional time series forecasting with convolutional neural networks. arXiv 2017, arXiv:1703.04691.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Smith, W.A.; Randall, R.B. Rolling element bearing diagnostics using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Process. 2015, 64, 100–131.

- Maaten, L.V.D.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Dataset | Split | None (0 mil) | Ball (7 mil) | Ball (14 mil) | Ball (21 mil) | Inner Ring (7 mil) | Inner Ring (14 mil) | Inner Ring (21 mil) | Outer Ring (7 mil) | Outer Ring (14 mil) | Outer Ring (21 mil) | Load (hp) | Speed (rpm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dataset A | Train | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 0 | 1797 |
| Dataset A | Test | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 0 | 1797 |
| Dataset B | Train | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 1 | 1772 |
| Dataset B | Test | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 1 | 1772 |
| Dataset C | Train | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 2 | 1750 |
| Dataset C | Test | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 2 | 1750 |
| Dataset D | Train | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 3 | 1730 |
| Dataset D | Test | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 3 | 1730 |

| Model | 1d-DCNN | 2d-DCNN | LSTM-CNN | SRDCNN |
|---|---|---|---|---|
| Input layer | 1024 × 1 | 32 × 32 | 1024 × 1 | 1024 × 1 |
| Layer-1 | Conv1d (16 × 1, 16) | Conv2d (5 × 5, 16) | Conv1d (32 × 1, 20) | RDConv1d (64 × 1, 32) |
| Layer-2 | Pooling (2 × 1, 2) | Pooling (2 × 2, 2) | Pooling (2 × 2, 2) | RDConv1d (32 × 1, 32) |
| Layer-3 | Conv1d (3 × 1, 32) | Conv2d (5 × 5, 32) | LSTM (100) | RDConv1d (16 × 1, 64) |
| Layer-4 | Pooling (2 × 1, 2) | Pooling (2 × 2, 2) | Dense (10) | RDConv1d (8 × 1, 64) |
| Layer-5 | Conv1d (3 × 1, 64) | Flatten | | Flatten |
| Layer-6 | Pooling (2 × 1, 2) | Dense (100) | | Dense (100) |
| Layer-7 | Conv1d (3 × 1, 64) | Dense (10) | | Dense (10) |
| Layer-8 | Pooling (2 × 1, 2) | | | |
| Layer-9 | Conv1d (3 × 1, 64) | | | |
| Layer-10 | Pooling (2 × 1, 2) | | | |
| Layer-11 | Flatten | | | |
| Layer-12 | Dense (100) | | | |
| Layer-13 | Dense (10) | | | |
| Mean accuracy | 0.986 | 0.992 | 0.978 | 0.994 |
| Standard deviation | 0.0064 | 0.0043 | 0.0071 | 0.0015 |
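To give a feel for how dilation interacts with the kernel sizes in the SRDCNN column, the sketch below computes the receptive field of a stack of stride-1 convolutions. It assumes that an entry such as RDConv1d (64 × 1, 32) means a kernel of length 64 with 32 filters. The dilation rates are hypothetical, since the table does not list them, and the helper name `receptive_field` is introduced only for this example.

```python
def receptive_field(kernel_sizes, dilations):
    """Receptive field, in input samples, of stacked stride-1 convolutions."""
    return 1 + sum((k - 1) * d for k, d in zip(kernel_sizes, dilations))


# Kernel lengths of the four RDConv1d layers, read from the SRDCNN column.
kernels = [64, 32, 16, 8]

# Assumption: these dilation rates are illustrative and not taken from the table.
plain = receptive_field(kernels, [1, 1, 1, 1])
dilated = receptive_field(kernels, [1, 2, 4, 8])
print(plain, dilated)
```

Under these assumed rates the same four layers cover roughly twice as many of the 1024 input samples as they would without dilation, with no additional weights.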

| Description | Source Dataset | Target Datasets |
|---|---|---|
| Dataset details | Training set B | Test set C, Test set D |
| Dataset details | Training set C | Test set B, Test set D |
| Dataset details | Training set D | Test set B, Test set C |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhuang, Z.; Lv, H.; Xu, J.; Huang, Z.; Qin, W. A Deep Learning Method for Bearing Fault Diagnosis through Stacked Residual Dilated Convolutions. Appl. Sci. 2019, 9, 1823. https://doi.org/10.3390/app9091823
