SURF-BRISK–Based Image Infilling Method for Terrain Classification of a Legged Robot

Abstract

1. Introduction

2. Materials and Methods

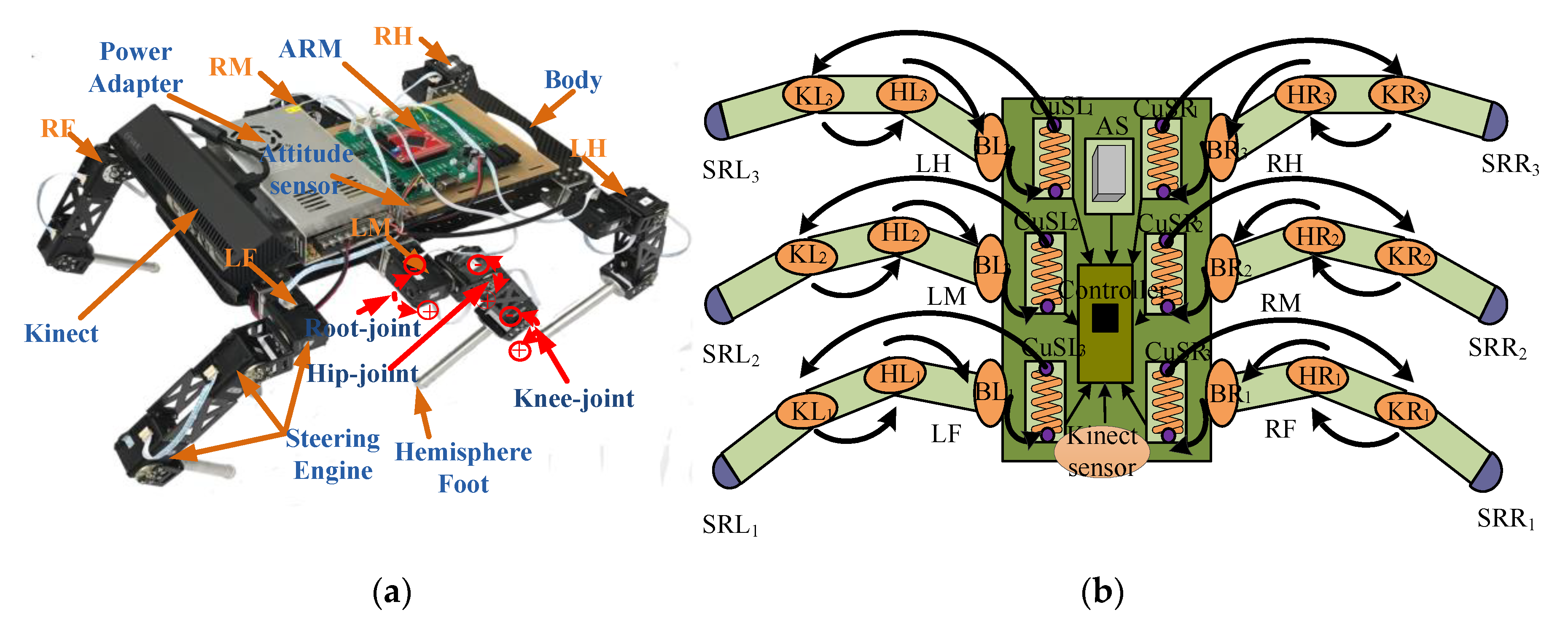

2.1. Hexapod Walking Robot: SmartHex

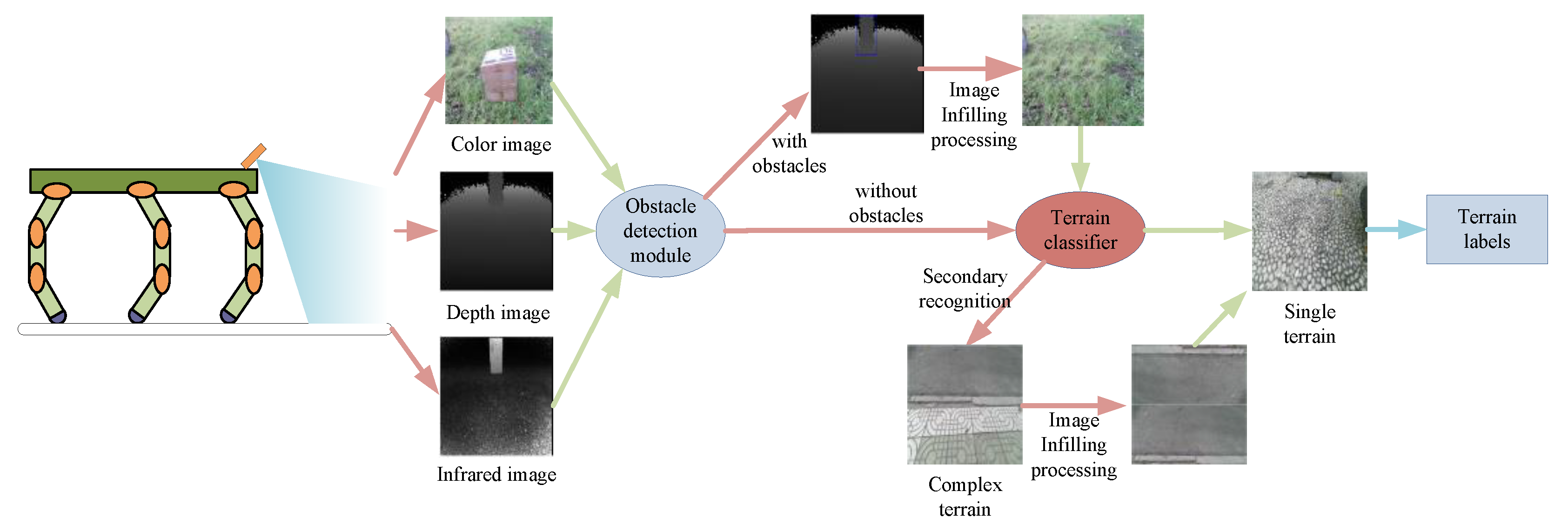

2.2. Terrain Classification Methodology

2.2.1. Obstacle Detection Module

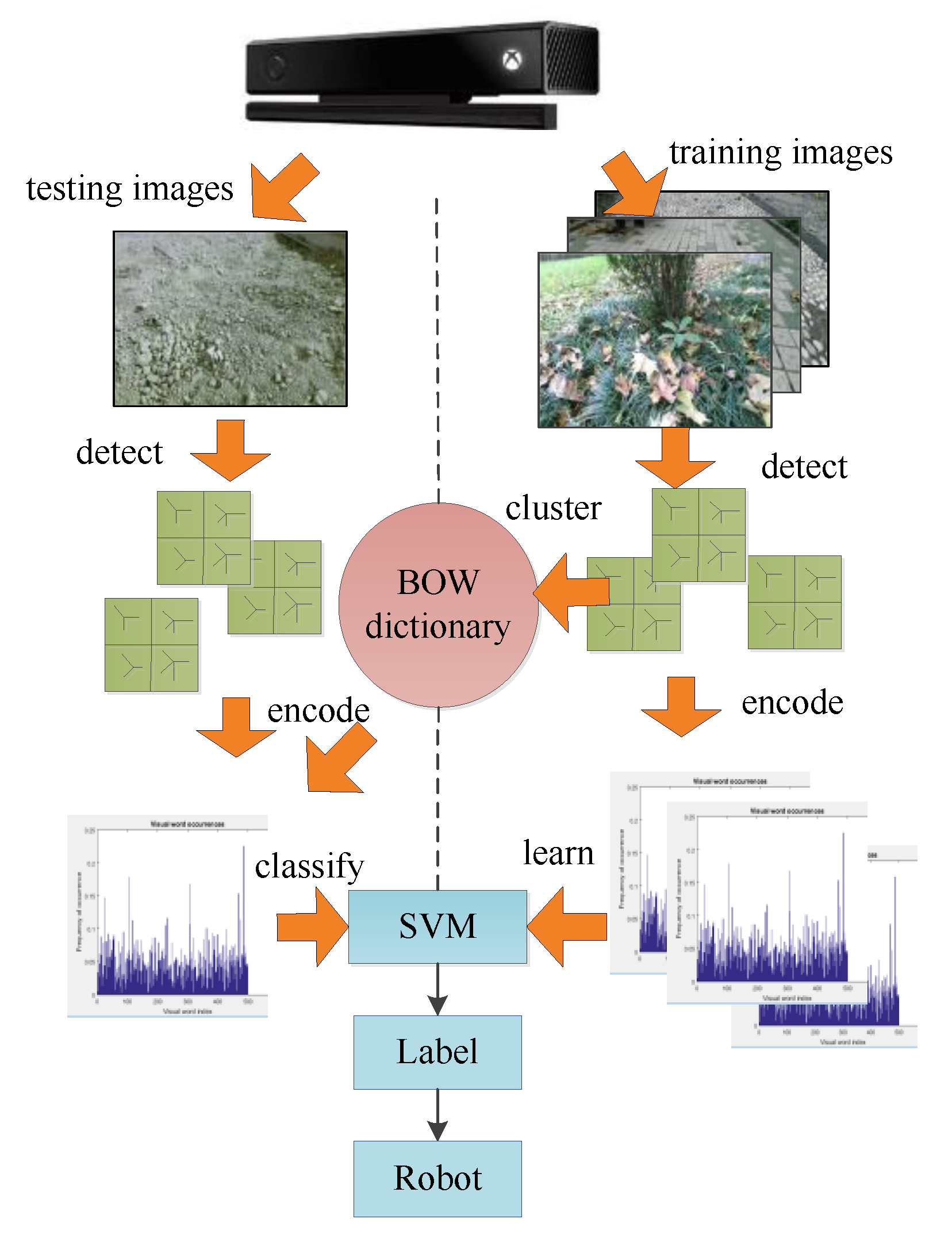

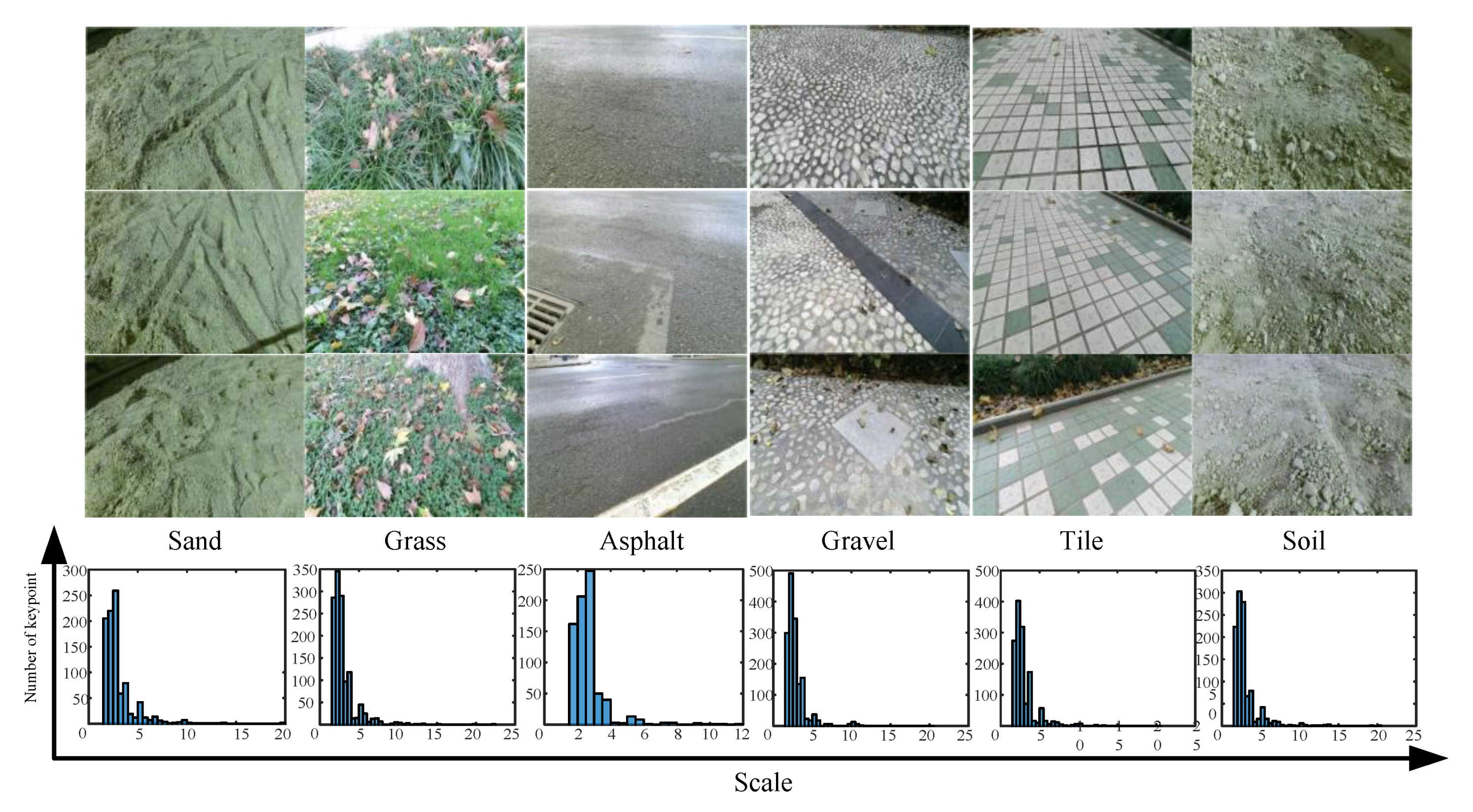

2.2.2. Terrain Classification Module

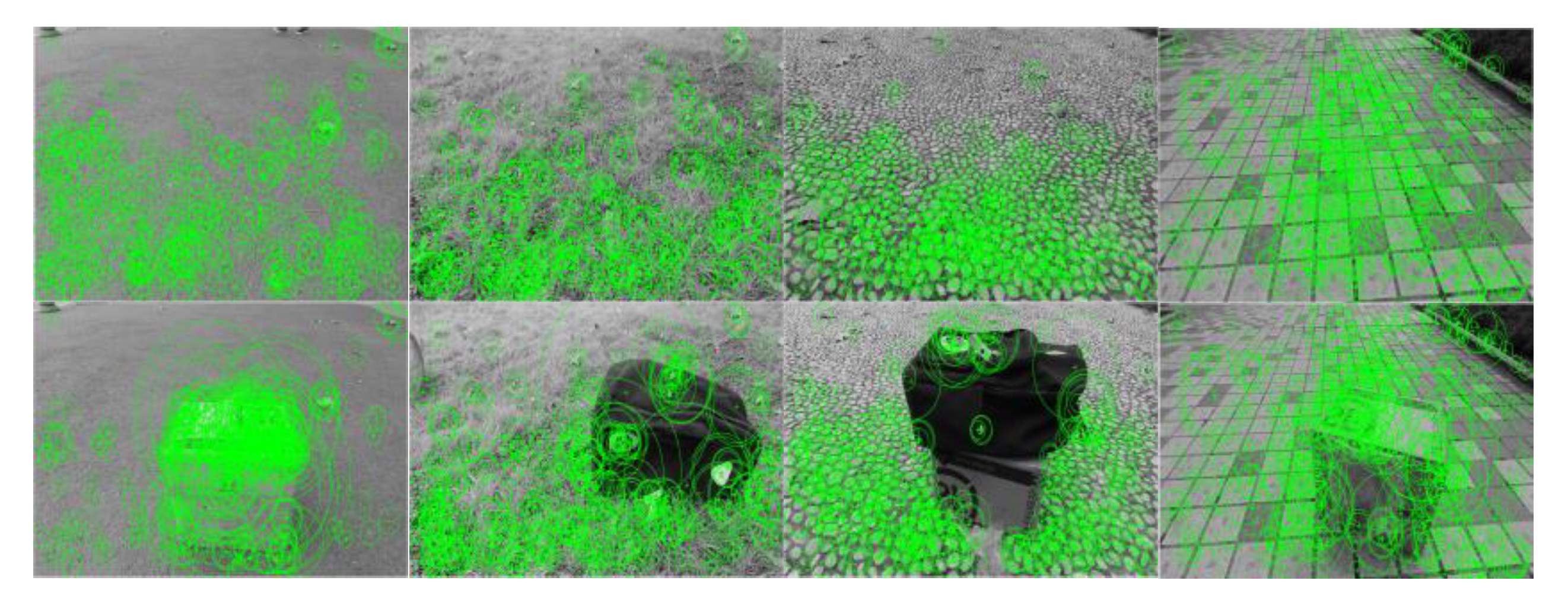

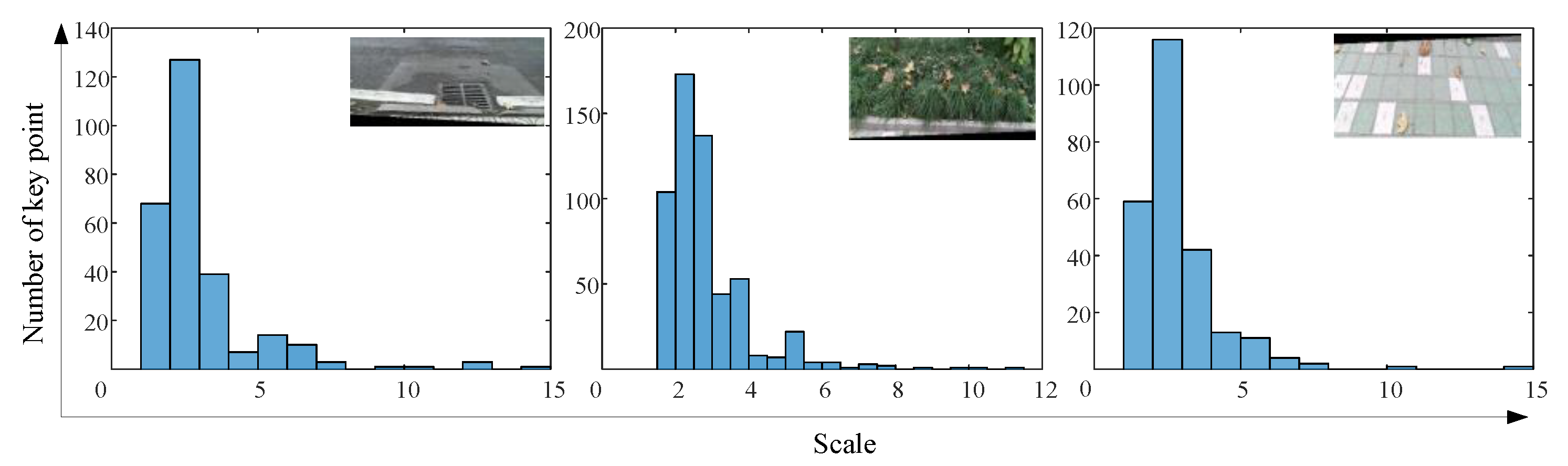

A. Points of Interest Extracted by SURF

B. Descriptors by BRISK

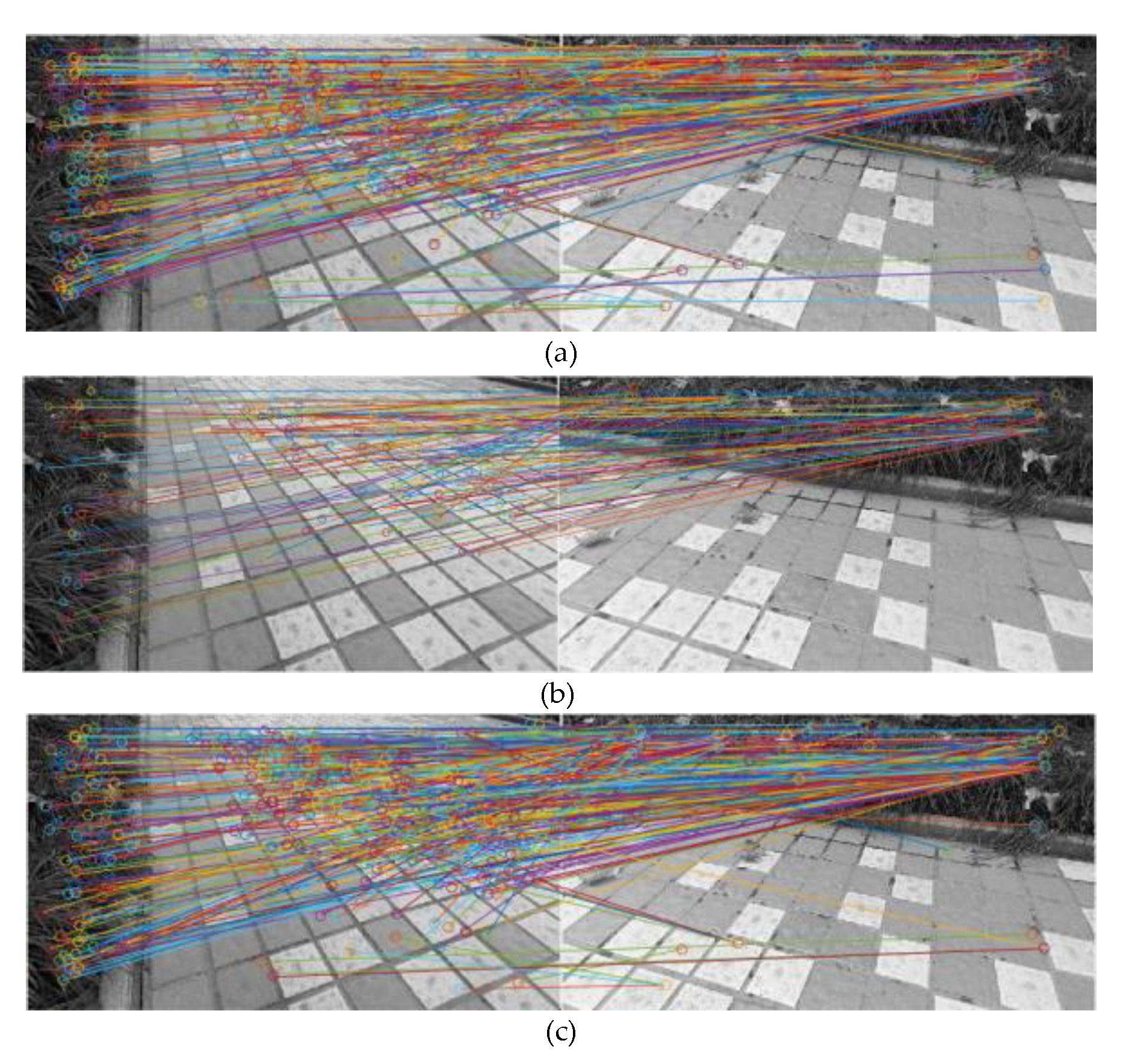

C. Local Feature Matching

D. BoW Model and SVM

2.3. Complex Terrain Recognition

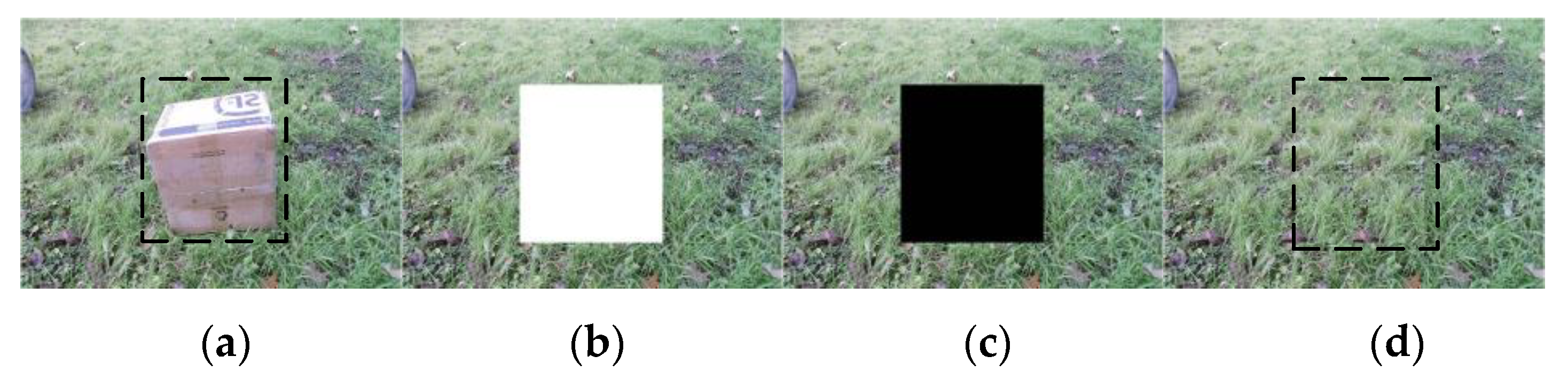

2.3.1. Image Local Infilling for Terrain with Obstacles

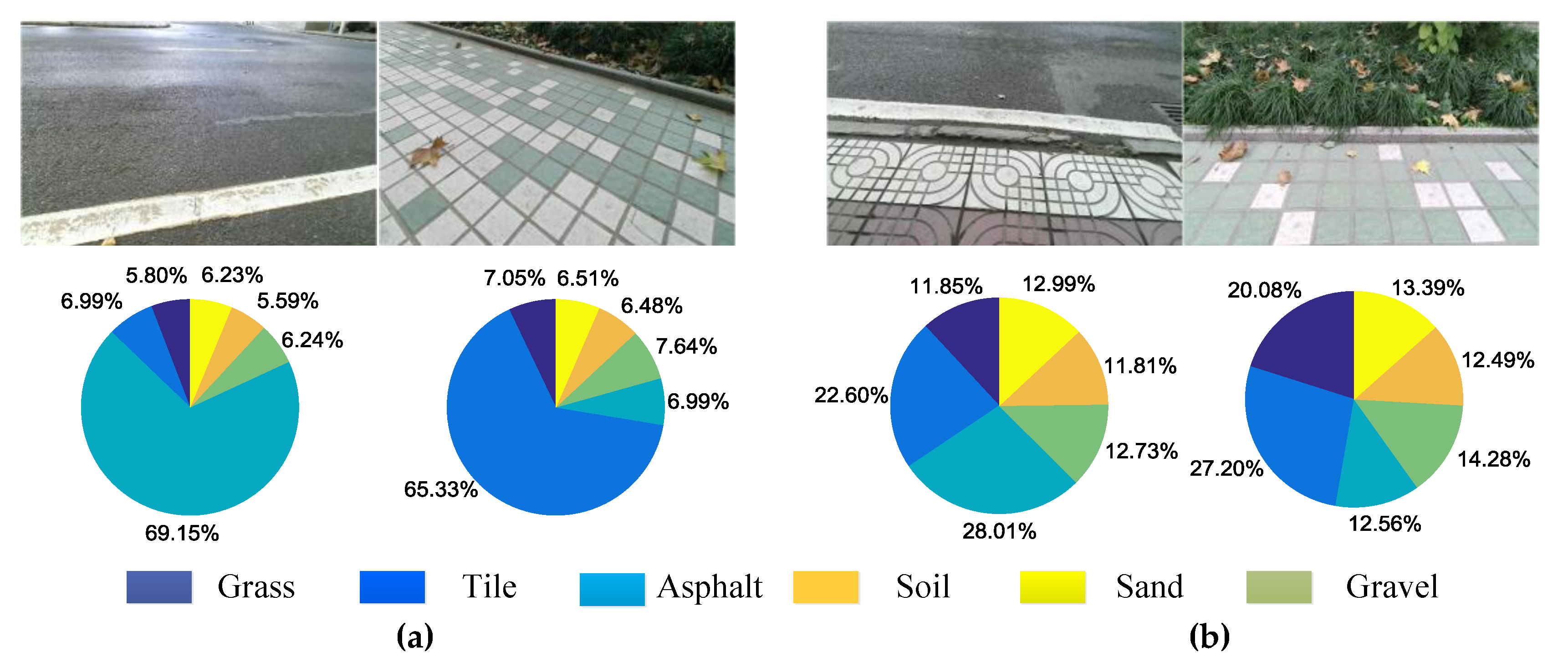

2.3.2. Image Infilling for Mixed Terrain

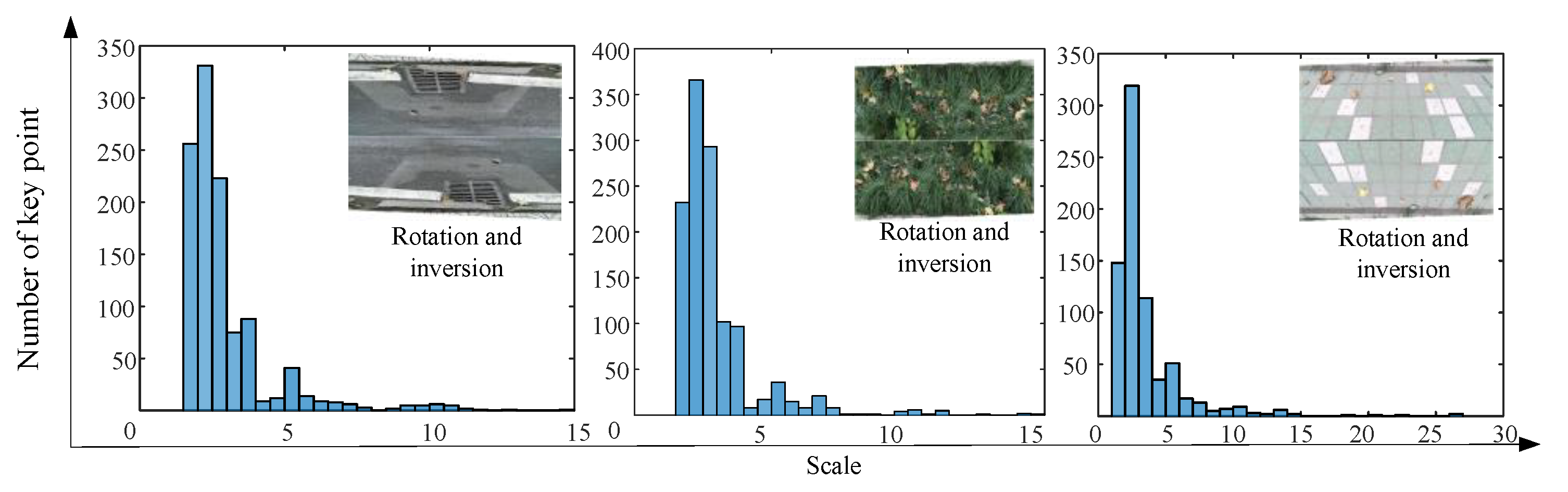

3. Results

3.1. Complex Terrain

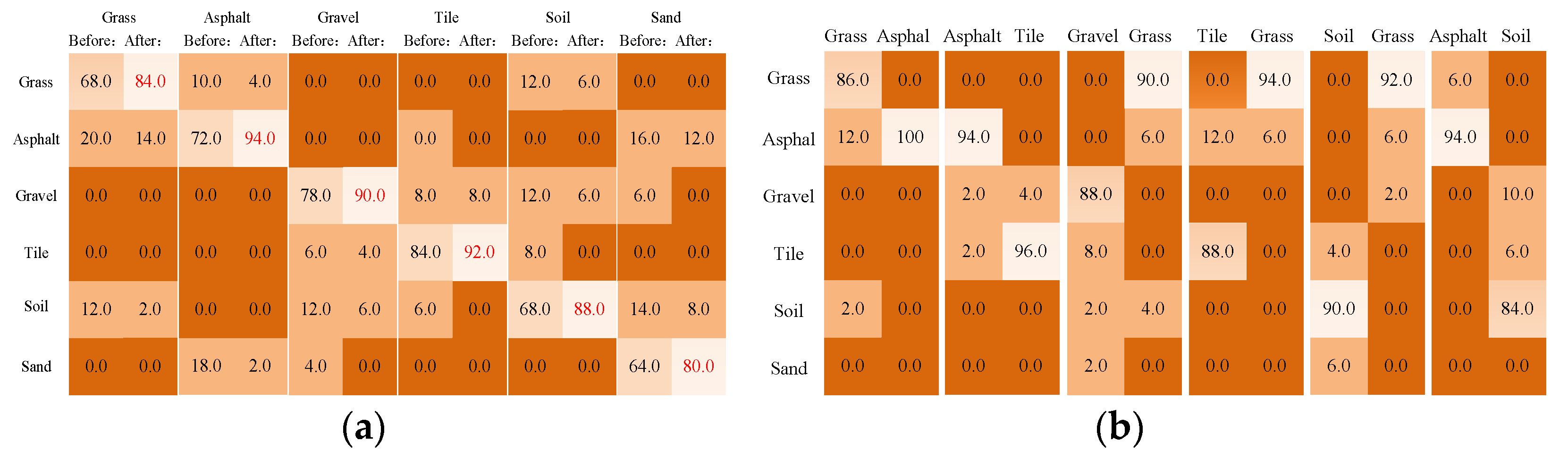

3.2. Robot Platform Application

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Yu, Z.; Chen, J.; Dai, Z. Study on Forces Simulation of Gecko Robot Moving on the Ceiling. Adv. Intell. Soft Comput. 2012, 125, 81–88.
2. Abbaspour, R. Design and Implementation of Multi-Sensor Based Autonomous Minesweeping Robot. In Proceedings of the International Congress on Ultra Modern Telecommunications & Control Systems & Workshops, Moscow, Russia, 18–20 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 443–449.
3. Ayers, J. Localization and Self-Calibration of a Robot for Volcano Exploration. In Proceedings of the ICRA 04 2004 IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 1, pp. 586–591.
4. Zhao, S.D.; Yuh, J.K. Experimental Study on Advanced Underwater Robot Control. IEEE Trans. Robot. 2005, 21, 695–703.
5. Cui, Y.; Gejima, Y.; Kobayashi, T. Study on Cartesian-Type Strawberry-Harvesting Robot. Sens. Lett. 2013, 11, 1223–1228.
6. Semler, L.; Furst, J. Wavelet-Based Texture Classification of Tissues in Computed Tomography. In Proceedings of the IEEE International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 265–270.
7. Paschos, G. Perceptually uniform color spaces for color texture analysis: An empirical evaluation. IEEE Trans. Image Proc. 2001, 10, 932–937.
8. Liu, X.; Wang, D. Texture classification using spectral histograms. IEEE Trans. Image Proc. 2003, 6, 661–670.
9. Pietikäinen, M.; Mäenpää, T.; Viertola, J. Color Texture Classification with Color Histograms and Local Binary Patterns; IWTAS: New York, NY, USA, 2002; pp. 109–112.
10. Zenker, S.; Aksoy, E.E.; Goldschmidt, D. Visual Terrain Classification for Selecting Energy Efficient Gaits of a Hexapod Robot. In Proceedings of the IEEE/ASME International Conference, Wollongong, Australia, 9–12 July 2013; IEEE: Piscataway, NJ, USA; Advanced Intelligent Mechatronics (AIM): Marseille, France, 2013; pp. 577–584.
11. Khan, Y.; Komma, P.; Bohlmann, K. Grid-Based Visual Terrain Classification for Outdoor Robots Using Local Features. In Proceedings of the IEEE Symposium on Computational Intelligence in Vehicles and Transportation Systems, Paris, France, 11–15 April 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 16–22.
12. Kim, J.; Kim, D.; Lee, D. Non-contact Terrain Classification for Autonomous Mobile Robot. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Guilin, China, 19–23 December 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 824–829.
13. Ojeda, L.; Borenstein, J.; Witus, G.; Karlsen, R. Terrain characterization and classification with a mobile robot. J. Field Robot. 2006, 9, 103–122.
14. Larson, A.C.; Voyles, R.M.; Bae, J. Evolving Gaits for Increased Selectivity in Terrain Classification. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Sheraton Hotel and Marina, San Diego, CA, USA, 29 October–2 November 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 3691–3696.
15. Hoepflingen, M.A.; Remy, C.D.; Hutter, M. Terrain Classification for Legged Robots. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA 2010, Anchorage, AK, USA, 3–7 May 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2828–2833.
16. Jitpakdee, R.; Maneewam, T. Neural Networks Terrain Classification Using Inertial Measurement Unit for an Autonomous Vehicle. In Proceedings of the SICE Annual Conference, Tokyo, Japan, 20–22 August 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 554–558.
17. Filitchkin, P.; Byl, K. Feature-Based Terrain Classification for LittleDog. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1387–1392.
18. Zuo, L.; Wang, M.; Yang, Y. Complex Terrain Classification algorithm Based on Multi-Sensors Fusion. In Proceedings of the 32nd Chinese Control Conference (CCC), Xi’an, China, 26–28 July 2013; Inspec Accession Number: 13886652; pp. 5722–5727.
19. Ugur, E.; Dogar, M.R.; Cakmak, M.; Sahin, E. The learning and use of traversability affordance using range images on a mobile robot. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; IEEE: Piscataway, NJ, USA, 2007.
20. Zhu, Y.G.; Jin, B. Trajectory Correction and Locomotion Analysis of a Hexapod Walking Robot with Semi-Round Rigid Feet. Sensors 2016, 9, 1392.
21. Zhu, Y.G.; Jin, B. Compliance control of a legged robot based on improved adaptive control: Method and experiments. Int. J. Robot. Autom. 2016, 5, 366–373.
22. Zhu, Y.G.; Wu, Y.S.; Liu, Q.; Guo, T.; Qin, R.; Hui, J.Z. A backward control based on σ-Hopf oscillator with decoupled parameters for smooth locomotion of bio-inspired legged robot. Robot. Auton. Syst. 2018, 106, 165–178.
23. Zhu, Y.G.; Guo, T.; Liu, Q.; Zhu, Q.; Zhao, X.; Jin, B. Turning and Radius Deviation Correction for a Hexapod Walking Robot Based on an Ant-Inspired Sensory Strategy. Sensors 2017, 17, 2710.
24. Discant, A.; Rogozan, A. Sensors for Obstacle Detection a Survey. In Proceedings of the 30th International Spring Seminar on the Electronics Technology, Cluj-Napoca, Romania, 9–13 May 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 100–105.
25. Zhu, Y.G.; Yi, B.M.; Guo, T. A Simple Outdoor Environment Obstacle Detection Method Based on Information Fusion of Depth and Infrared. J. Robot. 2016, 9, 1–10.
26. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 5, 603–619.
27. Comaniciu, D. Rr Vision and Pattern Recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 15 June 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 142–149.
28. Qin, L. Category Related BoW Model for Image Classification. J. Inf. Comput. Sci. 2015, 9, 3547–3554.
29. Yadav, S.; Shukla, S. Analysis of k-Fold Cross-Validation over Hold-Out Validation on Colossal Datasets for Quality Classification. In Proceedings of the IEEE International Conference on Advanced Computing, Bhimavaram, India, 27–28 February 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 71–83.
30. Leutenegger, S.; Chli, M.; Siegwart, R. BRISK: Binary Robust Invariant Scalable Keypoints. In Proceedings of the 2011 IEEE International Conference on Computer Vision-ICCV, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; Volume 11, pp. 2548–2555.
31. Cui, Z.; Li, Z. Two kinds of improved template matching recognition algorithm. Comput. Eng. Des. 2006, 6, 1083–1084.
32. Fei-Fei, L.; Perona, P. A Bayesian Hierarchical Model for Learning Natural Scene Categories. In Proceedings of the Conference on IEEE Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–26 June 2005; IEEE Computer Society: Washington, DC, USA, 2005; pp. 524–531.
33. Wang, Q.; Wang, C. Review of k-means algorithm for clustering. Electr. Des. Eng. 2014, 6, 479–484.
34. Chapelle, O. Training a Support Vector Machine in the Primal. Neural Comput. 2007, 19, 1155–1178.
35. Ren, X.; Malik, J. Learning a Classification Model for Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; IEEE: Piscataway, NJ, USA, 2003; pp. 11–17.
36. Song, X.; Zhou, L.; Li, Z. Review on superpixel methods in image segmentation. J. Image Gr. 2015, 20, 599–608.
37. Lee, S.Y.; Kwak, D.M. A terrain Classification Method for UGV Autonomous Navigation Based on SURF. In Proceedings of the International Conference on Ubiquitous Robots & Ambient Intelligence, Incheon, Korea, 23–26 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 303–306.
38. Ordonez, C. Terrain identification for RHex-type robots. Unmanned Syst. Technol. XV 2013, 3, 292–298.
39. Holder, C.J.; Breckon, T.P. From On-Road to Off: Transfer Learning Within a Deep Convolutional Neural Network for Segmentation and Classification of Off-Road Scenes. Springer Int. Publ. 2016, 9, 149–162.
40. Dallaire, P. Learning Terrain Types with the Pitman-Yor Process Mixtures of Gaussians for a Legged Robot. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 3457–3463.
41. Manduchi, R.; Castano, A.; Talukder, A. Obstacle Detection and Terrain Classification for Autonomous Off-Road Navigation. Auton. Robots 2005, 1, 81–102.

| Descriptor Type | Detection Time (ms) | Matching Time (ms) |
|---|---|---|
| SURF | 852 | 1251 |
| BRISK | 127 | 98 |
| SURF-BRISK | 765 | 142 |
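The timings in the table can be compared with a few lines of arithmetic. The sketch below is only an illustration: summing detection and matching time into a single "total" and expressing the matching gain as a ratio are assumptions made here, and the helper names `total_ms` and `matching_speedup` are hypothetical.

```python
# Timings in milliseconds, copied from the table: (detection, matching).
timings_ms = {
    "SURF": (852, 1251),
    "BRISK": (127, 98),
    "SURF-BRISK": (765, 142),
}


def total_ms(name):
    """Detection plus matching time for one descriptor type (assumed metric)."""
    detection, matching = timings_ms[name]
    return detection + matching


def matching_speedup(baseline, method):
    """Ratio of the baseline matching time to the method's matching time."""
    return timings_ms[baseline][1] / timings_ms[method][1]


for name in timings_ms:
    print(f"{name}: total {total_ms(name)} ms")

ratio = matching_speedup("SURF", "SURF-BRISK")
print(f"SURF-BRISK matches about {ratio:.2f}x faster than SURF")
```

Read this way, the combined descriptor keeps most of SURF's detection cost while its matching time stays close to that of BRISK.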

| Images with Obstacle | | | | | | |
|---|---|---|---|---|---|---|
| First round | | Asphalt | Asphalt | Asphalt | Asphalt | Asphalt |
| Actual terrain | | Grass | Grass | Grass | Grass | Gravel |
| ILI | White | Asphalt | Asphalt | Tile | Tile | Tile |
| ILI | Black | Asphalt | Asphalt | Asphalt | Tile | Tile |
| Terrain | | Grass | Grass | Grass | Grass | Gravel |

| Images | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Actual terrain | Tile | | Grass | | Tile | | Grass | | Grass | |
| Output label | Asphalt | | Tile | | Asphalt | | Asphalt | | Soil | |
| R-I | Tile | | Grass | | Tile | | Grass | | Grass | |
| Scores | Before | After | Before | After | Before | After | Before | After | Before | After |
| Sand | 10.07 | 9.56 | 15.36 | 14.58 | 12.64 | 10.43 | 11.19 | 12.41 | 10.53 | 8.72 |
| Grass | 8.42 | 8.01 | 21.65 | 28.05 | 15.68 | 13.05 | 26.43 | 37.45 | 34.23 | 45.18 |
| Asphalt | 31.41 | 15.68 | 16.47 | 15.12 | 19.29 | 15.64 | 25.37 | 14.56 | 14.76 | 10.63 |
| Gravel | 14.55 | 16.56 | 15.83 | 16.45 | 15.67 | 13.54 | 14.36 | 13.22 | 11.7 | 8.35 |
| Tile | 22.01 | 36.05 | 17.26 | 14.67 | 22.36 | 31.73 | 10.27 | 8.68 | 18.29 | 15.64 |
| Soil | 13.54 | 14.14 | 13.43 | 11.13 | 14.37 | 15.62 | 12.38 | 13.69 | 10.5 | 11.48 |
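One way to read the "After" columns is to take the highest-scoring terrain for each image and compare it with the actual terrain. The sketch below does that with the values from the table. It assumes the label after infilling is simply the class with the largest score, which is an interpretation made here and not a statement of the authors' procedure. The helper name `top_label` is hypothetical.

```python
TERRAINS = ["Sand", "Grass", "Asphalt", "Gravel", "Tile", "Soil"]

# "After" scores from the table, one list per image, ordered as TERRAINS.
after_scores = [
    [9.56, 8.01, 15.68, 16.56, 36.05, 14.14],
    [14.58, 28.05, 15.12, 16.45, 14.67, 11.13],
    [10.43, 13.05, 15.64, 13.54, 31.73, 15.62],
    [12.41, 37.45, 14.56, 13.22, 8.68, 13.69],
    [8.72, 45.18, 10.63, 8.35, 15.64, 11.48],
]

# "Actual terrain" row from the table.
actual = ["Tile", "Grass", "Tile", "Grass", "Grass"]


def top_label(scores):
    """Return the terrain name with the largest score (assumed decision rule)."""
    best = max(range(len(scores)), key=scores.__getitem__)
    return TERRAINS[best]


predicted = [top_label(scores) for scores in after_scores]
for number, (label, truth) in enumerate(zip(predicted, actual), start=1):
    print(f"image {number}: top score {label}, actual {truth}")
```

Under this reading, the top-scoring class after infilling matches both the R-I row and the actual terrain for all five images.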

| Initialize G ∈ [0.5, 1]; SD ∈ {Dij = 0, j = 1, 2, …, 6; i = 1, 2, …, 2n}; B ∈ {0, 1}; n = 0; Ti ∈ {1, 2, …, 6} |
|---|
| Repeat: |
| (1) Collect terrain images: color, depth, and infrared |
| (2) Run the obstacle detection module and output B |
| if B = 1 then |
| Run image infilling processing I |
| Jump to (2) |
| else if B = 0 then |
| continue |
| end |
| (3) Run the terrain classifier module and output SD |
| for i = 1; i ≤ 2n; i++ |
| if max {Dij, (j = 1, 2, …, 6)} < 0.3 then |
| n++ |
| Run image segmentation processing |
| Run image infilling processing II |
| Jump to (3) |
| else |
| output the subscript j of max {Dij, (j = 1, 2, …, 6)} |
| Ti = j |
| end |
| end |
| (4) Output classification results and gait G |
| for i = 1; i ≤ 2n–1; i++ |
| if Ti = 1 or 2 then G = 0.5 |
| else if Ti = 3 or 4 then G = 0.75 |
| else if Ti = 5 or 6 then G = 0.83 |
| end |
| end |
| T = T1, T2, T3, …, T2n–1 |
| (5) Run the robot |
| Until: The robot is switched off. |

Note: G is the walking gait; typically, 0.5 for tripod gait, 0.75 for quadruped gait, and 0.83 for wave gait. SD is the confidence score. j refers to the terrain type: 1 for asphalt, 2 for tile, 3 for soil, 4 for gravel, 5 for sand, 6 for grass. i is the serial number of the images. B is the result of obstacle detection: B = 1 means there is an obstacle, B = 0 means no obstacle. Ti is the output label of the terrain classifier. Image infilling processing I represents the ILI module, and image infilling processing II represents the SPI module.
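Steps (2) to (4) of the listing can be sketched in Python as follows. This is a minimal illustration under stated assumptions and not the authors' implementation: the obstacle detector, the ILI and SPI modules and the classifier are passed in as hypothetical callables (`has_obstacle`, `infill_obstacle`, `split_and_infill`, `score`), the region queue replaces the index bookkeeping with n, and `max_splits` is an added safety cap that the listing does not contain.

```python
# Terrain index j -> gait G, as given in the note (1 asphalt ... 6 grass).
GAIT_BY_TERRAIN = {1: 0.5, 2: 0.5, 3: 0.75, 4: 0.75, 5: 0.83, 6: 0.83}
CONFIDENCE_THRESHOLD = 0.3  # threshold on max D_ij from step (3)


def pick_terrain(scores, threshold=CONFIDENCE_THRESHOLD):
    """Return the 1-based index of the best score, or None if it is too low."""
    best = max(scores)
    if best < threshold:
        return None
    return scores.index(best) + 1


def classify_regions(image, has_obstacle, infill_obstacle, score,
                     split_and_infill, max_splits=8):
    """Return (terrain labels, gaits) for one captured image.

    All callables are hypothetical stand-ins for the modules in the listing.
    """
    # Step (2): infill obstacles until the detector reports none (B = 0).
    while has_obstacle(image):
        image = infill_obstacle(image)

    # Step (3): classify; low-confidence regions are segmented and infilled.
    pending, labels, splits = [image], [], 0
    while pending:
        region = pending.pop(0)
        terrain = pick_terrain(score(region))
        if terrain is None and splits < max_splits:
            splits += 1
            pending.extend(split_and_infill(region))
        elif terrain is not None:
            labels.append(terrain)
        # Regions still below threshold after max_splits are dropped here,
        # which is an assumption of this sketch.

    # Step (4): map each label to a gait.
    gaits = [GAIT_BY_TERRAIN[t] for t in labels]
    return labels, gaits


# Toy usage with string "images" and a lookup table in place of a classifier.
toy_scores = {
    "mixed": [0.20, 0.20, 0.15, 0.15, 0.15, 0.15],
    "left": [0.10, 0.60, 0.10, 0.10, 0.05, 0.05],
    "right": [0.05, 0.05, 0.10, 0.10, 0.10, 0.60],
}
labels, gaits = classify_regions(
    "obs:mixed",
    has_obstacle=lambda im: im.startswith("obs:"),
    infill_obstacle=lambda im: im[len("obs:"):],
    score=toy_scores.__getitem__,
    split_and_infill=lambda im: ["left", "right"],
)
print(labels, gaits)
```

In the toy run, the mixed image falls below the 0.3 threshold, is split into two regions, and each region then receives its own label and gait.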

| Author or Method (Year) | Feature | Classification Method | Number of Terrains | For Mixed Terrain? | Application |
|---|---|---|---|---|---|
| Khan (2011) [11] | SURF/DAISY | SVM | 5 | No | Visual terrain classification |
| Zenker (2013) [10] | SURF/SIFT | SVM | 8 | No | Energy-efficient gait |
| Filitchkin (2012) [17] | SURF | SVM | 6 | Yes | Selecting predetermined gaits |
| Lee (2011) [37] | SURF | ANN | 5 | No | Off-road terrain classification for UGV |
| Ordonez (2013) [38] | Characteristic frequency of leg current | PNN | 4 | No | Robot planning and motor control |
| Holder (2016) [39] | Convolutional encoder–decoder | CNN/SVM | 12 | Yes | Real-time road-scene understanding |
| Dallaire (2015) [40] | Tactile data | Mixture of Gaussians | 12 | No | Gait switching |
| Manduchi (2005) [41] | Color-based | Mixture of Gaussians | 3 | No | Recognizing different terrains and obstacles |
| This paper (2017) | SURF-BRISK | SVM | 6 | Yes | All kinds of outdoor robots |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Y.; Jia, C.; Ma, C.; Liu, Q. SURF-BRISK–Based Image Infilling Method for Terrain Classification of a Legged Robot. Appl. Sci. 2019, 9, 1779. https://doi.org/10.3390/app9091779
