ARPS: A Framework for Development, Simulation, Evaluation, and Deployment of Multi-Agent Systems

Abstract

1. Introduction

2. Related Work

Agent-Based Modelling (ABM)

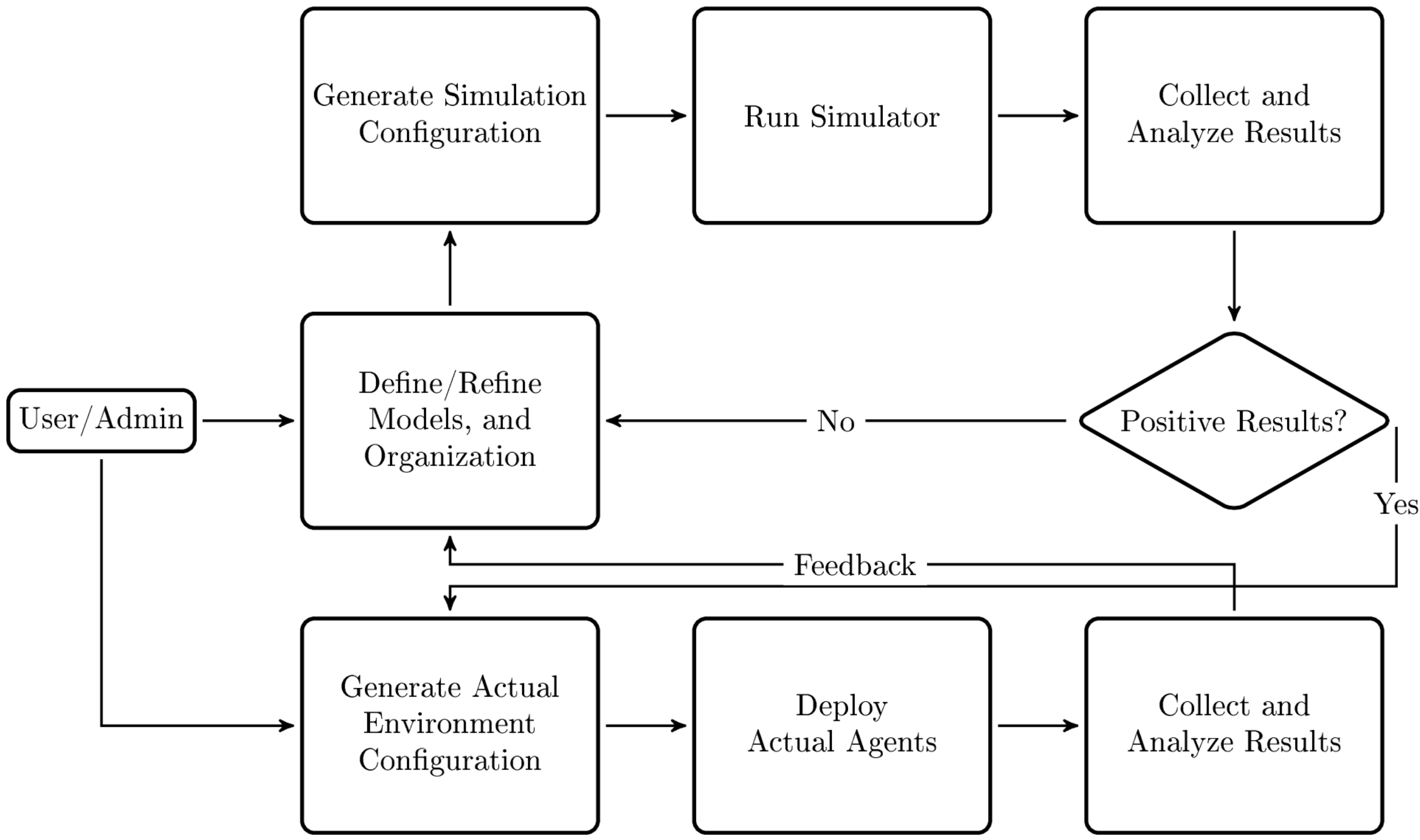

3. Our Approach

3.1. Background

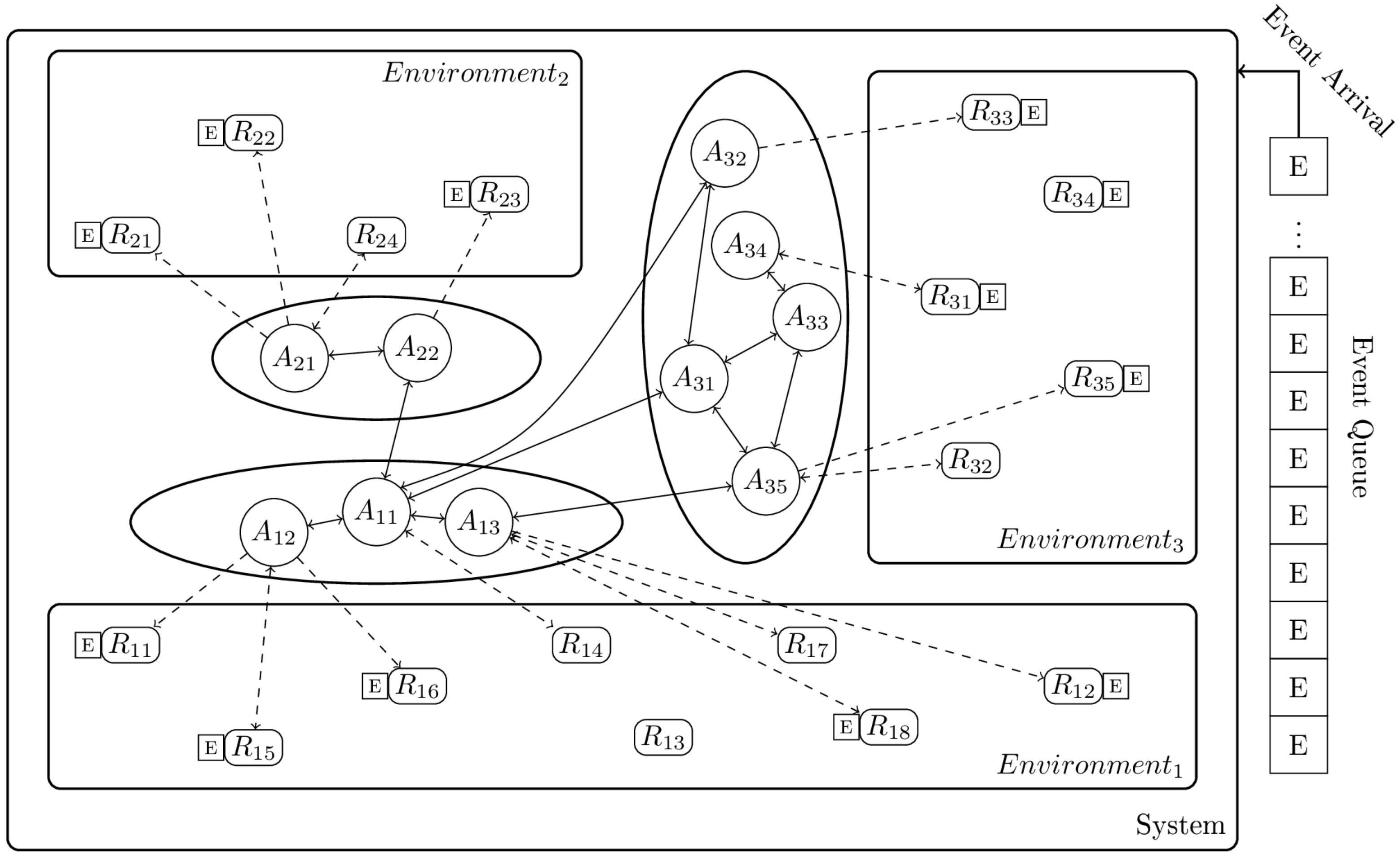

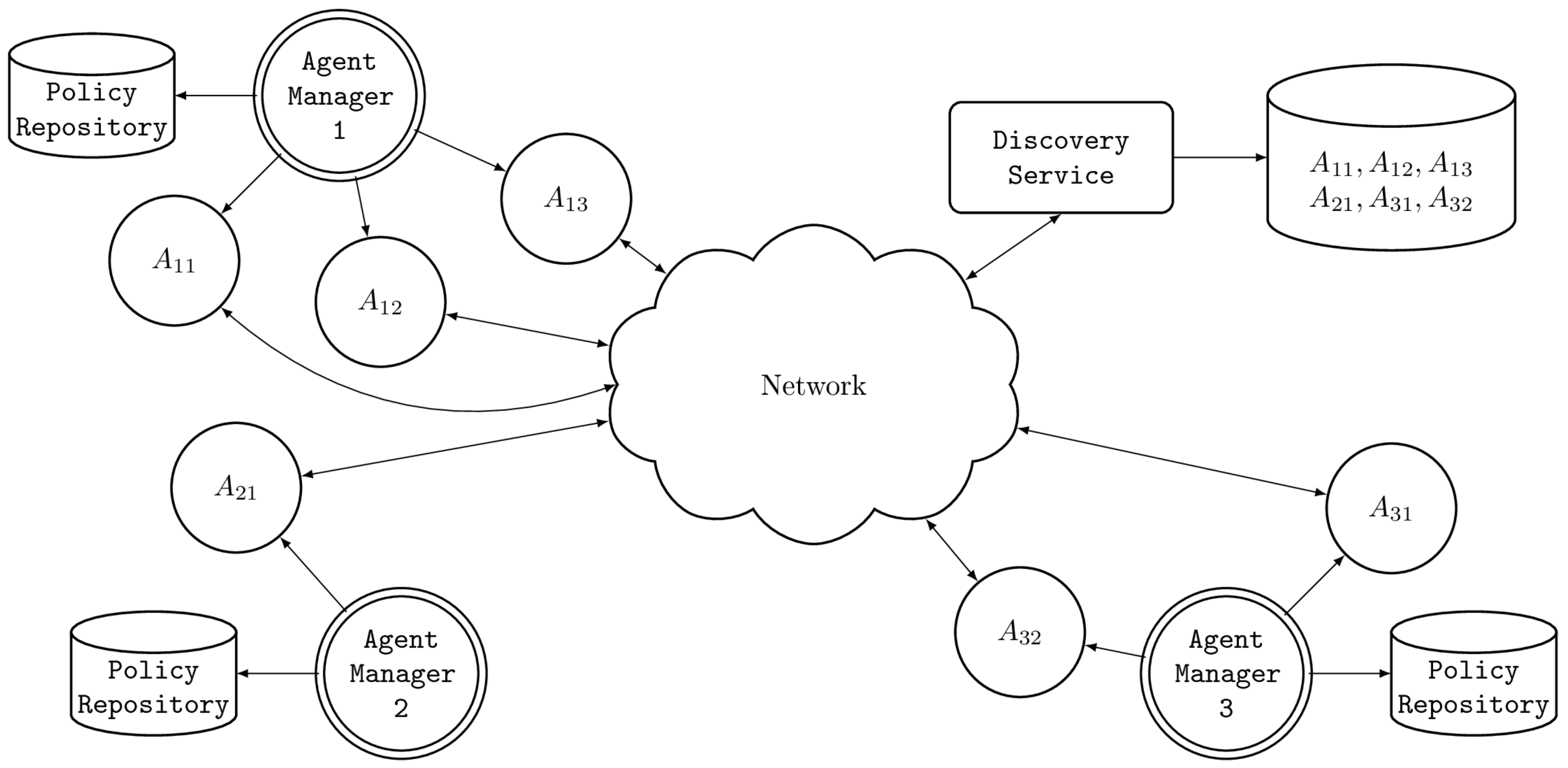

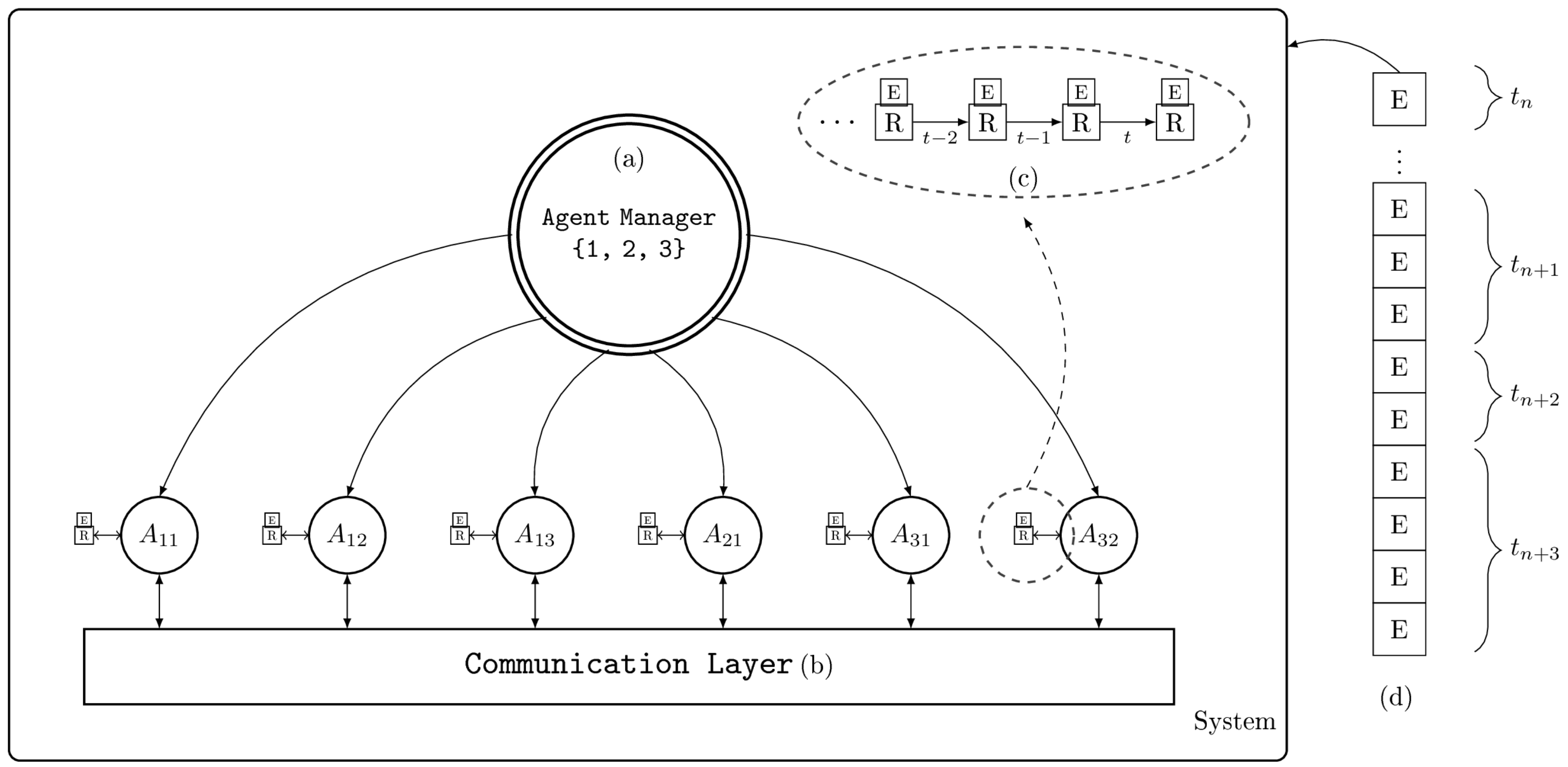

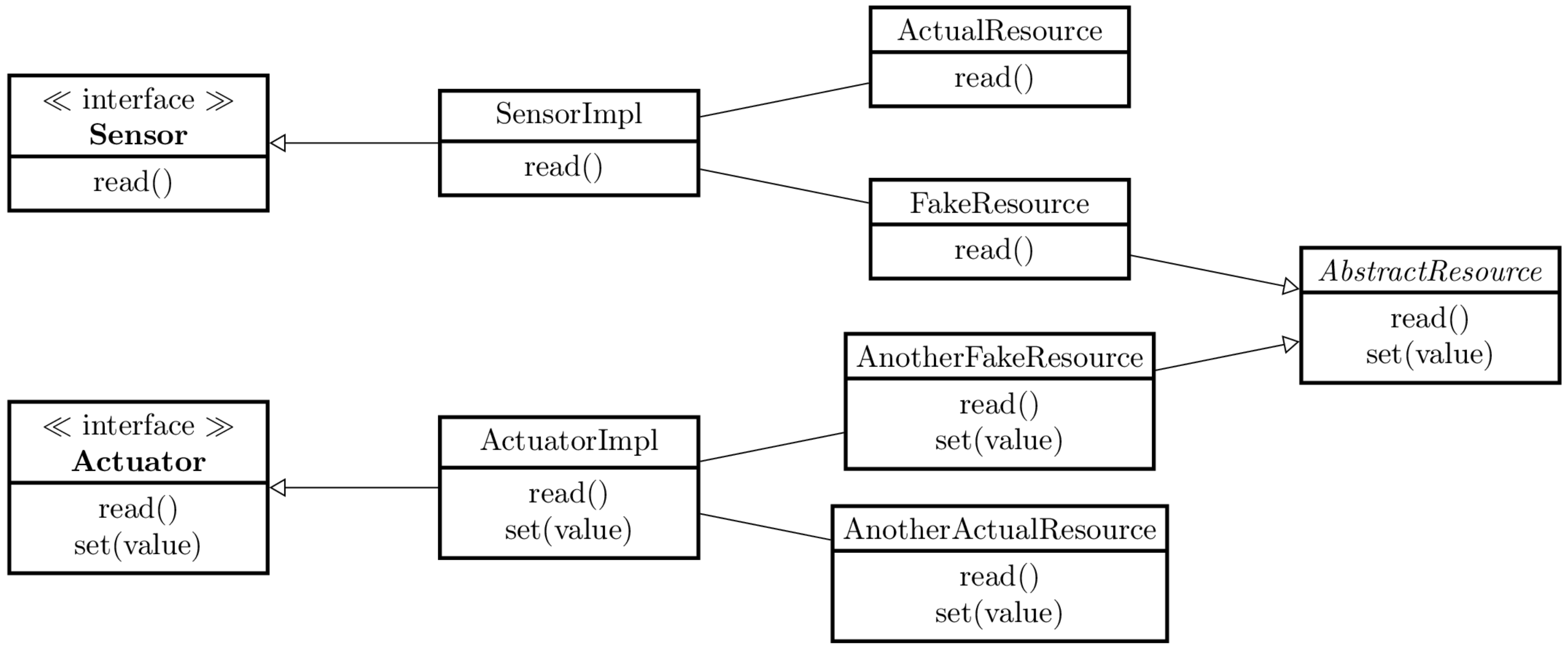

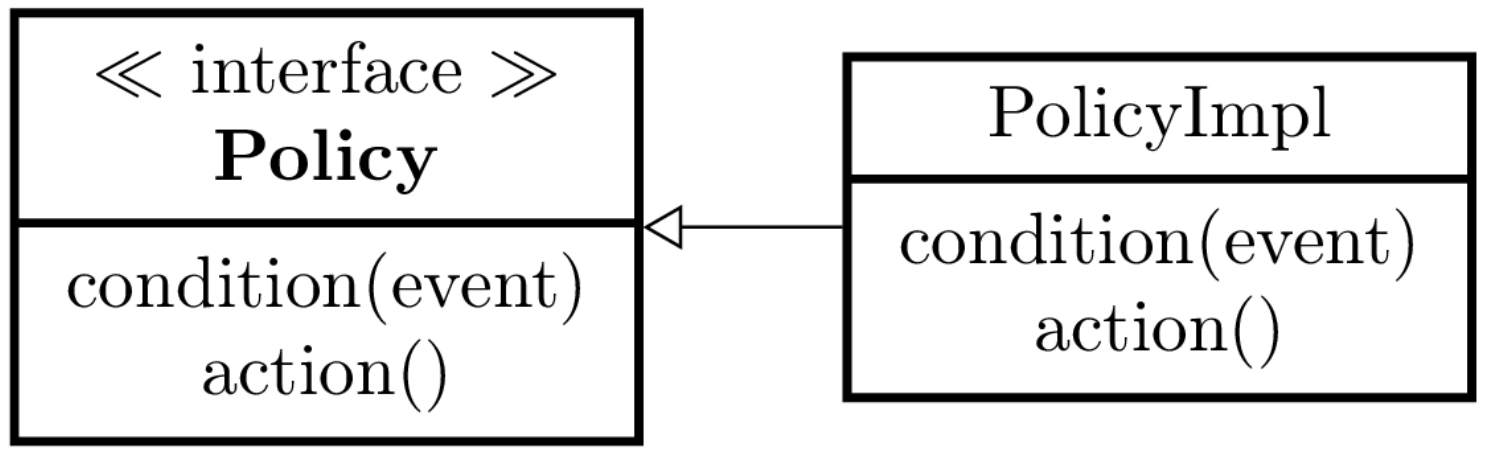

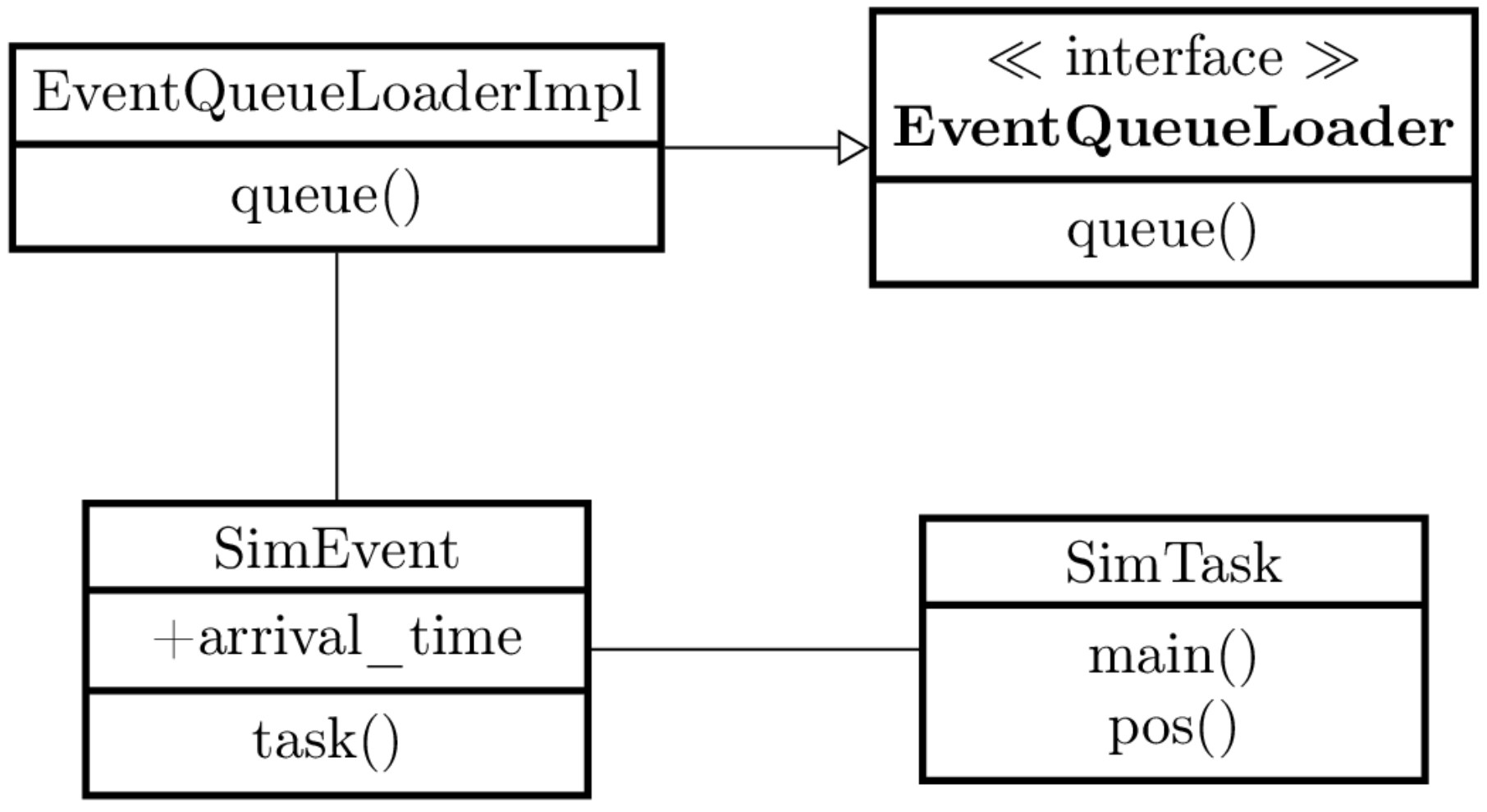

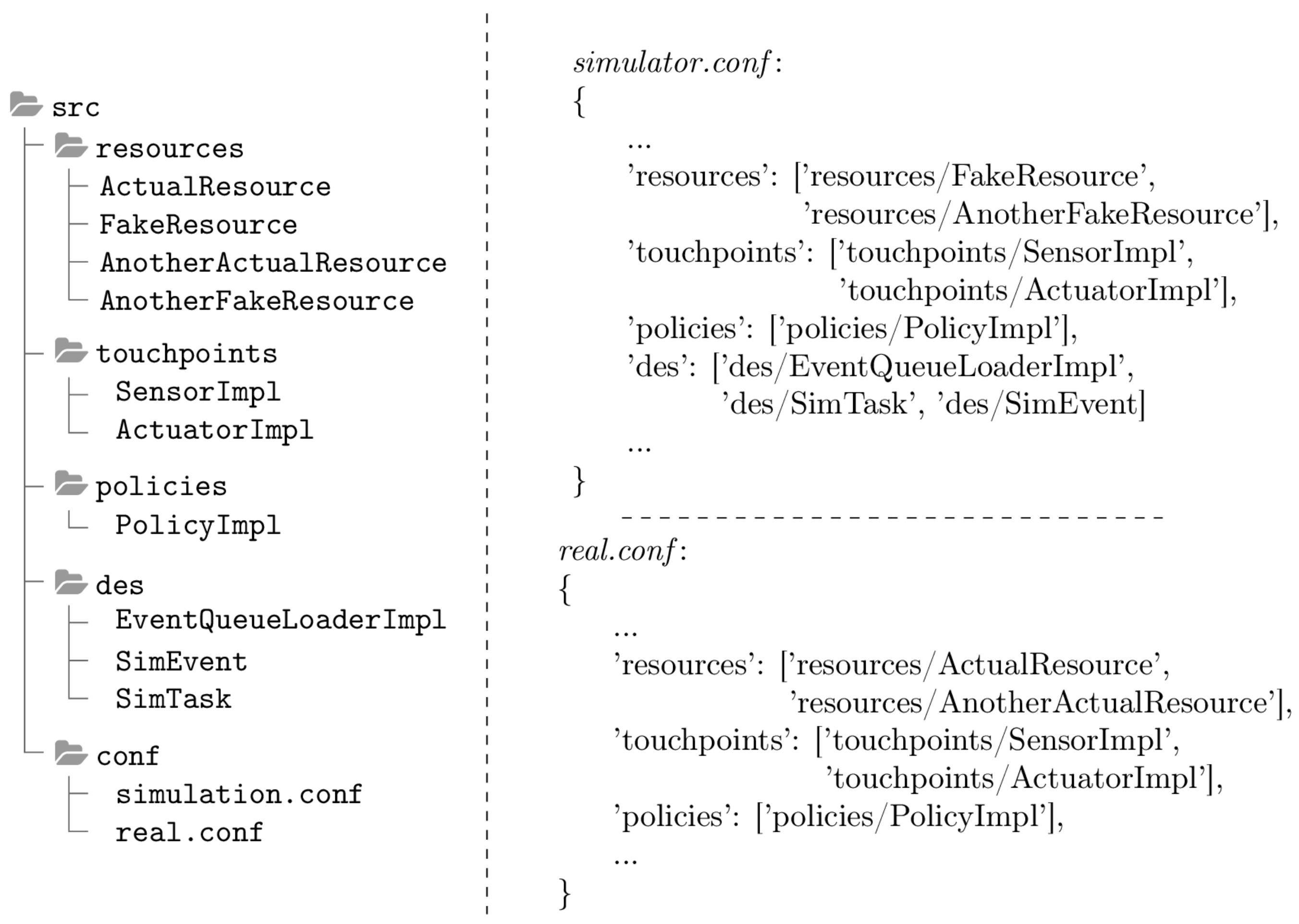

3.2. Architecture

3.2.1. Interoperability

3.2.2. Agent Model

3.2.3. Simulation

4. Demonstration of the Framework

4.1. ARPS Framework Usage

1. Collect data about the resources in the environment using the available sensors.
2. Create a model of the environment, describing the resources' behaviour and their relationships with each other.
3. Create the actuators and the policies that drive the agents based on the available resources.
4. Create a model of the events that will modify the environment.
5. Run the simulation, take a snapshot of the environment containing the deployed agents along with their policies, and collect the results in CSV format.
6. Evaluate the results with external tools better suited to the analysis.
7. Use a snapshot to deploy the agent managers and their agents in the real environment.
8. Modify the environment model, the policies, or the events, and re-run step 4 to improve the agents in the actual environment.
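The evaluation step works on the CSV results exported by the simulation. As a minimal sketch, assuming (hypothetically) that the CSV has a header row with an `agent` column and a numeric `power` column, a few lines of standard-library Python can summarise the results per agent before they are handed to heavier tools:

```python
import csv
import io
from collections import defaultdict
from typing import Dict


def mean_by_agent(csv_text: str, column: str = "power") -> Dict[str, float]:
    """Average one numeric column of a simulation result, grouped by agent.

    Assumption: the exported CSV has a header row containing an "agent"
    column and the numeric `column`; the real ARPS column names may differ.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["agent"]] += float(row[column])
        counts[row["agent"]] += 1
    return {agent: totals[agent] / counts[agent] for agent in totals}


if __name__ == "__main__":
    sample = (
        "timestamp,agent,power\n"
        "0,agent_1,10.0\n"
        "1,agent_1,14.0\n"
        "0,agent_2,7.5\n"
    )
    print(mean_by_agent(sample))  # {'agent_1': 12.0, 'agent_2': 7.5}
```

Keeping the summary separate from the simulator mirrors the workflow's intent: ARPS produces the data, and any external tool can consume it.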

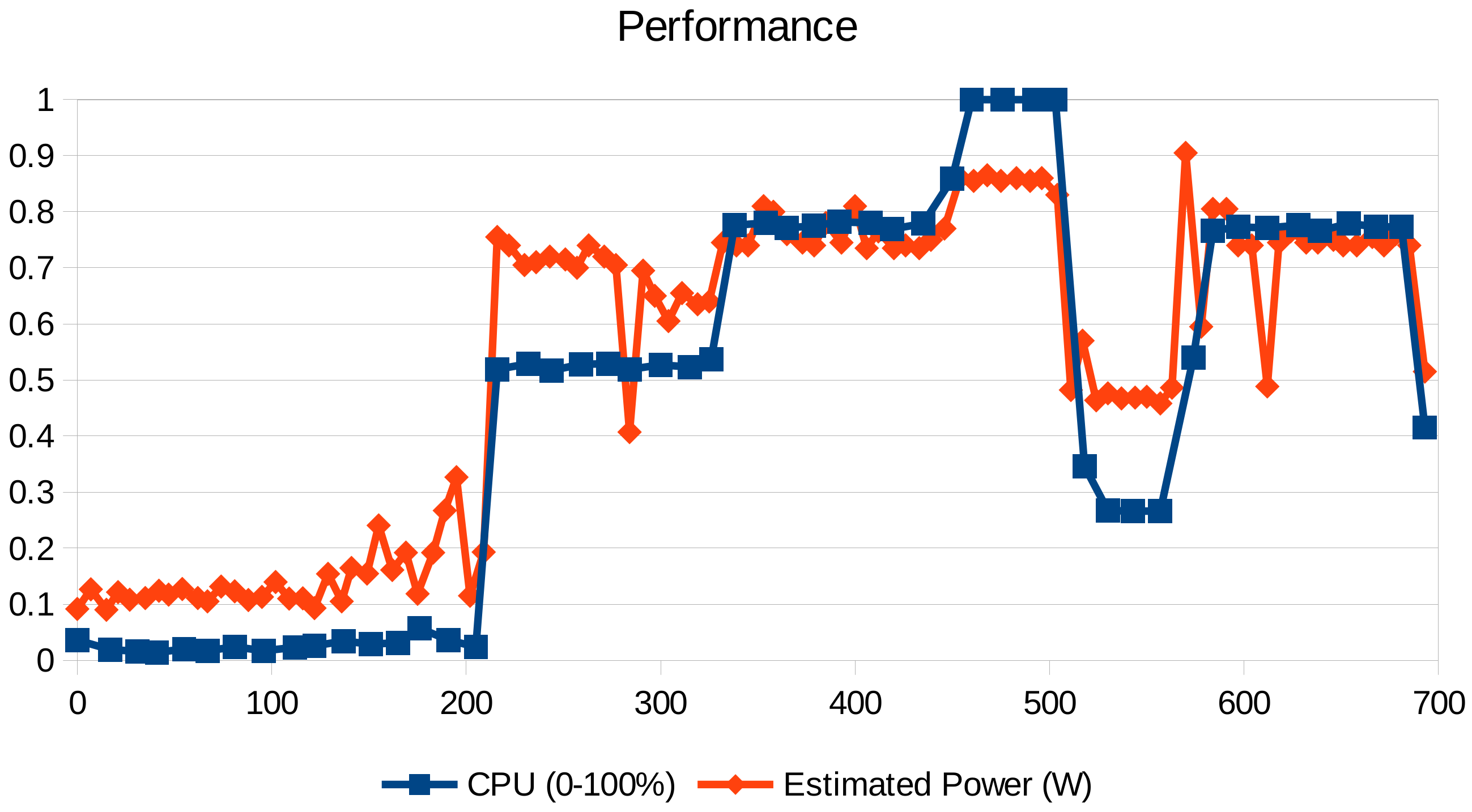

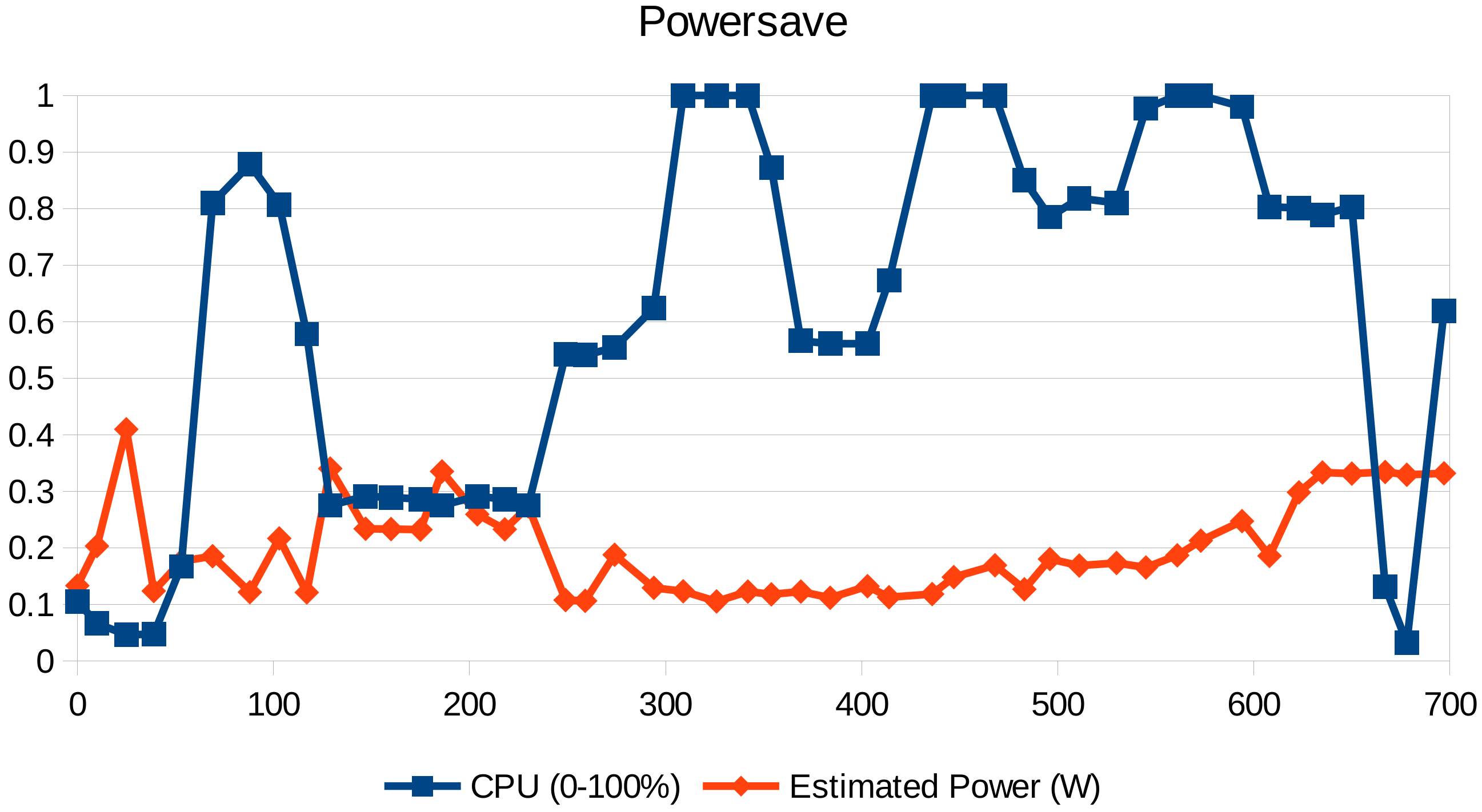

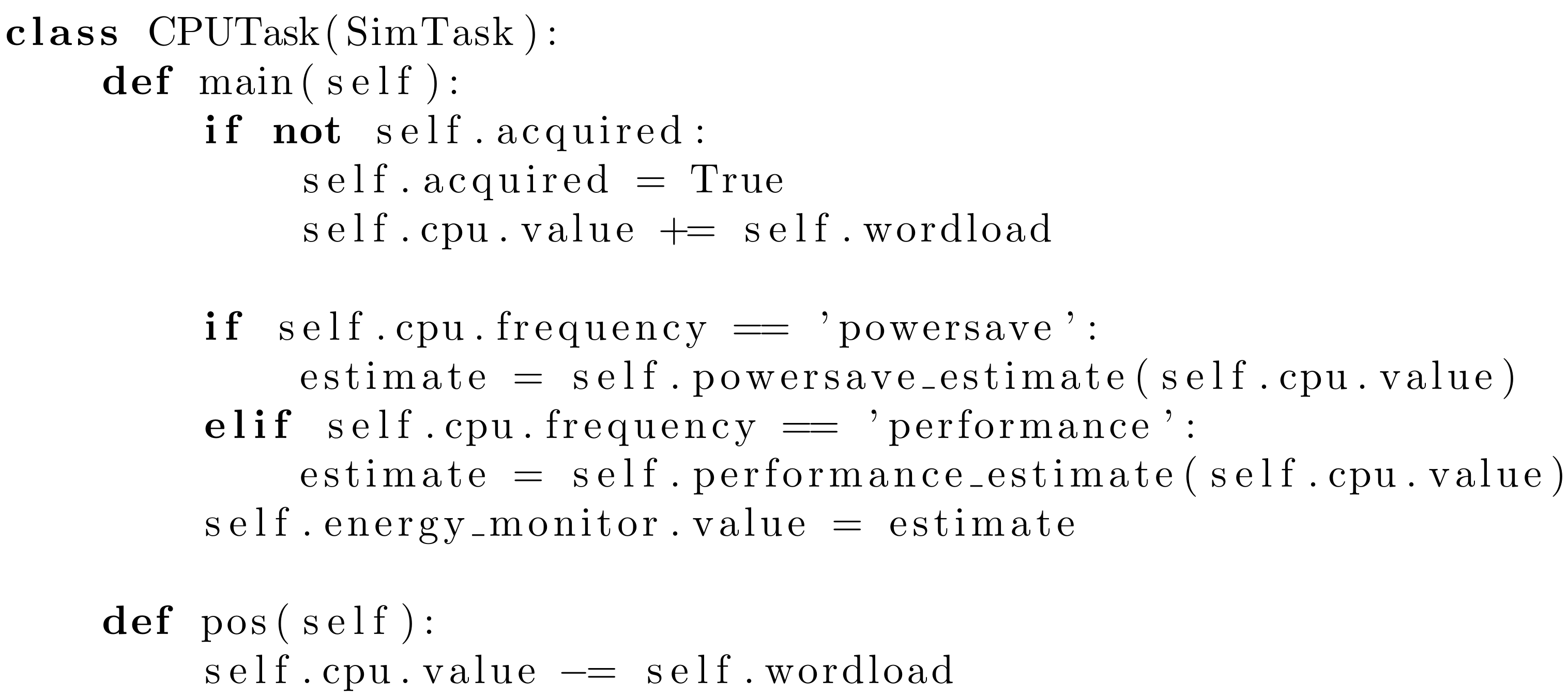

4.2. Example: Data Centre Management

5. Discussion

Author Contributions

Funding

Conflicts of Interest


| HTTP Method | URI | Description |
|---|---|---|
| GET | /list_agents | Show agents in the environment |
| GET | /list_policies | Show policies available for agent creation/modification |
| GET | /list_touchpoints | Show sensors and actuators available in the system for monitoring |
| GET | /monitor_logs | Retrieve the state of the sensors and actuators monitored by the agents created for this purpose |
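The endpoints in the table above are plain read-only GET resources, so any HTTP client can query them. A minimal sketch with the standard library follows; the base address `http://localhost:8000` is a hypothetical placeholder, and the assumption that responses are JSON is ours, not stated in the table:

```python
import json
import urllib.request

# Hypothetical address of the service exposing these endpoints.
BASE_URL = "http://localhost:8000"

# Read-only endpoints listed in the table.
INFO_ENDPOINTS = ("/list_agents", "/list_policies", "/list_touchpoints", "/monitor_logs")


def build_get(path: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request for one of the listed endpoints."""
    if path not in INFO_ENDPOINTS:
        raise ValueError(f"unknown endpoint: {path}")
    return urllib.request.Request(base_url + path, method="GET")


def fetch_json(path: str, base_url: str = BASE_URL, timeout: float = 5.0):
    """Send the request and decode the body, assuming the service replies with JSON."""
    with urllib.request.urlopen(build_get(path, base_url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Against a running service, `fetch_json("/list_touchpoints")` would return the decoded sensor and actuator listing; building the request separately makes it easy to inspect or test without a network.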

| HTTP Method | URI | Description |
|---|---|---|
| POST | /agents | Create an agent; the policies and the fixed interval are specified in the JSON body of the message |
| PUT | /agents/[agent_id] | Modify the agent's policies or its relationships with other agents |
| GET | /agents/[agent_id]?[params=values] | Retrieve the internal state of the agent; the parameters specify the type of content returned |
| DELETE | /agents/[agent_id] | Terminate the agent |
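The table says that agent creation carries the policies and the fixed interval in a JSON body, and that queries on an agent take `param=value` pairs. A minimal sketch of building these requests follows; the JSON keys `policies` and `interval`, the policy name `power_saving`, the query parameter `content`, and the base address are all hypothetical, chosen only for illustration:

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Optional

# Hypothetical service address; adjust to the actual deployment.
BASE_URL = "http://localhost:8000"


def create_agent_request(policies: List[str], interval: float,
                         base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (without sending) POST /agents with a JSON body.

    The keys "policies" and "interval" are illustrative assumptions.
    """
    body = json.dumps({"policies": policies, "interval": interval}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/agents",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def agent_url(agent_id: str, params: Optional[Dict[str, str]] = None,
              base_url: str = BASE_URL) -> str:
    """URL for /agents/[agent_id], optionally followed by ?param=value pairs."""
    url = f"{base_url}/agents/{urllib.parse.quote(agent_id)}"
    if params:
        url += "?" + urllib.parse.urlencode(params)
    return url


def delete_agent_request(agent_id: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build DELETE /agents/[agent_id], which terminates the agent."""
    return urllib.request.Request(agent_url(agent_id, base_url=base_url), method="DELETE")
```

For example, `agent_url("a1", {"content": "policies"})` yields `http://localhost:8000/agents/a1?content=policies`; any request built here can be sent with `urllib.request.urlopen`.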

| HTTP Method | URI | Description |
|---|---|---|
| GET | /sim/run | Run simulation |
| GET | /sim/stop | Stop simulation |
| GET | /sim/status | Retrieve current status |
| GET | /sim/result | Retrieve result of the simulation in CSV format |
| GET | /sim/save | Retrieve the organization of the system |
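The simulation endpoints combine naturally into a start, poll, and collect loop. The sketch below assumes (hypothetically) that `/sim/status` returns the text `running` while the simulation is in progress; the real status format is not specified in the table. The HTTP call is injected as a function so the loop can be exercised offline:

```python
import time
from typing import Callable

# A transport takes a URI path such as "/sim/status" and returns the response
# body as text. Injecting it keeps the loop independent of any HTTP library.
Transport = Callable[[str], str]


def run_and_collect(get: Transport, poll_seconds: float = 1.0,
                    max_polls: int = 600) -> str:
    """Start a simulation, wait until it is no longer running, return the CSV result.

    Assumption: /sim/status answers "running" while the simulation is active.
    """
    get("/sim/run")
    for _ in range(max_polls):
        if get("/sim/status").strip() != "running":
            return get("/sim/result")
        time.sleep(poll_seconds)
    get("/sim/stop")  # give up: stop the simulation before reporting failure
    raise TimeoutError("simulation did not finish in time")
```

With a live service, a transport could be `lambda p: urllib.request.urlopen(base_url + p).read().decode()`; a subsequent call to `/sim/save` would then retrieve the organization of the system for deployment.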

| HTTP Method | URI | Description |
|---|---|---|
| PUT | /policy | Add or remove a policy according to the content of the message body |
| PUT | /meta_agent | Establish or remove the relationship between two agents |
| PUT | /action | Request a user-defined action to be executed by the agent according to the content of the message body |
| GET | /info | Request information about the agent, such as its current policies, touchpoints, and relationships |
| GET | /sensors | Request the current state of the sensors available to the agent |
| GET | /actuators | Request the current state of the actuators available to the agent |
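One way to picture the agent interface in the table above is as a routing table from `(method, URI)` pairs to handlers. The in-memory sketch below is an illustration only, not the ARPS implementation: the message-body keys (`add`, `remove`, `agent`, `name`), the sensor `cpu_temp`, the actuator `fan`, and the action `reboot` are hypothetical:

```python
import json
from typing import Any, Callable, Dict, Tuple


class AgentEndpoints:
    """In-memory stand-in for an agent's REST interface (illustration only).

    Only the (method, URI) pairs come from the table; body keys are hypothetical.
    """

    def __init__(self, sensors: Dict[str, float], actuators: Dict[str, Any],
                 actions: Dict[str, Callable[[], Any]]) -> None:
        self.sensors = sensors
        self.actuators = actuators
        self.actions = actions
        self.policies: set = set()
        self.relationships: set = set()
        # Routing table: (HTTP method, URI) -> handler taking the decoded body.
        self.routes: Dict[Tuple[str, str], Callable[[dict], Any]] = {
            ("PUT", "/policy"): self._policy,
            ("PUT", "/meta_agent"): self._meta_agent,
            ("PUT", "/action"): self._action,
            ("GET", "/info"): lambda _msg: self._info(),
            ("GET", "/sensors"): lambda _msg: self.sensors,
            ("GET", "/actuators"): lambda _msg: self.actuators,
        }

    def handle(self, method: str, path: str, body: str = "{}") -> str:
        """Dispatch one request and return the JSON response body."""
        handler = self.routes.get((method, path))
        if handler is None:
            return json.dumps({"error": f"no route for {method} {path}"})
        return json.dumps(handler(json.loads(body)))

    def _policy(self, msg: dict) -> dict:
        # Add and/or remove one policy name, as directed by the message body.
        if "add" in msg:
            self.policies.add(msg["add"])
        if "remove" in msg:
            self.policies.discard(msg["remove"])
        return {"policies": sorted(self.policies)}

    def _meta_agent(self, msg: dict) -> dict:
        # Establish (default) or remove a relationship with another agent.
        if msg.get("remove", False):
            self.relationships.discard(msg["agent"])
        else:
            self.relationships.add(msg["agent"])
        return {"relationships": sorted(self.relationships)}

    def _action(self, msg: dict) -> dict:
        # Run a user-defined action registered under the given name.
        action = self.actions.get(msg.get("name", ""))
        if action is None:
            return {"error": "unknown action"}
        return {"result": action()}

    def _info(self) -> dict:
        return {
            "policies": sorted(self.policies),
            "touchpoints": sorted([*self.sensors, *self.actuators]),
            "relationships": sorted(self.relationships),
        }
```

For instance, `AgentEndpoints({"cpu_temp": 41.0}, {"fan": "auto"}, {}).handle("GET", "/sensors")` returns `'{"cpu_temp": 41.0}'`. A real agent would put an HTTP server in front of `handle`.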

| HTTP Method | URI | Description |
|---|---|---|
| GET | /agents | List all agents |
| PUT | /agents/[agent_id] | Register the agent |
| GET | /agents/[agent_id] | Retrieve the location of the agent: hostname and port |
| DELETE | /agents/[agent_id] | Unregister the agent |
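The registration table above describes a simple name service that maps each agent identifier to a hostname and port. A minimal in-memory sketch of its four operations follows; the class and method names are hypothetical, and a real deployment would expose these operations over HTTP:

```python
from typing import Dict, List, Optional, Tuple


class AgentRegistry:
    """In-memory sketch of the registration service (hypothetical names).

    Each agent id maps to the (hostname, port) where the agent can be reached.
    """

    def __init__(self) -> None:
        self._locations: Dict[str, Tuple[str, int]] = {}

    def list_agents(self) -> List[str]:
        # GET /agents
        return sorted(self._locations)

    def register(self, agent_id: str, hostname: str, port: int) -> None:
        # PUT /agents/[agent_id]: registering again simply updates the location.
        self._locations[agent_id] = (hostname, port)

    def locate(self, agent_id: str) -> Optional[Tuple[str, int]]:
        # GET /agents/[agent_id]: None if the agent is unknown.
        return self._locations.get(agent_id)

    def unregister(self, agent_id: str) -> bool:
        # DELETE /agents/[agent_id]: True if the agent was registered.
        return self._locations.pop(agent_id, None) is not None
```

Using PUT for registration fits HTTP semantics: PUT is idempotent, so an agent that re-sends its registration after a restart ends in the same state instead of creating a duplicate entry.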

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Coelho Prado, T.; Bauer, M. ARPS: A Framework for Development, Simulation, Evaluation, and Deployment of Multi-Agent Systems. Appl. Sci. 2019, 9, 4483. https://doi.org/10.3390/app9214483
