Featured Application

This study can be used to monitor cotton budding rapidly across large fields.

Abstract

Monitoring the cotton budding rate is important for growers so that they can replant cotton in a timely fashion at locations where cotton density is sparse. In this study, a true-color camera was mounted on an unmanned aerial vehicle (UAV) and used to collect images of young cotton plants in order to estimate the germination rate. The collected images were stitched together to obtain a single orthomosaic image. The support vector machine (SVM) method and the maximum likelihood classification method were applied to identify the cotton plants in the image. The accuracy evaluation indicated that the overall classification accuracy of the SVM was 96.65% with a Kappa coefficient of 93.99%, while the maximum likelihood classification reached an accuracy of 87.85% with a Kappa coefficient of 80.67%. A method based on the morphological characteristics of cotton plants was proposed to identify and count overlapping cotton plants. The analysis showed that this method improved the detection accuracy by 6.3% compared with counting without it. Validation against visual interpretation indicated that the method achieved an accuracy of 91.13%. The study also showed that, in practice, images should be collected at a ground resolution of 1.2 cm/pixel or finer in order to recognize cotton plants accurately.

1. Introduction

Satellite images with resolutions ranging from 0.3 m to thousands of meters are an important source of data at large spatial and temporal scales for general applications. In agricultural remote sensing research, satellite data have been widely used for vegetation growth monitoring, biomass estimation, agricultural drought monitoring, and monitoring of vegetation nitrogen content [1,2,3,4]. Although satellite remote sensing is an easily accessible way to obtain data on the Earth’s surface, satellite images are heavily influenced by clouds, and their limited resolution is not adequate for some studies, e.g., crop phenotyping and crop germination monitoring, which require resolutions finer than 1 cm [5,6]. In addition, for applications that require regular (e.g., daily) observation of a specific area, the long revisit cycle is another problem when relying on high-resolution satellite data [7,8].

Recent technological improvements have made multirotor and fixed-wing unmanned aerial vehicles (UAVs) a convenient means of information acquisition for scientific researchers and commercial operators [9,10]. Compared to ground-based data collection platforms, e.g., robots or tractors [11,12], the main difference is that a UAV works without touching the ground. This is important for fields in which the ground is covered by plastic film in order to decrease evapotranspiration and increase the ground temperature. Additionally, compared to satellite data, the most important advantages of UAVs are that the flight altitude can be controlled by the user, and that the sensors carried by the UAV, e.g., LiDAR devices, hyperspectral cameras, and true-color cameras, can be changed according to the user’s purposes. As a result, the resolution of the observation data (finer than 1 cm) satisfies the requirements of many applications.

Owing to the versatility and flexibility of UAVs, numerous studies have adopted them as a basic information collection tool. For example, Chen et al. used UAV images and the maximum likelihood classification method to examine the germination rate of cotton seeds at the Texas A&M AgriLife Research and Extension Center in Corpus Christi, TX [13]. Berni et al. used thermal and narrowband multispectral cameras on a UAV to monitor vegetation [14]. Leduc and Knudby used UAV orthomosaics at a resolution of 5 cm to map wild leeks, an endangered plant species of Eastern North America [15]. Chen et al. measured vegetation height with UAV photogrammetry to monitor vegetation recovery [5]. Guimarães et al. used a UAV and a modified normalized difference vegetation index (NDVImod) to detect chlorophyll in water bodies [16]. In these studies, several image classification methods have been adopted to recognize plants or other objects of interest, e.g., the support vector machine (SVM), random decision forests (RF), neural networks, Bayesian networks, and the maximum likelihood classification method [17,18,19,20,21,22,23].

The ability to monitor the cotton budding rate as quickly as possible is important for growers, because with this information they can replant cotton in areas where cotton density is sparse before it is too late. This is especially significant for growers in Xinjiang province, who often have thousands of acres of planted cotton. Normally, the task of checking the cotton budding rate is performed manually. The manual method is accurate but time-consuming and expensive; as a result, some cotton fields fail to be replanted before the window of opportunity closes. Hence, a technology that helps growers in Xinjiang province to quickly monitor the cotton budding rate over a large area is very desirable. In this study, taking into account the characteristics of the automatic cotton planter and the actual conditions of cotton fields in Xinjiang province, a UAV was used to collect cotton images, the support vector machine (SVM) algorithm was used to classify the images, and a method was proposed to monitor the cotton budding rate.

2. Background and Experiment

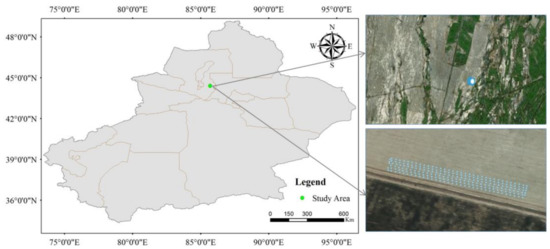

The experiment was carried out in Qitai County, Xinjiang, China; the location is shown in Figure 1. Xinjiang province is China’s largest provincial administrative region, with an area of 1,660,000 km². Since it is far from the sea and located on the northwest border of China, this region has a typical temperate continental climate, with a mean yearly precipitation of 150 mm, and drought-tolerant crops are grown there. Qitai County is located in the north of Xinjiang Province. The mean temperature of this area is 6.6 °C, and the mean precipitation is 245 mm. According to the statistical data, cotton is the main crop grown in the county.

Figure 1.

The location of the study area in Qitai County, Xinjiang, China (left), the satellite image of the study area (upper right; data source: Bing Maps), and the locations of the captured images (bottom right).

The 99 m × 25 m study area was established in Qitai County as a small part of a farm with more than 133 hectares of cotton fields, as shown in the bottom right of Figure 1. The study area was sprayed with herbicide on 15 April 2017, and the cotton was planted on 17 April 2017 using an automatic sowing machine with a spacing of 70 cm. The cotton image collection experiment was performed on 12 May 2017, 14:25 UTC, when the height of most cotton plants was about 8 cm. The UAV used in the study was a DJI Phantom 4 carrying an RGB camera with a resolution of 4000 × 3000 pixels and a focal length of 4 mm. The flight was carried out at an altitude of 15 m, which provided a ground sampling distance (GSD) of 5.2 mm/pixel, and the flying speed was 1.5 m/s. The overlap along the flight direction was set to 75%, and the overlap across the flight direction was 60%. On the day of the experiment, the weather was sunny and breezy, with a maximum temperature of 28 °C and a minimum of 16 °C. The experiment obtained a total of 156 images, of which 118 were selected; their locations are shown in the bottom right panel of Figure 1.
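The flight parameters above determine how far apart consecutive photos and adjacent flight lines fall on the ground. The following minimal sketch works this out from the reported GSD, image size, overlaps, and flying speed. It assumes, since the text does not say so, that the 3000-pixel image side was aligned with the flight direction; the function and key names are illustrative only.

```python
def flight_spacing(gsd_m, width_px, height_px, forward_overlap, side_overlap, speed_m_s):
    """Derive ground footprint and photo/line spacing from basic flight parameters."""
    footprint_across = gsd_m * width_px   # metres covered across the flight line
    footprint_along = gsd_m * height_px   # metres covered along the flight line (assumed orientation)
    photo_spacing = footprint_along * (1.0 - forward_overlap)
    line_spacing = footprint_across * (1.0 - side_overlap)
    return {
        "footprint_across_m": footprint_across,
        "footprint_along_m": footprint_along,
        "photo_spacing_m": photo_spacing,
        "line_spacing_m": line_spacing,
        "trigger_interval_s": photo_spacing / speed_m_s,
    }


# Values reported for the flight: GSD 5.2 mm/pixel, 4000 x 3000 pixels,
# 75% forward overlap, 60% side overlap, 1.5 m/s flying speed.
plan = flight_spacing(0.0052, 4000, 3000, 0.75, 0.60, 1.5)
for name, value in plan.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions each image covers roughly 20.8 m × 15.6 m on the ground, so a photo would be needed about every 3.9 m of travel.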

3. Evaluation of Budding Rate

3.1. Image Stitching

Stitching UAV images is much more complex than stitching satellite remote sensing data, and depends on the geolocation provided by the UAV itself. Currently, generating an orthophoto from UAV images involves several steps: structure-from-motion (SfM), dense point cloud construction, surface meshing, mesh texturing, adding geolocation information to the textured mesh, and generating the orthophoto [10]. In the SfM process, features are first extracted from the images, the features are then matched to create camera tracks, and finally the SfM reconstruction is performed to obtain the sparse point cloud [24]. Dense reconstruction is the key step for obtaining depth maps of the scene, and these depth points are used to create the surface mesh [25]. In this study, OpenSfM (https://github.com/mapillary/OpenSfM) was selected as the basic SfM and dense reconstruction tool after being ported to the Windows platform. Next, the Poisson algorithm [26] was used to convert the dense point cloud into a mesh, and the textured mesh was produced using the mvs-texturing module (https://github.com/nmoehrle/mvs-texturing) [27]. Finally, the mesh was georeferenced and an orthophoto was obtained. The hardware used to perform the stitching comprised two Intel Xeon E5-2630 processors, 128 GB of random access memory (RAM), and a 2 TB disk. Python 3.7 was adopted as the glue language to call OpenSfM, mvs-texturing, etc., to obtain the orthophoto. For this study, the total processing time was 3018 s.
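The role of Python as a glue language can be illustrated with a small driver for the SfM and dense reconstruction stages. This is only a sketch, not the authors' script: the step names are the sub-commands of the OpenSfM command-line tool, while the executable and dataset paths are hypothetical, and the meshing, texturing, and georeferencing stages are not shown. By default the sketch only prints the commands instead of running them.

```python
import subprocess

# Sub-commands of the OpenSfM command-line tool, in processing order.
OPENSFM_STEPS = [
    "extract_metadata",
    "detect_features",
    "match_features",
    "create_tracks",
    "reconstruct",
    "undistort",
    "compute_depthmaps",
]


def build_commands(opensfm_bin, dataset_dir):
    """Return one argument list per OpenSfM step for the given dataset."""
    return [[str(opensfm_bin), step, str(dataset_dir)] for step in OPENSFM_STEPS]


def run_pipeline(opensfm_bin, dataset_dir, dry_run=True):
    """Print each command and, unless dry_run is set, execute it in order."""
    for command in build_commands(opensfm_bin, dataset_dir):
        print(" ".join(command))
        if not dry_run:
            # check=True stops the pipeline as soon as one step fails.
            subprocess.run(command, check=True)


# Hypothetical paths; adjust to the local OpenSfM checkout and image folder.
run_pipeline("OpenSfM/bin/opensfm", "data/cotton_field")
```

Keeping the step list as data makes it easy to rerun the pipeline from an intermediate stage when a later step fails.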

3.2. Image Classification by SVM

After obtaining the stitched orthophoto of the cotton field, the cotton in the image needs to be discriminated from the background. In this study, the SVM method was selected to perform the classification. The radial basis function was used as the kernel, with a gamma of 0.25 and a penalty value of 100. Five classes (cotton, soil, dark shadow, plastic, and unclassified) were distinguished, and the training samples were selected manually using the remote sensing visualization and analysis software ENVI 5.2 (https://www.harrisgeospatial.com). The orthophoto was then classified using these samples. Finally, isolated pixels were removed from the classification image using rules with 8 neighboring pixels and a minimum object size of 3.
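The isolated-pixel rule can be illustrated with a small connected-component filter. The sketch below is our own pure-Python illustration, not the ENVI implementation: it treats pixels as connected when they touch in any of the 8 neighboring positions and clears every group with fewer than 3 pixels. The function name and the toy mask are hypothetical.

```python
from collections import deque


def remove_small_objects(mask, min_size=3):
    """Clear 8-connected groups of True cells that contain fewer than min_size cells."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    cleaned = [row[:] for row in mask]
    neighbours = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            component = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                cr, cc = queue.popleft()
                component.append((cr, cc))
                for dr, dc in neighbours:
                    nr, nc = cr + dr, cc + dc
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Components below the size threshold are treated as noise.
            if len(component) < min_size:
                for pr, pc in component:
                    cleaned[pr][pc] = False
    return cleaned


# Toy cotton mask: two single pixels and one diagonal-connected group of three.
cotton = [
    [1, 0, 0, 0, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 1, 0, 0],
    [1, 0, 0, 0, 0],
]
mask = [[bool(v) for v in row] for row in cotton]
cleaned = remove_small_objects(mask, min_size=3)
for row in cleaned:
    print("".join("#" if v else "." for v in row))
```

In the toy mask, the two single pixels are removed, while the three-pixel group survives because diagonal contact counts as a connection under 8-connectivity.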

3.3. Identification of Cotton Plants

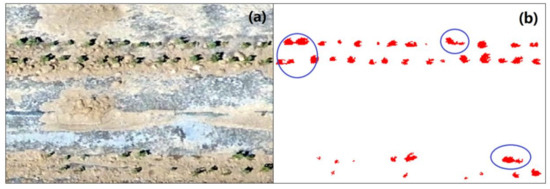

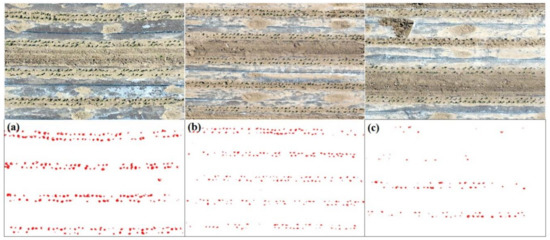

To calculate the germination rate correctly, the cotton plants must be identified and extracted correctly from the classification image. Each isolated polygon composed of cotton pixels is considered to be one cotton plant, so a problem in counting the plants is the overlap of leaves, which lowers the estimated germination rate. Overlapping leaves are common in the observations, because cotton plants differ in growth status even when they have the same sowing date. Figure 2, a zoomed-in subset of Figure 4, illustrates this problem. Figure 2a is the true-color image and Figure 2b is the corresponding classification result. As shown in Figure 2a, the cotton plants can be clearly seen in the true-color image at a resolution of 5.2 mm, and the classification results are reasonable, as most of the cotton plants were correctly distinguished from the background objects. However, as the blue circles in Figure 2b show, some of the cotton plants are contiguous, so that a group of adjacent plants is recognized as one cotton plant; thus, the number of cotton plants is underestimated. Hence, this problem should be fixed before counting the cotton plants.

Figure 2.

A zoomed-in image of the study region, the true color image (a) and classification results of cotton plants (b).

If we consider the shape of a cotton plant to be a circle with radius $r$, then when two cotton plants of radius $r$ are connected (not exactly overlapping), the maximal axis length of the shape composed of these two plants is at most $4r$, and its minimal axis length is at most $2r$. However, in practice, the degree of overlap is not fixed, and it is very difficult to describe it using a fixed formula. This study therefore identifies an overlap between two cotton plants in the classified image when the maximum axial length of a shape exceeds one threshold and its minimum axial length falls below another, both expressed in terms of $r$. Equation (1) describes this further.

In Equation (1), $L_{\max}$ is the maximal axis length of the shape, $L_{\min}$ is the minimal axis length, and $r$ is the mean radius of the cotton plant; in Equation (2), $S$ is the mean area of the cotton plant.

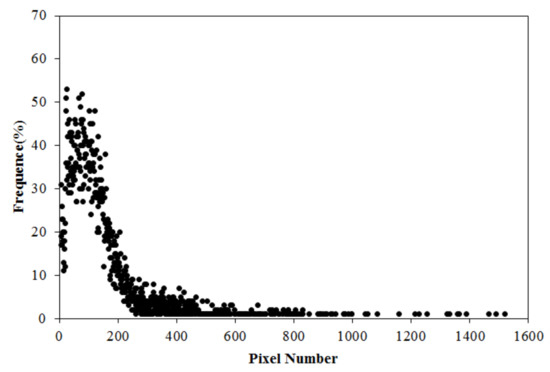

Determining the mean area of the cotton plant is difficult, because the size of the plant varies with growth time, so a fixed value should not be used. Instead, the cotton images from the validation areas shown in Figure 4 were selected. The number of pixels in each cotton plant was counted, and cotton plants with fewer than 3 pixels were removed. Then, the frequency distribution of the number of pixels per cotton plant was plotted, as shown in Figure 3.

Figure 3.

Frequency distribution chart of the cotton plant area.

As shown in Figure 3, the pixel distribution is similar to a gamma distribution: most of the cotton plants had fewer than 200 pixels, and no more than 10% had more than 200 pixels. Considering that most cotton plants should not overlap each other during the emergence stage, the most frequent plant area can be used as the mean area of a cotton plant. Equation (3) expresses this.

In Equation (3), $f_i$ is the frequency of the pixel number, $f_{\max}$ is the maximal frequency of the pixel number, $p_i$ is the number of pixels appearing $f_i$ times, and $n$ is the total number of bins that meet the conditions. $a$ is the area of a pixel and can be calculated from the GSD (ground sampling distance) of the data; in this study, $a$ was set to 1 to simplify the calculation. The mean area $S$ obtained from Equation (3) and Figure 3 is 65.09.
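As a worked example, the mean area of 65.09 can be converted into a mean plant radius under the circular plant model used above. The sketch assumes that the area is expressed in pixels (the pixel area was set to 1) and that the radius follows from the area of a circle; the conversion to millimetres uses the 5.2 mm/pixel GSD reported for the flight. Variable names are illustrative.

```python
import math


def mean_radius_px(mean_area_px):
    """Radius of a circle with the given area (circular plant model)."""
    return math.sqrt(mean_area_px / math.pi)


MEAN_AREA_PX = 65.09   # mean plant area in pixels, as reported in the text
GSD_MM = 5.2           # ground sampling distance in mm/pixel, as reported for the flight

radius_px = mean_radius_px(MEAN_AREA_PX)
radius_mm = radius_px * GSD_MM
print(f"mean radius: {radius_px:.2f} pixels, about {radius_mm:.0f} mm on the ground")
```

Under these assumptions the mean plant is about 4.6 pixels in radius, that is, a little under 5 cm in diameter on the ground.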

Once an overlap of cotton plants had been identified by Equations (1)–(3), the following equation was used to segment and count the overlapping plants:

where $N_{\mathrm{new}}$ is the new number of cotton plants.

3.4. Calculation of Budding Rate

In Xinjiang Province, cotton is sown using sowing machinery, and the sowing rate and row spacing are fixed. Hence, the total number of cotton seeds planted in the field can be calculated from the sowing rate, and the cotton germination rate was calculated by Equation (5):

$$G = \frac{N_o + N_n}{N_s} \tag{5}$$

where $G$ is the monitored germination rate, $N_o$ is the total number of overlapping cotton plants, and $N_n$ is the number of non-overlapping cotton plants. $N_s$ is the number of sown cotton seeds, which can be calculated from the sowing parameters, as described in Equation (6):

$$N_s = \frac{n_r \, l}{v \, t} \tag{6}$$

where $n_r$ is the total number of rows sown, $l$ is the length of one row, $v$ is the speed of the sowing machinery, and $t$ is the time interval of sowing.
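The calculation described in this section can be sketched in a few lines. The sketch assumes that the number of sown seeds is the number of rows times the row length divided by the distance the planter travels between two seeds (speed times sowing interval), and that the germination rate is the number of detected plants divided by the number of sown seeds. All input values below are hypothetical and are not taken from the study.

```python
def seeds_sown(n_rows, row_length_m, planter_speed_m_s, sowing_interval_s):
    """Number of seeds sown, from the planter settings."""
    seed_spacing_m = planter_speed_m_s * sowing_interval_s  # distance between two seeds
    return n_rows * row_length_m / seed_spacing_m


def germination_rate(n_from_overlaps, n_non_overlapping, n_sown):
    """Share of sown seeds detected as plants."""
    return (n_from_overlaps + n_non_overlapping) / n_sown


# Hypothetical sample: 4 rows of 10 m, planter at 1.0 m/s dropping a seed every 0.1 s.
sown = seeds_sown(n_rows=4, row_length_m=10.0, planter_speed_m_s=1.0, sowing_interval_s=0.1)
rate = germination_rate(n_from_overlaps=40, n_non_overlapping=170, n_sown=sown)
print(f"seeds sown: {sown:.0f}, germination rate: {rate:.3f}")
```

Because the planter settings are fixed, the number of sown seeds needs to be computed only once per sample area; only the plant counts change between monitoring flights.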

4. Results

4.1. Results

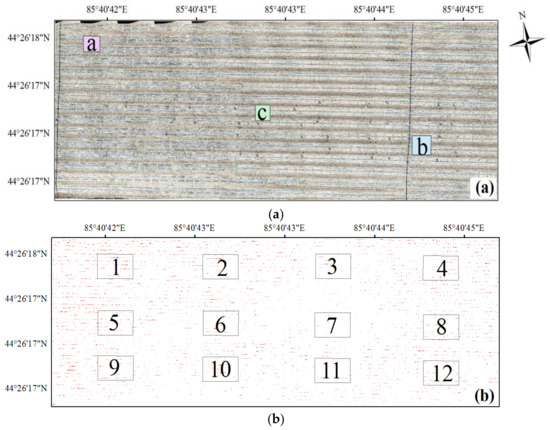

After obtaining the UAV images of the study field, the images were stitched together by the methods presented in Section 3.1 to obtain the orthophoto. Figure 4a shows the stitched orthophoto. The orthophoto was then classified, and the cotton pixels were extracted, as shown in Figure 4b. The dark lines in the true-color image in Figure 4a are irrigation pipelines. Because the cotton planting area was covered with plastic film and the cotton plants were small, the background reflectance is high. In general, as shown in the classification result, the distribution of cotton plants is inhomogeneous: the center and the bottom-left part of the study area show sparse coverage of cotton plants.

Figure 4.

The true-color stitched image (a) and the classification result of the study area (b). Red points in (b) are the identified cotton plants; the numbered rectangles in (b) are the validation samples.

Due to the small size of the cotton plants, it is almost impossible to see the classified cotton pixels in the overall result presented in Figure 4b. Figure 5 therefore shows zoomed-in images of the regions marked by rectangles in Figure 4a. Figure 5c presents a sparse distribution of cotton plants, while Figure 5a,b show a dense distribution. This agrees with Figure 4b: the regions in Figure 5a,b are located in areas with dense cotton plants, whereas the region in Figure 5c is located in the center of Figure 4b, where the distribution of cotton plants is quite sparse. Although the cotton seeds were sown on the same date, they showed different budding rates. This is due to the plastic film covering the field, as discussed in Section 5.2.

Figure 5.

Zoomed-in areas of the mosaic and classification images. The upper images are the true-color mosaic images, and the bottom images are the corresponding classification results; red points are cotton plants. (a–c) are the regions marked in Figure 4a.

4.2. Accuracy Evaluation

The validation data were created manually for each class, and a confusion matrix was calculated to evaluate the accuracy of the two classification methods, as shown in Table 1 and Table 2. The overall accuracy of the SVM method is 96.65%, and the Kappa coefficient (a statistic used to measure inter-rater reliability) [28] is 93.99%. This indicates that the SVM method used in the study achieved reasonable classification accuracy.

Table 1.

Confusion Matrix of the classification (pixel).

Table 2.

Confusion Matrix of the classification (percent).
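For readers who wish to reproduce this kind of evaluation, the overall accuracy and the Kappa coefficient can both be derived from a confusion matrix. The sketch below uses the standard definitions (observed agreement on the diagonal, chance agreement from the row and column totals); the two-class matrix is hypothetical and does not reproduce the values of Table 1.

```python
def overall_accuracy_and_kappa(matrix):
    """Overall accuracy and Cohen's kappa from a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(matrix[i][i] for i in range(len(matrix))) / total
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Hypothetical two-class confusion matrix (rows: classified, columns: reference).
example = [
    [45, 5],
    [10, 40],
]
accuracy, kappa = overall_accuracy_and_kappa(example)
print(f"overall accuracy: {accuracy:.2f}, kappa: {kappa:.2f}")
```

For the hypothetical matrix, the overall accuracy is 0.85 and the Kappa coefficient is 0.70; Kappa is lower because it discounts the agreement that would occur by chance.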

Visual manual interpretation was carefully performed on the validation samples: as shown in Figure 4b, 12 equal areas were selected from the image as validation samples, and the number of cotton plants in each selected row was counted manually. In addition, the number of seeds sown was also counted, and the number of seeds sown for each sample is presented in Table 3. As shown in Table 3, the sowing count varies between samples because, although the samples are equal in size, their locations differ slightly.

Table 3.

The accuracy of cotton plant germination rate monitoring by the method used in this study.

The number of cotton plants identified by the method used in this study is presented in Table 3. The column labeled ‘non split’ means that overlapping cotton plants were not identified and segmented, so that each group of overlapping cotton plants was counted as one cotton plant. The column labeled ‘split’ gives the number of cotton plants after the overlapping plants were split by Equations (1) through (5). The germination rates for manual interpretation, non split, and split are listed in the table.

As shown in Figure 4b, samples 3, 7, and 11 had lower cotton plant density than the other samples, and samples 1 and 5 had higher cotton plant density. The germination rate by manual interpretation shown in Table 3 indicates similar results. The germination rates for samples 3, 7, and 11 were 0.485, 0.470, and 0.509, and the germination rates for samples 1 and 5 were 0.683 and 0.664, respectively. The minimum and maximum germination rates were 0.470 and 0.683 for samples 7 and 1, respectively.

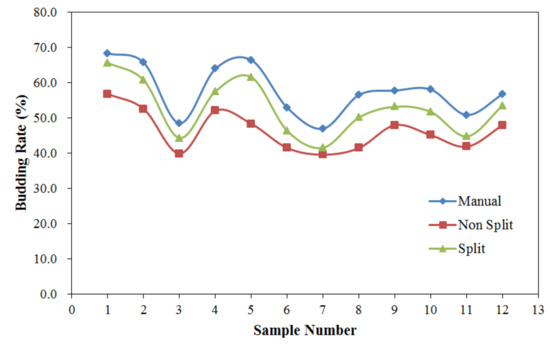

The budding rates of the validation samples obtained from the manual interpretation, non split, and split methods are shown in Figure 6 in order to further compare their differences. As shown in Figure 6, in general, the manual interpretation has the highest accuracy compared with the other methods for all the validation samples. The split method always gives a higher germination rate than the non split method, indicating that overlapping cotton plants were present. Because the growth status of the cotton plants differed between samples, the degree of overlap also varied. The germination rate of the split method for sample 5 was larger than that of the non split method, whereas for samples 7 and 11 the difference was small. Overall, the split method increased the detection accuracy by 6.3 percentage points over the non split method: the mean germination rates for manual interpretation, non split, and split were 57.7%, 46.3%, and 52.6%, respectively. Furthermore, since the data were collected by a camera carried on a UAV, it is more reasonable to evaluate the accuracy of the proposed method using the visual manual interpretation result, rather than the number of seeds sown, as the reference data. On this basis, the method presented in the study achieved an accuracy of 91.13%.

Figure 6.

The budding rates of the validation samples obtained from the three methods.
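The reported mean germination rates can be cross-checked with simple arithmetic. In the sketch below, the gain of the split method is the difference between the split and non split means, and the accuracy relative to manual interpretation is approximated by the ratio of means. How the per-sample values were aggregated is not stated in the text, so the ratio of means is our assumption and is only expected to come close to the reported 91.13%.

```python
# Mean germination rates reported in the text, in percent.
manual, non_split, split = 57.7, 46.3, 52.6

gain_points = split - non_split        # improvement from splitting overlapping plants
ratio_split = split / manual           # split count relative to manual interpretation
ratio_non_split = non_split / manual   # non split count relative to manual interpretation

print(f"gain from splitting: {gain_points:.1f} percentage points")
print(f"split vs manual: {100 * ratio_split:.1f}%")
print(f"non split vs manual: {100 * ratio_non_split:.1f}%")
```

The ratio for the split method comes out near 91%, in line with the reported accuracy, while the non split method recovers only about 80% of the manually counted plants.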

5. Discussion

According to experiments on cotton germination, the germination rate can reach 90% in the laboratory [29]. The mean germination rate in this field study was 56.26%, as presented in Section 4, indicating that the study field has a low germination rate. The factors that usually influence the monitored germination rate are the resolution of the flight images, the days to emergence, the identification algorithm, and weeds in the study field.

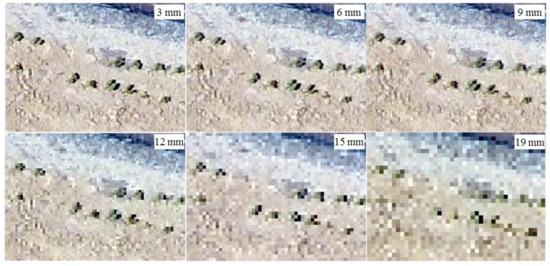

5.1. Influence of Resolution

This study relied heavily on high-resolution images, which play the most important role in recognizing cotton plants correctly; hence, we discuss how the resolution influences the result. As shown in Figure 7, a cotton field image was obtained at a resolution of approximately 3 mm/pixel in the study field, and this image was resampled to resolutions of 6 mm/pixel, 9 mm/pixel, 1.2 cm/pixel, 1.5 cm/pixel, and 1.8 cm/pixel for comparison. There was no large difference between the 3 and 6 mm/pixel images; the cotton can be identified easily by visual inspection. However, as the resolution became coarser, the images became blurrier. When the resolution was coarser than 1.2 cm/pixel, the cotton plants were especially difficult to recognize, and at resolutions of 1.5 and 1.8 cm/pixel, small cotton plants could not be identified in the images. The resolution of the flight images is therefore a key parameter, which influences not only the monitoring accuracy but also the cost. To achieve a higher resolution with a UAV system, the flight altitude must be lower and the area covered by each image smaller, so higher resolution means more flight time and a higher monitoring cost. Considering the recognition accuracy and the cost, we suggest collecting images at a resolution of 1.2 cm/pixel or finer in practice.

Figure 7.

The cotton field images at different resolutions obtained from the UAV.
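The suggested resolution limit can be translated into a flight altitude, because the GSD of a fixed, downward-looking camera grows in proportion to the altitude. The sketch below assumes the same camera as in the reported flight (5.2 mm/pixel at 15 m) and an ideal pinhole model; the function name is illustrative.

```python
def altitude_for_gsd(ref_altitude_m, ref_gsd_mm, target_gsd_mm):
    """Altitude giving the target GSD, assuming GSD scales linearly with altitude."""
    return ref_altitude_m * target_gsd_mm / ref_gsd_mm


REF_ALTITUDE_M = 15.0   # reported flight altitude
REF_GSD_MM = 5.2        # reported GSD at that altitude
TARGET_GSD_MM = 12.0    # coarsest resolution suggested in the text (1.2 cm/pixel)

max_altitude_m = altitude_for_gsd(REF_ALTITUDE_M, REF_GSD_MM, TARGET_GSD_MM)
# Ground area per image grows with the square of the GSD.
area_gain = (TARGET_GSD_MM / REF_GSD_MM) ** 2
print(f"altitude for 1.2 cm/pixel: about {max_altitude_m:.1f} m")
print(f"ground area per image: about {area_gain:.1f} times larger")
```

Under these assumptions, flying at roughly 35 m instead of 15 m would still meet the 1.2 cm/pixel limit while covering about five times more ground per image, which illustrates the trade-off between resolution and cost.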

5.2. Influences of Emergence Date and Weeds

The day of emergence determines the size of the cotton plant when the germination rate is monitored, so the day of the flight is the key parameter for obtaining the best images. If the flight is too early, the cotton plants are too small to be observed easily; if the flight is too late, the opportunity to count the overlapping cotton plants is lost. There is no fixed rule for choosing the best window to monitor the budding rate, because the growth rate of the budding seed depends on different environmental parameters, such as temperature, soil moisture, and type of seed. In this study, the flight took place when the farmer was beginning to replant the cotton, so the date of the flight was appropriate for the image collection.

Weeds in the study field are another source of error that can adversely affect the monitored germination rate. In this study, the processing steps used during sowing in Xinjiang Province made the results unaffected by weeds. First, an herbicide was applied to the field before sowing. Then, the sowing machine laid drip irrigation pipes, the soil was covered with plastic film to raise the ground temperature, and finally the cotton was sown with equal spacing. These steps provide many advantages for this study. The herbicide killed most of the weed seeds, thus inhibiting the growth of weeds, and the plastic film ensured that weeds could not emerge during the initial growth stage of the cotton. As a result, almost no weeds were found in the field.

Although the plastic film provides many benefits, in practice, it also leads to many difficulties in identifying the cotton plants. In the sowing process, the soil was placed on the plastic film to prevent the plastic film from being blown away. As a result, for some of the germinated seeds, if the upper part of the seed was covered by the plastic film, it could not pass through the plastic film after germination. As the red rectangle shows in Figure 6b, due to being covered by plastic film and soil, almost no cotton plants emerged from the surface, which definitely decreased the total monitored cotton budding rate. The effect of the plastic film means that the budding rate monitored by the study is an apparent budding rate, which does not include the part covered by the plastic film. In fact, according to several years of statistical results, in which the plastic film was removed from the field, the actual budding rate of this area is greater than 75%.

Compared to the actual budding rate for this area, the monitored apparent budding rate for the cotton of 56.26% in the study was quite low. However, monitoring the apparent budding rate is the main purpose of this study, and it is useful for the growers. Usually, sprouted cotton seedlings covered by the plastic will not penetrate and emerge from the film, and the temperature between the ground and film is higher than the outside air temperature. If the plastic film covering the cotton plant is not removed, as temperature increases, the cotton plant will be killed by the high temperature. As a result, for locations with a low density of cotton plants, growers not only remove the plastic film but also replant the cotton seeds. Hence, the apparent budding rate presented in this study will provide more useful information for growers in Xinjiang Province.

5.3. Possible Improvements and Comparison with Other Studies

Monitoring of the budding rate of cotton was also conducted by Chen R. et al. [13], and their study achieved an estimation accuracy ranging from 81.0% to 99.5%, with an average of 88.6% in terms of counting germinated cotton plants. In our study, the presented method achieved an accuracy of 91.13%, which is slightly higher than that of the previous study. The possible reasons for the improvement are: (1) the study field was covered by plastic film, which decreased the influence of weeds and reduced background interference; (2) the studies used different cotton plant identification methods. In their study, a maximum likelihood method was used to identify the cotton plants, but its accuracy was not reported. Hence, in this study, we also used the maximum likelihood method to obtain a classification result, and the accuracy validation shows that it achieved an accuracy of 87.85% with a Kappa coefficient of 80.67%. The SVM method used in our study achieved better classification accuracy, which would improve the final accuracy; (3) this study also used a method for decreasing the influence of overlapping cotton plants.

While the study achieved a reasonable monitoring accuracy using an RGB true-color camera, it would benefit from employing a near-infrared (NIR) camera [30,31]. An NIR camera can provide more information on the cotton plants than an RGB camera, and it would greatly highlight the presence of the cotton plants. Hence, an NIR camera could be compared with the RGB camera in further work, in particular to determine whether it can provide more information than the RGB camera on cotton plants under the plastic film. In addition, the method presented in the study to decrease the influence of overlapping cotton plants relies on choosing the correct data collection date. When the cotton plants are large, or the overlaps are heavy, this method may not work well.

6. Conclusions

In this study, a true-color camera carried on a UAV was used to collect images of cotton plants. The collected images were processed to obtain a stitched image of the study area, and the SVM and maximum likelihood classification methods were used to discriminate the cotton plants in the stitched image. The SVM performed better than the maximum likelihood classification method, with an accuracy of 96.65% compared to 87.85%. The method used in the study to count overlapping cotton plants increased the detection accuracy by 6.3% over the method that did not consider overlapping. The accuracy of the proposed method is 91.13%, and the mean germination rates for manual interpretation, non split, and split were 57.7%, 46.3%, and 52.6%, respectively. Higher image resolution comes at a higher monitoring cost, and the resolution used for monitoring the budding rate of cotton should not be coarser than 12 mm/pixel. While the use of plastic film in the field allowed the monitored budding rate to be unaffected by weeds, it also caused a decrease in the apparent monitored cotton budding rate. However, the apparent budding rate is more useful in practice for the grower to replant and manage the cotton field.

Author Contributions

Data curation, L.X., L.C., G.X., Y.W. and T.Y.; Investigation, T.Y.; Methodology, L.X., R.Z. and Y.H.; Validation, G.X. and Y.W.; Writing—original draft, L.X. and L.C.; Writing—review & editing, L.X., R.Z., L.C. and Y.H.

Acknowledgments

This research was funded in part by the National Key R&D Program of China (2016YFD0200701-2), The National Natural Science Foundation of China (31601228), Zhang Ruirui’s Beijing Nova Program (Z181100006218029), and BAAFS Innovation Ability Construction Program 2018 (KJCX20180424). We thank Deying Ma and her team from Xinjiang Agricultural University for supporting the experiment.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Clevers, J.G.P.W.; van Leeuwen, H.J.C. Combined use of optical and microwave remote sensing data for crop growth monitoring. Remote Sens. Environ. 1996, 56, 42–51. [Google Scholar] [CrossRef]

- Zhao, F.; Xu, B.; Yang, X.; Jin, Y.; Li, J.; Xia, L.; Ma, H. Remote sensing estimates of grassland aboveground biomass based on MODIS net primary productivity (NPP): A case study in the Xilingol grassland of Northern China. Remote Sens. 2014, 6, 5368–5386. [Google Scholar] [CrossRef]

- Xia, L.; Zhao, F.; Mao, K.; Yuan, Z.; Zuo, Z.; Xu, T. SPI-based analyses of drought changes over the past 60 years in China’s major crop-growing areas. Remote Sens. 2018, 10, 171. [Google Scholar] [CrossRef]

- Bobbink, R.; Hornung, M.; Roelofs, J.G. The effects of air-borne nitrogen pollutants on species diversity in natural and semi-natural European vegetation. J. Ecol. 1998, 86, 717–738. [Google Scholar] [CrossRef]

- Kwak, G.-H.; Park, N.-W. Impact of texture information on crop classification with machine learning and UAV images. Appl. Sci. 2019, 9, 643. [Google Scholar] [CrossRef]

- Zhou, C.; Ye, H.; Xu, Z.; Hu, J.; Shi, X.; Hua, S.; Yue, J.; Yang, G. Estimating maize-leaf coverage in field conditions by applying a machine learning algorithm to UAV remote sensing images. Appl. Sci. 2019, 9, 2389. [Google Scholar] [CrossRef]

- Carvajal-Ramírez, F.; Agüera-Vega, F.; Martínez-Carricondo, P.J. Effects of image orientation and ground control points distribution on unmanned aerial vehicle photogrammetry projects on a road cut slope. J. Appl. Remote Sens. 2016, 10, 34004. [Google Scholar] [CrossRef]

- Zein, T. Fit-for-purpose land administration: An implementation model for cadastre and land administration systems. In Proceedings of the Land and Poverty Conference 2016: Scaling up Responsible Land Governance, Washington, DC, USA, 14–18 March 2016. [Google Scholar]

- Stöcker, C.; Bennett, R.; Nex, F.; Gerke, M.; Zevenbergen, J. Review of the current state of UAV regulations. Remote Sens. 2017, 9, 459.

- Xia, L.; Zhang, R.R.; Chen, L.P.; Zhao, F.; Jiang, H.J. Stitching of hyper-spectral UAV images based on feature bands selection. IFAC-PapersOnLine 2016, 49, 1–4.

- Pilli, S.K.; Nallathambi, B.; George, S.J.; Diwanji, V. eAGROBOT-A robot for early crop disease detection using image processing. In Proceedings of the IEEE Sponsored 2nd International Conference on Electronics and Communication System (ICECS 2015), Coimbatore, India, 26–27 February 2015.

- Reiser, D.; Sehsah, E.-S.; Bumann, O.; Morhard, J.; Griepentrog, H.W. Development of an autonomous electric robot implement for intra-row weeding in vineyards. Agriculture 2019, 9, 18.

- Chen, R.; Chu, T.; Landivar, J.A.; Yang, C.H.; Maeda, M.M. Monitoring cotton (Gossypium hirsutum L.) germination using ultrahigh-resolution UAS images. Precis. Agric. 2018, 19, 161–177.

- Berni, J.A.J.; Zarco-Tejada, P.J.; Suárez, L.; Elias, F. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Leduc, M.-B.; Knudby, A.J. Mapping wild leek through the forest canopy using a UAV. Remote Sens. 2018, 10, 70.

- Guimarães, T.T.; Veronez, M.R.; Koste, E.C.; Gonzaga, L.; Bordin, F.; Inocencio, L.C.; Larocca, A.P.C.; de Oliveira, M.Z.; Vitti, D.C.; Mauad, F.F. An alternative method of spatial autocorrelation for chlorophyll detection in water bodies using remote sensing. Sustainability 2017, 9, 416.

- Diago, M.P.; Sanz-Garcia, A.; Millan, B.; Blasco, J.; Tardaguila, J. Assessment of flower number per inflorescence in grapevine by image analysis under field conditions. J. Sci. Food Agric. 2014, 94, 1981–1987.

- Dias, P.A.; Tabb, A.; Medeiros, H. Multispecies fruit flower detection using a refined semantic segmentation network. IEEE Robot. Autom. Lett. 2018, 3, 3003–3010.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Ho, T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1–22.

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. 2015, 2015, 258619.

- Friedman, N.; Geiger, D.; Goldszmidt, M. Bayesian network classifiers. Mach. Learn. 1997, 29, 131–163.

- Erbek, F.S.; Özkan, C.; Taberner, M. Comparison of maximum likelihood classification method with supervised artificial neural network algorithms for land use activities. Int. J. Remote Sens. 2004, 25, 1733–1748.

- Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the world from internet photo collections. Int. J. Comput. Vis. 2008, 80, 189–210.

- Shen, S. Accurate multiple view 3D reconstruction using patch-based stereo for large-scale scenes. IEEE Trans. Image Process. 2013, 22, 1901–1914.

- Kazhdan, M.M.; Bolitho, M.; Hoppe, H. Poisson surface reconstruction. In Proceedings of the Fourth Eurographics Symposium on Geometry Processing, Cagliari, Italy, 26–28 June 2006.

- Waechter, M.; Moehrle, N.; Goesele, M. Let there be color! Large-scale texturing of 3D reconstructions. In Computer Vision – ECCV 2014. Lecture Notes in Computer Science 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014.

- Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

- Krzyzanowski, F.C.; Delouche, J.C. Germination of cotton seed in relation to temperature. Rev. Bras. Sementes 2011, 33, 543–548.

- Suo, C.; McGovern, E.; Gilmer, A. Coastal dune vegetation mapping using a multispectral sensor mounted on an UAS. Remote Sens. 2019, 11, 1814.

- He, J.; Zhang, N.; Su, X.; Lu, J.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Estimating leaf area index with a new vegetation index considering the influence of rice panicles. Remote Sens. 2019, 11, 1809.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).