PDDL Planning with Natural Language-Based Scene Understanding for UAV-UGV Cooperation

Abstract

1. Introduction

2. Related Work

2.1. Heterogeneous Multi-Robot Cooperation Planning

2.2. Natural Language-Based Scene Understanding

2.3. Connecting Symbolic Planning and Deep Learning

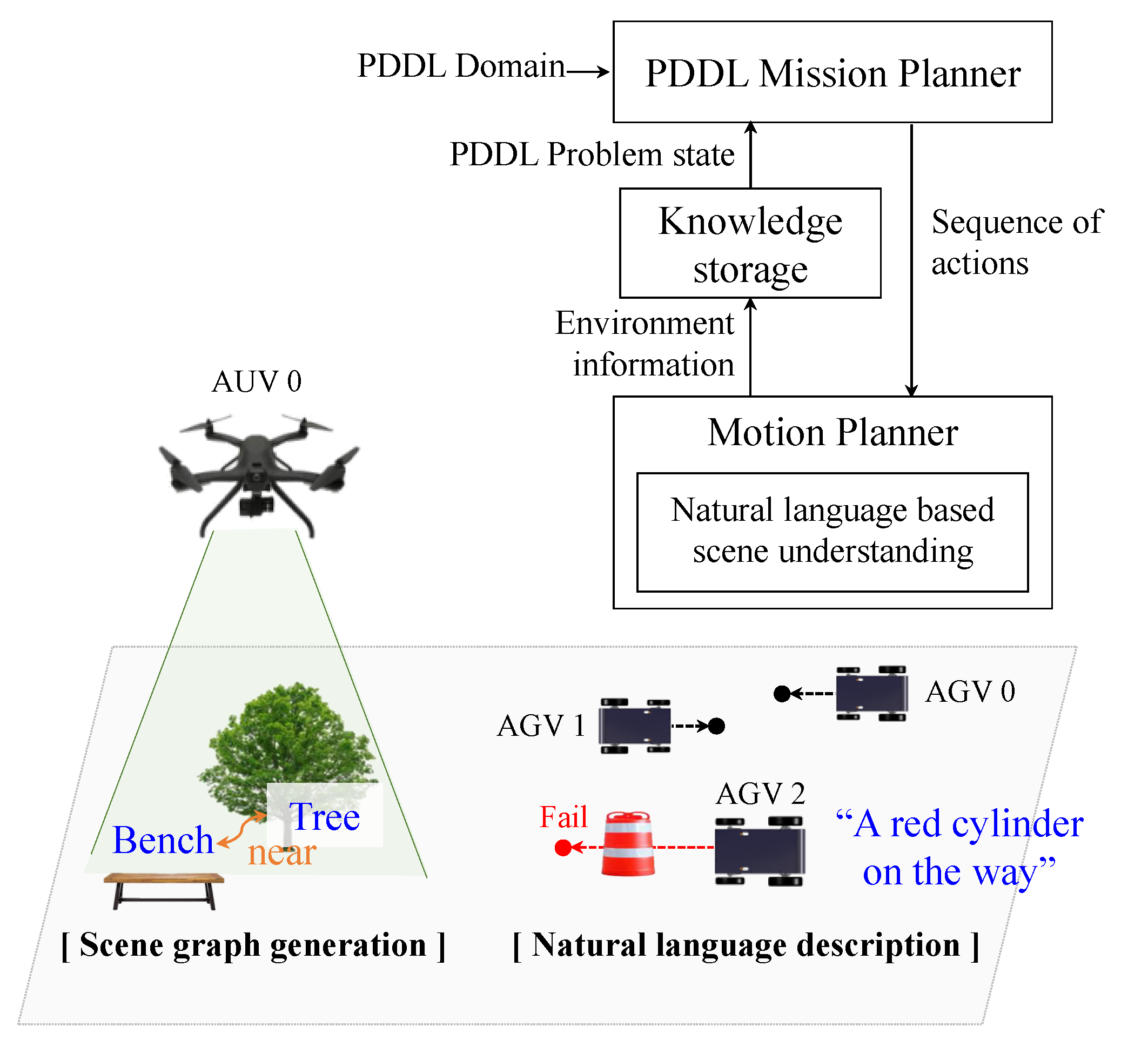

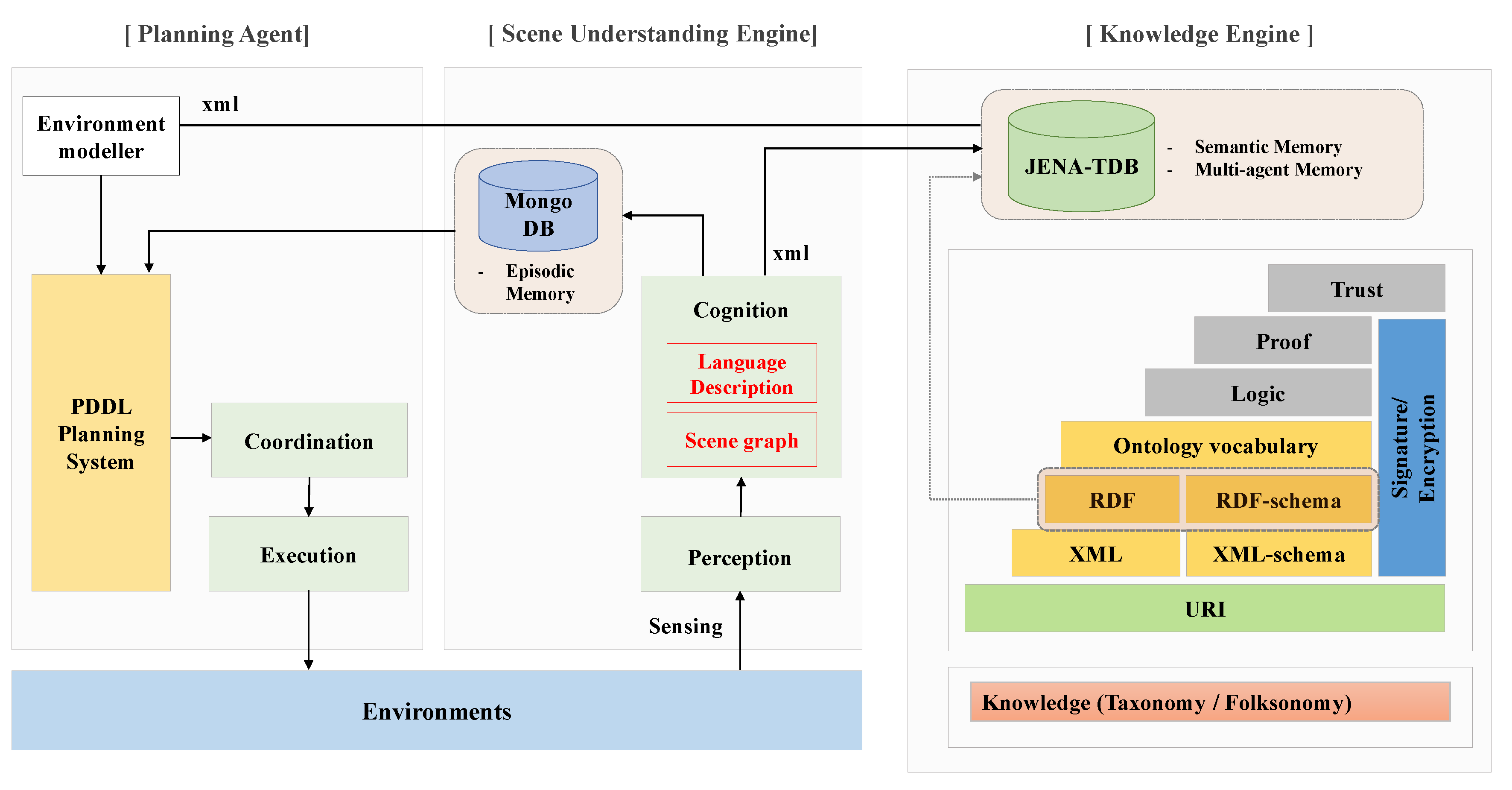

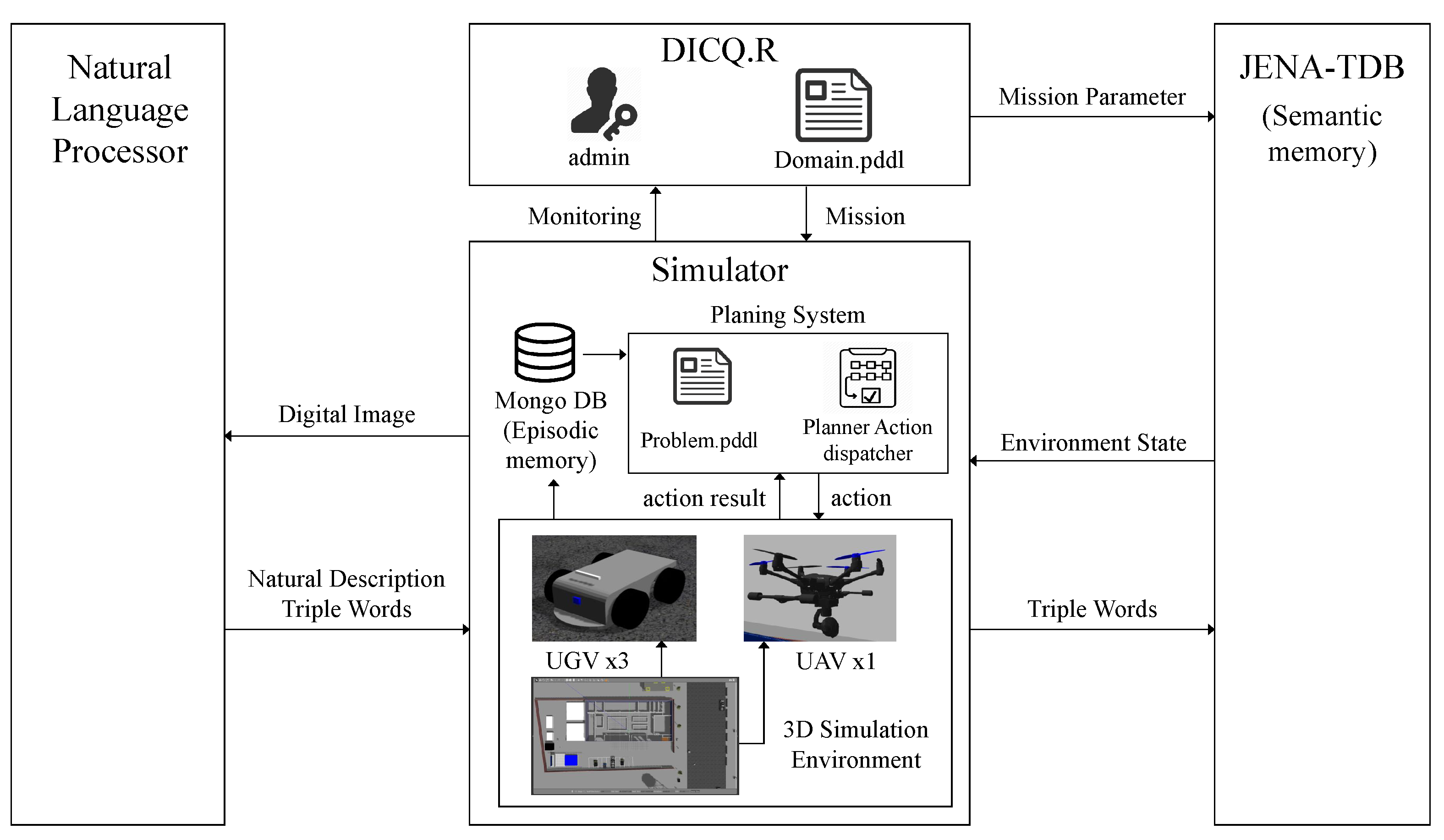

3. Architecture

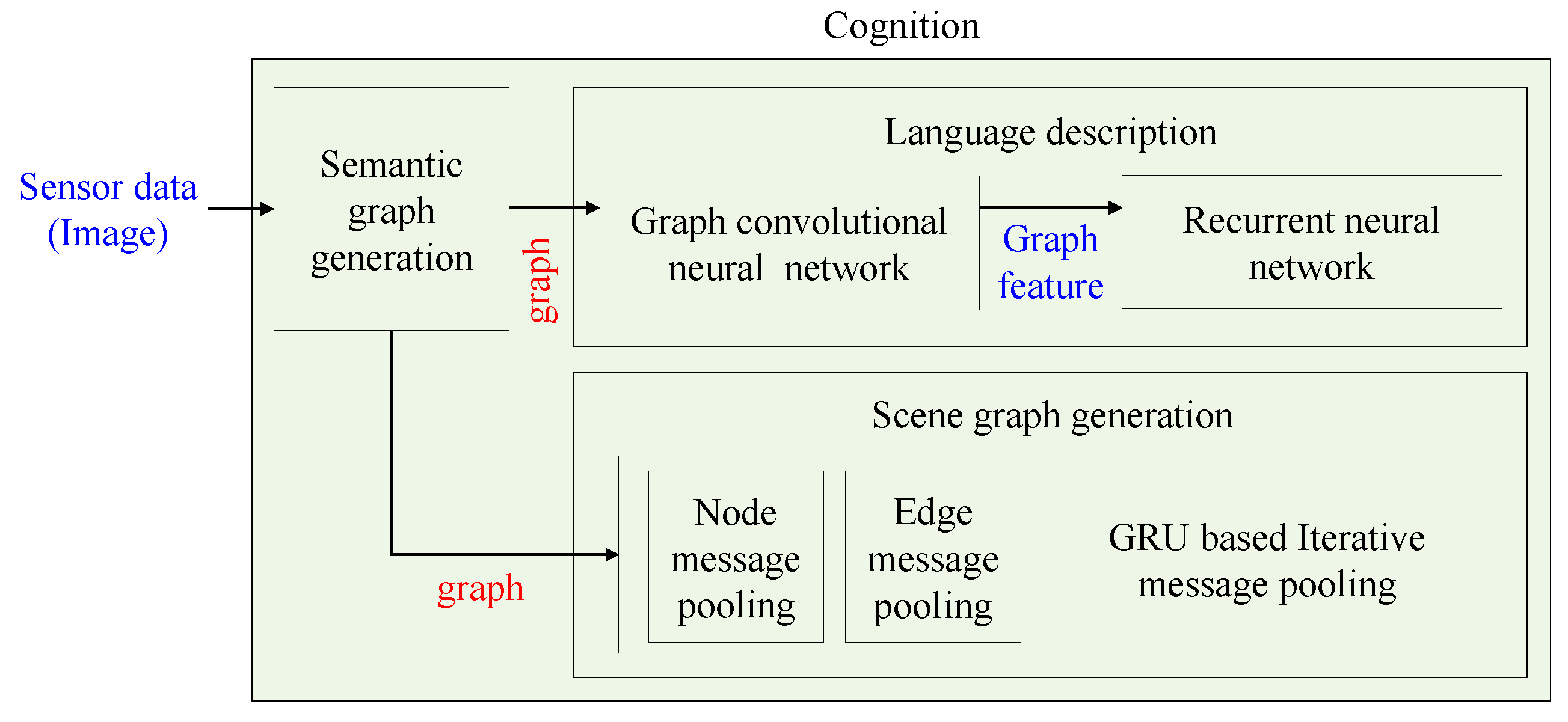

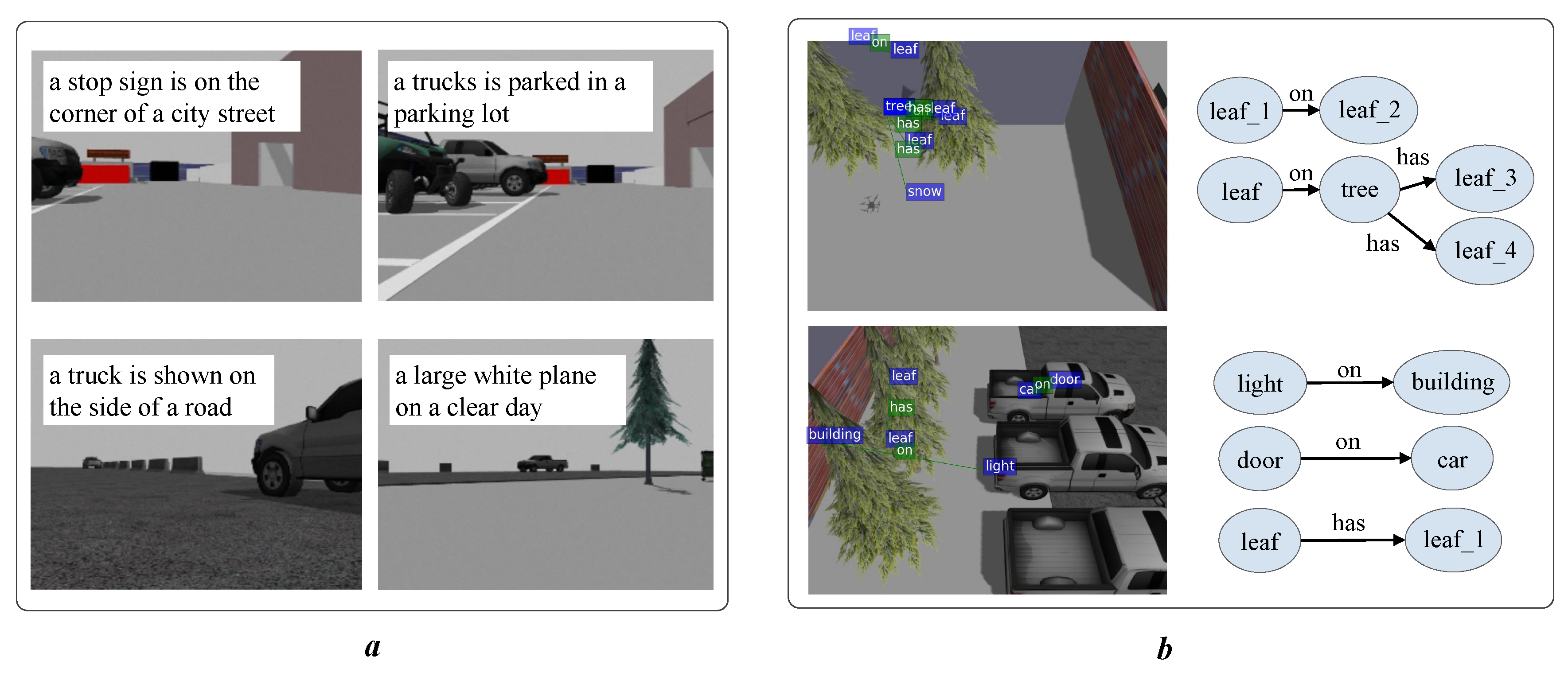

3.1. Natural Language-Based Cognition

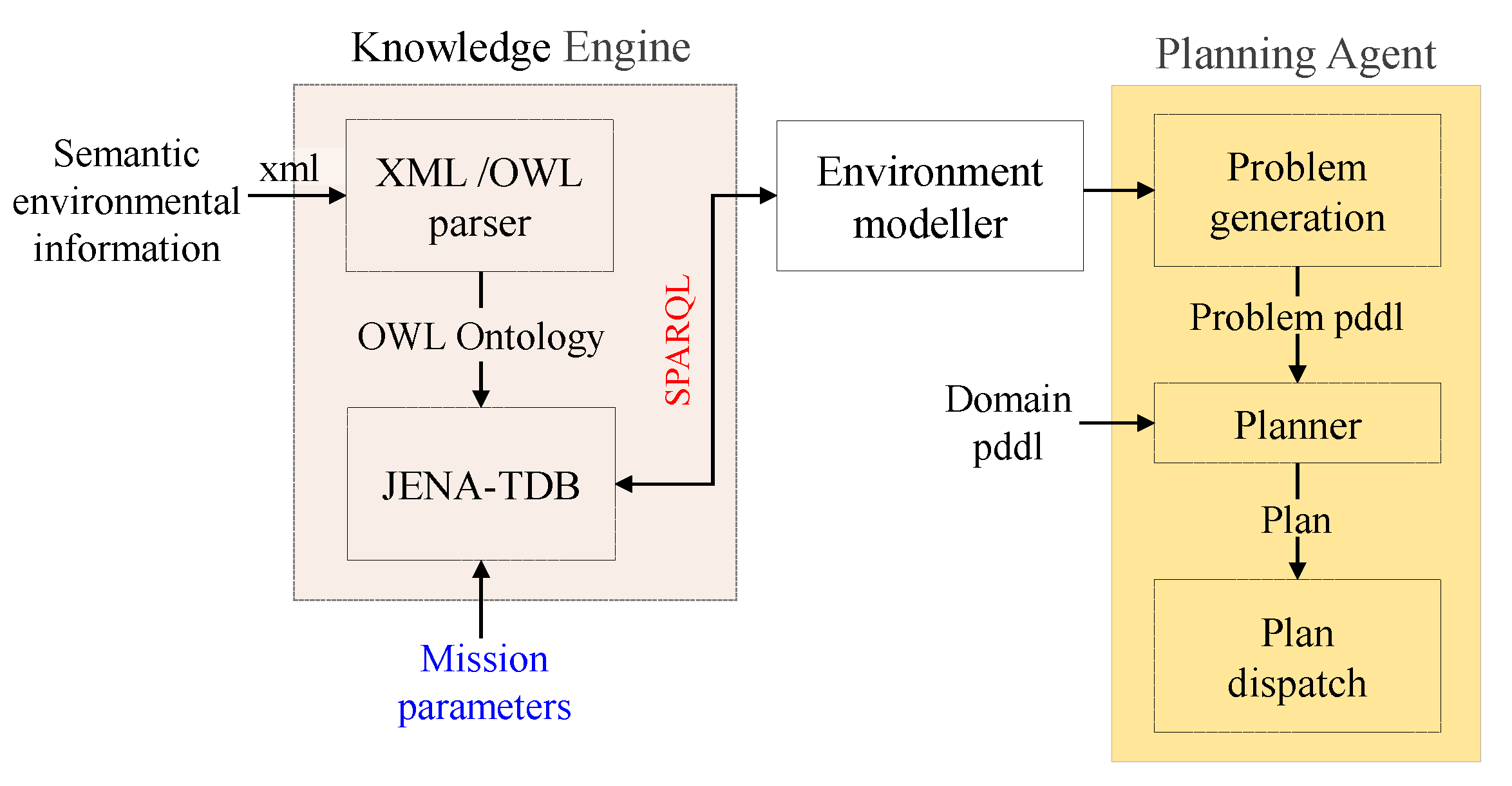

3.2. Knowledge Engine

3.3. PDDL Planning Agent

4. Experiment

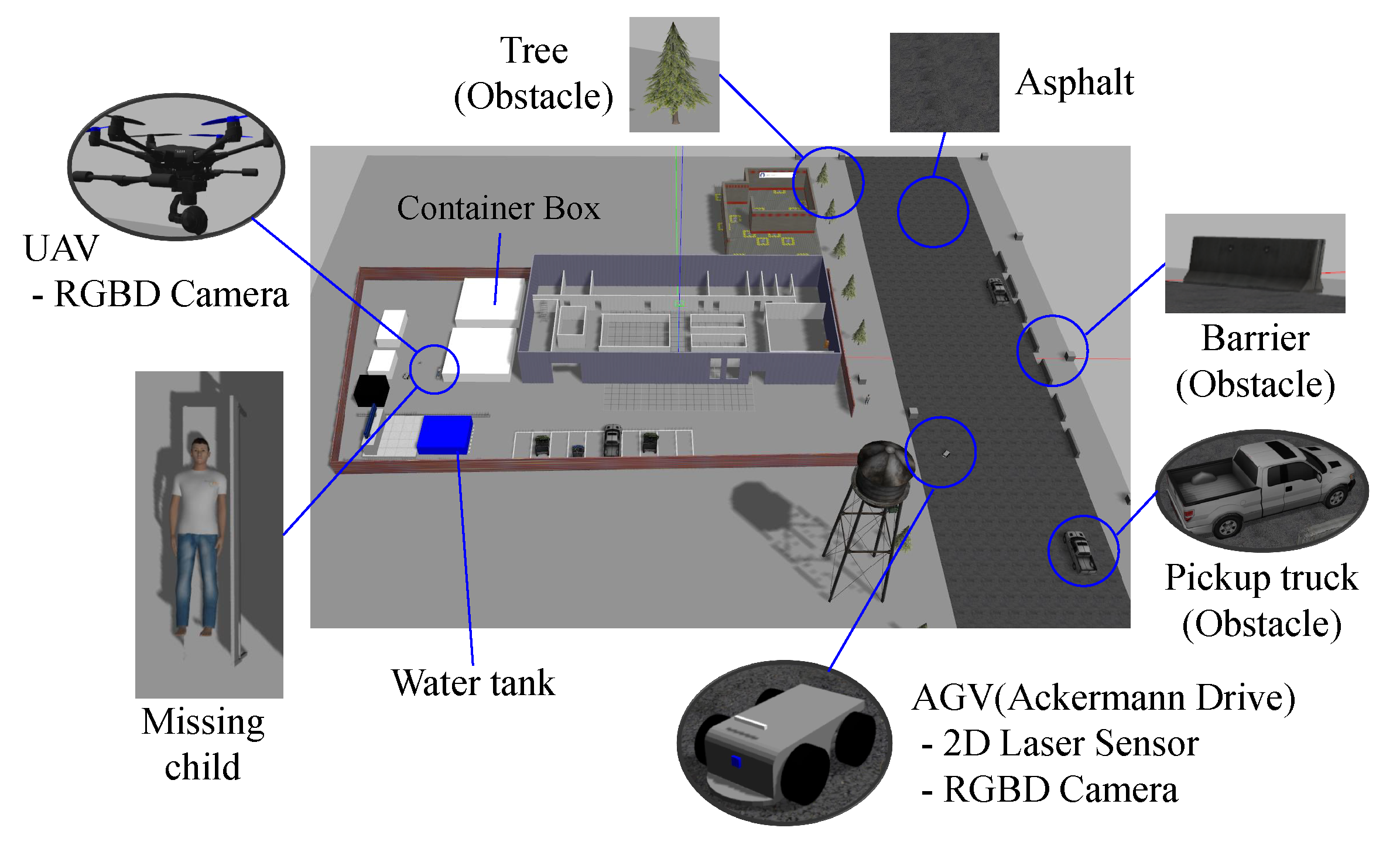

4.1. Experiment Setting

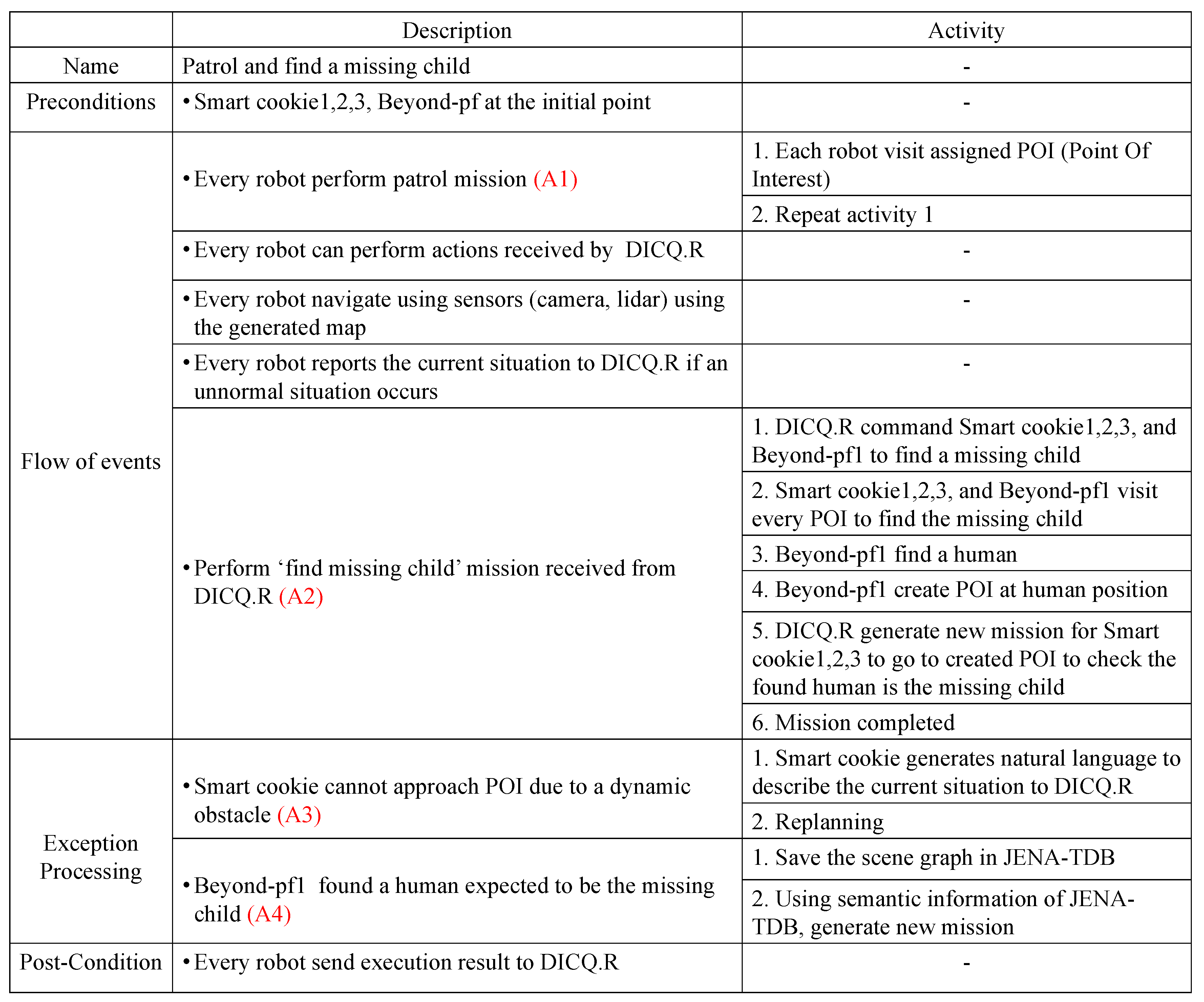

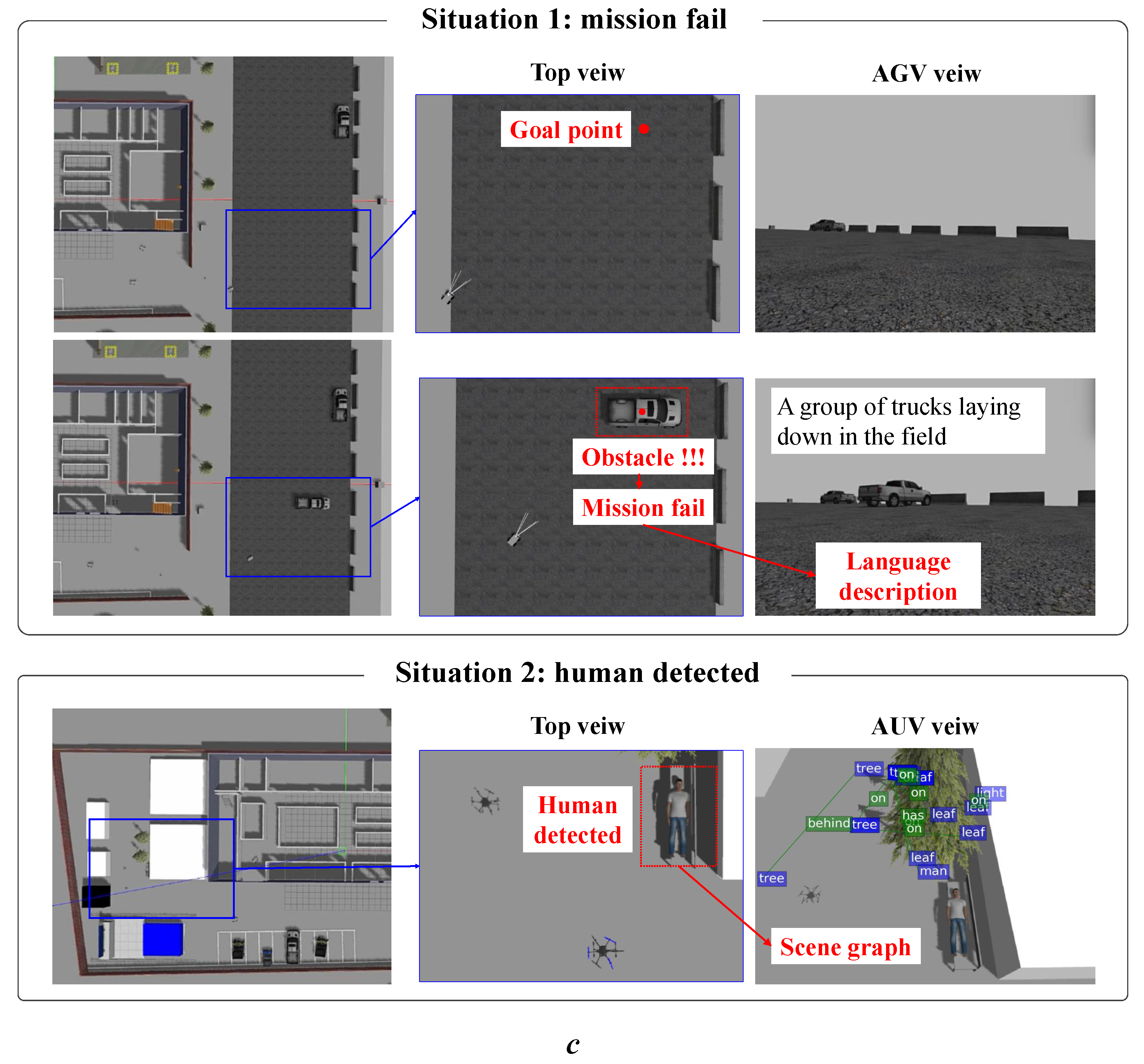

4.2. Scenario

4.3. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PDDL | Planning Domain Definition Language |
| ROS | Robot Operating System |
| SLAM | Simultaneous Localization and Mapping |
| RNN | Recurrent Neural Network |
| GCN | Graph Convolutional Network |
| LSTM | Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| GRU | Gated Recurrent Unit |
| UAV | Unmanned Aerial Vehicle |
| UGV | Unmanned Ground Vehicle |
| VQA | Visual Question Answering |
| RDF | Resource Description Framework |
| SPARQL | SPARQL Protocol and RDF Query Language |
| POI | Point of Interest |

Appendix A. Architecture of the Trained Neural Network for Language Description

| Layer Type | Filters/Units | Output Size | Connected to | Number of Parameters |
|---|---|---|---|---|
| Input(Node) | - | - | - | |
| Input(Edge) | - | - | - | |
| Graph convolution1 | 1024 | | Input(Node), Input(Edge) | 4,262,912 |
| Graph convolution2 | 64 | | Graph convolution1 | 65,536 |
| Fully Connected1 | - | 512 | Graph convolution2 | 2,621,952 |
| Input(Words) | - | 44 | - | - |
| Embedding | 256 | | Input(Words) | 798,976 |
| LSTM1 | 256 | | Embedding | 525,312 |
| LSTM2 | 1000 | 1000 | Fully Connected1, LSTM1 | 7,076,000 |
| Fully Connected2 | - | 3121 | LSTM2 | 3,124,121 |
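
The parameter counts in the table can be cross-checked with the standard counting formulas for embedding, LSTM, and dense layers. The sketch below is only a sanity check under stated assumptions, not the authors' code: the vocabulary size of 3121 is taken from the Fully Connected2 output size, LSTM2 is assumed to receive the concatenation of Fully Connected1 (512) and LSTM1 (256), and Graph convolution2 is assumed to be a bias-free 1024-to-64 weight matrix. The helper names are hypothetical.

```python
# Sanity check of the appendix parameter counts using standard formulas.
# All layer wiring below is an assumption inferred from the table.

def embedding_params(vocab_size, dim):
    """One dim-sized vector per vocabulary entry."""
    return vocab_size * dim

def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (input_dim + units + 1) * units

def dense_params(input_dim, units, bias=True):
    """Weight matrix plus an optional bias vector."""
    return input_dim * units + (units if bias else 0)

VOCAB = 3121  # assumption: equals the Fully Connected2 output size

counts = {
    "Graph convolution2": dense_params(1024, 64, bias=False),  # assumed bias-free
    "Embedding": embedding_params(VOCAB, 256),
    "LSTM1": lstm_params(256, 256),
    "LSTM2": lstm_params(512 + 256, 1000),  # assumed concatenated input
    "Fully Connected2": dense_params(1000, VOCAB),
}

# Values as reported in the table.
reported = {
    "Graph convolution2": 65_536,
    "Embedding": 798_976,
    "LSTM1": 525_312,
    "LSTM2": 7_076_000,
    "Fully Connected2": 3_124_121,
}

for layer, value in counts.items():
    status = "matches" if value == reported[layer] else "differs from"
    print(f"{layer}: {value:,} {status} the table")
```

Under these assumptions, the five computed counts agree with the table. This supports reading 1024, 64, 256, and 256 as unit counts rather than output sizes.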


| Time | Action | Duration |
|---|---|---|
| 0.000: | (goto_point_indoor cookie0 POI0 POI0) | [20.000] |
| 0.000: | (goto_point_outdoor cookie1 POI12 POI12) | [20.000] |
| 0.000: | (goto_point_street cookie2 POI2 POI2) | [20.000] |
| 0.000: | (fly beyond0 POI6 POI6) | [20.000] |
| 20.001: | (goto_point_indoor cookie0 POI0 POI1) | [20.000] |
| 20.001: | (goto_point_outdoor cookie1 POI12 POI13) | [20.000] |
| 20.001: | (goto_point_street cookie2 POI2 POI3) | [20.000] |
| 20.001: | (fly beyond0 POI6 POI7) | [20.000] |
| 40.001: | (goto_point_indoor cookie0 POI1 POI10) | [20.000] |
| 40.001: | (goto_point_outdoor cookie1 POI13 POI14) | [20.000] |
| 40.001: | (goto_point_street cookie2 POI3 POI4) | [20.000] |
| 40.001: | (fly beyond0 POI7 POI8) | [20.000] |
| 60.001: | (goto_point_indoor cookie0 POI10 POI11) | [20.000] |
| 60.001: | (goto_point_outdoor cookie1 POI14 POI15) | [20.000] |
| 60.001: | (goto_point_street cookie2 POI4 POI5) | [20.000] |
| 60.001: | (fly beyond0 POI8 POI9) | [20.000] |
| 80.001: | (detect beyond0 POI9 human) | [20.000] |
| 0.000: | (goto_point_outdoor cookie1 POI15 POI16) | [20.000] |
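
Plan rows of this form (start time, parenthesized action, bracketed duration) can be read into a simple data structure for inspection. The following is a minimal sketch, not part of the original system. It assumes each action name is a single token (for example `goto_point_indoor`, written with underscores) and that the first argument of every action is the robot that executes it. The function names `parse_plan`, `makespan`, and `by_robot` are hypothetical, and the sample text reuses a few rows from the plan above.

```python
import re
from collections import defaultdict

# A few rows from the plan, written in "time: (action args) [duration]" form.
PLAN_TEXT = """\
0.000: (goto_point_indoor cookie0 POI0 POI0) [20.000]
0.000: (fly beyond0 POI6 POI6) [20.000]
20.001: (fly beyond0 POI6 POI7) [20.000]
80.001: (detect beyond0 POI9 human) [20.000]
"""

LINE_RE = re.compile(r"^\s*(\d+\.\d+):\s*\(([^)]+)\)\s*\[(\d+\.\d+)\]\s*$")

def parse_plan(text):
    """Turn plan text into a list of step dictionaries; skip unparsable lines."""
    steps = []
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match is None:
            continue
        start, body, duration = match.groups()
        name, *args = body.split()
        steps.append({
            "start": float(start),
            "action": name,
            "args": args,
            "duration": float(duration),
        })
    return steps

def makespan(steps):
    """Latest finishing time over all steps."""
    return max(step["start"] + step["duration"] for step in steps)

def by_robot(steps):
    """Group steps by their first argument (assumed to be the robot name)."""
    grouped = defaultdict(list)
    for step in steps:
        grouped[step["args"][0]].append(step)
    return dict(grouped)

steps = parse_plan(PLAN_TEXT)
for robot, robot_steps in by_robot(steps).items():
    print(robot, [s["action"] for s in robot_steps])
print("makespan:", round(makespan(steps), 3))
```

Grouping by robot makes it easy to see which actions run in parallel: steps that share a start time but belong to different robots are dispatched concurrently.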

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Moon, J.; Lee, B.-H. PDDL Planning with Natural Language-Based Scene Understanding for UAV-UGV Cooperation. Appl. Sci. 2019, 9, 3789. https://doi.org/10.3390/app9183789