Abstract

Spatiotemporal fusion methods provide an effective way to generate data with both high temporal and high spatial resolution for monitoring dynamic changes of the land surface. However, existing fusion methods face two main challenges: monitoring abrupt change events and accurately preserving the spatial details of objects. The Flexible Spatiotemporal DAta Fusion method (FSDAF) can monitor abrupt change events, but its predicted images lack intra-class variability and spatial details. To overcome these limitations, this study proposes a comprehensive and automated fusion method, the Enhanced FSDAF (EFSDAF), and tests it for Landsat–MODIS image fusion. Compared with FSDAF, EFSDAF has the following strengths: (1) it considers the mixed pixels of a Landsat image, so its predicted images have more intra-class variability and spatial details; (2) it adjusts for the differences between Landsat images and MODIS images; and (3) it improves the fusion accuracy in abrupt change areas by introducing a new residual index (RI). Vegetation phenology and flood events were selected to evaluate the performance of EFSDAF, which was compared with the Spatial and Temporal Adaptive Reflectance Fusion Model (STARFM), the Spatial and Temporal Reflectance Unmixing Model (STRUM), and FSDAF. The results show that EFSDAF can monitor both vegetation change (gradual change) and flooding (abrupt change), and that its fused images are the best of the four methods in both visual and quantitative evaluations. More importantly, EFSDAF accurately reproduces the spatial details of objects and is robust. Given these advantages, EFSDAF has great potential for monitoring long-term dynamic changes of the land surface.

1. Introduction

High spatial resolution remote sensing images with frequent observations are important for monitoring dynamic changes of the land surface [1,2,3,4], especially short-term and abrupt change events such as floods and forest fires [5,6]. However, due to technical and budget limitations, existing single satellite sensors cannot simultaneously provide high temporal and high spatial resolution images [2,7,8]. For example, the images acquired by the Landsat, SPOT, and Sentinel 2 satellites have a high spatial resolution (10–30 m) but a long revisit period of 5–30 days (hereinafter called “fine resolution” images). Images from other satellites (e.g., MODIS and VIIRS) have a daily sampling frequency, but their spatial resolution ranges between 250 and 1000 m (hereinafter called “coarse resolution” images). To overcome this constraint, spatiotemporal fusion methods have been developed that synthesize images with high spatial and temporal resolution by fusing coarse resolution images and fine resolution images [9,10]. In the past decade, the synthetic high spatiotemporal resolution images have been widely used in vegetation phenology monitoring [9,11], urban surface temperature mapping [12,13,14], urbanization studies [15], crop yield estimation [16,17], and the monitoring of sudden and short-term change events (e.g., floods) [18].

According to the literature, the existing spatiotemporal fusion methods can be classified into three categories [19,20,21]: weight function-based, unmixing-based, and learning-based. Weight function-based methods estimate the fine resolution images by weighting the similar neighboring information of all input images. The Spatial and Temporal Adaptive Reflectance Fusion Model (STARFM) [7] was the earliest and is the most widely used spatiotemporal fusion method. It blends Landsat and MODIS imagery with a weighting function to synthesize Landsat-like images, but it cannot accurately predict heterogeneous landscapes. The Spatial Temporal Adaptive Algorithm for mapping Reflectance Change (STAARCH) [2] and the Enhanced STARFM (ESTARFM) [8] were then developed to improve the performance of STARFM in disturbance areas and heterogeneous areas, respectively. However, both STAARCH and ESTARFM require two pairs of MODIS and Landsat images as input data, which makes them less suitable for cloudy regions. Unmixing-based methods predict the fine resolution images by unmixing the coarse resolution images based on spectral mixing theory. The Multi-sensor Multi-resolution Technique (MMT) [22] was the first unmixing-based method, and later unmixing-based fusion methods are improvements on MMT. The Spatial Temporal Data Fusion Approach (STDFA) [23] and the Spatial and Temporal Reflectance Unmixing Model (STRUM) [24] are typical examples. The advantage of unmixing-based methods is their high computational efficiency, which makes it possible to generate time series of images with high spatiotemporal resolution. However, these methods unmix only the coarse resolution images and do not consider the mixed pixels of fine resolution images, so their predicted fine resolution images lack intra-class variability and spatial details [19,25]. In addition, most weight function-based and unmixing-based methods assume that the land cover does not change between the base date and the prediction date, and therefore cannot monitor abrupt change events.

Learning-based methods have been proposed in recent years. They employ machine learning algorithms to learn features shared by coarse resolution images and fine resolution images [26], and simulate the fine resolution images based on the structural similarity of the input images. The SParse-representation-based SpatioTemporal reflectance Fusion Model (SPSTFM) [27] was the first learning-based algorithm. It establishes a correspondence between the fine resolution and coarse resolution images through dictionary pair learning to generate the predicted fine resolution images. Following SPSTFM, Song [28] developed another dictionary-based algorithm that requires only one pair of coarse resolution and fine resolution images. One important improvement of the learning-based methods is that they can predict land cover change events. Although many learning-based fusion methods have recently been proposed, such as Error-Bound-regularized SParse coding (EBSPTM) [29], Extreme Learning Machine (ELM) [30], and Hybrid Color Mapping (HCM) [31], these methods are relatively new and have not been widely used [32]. In addition, learning-based methods consider only the statistical relationships between the fine resolution images and coarse resolution images rather than any physical properties of remote sensing signals [28], so their predicted fine resolution images cannot exactly preserve the spatial details and shapes of objects.

Previous literature shows that existing fusion methods need improvement in two main respects: monitoring abrupt change events and accurately capturing the spatial details of the land surface. To address these problems, hybrid methods have been proposed in recent years, and the Flexible Spatiotemporal DAta Fusion method (FSDAF) [32] is a typical example. FSDAF is based on spectral unmixing analysis and a thin plate spline (TPS) interpolator, combined with a weighting function, to predict fine resolution images. Because the information on land cover change can be captured by applying a TPS interpolator to the MODIS image at the prediction date, FSDAF can monitor abrupt change events and has been widely used recently. However, FSDAF does not consider mixed pixels in Landsat images, and its predicted fine resolution images lack intra-class variability and spatial details. Specifically, FSDAF has the following three limitations: (1) it directly performs a hard classification of the Landsat image, so its predicted Landsat-like images lack intra-class variability and spatial details; (2) it directly assigns the temporal changes of coarse resolution images to fine resolution images without considering the differences between Landsat and MODIS images; and (3) it introduces a homogeneity index (HI), derived from the classification map at the base date, to guide the residual distribution. The HI is not suitable for guiding the residual distribution when there are land cover changes or misclassifications [33]. In addition, FSDAF requires several input parameters to be set before it is run, which makes it more complicated to operate.

To solve the above limitations of the FSDAF method, this study developed a comprehensive and automated fusion method, an enhanced FSDAF (EFSDAF), and tested it by fusing the Landsat images and MODIS images. Compared with FSDAF, EFSDAF has the following strengths: (1) it considers the mixed pixels in Landsat images and uses a globally representative spectral linear mixture model (SVD) of the Landsat pixels for spectral unmixing; (2) it adjusts the differences between Landsat images and MODIS images using a linear model; and (3) it proposes a new residual index (RI) to guide the residual distribution based on the interpolator results of MODIS images at the base date and prediction date. In addition, EFSDAF is an automated spatiotemporal fusion method that does not require additional input parameters compared to FSDAF. In this study, we tested the EFSDAF in the areas of vegetation phenology and flood events and compared it with three other popular fusion methods: STARFM, STRUM, and FSDAF.

2. Method

2.1. Definitions and Notations

Before introducing the details of EFSDAF, some important definitions and notations are given here for convenience and clarity.

The notation distinguishes two dates, the base date and the prediction date. Each coarse resolution pixel contains a fixed number of fine resolution pixels. The index m denotes the m-th endmember within one coarse resolution pixel or one fine resolution pixel. Coarse resolution pixels are indexed by i, and the fine resolution pixels within the i-th coarse pixel are indexed by j; each pixel has its own coordinate index. The coarse resolution images and the fine resolution images are each observed at the base date and at the prediction date, and b is the number of bands of both. Abundances are defined for the fine resolution pixels and for the coarse resolution pixels at both dates.

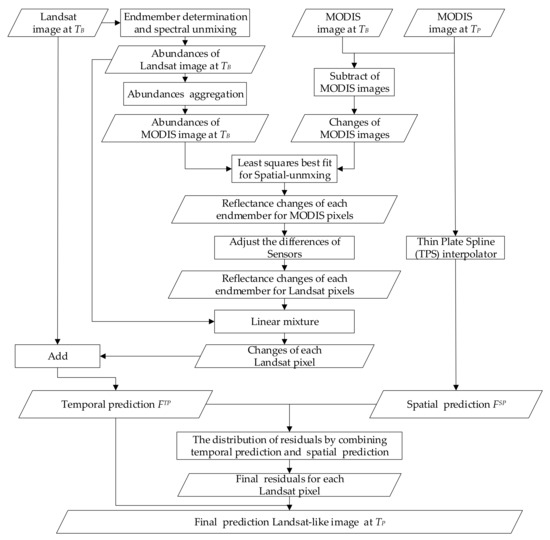

2.2. Theoretical Basis of EFSDAF

Like FSDAF, EFSDAF takes three input images: a MODIS image at the base date, a Landsat image at the base date, and a MODIS image at the prediction date. The output of EFSDAF is a predicted fine resolution (Landsat-like) image at the prediction date. Unlike FSDAF, EFSDAF allows more than one land cover type within a pixel in both the Landsat images (30 m) and the MODIS images (500 m). Therefore, endmember determination and abundance extraction for the Landsat image at the base date are carried out first. EFSDAF includes five main steps: (1) endmember determination and spectral unmixing for the Landsat image at the base date; (2) temporal prediction, assuming no land cover change from the base date to the prediction date; (3) spatial prediction, accounting for land cover change at the prediction date; (4) residual distribution using a new residual index (RI); and (5) final prediction of a Landsat-like image using neighborhood information in a sliding window. The workflow of EFSDAF is outlined by the flowchart in Figure 1. Detailed descriptions of each step are given below. The code of EFSDAF can be found at https://github.com/max19951001/EFSDAF.git.

Figure 1.

Flowchart of the proposed Enhanced Flexible Spatiotemporal DAta Fusion (EFSDAF) method.

2.2.1. Endmember Determination and Spectral Unmixing for Landsat Images at the Base Date

Endmember extraction and spectral unmixing of the Landsat image at the base date form the first and key step of EFSDAF, because the land surface is heterogeneous and a Landsat pixel can contain more than one land cover type. In this study, the endmembers were taken from a globally representative spectral linear mixture model for Landsat images (the SVD model) shared by Small [34,35,36], which comprises three endmember types: substrate (S), vegetation (V), and dark surface (D) [20]. The fully constrained least squares (FCLS) method [37], a linear method, was applied for spectral unmixing. Each abundance calculated by FCLS lies between 0 and 1, and the abundances of a pixel sum to 1.
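As a minimal sketch of constrained unmixing for a single pixel, the following Python code estimates abundances that are non-negative and sum to 1. It uses projected gradient descent onto the probability simplex as a simple stand-in for the FCLS algorithm of [37], not that algorithm itself, and the three six-band endmember spectra are hypothetical values rather than the SVD endmembers of [34,35,36].

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {a : a >= 0, sum(a) = 1}."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for i, u_i in enumerate(u, start=1):
        cumulative += u_i
        t = (cumulative - 1.0) / i
        if u_i - t > 0:
            theta = t  # keep the threshold of the last index that passes
    return [max(x - theta, 0.0) for x in v]


def unmix_pixel(pixel, endmembers, iterations=5000):
    """Estimate abundances of one pixel under sum-to-one and non-negativity.

    pixel: list of band reflectances.
    endmembers: list of endmember spectra, each a list of band reflectances.
    """
    n_end, n_band = len(endmembers), len(pixel)
    # Step 1 / trace(E^T E), which never exceeds 1 / (largest eigenvalue).
    step = 1.0 / sum(e * e for spectrum in endmembers for e in spectrum)
    a = [1.0 / n_end] * n_end
    for _ in range(iterations):
        model = [sum(a[m] * endmembers[m][b] for m in range(n_end))
                 for b in range(n_band)]
        misfit = [model[b] - pixel[b] for b in range(n_band)]
        grad = [sum(endmembers[m][b] * misfit[b] for b in range(n_band))
                for m in range(n_end)]
        a = project_to_simplex([a[m] - step * grad[m] for m in range(n_end)])
    return a


# Hypothetical substrate, vegetation, and dark spectra (six bands each).
ENDMEMBERS = [
    [0.20, 0.30, 0.35, 0.40, 0.50, 0.45],
    [0.05, 0.08, 0.05, 0.50, 0.25, 0.12],
    [0.02, 0.02, 0.02, 0.02, 0.01, 0.01],
]
true_abundances = [0.5, 0.3, 0.2]
mixed = [sum(true_abundances[m] * ENDMEMBERS[m][b] for m in range(3))
         for b in range(6)]
estimated = unmix_pixel(mixed, ENDMEMBERS)
```

On this noise-free synthetic pixel the estimate should recover the abundances used to build it; real imagery would leave a non-zero misfit.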

2.2.2. Temporal Prediction for No Land Cover Change from the Base Date to the Prediction Date

- Temporal changes of each endmember at the MODIS pixels

The temporal prediction of EFSDAF assumes no land cover change from the base date to the prediction date. In other words, the endmembers and abundances of Landsat pixels do not change between the two dates. According to the linear mixture model [38], the value of a Landsat pixel is a linear mixture of endmember values weighted by their abundances. Hence, the values of Landsat pixels at the base date and the prediction date can be expressed as:

In Equations (1) and (2), the sum runs over the endmembers of one Landsat pixel, m indexes the m-th endmember, the endmember reflectance values are defined for all Landsat image bands at the base date and the prediction date, respectively, and the last term is the system error. Under the above assumption, the abundances of Landsat pixels are identical at the two dates and the system error is constant. From Equations (1) and (2), we have:

In Equation (3), the left-hand side denotes the temporal changes of the Landsat images, and the right-hand side contains the reflectance changes of each endmember for all Landsat image bands. If the reflectance changes of the endmembers are known, the temporal changes of the Landsat images can be calculated by the linear mixture model. Therefore, the key is to solve for the endmember reflectance changes.
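The linear mixture relations described above can be sketched in assumed notation as follows. The symbols are illustrative and need not match the paper's own: $F_1, F_2$ are the Landsat values at the base and prediction dates, $a_m$ is the abundance of endmember $m$, $R_{1,m}, R_{2,m}$ are the endmember reflectances at the two dates, $M$ is the number of endmembers, and $\xi$ is the system error.

```latex
\begin{aligned}
F_1(x_{ij}, y_{ij}, b) &= \sum_{m=1}^{M} a_m(x_{ij}, y_{ij})\, R_{1,m}(b) + \xi, \\
F_2(x_{ij}, y_{ij}, b) &= \sum_{m=1}^{M} a_m(x_{ij}, y_{ij})\, R_{2,m}(b) + \xi, \\
\Delta F(x_{ij}, y_{ij}, b) &= F_2 - F_1
  = \sum_{m=1}^{M} a_m(x_{ij}, y_{ij})\, \Delta R_m(b),
  \qquad \Delta R_m(b) = R_{2,m}(b) - R_{1,m}(b).
\end{aligned}
```

Subtracting the first line from the second cancels the constant system error, and because the abundances are the same at both dates, only the endmember reflectance changes remain.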

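Similar to the temporal changes of Landsat images, the temporal changes of MODIS images from the base date to the prediction date can be expressed as:

In Equation (4), the left-hand side denotes the temporal changes of the MODIS images, and the unknowns are the reflectance changes of each endmember for all MODIS image bands. The temporal changes of the MODIS images can be calculated directly from the MODIS images at the two dates. Because no land cover change is assumed between the two dates, the types and spatial distributions of the endmembers should be the same for Landsat and MODIS images in the same regions. Hence, the abundances of a MODIS pixel can be aggregated from the abundances of its Landsat pixels with:

In Equation (5), the abundance of each endmember in a MODIS pixel is calculated by averaging that endmember's abundances over all Landsat pixels in the MODIS pixel. In FSDAF, by contrast, the corresponding quantity is the ratio of the number of Landsat pixels of a given class to the number of Landsat pixels in the MODIS pixel. Although the MODIS temporal changes and abundances in Equations (4) and (5) are known, Equation (4) cannot be solved for a single MODIS pixel because it contains one unknown reflectance change per endmember.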
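The aggregation step can be illustrated with a short Python sketch that averages the endmember abundances of the fine pixels inside each coarse pixel. The function name, the nested-list layout, and the toy 2 × 2 grid are assumptions made for illustration, and the grid size is assumed to be an exact multiple of the scale factor.

```python
def aggregate_abundances(fine_abundances, scale):
    """Average fine-pixel abundances inside each scale x scale coarse pixel.

    fine_abundances: 2D grid (list of rows); each cell holds a list with one
    abundance per endmember. Grid dimensions must be multiples of `scale`.
    Returns a coarse 2D grid of averaged abundance lists.
    """
    rows, cols = len(fine_abundances), len(fine_abundances[0])
    n_end = len(fine_abundances[0][0])
    coarse = []
    for r0 in range(0, rows, scale):
        coarse_row = []
        for c0 in range(0, cols, scale):
            block = [fine_abundances[r][c]
                     for r in range(r0, r0 + scale)
                     for c in range(c0, c0 + scale)]
            coarse_row.append(
                [sum(cell[m] for cell in block) / len(block)
                 for m in range(n_end)])
        coarse.append(coarse_row)
    return coarse


# Toy example: four fine pixels with two endmembers form one coarse pixel.
fine = [
    [[1.0, 0.0], [0.5, 0.5]],
    [[0.0, 1.0], [0.5, 0.5]],
]
coarse = aggregate_abundances(fine, scale=2)
```

Because each fine pixel's abundances sum to 1, the averaged coarse abundances also sum to 1, unlike a hard-classification count, which would lose the mixed pixels.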

In this study, following the first law of geography [39], which states that near things are more related than distant things, the land cover types are assumed to be the same within a small area, so it is reasonable to treat the reflectance changes of each endmember as constant within a small area [20]. FSDAF, in contrast, assumes that the temporal changes of each class are the same among all MODIS pixels, which does not match the actual situation. A sliding window of MODIS pixels is therefore introduced to perform spatial unmixing in this study, and the number of MODIS pixels in the window should be larger than the number of endmembers [40]. The equations [32] are as follows:

The reflectance changes of each endmember can then be calculated as the least squares best fit solution of Equation (6). Selecting the k purest MODIS pixels of each endmember in the sliding window for the least squares fit reduces the errors caused by collinearity [32]. The number of MODIS pixels finally used for the fit should be smaller than the size of the sliding window and larger than the number of endmembers.
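A minimal Python sketch of the window-level least squares fit follows. It solves the normal equations for one band with plain Gaussian elimination. The function names and the synthetic abundances are assumptions, and the selection of the k purest MODIS pixels is omitted for brevity.

```python
def solve_linear_system(matrix, rhs):
    """Solve a small square system by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - tail) / aug[r][r]
    return solution


def endmember_changes(abundances, coarse_changes):
    """Least squares estimate of per-endmember change in one window.

    abundances: one list of endmember abundances per coarse pixel.
    coarse_changes: temporal change of each coarse pixel (single band).
    """
    n_end = len(abundances[0])
    # Normal equations: (A^T A) x = A^T y.
    ata = [[sum(row[i] * row[j] for row in abundances)
            for j in range(n_end)] for i in range(n_end)]
    aty = [sum(row[i] * y for row, y in zip(abundances, coarse_changes))
           for i in range(n_end)]
    return solve_linear_system(ata, aty)


# Synthetic window: five coarse pixels, three endmembers.
window_abundances = [
    [0.8, 0.1, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
    [0.4, 0.4, 0.2],
    [0.3, 0.3, 0.4],
]
true_change = [0.02, 0.10, -0.01]
observed = [sum(a * d for a, d in zip(row, true_change))
            for row in window_abundances]
estimated_change = endmember_changes(window_abundances, observed)
```

The example uses more coarse pixels (five) than endmembers (three), which is the condition the text requires for the system to be solvable.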

- Adjustment of the differences between the Landsat images and MODIS images

Theoretically, the reflectance of a MODIS pixel should match that of the corresponding Landsat pixels. However, because of physical differences between the sensors and the effects of bandwidth, weather conditions, and atmospheric correction, differences between MODIS images and Landsat images are inevitable. As in previous studies [20,41], a linear model is introduced in this study to adjust for these differences.

The two coefficients of the linear model are its slope and intercept. From Equations (7) and (8), the difference between the changes at the Landsat scale and at the MODIS scale can be calculated as Equation (9).

The reflectance changes of each endmember for the Landsat bands can thus be obtained from Equations (5)–(9) in this study. In FSDAF, by contrast, they were calculated by assigning the temporal changes of MODIS images directly to Landsat images without considering the differences between Landsat and MODIS images.
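One plausible reading of the linear adjustment is sketched below in Python. It fits a slope and intercept between MODIS values and the matching Landsat values by ordinary least squares, and then assumes that the same line holds at both dates, so that the intercept cancels and a MODIS-scale change is rescaled by the slope alone. The function name, the sample values, and this interpretation of Equations (7)–(9) are assumptions.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical band values: MODIS pixels and Landsat aggregated to the same grid.
modis = [0.10, 0.20, 0.30, 0.40]
landsat = [1.1 * c + 0.02 for c in modis]
slope, intercept = fit_line(modis, landsat)

# With the same line at both dates, the intercept cancels in the difference.
modis_change = 0.05
landsat_change = slope * modis_change
```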

- Temporal prediction for the fine resolution image at the prediction date

The temporal change of each Landsat pixel is obtained by linearly mixing the endmember reflectance changes with the abundances, as in Equation (3), and the final temporal prediction can be calculated by Equation (10) as follows:

Although the final temporal prediction can be calculated by Equation (10), all of the above calculations assume no land cover change from the base date to the prediction date. Hence, the temporal prediction cannot accurately predict abrupt change events between the two dates. Because this prediction only uses the information of the temporal changes between the base date and the prediction date, we call the result of Section 2.2.2 the temporal prediction.
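The temporal prediction for one fine pixel and one band can be sketched in Python as the base-date value plus the abundance-weighted sum of endmember changes. The function name and the numbers are hypothetical.

```python
def temporal_prediction(base_value, abundances, endmember_changes):
    """Base-date value plus the abundance-weighted endmember changes."""
    change = sum(a * d for a, d in zip(abundances, endmember_changes))
    return base_value + change


# Hypothetical pixel: three endmember abundances and their changes (one band).
predicted = temporal_prediction(0.30, [0.5, 0.3, 0.2], [0.02, 0.10, -0.01])
```

Because the abundances differ from pixel to pixel, two pixels dominated by the same endmember still receive different changes, which is how intra-class variability is retained.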

2.2.3. Spatial Prediction for Land Cover Change at the Prediction Date

- Analysis and calculation of the residual

The temporal prediction from Section 2.2.2 is not accurate when there are land cover changes. Specifically, there is a deviation between the true values and the temporal prediction values at the prediction date. This study introduces a residual R between the true values and the temporal prediction values as follows:

Furthermore, the value of one MODIS pixel is equal to the average of all Landsat pixels within that MODIS pixel plus a system deviation [7]. Hence, the values of MODIS pixels at the base date and the prediction date can be expressed as:

From Equations (11)–(13), we have:

As can be seen from Equations (11)–(14), the calculation and distribution of the residual R is a key step in obtaining the final prediction at the prediction date.
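The residual of one coarse pixel can be sketched in Python as follows. Since a coarse value is the mean of its fine pixels plus a constant deviation, the observed coarse change equals the mean fine change, and the part not explained by the temporal prediction is the residual. The function name and values are hypothetical, and the form follows the residual definition used in FSDAF [32].

```python
def coarse_residual(coarse_base, coarse_pred, fine_base, fine_temporal):
    """Observed coarse change minus the mean change of the temporal prediction.

    fine_base, fine_temporal: values of the fine pixels inside the coarse
    pixel at the base date and from the temporal prediction (one band).
    """
    n = len(fine_base)
    predicted_change = (sum(fine_temporal) - sum(fine_base)) / n
    return (coarse_pred - coarse_base) - predicted_change


# Hypothetical coarse pixel that darkens strongly (e.g., flooding) while the
# temporal prediction expects a slight brightening.
residual = coarse_residual(
    coarse_base=0.30,
    coarse_pred=0.20,
    fine_base=[0.28, 0.30, 0.32, 0.30],
    fine_temporal=[0.29, 0.31, 0.33, 0.31],
)
```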

- Spatial prediction of the MODIS image at the prediction date

As described above, the residual R originates in areas of land cover change. However, information on land cover change can only be obtained from the MODIS image at the prediction date. Therefore, downscaling the MODIS image to the Landsat resolution is the vital step for obtaining information on land cover change at the prediction date. In EFSDAF, thin plate spline (TPS) interpolation is applied to downscale the MODIS image at the prediction date. TPS is a spatial interpolation technique for point data based on spatial dependence [42], and it has been used in spatiotemporal fusion because of its high interpolation accuracy [32,43]. The interpolation result of the MODIS image at the prediction date is the spatial prediction, which is another prediction of the Landsat image at the prediction date. Unlike FSDAF, EFSDAF also interpolates the MODIS image at the base date with TPS in order to calculate the residual index (RI) in Section 2.2.4. Because the spatial information is obtained from MODIS images using the TPS interpolator, we call the result of Section 2.2.3 the spatial prediction.

2.2.4. Residual Distribution by Using a New Residual Index (RI)

From Equation (11), the predicted Landsat-like image is calculated by adding a reasonable residual to the temporal prediction. Therefore, the residual distribution is the key step for the final prediction. In FSDAF, a homogeneity index (HI) derived from the classification map of the Landsat image at the base date was introduced to guide the residual distribution. Because the HI depends only on the classification map at the base date, it becomes unreliable when the land cover type has changed between the base date and the prediction date or when the classification map is wrong [33]. Hence, a new residual index (RI) is proposed in this study to guide the residual distribution, so that more residual is assigned to areas of land cover change. According to Section 2.2.3, the spatial variation can be expressed as:

Equation (15) defines the change in the TPS interpolation results of the MODIS images from the base date to the prediction date; the larger this change, the larger the change in land cover type. In EFSDAF, we assume that changes of land cover type occur in a small area. If the change in one Landsat pixel is greater than the average change, the Landsat pixel is regarded as an area of land cover change. The RI can be calculated as follows:

In Equation (16), an indicator for the k-th Landsat pixel within a sliding window equals 1 when that pixel is identified as a land cover change pixel and 0 otherwise. The RI is the ratio of the number of pixels with an indicator of 1 to the number of pixels in the window. The RI ranges from 0 to 1, and a larger RI indicates that more residual is assigned to the area of land cover change.
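The RI calculation can be sketched in Python under a few stated assumptions: the change is taken as the absolute difference of the two TPS results, the average is taken over the whole image, and the window is truncated at image edges. None of these details is confirmed by the text, and the function name is hypothetical.

```python
def residual_index(tps_base, tps_pred, window):
    """RI per fine pixel: share of changed pixels in a window x window block.

    A pixel counts as changed when its absolute TPS change exceeds the
    image-wide mean change (an assumption of this sketch).
    """
    rows, cols = len(tps_base), len(tps_base[0])
    change = [[abs(tps_pred[r][c] - tps_base[r][c]) for c in range(cols)]
              for r in range(rows)]
    mean_change = sum(map(sum, change)) / (rows * cols)
    changed = [[1 if change[r][c] > mean_change else 0 for c in range(cols)]
               for r in range(rows)]
    half = window // 2
    ri = []
    for r in range(rows):
        ri_row = []
        for c in range(cols):
            cells = [changed[rr][cc]
                     for rr in range(max(0, r - half), min(rows, r + half + 1))
                     for cc in range(max(0, c - half), min(cols, c + half + 1))]
            ri_row.append(sum(cells) / len(cells))
        ri.append(ri_row)
    return ri


# Toy 4 x 4 image: only the two right-hand columns change between dates.
tps_base = [[0.0, 0.0, 0.0, 0.0] for _ in range(4)]
tps_pred = [[0.0, 0.0, 0.2, 0.2] for _ in range(4)]
ri = residual_index(tps_base, tps_pred, window=3)
```

In the toy image the RI rises from 0 on the unchanged side to 1 on the changed side, with intermediate values along the boundary.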

We then use the same weighting function as FSDAF to distribute the final residual. The weighting function is defined as follows:

The weighting function in Equation (17) combines two terms: the residual for the area of land cover change and the residual for the area of no land cover change.

The final residual can be calculated as follows:

In Equation (18), the weight is the normalized weighting function.
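The distribution step can be sketched in Python following the FSDAF convention [32], in which the residual given to each fine pixel is the number of fine pixels times the coarse residual times the normalized weight. The function name and the raw weights are hypothetical; how the raw weights are built from the RI is not reproduced here.

```python
def distribute_residual(coarse_residual, raw_weights):
    """Split one coarse-pixel residual over its fine pixels.

    raw_weights: one non-negative weight per fine pixel (not all zero).
    The weights are normalized to sum to 1, so the mean of the returned
    fine residuals equals the coarse residual.
    """
    n = len(raw_weights)
    total = sum(raw_weights)
    normalized = [w / total for w in raw_weights]
    return [n * coarse_residual * w for w in normalized]


# Hypothetical weights: the last two fine pixels lie in a changed area.
fine_residuals = distribute_residual(-0.11, [0.1, 0.1, 0.4, 0.4])
mean_residual = sum(fine_residuals) / len(fine_residuals)
```

Normalizing the weights conserves the coarse residual while concentrating it on the pixels with larger weights.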

According to Equations (10) and (11), the final changes of the Landsat images between the base date and the prediction date can be obtained from Equation (19):

In Equation (19), the two change terms are the final changes of the Landsat images and the changes of the temporal prediction from the base date to the prediction date, respectively.

2.2.5. Final Prediction of the Landsat-Like Image Using Neighborhood in a Sliding Window

Theoretically, the final prediction of the Landsat-like image at the prediction date can be obtained by the following equation.

However, uncertain errors are inevitable in EFSDAF because of the complexity of the calculation and its pixel-by-pixel nature. In addition, the final prediction shows block effects because the residual is distributed within each MODIS pixel. EFSDAF therefore adopts the same solution as STARFM, which uses neighborhood information in a sliding window to reduce the uncertainties and smooth the final prediction. The final predicted Landsat-like image can be calculated by Equation (21), and detailed information can be found in STARFM [7].

In Equation (21), n is the number of similar pixels for a Landsat pixel in a sliding window, and each similar pixel has its own weight.
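The neighborhood step can be sketched in Python as the base-date value plus a weighted sum of the changes of similar pixels. The inverse-distance weighting used here is an assumption modeled on the FSDAF family; the selection of spectrally similar pixels is taken as given, and the function name and values are hypothetical.

```python
import math


def final_prediction(base_value, target_xy, similar_pixels, window):
    """Base value plus the distance-weighted change of similar pixels.

    similar_pixels: list of ((x, y), change) for pixels already judged
    spectrally similar to the target pixel.
    Returns the prediction and the normalized weights.
    """
    inverse_distances = []
    for (x, y), _ in similar_pixels:
        dist = math.hypot(x - target_xy[0], y - target_xy[1])
        # Relative distance: 1 at the target, growing with spatial distance.
        inverse_distances.append(1.0 / (1.0 + dist / (window / 2.0)))
    total = sum(inverse_distances)
    weights = [v / total for v in inverse_distances]
    change = sum(w * c for w, (_, c) in zip(weights, similar_pixels))
    return base_value + change, weights


# Hypothetical target pixel with two similar pixels (itself and a neighbor).
prediction, weights = final_prediction(
    base_value=0.30,
    target_xy=(0, 0),
    similar_pixels=[((0, 0), 0.04), ((2, 0), 0.02)],
    window=4,
)
```

Averaging over similar pixels smooths the block pattern that a per-coarse-pixel residual would otherwise leave in the prediction.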

3. Testing Experiment

3.1. Study Area and Data

The EFSDAF is proposed to accurately monitor dynamic changes of land surface including gradual change (e.g., vegetation phenology) and abrupt change events (e.g., flood). In this study, vegetation phenology and flood events are selected to evaluate the performance of EFSDAF.

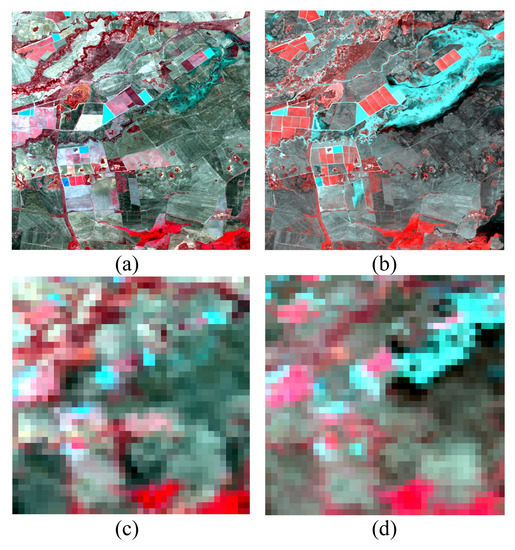

The two datasets were selected from those shared by Emelyanova [10]. They were collected in the Lower Gwydir Catchment in northern New South Wales, Australia (149.2815° E, 29.0855° S, hereinafter called “Gwydir”) and have been widely applied in the evaluation of spatiotemporal fusion methods [10,20,21,32,44]. All images were atmospherically corrected and geographically co-registered, and were resampled to 25 m with the nearest neighbor method by Emelyanova. In EFSDAF, we aggregated the MODIS pixels to 500 m resolution in the process of residual distribution. The two pairs of Landsat and MODIS images of the first dataset (vegetation phenology) were acquired on 5 July 2004 and 6 August 2004 in Gwydir, and the size of the selected images is . The first dataset covers a typical heterogeneous region, and its temporal changes from 5 July to 6 August 2004 were mainly caused by phenological change. The selected Landsat and MODIS images of the first dataset are shown in Figure 2. The second dataset (flood) was acquired on 26 November 2004 and 12 December 2004, and the size of the selected images is 800 × 800 pixels. A large flood occurred between 26 November 2004 and 12 December 2004, so the dataset covers a typical abrupt change area. The selected Landsat and MODIS images of the second dataset are shown in Figure 3.

Figure 2.

Test data with gradual change (vegetation phenology) in Gwydir. (a) Landsat image of 5 July 2004; (b) Landsat image of 6 August 2004; (c) and (d) MODIS images of the same periods as Landsat images. All images use NIR-red-green as R-G-B.

Figure 3.

Test data with abrupt change (flood) in Gwydir. (a) Landsat image of 26 November 2004; (b) Landsat image of 12 December 2004; (c) and (d) MODIS images of the same periods as Landsat images. All images use NIR-red-green as R-G-B.

3.2. Comparison and Evaluation of EFSDAF with STARFM, STRUM, and FSDAF

In this study, we compared EFSDAF with three other fusion methods: STARFM, STRUM, and FSDAF. These three methods were chosen because they have been widely used and because each of them needs only one pair of Landsat and MODIS images at the base date and one MODIS image at the prediction date as input data.

All fused images are qualitatively and quantitatively evaluated by comparing the predicted Landsat-like images with the true images at the prediction date. The qualitative evaluation compares the predicted images with the true images visually, and the differences between the predicted images of the four fusion methods are magnified and highlighted with yellow elliptical circles and black squares. The quantitative evaluation compares the predicted images and true images pixel by pixel using four representative indices: Average Difference (AD), Root Mean Square Error (RMSE), Correlation Coefficient (CC), and Structure Similarity (SSIM) [45]. The evaluation criteria are as follows: the closer AD and RMSE are to 0, the closer the predicted values are to the true values; the closer CC and SSIM are to 1, the more similar the predicted images are to the true images.
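The four indices can be sketched in Python for one band flattened to a list of reflectances. The sign convention of AD (predicted minus true), the single-window global form of SSIM, and the stabilizing constants c1 and c2 (chosen for reflectance in the range 0 to 1) are assumptions of this sketch.

```python
import math


def evaluate(predicted, true, c1=1e-4, c2=9e-4):
    """Return AD, RMSE, CC, and a global (single-window) SSIM for one band."""
    n = len(true)
    mean_p = sum(predicted) / n
    mean_t = sum(true) / n
    var_p = sum((p - mean_p) ** 2 for p in predicted) / n
    var_t = sum((t - mean_t) ** 2 for t in true) / n
    cov = sum((p - mean_p) * (t - mean_t)
              for p, t in zip(predicted, true)) / n
    ad = mean_p - mean_t
    rmse = math.sqrt(sum((p - t) ** 2 for p, t in zip(predicted, true)) / n)
    cc = cov / math.sqrt(var_p * var_t)
    ssim = ((2 * mean_p * mean_t + c1) * (2 * cov + c2)) / (
        (mean_p ** 2 + mean_t ** 2 + c1) * (var_p + var_t + c2))
    return {"AD": ad, "RMSE": rmse, "CC": cc, "SSIM": ssim}


# Hypothetical band: the prediction is the truth plus a constant bias of 0.01.
true_band = [0.1, 0.2, 0.3, 0.4]
predicted_band = [t + 0.01 for t in true_band]
scores = evaluate(predicted_band, true_band)
```

A constant bias leaves CC at 1 while AD and RMSE both equal the bias, which illustrates why the indices are reported together rather than singly.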

4. Results

4.1. Experiment in Gradual Change Area (Vegetation Phenology)

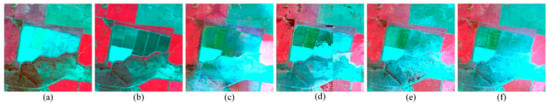

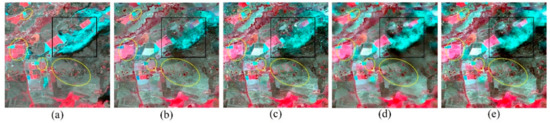

As shown in Figure 4, the Landsat-like images predicted by all four methods capture the changes of vegetation growth and are broadly similar to the true image. However, the yellow elliptical circles in Figure 4 highlight the differences among the four methods: STARFM blurs the boundaries between objects, STRUM produces block effects, FSDAF cannot retain the spatial details, and EFSDAF is closest to the true image. In particular, Figure 5 shows that EFSDAF accurately retains the boundaries of objects and captures more spatial details than FSDAF.

Figure 4.

Visual comparison of the predicted Landsat-like images by STARFM, STRUM, FSDAF, and EFSDAF in dataset 1 (vegetation phenology). From left to right, green-red-NIR (standard false color) composites of (a) a true Landsat image of 6 August 2004; (b–e) predicted images of 6 August 2004 by STARFM, STRUM, FSDAF, and EFSDAF, respectively. (Figure 4 shows the predicted Landsat-like images of 6 August 2004 by four fusion methods in gradual change area, and the yellow elliptical circles represent the intuitive differences of the four fusion methods. The zoom-in black square areas marked in Figure 4 are used to visually evaluate the performance of the four methods for the vegetation phenology in heterogeneous regions (Figure 5)).

Figure 5.

Zoomed in scenes of the black square areas marked in Figure 4. From left to right, (a) true Landsat image of 5 July 2004 (base date); (b) true Landsat image of 6 August 2004 (prediction date); (c–f) predicted images of 6 August 2004 by STARFM, STRUM, FSDAF, and EFSDAF, respectively.

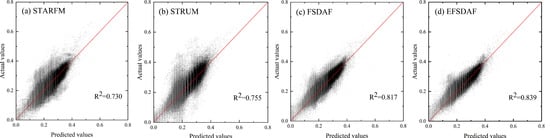

The quantitative indices in Table 1 show that all four methods achieve high accuracy. For all six bands and for their mean, EFSDAF has the highest accuracy among the four methods, with lower AD and RMSE and higher CC and SSIM. Taking the mean of all bands as an example, the CC values of the four methods are 0.807, 0.826, 0.858, and 0.883 from left to right, respectively. In addition, because the change in dataset 1 is driven by vegetation phenology, the prediction accuracy of band 4 (NIR) best illustrates the performance of the four methods. The scatter plots in Figure 6 confirm that the values synthesized by EFSDAF are closer to the true values than those of the other three methods.

Table 1.

The quantitative assessment of the Landsat-like images by STARFM, STRUM, FSDAF, and EFSDAF in dataset 1.

Figure 6.

Scatter plots of the true values and predicted values for NIR band in dataset 1. From left to right, (a–d) the scatter plots for STARFM, STRUM, FSDAF and EFSDAF, respectively.

4.2. Experiment in Abrupt Change Area (Flood)

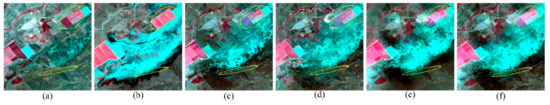

In the visual comparison in Figure 7, only FSDAF and EFSDAF capture the information on land cover change, which can be seen more clearly in the zoomed-in black square area in Figure 8. In addition, the yellow elliptical circles in Figure 7 show that the spatial details restored by EFSDAF are the most complete and the most similar to the true image. The yellow elliptical circles in Figure 8 show that EFSDAF accurately captures small flood changes that FSDAF misses.

Figure 7.

Visual comparison of Landsat-like images predicted by STARFM, STRUM, FSDAF, and EFSDAF in dataset 2 (flood). From left to right, green-red-NIR (standard false color) composites of (a) true Landsat image of 12 December 2004; (b–e) predicted images of 12 December 2004 by STARFM, STRUM, FSDAF, and EFSDAF, respectively. (Figure 7 presents the predicted Landsat-like images of 12 December 2004 by four fusion methods in the abrupt change area, and the yellow elliptical circles of Figure 7 represent the differences of the four fusion methods. The zoom-in black square areas marked in Figure 7 are used to more clearly compare the fusion results by the four methods in the land cover change area (Figure 8)).

Figure 8.

Zoomed in scenes of the black square areas marked in Figure 7. From left to right, (a) true Landsat image of 26 November 2004 (base date); (b) true Landsat image of 12 December 2004 (prediction date); (c–f) predicted images of 12 December 2004 by STARFM, STRUM, FSDAF, and EFSDAF, respectively.

Comparing the results of the quantitative indices in Table 2, STARFM and STRUM present similar results, and EFSDAF is better than FSDAF (CC 0.808 vs. 0.827, SSIM 0.796 vs. 0.815 for band 4). Furthermore, since the water body information shows lower reflectivity for band 4 (NIR), we compare the scatter plots of band 4 for the four methods in Figure 9. It can be concluded that FSDAF and EFSDAF are far superior to STARFM and STRUM, and EFSDAF is better than FSDAF.

Table 2.

Quantitative assessment of the Landsat-like images by STARFM, STRUM, FSDAF, and EFSDAF in dataset 2.

Figure 9.

Scatter plots of the true values and the predicted values for NIR band in dataset 2. From left to right, (a–d) the scatter plots for STARFM, STRUM, FSDAF and EFSDAF, respectively.

5. Discussion

The proposed EFSDAF method in this paper was an improvement on FSDAF. EFSDAF considered the mixed pixels of both Landsat images and MODIS images, and it predicted the Landsat-like images by combining spectral unmixing analysis and TPS interpolator. The experiment results showed that the fusion results by EFSDAF were better than FSDAF.

5.1. Improvements of EFSDAF Compared with FSDAF

The fusion results of EFSDAF were better than those of FSDAF for the following reasons. Firstly, EFSDAF considered the mixed-pixel phenomenon of the fine resolution image (e.g., Landsat), which was its most significant improvement. In this study, the SVD model was introduced to perform the spectral unmixing with a fully constrained least squares method. The abundances of each MODIS pixel were then calculated by averaging the abundances of all Landsat pixels within that MODIS pixel, and the final changes of the Landsat image between the base date and the prediction date were solved by a linear mixture that combined the endmember abundances with the endmember variations. Hence, EFSDAF accounted for intra-class variability and preserved more spatial details than FSDAF, as can be seen in the yellow ellipses of Figure 4 and Figure 7. Secondly, the spatial unmixing of the coarse resolution image (e.g., MODIS) was performed in a sliding window, whereas FSDAF performs spatial unmixing over the whole MODIS image. Because the land surface is heterogeneous, unmixing within sliding windows is closer to the actual situation. The size of the sliding window for spatial unmixing (in MODIS pixels) was set to the value that gave the best fusion accuracy. Furthermore, selecting the k purest MODIS pixels in each sliding window for spatial unmixing minimized the impact of collinearity [32]. Thirdly, the differences between the Landsat and MODIS images, which include sensor differences and pre-processing errors, were considered. In this study, we used a linear model to adjust for these differences, whereas FSDAF did not consider them.

Finally, a new residual index (RI) was introduced to guide the residual distribution instead of the homogeneity index (HI) of FSDAF. Because the HI is calculated from the classification map at the base date, it is not suitable for guiding the residual distribution when land cover changes or misclassification occur [33]. The proposed RI accounts for the source of the errors: it is based on the differences between the interpolation results of the MODIS images at the base date and at the prediction date. Greater differences indicate more significant land cover change and yield a larger RI value. The RI was calculated in a sliding window, and the window should not be too large because the result becomes inaccurate at the edges between different land cover types. The recommended window size, in Landsat pixels, is given in Section 2.2.5. EFSDAF captured more information on land cover change, as shown in the yellow ellipses of Figure 8e,f. In addition, EFSDAF is more automated than FSDAF because it requires no additional input parameters.
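The abundance aggregation and linear-mixture steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the EFSDAF implementation: the function names, the array layout, and the integer `scale` ratio between coarse and fine pixel sizes are hypothetical, and the per-endmember reflectance changes are taken as given rather than solved by spatial unmixing.

```python
import numpy as np


def aggregate_abundances(fine_abund, scale):
    """Average fine-pixel abundances inside each coarse pixel.

    fine_abund: array (n_endmembers, H, W) of Landsat-scale abundances.
    scale: fine pixels along one side of a coarse pixel (assumed integer).
    Returns an array (n_endmembers, H // scale, W // scale).
    """
    fine_abund = np.asarray(fine_abund, dtype=float)
    n_end, height, width = fine_abund.shape
    if height % scale or width % scale:
        raise ValueError("image size must be a multiple of scale")
    # Split each image axis into (coarse index, offset inside coarse pixel).
    blocks = fine_abund.reshape(
        n_end, height // scale, scale, width // scale, scale
    )
    return blocks.mean(axis=(2, 4))


def fine_pixel_change(fine_abund, endmember_change):
    """Linear mixture: change at a pixel = sum_k abundance_k * change_k.

    endmember_change: array (n_endmembers, n_bands) holding the reflectance
    change of each endmember between the two dates (assumed known here).
    Returns an array (n_bands, H, W).
    """
    fine_abund = np.asarray(fine_abund, dtype=float)
    endmember_change = np.asarray(endmember_change, dtype=float)
    return np.einsum("khw,kb->bhw", fine_abund, endmember_change)


# Toy data (hypothetical): two endmembers on a 4 x 4 fine grid, 2 x 2 fine
# pixels per coarse pixel, two spectral bands.
veg = np.arange(16, dtype=float).reshape(4, 4) / 15.0
fine_abund = np.stack([veg, 1.0 - veg])
coarse_abund = aggregate_abundances(fine_abund, scale=2)
endmember_change = np.array([[0.10, 0.20], [-0.10, 0.00]])
change = fine_pixel_change(fine_abund, endmember_change)
```

Because each fine pixel carries its own abundance vector, the predicted change differs from pixel to pixel even where a hard classification would assign a single class, which is the intra-class variability the text refers to.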

5.2. Influence of Endmember Variability on EFSDAF

Endmember variability directly affects the abundances of the Landsat image, which may in turn affect the final fusion precision of EFSDAF. Hence, we evaluated the performance of EFSDAF with different numbers of endmembers. In this study, the SVD model shared by Small and sets of three to five endmembers extracted from the input Landsat image were used to test the influence of endmember variability on EFSDAF; the results for the two datasets are shown in Table 3 (three bands were tested: NIR, red, and green). As can be seen from Table 3, the predictions of EFSDAF with the four endmember configurations are close in both datasets. Therefore, the influence of endmember variability on the final fusion results is negligible, and EFSDAF is robust to endmember variability. To keep EFSDAF automated, the SVD model was used to perform the spectral unmixing of the Landsat image in this study.
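To show what fully constrained unmixing involves, the sketch below estimates abundances that are non-negative and sum to one. It is an illustration only: it uses projected gradient descent onto the probability simplex rather than the fully constrained least squares algorithm used in this study, and the three-band endmember spectra (NIR, red, green) are invented values, not the SVD endmembers shared by Small. All function and variable names are hypothetical.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection of v onto {a : a >= 0, sum(a) = 1}."""
    v = np.asarray(v, dtype=float)
    sorted_desc = np.sort(v)[::-1]
    cumulative = np.cumsum(sorted_desc) - 1.0
    counts = np.arange(1, v.size + 1)
    # Largest index whose entry stays positive after the shift.
    rho = np.nonzero(sorted_desc - cumulative / counts > 0)[0][-1]
    theta = cumulative[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)


def unmix_constrained(pixel, endmembers, n_iter=2000):
    """Minimise ||endmembers @ a - pixel||^2 with a >= 0 and sum(a) = 1.

    endmembers: array (n_bands, n_endmembers), one spectrum per column.
    pixel: array (n_bands,).
    """
    endmembers = np.asarray(endmembers, dtype=float)
    pixel = np.asarray(pixel, dtype=float)
    # Step 1/L, where L is the squared largest singular value.
    step = 1.0 / np.linalg.norm(endmembers, 2) ** 2
    n_end = endmembers.shape[1]
    abund = np.full(n_end, 1.0 / n_end)
    for _ in range(n_iter):
        gradient = endmembers.T @ (endmembers @ abund - pixel)
        abund = project_to_simplex(abund - step * gradient)
    return abund


# Invented spectra: columns are substrate, vegetation, dark;
# rows are NIR, red, green.
endmembers = np.array([
    [0.30, 0.45, 0.02],
    [0.35, 0.05, 0.02],
    [0.30, 0.08, 0.02],
])
true_abund = np.array([0.3, 0.5, 0.2])
pixel = endmembers @ true_abund  # noise-free mixed pixel
estimated = unmix_constrained(pixel, endmembers)
```

Repeating the estimate with three, four, or five endmember columns is one way to run a sensitivity check of the kind summarized in Table 3, although the numbers there come from the authors' own experiments and not from this sketch.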

Table 3. The fusion precision of EFSDAF using different endmember numbers in the two datasets (NIR-red-green).

5.3. The Effect of Input Images on the Predicted Values of EFSDAF

Like FSDAF, EFSDAF uses the minimum number of input images to predict the final Landsat-like images. Hence, the fusion accuracy of EFSDAF is directly influenced by the input images [10,19]. In particular, EFSDAF makes full use of the spatial and temporal information of the input images. Figure 10 shows the differences between the Landsat-like image predicted by EFSDAF and the input images in dataset 2. From Figure 10b–d, it can be seen that the land cover type has changed, and the information on land cover change can be captured by comparing Figure 10b with Figure 10c. However, there was an obvious difference between the predicted image in the yellow ellipse area of Figure 10b and the true image (Figure 10a). After comparing the input images and the predicted image in Figure 10a,d, it can be concluded that the input MODIS image lacks the information on land cover change in the yellow ellipse (Figure 10c); therefore, the final predicted image in Figure 10b differs from the true image in Figure 10a. However, where the MODIS images in Figure 10c,d do record the abrupt change between the two dates, EFSDAF completely captures the spatial details (Figure 10b). In other words, EFSDAF can accurately monitor only the abrupt changes that are recorded in the MODIS images. Hence, the quality of the input images is crucial to the fusion accuracy of EFSDAF.

Figure 10. Comparison of the image predicted by EFSDAF with the input images in dataset 2. From left to right: (a) true Landsat image at the prediction date; (b) Landsat-like image predicted by EFSDAF; (c) and (d) MODIS images at the two dates.

5.4. Applications of EFSDAF to other Remote Sensing Products and Sensors

EFSDAF was tested in this study with surface reflectance values of MODIS and Landsat images, but its purpose is to study various complex surface dynamics. Therefore, EFSDAF can be applied to vegetation monitoring (e.g., NDVI), crop growth, and other change events such as urbanization, forest fires, and landslides. In addition, with the frequent launch of Earth observation satellites in recent years, images from existing sensors such as Sentinel 2 MSI (10, 20, 60 m) can replace Landsat images as fine resolution images, and Sentinel 3 OLCI (300 m) and NPP-VIIRS (375/750 m) images can replace MODIS images as coarse resolution images. We look forward to testing EFSDAF for other applications as well as other satellite sensors.

5.5. Limitations of EFSDAF

EFSDAF can predict both gradual and abrupt change events, but it cannot accurately monitor tiny changes (e.g., the small flooded areas in this study) because such changes are not visible within one MODIS pixel. In other words, one MODIS pixel aggregates the changes of many Landsat pixels in this study. Therefore, the prediction accuracy for tiny changes could be improved by blending Landsat images (30 m) with Sentinel 3 OLCI images (300 m), although the predicted images still could not capture all actual changes. In addition, EFSDAF is the slowest of the four methods because of the complexity of its calculation process. However, the computational cost of fusion methods is expected to become less of a concern with the rapid development of high-performance computing platforms such as Google Earth Engine (GEE) [46].
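The scale argument above can be made concrete with simple area arithmetic. The sketch below assumes square pixels with no sensor point spread function, a nominal 500 m MODIS pixel (an assumption; coarse resolutions range from 250 to 1000 m), and a hypothetical reflectance change of 0.2 over four Landsat pixels. The function name is illustrative.

```python
def coarse_pixel_signal(fine_change, n_changed, fine_res_m, coarse_res_m):
    """Mean change seen by one coarse pixel when n_changed fine pixels
    inside it each change by fine_change (simple area weighting)."""
    changed_area = n_changed * fine_res_m ** 2
    coarse_area = coarse_res_m ** 2
    if changed_area > coarse_area:
        raise ValueError("changed area exceeds the coarse pixel area")
    return fine_change * changed_area / coarse_area


# Four 30 m pixels change by 0.2 in reflectance (hypothetical values).
modis_signal = coarse_pixel_signal(0.2, 4, 30.0, 500.0)  # assumed 500 m pixel
olci_signal = coarse_pixel_signal(0.2, 4, 30.0, 300.0)   # 300 m OLCI pixel
```

Under these assumptions the same change yields a signal roughly 2.8 times larger in the 300 m pixel than in the 500 m pixel (the ratio of the two pixel areas), which fits the expectation that a finer coarse sensor helps but still cannot resolve every change.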

6. Conclusions

To address the shortcomings of FSDAF, this study developed the Enhanced FSDAF (EFSDAF) to accurately monitor dynamic changes of land surface. EFSDAF improved on FSDAF in the following aspects: (1) unlike existing unmixing-based spatiotemporal fusion methods (e.g., STRUM, FSDAF), EFSDAF considered the mixed pixels of fine resolution images (Landsat), so its fusion results take intra-class variability into account and accurately preserve the spatial details of land surface; (2) the differences between the coarse resolution images and the fine resolution images were adjusted; and (3) a new residual index (RI) was proposed to guide the residual distribution, which improved the fusion accuracy in abrupt change areas. Experimental results demonstrated that:

- (1) EFSDAF can accurately monitor both gradual and abrupt change events. More importantly, EFSDAF preserves more spatial details of land surface and is more robust than FSDAF.

- (2) EFSDAF can monitor more information on land cover change than FSDAF because the new residual index (RI) that guides the residual distribution considers the actual source of the residual.

- (3) EFSDAF is an automated fusion method because it does not need additional input parameters, which gives it great potential for monitoring long-term dynamic changes of land surface using high spatiotemporal resolution images.

In addition, we expect that EFSDAF can be applied to other remote sensing products and other satellite sensors.

Author Contributions

C.S. designed and developed the EFSDAF code and wrote the manuscript. X.W. directed and revised the manuscript. M.Z. and X.L. processed the experimental data. L.N. and H.H. made the Figures and Charts. X.Z. revised the manuscript.

Funding

This study was financially supported by the National Natural Science Foundation of China [Grant number 41971387 and Grant number 41071271], the Shaanxi Province Natural Science Foundation [Grant number 2015JM4132], the Strategic Priority Research Program of Chinese Academy of Sciences, China [Grant number XDA2004030201], and the Shaanxi Key Laboratory of Ecology and Environment of River Wetland [Grant number SXSD201701].

Acknowledgments

The authors are grateful to I. Emelyanova for sharing test data in the remote sensing community and thank C. Small for sharing the spectra of Global SVD Endmembers. We also thank Xiaolin Zhu for sharing the FSDAF code and Jianhang Ma for providing the STRUM code. Most of all, I want to thank my girlfriend Qiaona Sun for the constant support and encouragement.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yang, X.; Lo, C. Using a time series of satellite imagery to detect land use and land cover changes in the Atlanta, Georgia metropolitan area. Int. J. Remote Sens. 2002, 23, 1775–1798.

- Hilker, T.; Wulder, M.A.; Coops, N.C.; Linke, J.; McDermid, G.; Masek, J.G.; Gao, F.; White, J.C. A new data fusion model for high spatial-and temporal-resolution mapping of forest disturbance based on Landsat and MODIS. Remote Sens. Environ. 2009, 113, 1613–1627.

- Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.

- Townshend, J.R.; Masek, J.G.; Huang, C.; Vermote, E.F.; Gao, F.; Channan, S.; Sexton, J.O.; Feng, M.; Narasimhan, R.; Kim, D. Global characterization and monitoring of forest cover using Landsat data: opportunities and challenges. Int. J. Digital Earth 2012, 5, 373–397.

- Zhang, F.; Zhu, X.; Liu, D. Blending MODIS and Landsat images for urban flood mapping. Int. J. Remote Sens. 2014, 35, 3237–3253.

- Filipponi, F. Exploitation of Sentinel-2 Time Series to Map Burned Areas at the National Level: A Case Study on the 2017 Italy Wildfires. Remote Sens. 2019, 11, 622.

- Gao, F.; Masek, J.; Schwaller, M.; Hall, F. On the blending of the Landsat and MODIS surface reflectance: Predicting daily Landsat surface reflectance. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2207–2218.

- Zhu, X.; Chen, J.; Gao, F.; Chen, X.; Masek, J.G. An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions. Remote Sens. Environ. 2010, 114, 2610–2623.

- Hilker, T.; Wulder, M.A.; Coops, N.C.; Seitz, N.; White, J.C.; Gao, F.; Masek, J.G.; Stenhouse, G. Generation of dense time series synthetic Landsat data through data blending with MODIS using a spatial and temporal adaptive reflectance fusion model. Remote Sens. Environ. 2009, 113, 1988–1999.

- Emelyanova, I.V.; McVicar, T.R.; Van Niel, T.G.; Li, L.T.; van Dijk, A.I. Assessing the accuracy of blending Landsat–MODIS surface reflectances in two landscapes with contrasting spatial and temporal dynamics: A framework for algorithm selection. Remote Sens. Environ. 2013, 133, 193–209.

- Gao, F.; Hilker, T.; Zhu, X.; Anderson, M.; Masek, J.; Wang, P.; Yang, Y. Fusing Landsat and MODIS data for vegetation monitoring. IEEE Geosci. Remote Sens. Mag. 2015, 3, 47–60.

- Zhao, Z.-Q.; He, B.-J.; Li, L.-G.; Wang, H.-B.; Darko, A. Profile and concentric zonal analysis of relationships between land use/land cover and land surface temperature: Case study of Shenyang, China. Energy Build. 2017, 155, 282–295.

- Wu, P.; Shen, H.; Zhang, L.; Göttsche, F.-M. Integrated fusion of multi-scale polar-orbiting and geostationary satellite observations for the mapping of high spatial and temporal resolution land surface temperature. Remote Sens. Environ. 2015, 156, 169–181.

- Weng, Q.; Fu, P.; Gao, F. Generating daily land surface temperature at Landsat resolution by fusing Landsat and MODIS data. Remote Sens. Environ. 2014, 145, 55–67.

- Wang, J.; Huang, B. A spatiotemporal satellite image fusion model with autoregressive error correction (AREC). Int. J. Remote Sens. 2018, 39, 6731–6756.

- Gao, F.; Anderson, M.; Daughtry, C.; Johnson, D. Assessing the variability of corn and soybean yields in central Iowa using high spatiotemporal resolution multi-satellite imagery. Remote Sens. 2018, 10, 1489.

- Wang, Q.; Atkinson, P.M. Spatio-temporal fusion for daily Sentinel-2 images. Remote Sens. Environ. 2018, 204, 31–42.

- Huang, C.; Chen, Y.; Zhang, S.; Liu, R.; Shi, K.; Li, L.; Wu, J. Blending NPP-VIIRS and Landsat OLI Images for Flood Inundation Monitoring; CSIRO: Canberra, Australia, 2015.

- Zhu, X.; Cai, F.; Tian, J.; Williams, T. Spatiotemporal fusion of multisource remote sensing data: literature survey, taxonomy, principles, applications, and future directions. Remote Sens. 2018, 10, 527.

- Ma, J.; Zhang, W.; Marinoni, A.; Gao, L.; Zhang, B. An improved spatial and temporal reflectance unmixing model to synthesize time series of landsat-like images. Remote Sens. 2018, 10, 1388.

- Chen, B.; Huang, B.; Xu, B. Comparison of spatiotemporal fusion models: A review. Remote Sens. 2015, 7, 1798–1835.

- Zhukov, B.; Oertel, D.; Lanzl, F.; Reinhackel, G. Unmixing-based multisensor multiresolution image fusion. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1212–1226.

- Wu, M.; Niu, Z.; Wang, C.; Wu, C.; Wang, L. Use of MODIS and Landsat time series data to generate high-resolution temporal synthetic Landsat data using a spatial and temporal reflectance fusion model. J. Appl. Remote Sens. 2012, 6, 063507.

- Gevaert, C.M.; García-Haro, F.J. A comparison of STARFM and an unmixing-based algorithm for Landsat and MODIS data fusion. Remote Sens. Environ. 2015, 156, 34–44.

- Belgiu, M.; Stein, A. Spatiotemporal image fusion in remote sensing. Remote Sens. 2019, 11, 818.

- Chen, B.; Huang, B.; Xu, B. A hierarchical spatiotemporal adaptive fusion model using one image pair. Int. J. Digital Earth 2017, 10, 639–655.

- Huang, B.; Song, H. Spatiotemporal reflectance fusion via sparse representation. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3707–3716.

- Song, H.; Huang, B. Spatiotemporal satellite image fusion through one-pair image learning. IEEE Trans. Geosci. Remote Sens. 2012, 51, 1883–1896.

- Wu, B.; Huang, B.; Zhang, L. An error-bound-regularized sparse coding for spatiotemporal reflectance fusion. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6791–6803.

- Liu, X.; Deng, C.; Wang, S.; Huang, G.-B.; Zhao, B.; Lauren, P. Fast and accurate spatiotemporal fusion based upon extreme learning machine. IEEE Geosci. Remote Sens. Lett. 2016, 13, 2039–2043.

- Kwan, C.; Budavari, B.; Gao, F.; Zhu, X. A hybrid color mapping approach to fusing MODIS and landsat images for forward prediction. Remote Sens. 2018, 10, 520.

- Zhu, X.; Helmer, E.H.; Gao, F.; Liu, D.; Chen, J.; Lefsky, M.A. A flexible spatiotemporal method for fusing satellite images with different resolutions. Remote Sens. Environ. 2016, 172, 165–177.

- Liu, M.; Yang, W.; Zhu, X.; Chen, J.; Chen, X.; Yang, L.; Helmer, E.H. An Improved Flexible Spatiotemporal DAta Fusion (IFSDAF) method for producing high spatiotemporal resolution normalized difference vegetation index time series. Remote Sens. Environ. 2019, 227, 74–89.

- Sousa, D.; Small, C. Global cross-calibration of Landsat spectral mixture models. Remote Sens. Environ. 2017, 192, 139–149.

- Small, C.; Milesi, C. Multi-scale standardized spectral mixture models. Remote Sens. Environ. 2013, 136, 442–454.

- Small, C. The Landsat ETM+ spectral mixing space. Remote Sens. Environ. 2004, 93, 1–17.

- Heinz, D.C. Fully constrained least squares linear spectral mixture analysis method for material quantification in hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 529–545.

- Ma, W.-K.; Bioucas-Dias, J.M.; Chan, T.-H.; Gillis, N.; Gader, P.; Plaza, A.J.; Ambikapathi, A.; Chi, C.-Y. A signal processing perspective on hyperspectral unmixing: Insights from remote sensing. IEEE Signal Proces. Mag. 2013, 31, 67–81.

- Steven, M.D.; Malthus, T.J.; Baret, F.; Xu, H.; Chopping, M.J. Intercalibration of vegetation indices from different sensor systems. Remote Sens. Environ. 2003, 88, 412–422.

- Zurita-Milla, R.; Kaiser, G.; Clevers, J.; Schneider, W.; Schaepman, M. Downscaling time series of MERIS full resolution data to monitor vegetation seasonal dynamics. Remote Sens. Environ. 2009, 113, 1874–1885.

- Wu, M.; Wu, C.; Huang, W.; Niu, Z.; Wang, C.; Li, W.; Hao, P. An improved high spatial and temporal data fusion approach for combining Landsat and MODIS data to generate daily synthetic Landsat imagery. Inf. Fus. 2016, 31, 14–25.

- Dubrule, O. Comparing splines and kriging. Comput. Geosci. 1984, 10, 327–338.

- Chen, X.; Li, W.; Chen, J.; Rao, Y.; Yamaguchi, Y. A combination of TsHARP and thin plate spline interpolation for spatial sharpening of thermal imagery. Remote Sens. 2014, 6, 2845–2863.

- Jarihani, A.; McVicar, T.; Van Niel, T.; Emelyanova, I.; Callow, J.; Johansen, K. Blending Landsat and MODIS data to generate multispectral indices: A comparison of “Index-then-Blend” and “Blend-then-Index” approaches. Remote Sens. 2014, 6, 9213–9238.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).