Supervector Extraction for Encoding Speaker and Phrase Information with Neural Networks for Text-Dependent Speaker Verification

Abstract

1. Introduction

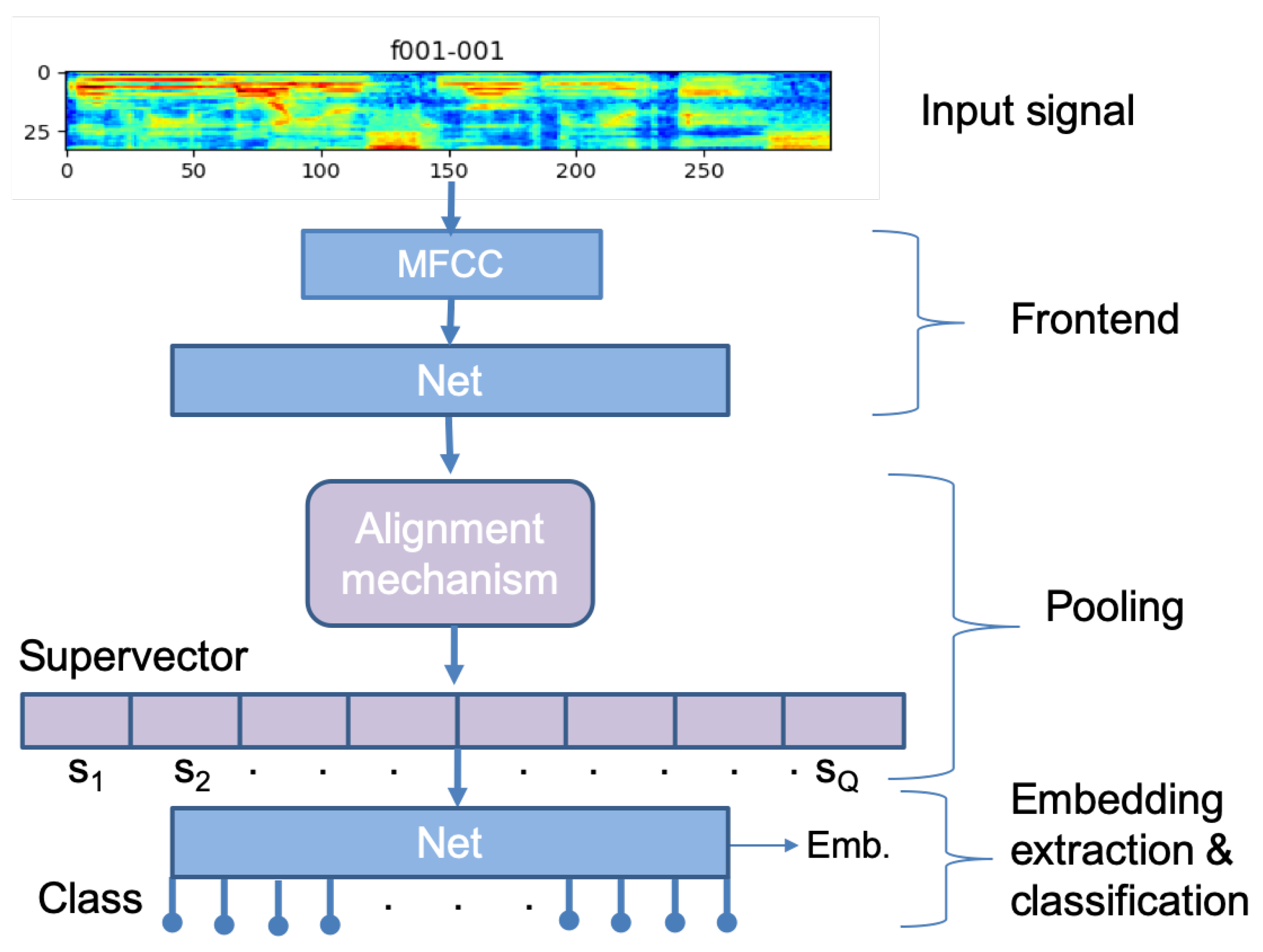

2. Deep Neural Network Based on Alignment

2.1. System Description

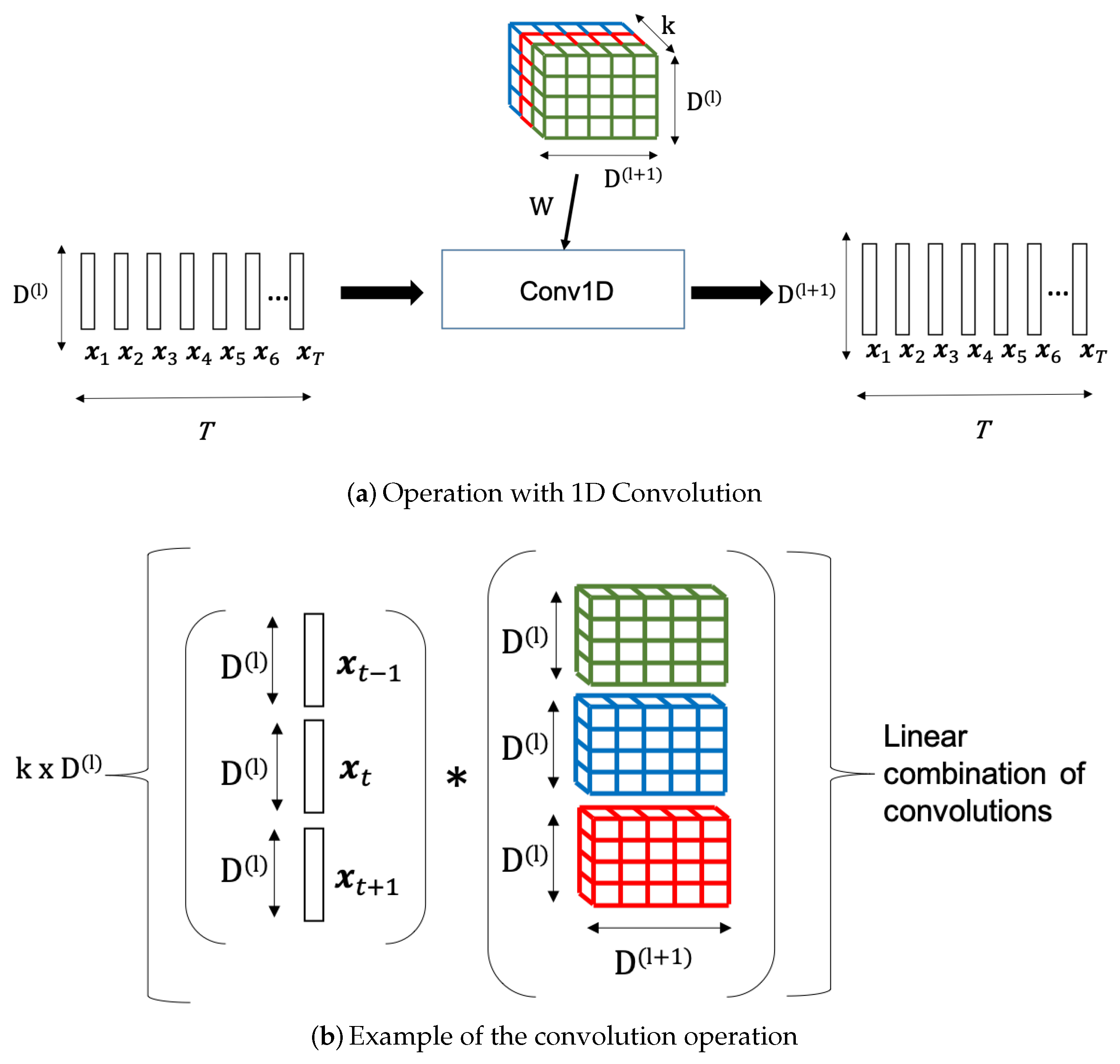

2.2. Front-End Network Architecture

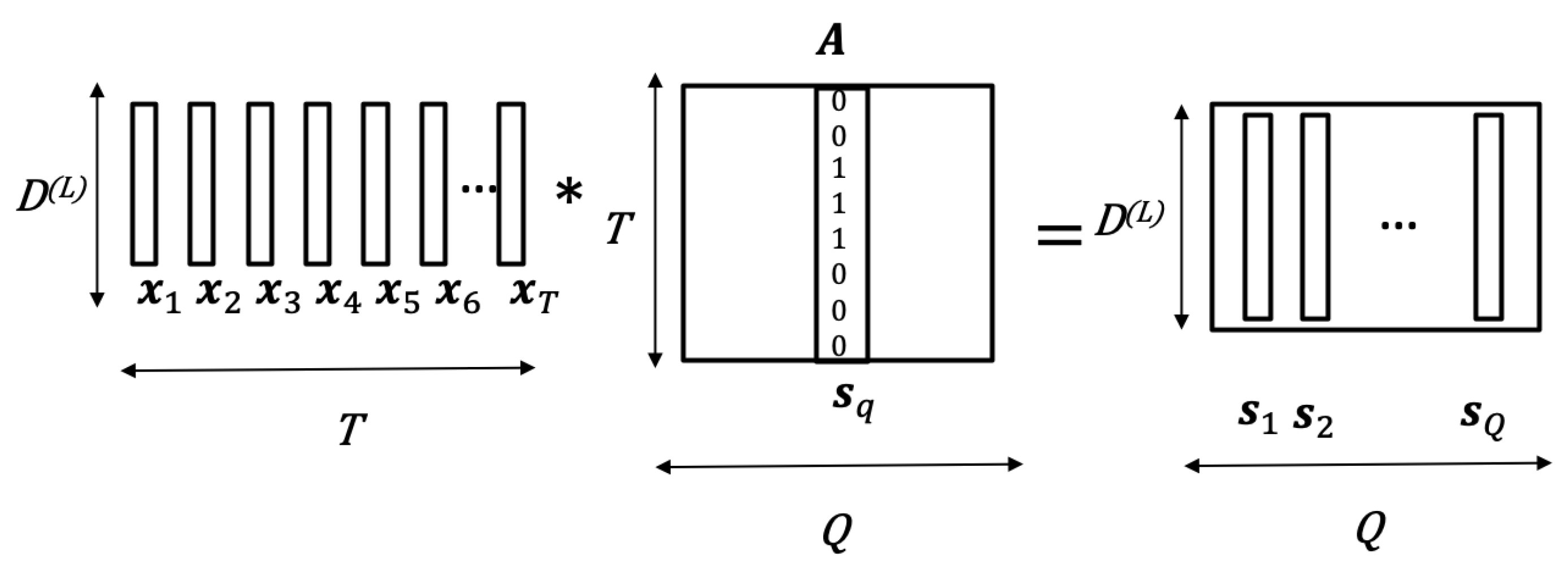

2.3. Alignment Mechanism

3. Experimental Setup

3.1. Data

3.2. Experimental Description

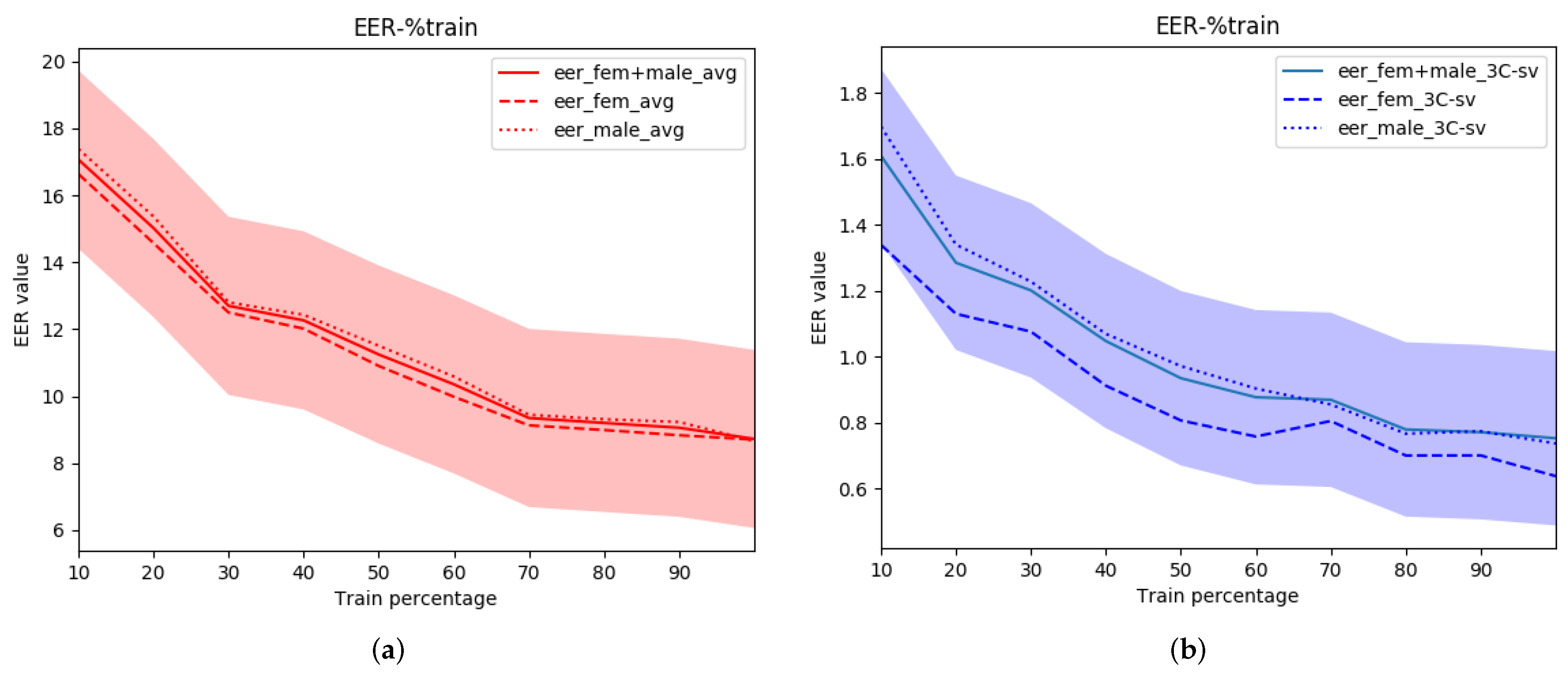

4. Results

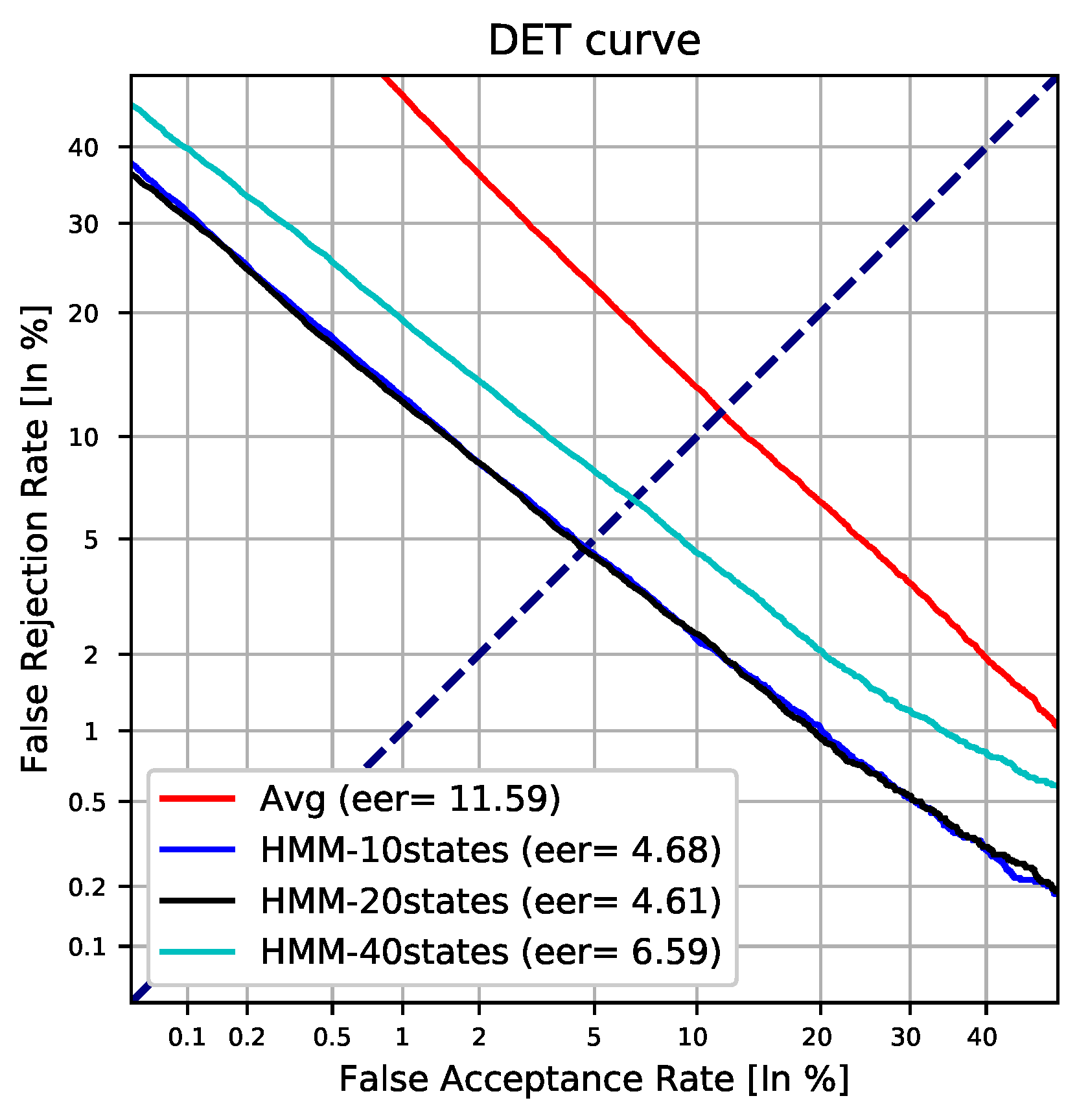

4.1. Experiments with RSR-Part I

4.2. Experiments with RSR-Part II

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Architecture | Results (EER%/DCF10) | | | | | |
|---|---|---|---|---|---|---|
| Front-End | Pooling | | | | | |
| Layers | Kernel | Type | States | Fem | Male | Fem + Male |
| 3 | − | | | | | |
| − | − | 10 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 20 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 40 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 80 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |

| Architecture | Results (EER%/DCF10) | | | | | |
|---|---|---|---|---|---|---|
| Front-End | Pooling | | | | | |
| Layers | Kernel | Type | States | Fem | Male | Fem + Male |
| 3 | − | | | | | |
| − | − | 10 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 20 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 40 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 80 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |

| Architecture | Results (EER%/DCF10) | | | | | |
|---|---|---|---|---|---|---|
| Front-End | Pooling | | | | | |
| Layers | Kernel | Type | States | Fem | Male | Fem + Male |
| 3 | − | | | | | |
| − | − | 10 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 20 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| − | − | 40 | | | | |
| 1 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |
| 3 | | | | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mingote, V.; Miguel, A.; Ortega, A.; Lleida, E. Supervector Extraction for Encoding Speaker and Phrase Information with Neural Networks for Text-Dependent Speaker Verification. Appl. Sci. 2019, 9, 3295. https://doi.org/10.3390/app9163295
