The Usability Testing of VR Interface for Tourism Apps

Abstract

Featured Application

1. Introduction

2. Literature Review

2.1. Virtual Reality and Product Categories

2.2. Usability Testing

2.3. Usability Test Scale

3. Materials and Methods

3.1. Research Design and Subjects

3.2. Materials

3.3. Questionnaire Design

4. Results and Discussion

4.1. Background Analysis of Subjects

4.2. Usability Analysis: QUIS

4.3. Usability Analysis: SUS

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Name | Google Street View | VeeR VR | Sites in VR |
|---|---|---|---|
| Type | 360° round-view photos | 360° round-view film | 360° round-view images |
| Usage mode | 1. Open the app. 2. Select an option. 3. Put the mobile phone on a display. 4. Experience the 360° round-view. 5. Control the front and back directions through a Bluetooth remote controller. 6. Take the mobile phone out. | 1. Open the app. 2. Select an option. 3. Put the mobile phone on a display. 4. Experience the 360° round-view. 5. Focus on the selected film. 6. Take the mobile phone out. | 1. Open the app. 2. Put the mobile phone on a display. 3. Focus on the selected option. 4. Experience the 360° round-view. 5. Focus on other places. 6. Take the mobile phone out. |
| Operating mode | Bluetooth game rocker VR BOX CASE mobile controller | Fixation point (watch for a while) | Fixation point (watch for a while) |
| Operational diversity (VR mode) | Low (no choice) | Medium (able to select the length of the film) | High (able to select other positions) |
| Ease of use | Simple | Difficult | Moderate |
| Information volume (content) | More (global) | More (diversified) | Less (only particular places) |
| Diction (VR interface) | Less (no textual description) | Less (no textual description) | Less (no textual description) |
| Sound | No | Yes | No |
| Tourist destination | Eiffel Tower | Eiffel Tower | Eiffel Tower |

| Section | Number of Items | Scale | Content |
|---|---|---|---|
| 1. Basic information and background | 12 | Multiple choice | Respondents’ backgrounds |
| 2. QUIS | 13 | Five-point SD scale | Respondents’ satisfaction: 2.1 Overall response; 2.2 Interface representation; 2.3 Interface information; 2.4 Interface learnability |
| 3. SUS | 10 | Five-point Likert scale, converted to a 0–100 scale | Perceived interface usability |
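The conversion of the ten SUS items into a single score can be sketched as follows. This is a minimal illustration of Brooke's standard SUS scoring rule, which yields a score between 0 and 100; it is not taken from the authors' analysis. The function name `sus_score` and the assumption that responses are coded 1 to 5 in item order are ours.

```python
def sus_score(responses):
    """Compute a SUS score from ten 1-5 Likert responses (items 1..10 in order).

    Standard SUS scoring (Brooke): odd-numbered items contribute (response - 1),
    even-numbered items contribute (5 - response); the sum is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded.
            total += response - 1
        else:
            # Even items are negatively worded, so the scale is reversed.
            total += 5 - response
    return total * 2.5


if __name__ == "__main__":
    # A respondent answering "3" (neutral) on every item lands at the midpoint.
    print(sus_score([3] * 10))
```

A group-level SUS value such as those reported per app would then be the mean of the individual respondents' scores.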

| Item | Category | Number | Percentage (%) |
|---|---|---|---|
| Gender | Male | 15 | 50 |
| | Female | 15 | 50 |
| Education | University | 25 | 83.3 |
| | Institution | 5 | 16.7 |

| Group | Google Street View | VeeR VR | Sites in VR |
|---|---|---|---|
| Overall response | 4.03 (0.72) | 3.37 (1.00) | 4.03 (0.89) |
| Interface representation | 3.97 (0.72) | 3.33 (1.06) | 3.97 (1.03) |
| Interface information | 3.60 (0.72) | 3.07 (1.01) | 3.83 (0.87) |
| Interface learnability | 4.30 (0.88) | 3.80 (0.96) | 4.13 (0.86) |

| Dimension | Significance | Google Street View | VeeR VR | Sites in VR |
|---|---|---|---|---|
| Overall response | Google Street View | - | 0.016 * | 1.000 |
| | VeeR VR | 0.016 * | - | 0.016 * |
| | Sites in VR | 1.000 | 0.016 * | - |
| Interface representation | Google Street View | - | 0.040 * | 1.000 |
| | VeeR VR | 0.040 * | - | 0.040 * |
| | Sites in VR | 1.000 | 0.040 * | - |
| Interface information | Google Street View | - | 0.069 | 0.591 |
| | VeeR VR | 0.069 | - | 0.005 * |
| | Sites in VR | 0.591 | 0.005 * | - |
| Interface learnability | Google Street View | - | 0.105 | 0.774 |
| | VeeR VR | 0.105 | - | 0.362 |
| | Sites in VR | 0.774 | 0.362 | - |
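One way to read the pairwise significance values above is to list, for each QUIS dimension, the app pairs whose reported p-value falls below a threshold. The sketch below only re-encodes the p-values from the table; it does not recompute them, and the statistical test behind them is not stated in this section. It assumes the asterisk marks p < 0.05; the names `P_VALUES` and `significant_pairs` are hypothetical.

```python
ALPHA = 0.05  # assumed threshold corresponding to the asterisk in the table

GSV, VEER, SITES = "Google Street View", "VeeR VR", "Sites in VR"

# Reported pairwise p-values, one entry per unordered pair of apps.
P_VALUES = {
    "Overall response": {(GSV, VEER): 0.016, (GSV, SITES): 1.000, (VEER, SITES): 0.016},
    "Interface representation": {(GSV, VEER): 0.040, (GSV, SITES): 1.000, (VEER, SITES): 0.040},
    "Interface information": {(GSV, VEER): 0.069, (GSV, SITES): 0.591, (VEER, SITES): 0.005},
    "Interface learnability": {(GSV, VEER): 0.105, (GSV, SITES): 0.774, (VEER, SITES): 0.362},
}


def significant_pairs(p_values, alpha=ALPHA):
    """Return, per dimension, the app pairs whose p-value is below alpha."""
    return {
        dimension: [pair for pair, p in pairs.items() if p < alpha]
        for dimension, pairs in p_values.items()
    }


if __name__ == "__main__":
    for dimension, pairs in significant_pairs(P_VALUES).items():
        labels = ", ".join(f"{a} vs {b}" for a, b in pairs) or "none"
        print(f"{dimension}: {labels}")
```

Under that assumed threshold, VeeR VR differs from both other apps on overall response and interface representation, differs only from Sites in VR on interface information, and no pair differs on interface learnability, which matches the asterisks in the table.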

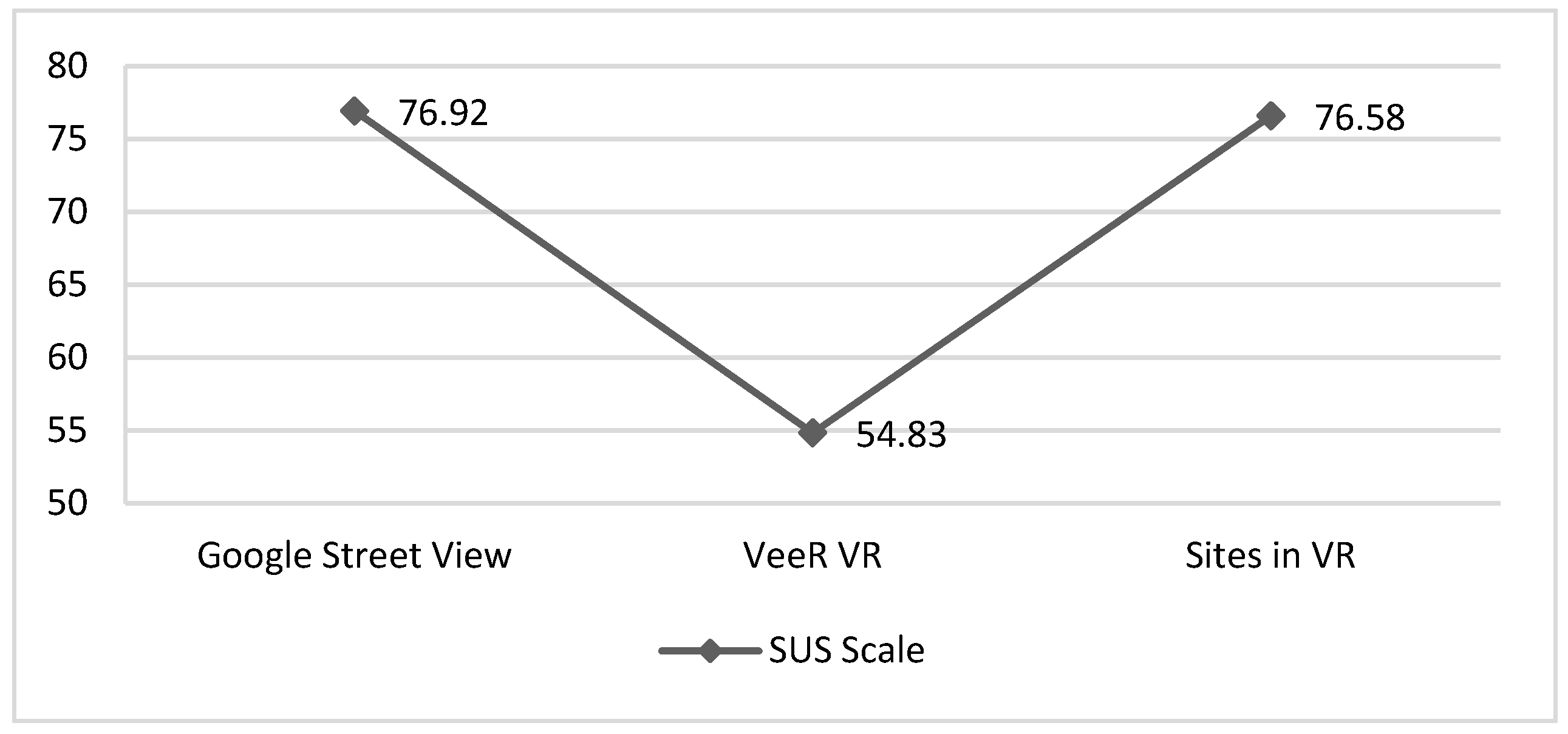

| Group | Google Street View | VeeR VR | Sites in VR |
|---|---|---|---|
| SUS | 76.92 (18.77) | 54.83 (22.86) | 76.58 (19.53) |

| Significance | Google Street View | VeeR VR | Sites in VR |
|---|---|---|---|
| Google Street View | - | 0.000 * | 0.998 |
| VeeR VR | 0.000 * | - | 0.000 * |
| Sites in VR | 0.998 | 0.000 * | - |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fang, Y.-M.; Lin, C. The Usability Testing of VR Interface for Tourism Apps. Appl. Sci. 2019, 9, 3215. https://doi.org/10.3390/app9163215