A XGBoost Model with Weather Similarity Analysis and Feature Engineering for Short-Term Wind Power Forecasting

Abstract

1. Introduction

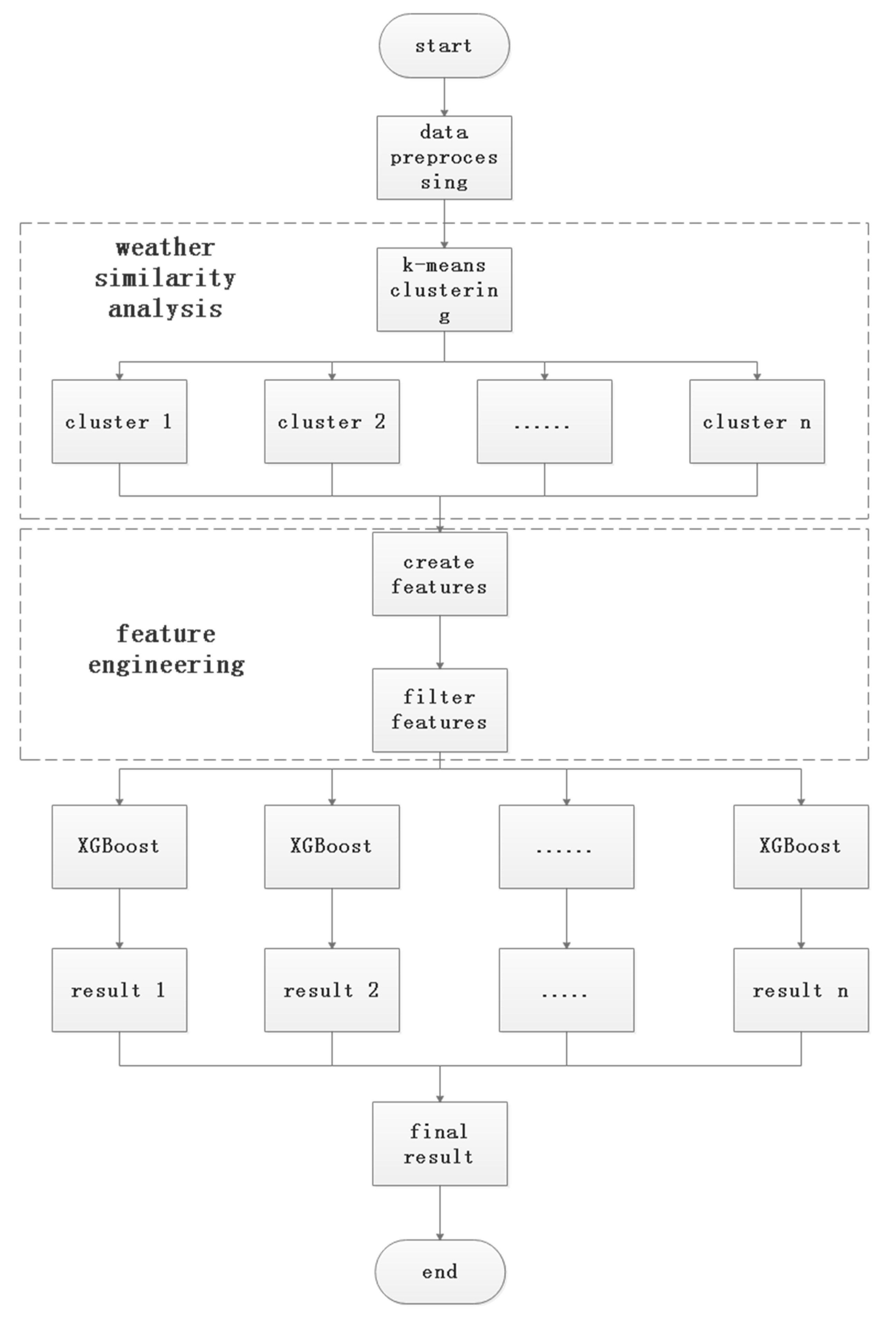

2. Methodology

3. Model Establishment

3.1. Data Preprocessing

- Data cleaning: The original data contain outliers. For example, some records pair a wind speed of zero with a wind power below zero. Such records are physically unreasonable and need to be removed.

- Missing data filling: Data cleaning leaves gaps in the series. These missing values need to be filled, because a reasonable fill can improve the prediction accuracy of the model. The k-Nearest Neighbor (KNN) algorithm is adopted to find the nearest neighbors in the time series, and their values are used to fill the gaps.

- Data normalization: Normalization reduces the influence of differing orders of magnitude among the variables, speeds up model training, and can improve model accuracy. Min-max normalization maps the data to the interval between zero and one; its transformation formula is given by Equation (5):

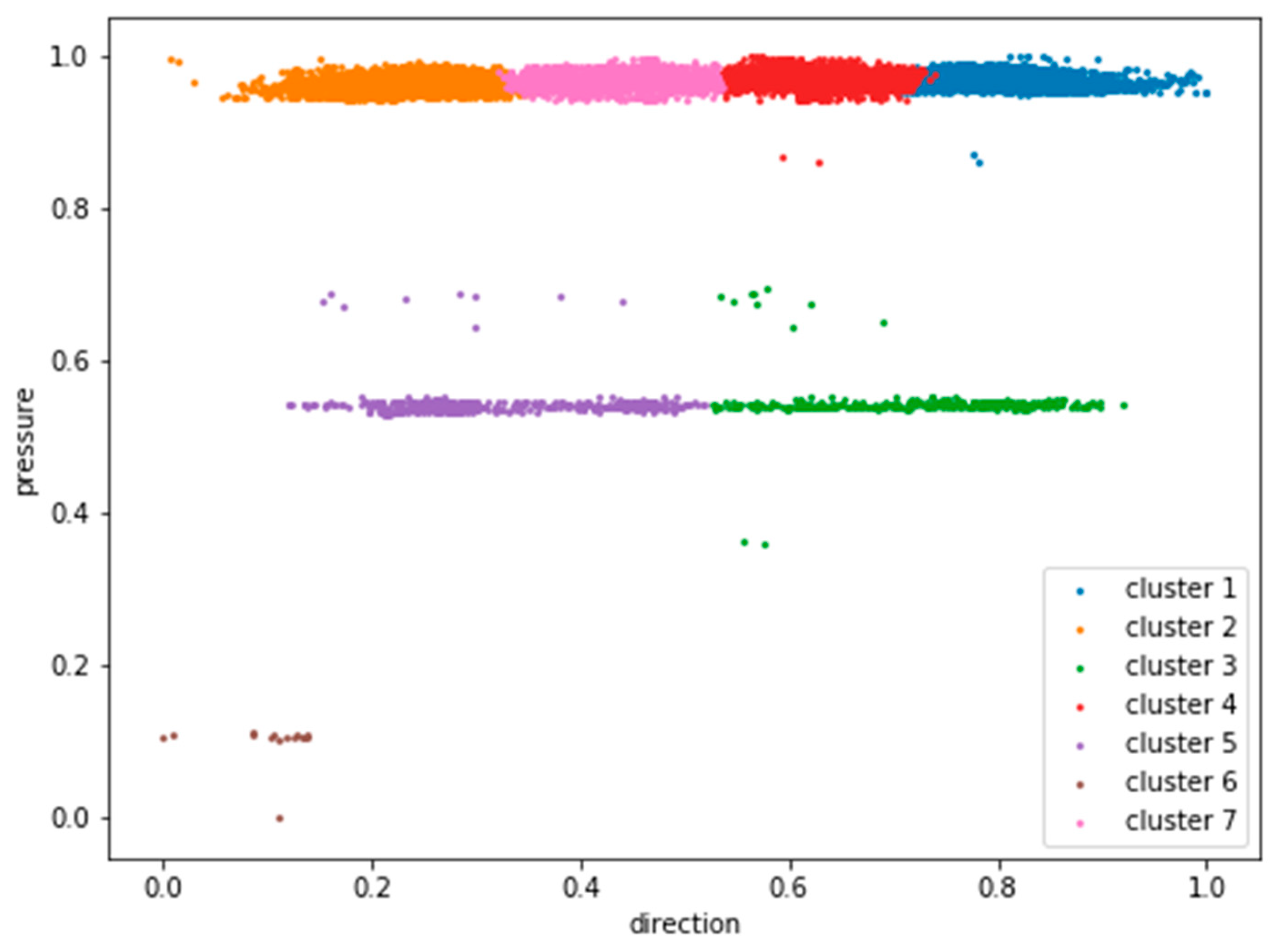
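The three preprocessing steps above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names, the toy records, the cleaning rule (negative power becomes a gap), and the choice to fill a gap with the mean of the `k` valid points nearest in time are all hypothetical.

```python
def clean(records):
    """Turn physically unreasonable power readings into gaps (None)."""
    cleaned = []
    for speed, power in records:
        # Assumed rule, taken from the example in the text: negative power is invalid.
        if power is not None and power < 0:
            power = None
        cleaned.append((speed, power))
    return cleaned


def knn_fill(values, k=2):
    """Fill each gap with the mean of the k valid points nearest in time."""
    known = [(i, v) for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("no valid points to fill from")
    filled = list(values)
    for i, v in enumerate(values):
        if v is None:
            # Distance is measured along the time index (an assumption).
            nearest = sorted(known, key=lambda item: abs(item[0] - i))[:k]
            filled[i] = sum(val for _, val in nearest) / len(nearest)
    return filled


def min_max(values):
    """Map values linearly onto [0, 1]: (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant series: avoid division by zero
    return [(x - lo) / (hi - lo) for x in values]


# Hypothetical (wind speed, wind power) records; the second one is an outlier.
records = [(3.0, 10.0), (0.0, -2.0), (4.0, 30.0), (5.0, 50.0)]
power = [p for _, p in clean(records)]
filled = knn_fill(power, k=2)
scaled = min_max(filled)
```

On this toy input the outlier is replaced by the average of its two neighbors in time, and the filled series is then rescaled so that its minimum maps to 0 and its maximum to 1.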

3.2. Weather Similarity Analysis

| Algorithm 1. K-means Algorithm |
|---|
| Input: Dataset S = {x1, x2, …, xh}; number of clusters k |
| Procedure: |
| 1: select k samples from dataset S as the initial centroid vector c = {c1, c2, …, ck} |
| 2: for every sample xi (i = 1, 2, …, h) do |
| 3: calculate the Euclidean distance between xi and cj (j = 1, 2, …, k) |
| 4: find the nearest centroid cj and let ui = j, where ui denotes the cluster of xi |
| 5: end for |
| 6: u = {u1, u2, …, uh} |
| 7: for every cluster j (j = 1, 2, …, k) do |
| 8: calculate the centroid vj of cluster j |
| 9: end for |
| 10: v = {v1, v2, …, vk} |
| 11: if v == c then |
| 12: return u |
| 13: else |
| 14: c = v and go to step 2 |
| 15: end if |
| Output: vector u, which gives the cluster label of every sample |
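A minimal Python sketch of Algorithm 1 follows. It is illustrative only: the function name `kmeans`, the `max_iter` safeguard, the handling of empty clusters, and the choice to seed the centroids with the first `k` samples (rather than a random selection) are assumptions made here for reproducibility.

```python
import math


def kmeans(samples, k, max_iter=100):
    """Cluster samples (tuples of floats) following the steps of Algorithm 1."""
    # Step 1: assumption, the first k samples serve as the initial centroids.
    centroids = [tuple(s) for s in samples[:k]]
    labels = []
    for _ in range(max_iter):
        # Steps 2-6: assign every sample to its nearest centroid (Euclidean distance).
        labels = [
            min(range(k), key=lambda j: math.dist(x, centroids[j]))
            for x in samples
        ]
        # Steps 7-10: recompute each centroid as the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [x for x, u in zip(samples, labels) if u == j]
            if members:
                new_centroids.append(
                    tuple(sum(col) / len(members) for col in zip(*members))
                )
            else:
                # Assumption: an empty cluster keeps its previous centroid.
                new_centroids.append(centroids[j])
        # Steps 11-15: stop once the centroids no longer change.
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical two-dimensional samples forming two well-separated groups.
samples = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (8.2, 7.8), (0.9, 1.1)]
labels, centroids = kmeans(samples, k=2)
```

Here `labels` plays the role of the output vector u, with one cluster index per sample. In practice the result depends on the initial centroids, so a random or repeated initialization is common.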

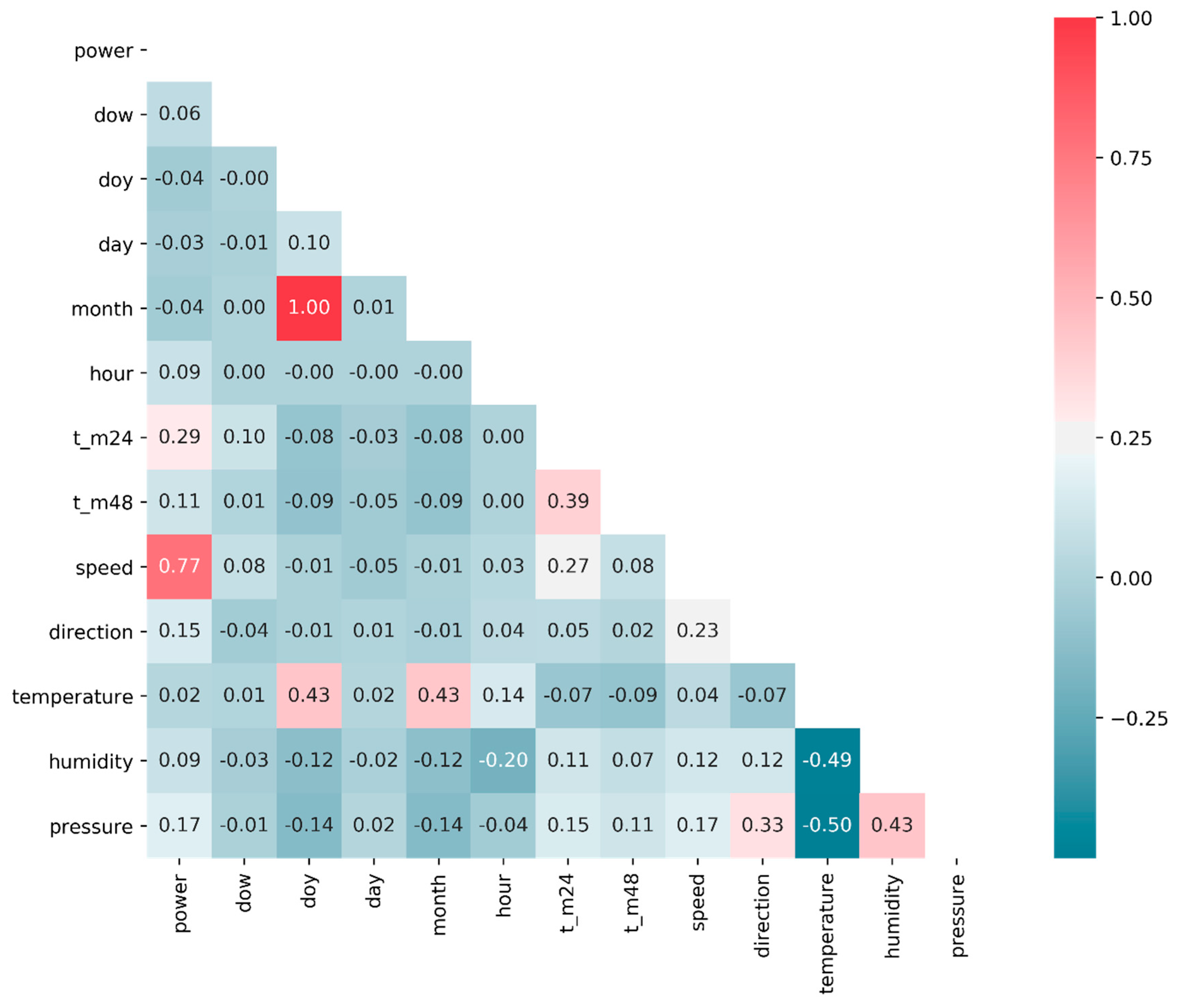

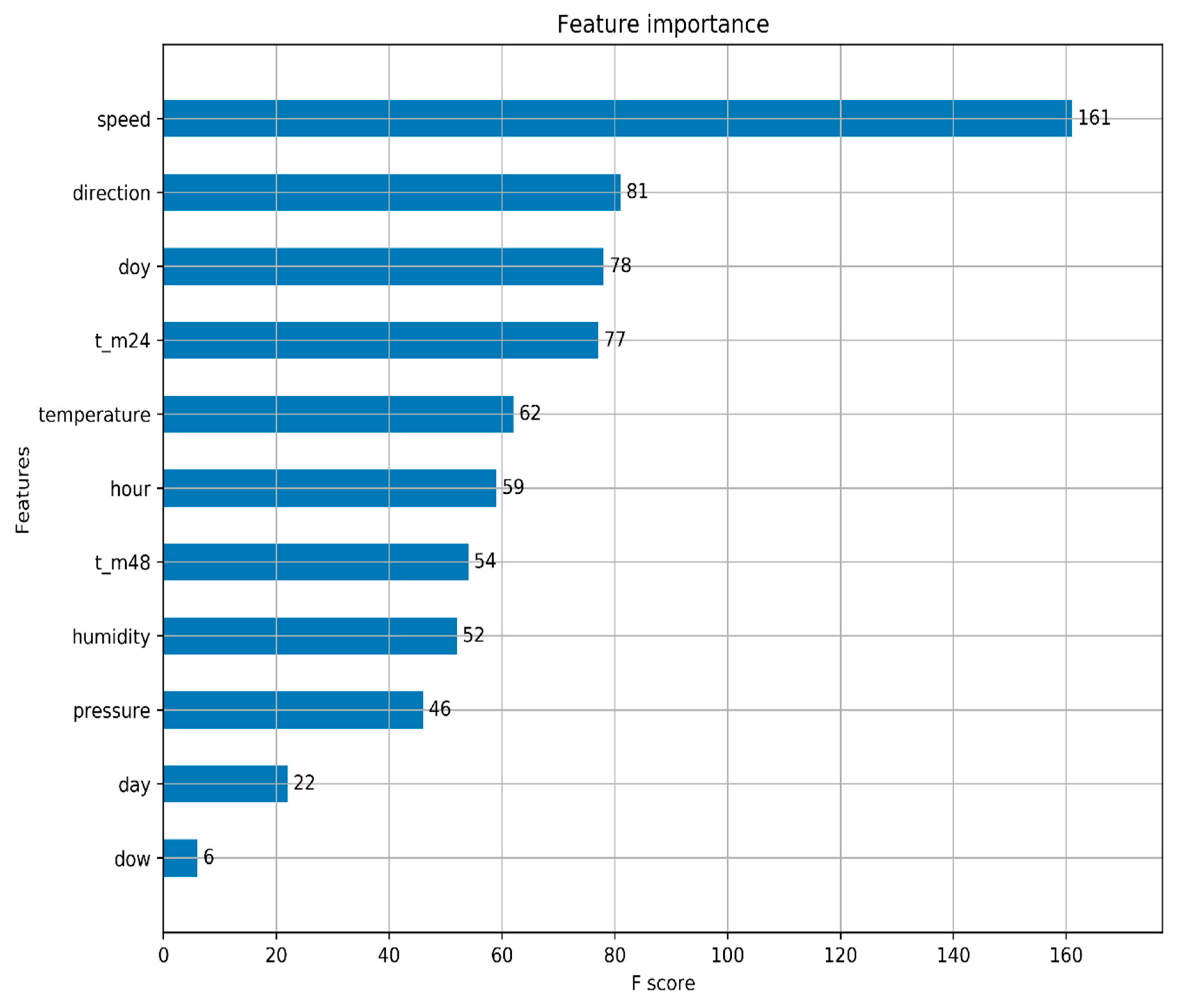

3.3. Feature Engineering

3.4. The Structure of Proposed Model

4. Experiments

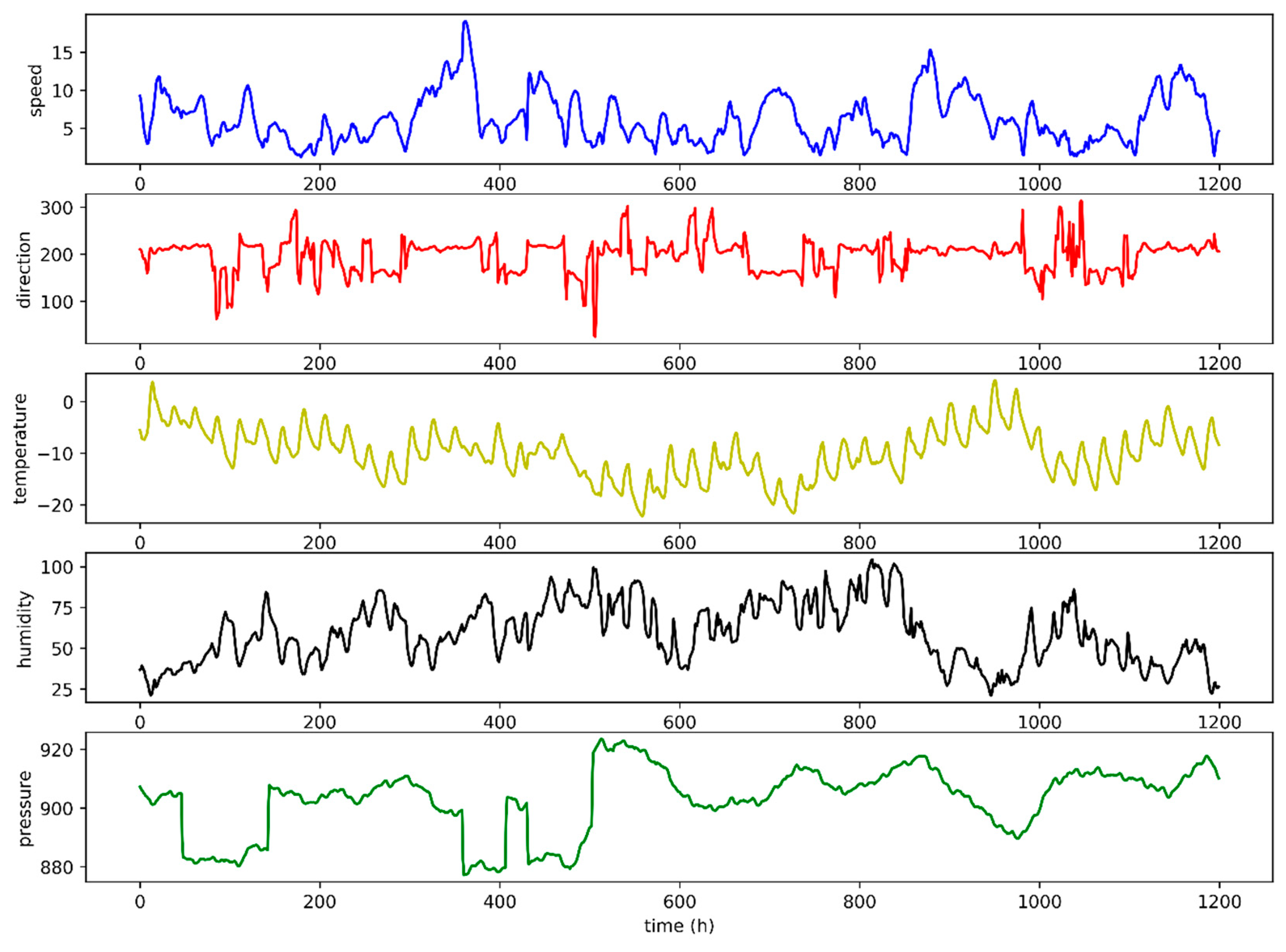

4.1. Description of Data

4.2. Evaluating Indicator

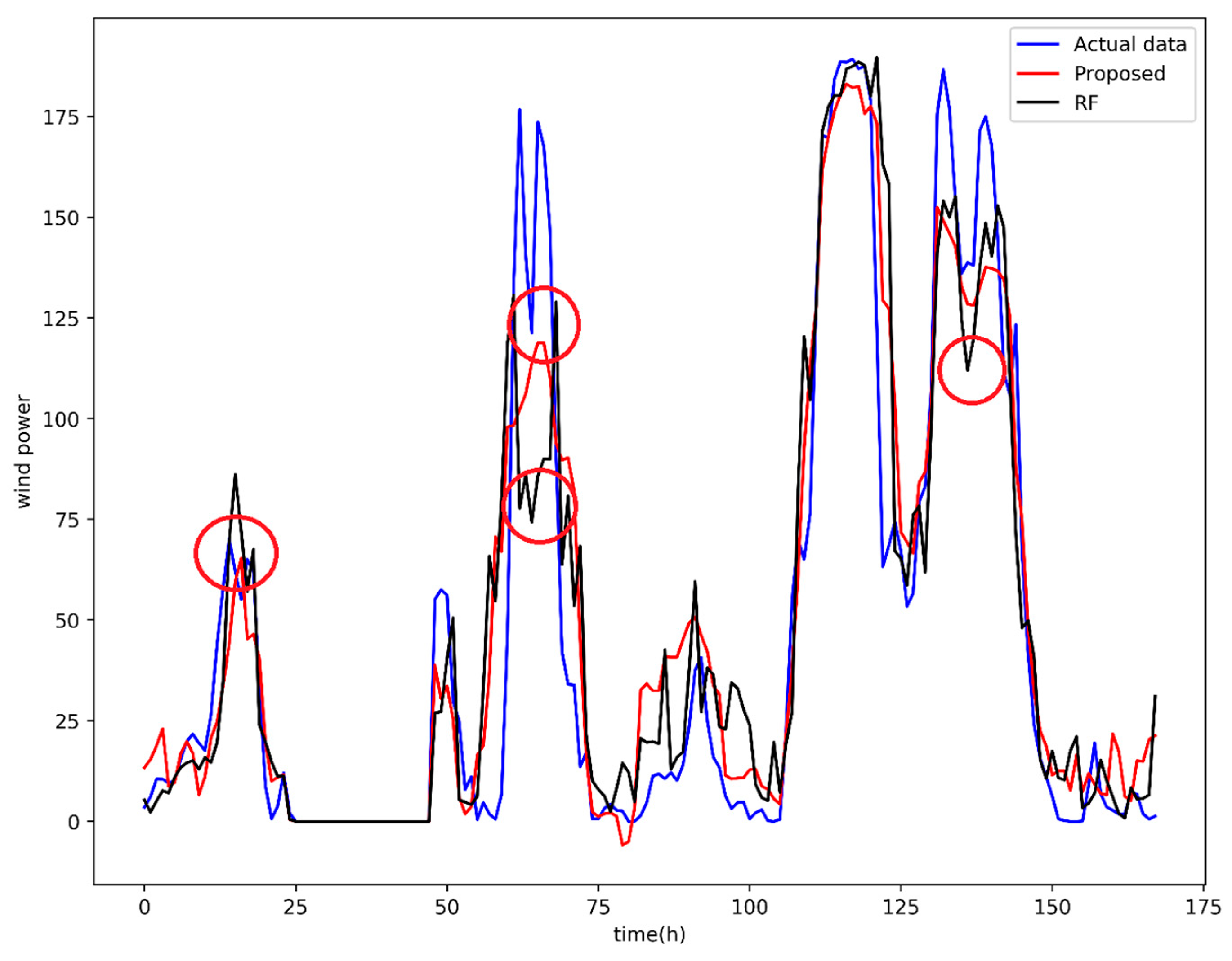

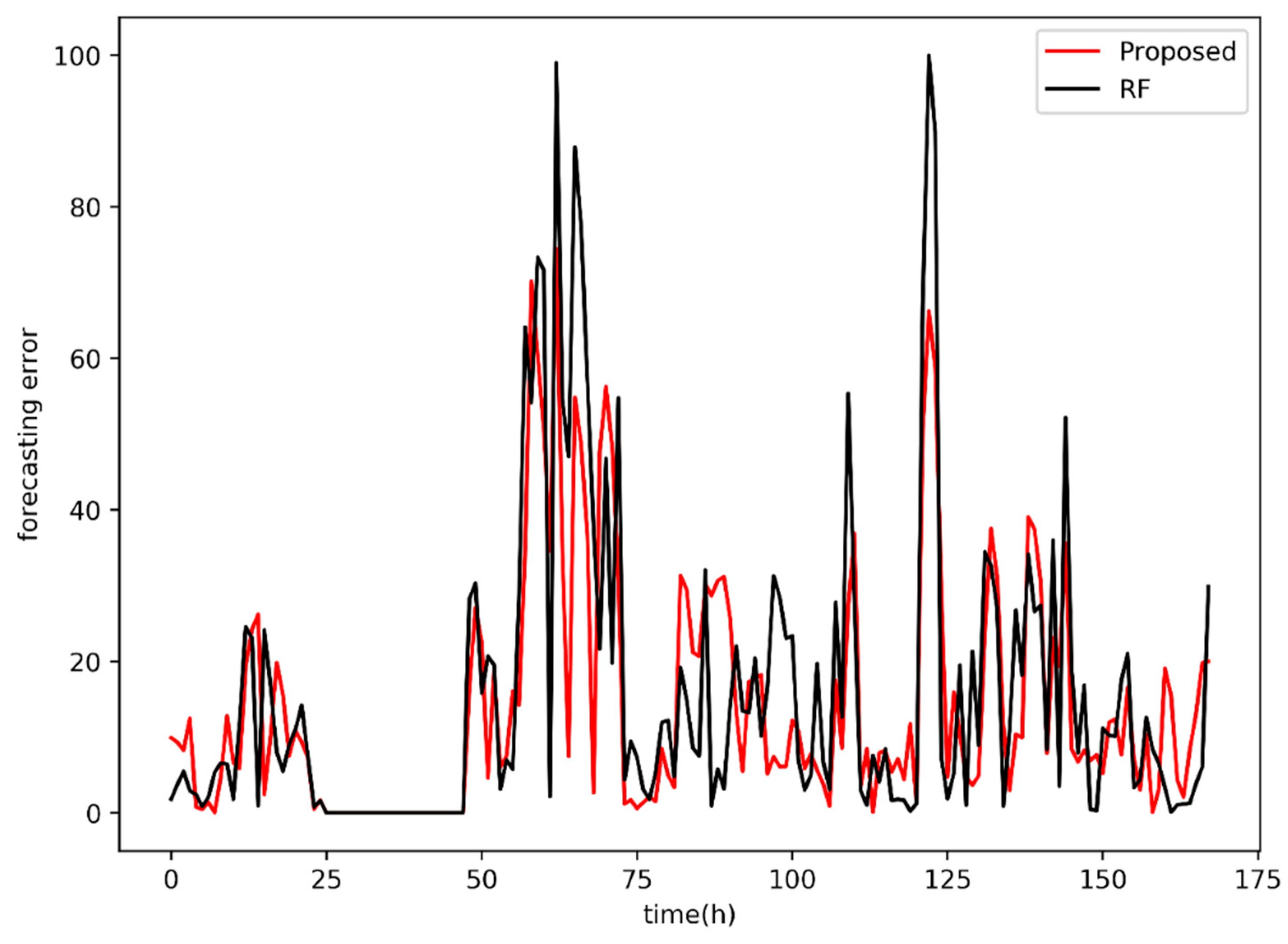

4.3. Wind Power Forecasting Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Feature | Meaning |
|---|---|
| dow | day of the week (integer 0–6) |
| doy | day of the year (integer 0–365) |
| day | day of the month (integer 1–31) |
| month | month of the year (integer 1–12) |
| hour | hour of the day (integer 0–23) |
| minute | minute of the day (integer 0–1439) |
| t_m24 | wind power value from 24 h earlier |
| t_m48 | wind power value from 48 h earlier |
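The features in the table above can be derived from a timestamped power series roughly as follows. This is a sketch under assumptions: the helper names, the toy series, the Monday-based `dow` convention, and the use of `None` for unavailable lags are hypothetical and not taken from the paper.

```python
from datetime import datetime, timedelta


def time_features(ts):
    """Calendar features for one timestamp."""
    return {
        "dow": ts.weekday(),                 # 0 = Monday ... 6 = Sunday (assumed convention)
        "doy": ts.timetuple().tm_yday - 1,   # shifted so the first day of the year is 0
        "day": ts.day,
        "month": ts.month,
        "hour": ts.hour,
        "minute": ts.hour * 60 + ts.minute,  # minutes elapsed since midnight
    }


def lag_features(series, ts):
    """Power values 24 h and 48 h before ts; None when no such record exists."""
    return {
        "t_m24": series.get(ts - timedelta(hours=24)),
        "t_m48": series.get(ts - timedelta(hours=48)),
    }


# Hypothetical toy series: timestamp -> wind power.
series = {
    datetime(2019, 3, 1, 12, 30): 41.0,
    datetime(2019, 3, 2, 12, 30): 55.0,
    datetime(2019, 3, 3, 12, 30): 47.5,
}
ts = datetime(2019, 3, 3, 12, 30)
row = {**time_features(ts), **lag_features(series, ts)}
```

Each `row` is one feature vector for the forecasting model; rows whose lag values are `None` would need to be dropped or filled before training.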

| Metric | Proposed | RF | CART | BPNN | XGBoost | SVR |
|---|---|---|---|---|---|---|
| MSE | 434.06 | 520.20 | 948.51 | 738.97 | 542.11 | 1174.73 |
| RMSE | 20.83 | 22.81 | 31.51 | 27.18 | 23.28 | 34.27 |
| MAE | 13.74 | 14.40 | 19.39 | 20.37 | 16.92 | 23.37 |
| RMAE | 3.71 | 3.79 | 4.40 | 4.51 | 4.11 | 4.83 |
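The four indicators in the table above can be computed as sketched below. The definitions are assumptions: MSE and MAE follow their standard forms, RMSE is taken as the square root of MSE, and RMAE is taken as the square root of MAE, which agrees with the Proposed column (√434.06 ≈ 20.83 and √13.74 ≈ 3.71). The function name `evaluate` and the sample values are hypothetical.

```python
import math


def evaluate(actual, predicted):
    """Return MSE, RMSE, MAE, and RMAE for two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": mae,
        "RMAE": math.sqrt(mae),  # assumed definition: square root of MAE
    }


# Hypothetical actual and predicted wind power values.
scores = evaluate([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 37.0])
```

Lower values indicate a better fit for all four indicators, so the models in the table can be ranked column by column.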

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zheng, H.; Wu, Y. A XGBoost Model with Weather Similarity Analysis and Feature Engineering for Short-Term Wind Power Forecasting. Appl. Sci. 2019, 9, 3019. https://doi.org/10.3390/app9153019
