Improving Generative and Discriminative Modelling Performance by Implementing Learning Constraints in Encapsulated Variational Autoencoders

Abstract

Featured Application

1. Introduction

1.1. Deep Generative Models

1.2. Approach Overview

2. Related Works

3. Encapsulated Variational Autoencoders

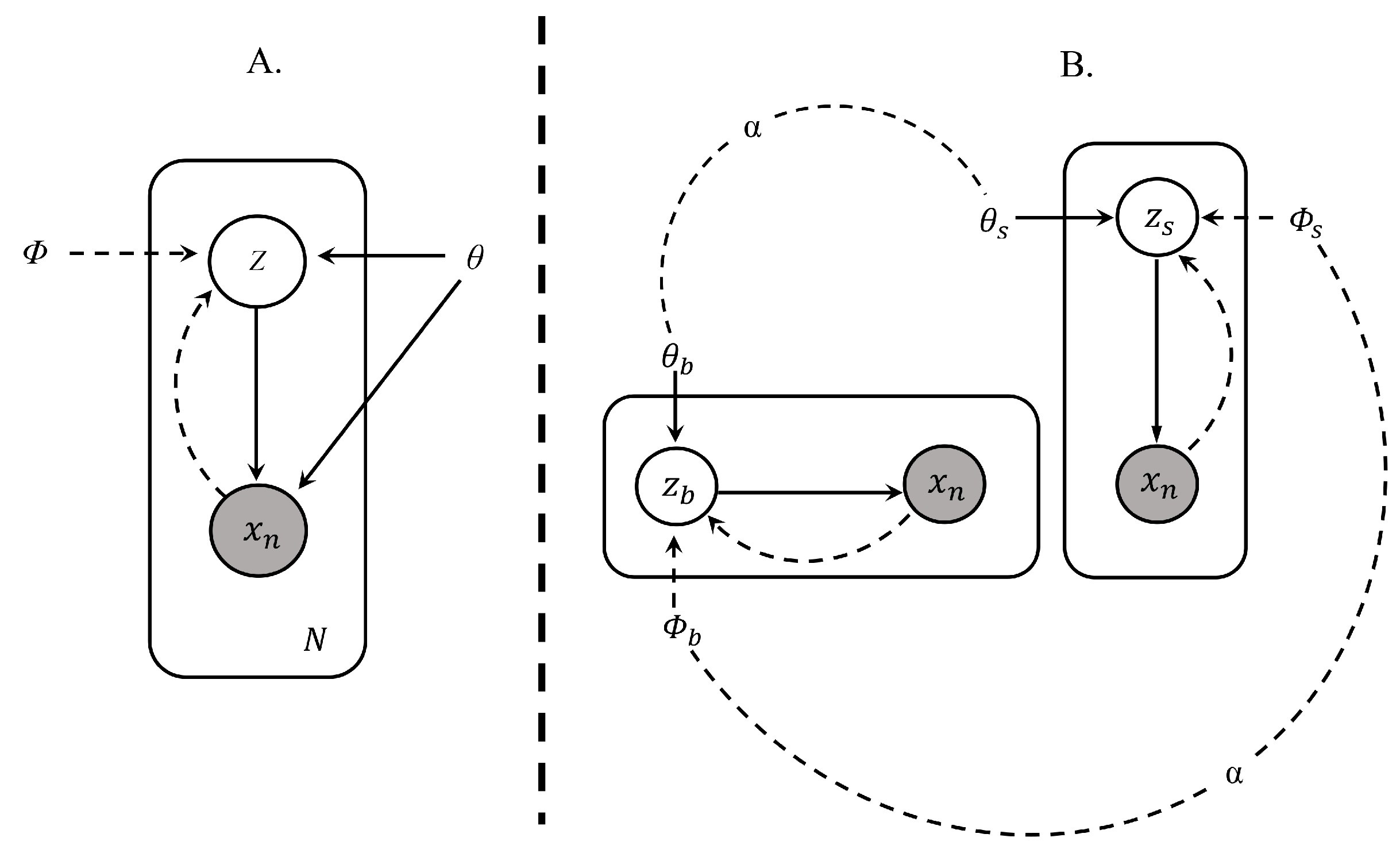

3.1. Brief VAE Review

3.2. EVAE

3.3. Parameterisations on EVAE

4. Learning Constraints in EVAE

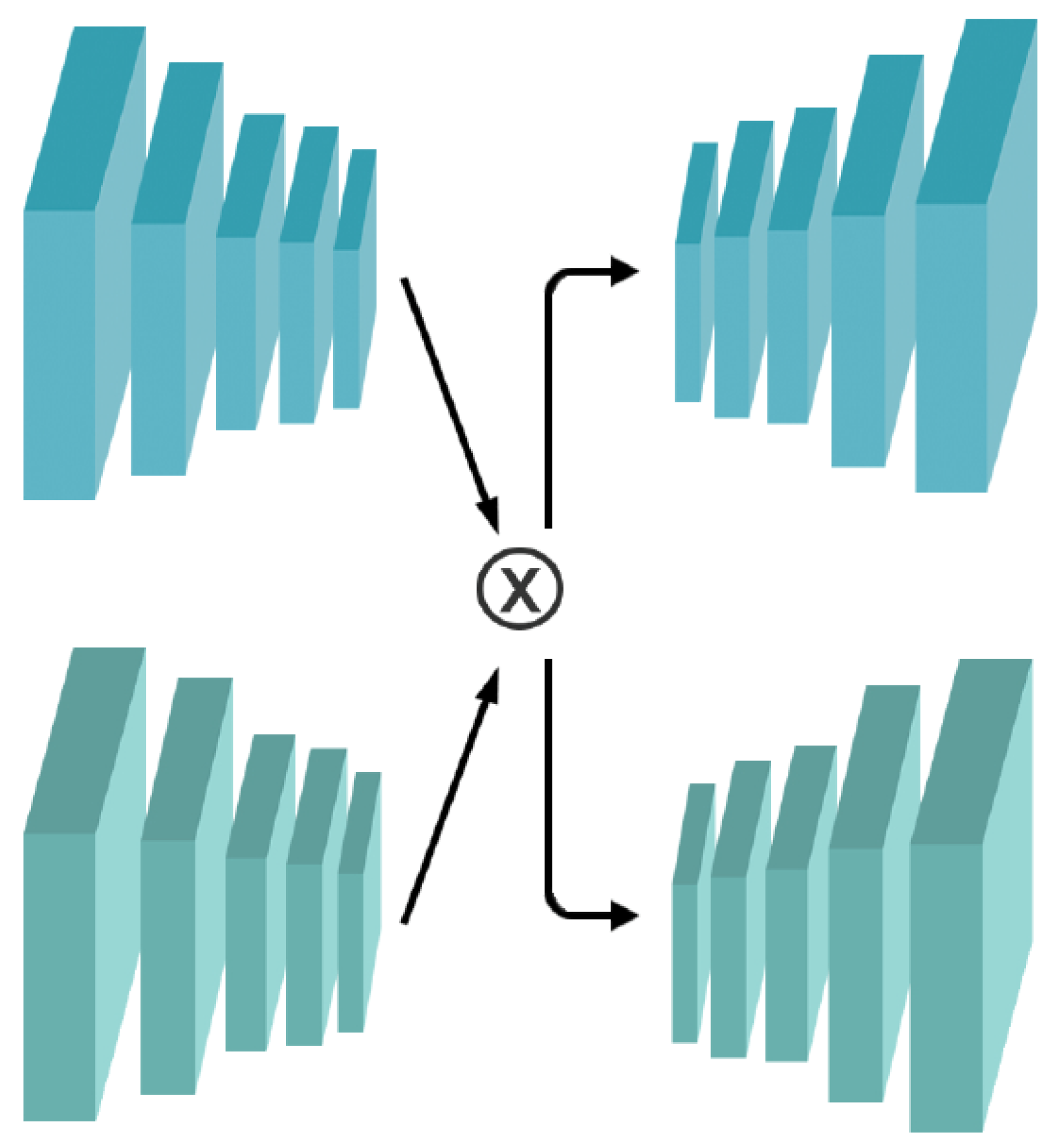

4.1. Joint Encoder and Decoder

4.2. Learning Objective

4.3. Learning Constraints

4.3.1. Dependence-Minimisation Constraint

4.3.2. Regularisation Constraint

5. EVAE Parameter Estimation

| Algorithm 1 EVAE learning algorithm. |
|---|
| Require: dataset X; encoder …; decoder … |
| Initialise the parameters of …; set the value of the hyperparameter … |
| repeat |
| while … is fixed do: draw random samples from …, where …; approximate the gradient by differentiating the learning objective in Equation (9) w.r.t. …; update … with an off-the-shelf gradient-ascent algorithm, e.g., Adam, using the gradient estimate; end while |
| while … is fixed do: draw random samples from …, where …; approximate the gradient by differentiating the learning objective in Equation (9) w.r.t. …; update … with an off-the-shelf gradient-ascent algorithm, e.g., Adam, using the gradient estimate; end while |
| until the ELBO (objective) has converged |
| Return the optimal … |
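Algorithm 1 appears to alternate between two gradient-ascent loops, each updating one group of parameters while the other group is held fixed, until the objective stops improving. The Python sketch below illustrates only that control flow, under stated assumptions: `objective`, `grad_a` and `grad_b` define a hypothetical two-parameter concave toy objective, not the EVAE objective of Equation (9); the random sampling and Monte Carlo gradient estimation steps are omitted; and the small hand-written `Adam` class is an assumption made to keep the example dependency-free, not the authors' optimiser implementation.

```python
import math


class Adam:
    """Minimal Adam optimiser (hypothetical helper), used here for gradient *ascent*."""

    def __init__(self, n, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n  # first-moment (mean) estimates
        self.v = [0.0] * n  # second-moment (uncentred variance) estimates
        self.t = 0          # step counter used for bias correction

    def step(self, params, grads):
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            # "+" rather than "-": the objective is maximised, as in Algorithm 1
            updated.append(p + self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


def objective(a, b):
    """Hypothetical concave toy objective; its maximum is at a = 0.25, b = -1.25."""
    return -(a - 1) ** 2 - (b + 2) ** 2 - 0.5 * (a - b) ** 2


def grad_a(a, b):
    """Partial derivative of the toy objective w.r.t. a."""
    return -2 * (a - 1) - (a - b)


def grad_b(a, b):
    """Partial derivative of the toy objective w.r.t. b."""
    return -2 * (b + 2) + (a - b)


def alternating_ascent(inner_steps=50, max_outer=100, tol=1e-6):
    a, b = 0.0, 0.0
    opt_a, opt_b = Adam(1), Adam(1)  # separate optimiser state per parameter block
    prev = objective(a, b)
    for outer in range(1, max_outer + 1):
        for _ in range(inner_steps):  # first loop: update a while b is held fixed
            (a,) = opt_a.step([a], [grad_a(a, b)])
        for _ in range(inner_steps):  # second loop: update b while a is held fixed
            (b,) = opt_b.step([b], [grad_b(a, b)])
        cur = objective(a, b)
        if abs(cur - prev) < tol:  # stop once the objective has converged
            break
        prev = cur
    return a, b, cur, outer


if __name__ == "__main__":
    a, b, value, n_outer = alternating_ascent()
    print(f"a = {a:.3f}, b = {b:.3f}, objective = {value:.4f}, outer iterations = {n_outer}")
```

Calling `alternating_ascent()` returns values close to the toy maximiser (a = 0.25, b = -1.25). Keeping one optimiser state per parameter block mirrors the two inner loops of the algorithm; the tolerance `tol` stands in for "until the ELBO has converged".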

6. Empirical Experiments

6.1. Experiment Setup

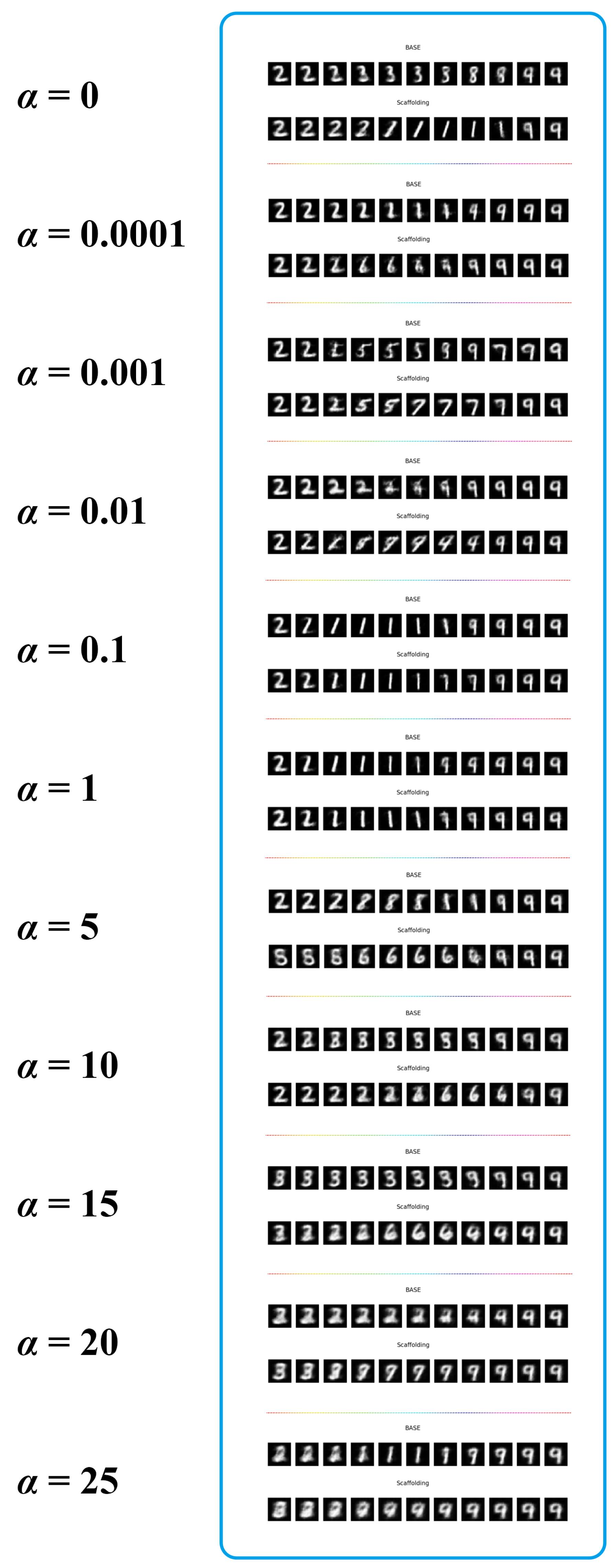

6.2. Generative Task

6.3. Discriminative Task

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| VAE | Variational Autoencoder |
| EVAE | Encapsulated Variational Autoencoders |
| BNN | Bayesian Neural Network |
| ReLU | Rectified Linear Unit |
| ADVI | Automatic Differentiation Variational Inference |
| S3C | Spike-and-Slab Sparse-Coding Approach |
| VAT | Virtual Adversarial Training |
| FC | Fully Connected Layer |
| Conv | Convolutional Layer |
| ANOVA | Analysis of Variance |
| SaaS | Speed as a Supervisor |

Appendix A. Collapsed Generation

References

1. Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

2. Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. Human-level concept learning through probabilistic program induction. Science 2015, 350, 1332–1338.

3. Salakhutdinov, R. Learning deep generative models. Annu. Rev. Stat. Its Appl. 2015, 2, 361–385.

4. Kingma, D.P.; Welling, M. Auto-encoding variational Bayes. arXiv 2013, arXiv:1312.6114.

5. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

6. Germain, M.; Gregor, K.; Murray, I.; Larochelle, H. MADE: Masked autoencoder for distribution estimation. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 881–889.

7. Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2172–2180.

8. Ng, A.Y.; Jordan, M.I. On discriminative vs. generative classifiers: A comparison of logistic regression and naive Bayes. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 9–14 December 2002; pp. 841–848.

9. Ulusoy, I.; Bishop, C.M. Generative versus discriminative methods for object recognition. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 258–265.

10. Xiong, H.; Rodríguez-Sánchez, A.J.; Szedmak, S.; Piater, J. Diversity priors for learning early visual features. Front. Comput. Neurosci. 2015, 9, 104.

11. Buesing, L.; Macke, J.H.; Sahani, M. Learning stable, regularised latent models of neural population dynamics. Network Comput. Neural Syst. 2012, 23, 24–47.

12. Desjardins, G.; Courville, A.; Bengio, Y. Disentangling factors of variation via generative entangling. arXiv 2012, arXiv:1210.5474.

13. Tenenbaum, J.B.; Freeman, W.T. Separating style and content with bilinear models. Neural Comput. 2000, 12, 1247–1283.

14. Higgins, I.; Matthey, L.; Pal, A.; Burgess, C.; Glorot, X.; Botvinick, M.; Mohamed, S.; Lerchner, A. beta-VAE: Learning basic visual concepts with a constrained variational framework. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

15. Mathieu, M.F.; Zhao, J.J.; Zhao, J.; Ramesh, A.; Sprechmann, P.; LeCun, Y. Disentangling factors of variation in deep representation using adversarial training. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 5040–5048.

16. Kulkarni, T.D.; Whitney, W.F.; Kohli, P.; Tenenbaum, J. Deep convolutional inverse graphics network. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2539–2547.

17. Yakhnenko, O.; Silvescu, A.; Honavar, V. Discriminatively trained Markov model for sequence classification. In Proceedings of the Fifth IEEE International Conference on Data Mining (ICDM’05), Houston, TX, USA, 27–30 November 2005; pp. 498–505.

18. Jaakkola, T.; Haussler, D. Exploiting generative models in discriminative classifiers. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 29 November–4 December 1999; pp. 487–493.

19. Rasmus, A.; Berglund, M.; Honkala, M.; Valpola, H.; Raiko, T. Semi-supervised learning with ladder networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 3546–3554.

20. Goodfellow, I.; Courville, A.; Bengio, Y. Large-scale feature learning with spike-and-slab sparse coding. arXiv 2012, arXiv:1206.6407.

21. Miyato, T.; Maeda, S.I.; Ishii, S.; Koyama, M. Virtual adversarial training: A regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2018, arXiv:1704.03976.

22. Csiszár, I.; Shields, P.C. Information theory and statistics: A tutorial. In Foundations and Trends® in Communications and Information Theory; Now Publishers: Hanover, MA, USA, 2004; Volume 1, pp. 417–528.

23. Blei, D.M.; Kucukelbir, A.; McAuliffe, J.D. Variational Inference: A Review for Statisticians. J. Am. Stat. Assoc. 2017, 112, 859–877.

24. Barlow, H.B. Possible principles underlying the transformation of sensory messages. Sens. Commun. 1961, 1, 217–234.

25. Schenk, T.; McIntosh, R.D. Do we have independent visual streams for perception and action? Cogn. Neurosci. 2010, 1, 52–62.

26. Koshizen, T.; Akatsuka, K.; Tsujino, H. A computational model of attentive visual system induced by cortical neural network. Neurocomputing 2002, 44, 881–887.

27. Oliver, A.; Odena, A.; Raffel, C.A.; Cubuk, E.D.; Goodfellow, I. Realistic evaluation of deep semi-supervised learning algorithms. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 3235–3246.

28. Tieleman, T.; Hinton, G. Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude. Coursera Neural Networks Mach. Learn. 2012, 4, 26–31.

29. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

30. Borji, A. Pros and Cons of GAN Evaluation Measures. arXiv 2018, arXiv:1802.03446.

31. Cicek, S.; Fawzi, A.; Soatto, S. SaaS: Speed as a Supervisor for Semi-supervised Learning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 149–163.

32. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1195–1204.

33. Grandvalet, Y.; Bengio, Y. Semi-supervised learning by entropy minimization. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005; pp. 529–536.

| Dataset | Model Architecture and Training Configurations |
|---|---|
| MNIST digits | Input: 784 (flattened 28 × 28 × 1) |
| | Base encoder: FC 500, 300; ReLU activation |
| | Scaffolding encoder: FC 500, 200, 100; ReLU activation |
| | Decoders: FC 200, 784; sigmoid activation, Gaussian |
| | Batch size: 100 |
| | Number of batches: 100 |
| | Optimiser: RMSprop [28] |
| CIFAR-10 (4K) | Input: 32 × 32 × 3 |
| | Base encoder: Conv 32 × 3 × 3 (stride 2), pooling (stride 2), Conv 64 × 3 × 3 (stride 2), FC 200; ReLU activation |
| | Scaffolding encoder: Conv 32 × 3 × 3 (stride 2), pooling (stride 2), Conv 32 × 3 × 3 (stride 2), FC 200, categorical; ReLU activation |
| | Batch size: 100 |
| | Number of batches: 50 |
| | Optimiser: Adam [29] |
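The architecture table does not state padding, pooling window size or latent dimensionality, so the sketch below only illustrates how the listed layer sizes chain together under explicit assumptions. It computes the flattened feature size that would enter the FC 200 layer of each CIFAR-10 encoder, for a padding of 1 and for no padding, assuming a 2 × 2 pooling window; it also counts the weights and biases of the MNIST fully connected encoders, assuming standard dense layers with biases on the 784-dimensional input. The function names `conv_out`, `cifar_encoder_flat_size` and `fc_param_count` are hypothetical.

```python
def conv_out(n, kernel, stride, padding=0):
    """Output height/width of a square conv or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def cifar_encoder_flat_size(padding, last_channels):
    """Flattened size entering FC 200 for a 32 x 32 input.

    Assumptions (not stated in the table): both convs use the same `padding`,
    and pooling uses a 2 x 2 window with no padding.
    """
    n = 32
    n = conv_out(n, kernel=3, stride=2, padding=padding)  # Conv 32 x 3 x 3, stride 2
    n = conv_out(n, kernel=2, stride=2)                   # pooling, stride 2
    n = conv_out(n, kernel=3, stride=2, padding=padding)  # second conv, stride 2
    return n * n * last_channels


def fc_param_count(sizes):
    """Number of weights plus biases in a stack of fully connected layers, e.g. [784, 500, 300]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    # CIFAR-10: base encoder's second conv has 64 filters, scaffolding encoder's has 32
    print(cifar_encoder_flat_size(padding=1, last_channels=64))  # 1024 (4 x 4 x 64)
    print(cifar_encoder_flat_size(padding=0, last_channels=64))  # 576 (3 x 3 x 64)
    print(cifar_encoder_flat_size(padding=1, last_channels=32))  # 512 (4 x 4 x 32)
    # MNIST fully connected encoders on the flattened 784-dimensional input
    print(fc_param_count([784, 500, 300]))       # 542800 (base encoder)
    print(fc_param_count([784, 500, 200, 100]))  # 512800 (scaffolding encoder)
```

The padding choice changes the input width of the FC 200 layer (1024 versus 576 features for the base encoder), which is why it is shown for both settings rather than fixed to one.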

| Value of … | 0 | 1 | 5 | 10 | 15 | 20 | 25 |
|---|---|---|---|---|---|---|---|
| Average similarity rating | | | | | | | |

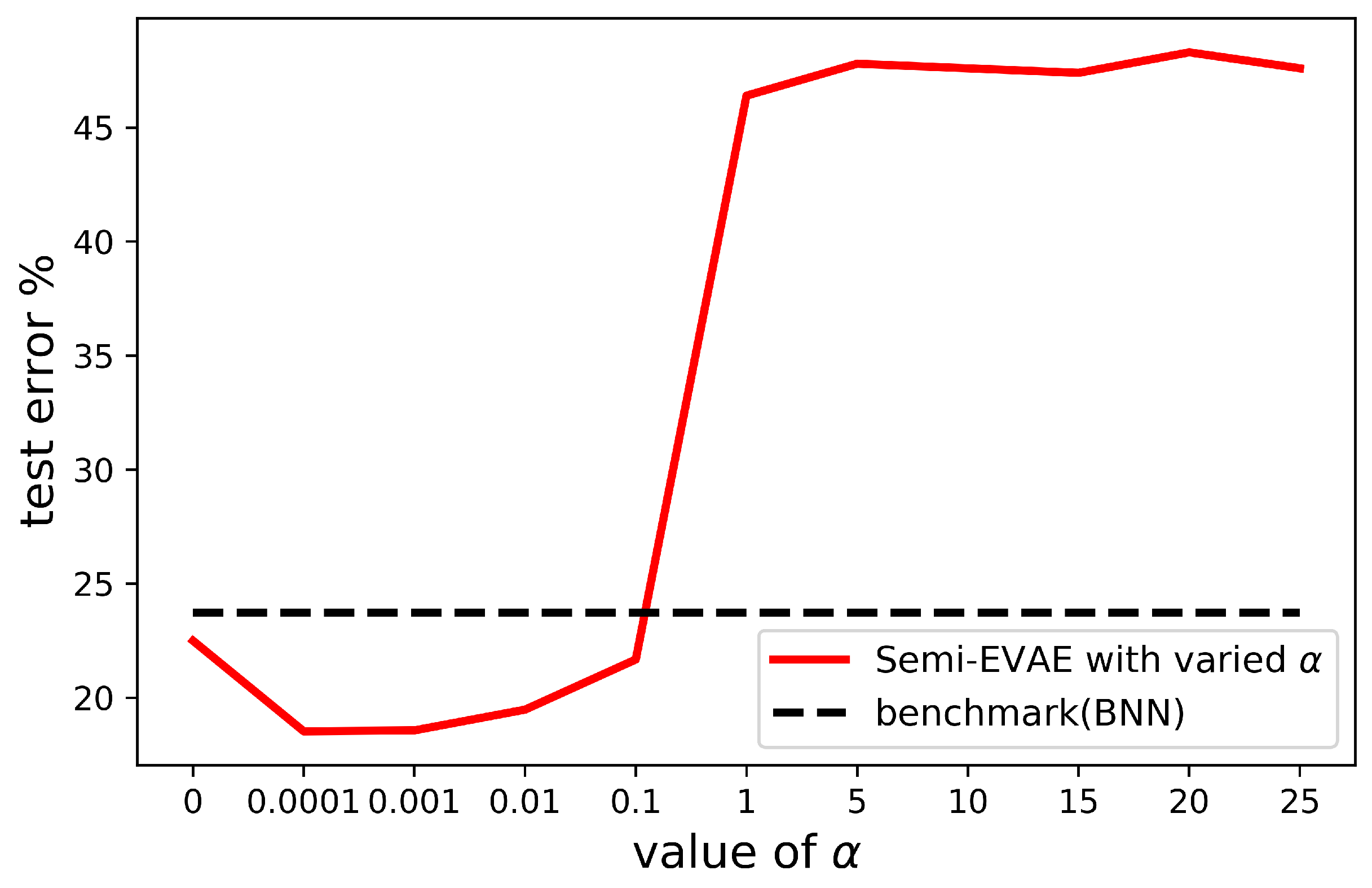

| Model | Test Error (%) |
|---|---|
| Our implementation | |
| S3C [20] | |
| Ladder networks [19] | |
| VAT [21] | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Semi-supervised EVAE (…) | |
| Benchmark: supervised BNN | |
| Other implementations | |
| VAT [21] (in [27]) | |
| VAT + EntMin [21,33] (in [31]) | |
| Mean Teacher [32] (in [27]) | |
| Mean Teacher [32] (in [31]) | |
| SaaS [31] (in [31]) | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bai, W.; Quan, C.; Luo, Z.-W. Improving Generative and Discriminative Modelling Performance by Implementing Learning Constraints in Encapsulated Variational Autoencoders. Appl. Sci. 2019, 9, 2551. https://doi.org/10.3390/app9122551
