Depression Detection Using Relative EEG Power Induced by Emotionally Positive Images and a Conformal Kernel Support Vector Machine

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

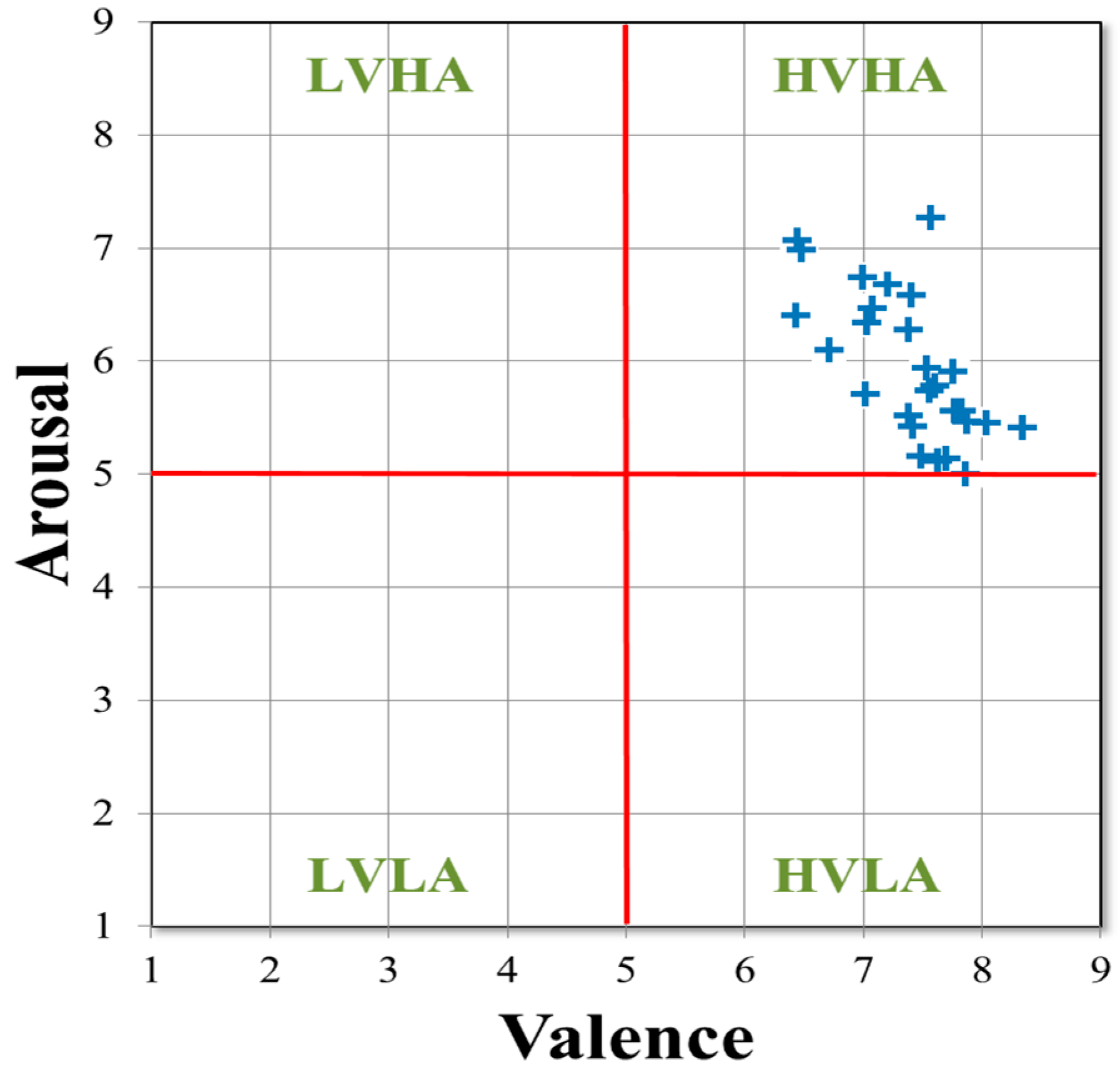

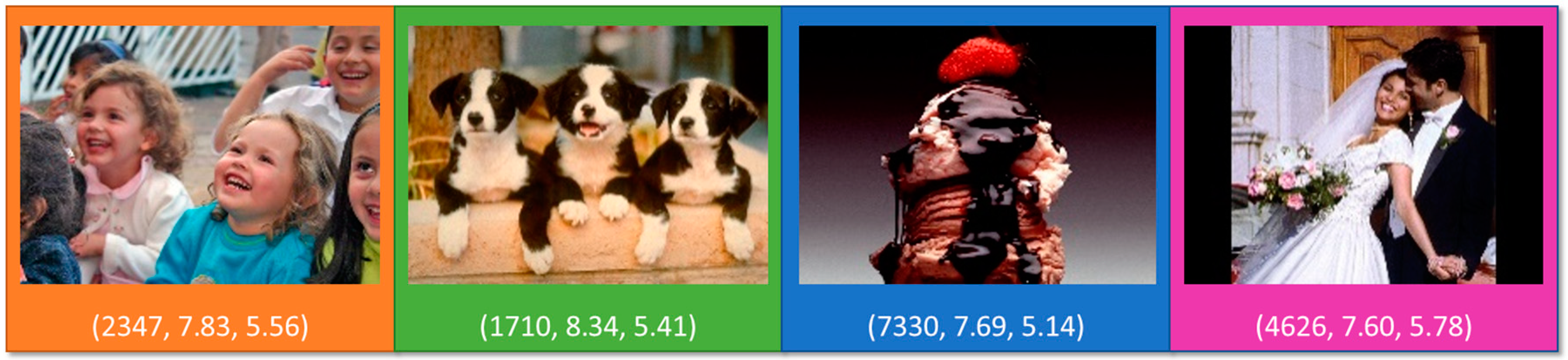

2.2. Emotional Stimuli

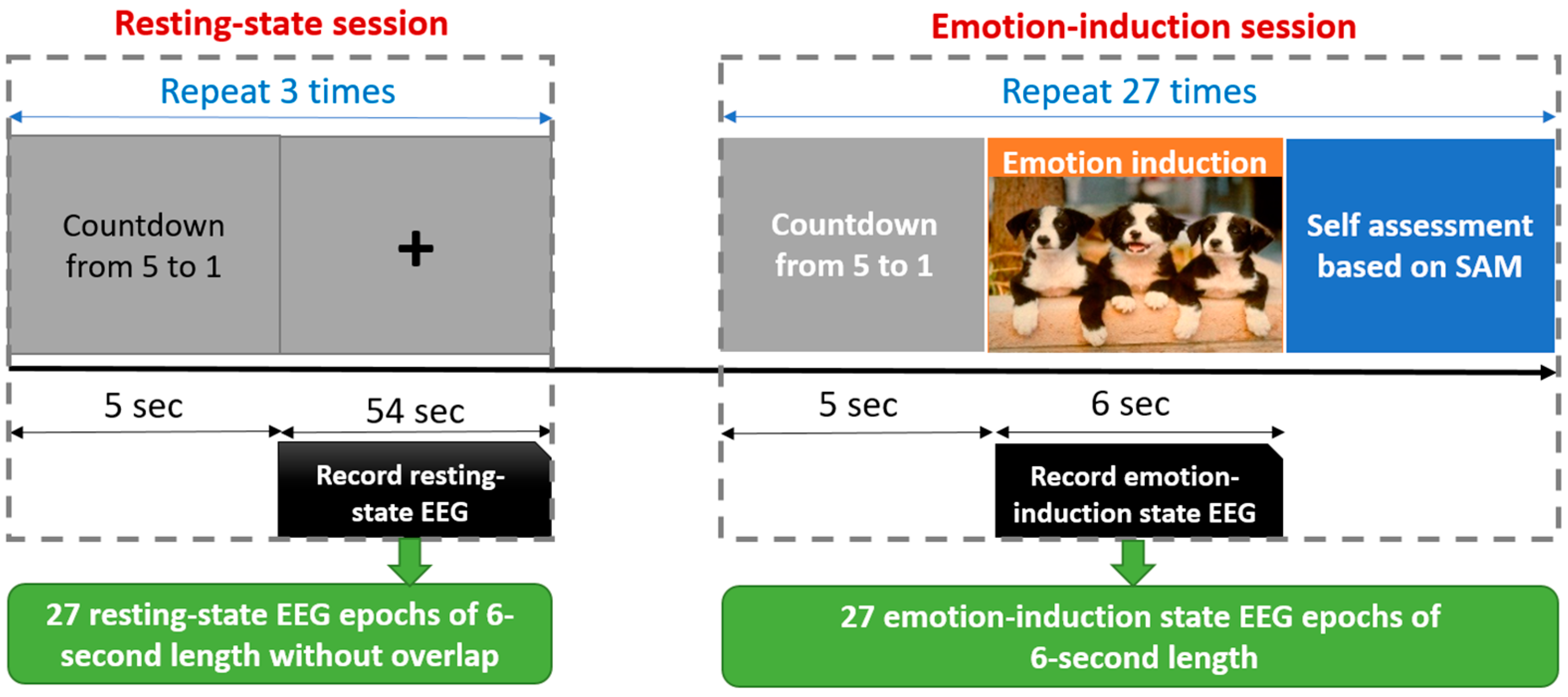

2.3. Resting-State and Emotion-Induction EEG Data Collection

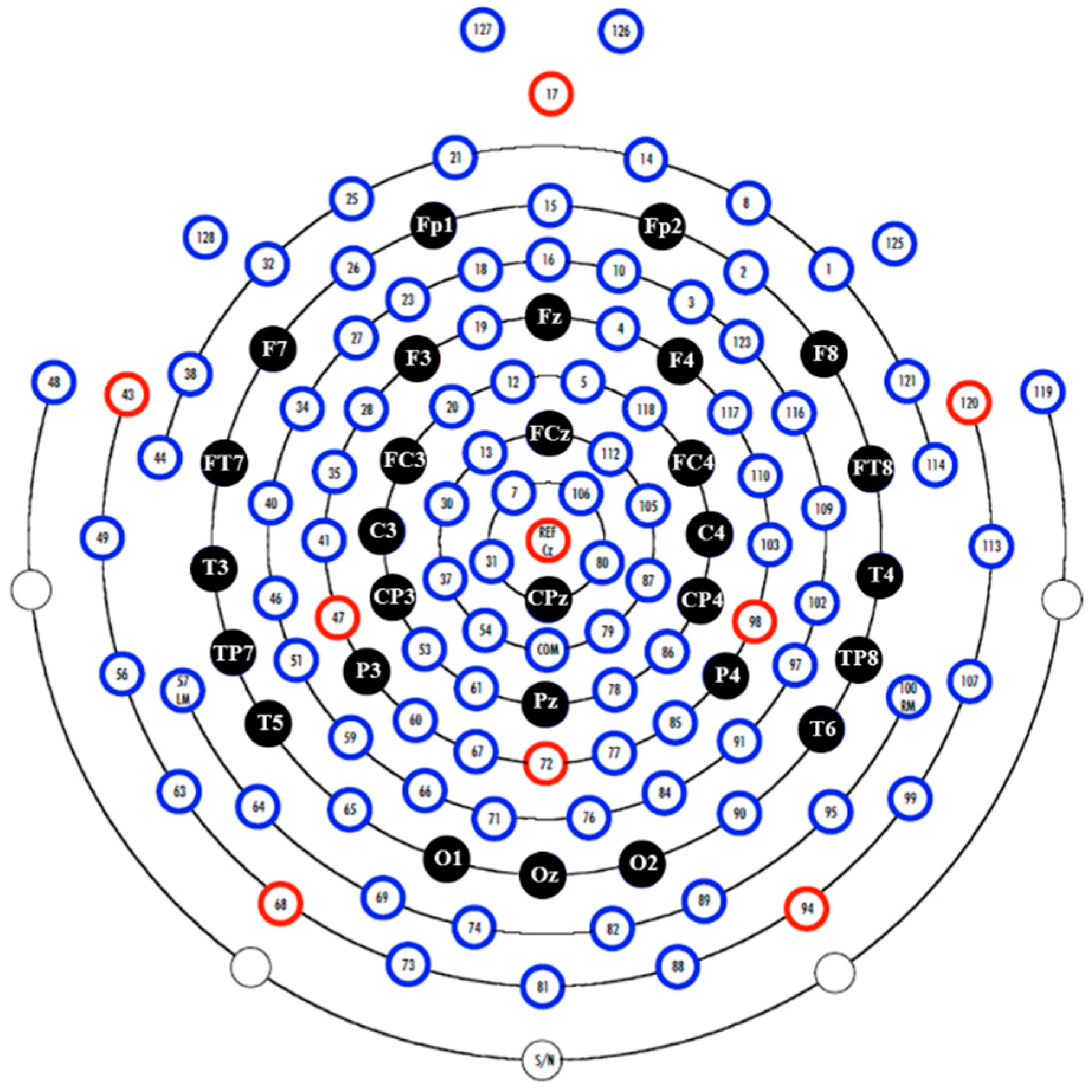

2.4. Apparatus, Settings, and EEG Preprocessing

2.5. Feature Extraction

2.6. Classification

2.6.1. LDA

2.6.2. QDA

2.6.3. SVM

2.6.4. CK-SVM
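
CK-SVMs generally build on the conformal transformation of Wu and Amari (2002). That method rescales a base kernel as K̃(x, x′) = D(x) D(x′) K(x, x′). The factor D(x) is large near the support vectors of a first-pass SVM, so the induced metric is magnified around the decision boundary. The sketch below illustrates that general technique only. It assumes a Gaussian RBF base kernel and a simplified D(x) with one shared width τ; the original proposal adapts the width per support vector. The exact factor and parameters used in this study may differ. The function names and the parameters `gamma` and `tau` are hypothetical.

```python
import math
from functools import partial


def sq_dist(x, y):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def rbf(x, y, gamma=1.0):
    """Base Gaussian RBF kernel K(x, y) = exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sq_dist(x, y))


def conformal_factor(x, support_vectors, tau=1.0):
    """Simplified D(x) = sum_i exp(-||x - sv_i||^2 / (2 * tau^2)); largest near support vectors."""
    return sum(math.exp(-sq_dist(x, sv) / (2 * tau ** 2)) for sv in support_vectors)


def conformal_kernel(x, y, support_vectors, gamma=1.0, tau=1.0):
    """Conformally transformed kernel K~(x, y) = D(x) * D(y) * K(x, y)."""
    return (conformal_factor(x, support_vectors, tau)
            * conformal_factor(y, support_vectors, tau)
            * rbf(x, y, gamma))


def gram_matrix(points, kernel):
    """Gram matrix [kernel(p, q)] for a list of points."""
    return [[kernel(p, q) for q in points] for p in points]


if __name__ == "__main__":
    # Hypothetical support vectors from a first-pass SVM on 2-D toy data.
    svs = [(0.0, 0.0), (1.0, 1.0)]
    kernel = partial(conformal_kernel, support_vectors=svs, gamma=0.5, tau=1.0)
    print(gram_matrix([(0.5, 0.5), (3.0, 3.0)], kernel))
```

The product D(x) D(x′) K(x, x′) is still a valid (positive semidefinite) kernel whenever K is. In the two-stage scheme this sketch assumes, the SVM would be retrained on the Gram matrix of K̃, and τ would be tuned together with the other SVM parameters.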

2.7. Performance Evaluation

2.7.1. LOPO-CV
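
Leave-one-participant-out cross-validation holds out one participant per fold and trains on all the others. The model is then tested on the held-out participant. Accuracy is the fraction of participants classified correctly. The sketch below assumes one feature vector per participant. It uses a hypothetical nearest-class-mean classifier (`nearest_mean_fit`, `nearest_mean_predict`) as a stand-in for LDA, QDA, SVM, or CK-SVM. It is an illustration of the protocol, not the authors' code.

```python
import math


def nearest_mean_fit(X, y):
    """Hypothetical stand-in classifier: store the mean feature vector of each class."""
    means = {}
    for label in sorted(set(y)):
        rows = [x for x, lab in zip(X, y) if lab == label]
        means[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return means


def nearest_mean_predict(means, x):
    """Assign x to the class whose mean is closest in Euclidean distance."""
    return min(means, key=lambda label: math.dist(x, means[label]))


def lopo_accuracy(X, y, fit, predict):
    """Leave-one-participant-out CV, assuming one feature vector per participant.

    Each fold trains on every participant except one and tests on the one left out;
    the result is the fraction of participants classified correctly.
    """
    correct = 0
    for i in range(len(X)):
        model = fit(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        correct += predict(model, X[i]) == y[i]
    return correct / len(X)


if __name__ == "__main__":
    # Toy one-feature data for six hypothetical participants (labels 0 and 1).
    X = [[0.0], [0.2], [0.1], [1.0], [0.9], [1.1]]
    y = [0, 0, 0, 1, 1, 1]
    print(lopo_accuracy(X, y, nearest_mean_fit, nearest_mean_predict))
```

Any feature ranking or parameter search should be repeated inside each fold, using the training participants only. This keeps the held-out participant from influencing model selection.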

2.7.2. Parameter Optimization

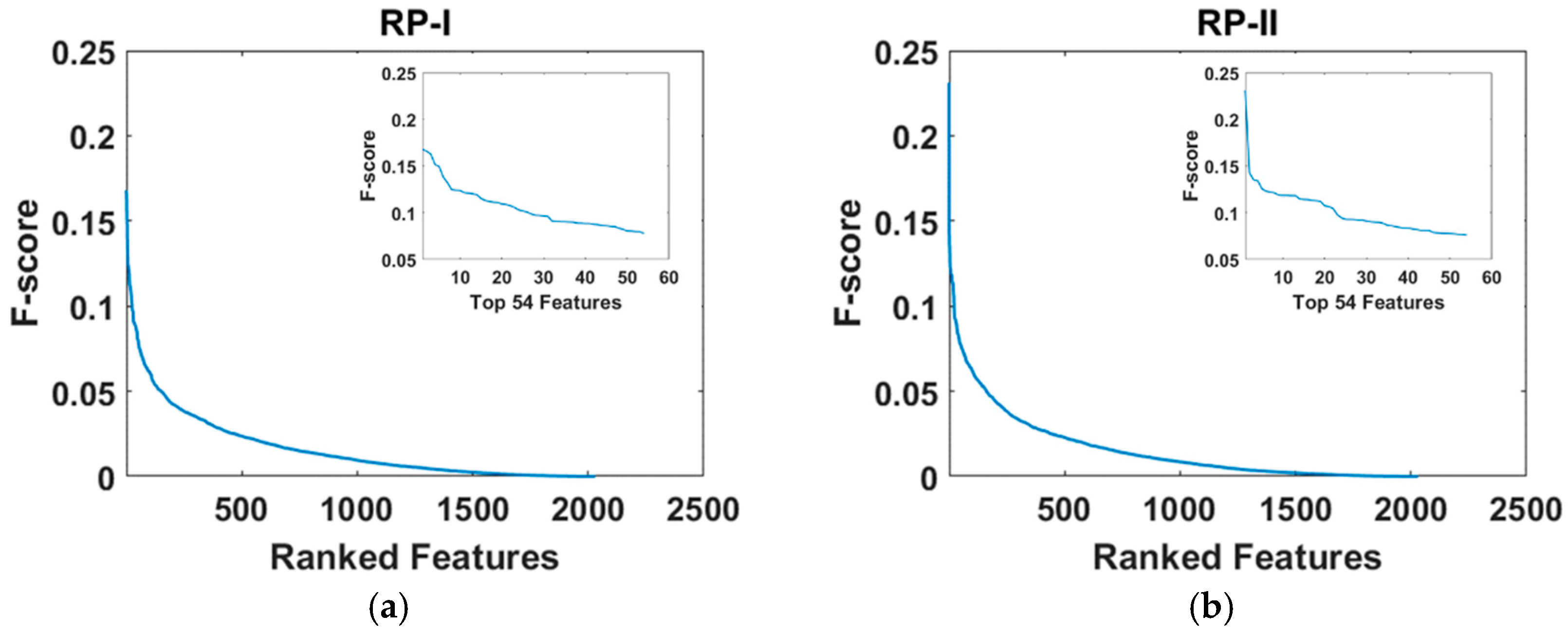

2.7.3. Feature Dimension Reduction
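
Sections 3.2 and 3.3 rank features by F-score and keep the top N. The sketch below assumes the widely used F-score definition of Chen and Lin. For each feature, that score is the squared distance of each class mean from the overall mean, summed over the two classes. The sum is then divided by the sum of the two within-class sample variances. The function names and the 1/0 label convention are hypothetical.

```python
from statistics import mean, variance


def f_score(pos, neg):
    """F-score of one feature: squared deviations of the two class means from the
    overall mean, divided by the sum of the two within-class sample variances."""
    overall = mean(pos + neg)
    between = (mean(pos) - overall) ** 2 + (mean(neg) - overall) ** 2
    within = variance(pos) + variance(neg)  # statistics.variance uses n - 1
    return between / within  # raises ZeroDivisionError if both classes are constant


def top_n_features(X, y, n):
    """Indices of the n columns of X with the largest F-scores.

    Assumes labels 1 and 0 for the two groups and at least two samples per group.
    """
    scores = []
    for j in range(len(X[0])):
        pos = [row[j] for row, label in zip(X, y) if label == 1]
        neg = [row[j] for row, label in zip(X, y) if label == 0]
        scores.append(f_score(pos, neg))
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:n]
```

For example, with four samples `[[1.0, 5.0], [1.2, 3.0], [3.0, 4.0], [3.2, 6.0]]` and labels `[1, 1, 0, 0]`, column 0 scores 50 and column 1 scores 0.125. Column 0 is therefore ranked first.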

2.8. Statistical Analysis

3. Results

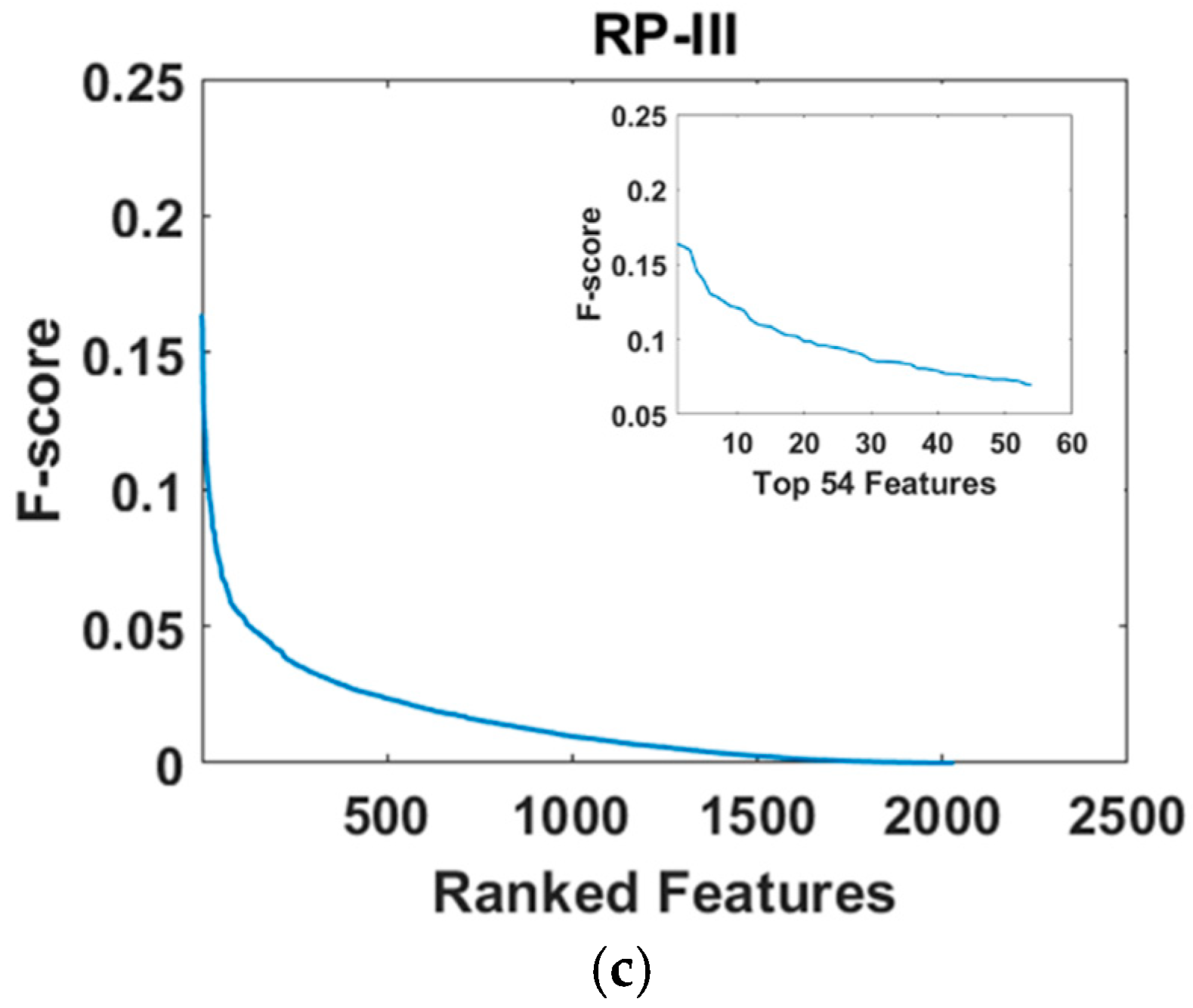

3.1. Statistical Analysis Results of Subjective Ratings of Valence and Arousal

3.2. LOPO-CV Classification Results Based on LDA Classifier and Top-N-F-Score-Ranked Features

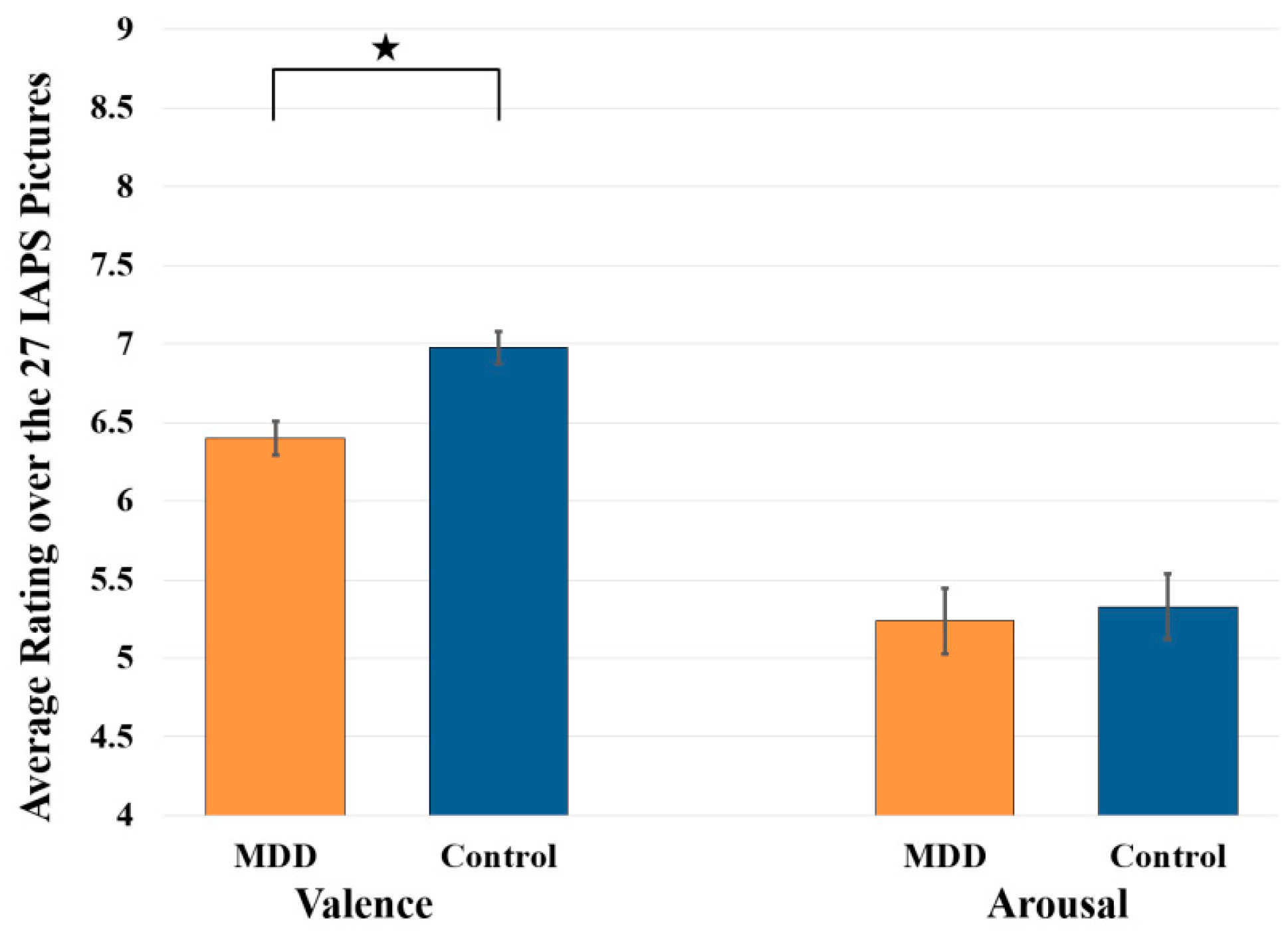

3.3. Comparison of Top-N-F-Score-Ranked Features Across the Three Types of Relative Power During Resting State and Emotion-Induction State

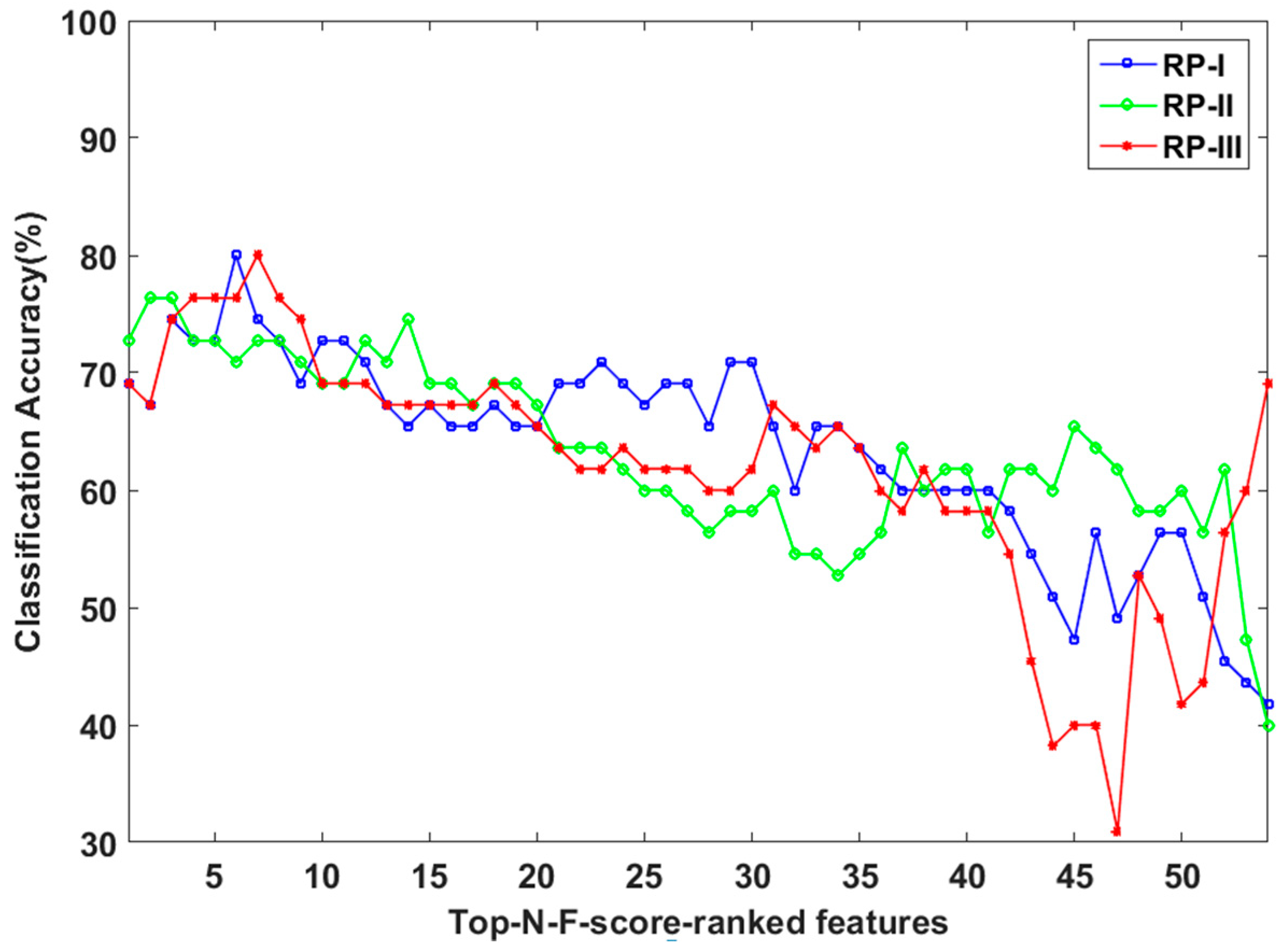

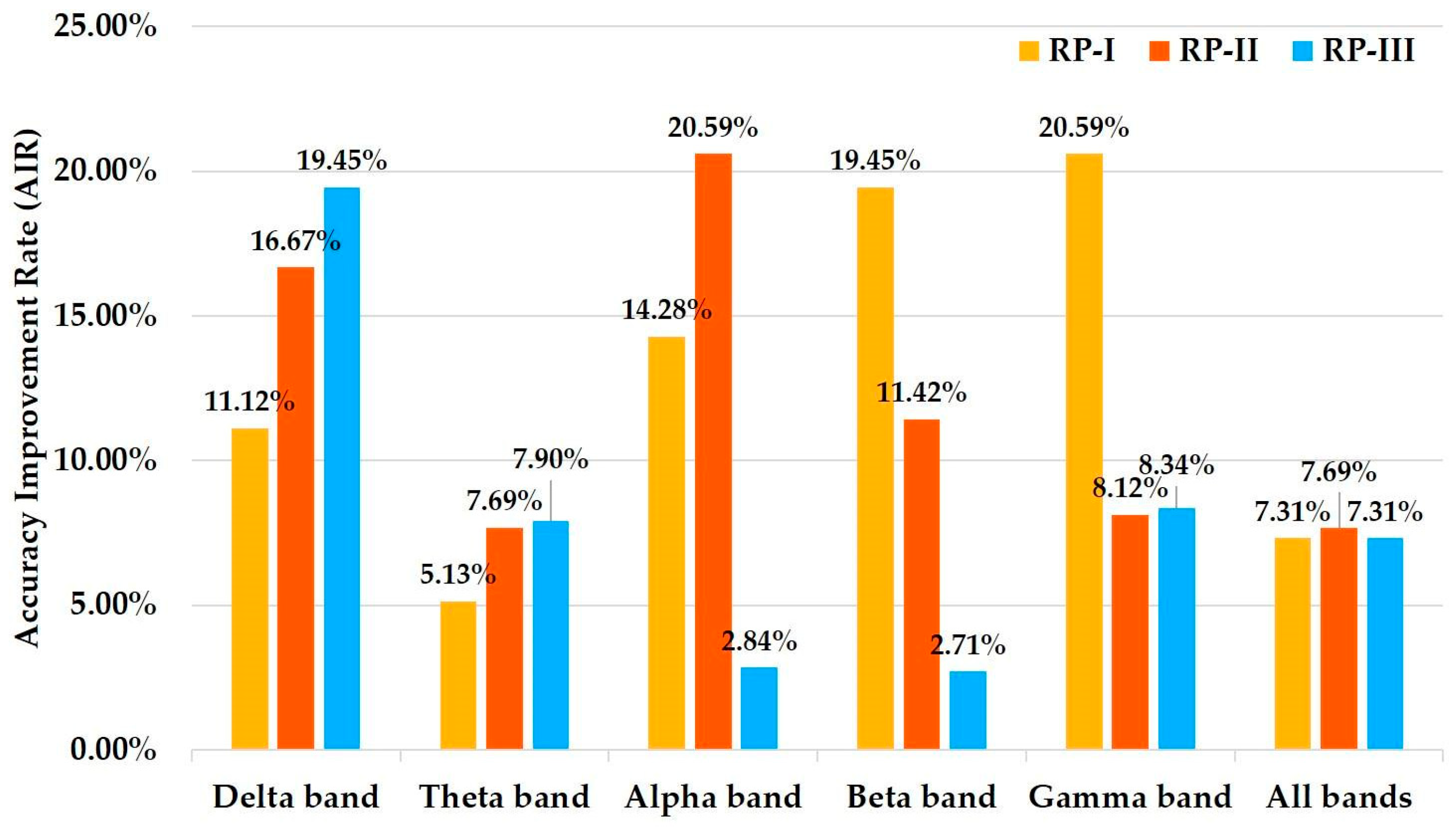

3.4. Comparison of LOPO-CV Classification Performance Among Different Classifiers in the Emotion-Induction State

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Feature | State | Delta Band | Theta Band | Alpha Band | Beta Band | Gamma Band | All Bands |
|---|---|---|---|---|---|---|---|
| RP-I | Resting | 65.45% (2) | 70.91% (7) | 63.64% (1) | 65.45% (44) | 61.82% (3) | 74.55% (7) |
| | EI | 72.73% (2) | 74.55% (2) | 72.73% (31) | 78.18% (45) | 74.55% (2) | 80.00% (6) |
| RP-II | Resting | 65.45% (53) | 70.91% (24) | 61.82% (3) | 63.64% (32) | 67.27% (3) | 70.91% (3) |
| | EI | 76.36% (13) | 76.36% (35) | 74.55% (4) | 70.91% (19) | 72.73% (2) | 76.36% (2) |
| RP-III | Resting | 65.45% (2) | 69.09% (5) | 63.64% (1) | 67.27% (39) | 65.45% (35) | 74.55% (7) |
| | EI | 78.18% (2) | 74.55% (2) | 65.45% (3) | 69.09% (2) | 70.91% (2) | 80.00% (7) |

| Ranking | RP-I (Resting) | RP-II (Resting) | RP-III (Resting) | RP-I (EI) | RP-II (EI) | RP-III (EI) |
|---|---|---|---|---|---|---|
| 1 | FP1-FP2(α) | T4-CP4(γ) | FP1-FP2(α) | FP1-FP2(δ) | FP1-FP2(α) | FP1-FP2(δ) |
| 2 | Fz-FCz(θ) | F8-C4(γ) | Fz-FCz(θ) | FP1-FP2(θ) | TP7-T6(β) | FP1-FP2(θ) |
| 3 | T4-CP4(γ) | FC3-CP3(θ) | FP1-FP2(θ) | TP7-T6(β) | | TP7-T6(β) |
| 4 | FT8-CP4(γ) | | FT8-T4(δ) | C3-TP7(γ) | | F4-CP3(θ) |
| 5 | FP1-FP2(θ) | | CP3-CP4(γ) | C3-CP3(γ) | | C3-CP3(γ) |
| 6 | FT8-T4(δ) | | FT8-CP4(γ) | F4-CP3(θ) | | F4-CP3(α) |
| 7 | F8-C4(γ) | | FT8-T4(θ) | | | TP7-T6(γ) |
| # electrodes | 9 | 6 | 9 | 7 | 4 | 7 |

| Feature | LDA | QDA | SVM | CK-SVM |
|---|---|---|---|---|
| RP-I (6) | 80.00% | 65.45% | 81.82% | 81.82% |
| RP-II (2) | 76.36% | 74.55% | 78.18% | 80.00% |
| RP-III (7) | 80.00% | 70.91% | 80.00% | 83.64% |
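
Every accuracy in the tables above is a multiple of 1/55 at two-decimal precision. This is consistent with LOPO-CV over 55 participants, although that count is an inference and is not stated in this excerpt. Under that assumption, each percentage can be converted back into a number of correctly classified participants. The helper `implied_correct` and the constant `N_PARTICIPANTS` below are hypothetical.

```python
# Hypothetical helper: convert a reported LOPO-CV accuracy back to a participant count.
N_PARTICIPANTS = 55  # assumption inferred from the reported percentages


def implied_correct(percent, n=N_PARTICIPANTS):
    """Return (k, consistent): k correctly classified participants out of n, and
    whether k / n reproduces the reported percentage at two-decimal precision."""
    k = round(percent / 100 * n)
    return k, round(100 * k / n, 2) == percent


# Emotion-induction accuracies copied from the classifier-comparison table.
table = {
    "RP-I (6)": {"LDA": 80.00, "QDA": 65.45, "SVM": 81.82, "CK-SVM": 81.82},
    "RP-II (2)": {"LDA": 76.36, "QDA": 74.55, "SVM": 78.18, "CK-SVM": 80.00},
    "RP-III (7)": {"LDA": 80.00, "QDA": 70.91, "SVM": 80.00, "CK-SVM": 83.64},
}

for feature, row in table.items():
    counts = {clf: implied_correct(acc)[0] for clf, acc in row.items()}
    print(feature, counts)
```

Under this assumption, the best CK-SVM result (RP-III, 83.64%) and the corresponding LDA and SVM results (80.00%) correspond to 46 and 44 correctly classified participants. The two results therefore differ by two participants.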

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, C.-T.; Dillon, D.G.; Hsu, H.-C.; Huang, S.; Barrick, E.; Liu, Y.-H. Depression Detection Using Relative EEG Power Induced by Emotionally Positive Images and a Conformal Kernel Support Vector Machine. Appl. Sci. 2018, 8, 1244. https://doi.org/10.3390/app8081244
