1. Introduction

This article describes a universal model for a home well suited for the well-being of the elderly and suggests how some technologies and types of interaction may be used to improve their lives. Some of the technologies and solutions proposed are already mainstream and present in some modern devices. Others, although not yet widely disseminated, may become plausible solutions in the future. The model is universal and may be adapted for different types of people, but our particular case study focuses mostly on the elderly. They are undeniably a group of society whose lives can be enriched by implementations of some of the topics proposed here.

The model assumes communication with several electronic devices in a home in order to monitor and keep track of user activities. This is truly one of the major challenges, since it is important to gather information that allows us to determine the condition of an elder living in her/his home. This can involve several aspects, such as health, the activities carried out and even the emotional state. Ways of measuring these aspects are therefore required to define a model that can interact with an elder in a positive and effective manner.

In the field of health, there is a lot of previous work on IoT intelligent devices intended for the elderly [1,2,3,4], and several solutions have been applied successfully. Indeed, despite some complexity, some of these implementations are quite straightforward. Physical activities and emotional states, however, are somewhat more complex to measure than health.

1.1. Measuring Physical Activity

To measure physical activity, the Metabolic Equivalent (MET) may be used. MET is a physiological measure expressing the energy cost of physical activities. It is the measure used in “The Adult Compendium of Physical Activities”, conceptualized by Dr. Bill Haskell from Stanford University and developed for use in epidemiologic studies to standardize the assignment of MET intensities in physical activity questionnaires [5]. We opted for the MET because it has been used extensively and effectively in a large variety of studies related to the measurement of physical activity, e.g., [6,7,8].

If a person adopts a sedentary behaviour, such as simply sitting quietly, possibly while watching television or listening to music, her/his energy expenditure will be in the range of 1.0 to 1.5 MET. In practical terms, this corresponds to an energy expenditure of 1.0 to 1.5 kcal/kg/hour. If, instead, the person is performing more active tasks, such as moderate bicycling on a stationary bike, the activity may have a MET of about 6.8 (considerably less than riding uphill on an outdoor bike, which can reach as much as 16.0 MET).
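The conversion above can be made concrete with a small calculation. The following is a minimal sketch, assuming the approximation stated in the text that 1 MET corresponds to about 1 kcal per kilogram of body mass per hour; the function name `energy_kcal` and the 70 kg body mass in the usage lines are hypothetical, chosen only for illustration.

```python
def energy_kcal(met: float, body_mass_kg: float, hours: float) -> float:
    """Approximate energy expenditure in kcal, assuming 1 MET ~ 1 kcal/kg/hour."""
    if met <= 0 or body_mass_kg <= 0 or hours < 0:
        raise ValueError("MET and body mass must be positive; hours must be non-negative")
    return met * body_mass_kg * hours


# MET values quoted in the text; the 70 kg body mass is illustrative only.
sitting = energy_kcal(1.3, 70, 1.0)  # sitting quietly for one hour
biking = energy_kcal(6.8, 70, 0.5)   # moderate stationary bicycling for 30 minutes
print(f"sitting: {sitting:.1f} kcal, bike: {biking:.1f} kcal")
```

Because the MET already normalizes by body mass, the same activity log yields different kcal totals for different residents, which is one reason the model treats well-being as personal.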

1.2. Detecting Emotions

Another aspect that may be measured concerns the emotional state of a person. One way of achieving this goal is to determine the user's emotions at different times. For this purpose, the Facial Action Coding System (FACS) may be useful; it has been used successfully in a large variety of fields to categorize facial expressions, e.g., [9,10,11,12]. FACS is a system for describing facial movement based on an anatomical analysis of observable facial movements, and it is often considered a common standard for systematically categorizing the physical expression of emotions. This system, which has proven useful to psychologists and animators, encodes the movements of individual facial muscles from slight, instantaneous changes in facial appearance. It has been established as an automated system that detects faces (e.g., in videos and, most recently, through automatic real-time recognition using 3D technology [13]), extracts their geometrical features and then produces temporal profiles of each facial movement.

It was first published by Ekman and Friesen in 1978 [14]. Since then, it has been revised and updated several times, for instance in 2002 by Paul Ekman, Wallace V. Friesen and Joseph C. Hager [15] and, more recently, in 2017 (Facial Action Coding System 2.0: Manual of Scientific Codification of the Human Face, by Freitas-Magalhães [13]). This recent instrument to measure and scientifically understand emotion in the human face presents 2000 segments in 4K, using 3D technology and automatic, real-time recognition.

FACS uses Action Units (AUs), which represent the fundamental actions of individual muscles or groups of muscles. Emotions can therefore be represented by combinations of AUs, each of which can also be scored by its intensity, ranging from minimal to maximal.
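To illustrate how an emotion can be represented as a combination of AUs with intensities, a minimal sketch follows. It assumes the commonly cited prototype combinations (happiness as AU6 cheek raiser plus AU12 lip corner puller; sadness as AU1 plus AU4 plus AU15) and maps the FACS intensity letters A (trace) to E (maximum) onto 1 to 5. The detector output format and the name `score_emotions` are hypothetical; a real system would use a fuller prototype table.

```python
# FACS intensity letters, from A (trace) to E (maximum), mapped to 1..5.
INTENSITY = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}

# Commonly cited prototype AU combinations (an assumption, not an exhaustive table).
PROTOTYPES = {
    "happiness": {6, 12},   # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},  # inner brow raiser + brow lowerer + lip corner depressor
}


def score_emotions(detected: dict[int, str]) -> dict[str, float]:
    """For each prototype whose AUs are all present, return the mean intensity (1..5).

    `detected` maps an AU number to its FACS intensity letter, e.g. {6: "C", 12: "D"}.
    """
    scores = {}
    for emotion, aus in PROTOTYPES.items():
        if aus.issubset(detected):  # iterating a dict yields its keys (AU numbers)
            levels = [INTENSITY[detected[au]] for au in aus]
            scores[emotion] = sum(levels) / len(levels)
    return scores


print(score_emotions({6: "C", 12: "D", 25: "A"}))
```

Requiring every AU of a prototype keeps the sketch conservative: an isolated AU12 (a lip pull without cheek raising) is not scored as happiness.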

We identify two fields where FACS may be used in the context of the proposed model. One is to recognize human expressions to measure the user's mood. The other is to apply human expressions to avatars that may be used to communicate with users, such as a virtual caregiver or friend that accompanies the user and provides feedback through different expressions (those that all of us learn to recognize from our early years). Indeed, this may prove to be a very useful enhancement in systems such as the virtual butler [16].

Although FACS may be applied through image processing, using algorithms such as the Face Landmark Algorithm [17] to extract characteristic points of the face from an image, the use of 3D scanning broadens the range of hardware and algorithms that may be exploited. Not long ago, 3D scanning technology was too expensive to be considered in domestic solutions, but this is rapidly changing. Given recent advances, it is not far-fetched to expect it to be present in everyday use in the near future. Indeed, it is already starting to appear in small, mainstream devices such as smartphones. An example is the TrueDepth camera included in the “new” iPhone X [18], which allows analysing over 50 different facial muscles. This is achieved with the help of a built-in infrared emitter, which projects over 30,000 dots onto a user’s face to build a unique facial map. These dots are projected in a regular pattern and are then captured by an infrared camera for analysis. This system is somewhat similar to the one found in the original version of Microsoft's Kinect sensor [19], but built on a much smaller scale. This sensor, especially the Windows version, has proven to be an effective solution for the low-cost acquisition of real-world geometry in several projects [20,21,22].

One of the advantages of this system is that it can also be used for facial recognition, one of the features integrated into the iPhone X (named Face ID). This also brings advantages in the field of security, especially compared with more traditional facial recognition systems based on image analysis, which can often be fooled with photographs and masks.

Such methods are evolving fast, and trends indicate that they will become mainstream and be used effectively as secure authentication systems in several applications, such as unlocking systems, retrieving personal data, using social media and making payments, amongst others. Indeed, most systems already use advanced machine learning to recognize changes in the appearance of users.

Even the multimedia ISO standard MPEG-4 includes tools such as Face and Body Animation (FBA) [23,24], which enables a specific representation of a humanoid avatar and allows for very low bitrate compression and transmission of animation parameters [25]. One component of FBA is the set of Face Animation Parameters (FAPs), which manipulate key feature control points in a mesh model to animate facial features. These were designed to be closely related to human facial muscles and are used in automatic speech recognition [26] and biometrics [27]. The FAPs, representing displacements and rotations of the feature points from the neutral face position, allow the representation of several facial expressions that may indeed be applied to Human-Computer Interaction (HCI) [28].

Undeniably, there are several studies related to human emotions in HCI that present different strategies or computational models for affect or emotion regulation, e.g., [29,30,31,32,33,34,35]. These also span a large variety of domains, e.g., sports [36], virtual storytelling [37], games [38] and even therapeutic fields [39]. It is unarguable that emotions are important: in any interaction between two (or more) humans, each monitors and interprets the other's emotional expressions.

2. A Model of Wellbeing for the Elderly

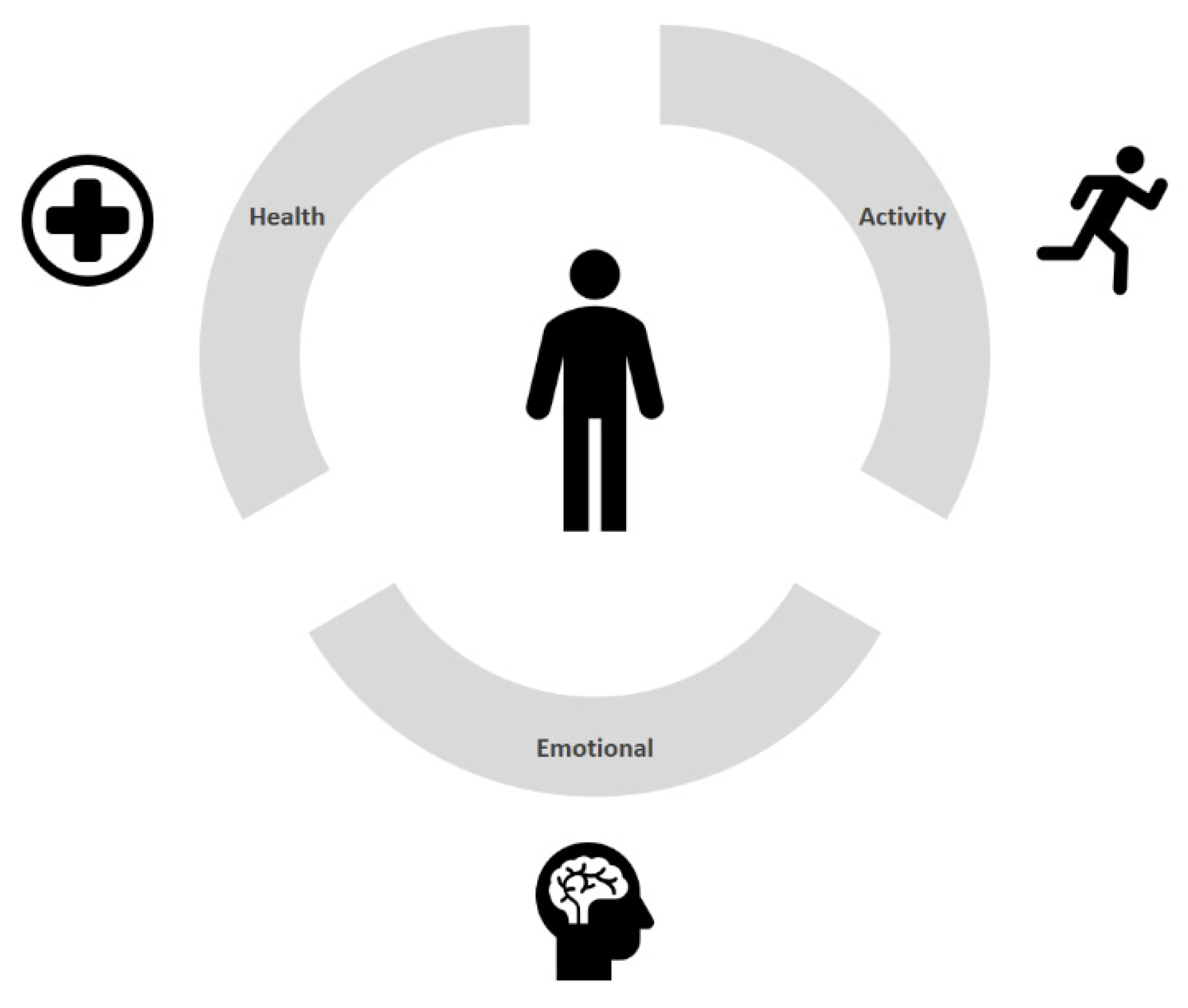

One of the foremost questions when trying to define a model under which an elder's home IoT solution might be unified is which facets of a person's life it should touch. Consequently, the model is composed of three dimensions: health, activity and emotional, as represented in Figure 1.

Even if these dimensions are sometimes observed in isolation from each other, in the literature as well as in some solutions, on some occasions they are intrinsically related. Hence, a change in one of the dimensions will affect the other(s). Certainly, for many persons, a chronic health problem will affect their emotional state. Similarly, it may prevent them from performing some types of activities. This dependency is by no means always negative; positive relations may also occur. For instance, someone who is emotionally strong and in good physical shape (e.g., because of some daily activity) may also be less affected by some health complications and/or overcome them more easily.

Although other or additional dimensions might have been selected, we chose these because of their noticeable contribution to the well-being of the elderly. Moreover, they were also chosen because signals can be obtained from sensors to determine some aspects related to these three dimensions. For example, a sensor measuring heart rate can tell us something about health. Sensors in appliances and sports machines can identify the activity being carried out, its type (e.g., a sports activity, or entertainment such as watching a favourite TV show) and its duration. Finally, a camera capturing facial expressions can tell us something about the emotional state. Certainly, the full set of factors that may influence a person's well-being, their relative importance and what can actually be measured and/or influenced with today's technology warrant further investigation.

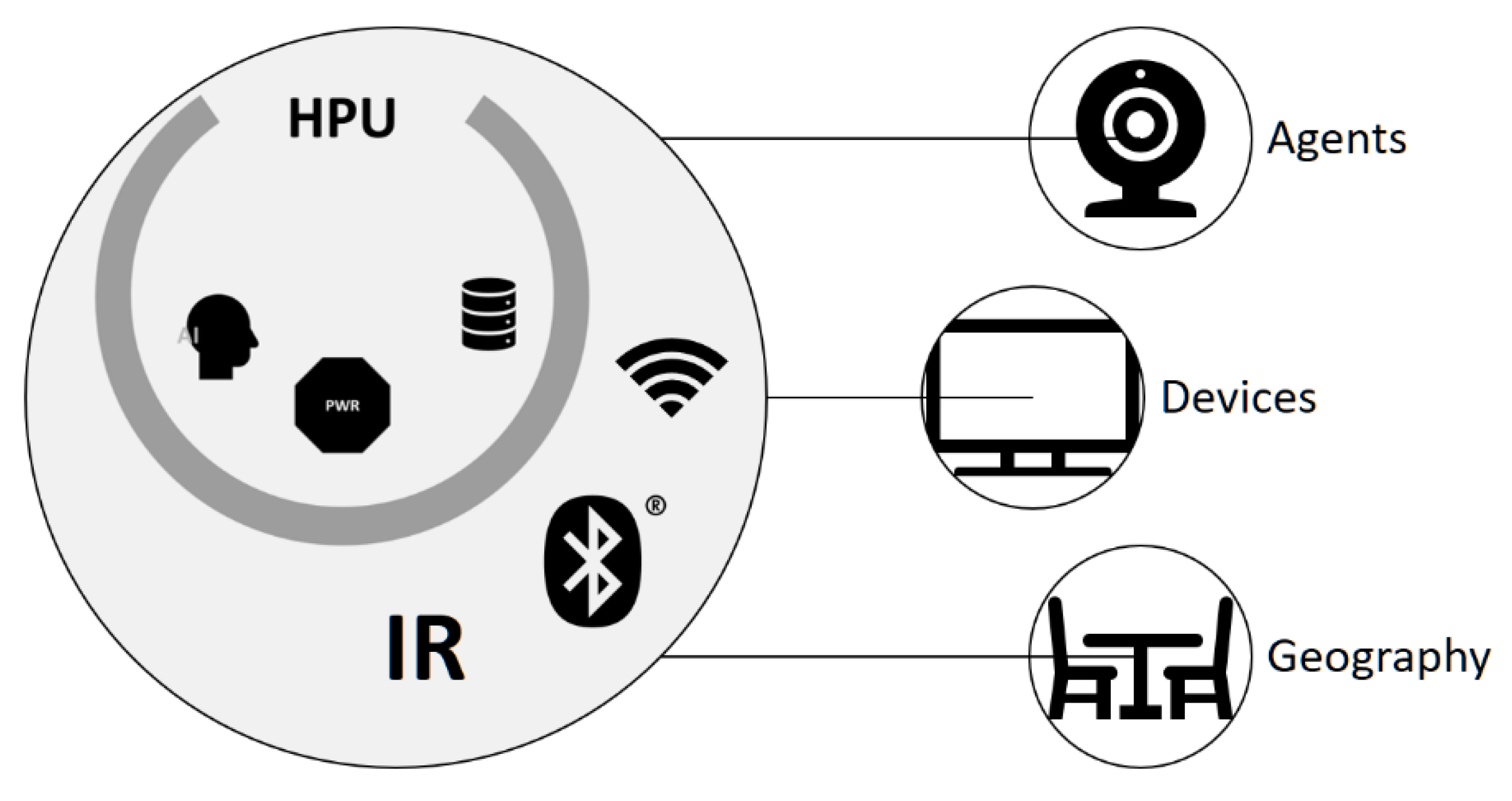

Considering these three dimensions, it is possible to identify all the entities present in a home that can in some way interact with the resident. We classify these entities into three categories: agents, devices and geography elements. These may be interpreted as an overview of the many types of objects that compose a home in the vast literature that can be found nowadays on smart homes, smart environments or ambient assisted living (to name just a few of the most common terms), e.g., [40,41,42,43]. Analogous to Figure 1, these are represented as user-centred graphics in Figure 2.

In this classification, agents represent all the entities in a home intended to collect information from, or transmit information to, the resident. Therefore, agents may be classified as input agents, output agents or both. Examples of input agents are cameras, panic buttons, heartbeat reader modules, etc. Examples of output agents are projectors, stereos and notification clocks, amongst others.

Agents may also be simultaneously input and output agents. An example of such an agent is a bracelet that reads the user's pulse and informs her/him about any irregularities sensed.

Devices are the appliances of the house, i.e., the pieces of equipment operated electrically to perform domestic chores (e.g., a TV, a fridge, a washing machine, etc.). These can also act as agents, or interact with them, by collecting or transmitting information. For example, a fridge can act as an input agent, using integrated technology that detects all the goods inside it. The same fridge can also act as an output agent by emitting alerts when a specific item is missing or almost finished, or when expiration dates are near.

Finally, geography elements (a designation inspired by the animation field) are all the non-electric home entities, which may even become props (to continue the animation analogy) if the user somehow interacts with them (e.g., home furniture). For example, the user may sit on a couch, sleep on a bed or sit at the table having dinner.
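The classification above maps naturally onto a small data structure. The following is a minimal sketch, assuming that each entity has one category and an input/output role expressed as combinable flags; the class names, field names and the example inventory are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, Flag, auto


class Role(Flag):
    NONE = 0
    INPUT = auto()   # collects information about the resident
    OUTPUT = auto()  # transmits information to the resident


class Category(Enum):
    AGENT = "agent"
    DEVICE = "device"        # electrical appliance; may also act as an agent
    GEOGRAPHY = "geography"  # non-electric element, e.g. furniture


@dataclass
class HomeEntity:
    name: str
    category: Category
    role: Role = Role.NONE

    def is_input(self) -> bool:
        return Role.INPUT in self.role

    def is_output(self) -> bool:
        return Role.OUTPUT in self.role


# Hypothetical inventory using the examples from the text.
home = [
    HomeEntity("camera", Category.AGENT, Role.INPUT),
    HomeEntity("stereo", Category.AGENT, Role.OUTPUT),
    HomeEntity("pulse bracelet", Category.AGENT, Role.INPUT | Role.OUTPUT),
    HomeEntity("fridge", Category.DEVICE, Role.INPUT | Role.OUTPUT),
    HomeEntity("couch", Category.GEOGRAPHY),
]

print([e.name for e in home if e.is_input()])
```

Using flags rather than a single enum value lets a device such as the fridge be both an input and an output agent without a separate "both" category.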

2.1. The Model of a Wellbeing Home

Based upon the classification of the several entities of a home, a simple model intended to improve the well-being of an elder's life may then be defined. Although this article is written with the well-being of the elderly in mind, the model may be used universally.

Note also that it is not our intention to replace existing architectures or frameworks already presented in other projects, e.g., [1,2,4,16,40,41,42,43]. Indeed, some of these can “fit” inside this model, have their own models or represent other models for smart homes. Our goal is to present a simplified model of a home well suited for the well-being of the elderly.

The model, represented in Figure 3, includes, besides the three types of entities (agents, devices and geography), a Home Processing Unit (HPU), which is responsible for managing all the data received and for taking all the decisions. This element is thus something with computing capabilities (such as a personal computer). We believe that an intelligent home should centralize all its information in a central module, which uses this information to perform informed actions.

Homes will then include some form of HPU responsible for interacting with all the intelligent devices of the home in an integrated way. A few years ago, one of the innovations of modern houses promoted by home sellers was a central vacuum system (composed of a central cleaner and several connection points, where one could simply plug in and have access to the expected function: vacuum cleaning). Similarly, it is likely that future houses will integrate, and be promoted as having, some kind of HPU.

In some major appliance retail stores, categories such as “Smart Home” are now appearing, including sensors, controllers, connectable devices and several other types of devices promoted as “smart”. These mainly work on a stand-alone basis, i.e., not within an integrated solution. Indeed, IoT devices seem to be a future trend, with around 20 billion connected IoT devices forecast by 2023 [44]. Nonetheless, a universal platform for them to work in an integrated manner does not exist. Such a platform could take advantage of their full potential and even broaden their possibilities.

For all the agents and/or devices to communicate with an HPU, some sort of communication technology (or several) is necessary (e.g., Wi-Fi, Bluetooth, infrared). The HPU will be capable of communicating with all the home agents and/or devices. It may even use them to collect information about geography elements in the house (e.g., performing object detection and applying segmentation algorithms to images or videos gathered from cameras). As such, it can receive data from these entities (agents and devices) as well as send data (including commands) to them. To store all the data, one or more databases will be necessary. Similarly, to take decisions according to the data received, some processing capabilities must be ensured, which may even include Artificial Intelligence (AI) algorithms.
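The receive, store and send responsibilities described above can be sketched in a few lines. This is a minimal, hypothetical sketch, not the authors' implementation: it assumes readings arrive already decoded from whatever transport is used, stores them in an in-memory SQLite database (Python's standard `sqlite3` module), and queues outgoing commands in a list instead of transmitting them. The class name, table layout and method names are all assumptions.

```python
import sqlite3
from datetime import datetime
from typing import Optional


class HomeProcessingUnit:
    """Hypothetical minimal HPU: stores readings from entities and queues commands."""

    def __init__(self) -> None:
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE readings (ts TEXT, entity TEXT, dimension TEXT, kind TEXT, value REAL)"
        )
        self.outbox: list[tuple[str, str]] = []  # (entity, command) pairs awaiting delivery

    def receive(self, entity: str, dimension: str, kind: str, value: float, ts: datetime) -> None:
        # Persist every incoming reading so that later decisions can use the history.
        self.db.execute(
            "INSERT INTO readings VALUES (?, ?, ?, ?, ?)",
            (ts.isoformat(), entity, dimension, kind, value),
        )
        self.db.commit()

    def send(self, entity: str, command: str) -> None:
        # A real HPU would transmit this over Wi-Fi, Bluetooth, infrared, etc.
        self.outbox.append((entity, command))

    def latest(self, kind: str) -> Optional[float]:
        # ISO 8601 timestamps in a fixed format sort correctly as text.
        row = self.db.execute(
            "SELECT value FROM readings WHERE kind = ? ORDER BY ts DESC LIMIT 1", (kind,)
        ).fetchone()
        return None if row is None else row[0]


hpu = HomeProcessingUnit()
hpu.receive("bracelet", "health", "heart_rate", 72, datetime(2024, 1, 1, 9, 0))
hpu.receive("bracelet", "health", "heart_rate", 95, datetime(2024, 1, 1, 9, 5))
print(hpu.latest("heart_rate"))
```

Tagging each reading with its dimension (health, activity or emotional) keeps the per-dimension evaluation described later a simple query.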

The HPU presented in this article is centred on the well-being of the elder and will be driven by a Personal Well-being Rating (PWR), constantly updated from the information gathered from the several entities present in the home. The PWR will be determined by the Personal Wellbeing Equation (PWE):

where,

The PWE is a function of a precondition (p). In practice, this means that it depends on a previous condition of a certain person (hence the “Personal” in the name of the Equation). It is not expected that every elder will have the same health, perform the same activities or have the same emotional responses. In addition, the Equation assumes that the well-being of a person depends on the three dimensions presented (health, activity and emotional), which can be monitored by agents and, ideally, somehow influenced.

Given a well-being rating that, based on the data collected by the agents, reveals when some dimension is affecting the general well-being of a person, the next step is to determine what to do to improve it. This may be achieved with a per-dimension approach, i.e., determining which dimension is below some threshold and simply triggering an event. The challenge lies in determining how to measure each dimension and what actions may be triggered.

Regardless of the dimension, there are two distinct ways of triggering an action: seamless (where the user does not have to confirm an action before it happens, which does not prevent her/him from cancelling it, if desired) or with user intervention (where the user is explicitly asked to confirm that she/he agrees with the action). Either may be the most adequate, depending on the specific scenario.
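The per-dimension triggering and the two trigger modes can be sketched as follows. This sketch does not reproduce the PWE; it assumes, hypothetically, that each dimension yields a normalized score that can be compared with a personal threshold derived from the precondition p, and that `confirm` is a callback that asks the user. All names, scores and thresholds are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Mode(Enum):
    SEAMLESS = "seamless"                    # act immediately; cancelling is handled elsewhere
    USER_INTERVENTION = "user_intervention"  # ask the user before acting


@dataclass
class Action:
    description: str
    mode: Mode


def dimensions_below(scores: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return the dimensions whose current score is below the person's threshold."""
    return [d for d, s in scores.items() if s < thresholds[d]]


def trigger(action: Action, confirm: Callable[[str], bool]) -> bool:
    """Return True if the action is carried out."""
    if action.mode is Mode.SEAMLESS:
        return True
    return confirm(action.description)


# Hypothetical per-person thresholds and current scores.
thresholds = {"health": 0.6, "activity": 0.5, "emotional": 0.5}
scores = {"health": 0.8, "activity": 0.3, "emotional": 0.55}
for dimension in dimensions_below(scores, thresholds):
    action = Action(f"suggest an activity to raise {dimension}", Mode.USER_INTERVENTION)
    print(dimension, trigger(action, confirm=lambda msg: True))
```

Keeping the mode on the action, rather than on the dimension, lets the user allow some actions seamlessly while requiring confirmation for others.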

The next sections provide some insights about these concerns and give some ideas for possible future implementations in each dimension.

2.2. Activity

The first thing to consider in this dimension is how to determine the type of activity being carried out by the user at every instant. This can be accomplished using IoT solutions, among other means. It is easy to include agents that determine which devices are in use: it is trivial to determine whether a TV or Hi-Fi system is turned on. Likewise, it is also easy to determine whether a stationary bike is in use. One thing that simplifies this problem is that all of these systems are electronic devices (even the more modest domestic stationary bikes include electronic components to monitor the activity, or such components can easily be coupled to them).

The aforementioned, however, does not hold for every type of activity, since some may not involve electronic devices. For example, it may not be natural to ask someone to wear some kind of wearable while dancing. The fact that music is playing does not mean that someone is dancing: the person may simply be seated listening to music, which takes considerably less than the 4.5 MET of salsa dancing (estimated value). For such cases, further work may be required using data collected from cameras or 3D sensors, most likely with advanced imaging techniques, 3D techniques, or both, and even Artificial Intelligence algorithms. One important component in all of these is a clock that measures the amount of time spent in each activity.

The strategy can be defined as an assembly of IoT devices that keep track of all the user's activities and the time spent in each one. All this information is sent to the HPU, which is then responsible for quantifying the total amount of MET spent in each period of time. For a hypothetical elder's day, this could result in something like what is represented in Table 1 (for simplification purposes, several activities, e.g., taking medication or sitting on a toilet, were omitted and the table was summarized).
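The aggregation performed by the HPU can be sketched as a sum of MET multiplied by duration, i.e., MET-hours, a common way of accumulating MET over time. Table 1 itself is not reproduced here: the log below is hypothetical and uses only the MET values quoted elsewhere in this article (1.3 for sitting quietly, 6.8 for moderate stationary bicycling, 3.0 for a waltz).

```python
from collections import defaultdict
from datetime import datetime

# MET values quoted in the text (estimated values).
MET = {"sitting quietly": 1.3, "stationary bike (moderate)": 6.8, "dancing (waltz)": 3.0}

# Hypothetical activity log: (activity, start, end), as timed by the agents' clock.
log = [
    ("sitting quietly", datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 12, 0)),
    ("stationary bike (moderate)", datetime(2024, 1, 1, 12, 0), datetime(2024, 1, 1, 12, 30)),
    ("dancing (waltz)", datetime(2024, 1, 1, 16, 0), datetime(2024, 1, 1, 16, 30)),
]


def met_hours_by_activity(entries):
    """Sum MET x hours for each activity in the log."""
    totals = defaultdict(float)
    for activity, start, end in entries:
        hours = (end - start).total_seconds() / 3600
        totals[activity] += MET[activity] * hours
    return dict(totals)


totals = met_hours_by_activity(log)
for activity, met_h in totals.items():
    print(f"{activity}: {met_h:.2f} MET-h")
print(f"total: {sum(totals.values()):.2f} MET-h")
```

Grouping the same log by hour instead of by activity would give the "MET spent in each period of time" view mentioned above.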

The aforementioned activities correspond to recognized Activities of Daily Living (ADL) [45,46], where the (b) activities correspond to basic ADL, i.e., those that must be accomplished every day by everyone who wishes to thrive on their own. The (i) activities correspond to instrumental ADL, i.e., those not necessary for fundamental functioning, but still very important in giving someone the ability to live independently.

There are no devices specifically conceived to determine the activities performed by the user. However, even the 3D sensors found in many modern entertainment devices, such as the Kinect sensor, can cope with several users at the same time and be programmed to know which user is doing what at each moment. We believe that a well-considered MET measurement may be integrated into solutions that promote healthier, more active and more fulfilling lifestyles.

Actions

Determining the activities performed by the user and the time spent in each one is just one part of the problem. The second part is determining the actions that can be carried out when some dimension is below a threshold value. In the activity dimension, it would not be reasonable to force someone to exercise, but there are more subtle ways of improving the value of this dimension. Knowing that the MET may be used to identify the energy expenditure of each activity, it is possible, for instance, to set an agent to play music known to frequently make the resident dance and therefore spend 3.0 MET (estimated value for dancing a waltz), which is significantly more than the 1.3 MET of sitting quietly.

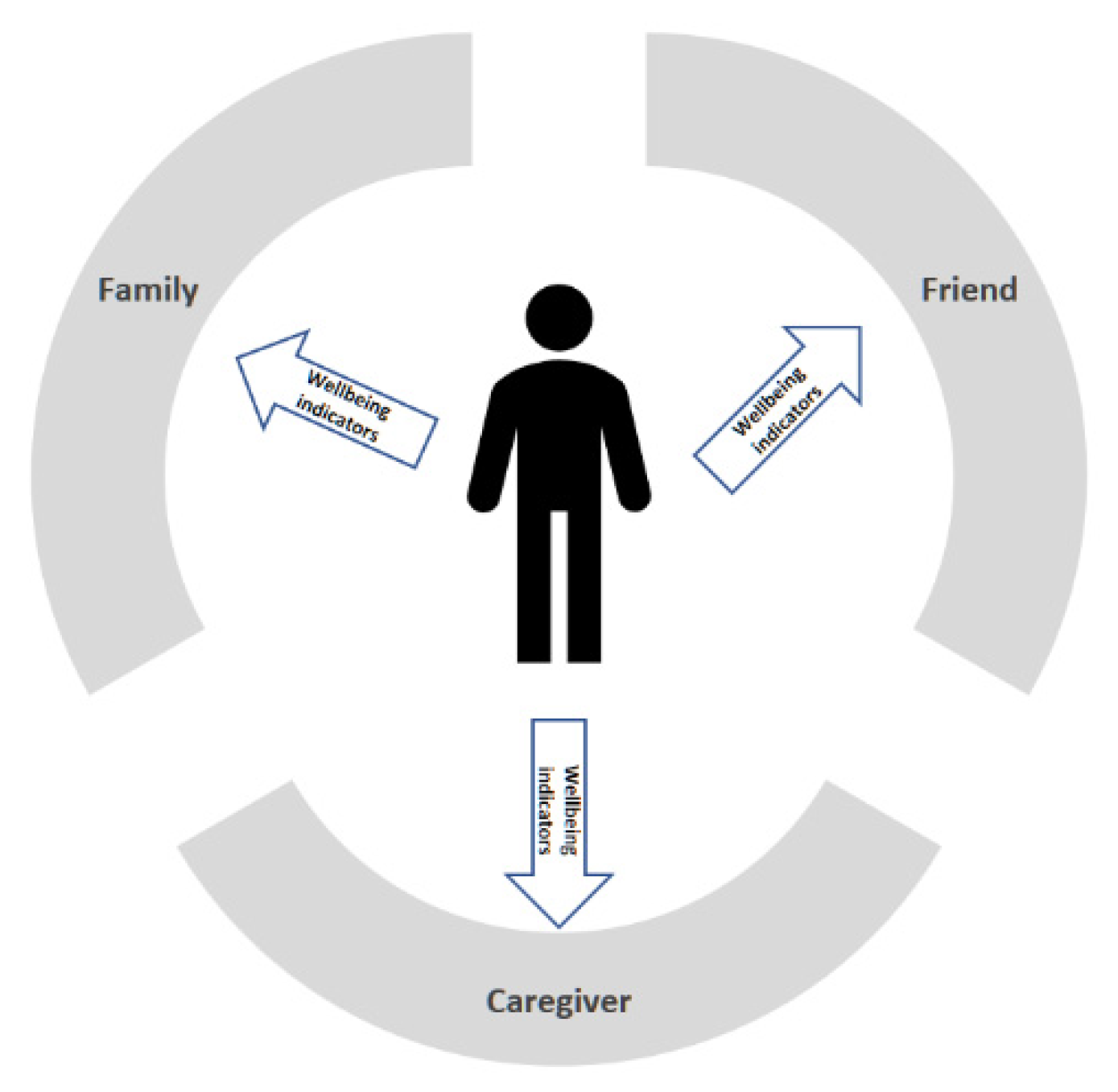

Another way is simply to inform a family member, a caregiver or a friend (represented in Figure 4) that the value of this dimension is low and that the resident would benefit from some physical activity.

Thus, what could have been a boring sedentary day could become an outdoor day simply because a friend appeared at the door with an invitation for a walk and a good talk. This could also have some impact on the health and emotional dimensions. Moreover, perhaps the friend who appeared also had a low activity value and was alerted by her/his own HPU (which suggested finding a friend for a walk). This makes us imagine a world of connected HPUs collaborating to improve everyone's life.

2.3. Emotional

One thing to consider regarding emotions is exactly which emotions we want to recognize. Different authors define different emotions, and we could go back at least as far as the ancient Greek treatise on the art of persuasion, “Aristotle’s Rhetoric”, dating from the 4th century BC [47], passing through Charles Darwin's “The Expression of the Emotions in Man and Animals” of 1872 [48], or Robert Plutchik's 1980 theory of eight basic emotions [49], which were represented in a “wheel of emotions” that includes different intensities.

FACS also considers several emotions, such as those listed in Table 2.

For now, of the emotions listed in Table 2, the one we are most interested in is happiness. One could also consider sadness, which may be considered the opposite of happiness, but this could bring a few problems. For example, it is normal for someone to feel sad for long periods of time, such as when mourning a loved one. In such a case, however, sadness is a typical reaction, and it does not always prevent someone from showing happy feelings at some point during the day in certain situations, e.g., when a grandchild achieves something new or makes a joke. The problem is that, according to some studies such as [50], sadness can last, on average, for 120 h, which is significantly longer than other feelings. For these reasons, we concentrate on happiness for now.

One way of knowing whether a person is happy is, for example, whether she/he is smiling. However, a person cannot be smiling all the time. As such, instead of measuring the amount of time a person is smiling, the approach consists of counting the number of smiles per day. Nowadays, several devices can detect smiles; modern cameras and even the latest smartphones can take pictures automatically when they detect a smile. Indeed, there are many approaches that can be used to detect smiles, e.g., [51,52,53,54,55].

The approach may then be based on a count of smiles per day. Evidently, this count depends on many factors, including a person's country of origin and age. It is well known that, generally speaking, children smile a lot more than adults. For example, one study reports that a UK adult smiles 11 times a day [56].
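Counting smiles rather than smiling time requires grouping consecutive positive detections into episodes. The sketch below assumes, hypothetically, that a smile detector produces one boolean per video frame; an episode is counted once after `min_frames` consecutive positive frames, which also ignores isolated false positives. The comparison with a baseline uses the 11 smiles per day cited above only as a default; a per-person baseline (the precondition p) would be preferable. Function names and parameter values are assumptions.

```python
def count_smiles(frames: list[bool], min_frames: int = 3) -> int:
    """Count smile episodes in a sequence of per-frame detector outputs.

    An episode is counted once, when `min_frames` consecutive frames are positive,
    so a smile held for several seconds counts as one smile.
    """
    count = 0
    run = 0
    for smiling in frames:
        if smiling:
            run += 1
            if run == min_frames:  # count each episode exactly once
                count += 1
        else:
            run = 0
    return count


def smile_ratio(smiles_today: int, personal_baseline: float = 11.0) -> float:
    """Ratio of today's smiles to the baseline (11/day is the UK-adult figure cited)."""
    return smiles_today / personal_baseline


F, T = False, True
frames = [F, T, T, T, T, F, T, F, T, T, T]  # one long smile, one blip, one short smile
smiles = count_smiles(frames)
print(smiles, round(smile_ratio(smiles), 2))
```

A ratio well below 1 over several days, rather than a single low day, is the kind of signal that could mark the emotional dimension as low.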

Actions

Since happiness can be recognized through a smile, the issue here is to trigger an emotional response that can be measured by a smile. It is easy to find lists of things that cause people to smile, e.g., [57,58]. An excerpt of ten things that can make people smile is shown in Table 3, but there are many others.

The challenge lies in determining which of these things may be triggered and controlled by the HPU, and which are more effective. For example, some are infeasible, such as controlling the weather, granting tax rebates, making the favourite football team win or making a pet greet its owner. Others are feasible, and strategies may be defined to achieve them. It is possible to ask the user whether she/he wants to look at photographs of good moments, hear her/his favourite song or watch her/his favourite movie or TV show.

The next step, assuming a positive response from the user, is to choose the agent or device responsible for performing the corresponding task. This may be as simple as, for example, turning on a display or projecting photographs known to make the user happy. While the activity is taking place, and even afterwards, the HPU should keep collecting input data from the agents to discover whether the triggered action caused the desired response from the user (monitoring the PWR and taking proper action if needed). In another example, on detecting a low emotional state, the HPU contacts a close relative or loved one, who may then send a message to the elder or post a nice comment on social media.

2.4. Health

If, on the one hand, this dimension is probably the one that may most severely impact the quality of life of the majority of elders, on the other hand, it is the simplest to monitor (depending, of course, on the type of health indicators we want to observe). Indeed, a lot of previous work exists in the field of intelligent devices for the elderly (e.g., in [4], Chan et al. present several smart home projects), where health monitoring systems have been developed for indicators such as pulse, blood pressure and heart rate, as well as fall detection devices and panic buttons. These are essentially wearable devices that monitor the health status of the elderly in real time. These devices must have versatile functions and be user-friendly, allowing elders to perform tasks with minimal intrusion and disturbance, pain, inconvenience or movement restrictions [1].

In [2], an Android-based smartphone with a 3-axial accelerometer was used to detect falls of its holder in the context of a smart elderly home monitoring system. A panic button was also implemented in this device. In [3], Gaddam et al. use intelligent sensors with cognitive ability to implement a home monitoring system that can detect abnormal patterns in the daily home activities of the elderly. This system also includes a panic button.
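To make accelerometer-based fall detection concrete, the sketch below uses a common textbook heuristic: a short free-fall phase (acceleration magnitude well below 1 g) followed shortly by an impact (magnitude well above 1 g). It is not the method of [2]; the thresholds of 0.4 g and 2.5 g and the 10-sample window are illustrative assumptions, not calibrated values.

```python
import math


def magnitude(sample: tuple[float, float, float]) -> float:
    """Euclidean norm of a 3-axis accelerometer sample, in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fall(samples, free_fall_g=0.4, impact_g=2.5, window=10) -> bool:
    """Return True if a free-fall sample is followed by an impact within `window` samples.

    Thresholds and window size are illustrative assumptions.
    """
    mags = [magnitude(s) for s in samples]
    for i, m in enumerate(mags):
        if m < free_fall_g and any(n > impact_g for n in mags[i + 1 : i + 1 + window]):
            return True
    return False


standing = [(0.0, 0.0, 1.0)] * 20
fall = [(0.0, 0.0, 1.0)] * 5 + [(0.0, 0.0, 0.2)] * 3 + [(0.0, 0.5, 3.0)] + [(0.0, 0.0, 1.0)] * 5
print(detect_fall(standing), detect_fall(fall))
```

Requiring both phases reduces false alarms from events such as sitting down abruptly, which produce an impact without a preceding free fall; a positive detection would be sent to the HPU like any other reading.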

Certainly, previous work allows us to monitor several health indicators in a simple manner and send them to the HPU. Deciding which action to carry out, however, is a noticeably more sensitive matter.

Actions

The action will always depend on the health status of each person. It may not be reasonable to have an HPU acting as a doctor and recommending medication when it detects an unusual variation in this dimension. Amongst all the possible negative consequences this could have, it would be important to know why there was a decrease in this dimension. For example, a rise in heart rate may result from watching a scary movie (something that “smart” agents could eventually determine). A more reachable and feasible solution would be simply to send a message to a relative, caregiver or family doctor. This person, knowing the medical history of the resident, would be in the best position to evaluate the situation and determine the best action to take.

Simpler actions are alerts for when the elder forgets to take some medication. In this situation, audio and/or visual information could be provided to remind the user to take their medication at certain times of the day.
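A medication reminder of this kind reduces to comparing a schedule with what has been marked as taken. The sketch below assumes, hypothetically, a per-day schedule of times for each medication, a set of (medication, time) pairs already confirmed as taken, and a grace period after which a dose counts as forgotten; how the alert is delivered (audio, visual) is left to the output agents. The medication names and the 30-minute grace period are illustrative.

```python
from datetime import datetime, time, timedelta


def due_reminders(schedule: dict[str, list[time]],
                  taken: set[tuple[str, time]],
                  now: datetime,
                  grace: timedelta = timedelta(minutes=30)) -> list[str]:
    """Return doses scheduled at least `grace` ago today that are not marked as taken."""
    due = []
    for med, times in schedule.items():
        for t in times:
            scheduled = datetime.combine(now.date(), t)
            if now - scheduled >= grace and (med, t) not in taken:
                due.append(f"{med} ({t.strftime('%H:%M')})")
    return due


# Hypothetical schedule: medication names and times are illustrative only.
schedule = {"medication A": [time(8, 0), time(20, 0)], "medication B": [time(13, 0)]}
taken = {("medication A", time(8, 0))}
print(due_reminders(schedule, taken, now=datetime(2024, 1, 1, 14, 0)))
```

The grace period distinguishes a reminder (at the scheduled time) from the "forgotten dose" alert described above; both could be parameterized per person.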

Somewhat less obvious is a scenario where agents act preventively, avoiding circumstances that could lead to a situation in which the user's health is compromised. One example is preventing accidental falls. Geography elements can be mapped inside a house and tracked when they are moved. This is especially important for the elderly. For example, as time goes by, some elders may develop diseases such as cataracts, which lead to decreased vision. Agents can keep a map of all the house entities, keeping track of their location, and warn the user about obstacles that may be found when moving through the home and that may lead her/him to fall. This can be achieved with cameras or 3D sensors, strategically placed to avoid occlusion problems, and with the aid of complex algorithms that follow the user in real time and detect potential risks of collision. In such scenarios, voice interfaces may also be included, where several aspects (e.g., type of language, on/offline system, context-awareness, etc.) must be observed for them to become useful (several of these aspects are discussed in [59]).
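The obstacle warning can be illustrated on a simplified map. The sketch below assumes, hypothetically, that the house is represented as a 2D occupancy grid in which tracked geography elements occupy cells, and that the user's expected route is available as a list of cells (for instance, predicted from the tracking mentioned above). It returns obstacles within a clearance distance of the route, which an output agent could then announce. The grid representation, clearance value and function name are assumptions, and the real-time tracking itself is out of scope.

```python
def obstacles_on_path(path: list[tuple[int, int]],
                      obstacles: set[tuple[int, int]],
                      clearance: int = 1) -> list[tuple[int, int]]:
    """Return obstacle cells within `clearance` cells (Chebyshev distance) of the route."""
    near = set()
    for px, py in path:
        for dx in range(-clearance, clearance + 1):
            for dy in range(-clearance, clearance + 1):
                cell = (px + dx, py + dy)
                if cell in obstacles:
                    near.add(cell)
    return sorted(near)


# Hypothetical example: a straight route across a room and a chair moved next to it.
route = [(x, 0) for x in range(5)]
obstacles = {(2, 1), (5, 5)}  # (2, 1): moved chair; (5, 5): sofa far from the route
print(obstacles_on_path(route, obstacles))
```

Because geography elements are tracked when moved, the obstacle set can be updated incrementally, so a chair left in an unusual place is flagged the next time the route passes near it.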

2.5. Privacy

Another question is whether people would accept being monitored in the context of a smart home. In a UK study [60], the majority of participants perceived the purpose and benefits of smart home technologies as: “the control of several devices in the house”; “improving security and safety”; “supporting assisted living or health”; “improve quality of life”; “provide care”. However, a significant proportion (around 40 to 60% for each of the following items) also agree that “there is a risk that smart home technologies…”: “monitor private activities”; “are an invasion of privacy”; “are intrusive”. Nevertheless, the clear majority agree that “for there to be consumer confidence in smart home technologies, it is important that they…”: “guarantee privacy and confidentiality”; “securely hold all data collected”; “are reliable and easy to use”.

The benefits expected from technology are also presented in [61], where “the most frequently mentioned benefit is an expected increase in safety”. Similarly, participants in the studies presented also mention “that they expect that the use of technology for aging in place will increase their independence or reduce the burden on family caregivers” and that “The desire to age in place sometimes leads to acceptance of technology for aging in place”.

Privacy issues are also of great concern to us. While we believe that most people would agree to a system that improves their quality of life, some studies show that some people feel discomfort. For example, a study conducted in [62] showed that the majority of elderly persons had no problem with audio but refused the video modality.

While we believe that most elders would not object to transmitting some information to someone in their close circle of friends or family for a greater good, we do not deny that in some cases this could be a problem. It is impossible to predict whether this will become an issue, but the model does not prevent users from using most of the solutions solely in the privacy of their homes, as personal monitoring systems. Moreover, as described in Section 2.1, regardless of the dimension of well-being, there are two distinct ways of triggering an action: seamless (the user does not have to confirm an action before it happens, which does not prevent her/him from cancelling it, if desired) or with user intervention (the user is explicitly asked to confirm manually that she/he agrees with the action). This allows the desired level of control and the development of scenarios that are parameterized with only the options allowed by the users.
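The two ways of triggering an action can be illustrated with a minimal Python sketch. All names in it (`TriggerMode`, `Action`, `dispatch`, and the `confirm` and `cancelled` callbacks) are hypothetical and are not part of the model’s specification; the sketch only assumes that a seamless action runs unless the user cancels it, that an action with user intervention runs only after explicit confirmation, and that actions the user has not allowed are never run.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Set


class TriggerMode(Enum):
    SEAMLESS = "seamless"          # runs without confirmation, but can be cancelled
    USER_INTERVENTION = "confirm"  # runs only after explicit user confirmation


@dataclass
class Action:
    name: str
    mode: TriggerMode
    run: Callable[[], str]


def dispatch(action: Action,
             allowed: Set[str],
             confirm: Callable[[str], bool],
             cancelled: Callable[[str], bool]) -> str:
    """Decide whether an action is executed (hypothetical policy sketch)."""
    # Scenarios may only use the options the user has allowed.
    if action.name not in allowed:
        return "blocked"
    if action.mode is TriggerMode.SEAMLESS:
        # No confirmation is requested; the user may still cancel it.
        return "cancelled" if cancelled(action.name) else action.run()
    # User intervention: the action waits for explicit agreement.
    return action.run() if confirm(action.name) else "declined"


if __name__ == "__main__":
    def never(name: str) -> bool:
        return False

    light = Action("turn_on_light", TriggerMode.SEAMLESS, lambda: "light on")
    call = Action("call_family", TriggerMode.USER_INTERVENTION, lambda: "calling")
    options = {"turn_on_light", "call_family"}
    print(dispatch(light, options, confirm=never, cancelled=never))  # light on
    print(dispatch(call, options, confirm=never, cancelled=never))   # declined
```

Keeping the allowed-options check before the mode check means that even a seamless action cannot be triggered in a scenario the user has not enabled.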

The next section describes a study we conducted to gain further insight into most of these topics.

3. Results

We conducted a formative evaluation to identify possible improvements to the model, to gather insights about the main areas of concern and focus, and to gain more awareness of the needs of the elderly. This evaluation was carried out through seven interviews: a CEO of a company in the field of healthcare; an occupational therapist; a technical director and psychologist of an elders’ home; a doctor; a nurse; a direct-action specialist and coordinator of a physical activity program for the elderly; and a professor at a higher school of health sciences specialized in people in their final stages of life. What all of them have in common is that they have or have had close contact with elders within the scope of their professional activity.

The interviews included several questions; we present those most relevant to the evaluation of this study and summarize the main findings.

When asked whether the dimensions considered in the study could represent the general well-being of the elders, none of the interviewees objected to them. Nevertheless, other terms also came up, such as “social” and “psychological” (terms also referred to in other literature [63]), as well as several terms representing sub-areas of the health dimension. Indeed, although other organizations were also considered, there was consensus that most of the new areas of well-being raised actually “fit” within the ones presented in the model. For example, socialization contributes to improving the emotional state, and the psychological, emotional and even spiritual aspects may be considered contributors to the health dimension. It was also recognized that most dimensions are correlated, which confirms our initial presumptions (e.g., physical activity may contribute to improving the health condition).

For these reasons, we are convinced that the proposed dimensions are appropriate, as acknowledged by the experts consulted, although this does not preclude additional dimensions or organizations from being included in future work.

When asked whether the elderly would prefer to live in their own homes if they had a system that monitored their condition and acted in case of need, all interviewees confirmed that this is undeniably true. Similarly, they all agreed that it is difficult for an elder to accept a stranger in their home, i.e., to accept social assistance from someone they do not know. It was also mentioned that, when willing to accept assistance, they would prefer a family member. After initial resistance, and once the need for care and its advantages for the elder are well explained, they would end up accepting the assistance. All interviewees also agreed that once an elder finally accepts a stranger in their home, it is hard for them to accept new people. Generally, all recognized that elders would feel safer if they knew they were being monitored remotely. The occupational therapist also noted that the elders’ days are made of routines, so if some routines are introduced at specific times (e.g., video calls 2–3 days a week), after a short time it would be the elders themselves asking for these activities. It was also mentioned that one possible way of detecting that something may be wrong with an elder is precisely by analysing these routines. For example, if an elder who typically wakes up at 9 am and performs the same chores suddenly fails to follow these routines, there may be a strong reason for it. This led us to consider monitoring daily chores in the model.
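One simple way to analyse such a routine is to compare the time of a daily event with its usual value. The following Python sketch is only an illustration under stated assumptions: the function names, the use of the mean and sample standard deviation of past wake-up times, and the thresholds `k` and `min_margin` are hypothetical choices, not part of the model.

```python
from statistics import mean, stdev
from typing import List, Optional


def to_minutes(hhmm: str) -> int:
    """Convert an 'HH:MM' string to minutes since midnight."""
    hours, minutes = hhmm.split(":")
    return int(hours) * 60 + int(minutes)


def wake_up_deviates(history: List[str], today: Optional[str],
                     k: float = 2.0, min_margin: int = 30) -> bool:
    """Return True if today's wake-up time departs from the usual routine.

    history    past wake-up times as 'HH:MM' (at least two entries)
    today      today's detected wake-up time, or None if none was detected
    k          tolerance in sample standard deviations (illustrative value)
    min_margin minimum tolerance in minutes (illustrative value)
    Times are treated as minutes since midnight; wrap-around is ignored.
    """
    if today is None:
        # No wake-up event detected at all is itself a deviation.
        return True
    past = [to_minutes(t) for t in history]
    tolerance = max(k * stdev(past), min_margin)
    return abs(to_minutes(today) - mean(past)) > tolerance


if __name__ == "__main__":
    usual = ["09:00", "09:05", "08:55", "09:10", "08:50"]
    print(wake_up_deviates(usual, "09:20"))  # False
    print(wake_up_deviates(usual, "11:00"))  # True
```

The minimum margin avoids flagging small variations in a very regular routine, where the standard deviation alone would produce an overly tight tolerance.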

Since technology may help the elderly live more independently, being monitored without having a stranger in their homes (and, when possible, by someone they know) is a clear benefit. Similarly, technology can easily be used to introduce routines across the several dimensions: for example, video calls for the health dimension (e.g., talking with their doctor or nurse) and for the emotional dimension (talking to a close one, which most interviewees acknowledged as important, since sometimes the elder only feels the need for someone to talk to), and alerts when it is time to perform specific activities (activity dimension).

When asked about the main concerns of elders who live alone, several aspects were mentioned: the fear of dying alone; the fear of needing help with no one present to help them; the fear of being unable to help their partners when needed; the concern about bothering other people; safety issues (being robbed, accidents); loneliness; and lack of security.

These are all aspects where technology based on the described dimensions can help. The agents can gather the necessary information to monitor each dimension, reacting properly in each situation.

Another sensitive matter is whether elders are willing to share their personal information (e.g., physiological data) with others. Although the general opinion amongst the experts was that they would be willing if the benefits were well explained, several concerns were also pointed out, such as: the need for several access profiles to the information; privacy concerns; data protection concerns; the persons with whom the information will be shared; and the order in which the information flows (e.g., who would be contacted first).
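Two of these concerns, access profiles and the order in which information flows, can be expressed as data. The Python sketch below is a hypothetical illustration: the profile names, data categories, `Contact` class, and `notify` function are assumptions made for this example, and a real implementation would also need authentication and data protection.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set

# Hypothetical access profiles: the data categories each profile may read.
ACCESS_PROFILES: Dict[str, Set[str]] = {
    "family": {"alerts"},
    "nurse": {"alerts", "physiological"},
    "doctor": {"alerts", "physiological", "activity"},
}


@dataclass
class Contact:
    name: str
    profile: str  # key into ACCESS_PROFILES


def recipients(contacts: List[Contact], category: str) -> List[str]:
    """Contacts allowed to see `category`, kept in the user-defined order."""
    return [c.name for c in contacts
            if category in ACCESS_PROFILES.get(c.profile, set())]


def notify(contacts: List[Contact], category: str,
           reachable: Callable[[str], bool]) -> Optional[str]:
    """Try allowed contacts in order; return the first one reached, or None."""
    for name in recipients(contacts, category):
        if reachable(name):
            return name
    return None


if __name__ == "__main__":
    # The list order encodes who is contacted first.
    circle = [Contact("daughter", "family"), Contact("home nurse", "nurse"),
              Contact("family doctor", "doctor")]
    print(recipients(circle, "physiological"))  # ['home nurse', 'family doctor']
    print(notify(circle, "alerts", lambda name: name != "daughter"))  # home nurse
```

Separating the profiles from the contact order lets the elder decide who is called first without granting that person access to every type of data.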

In contrast, when asked about the possible use of video cameras to monitor the elders’ activities, the majority of the interviewees (4 persons) mentioned that it would be difficult to achieve, as the elderly would interpret it as an invasion of privacy. One of the interviewees (the nurse) declared that it would only be possible with some of the elders. Only the direct-action specialist said that, after some initial resistance and after explaining all the benefits and the purpose of the video cameras, the majority of the elderly would agree to these devices. During one of the initial interviews, two ideas came up: using video cameras only in a specific place in the house, known to the elders, and activating the cameras only upon explicit request by the elders or, possibly, after some occurrence that implies urgent help. When presented with these possibilities, all interviewees agreed that they would be accepted by the elders.

For these reasons, and considering the importance of private data, any implementation would have to be designed around privacy and security concerns.

As for the reasons why elders do not engage in more physical activity, the responses were: no particular reason (their physical activity was normally related to some type of job or work and, since they no longer work, they simply do not see the need); lack of motivation; absence of specific programs; lack of time; lack of information about the benefits of regular physical activity; tiredness; and the cost of activities. Some also mentioned that elders would exercise if it were within the context of social activities or if recommended by their doctor. These recommendations can be integrated into social games or virtual assistants, which may improve both the activity and emotional dimensions and, consequently, the health dimension.

Overall, all interviewees considered that the model would be very useful for monitoring and improving the well-being of the elderly. The nurse even noted that some elders are already receptive to technology, since several receive home support that includes alarm buttons and GPS bracelets (which allow them to be located outside their homes when they press a button).

4. Discussion

The model introduced in this article for a home conceived for the well-being of the elderly is the foundation of a series of projects to be presented in future publications. However, the application of the model can also be traced back to the many solutions we have been working on in the last few years. Although the model was presented with the elders’ best interests in mind, it may be considered a universal model.

Regarding the use of the MET values of “The Adult Compendium of Physical Activities”, there are limitations, since they do not account for differences in body mass, adiposity, age, sex, efficiency of movement, or the geographic and environmental conditions in which the activities are performed. However, they may serve as a reference, and it is always possible to use other types of measurement or even to calculate measurements for each person. None of these alternatives hinders the correct application of the model.
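As a partial illustration of per-person calculation, a commonly used approximation treats 1 MET as roughly 1 kcal per kilogram of body mass per hour, so the energy cost of an activity can be estimated from the person’s own body mass. The Python sketch below assumes that convention; the function names and the MET value in the usage lines are illustrative only, and the estimate still inherits the other limitations listed above (age, sex, adiposity, efficiency of movement, environment).

```python
from typing import List, Tuple


def estimated_kcal(met: float, body_mass_kg: float, duration_min: float) -> float:
    """Approximate energy cost, using the convention 1 MET ~ 1 kcal/kg/h."""
    if met <= 0 or body_mass_kg <= 0 or duration_min < 0:
        raise ValueError("MET and body mass must be positive, duration non-negative")
    return met * body_mass_kg * duration_min / 60.0


def met_minutes(activities: List[Tuple[float, float]]) -> float:
    """Total activity volume as the sum of MET x minutes over (met, minutes) pairs."""
    return sum(met * minutes for met, minutes in activities)


if __name__ == "__main__":
    # Illustrative MET value only; real values come from the Compendium.
    print(estimated_kcal(3.5, 70.0, 30.0))          # 122.5
    print(met_minutes([(3.5, 30.0), (1.5, 60.0)]))  # 195.0
```

MET-minutes ignore body mass entirely, which makes them convenient for comparing a person’s activity across days, whereas the kcal estimate is person-specific.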

There are also some constraints related to the FACS. For example, it only deals with what is clearly visible on the face, and some features are excluded from it, such as facial sweating, tears and rashes, amongst others. Yet, in most cases, expressions can be determined with modern technologies. There will always be some situations where expressions cannot be determined based on the FACS (e.g., facial paralysis), but these are the exception. In such cases, or when someone is in a more severe condition, a caregiver or similar will probably always be present. The user would then not take full advantage of an HPU-equipped home (much like a person whose home is equipped with a central vacuum cleaner but who is no longer able to vacuum). The model therefore assumes that there is a minimum range of things a person can do, which potentially allows her/him to live alone.

In areas directly related to the dimensions of well-being considered (health, activity and emotional), further studies with specialists (e.g., doctors, nurses, psychologists) will benefit the future development of the model. Considering modern technology and our previous projects, there is strong evidence that the insights and solutions presented are plausible. The dimensions presented contribute noticeably to everyone’s well-being, and we strongly believe that future projects founded on this model will improve the quality of life of the elderly. The discussions with specialists raised several aspects to account for in future implementations. Moreover, the dimensions of well-being and the human-computer interaction methods proposed are intended for implementing “more natural” interfaces that can effectively enrich the well-being of the elderly.

As mentioned in this article, the well-being status of the elder will be evaluated through the Personal Wellbeing Equation, on which we are presently working.